Designing an assistive wearable

that supports deafblind cat owners

ALEXANDER KÖNIG Interaction Design One-Year Master 15 Credits

Spring Semester 2020

Supervisor: Henrik Svarrer Larsen

Abstract

Cats are ideal non-human companions for deafblind people, who spend a lot of their time at home and quite often face problems like social isolation and loneliness. Even with no sight and hearing, deafblind people are able to enjoy the companionship and the haptic experiences of interacting with a cat, such as feeling and stroking its soft fur, feeling its warmth and feeling its calm breathing. However, deafblind cat owners face various difficulties when caring for a cat. Therefore, the aim of this thesis project was to develop a concept for an interactive assistive device that extends a deafblind cat owner’s awareness of the activities and needs of his/her cat. This supports cat owners in taking better care of their cat, helps to avoid potential risks of harming the cat and generally enables cat owners to perceive the presence of their cat, which in turn creates a better relation, fosters the feeling of companionship and makes them feel less lonesome. To reach this aim, a user-centered design process was followed, which resulted in a final concept and prototype of an interactive wearable with a multi-modal tactile interface.

Content

1. Introduction

1.1 Problem Domain

1.2 Research Question

1.3 Aim and Contribution

1.4 Ethical Considerations

2. Background

2.1 Deafblindness

2.1.1 Definition of Deafblindness

2.1.2 Implications

2.1.3 Psychological and Social Consequences

2.2 Tactile Interfaces

2.2.1 Definition of Tactile Interfaces

2.2.2 Types of Tactile Interfaces

2.2.3 Tactile Communication

2.3 Related Work on Tactile Assistive Devices for Blind and Deafblind People

2.3.1 Vibrotactile Navigation Belt

2.3.2 Animotus

2.3.3 Interactive Tactile Controller for Playing Online Board Games

2.4 Animal-Computer-Interaction

2.4.1 Definition of ACI

2.4.2 Human-Pet-Computer-Interaction

2.5 Related Work on Human Pet Computer Interaction Technologies

2.5.1 Pet Trackers

2.5.2 Pet Cameras

2.5.3 Poultry.Internet System

2.6 Implications and Further Considerations Regarding the Design

2.6.1 User Group

2.6.2 The Cat’s Role

3. Methods

3.1 Online Survey and Secondary Research

3.2 User Journey Mapping

3.3 Expert Interviews

3.4 Sketching and Prototyping

4. Design Process

4.1 Design Process Model

4.2 User Research

4.2.1 Online Survey

4.2.2 Expert Interview and Secondary Research

4.3 Synthesis

4.3.1 User Journey Map

4.3.2 Design Opportunity

4.4 Ideation & Prototyping

4.4.1 Sketching

4.4.2 Rapid User Testing

4.4.3 Expert Feedback

4.4.4 Prototyping

5. Results

5.1 Final Design Concept and Prototype

5.2 Use Scenarios

5.3 Expert Evaluation

6. Discussion

6.1 Conclusion

6.2 Evaluation

6.3 Future Work

7. References

1. Introduction

Vision and hearing are the most important senses in our everyday life. We use them to communicate and interact with other people, to orient ourselves in the world, to access any kind of information and knowledge, to do our work and to enjoy arts and culture. But what if both of those senses are severely impaired or not working at all? Then people face serious constraints in everyday life and in being part of society. This is the daily struggle of deafblind people. Deafblindness strongly restricts the ability to communicate, interact and socialize with others and thus to participate in society. Furthermore, due to their lack of mobility, deafblind people spend a lot of their time in their home environment, where they feel safe and comfortable and where they are able to move around and do different tasks independently. As a consequence of both, there is a huge risk of becoming isolated from social life. Especially for people who become deafblind later in life, this often leads to serious mental health problems, depression and a strong feeling of loneliness.

Owning and caring for a pet as a non-human companion is considered to have a positive impact on these issues. A great advantage of pets is that (except for dogs) they do not require verbal communication in order to interact with them. Also, the interaction with pets is by its nature mainly based on haptic sensations, such as feeling the soft fur while stroking a pet or feeling the pet breathing and its warmth while it is lying in one’s lap. Thus, deafblind people can enjoy owning a pet and interacting with it (almost) as much as sighted and hearing people do. Cats are especially convenient pets for homebound deafblind people, as cats are quite independent and need less activity and training than dogs.

1.1 Problem Domain

Resulting from the situation described above, deafblind people and cat ownership were chosen as the focus of the design work of this thesis. Even though deafblind people are presumably able to care for and interact with their cat quite independently, some difficulties and constraints might still occur due to their disability. For example, for deafblind people it is hard to know:

• Where the cat currently is

• What the cat is currently doing

• If the cat is sleeping and there is a risk of disturbing or frightening it

• If the cat is lying on the floor and there is a risk of stepping on it

• If the cat feels sick or if there are any other problems

• If the cat wants to enter or leave the apartment

In addition, it is generally hard for them to feel the cat’s presence.

This is where Interaction Design comes into play, as it can help to find possible solutions for the problems stated above. Nowadays, computer-based assistive systems and devices play an important role in supporting (deaf)blind people in their everyday lives. They enable and support communication, access to information, and navigation and orientation. These assistive devices mainly work based on tactile interfaces and interactions, addressing the haptic senses of their deafblind users.

Therefore, the human haptic sense has gained more and more interest within the Interaction Design and Human Computer Interaction community. With this thesis located in this context, the research question described in the following section evolved.

1.2 Research Question

How can an interactive device support a deafblind cat owner to gain greater awareness about his* cat by providing tactile information about the cat’s activities?

* NOTE: For easier readability, the male gender form is used throughout this work. The work thereby also refers to female persons and persons of other genders.

1.3 Aim and Contribution

Resulting from this research question, the aim of the design work conducted in the frame of this thesis is to develop an interactive assistive device that extends the deafblind cat owner’s awareness regarding his cat. By doing so, it supports the cat owner in taking better care of his cat, as he gets to know more about the cat’s needs and activities. Also, it helps the cat owner to avoid potential risks of harming the cat. But not only the cat benefits from this extended awareness. It is also considered to have a positive impact on the cat owner himself, as the interactive device supports him in perceiving the cat’s presence and therefore creates a better relation, fosters the feeling of companionship and makes him feel less lonesome.

From an Interaction Design perspective, the design work aims to make a contribution to the field of tactile interfaces and interactions as well as to the broader field of designing assistive technological devices for people with disabilities. Through investigating various tactile modalities and different types of tactile interfaces and evaluating their strengths and limitations in conveying certain information, a contribution is made to the emerging field of including haptics in Interaction Design and computational technology in general.

Furthermore, people with physical and/or mental impairments and their special needs are often forgotten when it comes to the design of new technology. Therefore, the design work of this thesis, which addresses the specific and rather small user group of deafblind people, is seen as an important contribution towards making Interaction Design more inclusive and diverse.

1.4 Ethical Considerations

The design work in this thesis follows a user centered design process. The core of this approach is to include the intended users as much as possible in the design process, especially in the user research phase at the beginning and the evaluation phase at the end. However, due to the very specific and rather small user group of deafblind people, their limited communication abilities and the current COVID-19 situation, it was not possible to get in direct contact with deafblind people (especially not with deafblind cat owners) at any time during the design process. From an ethical point of view, one could now ask whether it is legitimate to perform user centered design without asking and involving the actual users. On the other hand, one could then ask what the alternative would be: not designing for this user group at all?

The author of this work is convinced that, even though it is not possible to perform user centered design in the classical sense, this should not exclude this user group from design activities, as they have the same right as everyone else to be considered in design and technology and therefore also deserve to be designed for. Thus, well aware of the fact that the actual users are missing from the process, the author constantly attempted to get as close as possible to the user group during the design process. In order to do so, experts on deafblindness were consulted, experience stories of deafblind people and their relatives were examined, and user tests were conducted with blindfolded participants. Here again, the author and participants were fully conscious of the fact that, just by being blindfolded, a person will never be able to step into a deafblind person’s shoes and experience the implications of being (deaf)blind. Yet, this conveyed valuable insights and helped to improve the tactile sensations and was therefore seen as a useful and legitimate method.

Besides deafblind people, the second stakeholder of the system is the cat. As for humans, ethical guidelines also have to be followed for non-human species. Mancini (2011) provides various ethical considerations and guidelines for animals in the context of Human Computer Interaction. However, they are not discussed in further detail here, as the cat’s part of the system is not in the focus of this thesis and as the cat is not affected in any direct way by the designed technology (as described in section 2.6.2).

During all the research activities that involved participants in some way, a strong focus was set on data protection. Therefore, none of the interviews and user tests were recorded; only written notes were taken. The data gathered through the online survey was stored on an external hard drive and will be deleted after the completion of this thesis. Prior to all the research activities, participants were informed that their participation is completely voluntary, that they can quit at any time and that the generated data is only used in the frame of this thesis. Furthermore, they were asked for verbal consent.

Due to the current COVID-19 situation, most of the research activities were done remotely. However, as it is hard to test tactile modalities and sensations remotely, in-person user tests were also necessary. During these tests, sufficient distance between the tester and the participants was ensured and hand sanitizer was provided.

2. Background

2.1 Deafblindness

2.1.1 Definition of Deafblindness

The Nordic definition of deafblindness (as cited in Gullacksen et al., 2011, p. 13) is as follows: “Deafblindness is a distinct disability. Deafblindness is a combined vision and hearing disability. It limits activities of a person and restricts full participation in society to such a degree that society is required to facilitate specific services, environmental alterations and/or technology.”

This definition does not just define deafblindness as a combination of visual and auditory impairments but also reveals consequences of deafblindness for deafblind people as well as for society. According to this definition, a deafblind person is not necessarily fully deaf and blind. Most deafblind people have some residual vision and/or hearing, yet there are some who are totally deaf and blind (Gullacksen et al., 2011). However, even with some vision and/or hearing left, deafblind people cannot automatically make use of services and tools that are made for people with either visual or hearing impairments, as “the combination of impairments mutually reduces the prospect of using the potential residual vision or hearing” (Göransson, 2008, p. 22). This means that deafblind people lack the ability to compensate for the two senses that carry information from a distance (as a deaf or blind person would do with the respective functioning sense). Instead they need to rely on the senses that carry information within reach, like the tactile, kinesthetic and haptic senses, smell and taste (Gullacksen et al., 2011). Additionally, deafblindness can be accompanied by further disabilities, especially if it is caused by a genetic syndrome or brain injury that also causes other cognitive and/or physical disabilities (Miles, 2008). As Gullacksen et al. (2011, p. 14) summarize, deafblindness must therefore “be regarded as a distinct disability, in which the consequences are far more extensive than the sum of its different parts”.

When speaking of deafblindness, a distinction is made between people who are born with deafblindness or have lost (parts of) vision and hearing at an early age, and people who have acquired deafblindness and thus developed one or both impairments later in life (Göransson, 2008). The latter are mostly older people with age-related causes for both impairments, but acquired deafblindness can also be caused by an illness, an accident or a syndrome. Usher syndrome is the most common reason for acquired deafblindness among younger people (Gullacksen et al., 2011). When in a person’s life deafblindness develops, how severe the impairments are and whether a person was born blind and lost hearing later or vice versa all lead to different implications for the development and everyday life of that person.

2.1.2 Implications

Communication and interaction with other people

Seeing the other person’s facial expressions and body language as well as hearing the other person’s voice, its tone and its strength are the major elements of human communication and interaction. People with deafblindness cannot receive (most of) this information, which severely restricts their ability to communicate and socialize with others and therefore their participation in their social context (Gullacksen et al., 2011). Thus, people with deafblindness need to make use of other methods for communication, depending on how their impairments have developed. Most people who became deafblind later in life are able to speak their culture’s spoken language. As they lose the ability to receive spoken language, they have to use different strategies and assistive technologies. These include, among others, the manual alphabet (a part of sign language), letters written in their hand or on other parts of the body, or the use of a Braille system. Most people who grow up deaf and become visually impaired later in life use sign language for direct communication, while most of them are also able to read and write the culture’s spoken language. With no residual vision left to use, they receive sign language tactually by feeling the other person’s signs with their hands. For people with congenital deafblindness and children on a pre-linguistic communication level, the development of a tactually based communication is essential. In general, deafblind people try to make use of their residual vision and hearing as much as possible when communicating and interacting with other people (Göransson, 2008).

Accessing information

For people with deafblindness it is hard to get access to the kinds of information that sighted and hearing people take for granted. For example, they mostly do not have access to information from the internet, TV, radio, newspapers and books. This also limits their ability to gain knowledge and therefore further reduces their ability to participate in current discussions in society (Gullacksen et al., 2011).

Orientation and mobility

Another major consequence of deafblindness is the loss of mobility. Whereas people who are either deaf or blind are able to compensate for the impaired sense and therefore maintain their mobility to a large extent, deafblind people become dependent on support. This can be transport services, personal guides or personal assistants. The loss of mobility therefore comes along with a loss of freedom and independence (Gullacksen et al., 2011). Recently, various work has been done on assistive technology that helps (deaf)blind people to orientate and navigate. Section 2.3 provides two examples thereof.

2.1.3 Psychological and Social Consequences

All the constraints of deafblindness lead to severe psychological and social consequences. Especially for people who become deafblind later in life and who have to experience the progress of deterioration, this becomes a huge psychological strain. They feel anxious and insecure about their future, have existential thoughts and experience identity crises (Göransson, 2008). Further, they have to constantly adjust their life and relearn many things people take for granted, like communicating, writing and reading (Gullacksen et al., 2011). They might also lose a lot of their social relationships and most of the activities they were participating in, which can result in loneliness and depression (Altshuler, 1978). All this does not only affect the life of a deafblind person but also has a strong impact on the people surrounding them, like their family and friends. As Miles (2008, p. 2) describes, people who are deafblind to a great extent “must depend upon the good will and sensitivity of those around them to make their world safe and understandable”.

Yet not all deafblind people are per se depressed or suffer from a lack of social relations. Many deafblind people have managed to cope with the everyday struggles over time and have reached a fairly high quality of life (Miles, 2008). They especially find comfort in their home environment, where they are able to move independently and to do different productive tasks (Altshuler, 1978). Important factors for a high quality of life are that deafblind people accept themselves and their unique experiences in the world, that they receive education that helps them to maximize their abilities to communicate and to do productive tasks, and that they live in families, communities or social groups where they are valued as individuals (Miles, 2008).

Nowadays, computer-based assistive technology plays another important role in supporting deafblind people to communicate, access information and navigate in the world, and therefore in enhancing their independence and quality of life in general (Göransson, 2008). These systems help deafblind people to substitute the missing or impaired senses by mainly addressing their haptic sense. So does the interactive system that is designed in the frame of this thesis. Therefore, the next section takes a closer look at how the haptic sense can be addressed by interactive technology. Furthermore, state-of-the-art examples in the context of assistive tactile devices for blind and deafblind people are presented in section 2.3.

2.2 Tactile Interfaces

2.2.1 Definition of Tactile Interfaces

The human haptic sense was for a long time unexplored and underrated in the field of Human-Computer-Interaction (HCI), as contemporary human-computer-interfaces mainly address the visual and auditory senses. However, recently there has been a growing interest in including haptics in human computer interactions, either to enhance visual and auditory information by a new communication channel or to substitute them (MacLean & Hayward, 2008). When speaking of the human haptic sense, a distinction can be made between two modalities. The first is the kinesthetic sense, which describes the conscious and unconscious experience of body movements and forces. The second is the tactile sense, which is the sense of touch mediated by the skin (Benali-Khoudja et al., 2004; MacLean & Hayward, 2008). Mainly the latter is addressed in human-computer interactions through tactile interfaces. Tactile interfaces can be defined as interfaces that “comprise hardware and software components aiming at providing computer-controlled, programmable sensations of mechanical nature, i.e., pertaining to the sense of touch” (Hayward & Maclean, 2007, p. 88). They are applied in a wide range of areas like entertainment, virtual reality applications, navigation technology and medical technology (Pasquero, 2016). Further, they also find application in sensory substitution for people with visual and hearing impairments, which is of special interest in the frame of this thesis.

2.2.2 Types of Tactile Interfaces

In HCI research there are numerous approaches and examples of how various tactile stimuli can be generated through technological and electromechanical systems. Benali-Khoudja et al. (2004) as well as Pasquero (2016) provide an extensive overview thereof. Most attempts focus on devices that stimulate the hand and especially the fingertips because of their high tactile acuity. Yet other regions of the human body are also considered, such as the back, the torso, the thighs and even the tongue and mouth (Pasquero, 2016). In the frame of this thesis, only the most common approaches and those most relevant for this work are described in further detail.

Vibrotactile interfaces

Vibrotactile interfaces use vibration patterns to stimulate the human haptic sense. Vibrotactile stimulation is currently the most widespread means of providing haptic feedback (Hayward & Maclean, 2007). Most familiar is its application in mobile phones, where the vibration function provides invisible and silent notifications. Also, in controllers for video games and virtual reality applications, vibrotactile feedback is well established to enhance the game experience (Pasquero, 2016). From a technical perspective, there are different kinds of vibration motors that generate vibrotactile stimulation. A big advantage of these actuators (and probably the reason for the popularity of vibrotactile feedback) is that they are cheap, straightforward to use and program, and small in size and therefore suitable for small devices (Hayward & Maclean, 2007). Depending on its application, the “attention-grabbing nature of vibrotactile feedback” (Spiers & Dollar, 2017, p. 18) might be a potential downside of this kind of tactile stimulation.

Distributed tactile displays

Distributed tactile displays work with an array of electromechanical pins that rise out of a surface in order to create a representation of a texture or a shape (Pasquero, 2016). The pins create spatially distributed sensations at the skin’s surface, usually on the highly sensitive fingertip (Hayward & Maclean, 2007). The best-known examples of distributed tactile displays are Braille displays for blind people. These displays work with a 2x4 array of raised or absent dots to display the tactile Braille alphabet. Figure 1 shows a common Braille display and keyboard.

The technology behind these surface-changing arrays is quite complex. A lot of research has been done on developing different kinds of actuators that, for example, work with electromagnetic technology (solenoids, voice coils), Shape Memory Alloys, piezoelectric crystals or pneumatic systems (Hafez, 2007). A lot of research has also gone into different resolutions of the matrices in order to display more complex textures or even imagery. The resolution of distributed tactile displays (the number of pins in the matrix) is indicated in taxels, which stands for tactile pixels (Benali-Khoudja et al., 2004).
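The 2x4 dot array and the notion of taxels described above can be illustrated with a minimal Python sketch. It is not taken from any cited work: the function names are hypothetical, and the dot numbering (dots 1 to 3 and 7 in the left column, dots 4 to 6 and 8 in the right column) is an assumption that follows the convention of the Unicode Braille Patterns block rather than any specific display hardware.

```python
# Minimal sketch: one Braille cell as a 4-row x 2-column array of taxels.
# All names are hypothetical; dot numbering follows the Unicode Braille
# Patterns block (U+2800 onwards), where dot n corresponds to bit n-1.

BRAILLE_BASE = 0x2800

# Dot number -> (row, column) inside the cell.
DOT_POSITIONS = {
    1: (0, 0), 2: (1, 0), 3: (2, 0), 7: (3, 0),
    4: (0, 1), 5: (1, 1), 6: (2, 1), 8: (3, 1),
}


def cell_to_grid(raised_dots):
    """Return the cell as a grid of 0 (pin lowered) and 1 (pin raised)."""
    grid = [[0, 0] for _ in range(4)]
    for dot in raised_dots:
        if dot not in DOT_POSITIONS:
            raise ValueError(f"invalid dot number: {dot}")
        row, col = DOT_POSITIONS[dot]
        grid[row][col] = 1
    return grid


def cell_to_char(raised_dots):
    """Return the Unicode Braille character for a set of raised dots."""
    mask = 0
    for dot in raised_dots:
        if dot not in DOT_POSITIONS:
            raise ValueError(f"invalid dot number: {dot}")
        mask |= 1 << (dot - 1)
    return chr(BRAILLE_BASE + mask)


def taxel_count(rows, cols):
    """Resolution of a distributed tactile display in taxels."""
    return rows * cols


if __name__ == "__main__":
    print(cell_to_grid({1, 4}))   # pins raised for the letter "c"
    print(cell_to_char({1, 4}))
    print(taxel_count(4, 2))      # taxels in a single Braille cell
```

A display with more cells or a denser pin matrix simply has a larger taxel count, which is where the technical complexity mentioned above comes from: every taxel needs its own actuator.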

Figure 1: Braille display and keyboard, (HumanWare, 2020)

Because of their immense technical complexity, distributed tactile displays are very expensive and therefore (apart from Braille systems) they have hardly made it to the commercial market (Pasquero, 2016). Further, these displays require a lot of training of the fingertip’s tactile acuity and thus are hard to use. Pasquero (2016, p. 12) therefore concludes that “most of the distributed tactile displays built to this day fail to convey meaningful tactile information and to be practical at the same time”.

Thermal interfaces

Thermal feedback, which is the heat transfer occurring between the fingertip (or other parts of the human skin) and a surface, plays an important role in exploring and distinguishing different materials and surfaces. Hence, this modality is also interesting in the context of tactile interfaces (Hafez, 2007). To technically develop a thermal interface, heat can be generated quite easily with Peltier modules, whereas coldness is harder to produce. Some attempts have been made using an air flow generated by a ventilation system or using water cooling, where cold water is pumped through a hose (Gallo et al., 2012). Even though thermal interfaces, and especially heat, are relatively simple to implement, it is hard to present information dynamically, as heating up and cooling down the interface takes a comparatively long time (Pasquero, 2016).

Shape changing interfaces

Shape variations are encountered frequently in daily life. They can be perceived by humans with little effort and thus play an important role in tactile sensations. Therefore, interfaces that are based on shapes and shape changes provide a naturalistic way of representing and communicating information (Spiers & Dollar, 2017). Shape changing interfaces use physical change of shape as a way to encode information (Bau et al., 2009). The shape change is controlled through direct or indirect electrical stimuli that enable the object to return to its initial state and to repeat the shape change (Coelho & Zigelbaum, 2010; Rasmussen et al., 2012). As illustrated in figure 2, Rasmussen et al. (2012, p. 736) describe eight types of changes in shape that can be applied to interfaces: Orientation, Form, Volume, Texture, Viscosity, Spatiality, Adding/Subtracting, and Permeability. Further, they emphasize that from an interaction point of view, not only the two (or more) end states of a shape are highly interesting, but also the dynamics of change and therefore the way a shape transforms from one state to another. Thus, shape changing interfaces are meaningful and versatile tools for communication and expression. Coelho and Zigelbaum (2010, p. 172) describe three ways in which shape changing interfaces can communicate information: “[by] acquiring new forms which in themselves carry some kind of meaning; [by] using motion as a way to communicate change; and [by] providing force-feedback to a user”.

The shape transformation in shape changing interfaces can serve either as an input or as an output modality, which distinguishes them from the other types of tactile interfaces shown above, as these serve mainly as mere output modalities. Another advantage is that “once a shape is assumed by the interface, the information represented by that shape will continue to be communicated to the user without any active stimulation” (Spiers & Dollar, 2017, p. 18). Compared to a vibrotactile interface, which constantly has to vibrate in order to communicate information, shape changing interfaces can communicate in a more subtle and less attention-demanding way.

Figure 2: Eight possible types of change in shape changing interfaces, (Rasmussen et al., 2012)

Shape changing interfaces can be technically implemented in numerous ways through various actuators. Servo motors, for example, can mechanically extend, move or rotate an object (Spiers & Dollar, 2017; Stanley & Kuchenbecker, 2012). Through inflating or deflating as well as through electromagnetic actuators, objects can be enlarged or reduced in size (Bau et al., 2009). Depending on what kind of shape and transformation should be achieved, physical and technical constraints are considered potential drawbacks of shape changing interfaces (Coelho & Zigelbaum, 2010).

2.2.3 Tactile Communication

After focusing on tactile interfaces from a more technical point of view, it is also important to take a closer look at how tactile communication works in general. This section gives a basic overview. In this context it is interesting to first look at the differentiation between active and passive touch as proposed by Gibson (1962). Active touch refers to the action of actively touching and exploring. Thus, the receiver (the touching person) himself is responsible for the tactile stimulus he receives on his skin. Passive touch, in contrast, is caused by an outside agency, and therefore the receiver does not make any active movements to receive a tactile stimulus. Hence in the case of active touch a person is touching, whereas in the case of passive touch a person is being touched (Gibson, 1962). Thus, for passive touch to work, the tactile interface has to be in continual contact with the receiver, so that signals will not be missed. Generally, active touch can be seen as the predominant and more naturalistic kind of tactile perception (MacLean & Hayward, 2008). Applied to the context of tactile interfaces, a Braille display is an example of active touch, as a person has to actively explore the surface of the display with his finger in order to read the Braille letters. Accordingly, the vibrotactile stimulus of a mobile phone is an example of passive touch.

With these two modalities of tactile perception in mind, the next question is how tactile interfaces can be used to communicate various kinds of information. According to MacLean & Hayward (2008) there are two major categories of tactile signals: simple signals and abstract informative signals.

Simple signals convey information mainly through a binary on/off state. Again, the vibration of a mobile phone is an example. If the mobile phone vibrates, the vibrotactile stimulus conveys the information, that there is an incoming call. If there is no vibration, nobody is calling (MacLean & Hayward, 2008). The authors suggest, “that simple signals are preferable to complex signals when 1) they are all that can be reliably detected, due to limitations of either hardware or context of use […], 2) only limited information need be conveyed, or 3) a strong, fast, and accurate user response is needed” (Hayward & Maclean, 2007, p. 108).

Abstract informative signals are more complex than simple signals, as they can vary in additional parameters and thereby encode additional meaning (MacLean & Hayward, 2008). Sticking to the example of a mobile phone’s vibration, a certain vibration pattern could convey information about an incoming text message, whereas another pattern could inform about an incoming call. Thus, information is attached to the abstract tactile stimuli (different vibration patterns), and this mapping has to be learned and interpreted by the mobile phone’s user.
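The difference between the two signal categories can be made concrete with a short Python sketch. It is only an illustration under stated assumptions: the event names, pulse durations and helper functions are hypothetical and are not taken from MacLean & Hayward or from any real phone API. A simple signal is modelled as a single on/off pulse, while abstract informative signals are modelled as distinct pulse patterns that a user would have to learn.

```python
from typing import Dict, List, Tuple

# One pulse = (vibrate_ms, pause_ms). All values below are made up
# for illustration only.
Pulse = Tuple[int, int]

# Simple signal: a binary on/off state, e.g. "someone is calling".
SIMPLE_SIGNAL: List[Pulse] = [(500, 0)]

# Abstract informative signals: different patterns carry different meanings.
ABSTRACT_SIGNALS: Dict[str, List[Pulse]] = {
    "incoming_call": [(400, 200), (400, 200), (400, 0)],
    "text_message": [(100, 100), (100, 0)],
}


def total_duration_ms(pattern: List[Pulse]) -> int:
    """Length of a pattern in milliseconds, including pauses."""
    return sum(on_ms + off_ms for on_ms, off_ms in pattern)


def to_timeline(pattern: List[Pulse], step_ms: int = 100) -> List[int]:
    """Sample a pattern into motor states (1 = vibrating, 0 = off)."""
    timeline: List[int] = []
    for on_ms, off_ms in pattern:
        timeline += [1] * (on_ms // step_ms)
        timeline += [0] * (off_ms // step_ms)
    return timeline


if __name__ == "__main__":
    for event, pattern in ABSTRACT_SIGNALS.items():
        print(event, to_timeline(pattern), total_duration_ms(pattern), "ms")
```

In this sketch the meaning lies entirely in the lookup table: the motor produces the same physical sensation in every case, and only the learned association between pattern and event turns it into information.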

This idea of attaching information to brief abstract tactile stimuli is the core of haptic icons (Maclean & Enriquez, 2003). The concept basically follows the same principle as that of well-established graphical or auditory icons. Maclean & Enriquez (2003, p. 2) define haptic icons as “brief computer-generated signals, displayed to a user through force or tactile feedback to convey information such as event notification, identity, content or state”. These tactile signals can either be experienced passively or be explored actively (Maclean & Enriquez, 2003). Haptic icons can be based on metaphorically derived symbols or on more arbitrarily assigned associations (MacLean & Hayward, 2008). Symbolic metaphoric icons are more intuitive and easier to learn and remember than abstract assigned associations, yet they are harder to scale to a large set of icons. With regard to the design of haptic icons, Pasquero (2016, p. 7) provides important rules similar to the design principles for graphical and auditory icons. They should be “practical, reliable, quick to identify and pleasant to the tactile sense without being too distracting. Therefore, they should be designed with consideration for context and task […]. In order to bring added value to an interaction, haptic icons must be easy to learn and memorize; they must carry evocative meaning or at least convey a discernible emotional content. Finally, they should be universal and intuitive, while, at the same time, support increasing levels of abstraction as users become expert through repeated use”.

2.3 Related Work on Tactile Assistive Devices for Blind and Deafblind People

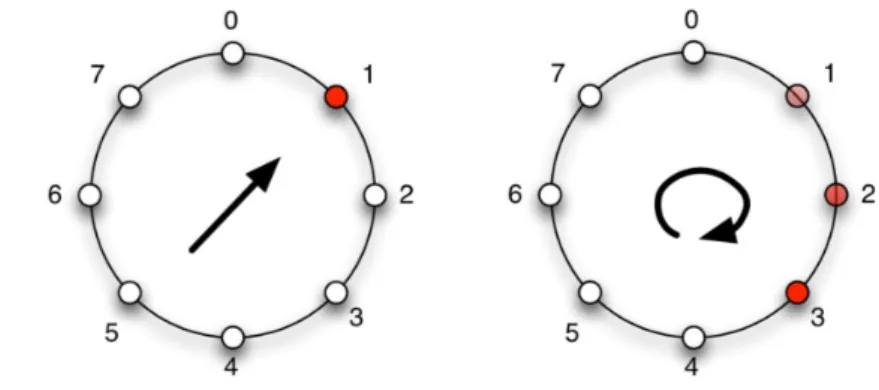

2.3.1 Vibrotactile Navigation Belt

In research, a lot of work has been done on tactile assistive technology for wayfinding and navigation. One example is the work of Flores et al. (2015), who developed a vibrotactile interface in the form of a belt for guiding blind and deafblind walkers. Their goal was to design an interface “that would be natural, discreet, easy to equip, and would have sufficient resolution for our purpose” (Flores et al., 2015, p. 308). The interface consists of eight small vibration motors that are placed evenly around a waist belt. Based on information from an external localization system that measures the position and orientation of the person wearing the belt, the belt gives direction signals by activating the vibration motors accordingly. To do so, the authors defined three types of control: directional, rotation and stop. These controls are communicated by the belt through different vibration signals (figure 3). The results of their tests with blind users show that the system works successfully.

Figure 3: Continuous directional signal and rotation signal from the belt’s vibration motors, (Flores et al., 2015)

Regarding the design of the interface, Flores et al. (2015, p. 308) made different considerations:

• Ergonomics: The belt needs to be comfortable, easy to put on, and easy to remove.

• Self-sufficiency: The belt needs to include power, communication, and control units for wireless operation.

• Resolution: The belt needs to incorporate a sufficient number of actuators to provide a good direction resolution.

• Aesthetics: The belt should be visually appealing with hidden electrical and mechanical components.

This is an interesting example because it deals with location and orientation: the wearable conveys spatial information through different vibration signals and patterns. In addition, the considerations regarding the design attributes and the waist belt as a wearable device served as important inspiration for the design work of this thesis.
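How a directional signal could be mapped onto eight evenly spaced motors can be sketched as follows. This is not the implementation of Flores et al. (2015); the motor numbering (motor 0 at the front, indices increasing clockwise) and the function name are assumptions made for illustration.

```python
NUM_MOTORS = 8  # eight motors placed evenly around the belt


def motor_for_bearing(bearing_deg: float, num_motors: int = NUM_MOTORS) -> int:
    """Return the index of the motor closest to a relative bearing.

    bearing_deg is the target direction relative to the wearer's heading
    (0 = straight ahead, 90 = to the right). Motor 0 is assumed to sit at
    the front, with indices increasing clockwise.
    """
    sector = 360.0 / num_motors  # angular width covered by one motor
    # Shift by half a sector so each motor covers the range centered on it.
    return int(((bearing_deg % 360.0) + sector / 2) // sector) % num_motors
```

With eight motors each one covers a 45 degree sector, which illustrates the "resolution" consideration: more actuators give a finer direction signal.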

2.3.2 Animotus

Another example from the context of assistive navigation technology for blind and deafblind people is the Animotus by Spiers & Dollar (2017). The Animotus is a cubic hand-held device that provides a shape-changing interface through the static bottom half and the movable top half of the cube. Based on external information about the walker’s position, the Animotus provides navigational information about the direction and distance of the target by extending and rotating the upper half (figure 4). Two servomotors (one for the extension and one for the rotation) make it possible to display this information dynamically. Extension communicates the distance to the target and rotation the direction in which a blind person has to walk. When the Animotus is back in its original cube shape, the target has been reached.

Since many tactile assistive devices rely on vibrotactile feedback, the Animotus was considered an interesting example, as it conveys information through another tactile modality, namely shape change. Rotation in particular is quite simple to implement and is therefore interesting for the design work of this thesis. This approach could also easily be combined with vibrotactile feedback in order to convey additional information.
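The mapping from navigation data to the two shape parameters can be sketched as below. This is a simplified illustration and not the controller of Spiers & Dollar (2017); the coordinate convention, the normalization of the extension to the range 0 to 1, and the parameters `max_distance_m` and `arrival_radius_m` are assumptions.

```python
import math
from typing import Tuple


def cube_pose(
    walker_xy: Tuple[float, float],
    heading_deg: float,
    target_xy: Tuple[float, float],
    max_distance_m: float = 10.0,    # assumed distance at which extension saturates
    arrival_radius_m: float = 0.5,   # assumed radius that counts as "target reached"
) -> Tuple[float, float]:
    """Return (rotation_deg, extension) for the movable upper half.

    rotation_deg lies in [-180, 180) relative to the walker's heading,
    extension lies in [0, 1]. (0.0, 0.0) is the plain cube shape.
    """
    dx = target_xy[0] - walker_xy[0]
    dy = target_xy[1] - walker_xy[1]
    distance = math.hypot(dx, dy)
    if distance <= arrival_radius_m:
        return 0.0, 0.0  # back to the cube shape: target reached
    # Bearing measured clockwise from the +y axis ("north").
    bearing = math.degrees(math.atan2(dx, dy))
    rotation = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    extension = min(distance / max_distance_m, 1.0)
    return rotation, extension
```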

2.3.3 Interactive Tactile Controller for Playing Online Board Games

Located in the context of leisure activities, Caporusso et al. (2010) developed a tactile interactive device that enables blind and deafblind people to play online chess. The device, which is operated similarly to a conventional computer mouse (figure 5), conveys different kinds of information through multimodal tactile feedback. One modality is vibrotactile feedback, which provides navigation feedback. When the mouse pointer is moved over the virtual chessboard by moving the device, vibration gives feedback, for example when the user moves the pointer off the chessboard. The second tactile modality is a Braille cell on top of the device. This provides positional information based on where the mouse pointer is placed on the chessboard. As can be seen in figure 6, this can for example be information about the squares and the pawns on them. As with an ordinary computer mouse, actions (e.g. moving the pawns) can be performed with two tactile switches.

Figure 4: Shape changes of Animotus (Spiers & Dollar, 2017)

This example was an important inspiration, especially because of its multimodality and the way different information layers are expressed through different tactile modalities (in this case Braille pins and vibrotactile feedback). The device designed in this thesis also has to manage different information layers and has to find ways to convey information in different tactile ways. One limitation of this example is that it uses Braille. Braille is quite hard to learn and therefore not all (deaf)blind people are able to read it. Thus, to make a device accessible to as many deafblind people as possible, Braille should rather not be used. Also, Braille technology is quite hard and expensive to implement.
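The separation of information layers across modalities can be illustrated with a small sketch: positional information goes to one channel, while leaving the board triggers the other. This is not the implementation of Caporusso et al. (2010); the board coordinates, the function names and the channel labels are assumptions.

```python
from typing import Optional, Tuple

FILES = "abcdefgh"


def square_under_pointer(x: float, y: float, board_size: float = 8.0) -> Optional[str]:
    """Return the square name (e.g. 'e4') under the pointer, or None if off the board.

    The origin is assumed to be the bottom-left corner of square a1.
    """
    if not (0 <= x < board_size and 0 <= y < board_size):
        return None
    cell = board_size / 8
    return f"{FILES[int(x // cell)]}{int(y // cell) + 1}"


def feedback(x: float, y: float) -> Tuple[str, Optional[str]]:
    """Choose the output channel: vibration off the board, positional cell on it."""
    square = square_under_pointer(x, y)
    if square is None:
        return ("vibrate", None)
    return ("braille", square)
```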

2.4 Animal-Computer-Interaction

2.4.1 Definition of ACI

Including animals in the field of HCI is a relatively novel approach and was introduced by Mancini (2011). With what she calls Animal-Computer-Interaction (ACI), the author established a new research field on how animal-centered interactive technology can be designed. Mancini (2011) proposes three different goals that can be pursued by the design of this technology. The first is to improve animals’ life expectancy and quality of life by fulfilling their physiological and psychological needs. The second is to support and empower animals (especially farm animals) in the legal context they are involved in. The third is to foster the inter-species relationship between humans and animals by enabling communication and promoting understanding between the two. Even though the technology is mainly designed for animal use and thus according to the animals’ needs, both animals and humans can benefit from it (Mancini, 2011).

2.4.2 Human-Pet-Computer-Interaction

As the field of ACI has evolved, a lot of research has been directed towards the design of technology that aims to foster human–companion animal interactions from which both human and animal can benefit. From this research, Human-Pet-Computer Interaction (HPCI) emerged as a subfield of ACI. HPCI shares similar objectives with ACI but places additional emphasis on “understanding how tech-

Figure 5 (left): Interactive tactile controller for playing online chess (Caporusso et al., 2010)

Figure 6 (right): Encoded Braille information (Caporusso et al., 2010)

which requires innovative approaches to meeting the needs of dynamic, intimate companionships” (Nelson & Shih, 2017, p. 173). The design of interactive technology is thus not just about “tracking pet attributes and relaying them to pet owners in meaningful and interesting ways, but also [about] inspiring new awareness or positive change in human-pet relationships” (Nelson & Shih, 2017, p. 173). Interactive technology therefore serves as a supplemental link between an owner and his pet that mediates and supports interactions between the two of them. The next section provides examples from research as well as commercial products in the field of HPCI that are of special interest in the frame of this thesis.

2.5 Related Work on Human Pet Computer Interaction Technologies

2.5.1 Pet Trackers

In the consumer market a wide range of pet tracking devices can be found, mostly aimed at dogs and cats. Well-known examples are products from FitBark, Tractive or Whistle (FitBark, 2020; Tractive, 2020; Whistle, 2020). With minor differences, all these products offer the same basic functionalities. The small wearable trackers are attached to a pet’s collar (figure 7). Through a mobile network connection, they are connected to associated apps on the pet owner’s smartphone. There, pet owners can find status, activity and health information about their pets. For example, all trackers provide real-time GPS tracking, which helps pet owners to locate a cat exploring the neighborhood or to find an escaped dog. Pet owners can also set virtual fences and thereby define areas in the neighborhood where the pets are allowed to go. If a pet leaves the predefined area, the owner gets a notification on his smartphone. Further, the trackers provide various activity and health monitoring functions that report information such as walking distance, burned calories or sleeping and resting times (figure 8). They also offer social media functions like sharing activity data or building a network with other pet owners in order to compare the pets’ activities.
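The virtual fence function described above can be sketched as a simple distance check on GPS coordinates. This is a generic illustration with a circular fence and the haversine formula; it does not describe how FitBark, Tractive or Whistle implement the feature, and the function names are assumptions.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def has_left_fence(pet: Tuple[float, float], center: Tuple[float, float], radius_m: float) -> bool:
    """True if the pet's position lies outside a circular fence around center."""
    return haversine_m(pet[0], pet[1], center[0], center[1]) > radius_m
```

A notification would then be triggered whenever `has_left_fence` changes from False to True for consecutive position readings.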

Pet trackers are interesting examples, as they reveal what ordinary pet owners are interested in tracking and knowing about their pet/cat. As the information is only presented on a screen, it is obviously hard for deafblind people to make use of these systems. It also became obvious that deafblind cat owners need different information than that provided by these examples. This is mainly because existing trackers focus on the outdoor context and therefore do not enable indoor localization, which is crucial for a system for deafblind people.

Figure 7 (left): Whistle pet tracker (Whistle, 2020)

Figure 8 (right): Cat health and activity monitoring in the Tractive app (Tractive, 2020)

2.5.2 Pet Cameras

Another type of pet Internet of Things (IoT) device is the smart pet camera, which comes with many additional features. In contrast to pet trackers, these devices are bidirectional, as they not only provide functions for monitoring but also enable pet owners to interact with their pet through the device. Examples are products from Furbo or Petcube (Furbo, 2020; Petcube, 2020). As with the pet trackers, all the pet cameras come with an individual app for the pet owner’s smartphone. In the app, pet owners can activate the camera, watch their pets and take videos and photos of them. Further, the cameras provide two-way audio, so that owners can listen to their pets and talk to them. In addition to the verbal interaction, some devices contain a treat dispenser (figure 9) that allows further interaction with the pet. Particularly for cats, Petcube (figure 10) offers a laser pointer function that allows owners to play with their cat while watching it on video.

These are interesting examples, as they show how smart devices can be useful for pet care and what technology-mediated interactions between pet owners and their pets can look like. Yet, the design work in this thesis aims to keep the interaction between a deafblind cat owner and his cat natural and physical and not mediated through the designed device.

2.5.3 Poultry.Internet System

An example from HPCI research is the work of Lee et al. (2006), which focuses on technology-mediated tactile interactions between humans and poultry in order to support remote connectedness. Poultry were chosen because they are among the worst-treated animals in modern society due to industrial meat and egg production. However, the authors point out that their interactive system and the underlying principles can easily be transferred to other pets such as cats and dogs. Lee et al. (2006) claim that basic solutions for monitoring pets remotely (e.g. video systems) provide too little sense of presence and no possibilities for tactile and tangible interactions with the pet. Therefore, they developed an interactive system that addresses this lack of tactile interaction possibilities.

The system consists of two parts: the “office system” on the human side (the idea is that a person is at work while interacting with the pet left alone at home) and the “backyard system” on the pet’s side. The two systems are connected via the internet. The backyard system is a mobile wearable in the form of a lightweight jacket that the chicken wears (figure 11). It contains vibrotactile actuators as well as electrodes that are attached to the chicken’s thighs to sense its walking movements. Further, the backyard is equipped with an array of cameras that enable computer vision tracking of the chicken’s position as well as real-time 3D renderings of the chicken. For the office system, Lee et al. (2006) developed two different modes. The first is an augmented reality application, where the pet owner can see a representation of the backyard with a real time

Figure 9 (left): Furbo pet camera and treat dispenser (Furbo, 2020)

Figure 10 (right): Petcube Play 2 cat camera, (Petcube, 2020)

to touch the virtual chicken, they attached a small wearable with an ultrasonic sensor and vibrotactile actuators to the owner’s finger. The ultrasonic sensor detects the finger’s position, and when the finger touches the virtual chicken, the actuators send a vibrotactile signal, so that the pet owner receives tactile feedback. At the same time, the real chicken receives a vibrotactile stimulus through its jacket, with the aim of giving the animal a feeling of being touched. Yet, the authors still found the AR system to lack a realistic tangible feeling of touch. Therefore, they developed a second mode that incorporates a physical, computer-controlled chicken doll on an xy-positioning table (figure 13). Based on the position of the real chicken in the backyard, the system changes the x- and y-position of the chicken doll on the table. Further, the doll is equipped with touch sensors all over its body. Depending on where the pet owner touches the doll, the system activates the vibration at the same spot in the real chicken’s jacket. To create a further tactile feeling of connection between the human and the chicken, they also attached electrodes to the pet owner’s feet. Depending on the chicken’s movements, the owner can feel a mild electrical stimulation on his muscles.

This example was considered very interesting in the frame of this thesis, as it brings together the two main topics: pets and tangible/tactile interfaces and interactions. Also, the goal of this example is to create a feeling of the pet’s presence, which is one of the aims of the interactive device that is to be designed. One limitation is that the owner’s part of the system is quite big and complex and therefore does not work as a wearable. Furthermore, in this example the interactions between owners and their pets are again mediated through technology, which is not intended in the design work of this thesis.
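The step of positioning the doll according to the real chicken's location can be sketched as a linear scaling from backyard coordinates to table coordinates. This is an illustrative assumption about how such a mapping could work, not the method documented by Lee et al. (2006); all dimensions and the function name are hypothetical.

```python
from typing import Tuple


def backyard_to_table(
    pos: Tuple[float, float],
    backyard_size: Tuple[float, float],  # assumed backyard width and depth in meters
    table_size: Tuple[float, float],     # assumed table travel in meters
) -> Tuple[float, float]:
    """Scale a backyard position linearly to a position on the xy table."""
    bx, by = backyard_size
    tx, ty = table_size
    # Clamp to the backyard bounds so the doll never leaves the table.
    x = min(max(pos[0], 0.0), bx)
    y = min(max(pos[1], 0.0), by)
    return (x / bx * tx, y / by * ty)
```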

2.6 Implications and Further Considerations Regarding the Design

The literature review and the investigation of relevant existing examples led to some further considerations regarding the design of the interactive device. This section describes the most important considerations regarding the user group, the cat’s role in the system and the device itself.

2.6.1 User Group

As can be concluded from the description of deafblindness at the beginning of this section, it is hard to define a “typical” deafblind user to design for. Deafblindness can have different causes and can develop at different stages in life. Thus, the living situation of these people varies a lot: some live with their families or partners, some manage to live on their own and some live in protected communities. Also, most deafblind people have some residual vision and/or hearing left. However, it varies from person to person which and how much of both senses are left and to what degree they can make use of them.

Figure 11 (left): Vibrotactile chicken jacket, (Lee et al., 2006)

Figure 12 (center): 3D rendering and vibrotactile wearable on the finger (Lee et al., 2006)

Figure 13 (right): Chicken doll on the xy-positioning Table (Lee et al., 2006)

In order to handle those uncertainties and get a clearer direction for the design, two decisions were made. First, the interactive device is intended for people who became deafblind later in life and elderly people who acquired age-related deafblindness. Many of them try as much as possible to live their normal lives with their families/partners or alone, in their own house or apartment. It was assumed that these were most likely the ones who would keep a pet and who might be experienced pet owners. Second, the device should be usable for all deafblind people within this user group, regardless of how much vision and hearing they have left. It should therefore be fully usable through the haptic senses alone. Yet, visual and auditory signals can (and should) be used to complement and support the tactile sensations.

2.6.2 The Cat’s Role

Besides the deafblind person using the device, his cat is another important stakeholder of the design. Tracking the position and activities of the cat is the major input part of the system. As the examples described in sections 2.3.1 and 2.5.3 (e.g. Flores et al., 2015; Lee et al., 2006) show, precise (indoor) positioning is technologically very complex and mostly requires many hardware components. Existing solutions work with technologies like camera or infrared tracking, WiFi, RFID or Bluetooth (Mautz, 2012).

Going into further technological details is beyond the scope of the design work. Therefore, in the frame of this thesis, the ideal condition is assumed that all activities can be tracked in detail through a tracker (e.g. WiFi or RFID) on the cat’s collar in combination with other smart IoT devices in the apartment. Also, the cat owner would need to be equipped with a location tracker in order to receive information about his position in relation to the cat.

Also important to mention is that, apart from the fact that the cat needs to wear a tracker on its collar, it will not be further affected by the system (at least not from a technological side). The interaction between a cat owner and his cat naturally addresses mainly the haptic senses, and many aspects of a deafblind person’s life are already mediated through technological aids. Therefore, the decision was made to keep the interaction between the owner and his cat natural and not technologically mediated in an HPCI sense (section 2.4.2) or as in the poultry example from Lee et al. (2006) (section 2.5.3). Thus, the interactive device will work in a more unidirectional way, where the cat and its activities serve as input and the cat owner receives information about the cat as the output. Yet, by gaining a greater awareness of his cat, the cat owner is able to take better care of it, which in return fosters their relationship and positively contributes to the cat’s quality of life, which are some of the goals of ACI.

2.6.3 The Interactive Device

Regarding the interactive device, it became apparent that it needs to be a wireless wearable device. One reason is that passive touch (as explained in section 2.2.3), for example generated through vibration signals, is considered to play an (important) role in the device’s tactile interface. Yet, for passive touch to work, the tactile interface needs to be in continual direct contact with the receiver (cat owner), as otherwise signals might be missed. Furthermore, as described above, the location of the owner in relation to his cat plays an important role for the system (e.g. so that the device can inform the owner if the cat is close to him). Therefore, the device would need to move together with the cat owner in order to be able to communicate this location-specific information. The cat owner’s location tracker could thus be embedded in the wearable.
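The location-specific information mentioned above (informing the owner when the cat is close) can be sketched as a proximity check on the two tracked positions. The sketch assumes ideal indoor positions in a shared 2D coordinate system in meters, in line with the idealized tracking assumed in section 2.6.2; the class name, the event labels and the two thresholds are hypothetical. Using two thresholds means an event is only emitted when the state changes, so the wearer is not signaled repeatedly while the cat lingers near the boundary.

```python
import math
from typing import Optional, Tuple


class ProximityNotifier:
    """Emit an event only when the cat enters or leaves the owner's vicinity."""

    def __init__(self, near_m: float = 1.0, far_m: float = 1.5) -> None:
        self.near_m = near_m  # assumed distance below which the cat counts as close
        self.far_m = far_m    # assumed distance above which the cat counts as away
        self.is_near = False

    def update(self, owner_xy: Tuple[float, float], cat_xy: Tuple[float, float]) -> Optional[str]:
        """Return 'cat_near', 'cat_away', or None if the state did not change."""
        distance = math.dist(owner_xy, cat_xy)
        if not self.is_near and distance <= self.near_m:
            self.is_near = True
            return "cat_near"
        if self.is_near and distance >= self.far_m:
            self.is_near = False
            return "cat_away"
        return None
```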

One implication of the decision to design a wearable device that is in constant contact with its user is that the tactile signals generated by the device need to be designed carefully. For example, conveying all information in the form of vibration signals might result in a very distracting device that constantly claims the user’s attention. Especially because a cat is probably not the most important aspect in a deafblind person’s life, information regarding the cat should be communicated in a more unobtrusive and subtle way.

3. Methods

3.1 Online Survey and Secondary Research

In order to get a general and broad overview of what it means to be a cat owner and to care for a cat, an online survey was conducted. As it was not possible to get in contact with deafblind cat owners, the survey was shared amongst ordinary cat owners. Surveys and questionnaires are common and efficient tools for collecting data in a short time frame, typically from a large sample of respondents (Martin & Hanington, 2012). The collected data can comprise various types of qualitative and/or quantitative information, depending on the type of questions asked in the questionnaire. Crucial for the success of a survey are therefore carefully constructed questions as well as the overall design of the survey. Surveys and questionnaires can stand alone but are commonly supplemented with additional data collected through other methodologies (Martin & Hanington, 2012). In the frame of this work, the online survey was supplemented by an expert interview as well as secondary research as explained in IDEO (2015). Online research in different forums and blogs was done in order to gain further specific information regarding (deaf)blindness and pet ownership.

3.2 User Journey Mapping

A User Journey Map was created in order to collect and arrange all the information gained in the user research phase. It helped to map out various cat activities, the owner’s needs and actions and further displayed the aspects where the interactive wearable can and should come into play. A User Journey Map is a way to visualize the user’s journey from start to finish, with the aim to highlight and understand various stages, steps and touchpoints the user needs to go through in order to complete a task (Marquez et al., 2015). Additionally, the user’s actions, experiences, feelings and emotions can be included. A User Journey Map therefore helps designers to point out where along their journey interactive technology can support users in their needs and gives information on how these interactions should be designed (Martin & Hanington, 2012). In case of this thesis, caring for a cat and various cat activities are not tasks that start or finish at some point, yet the principles of the User Journey Map could be adapted for this context, as can be seen in section 3.2.

3.3 Expert Interviews

Since it was not possible to get in direct contact with deafblind people, and as they are in general a specific user group to design for, expert interviews were conducted in order to get specific information and experiences about the user context in question. Expert interviews were conducted at different stages of the design process: in the user research phase, during the sketching phase and for an evaluation of the final concept and prototype. Especially in the inspiration phase of a design process, expert interviews are valuable tools to gain key insights into the context from different perspectives and views. They can also be helpful for gaining specific technical advice later in the design process. It is thereby important to carefully determine the kind of expert that should be interviewed, in order to gain the information needed in each individual phase (IDEO, 2015). Furthermore, the

like a verbal questionnaire. However, unstructured or semi-structured interviews allow more flexibility and feel more like a natural conversation (Martin & Hanington, 2012). The interviews conducted in the frame of this thesis were semi-structured. Some questions had been prepared, but a strong focus was placed on conducting the interviews as a more natural and casual conversation. Therefore, only notes and no recordings were made during the interviews.

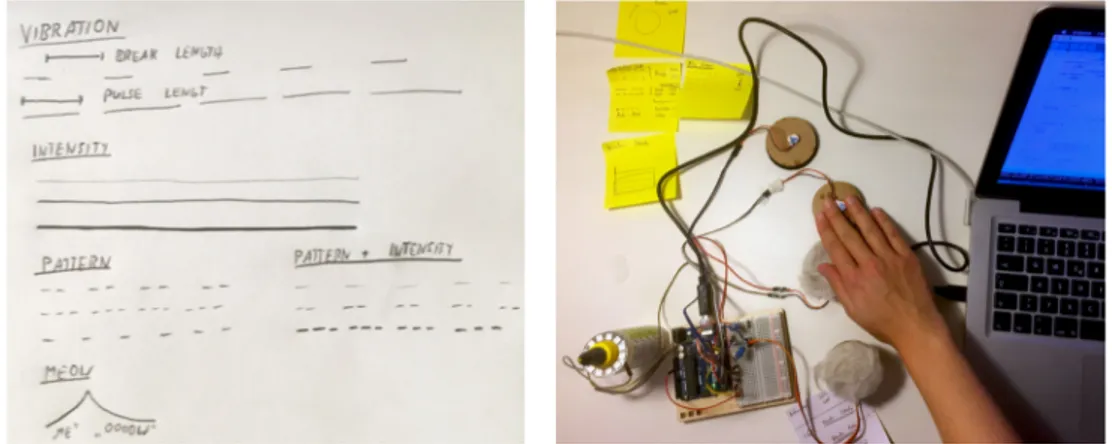

3.4 Sketching and Prototyping

During the ideation phase a wide range of sketching and prototyping activities were undertaken. Thereby different kinds of sketches helped to explore and develop various ideas and concepts. Quick paper drawings and scribbles helped to visualize first ideas and thoughts. Digital sketches and working with microcontrollers in combination with various actuators helped to get the first glimpse of the technical possibilities and limitations. Hardware and material explorations helped to gain first experiences of various tactile modalities and sensations. Furthermore, sketches and prototypes helped to get user feedback and evaluation at different stages of the design process.

Sketches and prototypes are both important manifestations of design concepts. Even though the separation line between them is quite blurry, they are not the same, as they serve different purposes at different stages of a design process (Buxton, 2007; Mousette, 2012). The main distinction can be seen in what Mousette (2012, p. 80) calls the “radiation pattern”. While a sketch is mainly made and most useful as a tool of reflection for its creator, a prototype is made to communicate something and to reach and affect others. Therefore, sketching largely dominates the early design phases, where it is all about enabling and exploring ideas quickly and cheaply with a minimum of details, whereas more refined prototypes provide an important basis for evaluation and user testing in the later stages of a design process (Buxton, 2007).

It is also important to mention, that sketching in the context of interaction design does not solely refer to the activity of drawing. It rather incorporates general representational activities and skills that help working towards inventive and creative purposes (Mousette, 2012). Especially if leaving the domain of traditional (graphical) user interfaces towards the design of haptic interfaces and tactile interactions, there is a need to open up the notion of “sketching”. Mere visual and symbolic representations (like drawings and scribbles) are insufficient in this context. It requires extensive explorations of various tactile stimuli and sensations as well as explorations of the materials and hardware they can be generated with (Mousette, 2009).

Regarding prototypes it is important to focus on the purpose of a certain prototype and therefore the question “what it prototypes” (Houde & Hill, 1997, p. 1). Houde & Hill (1997) provide a model with three dimensions of a prototype: role, look & feel and implementation. Role refers to the question of how a designed artefact is useful for its intended users. Look & feel refers to the sensory experiences (what a user sees, hears, feels, …) while using an artefact. Implementation refers to the way the artefact works and focuses more on its technological components. While designing an interactive artefact, depending on the design question, prototypes can focus on one or more of these dimensions in order to get specific answers regarding the design question.

4. Design Process

4.1 Design Process Model

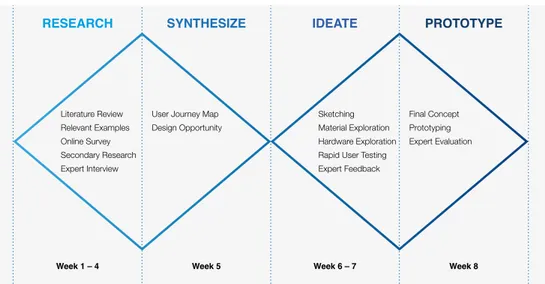

The user-centered design process of this thesis follows the well-established double diamond model. Figure 14 gives an overview of the four phases of the design process and illustrates at which stage of the process the previously described methods were applied. It further gives a rough temporal estimation regarding the time frame of this thesis. With this overview in mind, the following sections provide detailed descriptions for each phase: how the different methods were applied, what results they led to and how this affected the next steps.

4.2 User Research

4.2.1 Online Survey

In order to design an interactive wearable that gives a deafblind cat owner a greater awareness of his cat, an overview of what it means to be a cat owner and to care for a cat is needed first, as well as solid knowledge about the various activities of a cat. Since it was not possible to find and get in contact with deafblind cat owners in order to investigate their firsthand experiences, an online survey was shared and conducted amongst ordinary cat owners. The goal was to gain a broad and general overview of cat ownership in order to transfer these findings to the context of deafblind cat owners. Therefore, the survey contained various open questions, which gave the respondents the possibility to answer freely in their own words. The survey was divided into four parts, addressing different fields of interest.

1. Caring for a cat and cat habits:

General questions about the most important points of caring for a cat, different habits of cats and concerns of cat owners. The goal was to get a basic overview.

Figure 14: Double diamond design process. Research (week 1 – 4): literature review, relevant examples, online survey, secondary research, expert interview. Synthesize (week 5): user journey map, design opportunity. Ideate (week 6 – 7): sketching, material exploration, hardware exploration, rapid user testing, expert feedback. Prototype (week 8): final concept, prototyping, expert evaluation.

2. Use of cat activity trackers:

Questions about the imagined use of cat activity trackers and what kind of information respondents would be interested in. The goal was to get a feeling for what ordinary cat owners would need/use cat activity trackers for.

3. Associations with different cat activities:

Respondents were asked what they associate with different cat activities. The goal was to later use these associations as an inspiration for the design of tactile sensations representing the different cat activities.

4. Blindness and cats:

Respondents were asked about their experiences of meeting their cat in darkness at night (as a kind of simulation of being visually impaired) and about the problems they imagine blind cat owners to have. The goal was to get insights closer to the context of (deaf)blind cat owners, based on the respondents’ experiences.

The survey was hosted on the sunet.se survey portal and was set up in German, as it was only shared amongst German cat owners. A detailed introduction and information regarding data protection was provided. Screenshots of the survey can be found in the appendix.

Since the survey only served to get some first qualitative insights, it ran for only four days and therefore reached a rather small sample size of ten respondents. Yet valuable insights could be gained that can serve as an important starting point for the following design activities. Nine of the respondents were experienced cat owners (> 3 years), whereas one was quite a new cat owner (< 1 year). The following tables, one for each of the four categories of the survey, present the results, which were summarized and translated by the author of this thesis.

1. Caring for a cat and cat habits

Most important factors for the cat
• Food and water
• Going outside
• Health
• Litter box
• Human company, interaction, affection and comfort

Worries and concerns of cat owners
• Wrong diet
• Locking cat up or out
• Frightening the cat e.g. with sudden loud noises
• Not recognizing a sickness or pain
• Cat having an accident while owner is at work
• Spending too little time at home
• Stepping on the cat’s tail

What the cat does if it wants to interact with the owner
• Meowing
• Moving to owner and stroking around the owner’s legs
• Tries to get attention in various ways: touching legs with head or “attacking” feet with claws
• Jumping on the owner’s lap
• Licks the owner’s feet

2. Use of cat activity trackers

Would be interested in accessing information about
• Food and water consumption and availability
• Movement and activity
• Sleeping times
• Location if cat wasn’t at home for a longer time
• Location in the neighborhood
• Sickness

Would like to get actively notified in case the cat
• Is looking for the owner
• Wants to enter or leave the apartment
• Is stuck somewhere and needs help
• Is sick or has pain
• Leaves the backyard
• Misses the owner

Tables 1 – 4: Results of the

4.2.2 Expert Interview and Secondary Research

Besides the investigation of cat ownership, further secondary research was conducted in order to learn more about the general living situation of deafblind people and to find more specific information regarding pet ownership among (deaf)blind people. As part of this, an interview was conducted with an expert from the Nationellt kunskapscenter för dövblindfrågor (NKCDB). It revealed that it is quite hard to define a “typical” deafblind person, as life situations and stories vary a lot from person to person. In most cases, people become deafblind later in life, and therefore elderly people are often affected. What all these people have in common is that they try to stay as independent as possible and to continue their regular life as far as they can. One example the expert gave was the story of a man who had been a car mechanic all his life. Even after becoming deafblind and almost completely losing his sight and hearing, he continued fixing cars.

3. Associations with different cat activities

Eating and drinking:

• Special taste and smell
• Calmness
• Always if possible and everywhere
• Very important
• Ritual
• Picky

Sleeping:

• Various places
• Whole day long
• Satisfied and relaxed
• Has a calming effect
• Calmness, peace and comfort
• Preferably in my lap
• Pleasure
• In human company in the bed

Cat wants to interact:

• Meowing and purring
• Stroking around legs
• Cuddling in the morning
• Jumps on lap
• Connection
• Licks the owner’s feet

Cat strolls around neighborhood:

• Natural habitat
• Freedom
• Worries
• Catching mice
• Gets fed by other people
• Worried about dogs
• Cat’s world I’ll never discover

Cat strolls around the neighborhood:

• Tapping on balcony door or window with its paw
• Clearly shows its needs
• Sitting and waiting in front of the door
• Meowing loud and demanding
• Obtrusive

4. Blindness and cats

Encountering cat in darkness at night:

• Cat was really active
• Cat was frightened
• Both were frightened
• Stumbling over the cat
• Cat lying on the floor and sleeping

Imagined problems deafblind cat owners would face:

• Stepping on the cat
• Frightening the cat
• Location of cat in the apartment / room
• Would not notice if cat makes a mess or destroys something
• Cat is really sovereign – should work well
• Wouldn’t see if cat is injured

No information could be found regarding deafblind people and pet ownership. Research on blind people and pet ownership revealed some information about the relationship between blind people and their guide dogs, which is really strong and emotional. Blind people also manage to care for their guide dogs themselves, yet this is hard to compare with keeping an ordinary pet, as guide dogs are highly specialized and trained.

4.3 Synthesis

4.3.1 User Journey Map

In order to collect and structure all the information gained in the user research phase, an adjusted version of a User Journey Map was created, as can be seen in figure 15. The starting point of this map was the various activities and states of a cat that were extracted from the user research phase (white sticky notes). They were arranged in three big clusters: indoor activities, outdoor activities and health state. The next step was to analyze the deafblind cat owner’s potential problems, needs and questions with regard to the displayed cat activities and states (yellow notes). Furthermore, the points were identified at which action or attention from the cat owner is required (orange notes).

The situations in which the cat requires action or attention from its owner are potentially the points where deafblind people face the most difficulties and problems, and where they would therefore need an assistive device. Thus, the next step in the map was to analyze where and how an interactive device can support the cat owner in gaining a greater awareness of the cat and its needs. It turned out that an interactive assistive device would have two main communication tasks. The first is to inform the deafblind cat owner about the cat’s activity or state by displaying the corresponding information. This is rather peripheral information that does not require the owner’s constant attention. Therefore, it should be displayed by the interactive device and its tactile interface in a subtle way and/or only upon request. The second task is to actively provide notifications in case the cat requires the owner’s attention or action.

This differentiation between displaying peripheral status information, which is less important and urgent, and actively notifying the owner to get his attention, was seen as an important starting point for the design process. Therefore, the next step was to extract and define the requirements and design opportunities regarding the interactive device.

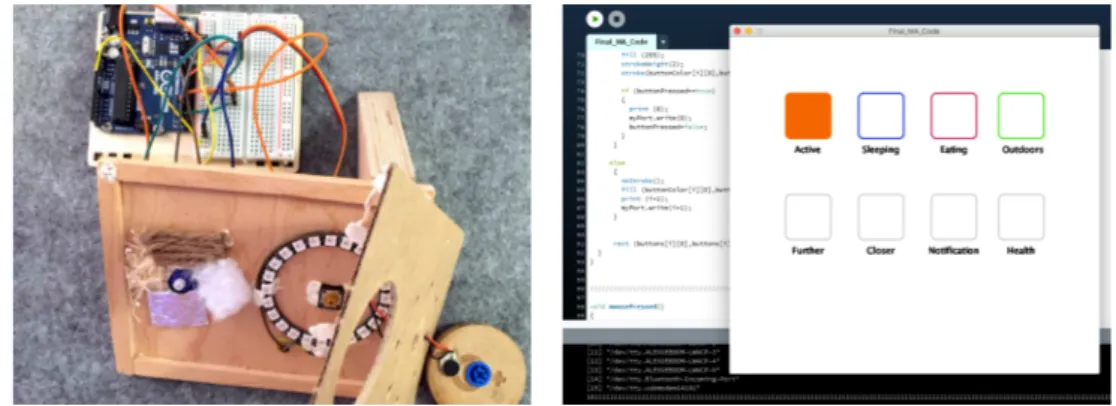

4.3.2 Design Opportunity

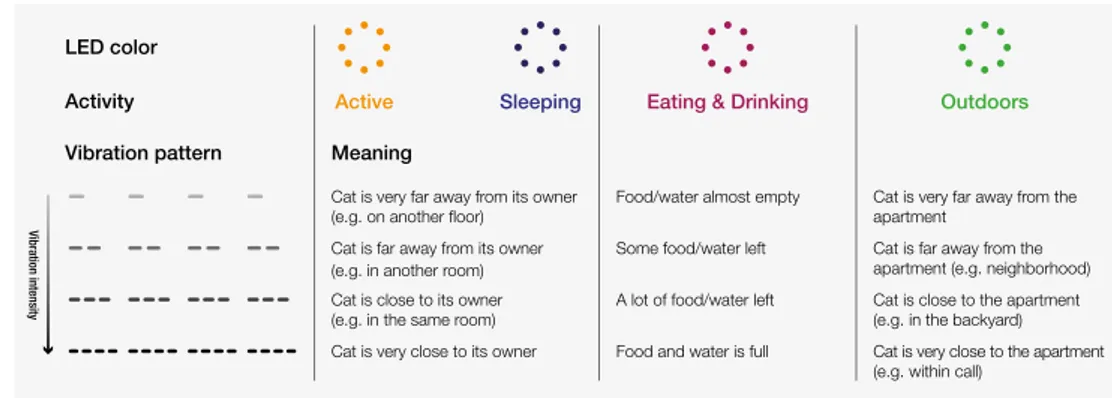

As can be seen in figure 16, there are three information layers. The first layer consists of the four relevant (activity) states a cat can have. The second layer contains additional information regarding those states. These two layers refer to the informative task of the device. The third layer refers to the notification task of the device. Depending on the state of the cat, specific notifications can be necessary. Notifications about health-related problems are independent of the cat’s state.

These three information layers, and the question of how they can be displayed by the interactive device’s interface through different tactile modalities, were determined as the design opportunities for the following ideation and design process.
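To make the layering more concrete, the three information layers and the two communication tasks can be sketched as a small data model. This is only an illustrative sketch, not the implementation of the prototype: all names (`ActivityState`, `Notification`, `CatStatus`, `tactile_messages`) are hypothetical, and the assignment of notifications to activity states is an assumption read from the layout of figure 16.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Optional, Set, Tuple


class ActivityState(Enum):
    """Layer 1: the four activity states a cat can have."""
    ACTIVE = "active"
    SLEEPING = "sleeping"
    EATING_DRINKING = "eating & drinking"
    OUTDOORS = "outdoors"


class Notification(Enum):
    """Layer 3: events that require the owner's attention or action."""
    OWNER_AND_CAT_CLOSE = "owner and cat are getting close"
    FOOD_OR_WATER_EMPTY = "food/water empty"
    WANTS_IN_OR_OUT = "cat wants to get in / out"
    HEALTH_PROBLEM = "health related problem"


# ASSUMPTION: this state-to-notification mapping is inferred from figure 16.
STATE_NOTIFICATIONS = {
    ActivityState.ACTIVE: {Notification.OWNER_AND_CAT_CLOSE},
    ActivityState.SLEEPING: {Notification.OWNER_AND_CAT_CLOSE},
    ActivityState.EATING_DRINKING: {Notification.FOOD_OR_WATER_EMPTY},
    ActivityState.OUTDOORS: {Notification.WANTS_IN_OR_OUT},
}
# Health-related notifications are independent of the cat's state.
STATE_INDEPENDENT = {Notification.HEALTH_PROBLEM}


@dataclass
class CatStatus:
    state: ActivityState
    detail: Optional[str] = None  # Layer 2, e.g. "close", "far" or an amount


def allowed_notifications(state: ActivityState) -> Set[Notification]:
    """Notifications that can occur in the given state."""
    return STATE_NOTIFICATIONS[state] | STATE_INDEPENDENT


def tactile_messages(
    status: CatStatus, pending: Iterable[Notification] = ()
) -> List[Tuple[str, str]]:
    """Return (channel, text) pairs: notifications first, then the status.

    "active" stands for a signal that demands attention, "subtle" for the
    peripheral status display. Both channel names are placeholders.
    """
    valid = allowed_notifications(status.state)
    messages = [("active", n.value) for n in pending if n in valid]
    text = status.state.value
    if status.detail is not None:
        text += f" ({status.detail})"
    messages.append(("subtle", text))
    return messages


if __name__ == "__main__":
    status = CatStatus(ActivityState.OUTDOORS, "far")
    for channel, text in tactile_messages(status, [Notification.HEALTH_PROBLEM]):
        print(channel, text)
```

Keeping the notification layer separate from the status layers mirrors the distinction between peripheral information and active notifications. How each channel is rendered through tactile modalities is left open here, as that is the subject of the ideation phase.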

4.4 Ideation & Prototyping

Based on the design opportunities that came out of the user journey map, the ideation phase started. The core of this phase was a wide range of sketching activities that helped to explore different kinds of tactile sensations with their benefits and limitations. The activities included paper scribbles and drawings, material explorations, and Arduino-based digital sketches with various actuators. The explorations and sketches helped to develop ideas and concepts for how different cat activities and related information can be represented through various tactile modalities. Rapid user testing and expert feedback based on those ideas and sketches helped to make design decisions regarding the final prototype that was developed.

Figure 16: Three layers of information regarding the cat

Activity states: Active, Sleeping, Eating & Drinking, Outdoors

Additional information: Close (same room, around owner) <–> Far (other room); Amount; Close (backyard) <–> Far (neighborhood)

Notification: Owner is getting close to cat or cat is getting close to owner; Food/Water empty; Cat wants to get in / out; Health related problem