Privacy management in a digital age: A study of alternative conceptualizations of privacy in digital contexts

Linnea Åkerberg

Master thesis, 15 credits, advanced level

Media Technology: Strategic Media Development

Malmö University

September 26th, 2018

Supervisor: Suzan Boztepe

ABSTRACT

Digital technologies are challenging the notions of integrity. This is evident from people's constantly increasing use of digital services and products, which means that these services and products continue to develop to fit users' behavior patterns and thus meet individual demand. What developers and users have failed to take into account during this development, however, is the matter of privacy, where the limits between personal information and public information lack clear boundaries. The aim of this master thesis is to collect valuable insights into users' perception of integrity and privacy in both digital and analog contexts. By using mixed methods with a reversed exploratory sequential design approach, it was possible to explore and map out users' perceptions and the prerequisites for when and under what circumstances they choose to share private data. To fulfill the purpose of this study, an online survey and an adapted Cultural probe were conducted. The results of these methods then became the basis of a design process that proposes how alternative integrity concepts can be constructed.

Acknowledgments

I wish to express my sincere gratitude to my supervisor, Suzan Boztepe, for invaluable thoughts and advice throughout the research process. I would also like to thank my family and my fiancé Jon, who have supported and encouraged me along the way.

Table of Contents

1. Introduction

1.1 Background

1.2 Aim of the study

1.3 Research question

1.4 Overview of the thesis

1.5 The social change

1.6 The matter of convenience

1.7 The Facebook scandal: Cambridge Analytica

2. Theoretical Framework

2.1 Literature review

2.2 What is privacy?

2.2.1 Definitions

2.2.1.1 Integrity

2.2.1.2 Privacy

2.3 A timeline of privacy and integrity

2.3.1 The notion of privacy from the 50’s and forward

2.3.2 The notion of privacy from the 80’s and forward

2.3.3 Privacy in general from then to now

2.4 Privacy and new technology

2.4.1 Embodied privacy vs. digital privacy

3. Privacy in practice

3.1 General Data Protection (GDPR)

3.1.2 Privacy by design

3.2 End-user License Agreements

3.3 Clickwrap and Shrinkwrap Licenses

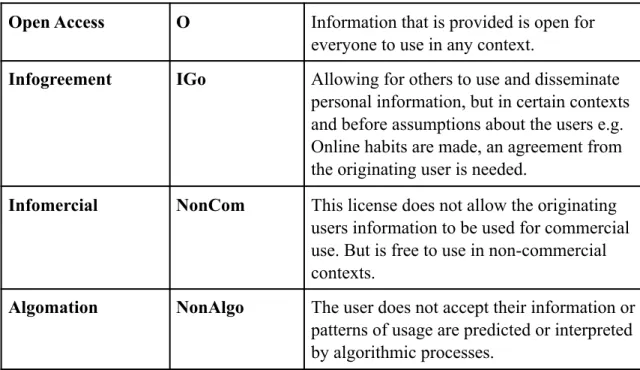

3.4 Alternative concepts of privacy

4. Method

4.1 Research design

4.2 Online Survey

4.2.1 Sampling

4.2.2 Data collection

4.2.3 Data analysis

4.3 Cultural probe

4.3.1 Sampling

4.3.2 Data collection

4.3.3 Data analysis

4.4 Ethical considerations

4.5 Limitations

5. Propositions: Alternative concepts of privacy

5.1 Creative commons as a concept of privacy

5.2 Digital ecosystems

5.4 Remediation

6. Cultural probe: Scenarios and alternative concepts of privacy

6.1 Results of the Cultural Probe

6.1.1 Scenario I

6.1.2 Scenario II

6.1.3 Scenario III

6.2 Findings and discussion

6.2.1 Survey findings

6.2.2 Cultural Probe findings

6.2.3 Discussion

6.2.3.1 Disclosure and presentation of the self

6.2.3.2 Convenience

6.2.3.3 Determination of the digital self

6.2.3.4 The impact of the GDPR

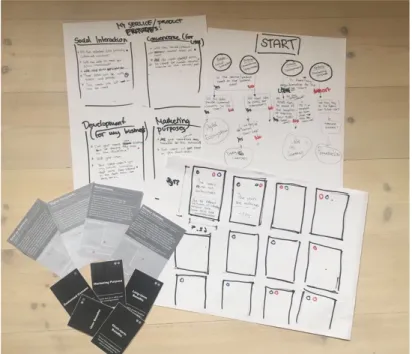

7. Prototype: The Toolkit for Decision Making on Digital privacy

7.1 Construction of the prototype

8. Conclusion

8.1 Future work

9. References

List of figures

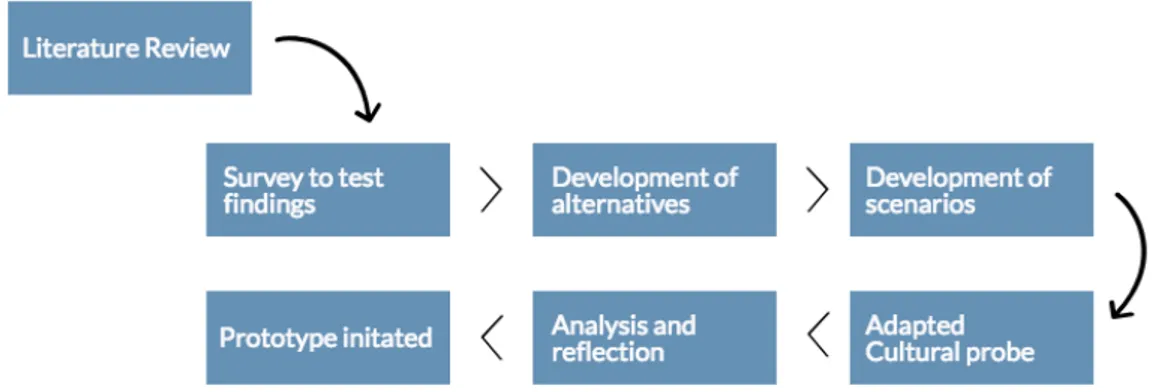

Figure 1: A model of the research process

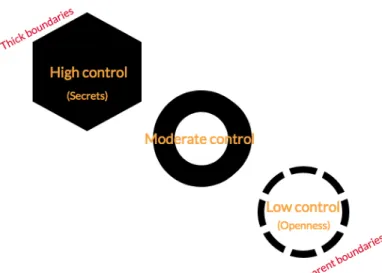

Figure 2: Levels of control: Privacy Boundaries

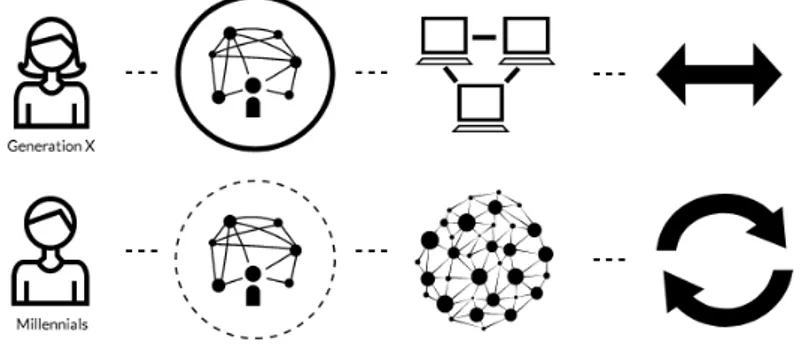

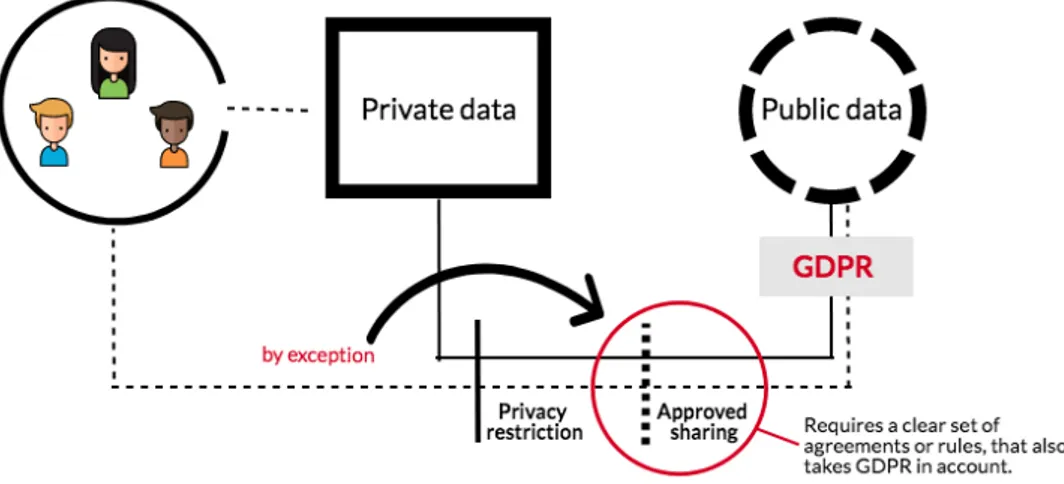

Figure 3: Perception and need for privacy depending on generation

Figure 4: Restrictions and obstacles between private and public information

Figure 5: The Research Design

Figure 6: Process of data analysis procedure

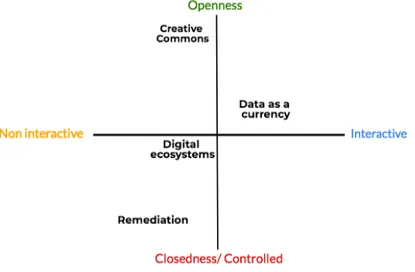

Figure 7: The four propositions of alternative privacy concept in dimension

Figure 8: The prototyping process

Figure 9: Decision card

Figure 10: Premise card

List of tables

Table 1: Concepts of privacy from the 50’s to present time

Table 2: Types of Creative Commons Licenses

Table 3: Types of suggested Privacy Licenses

1. Introduction

There is a gap, a privacy gap, in the digital era that concerns not only the individual user but also society in general. This gap involves new rules of conduct and new applications resulting from the development of new technology, which in turn determine what kind of information, and how much of it, users must give away for these technologies and services to be customized. Privacy has likely become an increasingly prominent issue because users may find it difficult to decide whether to maintain a higher level of integrity at the risk of being excluded from digital benefits such as customized services and content, as well as the social interaction that modern technology enables.

The Facebook Cambridge Analytica scandal, which came to light in early spring 2018, is one of the latest examples of a privacy breach. The whistleblower Christopher Wylie revealed that user data from about 87 million Facebook users had been harvested and used to gain political advantage in the 2016 American election campaign (Lindhe & Hamidi-Nia, 2018). The case exposed Facebook's lack of security and how easily it could be intruded upon, which in this instance allowed user information to be disclosed to third parties without major barriers (Jazeera, 2018). The scandal forced the social platform to take action and simplify users' access to their privacy settings. Despite the seriousness of this event, active users do not seem to demand stronger privacy terms or integrity-protecting measures, even though they never gave informed consent to the use of their information. Given that the European Union's new General Data Protection Regulation (GDPR), which came into effect on May 25th, 2018, stresses the importance of users' awareness of where and for what purposes their data is used, awareness of this issue deserves emphasis. The new regulation has received a tremendous amount of attention from companies worldwide. Is users' apparent passivity a sign that privacy as we know it is no longer considered valuable? Or could it be that digital technology and the social convenience that digital platforms provide outweigh privacy in such contexts? This event strengthened my curiosity about the situation and the privacy problem, and in particular about how users interpret, and possibly worry about, what might happen to their information.

Furthermore, there is an implied convenience for users who accept that their data is collected and saved: that data can simplify logins and other interactions on other platforms that share the same application programming interface as the platform that originally stored it. Convenience in this case means easy accessibility, primarily for users, but it also becomes a winning concept for third parties seeking access to personal information. This apparent win-win situation for users and companies is, among other things, what this paper will question, depending on the context.

This essay primarily focuses on privacy issues from the user's perspective, exploring how users interpret and value their integrity as digital technologies are increasingly introduced to the market. Is this a noticeable problem, or is the original concept of integrity a mere memory?

1.1 Background

Privacy has for some time been a well-discussed subject, in which several factors and opinions have shaped the concern in various ways, especially since constant connectivity became a fact. Given such a complex problem and the still largely unexplored notion of privacy from a digital perspective, researchers have suggested different theories depending on circumstances and contexts. One of them is José van Dijck (2014), whose theory advocates that there could be beneficial outcomes from introducing private information as a currency, allowing third parties to pay for access to private user information. Furthermore, Drucker (2014) argues that there is a difference between data collected with user approval and data that has been taken. She distinguishes these by calling approved data collection Data, and information taken without the users' consent Capta. Such reasoning also clarifies the circumstances when further exploring and developing the concept of integrity and technology.

Mapping the concept of privacy through theories ranging from the 1960s to the present shows that the subject has been discussed on most levels, and that since the beginning of the digital era the debate as a whole has changed and been questioned by different theorists. Westin (1967), one of the most prominent theorists on the subject in the 1960s, generally discusses the positive and negative characteristics of connected technologies that can affect human integrity. In the 1970s, Altman (1976) built on what Lewis regarded as a controlled society but focused instead on boundaries and what these could mean for people's and society's conception of privacy. In the 1980s, Habermas (1986) discusses the public sphere and where the boundary lies between what should be considered private and what should be considered public. Petronio (2002) continued Habermas's (1962) earlier research, questioning how the self can choose to disclose certain information and thus how each individual should handle their integrity. In the 2010s, both Christian Fuchs (2011) and José van Dijck (2014) continued to research alternative concepts of privacy and ways of valuing it so that its use is not seen as infringing on or exploiting people's private information. These proposals have remained within different economic perspectives, such as socio-economics and political economy. Fuchs (2011) writes in his conceptual paper that he wants to challenge the liberal notion of privacy and to establish a different, alternative conception of integrity. This review has thus provided a clear path for identifying the knowledge gap in this area. Taken together, the main researchers within this subject have touched upon the most important factors of privacy, but none of them addresses the subject from a user perspective, and none suggests how to reach an agreement on what a common understanding of privacy could look like and how it could be conceptualized, especially in digital

as proclaim an alternative common understanding that in particular can be used for digital purposes and contexts.

1.2 Aim of the study

Due to the extensive changes that digital technology has brought about, the tension between control and openness appears to have grown more complex. While digitization has opened up convenience and other benefits, it has also led to concerns regarding individual privacy, where the management of personal data within software can be questioned.

By exploring integrity as a concept and questioning how the term privacy is perceived by users of the internet and other connected devices, this study aims to discover how integrity as a phenomenon can be understood from a user-centered perspective. From these understandings, a set of reconceptualized alternatives for privacy will be created, each of which may suit different contexts. These alternatives will later become the components of a prototype toolkit that companies can use when designing new services or products.

1.3 Research Question

Integrity and privacy in present digital contexts are complex issues that involve several aspects depending on the situation, and probably also on which generation one belongs to. To understand how users conceive of the concept of privacy, this thesis sets out to answer two questions.

RQ 1: What alternative concepts of privacy can align with users' perceptions of privacy in a digital world?

To gain a deeper understanding of people’s perception of privacy in digital contexts, the following question will also be answered during the research process.

RQ 2: What notions of privacy and integrity are conceived by users in digital contexts?

1.4 Overview of the thesis

Figure 1. A model of the research process.

This model explains how the study is designed so that the research and the chosen methods can benefit from each other.

1.5 The social change

For the last couple of years, there have been multiple debates about personal integrity online, including whether it should be considered outdated, something people no longer need to care about. Since Facebook was released and became a popular social platform, what counts as private and what counts as public has been called into question.

Facebook's founder Mark Zuckerberg claims that privacy should no longer be considered a social norm (Johnson, 2010). This statement suggests that the digital transition has had, and will continue to have, a major impact on people's behavior, both in private and in social settings. Once again, this calls for reflection on the relationship between integrity and digital technology. Should we change our norms and our perception of integrity, or should we create new tools that help us maintain and own our integrity? The first option would require a major shift and new ways of thinking among active users of digital devices and platforms, as well as respect for the sensitive data that may thereby become public. Mai (2016) claims that if privacy is a bigger issue in a digital age, the existing laws and restrictions that currently protect users against integrity intrusion can be reintroduced into the information age. This would, however, require twice the security effort for this original concept to work. The belief that new ways of interacting and sharing information may change what is considered appropriate, private or public will require a certain social change, in which users should not see privacy as a tool for integrity intrusion but rather as an asset for communication and its opportunities.

Furthermore, new tools for understanding might help us respond to new kinds of social behavior and become aware of what these changes might bring. Privacy in digital contexts is also a growing problem that will continue to grow unless we start to strike a balance and become more aware of how our previous understanding of the matter can be tied to fast-growing technological concepts. As Agre (1994) suggests, the idea is not to change privacy regulations but to reconceptualize privacy, moving from a “Surveillance Model” to a structured “Capture Model”, so that our social norms can be better applied to modern, digital circumstances.

1.6 The matter of convenience

Does open access mean easy access?

The problem of integrity has many sides, which is why it is important to see the relationships between them and to weigh the pros and cons against each other in order to identify the issue and reach a conclusion. Another important question to consider before reaching such a conclusion is whether convenience can be considered a real issue at all, given its obvious value in particular contexts. From a holistic perspective, digital convenience offers many benefits to both users and companies, regardless of context. To achieve the right level of convenience without crossing into personal intrusion, technology and social norms must start adapting to each other, balancing opportunities against their characteristics, rather than re-creating a society in which privacy no longer exists. Since digitalization, we can do the same things we always did, as well as new ones. However, whatever we choose to do, we leave a digital footprint that companies want to use to make their services more accessible and tailored to our needs, as this is ultimately what gives them good profits and opportunities for further development (Banerjea, 2017).

Mark Zuckerberg claims that online users have gone from being very careful about what information they share online to being very comfortable and more open about sharing information in general. He also argues that the social norm as we know it is an evolving phenomenon that will look different over time (Johnson, 2010). This increased openness is likely related to generation, since not all users are comfortable sharing private information online. Another factor may be that users feel compelled to share certain parts of their data, as this is often a requirement for using, for example, social platforms, mobile applications and other services that offer fast management and customer support. Have users therefore become more comfortable, and less concerned with integrity, in exchange for quick shortcuts in their daily lives? If so, privacy in new technology should not be seen as a potential threat to human integrity but rather as a matter of adaptation, where exclusion can be a side effect if users actively opt out of technology. Privacy in a digital age is a complex concern that needs to be reviewed from every perspective involved, such as marketing, economics and a general social perspective. Given this complexity, introducing a stronger integrity plan that applies to all online activities could be beneficial. Such a plan could then be applied differently depending on the area and its usefulness there. Although convenience can be seen as a positive outcome of sharing private information, Petronio (2002) questions why people are willing to give away their integrity for the convenience and opportunities of modern technology. This raises the question of whether users are uninformed, and therefore show a somewhat uninterested attitude towards privacy and data ownership, and whether their data is worth paying with. Are users aware of the possible consequences of agreeing to terms without reading them, or do they simply not care?

These questions form the basis of my continued exploratory approach, both in the qualitative and quantitative research and as I move forward with the prototype.

1.7 The Facebook scandal: Cambridge Analytica

As mentioned in the introduction, the revelations about how Cambridge Analytica obtained Facebook user data have had a big impact on platform users in general and on their conception and awareness of privacy. The scandal became a hard hit both for about 50 million Facebook users, according to early reports (Facebook later estimated the figure at up to 87 million), and for the founder Mark Zuckerberg. The user data had been accessed through a downloadable app, a personality test created by the British academic Aleksandr Kogan (Jazeera, 2018), and it was this handling of user data that prompted the whistleblower Christopher Wylie to take action. The test required users to log in through their Facebook accounts. Zuckerberg was aware of this, but because Kogan claimed that the information would be used for academic purposes, it was allowed. Through the app, a large amount of information about logged-in users could be collected, including name, city, age, gender and which pages they had liked, as well as data about those users' Facebook friends (Schori, 2018). By forwarding this data to Cambridge Analytica, Kogan breached Facebook's rules. He also violated Facebook's terms on downloading information about users' friends; when Facebook discovered this, it demanded the immediate deletion of all data collected from the 50 million users, but without result. However, some media outlets argue that Cambridge Analytica neither committed a data breach nor stole the information, and that Facebook should instead take responsibility and the blame for the event (Campanello, 2018).

Opinions are split on whether Facebook should be blamed for the event. However, Facebook had intentionally made its application programming interface readily available to other types of services, and this access has now been cut off (Statt, 2018). A privacy issue like this one, concerning the ownership of information that was previously shared, is therefore worth investigating.

Since the application programming interface in use today evidently has loopholes that allow user integrity to be compromised, it needs to be reviewed and replaced with a new model or development process that gives users an overview of how their data can and will be used. The question is whether such an overview can be given in a legal document, which is how most end-user license agreements (EULAs) are designed today.

2. Theoretical Framework

In this section, I will discuss the future of privacy and integrity, drawing on existing research on this specific topic as well as on related issues. I will go deeper into privacy as a phenomenon and consider how it might affect modern technology. A selection of the most influential theorists on the subject provided a framework for my own research and helped identify an interesting field of research. Conclusions drawn from their theories also showed that there was reason to choose an approach with a stronger focus on users and their perception of privacy as a phenomenon. Furthermore, this part of the research will enable me to explore how related topics and concepts of privacy can be put into a new context, letting us see the issue in a different light. By providing an overview of the area and dividing it into sections, I intend to map out the problem area, summarize previous research and place my own conclusions and theories in a timeline.

2.1 Literature review

To clarify the background and research that was fundamental to the study, the following scholars and their theories have been important for building an understanding of the general phenomenon of privacy. In the 1960s, Westin's (1967) work on privacy and new technology centered on the concern of control by stakeholders of various kinds. Control still seems to be one of the most successful methods for developers and companies to attract users and keep them loyal. Google, for example, expanded its services and became indispensable online, and has thereby gained control over those who use its services on a daily basis (Statista, 2017). Altman (1976) speaks mainly of the need for personal space and the boundaries it implies; applied to the present, this suggests that social norms need to be reconfigured to fit the need for personal space in online contexts. Habermas's (1986) studies of what can be considered private and public, and of where our behavior and norms draw the line between open and closed, have had a big influence on my field of research.

Goffman (1956) stresses that how you want others to perceive you varies across contexts and that, depending on the situation, you can control what type of information and what impression of yourself you share with others. This theory also seems to correspond with the notion of integrity in digital, online contexts, where established social norms, in which privacy is key, are weighed against a certain online convenience: easy-access services that make people's lives more efficient as a whole. Given such control, a user adds a further layer on top of the general notion of integrity. As this timeline approaches the present, theories about how we interpret the concept of integrity in general, and in digital contexts in particular, have slowly become a new field of research that also tends to broaden the investigation of privacy. Petronio (2002) and Fuchs (2011) take different approaches to the subject, but their theories share a common denominator: both point out that alternative forms of, or views on, privacy should be reviewed and introduced.

Taken together, the scholars' various theories about privacy and integrity seem to point to a development that changes the way humans act, depending on the degree of social interaction. For this reason, the theorists and their concepts are structured as a timeline, mainly to map the differences and similarities they demonstrate and to identify a possible boundary between the embodied and the digital integrity that co-exist today. Whether the concept of integrity has changed further, and whether the need for privacy has been neutralized by the need for convenience, are two interesting areas to explore.

2.2 What is privacy?

Privacy can be explained as a principle of control, a human behavior, and a question of cultural background and social habits (Altman, 1967). It is hard to settle on specific theories and explanations of what privacy is and what it actually entails, because people perceive the phenomenon differently and have different needs for privacy. These needs are usually established fairly quickly during a social interaction between two or more people. But people's desire for privacy is never absolute, since participation and communication with other humans is as important a desire as keeping a certain integrity (Westin, 1967). Most theories of privacy, however, hold a more traditional understanding that focuses on the embodied notion of privacy. This can briefly be explained as the need for privacy when, for example, using the bathroom or engaging in other intimate activities, but also during personal conversations and other situations that one prefers not to share with other people. This behavior can be considered an innate human need. Digital privacy, on the other hand, is a somewhat unexplored area, and the boundary between the two is still unclear. The model that follows identifies the areas of privacy theorized by different researchers in specific decades. The highlighted parts are the matters of privacy that were chosen to be merged and studied further, since together they can provide a solid understanding of privacy in digital contexts and thus answer parts of the research question. The reason for structuring the theories as a timeline is thus to clarify where embodied privacy later becomes a matter of digital privacy.


2.2.1 Definitions. This essay addresses both integrity and privacy. Both terms are given a digital focus and, given the general topic of this paper, have become its main keywords. I refer to both throughout the thesis, partly because they supplement each other and partly because they carry different meanings depending on context.

2.2.1.1 Integrity. This term refers to the individual perspective: a person's perception of the general concept of privacy. It is thus used to include users, their conceptions of privacy, their need for a private life, and the situations in which the boundary between private life and social situations is distinct.

2.2.1.2 Privacy. This term, on the other hand, is used to describe the matter in general: the common definition of the concept, without reference to users' perceptions or feelings. It is thus used to explain the general concept and phenomenon, which is more suitable when discussing all parties involved (such as companies, third parties, developers and users).

2.3 A timeline of privacy and integrity

2.3.1 The notion of privacy from the 50’s and forward. Privacy and integrity are, as explained earlier, complex issues whose meaning differs depending on whom you ask. A shorter, less complex and purely conceptual definition describes privacy as the claim of individuals or groups to determine when, how and what information about them can be shared with others (Westin, 1967).

Altman (1976) similarly claims that privacy is about selective control, which can apply to different social units within the privacy phenomenon: individual to individual, individual to group, and so on. Privacy can further be described as a bidirectional process, involving inputs from others to the self as well as outputs from the self to others. It can also be an active and dynamic regulatory process, which likewise implies a certain selective control. This last process can be considered the most common form of privacy regulation, in which the self controls how much and what kind of information to share with others under different circumstances (Westin, 1970). Goffman (1956) stresses how the presentation of the self differs depending on context and social interaction, a theory also worth considering when discussing privacy in digital contexts, where the self is presented differently depending on the circumstances.

However, what these theorists' arguments lack is an account of whether privacy is a product of innate or learned social behavior. This may be an important factor for a general understanding of what the concept means to individuals and how they value privacy. It can also be asked whether privacy is really a matter of background and of how we perceive social benefits in a given situation.

Furthermore, an important factor to take into account regarding privacy and integrity is that the understanding and interpretation of the phenomenon can differ depending on culture. Dorothy Lee (1987) gives an example of the vast difference between privacy in American society and life on, for example, Tikopia in Polynesia. In American society, private offices are a mark of status, and a personal assistant or receptionist can shield the occupant from social interaction with others; for the inhabitants of Tikopia, by contrast, work is a socially conceived and structured concept, and working alone is considered a failure. This example clearly illustrates a cultural approach to the matter, although it might also reflect older generations' way of thinking. Another account of privacy comes from Margaret Mead's study of Samoa, where she describes a society in which the basic American concepts of privacy are largely unknown and where people hold different beliefs about, and approaches to, integrity than we are used to (Westin, 1967). “Privacy here is hardly known among them. [...] Curiosity is well developed and anything out of the ordinary, as an accident, a birth, a death or a quarrel, never fails to draw a crowd [...] They walk in and out of one other home’s without knocking on the door” (Mead, 2016). Although these

widespread, and it thus becomes difficult to draw conclusions about what privacy is and how it should be addressed in different contexts.

In a lecture given in 2012 about her book “Islands of Privacy”, Dr. Nippert-Eng explains that her extensive interviews showed her that privacy was immensely important to her participants in general. She therefore concluded that privacy is a subject that requires great focus and accuracy regardless of context or culture. This may, however, depend to a large extent on whether users are aware of the outcomes and how well informed they are about the possible circumstances (McLuhan, 1967).

This conclusion is important to take into consideration, since privacy clearly has a high priority for people in general. Individuals' behavior should therefore be adapted to the given information and context. Altman (1976) argues that privacy depends largely on the self's ability to regulate social participation and interaction, as well as what kind of information one wants to share with others. This can be described as a balance of openness and closedness: a privacy regulation process that involves the desired level of privacy and its outcomes.

2.3.2 The notion of privacy from the 1980s onward. The regulation of privacy can also determine what should be considered public or private information, and is thus one of the pillars of privacy as a concept. Habermas's work “The Theory of Communicative Action: Reason and the Rationalization of Society” (1986) has been significant for this era's perceptions of and conclusions about privacy, as it questions the private and public spheres and where to draw the line for communicating certain information. Habermas's provocative way of describing the private versus the public has had a big impact on how integrity and privacy are seen and dealt with, regardless of context. In today's society, awareness of this way of thinking is of utmost importance in social interactions, since these spheres can only be delimited by oneself: when, how and to what extent private information goes from being private to public. Petronio (2002) claims that if private information can be made the content of disclosure, it would allow us to explore and learn how to separate privacy and intimacy from each other. The question therefore remains whether, on the basis of these two theories, we can claim that privacy and integrity are controlled only by the self, rather than being a collective responsibility, e.g. for disclosure or privacy.

The following model shows different levels of individual control, depending on the preferred and achieved control of privacy.


Figure 2. Levels of control: Privacy Boundaries, based on Sandra Petronio's model (2002).

The different stages within this model reflect how privacy can be seen in general, but they can also be applied to the concept of openness and closedness, depending on the kind of interaction or context being discussed. The self does not control its privacy according to a single one of these levels; it depends on what kind of information is concerned, that is, which information you choose to keep from others and which you share. The upper level in this model includes secrets that you do not want to share with strangers or even close relatives, while other information that is considered personal may still be open to the public. This model may reflect an older approach to privacy regulation, yet some of its components can be used to understand the main context and gain insight into what integrity really means to us, and where the individual boundary between openness and closedness should be drawn, even from a digital perspective. What should also be investigated further as this research proceeds is millennials' (people born after 1982) perspective on privacy. Since many millennials were introduced to social media and other interactive platforms (such as the Swedish Lunarstorm, MSN Messenger, hamsterpaj.net, bilddagboken etc.) during their childhood, they have developed a habit of sharing different kinds of data with friends and other users. Could this mean that their privacy is less clearly framed than that of people who were not introduced to social platforms at such an early age?

2.3.3 Privacy in general from then to now. Recent perspectives on privacy take into account the shift from analog to partially digital behavior: the realization that data could be collected efficiently and stored on internet-connected computers changed the perspective on privacy tremendously. As mentioned in the previous section, understandings and interpretations of privacy can differ, mostly depending on culture and environmental factors (Altman, 1976).

In recent research, privacy plays an important role in social, political and economic systems in general. It is therefore of paramount importance to balance privacy settings with people's need for integrity, so that users are better informed about patterns of regulation, disclosure and surveillance and can consider the technology safe to use. To do so, the concept of informational information should be considered and applied for a better-balanced privacy. In this context, informational information refers to thoroughly presented information, where the rules and obligations that concern the user are conveyed with pedagogic methods and are easy to read and understand. To understand how these concepts can be designed and implemented, we need a deeper understanding of what informational privacy is: who shall have access to the data that is shared, and how this access shall be regulated. These questions will be discussed later in this research and will provide important conclusions and understandings when moving on to the prototyping stage. Petronio (2002) refers to the rule-based theory of Communication Privacy Management (CPM), which represents a map that assumes that private disclosures are dialectical: the self chooses what information to share with others, depending on what it perceives as important. This lets users regulate the boundaries of openness or closedness at various levels, depending on the occasion. It also leads us further into the ownership of and access to information and the concept of informational privacy, keeping users aware of what kind of data is collected and disseminated. If we have to rethink the concept of privacy because we cannot convey what the term means in digital contexts or turn integrity into a common understanding, there are certain patterns (such as invasive behaviors) that we need to take into consideration. One example could be to highlight and create models that suggest new ways of understanding what privacy in digital contexts is and how it is perceived and regulated depending on the context. Another important factor to take into consideration is that the perception of privacy differs between generations. In an article, Emily Schiola (2017) reports research showing that, regardless of age, users share the common wish that the web be a safe place, but that different generations see security and safety differently. Sherry Turkle argues in her book “Alone Together” (2011) that people of different generations tend to see the benefits of digital technology differently, and that this is reflected in different levels of use as well as addiction. Turkle also dispels the myth that the generation growing up with digital devices does not care about privacy. On the other hand, this generation believes that it is a social obligation to be constantly reachable through digital platforms, and is thus less concerned about the need for integrity. The following model suggests ways of thinking about privacy depending on age group.


Figure 3. Perception and need for privacy depending on generation, adapted from (Turkle, 2011 & Schiola, 2017).

The model shows that the older generation prefers full control over their communication and over what kind of information can be shared via digital channels: a kind of two-way communication where only invited parties have access to the information provided. The younger generation (the millennials), on the other hand, shows a greater need for communication, where several users can pass on information that was originally shared by its owner. This reflects a more positive attitude towards transparency and information sharing. Several factors have been taken into account, comparing social norms and openness depending on age group, as well as modern understandings of privacy regulation resulting from the development of new technology.

Each generation has been introduced to privacy and digital technology differently, and has thus had the opportunity to build its own framework and understanding of what the concept of privacy means to it. Privacy could therefore be described as a question of interpretation, in which each individual may take responsibility for his or her decision to share information in the information age (Fox-Brewster, 2014).

The first step (Figure 3) shows the differences between the attitudes of generations X and Y towards openness versus closedness, where the younger generation looks for digital platforms on which they can deliberately and transparently share their information with others (Ramasubbu, 2015). The second step shows their potential purpose of connectivity: the older generation might see digital devices as a tool for communicating digitally, while the younger generation may see the opportunity to communicate with new people and the benefits of an expanded circle of acquaintances (Walpole, Jacobs & Jorgensen, 2004). The third and final step shows how users of each generation may view the process of information sharing. The younger generation sees an endless exchange of information with all connected users regardless of device or medium, while the older generation prefers a two-way exchange of information between selected individuals, using the various media and devices differently (Alton, 2017). The model is based on conclusions from previous research and has therefore not been empirically tested on its own terms.

Nevertheless, the model demonstrates the importance of individual differences for perspectives on integrity issues, and for what integrity means depending on generation.

2.4 Privacy and new technology

Since its introduction, the internet has quickly changed the way people live, work and act in social situations, as multiple tools and platforms of interaction have been introduced one by one. It has also become a strong medium for social, political and economic transactions (Global Innovation Outlook, n.d.) and can from many perspectives be seen as indispensable.

This new era puts privacy issues in a new spotlight and raises the question of whether privacy is a human right or a social construction. Because of the many changes that new digital technology has brought about, privacy remains a complex construct that does not always include the individual user.

At the 2017 RSA Conference, Hughes (2017) argued that there is a wide solution gap between new technology and privacy, which makes the concept of privacy even more complex and raises the question of whether it is controllable at all. He claims that the rapid development of new technology and the impact of digitalization have had a tremendous effect on existing social norms, public policies, etc. We therefore need to develop new tools to respond to the changing conditions for privacy. Hughes also asks whether we are sometimes unaware of what the privacy issues are, or could be, when introducing new technological devices. Are we at all likely to understand what privacy will mean in the future, as technology moves beyond our ability to control individual ecosystems?

Mai (2016) takes a similar approach to technology and privacy, but suggests that there is no real need for new tools or notions that tie old norms or preferences to new technology; rather, we should think differently in general about what privacy conveys in a digital information society. Both Mai (2016) and Hughes (2017) therefore argue for a conceptual change, so that privacy rights and awareness of them fit new technology.

Regardless of how privacy changes conceptually in the future, we need to be aware that exploring and learning new ways of thinking will take time. This field may be considered as yet unexplored, and we will therefore continue to step on privacy landmines until we succeed in balancing the issue by finding a solution. Discovering new standards and developing new social norms, laws, etc. will be a question of development, not to mention the time needed to implement them in individual behavior and social norms. Furthermore, the gap this creates between innovation and the human ability to design solutions will make managing and implementing privacy more difficult (Hughes, 2017). What is important to keep in mind when debating privacy and new technology is that we pay for the accessibility and convenience of services and products with our personal information. The fact that we pay with personal information is, in turn, questionable, but if such services were completely free and required nothing in exchange from customers or consumers, neither these ecosystems nor the digital economy would be able to exist to the extent that they do today (Strandberg, 2016). Privacy and personal information could also be discussed as currency (Van Dijck, 2013), in order to understand how third parties value personal information and the importance of individual critical thinking when creating valuable data for them. But if we were to become completely open online, would personal data be as valuable as it is today, or would it rather be taken for granted in order to maintain a digital sharing economy? Leontine Young (cited in Westin, 1967) claims that without privacy there can be no individuality, only types. She argues that there can be no individual feelings and interpretations if there is no opportunity to be alone with one's thoughts and feelings. Such a development could threaten the value of collected personal information if no privacy remains around private information. What would it mean to third parties if such data could no longer be considered individual, and people became increasingly aware that privacy no longer exists? A new kind of privacy model, such as the one Mai (2016) advocates, could therefore be presented and provide insight into the importance of a clear barrier between open and private information. Furthermore, this model should also explain different levels of interaction and openness, so as to provide the ability to control one's private sphere. Giving users options, mechanisms and controllable devices with which to achieve the desired interaction with others will probably also have a better effect in the long run.

The model below is my own, inspired by what Mai (2016), among others, stresses about levels of interaction and the barriers between preferences regarding privacy and convenience. It shows how leakage of valuable and sensitive information can be prevented.


Figure 4. Restrictions and obstacles between private and public information.

The model explains how proprietary information, which may become public for various reasons, can be handled, as well as how voluntarily communicated but sensitive data (shared between known parties) can spread and thereby become openly accessible to all connected users. The restrictions in the model consist of a main barrier that applies to all information that can be considered private, but which may be bypassed if the user gives specific consent. Such an agreement must therefore be correctly mediated, detailed and very clear, so that the user can decide whether it might have good or bad outcomes. If such informed consent is given, the next restriction is the GDPR and its rules on what the information may be used for. If the data pass this filter, with the user's own guidelines and consent, while still complying with the GDPR, the information may be considered open and public. To clarify, this model is not based on any particular age group or generation, but takes a wider perspective on where the limits between private and public content should be drawn.
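The two-stage restriction model in Figure 4 can be read as a simple decision procedure. The Python sketch below illustrates that reading under stated assumptions; it is not a legal compliance check. The class `PersonalData`, the functions `passes_main_barrier`, `passes_gdpr_filter` and `classify`, and the label "restricted" for data that has consent but fails the GDPR stage are all hypothetical names introduced here. The GDPR stage is reduced to two checks drawn from the GDPR discussion in Section 3.1: a specific (non-vague) purpose and the absence of an erasure request. The regulation itself contains far more.

```python
from dataclasses import dataclass

# Hypothetical: purposes cited in Section 3.1 as too vague to count as "specific".
VAGUE_PURPOSES = {"improving users' experience", "marketing purposes", "future research"}


@dataclass
class PersonalData:
    owner: str
    content: str
    consent_given: bool = False      # informed consent from the user (main barrier)
    stated_purpose: str = ""         # purpose declared when the data is collected
    erasure_requested: bool = False  # the user has asked for the data to be erased


def passes_main_barrier(item: PersonalData) -> bool:
    """Stage 1: data is private by default; only informed consent lets it through."""
    return item.consent_given


def passes_gdpr_filter(item: PersonalData) -> bool:
    """Stage 2: a simplified stand-in for GDPR rules (an assumption, not the law)."""
    purpose = item.stated_purpose.strip().lower()
    has_specific_purpose = bool(purpose) and purpose not in VAGUE_PURPOSES
    return has_specific_purpose and not item.erasure_requested


def classify(item: PersonalData) -> str:
    """Return 'private', 'restricted' (consent given but GDPR stage failed) or 'public'."""
    if not passes_main_barrier(item):
        return "private"
    if not passes_gdpr_filter(item):
        return "restricted"
    return "public"


if __name__ == "__main__":
    print(classify(PersonalData("anna", "home address")))  # private
    print(classify(PersonalData("anna", "photo", consent_given=True,
                                stated_purpose="marketing purposes")))  # restricted
```

Ordering the checks this way follows the figure: nothing reaches the GDPR stage without the user's consent, so consent is necessary but not sufficient for information to be treated as public.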

Although privacy is considered a human need, integrity can take different forms when technology is used ubiquitously. Hughes (2017) argued at a conference that we need to become better at balancing our traditional understanding of privacy with fast-evolving technology. A common understanding of privacy, such as the one Mai (2016) suggests with the claim that we need a new set of conceptual tools to help us understand and manage our privacy, can therefore come in handy. Both theories draw attention to a need to change the traditional norms of privacy. Conveying this common understanding of the phenomenon is important as technology continues to develop rapidly.

2.4.1 Embodied privacy vs. digital privacy. While researching the subject of privacy in general, I came to understand the importance of distinguishing between integrity from an embodied privacy perspective and from a digital privacy perspective. Clarifying the division between these two variations of privacy felt relevant because users' concern about their need for integrity decreases as they move into digital contexts (Lohr, 2010). As the research moved from the 1960s to the present, the notion of privacy seemed to focus more on embodied privacy (such as intimate activities that otherwise happen behind closed doors) than on digital privacy. It became clear that, the further the research moved into the digital age, the more confusion appeared, as both concepts became important to discuss during a certain transition, especially in the theories from the 1970s and 1980s about what privacy and integrity include. The closer the research came to the digital age, the greater the focus on digital privacy: rather than intimate and other physical situations, the issues concerned personal information such as social security numbers, political opinions, addresses, income, pictures, etc. One possible reason why users have shown a tendency not to worry about cross-border activities (offline/online) and the effects of privacy intrusion might be that users consider their interaction behind computer screens or other devices a way of hiding, since their perception of reality does not necessarily include the reality of their digital actions.

3. Privacy in practice

Due to the complexity of the subject, I have chosen to conduct fairly broad background research on the existing privacy agreements applied in various contexts, all of which touch upon privacy issues in different ways. With these components, I have been able to link different areas of science and theory together and make the basis for my quantitative and qualitative study strong enough to build my own theories on. After analyzing each component and finding connections between them, I could start to make assumptions and draw conclusions as I put them together in different contexts. This resulted in different proposals for alternative conceptualizations of privacy and, finally, a prototype.

3.1 General Data Protection Regulation (GDPR)

The General Data Protection Regulation (GDPR) is a new privacy regulation from the European Union. This regulation will enable consumers and customers to take more control over their own data. A stricter system of user consent will be enforced, and the general requirements for how companies and third parties handle users' information will be tightened. Although the new regulation compels companies to work with new methods and policies for potential marketing campaigns etc., it will not affect the procedure of information collection in general: how, where and when information is found. It will, however, require consent from the user before personal data can be collected. This consent is currently not restricted to any particular procedure, but the collector does need to have a purpose and be able to declare from the start why the data was collected, as well as being transparent about what information is used and for what reason. During a presentation (Malmö, 2018-02-21), Kjellin (2018) stressed that a so-called cookie “... can be presented or designed as a cookie that the user needs to agree on, before he or she will be able to use the service or product”. Requiring users to agree in this way can become a problem with regard to the idea of the GDPR, which is why people have started to question the use of cookies that do not let users “opt out” if they change their minds (Irwin, 2017). You are also required to erase the information collected if the customer requests it, and you are not allowed (Tibbelin, 2017). More importantly, Banerjea (2017) emphasizes that if information or data is to be collected from any individual, for whatever reason, it cannot be done without explicit consent from the user. This requirement of explicit agreement also suggests that the restriction model presented earlier (Figure 4) would fit even from a GDPR perspective.

New technologies and companies' services will therefore be strongly affected by the GDPR, as any information gathering requires much more attention and accuracy from the company: when users question the use of their data, even after having given their approval, companies must be able to present the data and remove it from their systems (Hennig, 2018). This rule may put many businesses and industries in uncertainty, and perhaps even lead to excessive caution about what may be saved and collected about users without breaching the regulation. If any of the rules are breached, the company in question may be fined up to 4% of its global sales (Kjellin, 2018), which makes it even more important for companies to review their database procedures and reconsider their processes for, e.g., marketing. The GDPR also stresses that “A purpose that is vague or general, such as for instance 'improving users' experience,' 'marketing purposes' or 'future research' will - without further detail - usually not meet the criteria of being 'specific.” (Banerjea, 2017, p. A11). This definition clearly indicates the power that users actually hold, and suggests that an alternative conceptualization of privacy must go in the same direction as the GDPR: placing the user at the center regardless of context.
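The fine mentioned above can be made concrete with a short calculation. Under Article 83(5) of the GDPR, fines for the most serious infringements may reach EUR 20 million or 4% of the total worldwide annual turnover of the preceding financial year, whichever is higher, so the 4% figure is one of two alternative caps. The function below is a minimal sketch of that upper bound only; the name `max_gdpr_fine` is hypothetical, and actual fines are set case by case by supervisory authorities and are often far lower.

```python
def max_gdpr_fine(annual_turnover_eur: int) -> int:
    """Upper bound of a fine under GDPR Art. 83(5) (the most serious infringements).

    The cap is EUR 20 million or 4% of total worldwide annual turnover of the
    preceding financial year, whichever is higher. Integer arithmetic avoids
    floating-point rounding; the 4% share is rounded down to whole euros.
    """
    four_percent = annual_turnover_eur * 4 // 100
    return max(20_000_000, four_percent)


# Hypothetical companies, for illustration only.
print(max_gdpr_fine(100_000_000))    # prints 20000000: 4% is only 4 million, so the EUR 20 million cap is higher
print(max_gdpr_fine(2_000_000_000))  # prints 80000000: 4% (80 million) exceeds EUR 20 million
```

For smaller companies, the fixed amount is therefore the binding ceiling, while the percentage only becomes decisive for companies with a worldwide turnover above EUR 500 million.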

The most essential aspect of the new regulation is that users have full rights over their data, and thus the ability to determine when and where their information can be used and for what reason, as well as to decide when it should be removed from various systems. The implementation of the GDPR is intended to give individuals more freedom and choice regarding information about themselves. This also shows that the need for privacy remains, although the concept itself will probably still change. However, unlike Privacy by Design, where privacy protection is integrated during system development, the GDPR may function more as an after-the-fact tool for preventing privacy violations and for addressing concerns when users are prompted to disclose personal information.

Using the GDPR as part of the fundamental research for this study made the decision to keep a user-centered approach much clearer, since the user has the most essential role when it comes to defining privacy.

3.1.2 Privacy by design. Privacy by design is a concept of privacy not entirely different from the European GDPR, but it differs in terms of expression and application, since privacy by design is taken into account throughout the engineering process. Privacy by Design was first developed by Ann Cavoukian (2011), who presented the approach in a report on privacy-enhancing technologies. The concept is based on a so-called sensitive design, which takes human values into account during use. This approach has proved to be an essential tool for increasing trust when using different digital platforms and services. Using the idea of privacy by design can also increase the awareness of privacy among companies, organizations and private users. What is striking and unique about this concept is that potential problems or other privacy-intrusive issues can be identified at an early stage (Information Commissioner's Office, n.d.), which in turn increases awareness and, in the long term, builds trust between users, developers and companies. The Information Commissioner's Office (n.d.) also explains that taking a privacy by design approach will help businesses and organizations understand their obligations towards users under the legislation. The Privacy by Design concept can also be considered a very thoroughly developed restriction, since it builds integrity safeguards into the early development process rather than adding them as an extension once the system is fully developed, or documenting afterwards what is legal and what is not. Focusing on user integrity will, in my opinion, ultimately benefit all those who choose to apply this concept to the development of platforms, applications or technological devices.

Furthermore, because integrated privacy offers full visibility and transparency, so that all users affected by data collection within IT systems are aware of what information is collected, processed and spread, and for what reason, this method stands out from others from a security perspective (Bylund, 2013).

As the GDPR is introduced within the European Union to tighten the requirements for collecting personal information about users, one may ask why built-in integrity has not yet become a more popular arrangement for Swedish-developed IT systems. Privacy applied as an extension of security is considered less safe, since it does not prevent possible violations as effectively as a built-in restriction does (Bylund, 2013).

3.2 End-User License Agreements

An End-User License Agreement (EULA) is a contract between the licensor and the purchaser, in which the user's rights and obligations regarding the use of a particular software, service etc. are described and agreed on. These agreements are, however, more resilient in Sweden than in other countries such as the United States (Håkansson, 2014). They often state what the user approves, both regarding the user's own rights towards the developer and what information the developer is permitted to collect. The general problem with these agreements, even though they are drafted precisely so that the company is not held accountable if the user acts against any of their terms, is that, according to several surveys, only a very small percentage of users actually read them before using the product (Obar & Oeldorf-Hirsch, 2016). According to various articles and previous research, there are two main reasons why so many choose not to read these agreements. The larger share of users believes that reading them takes too much time, and as a user, you have to agree to these terms in order to use the product. Another major reason is that the text is perceived as too legally heavy for users to understand (Berreby, 2017). Another survey, by MeasuringU (Sauro, 2011), suggests that fewer than 8% read the agreements. TechDirt claims that only a small fraction of 1% read them, regardless of the matter (Masnick, 2005). The small fraction of users who read the terms of agreement before using a product, service or software indicates that these agreements should be reconsidered, depending on the medium and context, since they do not always seem to fulfil their purpose of informing users.

In an article, Jeff Sauro (2011) questions why users should spend time reading these agreements at all, since they have no real choice if they want to use the product or service in question. He argues that the choices given to the user, “Accept” and “Don't accept”, should not be considered real choices: if the user wants to use, e.g., the software, they need to accept.

In this regard, there has been much debate about how EULAs are actually presented. Most often, they take one of two forms, called "clickwrap license" or "shrinkwrap license", depending entirely on the kind of product (Ekblad, 2012). Shrinkwrap licenses are usually used for physical devices or software that you approve when opening the package in which it is delivered. Clickwrap licenses are agreements that must be read and approved with a button before using the service or product in question; such an agreement requires a screen on which to read it (these methods are explained in more detail in the next section).

3.3 Clickwrap and Shrinkwrap Licenses

Companies have different ways and methods of presenting agreements, depending on the type of medium concerned.

The clickwrap method is one of the most commonly used for software, platforms and other digital media, and is a further development of the shrinkwrap method. The difference, however, is that the clickwrap license places an electronic seal on the product that requires the user to click a button to accept the agreement, as opposed to shrinkwrap, which requires you to break the physical package to approve it. The problem with licenses such as these is that the conditions are given regardless of circumstance, without giving the user a chance to access or confirm the agreements before purchase. It can hence be questioned whether the way the agreements are constructed and presented properly meets the requirements of developers, companies and customers. It is therefore doubtful whether this method can be considered a valid agreement between developer and user if problems occur (Ekblad, 2012).

Since clickwrap is a further development of the now somewhat dated shrinkwrap license, the two share many qualities but also differ in many ways. Shrinkwrap licenses have a more analog approach, which also determined their design. Although shrinkwrap licenses may be preferred in certain situations, as the agreement can be considered more solemn, they are not always suitable in digital environments. Clickwrap licenses, on the other hand, are perceived as more legally sound with regard to how users accept the agreements (by interacting with the document and clicking “I agree” after having had the chance to read it), but they risk becoming obsolete as we move away from devices with screens (with, for example, technologies such as AI, IoT etc.). If EULAs remain in the same medium as technology develops further, so-called “modified clickwraps” may be an option to consider. Modified clickwraps are a hybrid of the two methods mentioned above, but focus on informing users about the consequences of consenting to the use of their data (Boykin, 2012). Although this cannot be considered a new method, it is undeniably a new concept of informing, one that acknowledges that intruding on a person's integrity is not optimal.
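To make the difference between a plain clickwrap and a modified clickwrap concrete, the Python sketch below models a hypothetical consent record in which a click on "I agree" only counts once a plain-language summary of the consequences has been shown. The class `ClickwrapConsent` and its fields are invented for illustration; they do not reproduce Boykin's (2012) description or any legal standard, and only encode the idea that informing about consequences comes before acceptance.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ClickwrapConsent:
    """Hypothetical record of a 'modified clickwrap' agreement."""
    terms_version: str
    consequences_summary: str          # plain-language consequences of consenting
    summary_shown: bool = False
    accepted_at: Optional[datetime] = None

    def show_summary(self) -> str:
        # The consequences are presented before the agreement button can take effect.
        self.summary_shown = True
        return self.consequences_summary

    def click_agree(self) -> bool:
        # A click only counts as consent if the summary has actually been shown.
        if not self.summary_shown:
            return False
        self.accepted_at = datetime.now(timezone.utc)
        return True

    @property
    def is_valid(self) -> bool:
        return self.accepted_at is not None


consent = ClickwrapConsent("1.0", "Your location history will be shared with advertisers.")
consent.click_agree()      # ignored: the user has not seen the consequences yet
consent.show_summary()
consent.click_agree()      # now recorded with a UTC timestamp
print(consent.is_valid)    # prints True
```

The design choice is simply to make acceptance conditional on the informing step, which is the part that distinguishes the modified clickwrap from a plain "I agree" button.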

3.4 Alternative concepts of privacy

Since the various studies and data collections mentioned above indicate that only a very small share of users actually read these agreements, even though the agreements thoroughly clarify what the user gives informed consent to, one could argue that this method has its shortcomings. Additionally, a study from 2016 found that almost all of the 543 university students taking part in a test did not read the agreements when signing up for a fake social networking site called “NameDrop”. The terms of service required them to give up their firstborn child, and if they did not yet have any children, they had until 2050 to do so (Berreby, 2017). These numbers and events raise the question of why users in general do not read the agreements more carefully, and what the consequences may be if this continues as we move further into digital and connected devices that require users to give away personal information.

For this reason, my choice of research topic was driven by curiosity about how much importance users of various digital products and services place on privacy and privacy regulations, depending on context. The goal of presenting such concepts would partly be to better fit the digital era, as well as

4. Method

To be able to design new alternatives based on the existing theories of privacy, gathering insights into the dilemmas between digital privacy and analog, embodied privacy was central to the study. Privacy is to a large extent an individual matter, and thus cannot be defined by a clear set of guidelines for what integrity is. For this reason, and because the chosen problem formulation implies a user-centered research perspective, this study took an Exploratory Sequential Approach, using mixed methods in two phases (Creswell, 2012). This approach allows each dataset to be collected and analyzed individually, while ultimately using both the qualitative and the quantitative data to recognize patterns and relationships. However, Creswell (2012) describes a mixed methods Exploratory Sequential Design as beginning with the collection of qualitative data in order to explore a specific phenomenon, and then proceeding with quantitative data to explain the context of, and relations to, the qualitative data. This order of data collection, and the way the data is used, does not fit this study’s research design, which is why I decided to use a reversed version of the Exploratory Sequential Design. In the reversed version, the quantitative study is conducted before the qualitative study, contrary to what Creswell (2012) advocates.

By using a Reversed Exploratory Sequential Design, each phase could be analyzed individually, thus providing better insights into the field of research. The purpose of starting with a quantitative study, an online survey, was to confirm whether the key findings and insights from the various sources were relevant. The online survey could thereby serve as a filtering step for finding additional key concepts to work with. Identifying and analyzing users’ general behaviors and feelings towards privacy could thus narrow down the research field and create a better foundation for the qualitative study. The qualitative study took the form of an adapted Cultural Probe. With a probe-inspired format, respondents would find it easier to articulate their positions on the abstract concepts, since they were given material and a foundation to start from when choosing among the alternative integrity concepts. The probe relied on a specific type of material (Gaver, Pacenti & Dunne, 1999), which was developed from the conclusions and analysis of the online survey. Brown et al. (2014) define a Cultural Probe as a method for inspiring ideas in a design process and a technique for collecting data and insights about people’s lives and values. They also stress what a Cultural Probe can offer in terms of gaining a solid understanding of users’ lives and their potential experiences of a certain product. As designed for this study, the Cultural Probe consisted of a small package including a form, a description of the four given alternative concepts, and three given scenarios based on the ‘Reilly Top Ten List’ (Baron, 2012). Instead of giving each respondent a set of artifacts (Brown et al., 2014), different kinds of printed material were distributed for them to read, after which a discussion of the respondent’s thoughts on the subject could begin. Because of these choices, and because the probe was not carried out by the respondents themselves (Brown et al., 2014) but rather through a discussion, this qualitative study is called an “adapted” Cultural Probe.

This choice of research approach can be justified by emphasizing that the intention of the qualitative study was not to develop the design of the privacy alternative, test an idea, or formally educate a group of people, as a design-based research approach would (Anderson & Shattuck, 2012), but rather to gain an understanding of the respondents’ values and experiences regarding integrity, and thereby obtain a source of inspiration for the design (Erva, 2011). The study uses both primary sources (such as the interviews in the adapted Cultural Probe) and secondary sources (non-academic resources), with advocacy research at its core (Given, 2008). The advocacy research approach argues for social responsibility and community empowerment, as the paradigm of this study also suggests. Since the four alternatives of privacy specifically address public safety concerns that also include digital integrity, this research approach also supports the choice of a Cultural Probe that includes informal information collection and individual interviews, which hold an objective view of the stated research questions (Given, 2008).

Furthermore, the chosen problem formulation and research question involve a process of developing new alternatives to privacy in a digital context. These alternatives depend on the answers and findings from the quantitative study, which form the basis for the alternative privacy concepts presented. To validate and analyze the answers, and thus provide a clear and concise process for measuring the results, I used Grounded Theory to analyze the collected data, both inductively and deductively. This choice was made in order to conceptualize the results and develop a theory from them, and from that theory build a prototype grounded in the respondents’ experiences and social patterns (Scott, 2009).

4.1 Research Design

The research design for this study is structured as follows: a literature review; a quantitative study (online survey); development of material for the Cultural Probe (alternative concepts of privacy and scenarios with different technical contexts); a qualitative study (Cultural Probe); analysis and reflection; and, in conclusion, a prototype. The chosen research procedure, a Mixed Methods Design used in sequential phases, thus provides a better understanding of the chosen research area (Creswell, 2012).
