Augmented Reality Navigation

Compared to 2D Based Navigation

Pontus Knutsson

Oskar Georgsson

Datavetenskap och Applikationsutveckling Bachelor’s degree

Malmö University 180 HP

2019-06-04

Supervisor: Alberto Enrique Alvarez Uribe Examiner: José Font

Abstract

For almost three decades, GPS coordinates and directions have been displayed in a top-down 2D view, first in dedicated navigation systems used commercially for driving and today in the GPS systems available in everyday smartphones. The most common way to display the coordinates today is still some sort of 2D view showing users where they are and which roads or streets to take. This has some problems that we believe can be solved by combining Augmented Reality with GPS. This paper sets out to answer whether and how navigation displayed in Augmented Reality can make it easier for pedestrians to navigate through a city compared to navigation displayed in 2D. To answer this question, the study presents a navigation application that combines GPS and Augmented Reality, which is then used in a user test. The results from the user tests and the questionnaire indicate that Augmented Reality based navigation is best used in scenarios where there are many streets and it is hard to tell from a 2D map which street to take.

Sammanfattning (Summary)

For almost three decades, GPS coordinates and route instructions have been displayed in a top-down 2D view, first in dedicated navigation systems used commercially for driving and today in the GPS systems available in our smartphones. The most common way to display GPS coordinates today is still some form of 2D view that shows users where they are and which roads or streets to take. This has some problems, however, that we believe can be solved with Augmented Reality combined with GPS. This thesis describes whether and how an Augmented Reality based navigation view can make it easier for pedestrians to navigate through a city compared to 2D based navigation. To answer the question, this study presents a navigation application that combines the two technologies GPS and Augmented Reality, which is then used in a user test. The results from the user tests and the questionnaire show that Augmented Reality based navigation is best used in scenarios where there are many streets and it is hard to tell from a 2D map which street to take.

Table of Contents

1. Introduction

1.1 Research framework

1.2 Research method

1.3 Purpose and Contributions

2. Method

2.1 Completion of guidelines

2.2 Course of action

2.3 Questionnaire

2.4 Selection of participants

2.5 Demarcation

2.6 Method discussion

3. Design

3.1 Technical

3.1.1 Bearing/degree and distance calculation

3.2 Application description

3.2.1 Location tracking with GPS

3.2.2 Augmented directions

3.2.3 Iteration one

3.2.4 Iteration two

3.3 User interface

4. User test

4.1 Setup

4.2 Participants

4.3 Objectives

4.4 Procedure

5. Result

5.1 Answers – Summary

6. Analysis

6.1 Evaluation of research questions

6.1.1 RQ1: How can augmented reality-based navigation help pedestrians navigate through a city compared to a navigation system displayed in 2D?

6.1.2 RQ2: What cons are there by using an augmented reality-based navigation compared to a traditional 2D one?

6.1.3 RQ3: What challenges does AR navigation bring for the developer?

6.1.4 RQ4: Can AR based navigation be understood by people no matter their technical background?

7. Discussion

7.1 Application and design

7.2 User test, questionnaire and result

8. Conclusion and future work

References


1. Introduction

Navigation is becoming increasingly important in everyday society. People move around a lot in cities, and every year the cities grow bigger, which in turn makes them more complex to navigate. Tourists also use navigation apps when they visit new places in order not to get lost. Today GPS is primarily used in smartphones, displayed as a 2D view, when a person walks from one place to another and does not know the best or fastest way to get there.

There are many different navigation systems, such as RFID, beacons, QR codes, systems using fingerprinting, and those using dead reckoning with different sensors in the phone [1][2][3]. Although many different systems exist, GPS is the one used in most cases because it is easier to use when navigating outdoors and it can be used all over the world. Even though it is the most widely used, it still has some problems. The accuracy tends to fail from time to time, and it is not always easy to take the information from the GPS displayed as a 2D view and apply it to the real world [4]. It also becomes harder to see which street to take when many of them are close together. This leads to the pedestrian focusing more on finding the right street than on the surroundings and the final destination.

A better way would be to actually see in the real world where you are supposed to go. One way to achieve this is to use Augmented Reality (AR). Together with the camera in your phone, it is possible to place and see artificial objects in the real world and interact with them. With this technology, navigation can take the next step in helping people find their way. By placing hints and markers in the world, it is possible to see more precisely where to go while at the same time being more alert to what is happening in your surroundings.

The questions that this paper will address are:

RQ1: How can Augmented Reality based navigation help pedestrians navigate through a city compared to a navigation system displayed in 2D?

RQ2: What cons are there by using an Augmented Reality based navigation compared to a traditional 2D one?

RQ3: What challenges does Augmented Reality navigation bring for the developer?

RQ4: Can Augmented Reality based navigation be understood by people no matter their technical background?

The focus in this paper is on pedestrians in cities as opposed to the countryside due to the fact that it is easier to follow the roads in the countryside compared to the many streets in a city. That makes navigation based on AR more helpful in an urban environment.


1.1 Research framework

Augmented Reality (AR) is a technology field that mainly involves displaying virtual 3D objects in the real world, and at the same time the user can interact with the virtual 3D object in real-time [5]. Research into how navigation can be evolved has been done many times before and since AR became popular and easier to implement, researchers have tried to combine them in many different ways to create the next big breakthrough in navigation systems [6][7].

One area is tourism, where AR has been used to help tourists find popular places and buildings when they visit a new country. Using AR together with GPS and other techniques, such as points of interest, Mata and Claramunt presented a new way of navigating [6]. They proposed a solution where a mobile app using AR was used to move away from the 2D plane and into the real world. The user was able to choose a point of interest and be guided there with additional information and services presented visually. Using the point of interest that the user chose, the app found similar buildings along the way and showed information about them over the real scenery captured by the camera. This differs from the solution tried in this paper, but the way they presented the information on the phone screen using AR is still relevant to the project. Their conclusion was that test subjects liked the idea and thought it worked well, but that further studies have to be done regarding energy consumption and image recognition in poor visibility conditions.

Similarly, Huang and Hsu investigated how AR could be used as a tourist guide [7]. Using a database with the coordinates of different buildings, the user could choose a destination and the application would guide the user with the help of GPS and built-in sensors such as the gyroscope and compass. An arrow in the corner showed the way and displayed how far away the destination was. When the user was 20 meters away, the application would ask the user to find a QR code. The QR code was somewhere on the building, and when the user scanned it, information was shown. They found that the potential of their solution was good and that the application worked well as a tourist guide. There were, however, things that could be improved. The GPS was unstable, which sometimes led to errors, and the use of QR codes to get information should be eliminated in favor of using only the position of the user. The GPS problem is relevant to our application and something that can affect the outcome.

Furthermore, Shahriar and Kun researched whether drivers could keep more focus on the road if they had a navigation system that showed an AR line in the middle of the road. That information was shown on a screen on the dashboard, known as a Camera-View personal navigation device (CVPND) [8]. They compared that system with two other navigation systems: a personal navigation device (PND), which was like the normal GPS systems of today, and an AR personal navigation device (AR-PND), where a line was shown in the middle of the road with AR and could be seen on the windshield like an overlay. The results showed that a CVPND allowed drivers to pay more attention to the road compared to a PND, but not as much as with the AR-PND. They also showed that the driver's performance did not change depending on the navigation system. After asking the drivers, they concluded that the drivers preferred the AR-PND.


The use of GPS and its accuracy was studied by Sathyamorthy et al. in 2015 [4]. They found that the use of GPS tends to drain the phone's battery quickly. Ways of fixing this have been proposed, and the solution they studied was power saving. This resulted in lowered accuracy, which made exact positioning harder to obtain and in turn affects how good an AR solution could be. Though they found a solution, it would require further work before it is ready to be implemented.

In 2018 Google announced their own AR navigation tool at Google I/O [9], their yearly event where they present new technology and innovations. The application was released to a handful of people at the beginning of 2019 so that they could test it and leave feedback to Google [10]. Their version is meant more as an addition to the normal Google Maps: it only shows the AR view when users point the camera straight forward in front of their face and reverts to the 2D view when they lower it again. Their application uses image recognition to get a more precise location of the user and then shows arrows pointing in the direction the user should go. More information about the application is not available right now, as it is still in early development and is subject to further changes.

1.2 Research method

To answer the research questions, an AR prototype was developed for an Android smartphone. The prototype targets pedestrians and uses AR together with GPS technology to guide the user to the selected destination. After the users had finished the route, they were asked to walk a similar route by getting directions from Google Maps. To gather data from the user study, the users were asked to answer a questionnaire about their experiences with the two different ways of navigating after they had completed their test run of the prototype.

1.3 Purpose and Contributions

As discussed earlier, it is sometimes hard to translate the 2D view of a traditional map to the real world. Combine this with the fact that people who use a navigation tool are most likely unfamiliar with the territory, and it is very easy to get lost even with a map. If it were instead possible to see the path in the real world, navigating would be a lot easier. Google's solution also feels more like a way of showing off what is possible with AR and navigation, without first researching what users really think of it and what they feel would be useful. This research tries to broaden the understanding of how AR and GPS can be used together to evolve the navigation field and of the pros and cons this can bring. In doing so, it sheds some light on what users think about AR in navigation and whether they think it helped them get from one place to another or made it harder than using Google Maps directions.


2. Method

Design science was chosen as the research method because answering the research questions required developing a prototype, which was then used to gather data for this paper. According to Van Aken, the main purpose of design science research is to develop new knowledge that can then be used to design other solutions [11], which was the purpose of this paper. Similarly, Hevner states that the purpose of design science is to achieve knowledge and understanding of a problem by designing and applying an artifact to that problem [12].

Design-Science research methodology (DSRM) is an iterative research methodology used in computer science and information systems to create new artifacts that help people, in this case by helping them navigate. The artifact is used to understand the domain of the problem and how to achieve the solution. There are seven guidelines to follow throughout the process, where each step is equally important to the final result. The seven guidelines are:

• Design as an artifact, a viable artifact must be produced in the form of a model, method or instantiation.

• Problem relevance, the solution developed during the Design-Science research must be useful to an important and relevant problem.

• Design evaluation, the quality and efficiency of the artifact must be demonstrated by well-executed methods such as questionnaires, interviews or other evaluation methods.

• Research contribution, the research done must provide clear contributions to the area the artifact was developed for.

• Research rigor, when constructing and evaluating the artifact, Design-Science research relies upon the application of rigorous methods.

• Design as a search process, using all available means to reach desired ends while satisfying laws when designing the solution. The means are the actions available and taken to construct a solution, ends are the goals and constraints on the solution and the laws are uncontrolled forces in the environment which changes depending on the domain. When designing an artifact, it is important to both know about the application domain e.g. requirements, and the solution domain e.g. techniques.

• Communication of research, research done must be presented both to technology-oriented and management-oriented audiences. The technology-oriented audience needs to understand the process to be able to utilize the artifact and to do further work on it, while the management-oriented audience needs to understand the process in case they want to construct or purchase the use of the artifact.


2.1 Completion of guidelines

1. Design as an artifact: By creating a viable prototype as our artifact and using it for testing.

2. Problem relevance: Using AR for navigation has been researched before [6][7][8], but no solution has been found to be optimal. By exploring new ways of presenting the way from point A to point B, our research and solution contribute to overcoming the problem.

3. Design evaluation: A qualitative approach was used to gather data together with the use of the artifact. A usability test was done, followed by an open-ended questionnaire [13] in which the users gave feedback on the artifact and the proposed solution [14].

4. Research contribution: Connected with guideline 2, the created artifact tries to show new ways of presenting the navigation to the user.

5. Research rigor: By working iteratively when constructing the artifact and using an open-ended questionnaire to evaluate it, the research was based on rigorous and well-established methods.

6. Design as a search process: By testing different solutions and methods throughout the iterations, all possible actions were used to come up with the best solution to the problem and to reach the desired ends.

7. Communication of research: Showing the results and discussing the solution provides the technology-oriented audience with the information needed to further develop the artifact and at the same time informs the management-oriented audience of the process.

2.2 Course of action

First, a prototype was developed iteratively in order to make it viable and rigorous. At the end of each iteration we tested the prototype, and the results from that test were analyzed and used to improve it. The tests were performed by us: we selected a short route to walk in the inner parts of Malmö and directly analyzed what went badly and what could be improved. Following the creation of the artifact, a questionnaire was designed for the users to answer after they had participated in the user test [13]. When the questionnaire was completed, we started to perform the user tests. Before each test started, the users were given a short introduction to the test and the area of concern. The user test was split into two parts: in the first part the user navigated with the AR prototype, and in the second part they used Google Maps. The two parts used different routes but with similar environments in the same neighborhood. On completion of the whole test, the users were asked to answer the questionnaire. When the tests were completed, the answers from the questionnaire were analyzed, compared and evaluated to see which improvements could be made to the artifact and what the users thought of the AR solution as a means of navigation.

2.3 Questionnaire

We opted for an open-ended approach to the questionnaire in order to move away from a quantitative approach towards a more qualitative one, where the questions were answered with thoughts, comments and feedback rather than yes/no answers or ratings [15]. The main data collected from the questionnaire was how the artifact was perceived and whether the solution was better than the available 2D ones such as Google Maps. The questions concerned how the users liked the implementation of the artifact, whether there could be any improvements, how using AR when navigating worked, and how they found the artifact better or worse compared to the traditional 2D view of Google Maps.

2.4 Selection of participants

The users who were selected to test the artifact were chosen based on their technical background. In order to get a broader opinion on the subject this seemed to be the optimal solution. It is interesting to understand how it differs between people with previous experience of AR and those with no experience. From the test it became clearer what different groups thought of the artifact and AR.

2.5 Demarcation

The artifact was developed using AR together with GPS rather than any other navigation technique due to the fact that GPS was:

1. very simple to use outdoors when navigating and provided the information necessary to display instructions in the AR-world.

2. simple to implement in Android as Google provides a good and well documented library.

Other techniques such as RFID, beacons and QR codes would be unsuitable if the solution were to be tested in another city or another part of the city, since they require more setup and provide less freedom compared to GPS [1][2][3]. The benefits of those techniques are better utilized indoors, where the area is more closed off and restricted in size, than outdoors, where the route can span a much larger area.

The artifact was compared against Google Maps, which also uses GPS, because it is available on all Android phones and it is one of the most popular navigation tools, which helped when the users compared it with the AR solution.

The tests were performed only in cities, rather than also in the countryside, because it can be more complex for pedestrians to navigate in cities. The tests included only pedestrians, not car drivers, because driving would require a completely different application. Also, looking at your phone while driving is illegal in Sweden, which would further require a different solution.

2.6 Method discussion

Another possible research method would have been a design and creation strategy. It was not used because it focuses more on providing a new IT artifact, which can be a product but also a new model or method for developing artifacts [13]. This paper, in contrast, focused on providing new knowledge about how a specific type of artifact can be used and its pros and cons, rather than on the development of that artifact.

Open-ended questions were used in the questionnaire because we needed the participants to express their opinions freely without being influenced by leading questions from the researchers [14]. This means that participants could answer the questions spontaneously, which could lead to interesting responses that closed-ended questions would not have been able to provide. The trade-off was that open-ended questions are harder and take more time to analyze, which resulted in a smaller test group. Another approach to get more detailed information would have been interviews [13]. The main drawback with interviews would be the time needed to prepare them and analyze the answers, and there was not enough time for that. The number of participants could have been reduced to make interviews viable, but then we would not have obtained the same broad point of view. In addition to an open-ended questionnaire, a think-aloud method [16] could have been used to capture not only the users' thoughts after they were done but also what they thought during the test. We did not use it because we feared it might distract the users from navigating if they also had to say everything they were thinking. We wanted the users' full attention on the artifact without any disturbance.


3. Design

The prototype developed for the project was created in order to make it easier for its users to navigate through cities. It does this by guiding them from their current position to their selected destination using AR. The directions are displayed using a line on the ground in the direction the user should walk, and arrows at the intersections to indicate where and in which direction the user should turn.

The prototype does not contribute any new technology but rather shows what can be done by combining the two technologies GPS and AR. Even though Google has already done something similar [9] [10], as discussed in the research framework (Section 1.1), there are key differences in the implementation. The artifact developed in this paper uses AR as its main way of guiding the user, as opposed to Google's application, which uses AR as an extra function that can help the user in some situations. Our artifact also relies solely on the GPS at the start of the application in order to get the user's start position. Google's application uses image recognition together with GPS to constantly update the current position and then changes the directions given in the AR view.

3.1 Technical

The artifact was developed as an Android application for mobile phones, with Java as the primary programming language and Android Studio as the IDE [17] [18] [19]. For the implementation of the AR layer, Google's ARCore for Java was used together with Sceneform [20] [21]. ARCore is a platform for building AR applications, and Sceneform is a library for creating 3D objects together with ARCore. For the location information and route planning, the application uses the Google Directions API, Google Places SDK and Google Maps SDK [22] [23] [24].

3.1.1 Bearing/degree and distance calculation

The artifact uses a number of geographical formulas to translate the GPS coordinates of the route into positions and distances in the AR view. To calculate the bearing of the different lines used to show the directions, the following formula is used:

$$\theta = \operatorname{atan2}\bigl(\sin(\mathit{longEnd} - \mathit{longStart}) \cdot \cos \mathit{latEnd},\; \cos \mathit{latStart} \cdot \sin \mathit{latEnd} - \sin \mathit{latStart} \cdot \cos \mathit{latEnd} \cdot \cos(\mathit{longEnd} - \mathit{longStart})\bigr)$$

In this formula, latStart and longStart are the latitude and longitude of the starting point of the line, and latEnd and longEnd are those of the end point of the line. Because the earth is round, the start and end points of a line do not have the same bearing, so this formula calculates the bearing at the start point of the current instruction. In the artifact the bearing is then used in combination with the following formula to determine the line's rotation:

$$\theta = \min\bigl((b_1 - b_2) < 0 \;?\; b_1 - b_2 + 360 : b_1 - b_2,\;\; (b_2 - b_1) < 0 \;?\; b_2 - b_1 + 360 : b_2 - b_1\bigr)$$

The variable b1 is the bearing of the line already drawn and b2 is the bearing of the next line to be drawn. This formula calculates the difference between the bearings of the two lines in order to get the degree difference. It is used in combination with the following formula:

$$\mathit{xProd} = (\mathit{latMid} - \mathit{latStart}) \cdot (\mathit{longEnd} - \mathit{longStart}) - (\mathit{longMid} - \mathit{longStart}) \cdot (\mathit{latEnd} - \mathit{latStart})$$

This formula uses three latitude and longitude positions (start, middle and end), which in the formula above are written as (latStart, longStart), (latMid, longMid) and (latEnd, longEnd). The formula provides a negative number for left turns and a positive number for right turns. The application uses the resulting number to determine whether the degree difference should be added to or subtracted from the current line's degree, depending on whether it is a left or right turn. To calculate how long a line should be, the distance between the instruction's start and end nodes is calculated using the following haversine formula:

$$\mathit{angle} = \sin^2\!\left(\frac{\mathit{latEnd} - \mathit{latStart}}{2}\right) + \cos \mathit{latStart} \cdot \cos \mathit{latEnd} \cdot \sin^2\!\left(\frac{\mathit{longEnd} - \mathit{longStart}}{2}\right)$$

$$\mathit{distance} = 6371 \times 10^{3} \cdot 2 \cdot \operatorname{atan2}\bigl(\sqrt{\mathit{angle}},\; \sqrt{1 - \mathit{angle}}\bigr)$$

The formula above first uses the latitude and longitude of the start (latStart, longStart) and end (latEnd, longEnd) nodes to calculate the intermediate value angle. It then uses that value together with a constant for the earth's radius in meters to calculate the shortest distance between the two GPS points. In the artifact this formula is used to decide the length of each line. An alternative would have been the Vincenty formula, which also calculates the distance between two latitude and longitude pairs. Even though the Vincenty formula is more precise, it comes at the cost of more processing power, which is the reason it was not used in the developed prototype.
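As an illustration of the bearing and distance calculations described in this section, the following is a minimal Java sketch using the standard initial-bearing and haversine forms. It is not the prototype's source code: the class name `GeoMath` and the method names are hypothetical, inputs are assumed to be in decimal degrees, and the bearing is normalised to the range 0 to 360 degrees as an added convenience.

```java
/** Illustrative helper; class and method names are hypothetical, not taken from the prototype. */
final class GeoMath {
    /** Earth radius in meters, matching the 6371e3 constant used in the text. */
    static final double EARTH_RADIUS_M = 6371e3;

    private GeoMath() {}

    /** Initial bearing from the start point to the end point, in degrees within [0, 360). */
    static double initialBearing(double latStart, double longStart,
                                 double latEnd, double longEnd) {
        double phi1 = Math.toRadians(latStart);
        double phi2 = Math.toRadians(latEnd);
        double dLambda = Math.toRadians(longEnd - longStart);
        double y = Math.sin(dLambda) * Math.cos(phi2);
        double x = Math.cos(phi1) * Math.sin(phi2)
                 - Math.sin(phi1) * Math.cos(phi2) * Math.cos(dLambda);
        // atan2 returns (-180, 180] after conversion; shift into [0, 360).
        return (Math.toDegrees(Math.atan2(y, x)) + 360.0) % 360.0;
    }

    /** Great-circle distance between two points in meters (haversine formula). */
    static double haversineDistance(double latStart, double longStart,
                                    double latEnd, double longEnd) {
        double phi1 = Math.toRadians(latStart);
        double phi2 = Math.toRadians(latEnd);
        double dPhi = Math.toRadians(latEnd - latStart);
        double dLambda = Math.toRadians(longEnd - longStart);
        double sinHalfPhi = Math.sin(dPhi / 2);
        double sinHalfLambda = Math.sin(dLambda / 2);
        // "angle" in the text: the haversine of the central angle.
        double angle = sinHalfPhi * sinHalfPhi
                     + Math.cos(phi1) * Math.cos(phi2) * sinHalfLambda * sinHalfLambda;
        return EARTH_RADIUS_M * 2 * Math.atan2(Math.sqrt(angle), Math.sqrt(1 - angle));
    }
}
```

For example, `GeoMath.initialBearing(0, 0, 0, 1)` points due east, and `GeoMath.haversineDistance(0, 0, 0, 1)` gives roughly 111 km, the length of one degree of longitude at the equator.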


3.2 Application description

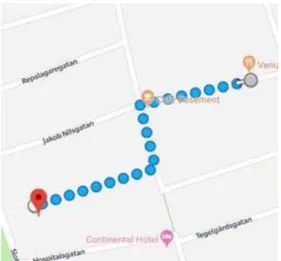

The process is divided into two steps, as shown in Figures 1, 3a and 3b. First, the user chooses a destination and requests the route, which is then drawn on the map (Figure 1). In the second step, shown in Figures 3a and 3b, the user is guided from their current location to the end destination in the AR world.

Figure 1: User chooses destination and the route is drawn on the map.

Figure 2: Asking the user to look in the right direction to help with calibration.

Figure 3a: First line drawn when the user enters the AR world.

Figure 3b: User is at the end of the line and the next one is drawn with an arrow showing the way.

3.2.1 Location tracking with GPS

As previously mentioned, the prototype guides users from their location to their destination. To achieve this, the prototype uses a combination of the Google Places SDK, Google Maps SDK and Google Directions API (Figure 1). The Google Maps SDK is used to show users their location on the map and, later, the route drawn from the start position to the end destination. When the user starts typing into the input box, the Google Places SDK shows alternatives matching the input, and the user can then choose where to go. When a destination is chosen, the longitude and latitude of the start and end positions are sent to the Google Directions API and the route is returned. Because the route is drawn as straight lines on the map, the route array contains a substantial number of coordinates (longitudes and latitudes), one for each point where the path curves.
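The request step described above could look roughly like the following sketch, which only builds the request URL. It assumes the Directions API web service JSON endpoint with its documented `origin`, `destination`, `mode` and `key` parameters; the text does not say whether the prototype calls the web service directly or through a client library, nor which travel mode it requests, so `mode=walking`, the class name `DirectionsRequest` and the method name are assumptions made for illustration.

```java
import java.util.Locale;

/** Hypothetical helper that builds a Directions API request URL for a walking route. */
final class DirectionsRequest {
    private static final String ENDPOINT =
            "https://maps.googleapis.com/maps/api/directions/json";

    private DirectionsRequest() {}

    /**
     * Builds the URL for a route between two coordinates given in decimal degrees.
     * The apiKey value is a placeholder supplied by the caller.
     */
    static String walkingUrl(double startLat, double startLng,
                             double endLat, double endLng, String apiKey) {
        // Locale.US keeps '.' as the decimal separator regardless of device locale.
        return String.format(Locale.US,
                "%s?origin=%.6f,%.6f&destination=%.6f,%.6f&mode=walking&key=%s",
                ENDPOINT, startLat, startLng, endLat, endLng, apiKey);
    }
}
```

Fixing the locale matters on devices configured for Swedish, where the default number format would otherwise use a decimal comma and corrupt the coordinate pairs.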

3.2.2 Augmented directions

When the user has entered their destination and the application has gathered the coordinates from the Google Directions API, which are used to calculate the length and bearing of each line drawn in AR, the user can choose to display the directions in AR. When users first enter the AR world, they are asked to point the phone in the correct direction (Figure 2). This is needed to calibrate the initial bearing, which is later used to calculate the bearing of the following line. Another approach would be to use the phone's built-in compass, but at their current quality the sensors are not precise enough for an application like this. The first line is then drawn in front of the user (Figure 3a). To draw a line, the latitude and longitude of its start and end nodes are used to calculate the bearing and the length of the line. For every line except the first one, the rotation is decided by rotating by the difference between the last line placed and the new one to be placed. With those calculations the lines can be placed correctly without depending on too many unreliable sensors. When the user is two meters away from the end of the current line, the next one is drawn (Figure 3b). To decide when the user is at the end, the application calculates the distance by comparing the user's AR position with the AR position of the end point.
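The rotation and proximity logic described above can be sketched as follows, reusing the bearing-difference and cross-product formulas from Section 3.1.1. This is an illustrative sketch, not the prototype's code: the class `LinePlacement` and its method names are hypothetical, it assumes rotation is measured clockwise like a compass bearing (so a right turn adds the difference), and it assumes the two-meter check uses the horizontal distance on the x/z plane of an AR world whose y axis points up.

```java
/** Hypothetical helper for rotating consecutive AR lines and deciding when to draw the next one. */
final class LinePlacement {
    /** Distance to the end of the current line at which the next line is drawn. */
    static final double NEXT_LINE_THRESHOLD_M = 2.0;

    private LinePlacement() {}

    /** Smallest difference between two bearings in degrees, in the range 0 to 180. */
    static double bearingDifference(double b1, double b2) {
        double d12 = (b1 - b2) < 0 ? b1 - b2 + 360 : b1 - b2;
        double d21 = (b2 - b1) < 0 ? b2 - b1 + 360 : b2 - b1;
        return Math.min(d12, d21);
    }

    /** Cross product of start->mid and start->end: negative for a left turn, positive for a right turn. */
    static double crossProduct(double latStart, double longStart,
                               double latMid, double longMid,
                               double latEnd, double longEnd) {
        return (latMid - latStart) * (longEnd - longStart)
             - (longMid - longStart) * (latEnd - latStart);
    }

    /** Rotation of the next line: subtract the difference for a left turn, add it for a right turn. */
    static double nextRotation(double currentRotation, double b1, double b2, double xProd) {
        double diff = bearingDifference(b1, b2);
        return xProd < 0 ? currentRotation - diff : currentRotation + diff;
    }

    /** True when the user is within the threshold of the line's end point; positions are {x, y, z} in meters. */
    static boolean shouldDrawNextLine(float[] userPos, float[] endPos) {
        double dx = endPos[0] - userPos[0];
        double dz = endPos[2] - userPos[2];
        // Height (index 1) is ignored, since the line is drawn below the phone.
        return Math.sqrt(dx * dx + dz * dz) <= NEXT_LINE_THRESHOLD_M;
    }
}
```

Taking the minimum of the two wrapped differences keeps the turn angle at or below 180 degrees, so a change from a bearing of 350 to a bearing of 10 is treated as a 20 degree turn rather than a 340 degree one.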

3.2.3 Iteration one

During the first iteration of the application, a library for ARCore called ARCoreLocation, developed by Appoly, was used [25]. This library can be used to draw a node in the AR world at a specific real-world longitude and latitude. We used this to draw a node at the start and end of each instruction in the directions and then draw a line between those two nodes. The issue we found was that, because the library has to use the phone's compass to determine true north, the start and end nodes were often placed at the wrong locations, which led to the displayed line pointing in the wrong direction.

3.2.4 Iteration two

During the second iteration we had to rework the AR directions part completely and decided not to rely on a compass for correct placement. Instead we used calculations of the bearing and length of each instruction in the directions. To determine the correct placement of the first line, the user is asked to point the phone in the correct direction, and the application then calculates the degrees of the other lines based on that first line's location. In this way the lines are placed at the correct locations as long as the user is pointing the phone in the correct direction at the start. Our first version of this approach did not give any visual hints as to where the first line would be drawn. After some testing we understood that this made it very difficult to place the line precisely. The user can now see the line in the AR view during calibration. This gives a visual indication and makes it easier to place the initial line at the correct location before locking it into place. This is not an optimal solution, but with the hardware currently available in phones it is the best one we found.
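One way to picture the compass-free placement is to lay the route out in a local frame whose forward axis is the calibrated direction of the first line. The sketch below assumes that, once the first line is locked, each later line's heading relative to it equals the difference between their bearings, and that line lengths come from the distance calculation in Section 3.1.1. The class `RouteLayout`, its method and the (right, forward) output convention are hypothetical; mapping these offsets onto actual AR world axes is left out.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: lays out route lines relative to the calibrated first line. */
final class RouteLayout {
    private RouteLayout() {}

    /**
     * Returns the end point of every line as {right, forward} offsets in meters,
     * measured from the start of the first line, which points straight ahead.
     *
     * @param bearingsDeg bearing of each line in degrees, clockwise from north
     * @param lengthsM    length of each line in meters
     */
    static List<double[]> layout(double[] bearingsDeg, double[] lengthsM) {
        if (bearingsDeg.length != lengthsM.length) {
            throw new IllegalArgumentException("bearings and lengths must have equal size");
        }
        List<double[]> ends = new ArrayList<>();
        double right = 0.0;
        double forward = 0.0;
        for (int i = 0; i < bearingsDeg.length; i++) {
            // Heading relative to the first line; no compass reading is involved.
            double relative = Math.toRadians(bearingsDeg[i] - bearingsDeg[0]);
            right += lengthsM[i] * Math.sin(relative);
            forward += lengthsM[i] * Math.cos(relative);
            ends.add(new double[] {right, forward});
        }
        return ends;
    }
}
```

Because every offset depends on the first line, an error in the initial calibration rotates the whole route by the same amount, which is why the text stresses pointing the phone correctly at the start.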

3.3 User interface

When designing the artifact, we opted for a clean and simple UI because it was important to not confuse users who had never before used AR. When the application starts a map is shown (Figure 1 but without the route drawn), zoomed in on the current position of the user with no possibility of switching to the AR view. This was done to prevent the user from switching to AR without having already chosen a destination. The only option available after choosing a destination is to start the AR view which leaves no room for confusion. Limiting the number of options available ensures that the user knows what to do next. When the AR view has started, a blue line is drawn in front of the user one meter below the position of the phone making it clear in what direction the user should walk. Blue was chosen because it is an easy color to see in most situations, and to make it similar to how the route is shown on Google Maps making it more obvious to the user what the line means. An arrow is also placed at the end of each line drawn, pointing in the right direction.


4. User test

User tests were done followed by an open-ended questionnaire to evaluate what the users thought about the artifact and the proposed solution to the problem. Getting feedback about the design and function of the artifact is important as it affects how the proposed solution with AR as navigation is viewed by the user. For that reason, feedback about the artifact is also collected alongside feedback about the solution.

Question: Name
Purpose: -

Question: Age
Purpose: -

Question: 1. What parts did you find better with AR compared to Google Maps?
Purpose: To get an understanding of the pros of our AR implementation.

Question: 2. What parts did you find were worse with AR compared to Google Maps?
Purpose: To get an understanding of the cons of our AR implementation.

Question: 3. How clear were the directions given in the AR world? Did you ever get lost?
Purpose: It is important that the directions given to the user were clear and easy to understand.

Question: 3a. What did you think about the arrow being at the end of each line?
Purpose: Did the user find it good or did they want it to show up earlier to help them plan in advance?

Question: 4. What did you think of the calibration step? Was it easy to understand?
Purpose: As this is the most important part in getting the right path in the AR world it is important that they understood what to do.

Question: 4a. What could have been done better to help the user understand how to calibrate?
Purpose: Input on what they thought could be improved.

Question: 5. What did you think about the visibility of the lines and arrows?
Purpose: If the lines or arrows were hard to see it could lead to the user missing a turn or having to look too much at the screen.

Question: 5a. How did the color of the lines and arrow affect the visibility?
Purpose: Did we choose good colors or are they blending in with the surroundings?

Question: 6. How was the flow of the artifact? Was it easy to understand every step?
Purpose: If the user didn’t know what to do at some point it will affect what the user thought of the artifact and the AR solution.

Question: 7. Did you find our implementation of AR navigation useful? Elaborate on your answer!
Purpose: Feedback on what they thought of the usability of AR navigation.

Question: 7a. What changes would you make to the application to improve it?
Purpose: Improvements the user thinks would be useful to the artifact.

Question: 8. What other useful information would you like to see in the AR world?
Purpose: Feedback about work that could be done in future iterations to improve the artifact.

Question: 9. Other thoughts that you would like to share?
Purpose: An open question where the user can write about things we have not asked.

Figure 4: Questionnaire that the users answered after the test.

4.1 Setup

An open-ended questionnaire was created using Google Forms (Figure 4). As can be seen, the user is encouraged to give elaborate answers regarding the artifact, how they felt the AR solution worked, what could be improved in future iterations and what problems they encountered during the test. Because the participants were in different cities, we selected three different AR routes, one in each of the participants' towns. We tried to keep the routes as similar as possible; the selected routes can be seen in Figure 5a-c. We selected the routes based on the number of turns they contained and on their being in an uncrowded area. The application was deployed to a OnePlus 6T, which was then handed to the user, so that every test used the same hardware and software, reducing the risk of differences in user experience.

Figure 5a: The route in Malmö. Figure 5b: The route in Hjortsberga.


4.2 Participants

As mentioned in the method section of the paper, the participants were chosen based on their technical background and split into two groups: one consisting of people who had used AR before and one consisting of people without any earlier experience of AR. The participants were people we know with different technical backgrounds. In total there were six people, three in each group. Three of the participants tested the route in Malmö (Figure 5a), two tested the route in Hjortsberga (Figure 5b) and one tested the route in Ystad (Figure 5c). We wanted to keep the number of participants low because an open-ended questionnaire requires more analysis per participant, and a larger group would fall outside the scope of this paper. The results are not sufficient to draw any generalization or strong conclusion, but they give an indication of what people think about AR as a means of navigation.

4.3 Objectives

The objective of the test was to get an understanding of what people thought of our AR solution: how it compares to Google Maps, the way we implemented the calibration, the way directions are given and general thoughts about AR as a way of navigating. We also wanted to see how the perspective differed between people with different technical backgrounds, in order to understand whether AR as a navigation option is feasible for everyone and, if not, how it has to be improved.

4.4 Procedure

The test started with a short introduction by us about the artifact and the research. Because it is vital to receive good and clear feedback, we asked the users to be observant and note anything they thought was worth mentioning. At the start of the test the user was given a phone with the application running and was asked to enter the first pre-selected destination for the AR portion of the test. When the user reached the destination of the AR test, they swapped to Google Maps.

The user was given the second pre-selected destination and asked to enter it into Google Maps and navigate to the destination. When the test was over a pop-up asked the user to


5. Result

In total six participants tested the artifact, and the results gave useful information about the current features and about further work that can be done with AR as a navigation system. The user test was performed at three different locations but with very similar routes and environments. The participants came from the two groups previously mentioned. They were given the same information at the start of the test and they all received help with the calibration part of the test. Beyond that, they performed the user test without any further instructions or help from us, in order not to affect their answers and their perception of AR as navigation.

The goal of the first two questions, besides name and age, was to get an understanding of the participants' view on AR versus the traditional 2D view of Google Maps. Questions 3-5 concerned the design choices of the instructions in the AR view and Question 6 was about the design of the artifact. The rest of the questions asked the participant about further development and what could have been done better. The results presented below are a summary of what the users answered to each question. For exact answers, see the appendix.

5.1 Answers – Summary

Table 1: A presentation of the general responses from the questionnaire

1. Which areas did you prefer with AR compared to Google Maps?

Users with non-technical background, general answers:
Pros: They liked that they could see through the camera where they should go and when it is time to turn. They found it very useful when there are a lot of streets and when it can be hard to determine via a traditional 2D map which street you should enter. They thought they had a better knowledge about their surroundings. One thought that it could be useful while driving as you could see the road at the same time.

Users with technical background, general answers:
Pros: The users thought that it was not as abstract and you get a better understanding of where you should go. They liked that they did not have to “translate” the 2D map into the real world. No confusion regarding in which direction you are looking.

In general users from both groups were positive to the idea of AR as a tool for navigation.

2. Which areas did you find worse with AR compared to Google Maps?

Users with non-technical background:
Cons: One user sometimes found the line hard to follow. They thought that in the AR version you don’t get an indication of how far away the next turn is and how far away the end destination is like they did with Google Maps. One user thought it would be good to also see which street they were on.

Users with technical background:
Cons: Compared to Google Maps the users thought that you were “forced” to look at the screen the whole time and that it was harder to plan ahead because you did not get the same overview as with Google Maps. One thought that AR in its current state would not be good for car traveling.

Here the thoughts of potential use differ a bit between the two groups. In the previous question a user in the non-technical group thought that AR navigation might be good while driving. Meanwhile in this question a user in the technical group thought that it might not be a good idea to use AR navigation while driving.


3. How correct were the directions? Did you get lost?

Users with non-technical background:
Pros: They thought the directions were very clear and easy to get to the destination. None of them got lost.
Cons: They found the lines to be a little bit wrong in some locations, but they still found them clear.

Users with technical background:
Pros: The users found the directions to be very clear and obvious. The line and arrow made it near impossible to get lost. They also found that the lines and arrows stood out from the real world making them easier to see.

Users from both groups found the directions to be understandable and easy to follow. Some users from the non-technical group found the line to be a little bit inaccurate.

3a. What did you think about the arrow at the end of the line?

Users with non-technical background:
Pros: One user liked that the arrow was at the end of the line.
Cons: Another user would have preferred the arrow to be placed in the middle of the line (or closer to the user) in order to get the next direction a little bit sooner, as they thought the next direction now came a bit too late.

Users with technical background:
Pros: Two of the three test users thought that the arrow placement was good and that it helped them see in which direction they should turn.
Cons: One user thought that the arrow should be replaced with the line just being drawn earlier or possibly the whole route should be drawn at the beginning.

In this question users from both groups think that the next instruction should be visible sooner.

4. What did you think about the calibration step? Was the implementation easy to understand?

Users with non-technical background:
The users were informed what to do after we understood that it was hard to understand.
Pros: The users found that the calibration step was clear after they got it explained.
Cons: They thought that there could be a better instruction of what to do in the application.

Users with technical background:
The users were informed what to do after we understood that it was hard to understand.
Pros: After an explanation of what to do the users thought it was easy to calibrate.
Cons: Some indication of where to point would be helpful, and one user did not really understand why the calibration step was needed.

Here the answers were nearly identical in both groups and they all thought that the calibration step needed further work.

4a. What could have been done better to help understanding the calibration step?

Users with non-technical background:
They would have liked a demonstration inside the application of how the line should be placed. One would have liked a note shown on the screen describing in words how the line should be placed, and would also have liked the user to be told not to walk until the line was calibrated.

Users with technical background:
They all agreed that an animation or picture that shows what to do would be useful and maybe better than just a text asking the user to point in the right direction.

Similarly to Question 4, all the users had the same or a similar idea of what a solution to the calibration step should be.


5. How was the visibility of the line and the arrow?

Users with non-technical background:
Pros: They all found it very visible and easy to see. They thought it was good that strong colors were used.

Users with technical background:
Pros: Two of the users found the visibility to be good and the lines and arrows to be easy to distinguish from the rest of the surroundings. None of the users found the sun to be a problem either.
Cons: One user thought that the arrow was a bit big and that it would have been better to just draw the next line earlier.

Mainly both groups found the visibility of the line to be good. One from the technical group thought that the arrow was a bit large and distracting.

5a. How did the color of the line and arrow affect the visibility of them?

Users with non-technical background:
Pros: They found that the colors helped very much with the lines' visibility.

Users with technical background:
Pros: The colors chosen were appreciated by the users and they thought that the colors popped out from the real world making them easier to see. One user also highlighted that it was good that we did not use the color red as it may have been too much.

6. How easy was it to understand the application? Were there some confusing parts?

Users with non-technical background:
Pros: They found the application relatively easy to understand.
Cons: One thought that the calibration was hard to understand before the explanation, and that it was unclear when the next line would appear or whether they were at the end of the route. Another user thought that the way to enter a destination was unclear.

Users with technical background:
Pros: They found that most parts of the application were easy to understand and self-explanatory.
Cons: There were two parts that they found harder to understand: the calibration step and where to click to enter a destination. All three users had problems regarding input of a destination.

Both groups found that the calibration step and where to click when they should enter a destination were confusing.

7. Did you think our implementation of AR as a navigation system was useful? Elaborate!

Users with non-technical background:
Pros: They all found it useful even though they thought some improvements to the application were needed. One said that they would use it if their previously mentioned improvements were implemented.

Users with technical background:
Pros: All three users found that the implementation of AR could be useful in some scenarios such as for shorter paths in the city where it is easier to see which street to enter compared to a 2D map where it sometimes is harder to decide. One user also noted that the implementation could be combined with AR glasses in the future.


7a. What changes could be made to improve our implementation?

Users with non-technical background:
They would all like to have a better instruction of the calibration step inside the application. They thought distances to the next instruction and to the destination should be added. One user thought that both versions of the map, 2D and 3D, should be visible in order to get an overview. One user would like an indication if the user walks the wrong way, either by voice or by some visual indication.

Users with technical background:
All users suggested a more obvious UI in some parts of the application. Most of them also suggested that more arrows should be drawn along the line to better prepare the user for where the next turn will point. Showing how far it is to the next turn and to the final destination was also suggested by some of the users.

Users from the non-technical and the technical group thought that more arrows would be a good feature to add as well as some sort of indication of how far it is until the next turn and final destination.

8. What other information would you have liked or found useful to see in the AR world?

Users with non-technical background:
They would have liked to see stores and restaurants they walked by and which street they were on.

Users with technical background:
They would like to see information about restaurants or other useful buildings and distance to the next turn and to the final destination.


6. Analysis

Based on the data presented in the results chapter, this section analyzes what the results indicate. The results are used to evaluate our research questions in the hope of providing an answer to each of them.

6.1 Evaluation of research questions

Because the study only involved six participants, the results cannot support any final conclusions, but they can give indications of what users think about AR and how it can help them in cities compared to the 2D view of Google Maps.

6.1.1 RQ1: How can augmented reality-based navigation help pedestrians navigate through a city compared to a navigation system displayed in 2D?

Question 1 in the questionnaire gave good insight: the users stated that AR helped them see when they should turn, especially when there are a lot of streets. They also pointed out that it was easier to follow the route and that they did not have to translate the 2D view into the real world. Furthermore, the answers to Question 3 show that the users found it easy not to get lost and that the directions were very obvious. The answers to Question 5 state that the visibility of the lines and arrows was good, which indicates that objects in the AR world can be made easy to see. Taken together, this indicates that AR can be helpful in the following ways:

• By making it easier to follow the route, especially when there are many streets.
• By being clearer and more intuitive as there is no need to translate from a 2D view.
• By lowering the risk of getting lost through obvious and clear directions.
• By providing visible lines and arrows that directly guide the users.

6.1.2 RQ2: What cons are there by using an augmented reality-based navigation compared to a traditional 2D one?

In order to answer this research question, we analyzed the negative responses from the questionnaire. In the results section these responses are the ones marked as “cons”. The answers to Question 2 in the questionnaire indicate that users found it hard to plan the route ahead because there was no overview of the complete route in the AR view. Because of this they also found that they had to pay more attention to the screen in the AR view than in the 2D view. The answers to Questions 4 and 6 show that another con of an AR view is that a manual calibration is often needed in order to keep the directions precise. Users from both groups thought that this was a complex step compared to what is needed in a 2D view.

The results for this research question suggest that advances in smartphone sensor hardware are needed to make AR a complete option for navigation. Such advances would make it possible to build fast and easy-to-use AR navigation applications that do not rely on manual calibration. The results also suggest that a better way to implement AR navigation might be to combine it with a 2D view of the full route inside the AR view.


6.1.3 RQ3: What challenges does AR navigation bring for the developer?

To answer this question, the results from the questionnaire are not used; the answer is instead based on what we experienced during the development of the project. The biggest problem we had was the sensors: to provide precise directions it is important to know the direction the smartphone is facing relative to true north. However, the sensors in today's phones are not precise enough to provide an exact pose, which means that the compass cannot be used when calculating where each coordinate should be placed. We overcame this issue by adding a calibration step, explained in the design chapter, and by performing calculations based on the calibration. These calculations were a further problem: as there is today no easy way to convert GPS positions, we had to use a combination of multiple formulas to convert them into positions in the AR view. Another problem arose when we tried to rotate the lines in the right direction. ARCore uses quaternions to calculate the rotation of an object, which sometimes leads to the object rotating around more than one axis. This gives the lines in the AR view a downward slope, which makes each line appear smaller than the previous one. Our fix was to increase the scale with each line, making the visible change minor. However, this is not a satisfactory solution to the problem.
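To illustrate the rotation issue, the sketch below builds a quaternion that rotates only about the vertical axis and applies it to a forward vector. It is not the code of the artifact and does not use ARCore or Sceneform types; it assumes a right-handed frame with +Y up and −Z forward, and the class and method names are hypothetical. Whether constraining the rotation in this way would remove the slope we observed has not been tested.

```java
/** Hypothetical sketch of a yaw-only rotation; not tied to any AR library. */
final class YawRotation {
    /** Unit quaternion {x, y, z, w} for a rotation of the given angle about the +Y (up) axis. */
    static double[] yawQuaternion(double degrees) {
        double half = Math.toRadians(degrees) / 2.0;
        return new double[] {0.0, Math.sin(half), 0.0, Math.cos(half)};
    }

    /** Cross product of two 3D vectors. */
    static double[] cross(double[] a, double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    /** Rotates v by the unit quaternion q using v' = v + w*t + (q_xyz x t), with t = 2*(q_xyz x v). */
    static double[] rotate(double[] q, double[] v) {
        double[] axisPart = {q[0], q[1], q[2]};
        double[] t = cross(axisPart, v);
        for (int i = 0; i < 3; i++) {
            t[i] *= 2.0;
        }
        double[] u = cross(axisPart, t);
        return new double[] {
            v[0] + q[3] * t[0] + u[0],
            v[1] + q[3] * t[1] + u[1],
            v[2] + q[3] * t[2] + u[2]
        };
    }

    public static void main(String[] args) {
        double[] forward = {0.0, 0.0, -1.0};
        // Seen from above, a clockwise (right) turn is a negative angle about +Y
        // in a right-handed frame.
        double[] turned = rotate(yawQuaternion(-90.0), forward);
        System.out.printf("x=%.3f y=%.3f z=%.3f%n", turned[0], turned[1], turned[2]);
    }
}
```

Since the quaternion has no X or Z component, the vertical component of a horizontal direction stays zero after rotation, which gives a simple check for unintended pitch or roll.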

6.1.4 RQ4: Can AR based navigation be understood by people no matter their technical background?

The answers to Questions 1 and 3 in the questionnaire indicate that people with both technical and non-technical backgrounds liked AR based navigation and found it sometimes easier to understand than the traditional 2D based navigation view. They stated that there was no confusion regarding which way to go, which is sometimes the case with 2D maps.


7. Discussion

7.1 Application and design

The application developed for this paper is not a perfect AR navigation application; there are flaws and design choices that can be improved, as can be seen in the answers from the questionnaire. However, it made it possible to perform user tests and helped the users understand the possibilities of AR as a means of navigation, which was one of the goals of the application. Another goal was to give us as developers an insight into the difficulties of developing a navigation application with AR, which was achieved as a matter of course during the development of the application.

The questions in the questionnaire regarding the design choices in the application often had negative responses from both groups. For example, in Question 6 participants from both groups found the way to enter a destination to be unclear. The main reason the users gave was that the input field looks more like a text field than a place to enter a destination. This was probably not caught earlier because nobody but us as developers tested the application before the user test, and we did not notice the flaws of that design ourselves. The questionnaire also shows in Question 4 that the users understood the way we implemented the calibration step after we explained it. This indicates that even when a complex step is needed in an application, users will not have an issue with it as long as it is explained properly. This is further supported by the answers to Question 4a, where both groups would have liked a better explanation. In the future it would be optimal to remove the calibration step completely or at least make it depend less on the user. As discussed earlier, if the sensors in the phone were better the calibration step would not be needed. Exactly how Google’s solution handles this is unknown; the only thing that has been stated and shown is that they use image recognition to help calculate the user’s position. Although there are some negative responses about the design choices, the answers to Questions 3 and 5 show that the directions in the AR view are clear.

Regarding other improvements and wishes from the users, information such as nearby restaurants and stores, as well as distance indications to the next turn and the end point, would be good to have. Showing nearby points of interest has already been done, as discussed in the related work, where Mata and Claramunt showed nearby buildings which were of interest [6]. A way of showing that the user is moving in the wrong direction was not implemented in the application. As the results showed, the users did not find it hard to follow the directions and no one reported getting lost, but if further developments and improvements were to be made, it would be something to try to add in order to further minimize the risk of walking in the wrong direction.

7.2 User test, questionnaire and result

The answers from the users who participated in the user test provided interesting results, and it was interesting to compare what people with a technical background thought with what people with no technical background in AR thought. Even though both groups identified good and promising features of AR as a navigation view, there are some problems, such as the precision of the lines, the UI and the amount of information in the AR world, that need to be further studied and worked on. For the most part both groups' answers were similar, and they highlighted the same strengths and weaknesses. One interesting part to discuss is the difference in opinion about how AR, and our implementation of it, would work in cars. In Question 1 in the questionnaire one user in the non-technical group thought the solution would work well in cars because you would be able to see the road and the directions at the same time. Meanwhile in Question 2 a user in the technical group stated that it would not be good for car traveling. This may point to users with a technical background having a deeper understanding of how easy it is to get distracted by items on a screen, which would affect the driving. This was also discussed in related work, where it was found that having a screen showing the AR view was more distracting [8]. Users with less technical experience may focus on the features but forget how they would work in practice. Furthermore, the results show that both groups found it negative that you could not see the whole route and plan ahead, and that they wanted arrows showing the next direction a bit earlier. This was not something that we had reflected on before. Drawing the whole route would, however, be hard to implement: if the whole route were drawn at the beginning, the lines would go through buildings, which would probably confuse the user. It could also clutter the screen, leading to further confusion. What could have been implemented in the AR solution is to have arrows show up not only at the end but also in the middle of each line. This would help the user and make it a little easier to plan ahead and maybe take some shortcuts.
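The idea of placing arrows both in the middle and at the end of a line can be sketched as a simple interpolation along each segment. This is an illustration under assumptions, not part of the artifact: positions are treated as {x, z} pairs in meters in a flat local frame, and the class and method names are hypothetical.

```java
/** Hypothetical sketch: evenly spaced arrow positions along one route segment. */
final class ArrowPlacement {
    /**
     * Returns `count` points {x, z} evenly spaced from start towards end.
     * The last point coincides with the end of the segment; with count = 2 the
     * first point is the midpoint.
     */
    static double[][] arrowPositions(double[] start, double[] end, int count) {
        if (count < 1) {
            throw new IllegalArgumentException("count must be at least 1");
        }
        double[][] positions = new double[count][2];
        for (int i = 1; i <= count; i++) {
            double fraction = (double) i / count;
            positions[i - 1][0] = start[0] + fraction * (end[0] - start[0]);
            positions[i - 1][1] = start[1] + fraction * (end[1] - start[1]);
        }
        return positions;
    }

    public static void main(String[] args) {
        // A 10 m segment straight ahead (towards -Z) with two arrows.
        double[][] arrows = arrowPositions(new double[] {0.0, 0.0}, new double[] {0.0, -10.0}, 2);
        for (double[] arrow : arrows) {
            System.out.printf("arrow at x=%.1f z=%.1f%n", arrow[0], arrow[1]);
        }
    }
}
```

The number of arrows per line is left as a parameter because the preferred frequency is an open question that would need further user testing.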


8. Conclusion and future work

An artifact has been made to study how AR can be used by a pedestrian to navigate in a city and how it can be a contender to 2D view navigation. With this paper we have shown that it is possible to develop a functional AR based navigation. Furthermore, the paper provides an indication that AR based navigation can be complicated to achieve with today’s tools and that some form of compromise is required of users.

RQ1: How can augmented reality based navigation help pedestrians navigate through a city compared to a navigation system displayed in 2D?

We found indications that AR makes it easier for pedestrians to follow a route, since they do not have to translate a 2D map into the real world, especially in areas with complex streets.

RQ2: What cons are there by using an augmented reality based navigation compared to a traditional 2D one?

The main drawback users reported with AR navigation was that they had to keep their eyes on the phone at all times because they were not able to see the full route ahead.

RQ3: What challenges does AR navigation bring for the developer?

The largest challenge for developers found in this paper is having to work around inaccurate readings from the sensors in the phone. We found that the sensors in the phones of today cannot be trusted to provide an exact reading of true north.

RQ4: Can AR based navigation be understood by people no matter their technical background?

We found that both the technical and the non-technical group could understand our version of an AR based navigation.

In summary, we have found indications that AR as a navigation view can be helpful for pedestrians in cities where there are many streets and it can be difficult to see on a traditional 2D map which one to take. We have also found indications that AR is viable for both technical and non-technical users as long as there are clear and understandable instructions that help the user in the beginning. Though some problems were found, all the users expressed positive thoughts about AR as an alternative and felt that it has its place in navigation.

For future work, a larger study with more participants is needed to reach stronger conclusions about how AR can be a helpful way for pedestrians to navigate in cities. More tests regarding arrow placement and how frequently arrows appear on each line should also be conducted to find the optimal user experience when navigating. In addition, as mentioned above, some users wanted to see more of the path drawn. We argued that it would cause too much clutter on the screen, but this can be tested in the future to see whether more users agree and how it would look on the screen. Another user mentioned that our solution could be used together with AR glasses, which would be very interesting to test. Lastly, design choices for instructions and directions, as well as for the application as a whole, could be studied in order to create a better user experience.


References

[1] Zu-Hao Lai, Chian C. Ho, “Real-time indoor positioning system based on RFID Heron-bilateration location estimation and IMU angular-driven navigation reckoning”, IEEE 7th International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM), 2015, pp. 276-281.

[2] Ding-Yu Liu, Cheng-Yu Hsieh, “Study of Indoor exhibitions using BEACON’s Mobile Navigation”, IEEE International Conference on Advanced Manufacturing (ICAM), 2018, pp. 335-337.

[3] Ying Zhuang, Yuhao Kang, Lina Huang, Zhixiang Fang, “A Geocoding Framework for Indoor Navigation based on the QR Code”, Ubiquitous Positioning, Indoor Navigation and Location-Based Services (UPINLBS), 2018, pp. 1-4.

[4] Dinesh Sathyamorthy, Shalini Shafii, Zainal Fitry M Amin, Asmariah Jusoh, Siti Zainun Ali, “Evaluating the trade-off between Global Positioning System (GPS) accuracy and power saving from reduction of number of GPS receiver channels”, International Conference on Space Science and Communication (IconSpace), 2015, pp. 221-224.

[5] R. T. Azuma, “A Survey of Augmented Reality”, PRESENCE: Virtual and Augmented Reality Volume 6 Issue 4, 1997, pp. 355-385.

[6] Felix Mata, Christophe Claramunt, “Augmented Navigation in Outdoor Environments”, SIGSPATIAL'13 Proceedings of the 21st ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, 2013, pp. 524-527.

[7] Da-Yo Huang, Kuo-Hsun Hsu, “An application towards the combination of augmented reality and mobile guidance”, International Conference on High Performance Computing & Simulation (HPCS), 2013, pp. 627-630.

[8] S. T. Shahriar, A. L. Kun, “Camera-View Augmented Reality: Overlaying Navigation Instructions on a Real-Time View of the Road”, AutomotiveUI '18 Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, 2018, pp. 146-154.

[9] “Google I/O 2018”, [Online]. Available: https://events.google.com/io2018/recap/ [Accessed April 16th 2019].

[10] David Pierce, The Wall Street Journal, “It’s the Real World—With Google Maps Layered on Top”, 2019, [Online]. Available: https://www.wsj.com/articles/its-the-real-worldwith-google-maps-layered-on-top-11549807200 [Accessed April 16th 2019].

[11] J. E. Van Aken, “Management Research as a Design Science: Articulating the Research Products of Mode 2 Knowledge Production”, British Journal of Management, Vol. 16, 2005, pp. 19–36.

[12] A. R. Hevner, S. T. March, J. Park & S. Ram, “Design Science in Information Systems Research”, MIS Quarterly Vol. 28 No. 1, 2004, pp. 75-106.

[13] B. J. Oates, Researching Information Systems and Computing, London: SAGE Publications, 2006.


[14] H. J. Adèr & G. J. Mellenbergh, Advising on Research Methods: A consultant's companion, Netherlands: Johannes van Kessel Publishing, 2008.

[15] W. Foddy, Constructing Questions for Interviews and Questionnaires: Theory and Practice in Social Research, Cambridge: Cambridge University Press, 1993.

[16] M. E. Fonteyn, B. Kuipers, S. J. Grobe, “A Description of Think Aloud Method and Protocol Analysis”, Qualitative Health Research, vol. 3, no. 4, Nov. 1993, pp. 430–441.

[17] “Android”, [Online]. Available: https://www.android.com/ [Accessed March 5th 2019].

[18] “Java”, [Online]. Available: https://www.oracle.com/java/ [Accessed March 5th 2019].

[19] “Android Studio”, [Online]. Available: https://developer.android.com/studio [Accessed March 5th 2019].

[20] “ARCore”, [Online]. Available: https://developers.google.com/ar/discover/ [Accessed March 5th 2019].

[21] “Sceneform”, [Online]. Available: https://developers.google.com/ar/develop/java/sceneform/ [Accessed March 5th 2019].

[22] “Google Directions”, [Online]. Available: https://cloud.google.com/maps-platform/routes/ [Accessed March 5th 2019].

[23] “Google Places”, [Online]. Available: https://cloud.google.com/maps-platform/places/ [Accessed March 5th 2019].

[24] “Google Maps”, [Online]. Available: https://cloud.google.com/maps-platform/maps/ [Accessed March 5th 2019].

[25] “ARCore Location”, [Online]. Available: https://github.com/appoly/ARCore-Location
