K3 School of Arts and Communication

Interaction Design

master thesis

"Hold That Thought"

– sign language and the design of gesture interfaces –

Student:

Job van der Zwan

Studies:

Interaction Design

Semester:

2

Student ID:

831217T310

Birth date:

17 December 1983

Address:

Segevångsgatan 3A

Phone-No.:

+46762073048

E-Mail:

j.l.vanderzwan@gmail.com

Malmö, 6 February 2014

Table of Contents

1 INTRODUCTION

2 RELATED LITERATURE AND PREVIOUS WORK

2.1 SIGN LANGUAGE LITERATURE

2.2 GESTURE-BASED INTERFACE DESIGN PAPERS

2.2.1 The Kinematic Chain as a model for asymmetric bi-manual actions

2.3 TEXT- AND GRAPHICAL POINTER-BASED INTERFACES IN THE CONTEXT OF LANGUAGE AND METAPHORS

2.3.1 Language in contemporary computer interfaces

2.3.2 The importance of Metaphors

2.3.3 The metaphors underlying the design of the Xerox Alto

2.3.4 Language in contemporary computer interfaces, part II

2.3.5 In conclusion, and relating this into sign language

3 “HOLD THAT THOUGHT” – DESIGN PROTOTYPE AND USER FEEDBACK

3.1 DESIGN CONCEPT

3.1.1 Starting Metaphor

3.2 IMPLEMENTATION, SET-UP AND USER FEEDBACK

3.2.1 Implementation

3.2.2 User Feedback – Session Set-Up

3.2.3 User Feedback – Results

4 CONCLUSIONS

List of Figures

Fig. 1: Seven Morpheme Categories of ASL Verbs of Motion

Fig. 2: An artistic interpretation of a verb of motion expressed in a vocal language (top) and a sign language (bottom)

Fig. 3: Two hands, one gesture

Fig. 4: Abstract conceptualisation of the left hand (red circle), the right hand (green square) and the emergent complexity of their bimanual cooperation (blue triangle)

Fig. 5: Left: the result of right-handed handwriting. Right: the actual positions of the right hand during the writing process

Fig. 6: Type to search

Fig. 7: Extreme skeuomorphism: the interface of the Redstair GEARcompressor AU-Plugin (OS X)

Fig. 8: An example of flat interface design: elements from the Flat UI Kit by DesignModo

Fig. 9: Something in between: icons from the Xerox Star interface

Fig. 10: The ACME text editor

Fig. 11: Code for a flying bat in Scratch

Fig. 12: Patch written in Pure Data

Fig. 13: “Drawing Dynamic Visualisations” by Bret Victor

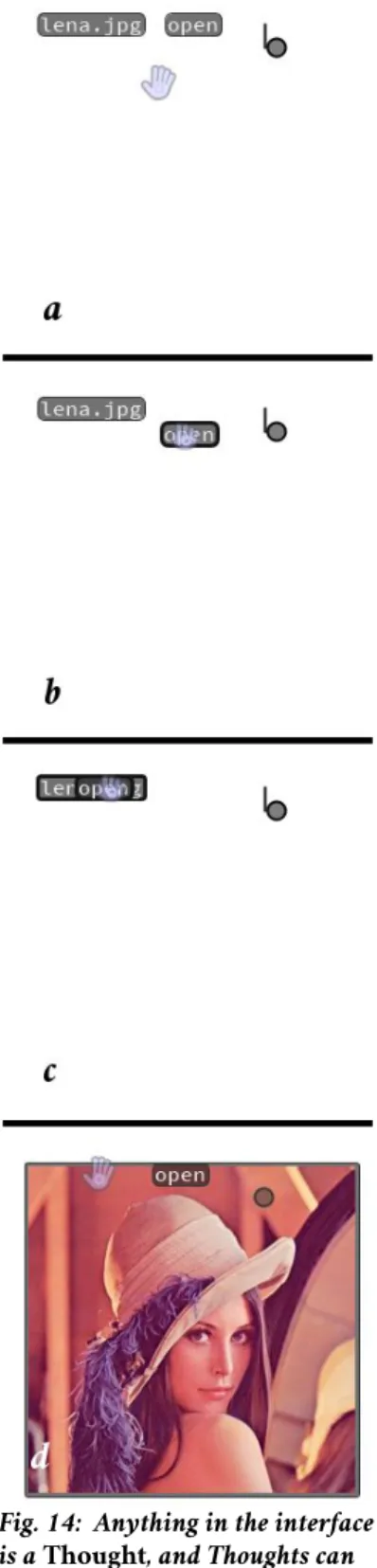

Fig. 14: Anything in the interface is a Thought, and Thoughts can be grasped, held and applied to each other

Fig. 15: Applying multiple Thoughts

Fig. 16: Typing and executing multiple thoughts in parallel

Fig. 17: Applying the trash button – the del command saved as an icon

Fig. 18: Two screenshots of a recorded discussion about multi-tasking and using two hands (due to a bug the hand icons were not saved in the image)

Fig. 19: Two screenshots of a recorded discussion about the role of buttons and pointers during a user test session (due to a bug the hand icons were not saved in the image)

List of Tables

Tab. 1: Three Mental Stages

Tab. 2: Pointer input

Tab. 3: Keyboard input, special keys

Abbreviations

ASL American Sign Language

CLI Command Line Interface

GUI Graphical User Interface

HCI Human-Computer Interaction

IxD Interaction Design

NoUI “No User Interface” – in practice usually meaning no tangible interface

NUI Natural User Interface

OOP Object Oriented Programming

OS Operating system

TUI Text-based User Interface

WIMP Windows, Icons, Menus and Pointers

Terms

Shell Software that provides an interface between users and the OS of a computer

Pointers Input based on a pointing device, such as the mouse. Not to be confused with the programming term.

Morpheme The smallest grammatical unit in a language

File Manager A program providing an interface for working with file systems

Skeuomorph A design element visually emulating objects from the physical world

1 Introduction

Within Human-Computer Interaction (HCI), gesture interfaces have been studied for over forty years. Similarly, it has been over fifty years since William Stokoe published “Sign Language Structure: An Outline of the Visual Communication Systems of the American Deaf.” In it, he showed the academic world that sign languages are true languages, albeit in some ways fundamentally different from vocal¹ languages. The work not only sparked a revolution in the fields of (sign) language studies, but also changed Deaf² education and the treatment of Deaf communities [Stokoe 2005].

A large body of research exists on the creation of robust sign language detection systems within the field of computer vision. Yet sign languages appear to have rarely been used as a starting point for general gesture interface designs. Native signers also appear never to have been consulted as expert users on the topic of gestures. A review of the literature suggests that, compared to other gesture styles, sign language as a category is defined by its linguistic nature. It is therefore dismissed outside the field of sign language recognition.

I believe this dismissal is premature, as it implies that we already know what sign languages and gestures are. It overlooks the fact that, as a whole, sign languages incorporate all gesture styles. It also misses the possibility of breaking them down into elements that might provide insights and ideas for designing gesture interfaces.

Furthermore, where gesture interfaces are appropriate is still an open question. Looking at the current NUI and NoUI design movements, the general consensus appears to favour “intuitive” and “simple” interfaces: manipulating things in a way that approximates how you would use your hands “in real life.” However, that could also reflect an unfamiliarity with gestures at the conscious level, leading to simple, basic designs. Yet people for whom sign language is a first language have more hands-on experience with gestures than any other group. They also use gestures in a symbolic and abstract way that is unfamiliar to those of us who do not know sign languages. Investigating this might lead to concepts that allow for richer, more expressive gesture interface designs. It seems rather odd, then, not to consult the Deaf beyond the creation of sign language recognition systems. This is the main subject of this thesis: to explore whether new gesture interface design insights could be gained from studying sign language and its users.

¹ This report distinguishes between sign and vocal languages. The term spoken languages refers to both, as distinguished from written language.

² There is a convention to distinguish between the Deaf who are part of a sign language community and those who are not by capitalising the former. This report only discusses Deaf people who know sign language, but still follows this convention.

This report starts with an overview of relevant literature in the fields of both gesture interface design and sign language. The overview is followed by a discussion of graphical and text-based user interfaces in the context of language. This discussion is effectively a short essay on language and metaphors in interface design. It explains in more detail why I believe dismissing sign languages based on their linguistic nature is a mistake. Another reason for this discussion is the choice of the “desktop metaphor”, or Windows, Icons, Menus and Pointers (WIMP) paradigm, as a starting point for the eventual design prototype. The prototype is explored in the last part of this report. It is an example user interface designed by taking an insight from sign language as a starting point to replace the desktop metaphor. That insight is then combined with the text-based interfaces discussed earlier to extend the WIMP paradigm. This experimental design was used as a way to pose questions to users about potential uses of gesture interfaces. The report ends with a conclusion.

2 Related Literature And Previous Work

2.1 Sign Language Literature

Sign languages were not considered full languages by the academic majority until William C. Stokoe published “Sign Language Structure: An Outline of the Visual Communication Systems of the American Deaf” in 1960. As a result, modern sign language research is a relatively young field. Because of the strong relation between language, culture and identity, it is also a subset of Deaf studies. Research topics include the role that sign language plays in the cognitive development of the pre-lingually Deaf (and by extension the role of any type of language in cognitive development). They also include analysing the linguistic features of a sign language, and what these tell us about languages in general and the origin of language itself.

Many misunderstandings surround sign languages, and to avoid miscommunication we summarise some common ones here [Liddell 2003]. Sign languages are not pantomime, but full natural languages. They are not universal: Deaf communities spontaneously develop their own sign language in a process similar, if not identical, to how pidgin and creole languages form. Similarly, there is no standardised sign alphabet that allows sign to be written, although a few attempts have been made at designing such an alphabet³. In practice, this means that most literate Deaf people are at least bilingual, knowing sign and the written form of the locally spoken vocal language. Sign languages are not vocal languages with gestures replacing sound. The mediums used are fundamentally different, so some aspects of sign language cannot be mapped one-to-one to vocal languages, and vice versa. Furthermore, a sign language consists of more than just hand gestures, as stated by Keating and Mirus [2003]:

“A signed language is much more than a manual system. Signers communicate important grammatical, affective, and other information through facial expressions. Shape of the hands, orientation, location, and movement are all important components of sign language communication. American Sign Language (ASL) uses a system of classifier handshapes to refer to objects, surfaces, dimensions, and shape. There are important functions served by non-manual expressions such as head movement, eye movement, and specific facial expressions. A question, for example, can be signalled by raised eyebrows, widened eyes, and a slight leaning forward of the head. Eyes are powerful turn-taking regulators. Finger-spelling is used for names or new terms, and also some borrowed terms, such as 'well' or 'cool', and sometimes for emphasis. Involvement can be shown through affective displays, role playing, and direct quotation. Signed languages can communicate several things simultaneously where spoken languages would do this sequentially. The majority of signs are made in the neck or head area (though this has changed over time).” [Keating and Mirus 2003]

This suggests that sign language and its users may be a rich source of inspiration and insights for gesture research. They may also inform a broad range of multi-modal input, such as eye-tracking and facial expressions. However, this was outside the scope of this thesis and was not investigated further.

A notable difference from vocal languages is that the medium of gestures allows certain concepts to be expressed simultaneously, where vocal languages would express them sequentially. Verbs of motion in American Sign Language (ASL) are an example of this, stacking up to seven morphemes in one sign (fig. 1 and 2). People who are only used to vocal languages tend to have difficulty with this. Those who learn sign language after vocal languages often make so-called “split verb” errors:

“For example, if the target showed a vehicle moving uphill (normally represented by a single complex verb of motion with three simultaneously articulated morphemes VEH+LINEAR+UPHILL), a SPLIT error might contain CAR, MOVE, STRAIGHT, UPHILL as separate lexical signs.” [Singleton and Newport 2004]

While language and thought are not the same, the former does provide structure for the latter [Sacks 1990], [Mcgilchrist 2009]. With this in mind, the non-Deaf may tend to implicitly design in a way that fits the sequential nature of vocal languages. The overview of interface design papers seems to support this: even two-handed gesture interfaces appear to be structured sequentially for the most part. This ability of sign language to express multiple morphemes simultaneously has been used as the starting point for the design prototype. The aim was to design an interface that allows two hands to be used for richer forms of expression.
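As a rough illustration of the structural difference, and not a linguistic model, the Singleton and Newport example can be encoded in two ways. The verb of motion becomes one sign carrying several simultaneous morphemes. The split error becomes a sequence of separate signs. The `Sign` class and the two helper functions are hypothetical; only the glosses come from the quote above.

```python
from dataclasses import dataclass

# Hypothetical encoding, for illustration only: one Sign bundles every
# morpheme that is articulated at the same time.
@dataclass(frozen=True)
class Sign:
    morphemes: frozenset[str]

# A single complex verb of motion: three morphemes expressed at once.
simultaneous = [Sign(frozenset({"VEH", "LINEAR", "UPHILL"}))]

# A SPLIT error: similar content spread over four separate lexical signs.
split = [Sign(frozenset({m})) for m in ("CAR", "MOVE", "STRAIGHT", "UPHILL")]

def sequential_steps(utterance: list[Sign]) -> int:
    """Number of signs that have to be produced one after another."""
    return len(utterance)

def max_parallel(utterance: list[Sign]) -> int:
    """Largest number of morphemes carried by any single sign."""
    return max(len(sign.morphemes) for sign in utterance)

print(sequential_steps(simultaneous), max_parallel(simultaneous))  # prints: 1 3
print(sequential_steps(split), max_parallel(split))  # prints: 4 1
```

Seen this way, a sequentially structured interface resembles the split form: one unit of meaning per step. The prototype aims for the first form.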

Fig. 2: Seven Morpheme Categories of ASL Verbs of Motion

Source: "When learners surpass their models," based on earlier work by T. Supalla

Fig. 1: An artistic interpretation of a verb of motion expressed in a vocal language (top) and a sign language (bottom)

2.2 Gesture-based interface design papers

Before discussing gesture interface design, it is prudent to make sure we do so in unambiguous terms. The work by Maria Karam and m.c. schraefel (lower-case intentional) on classifying gesture interface research [Karam 2005], [Karam 2006] provides a clear framework for this, as well as an overview of previous gesture research. The framework defines five gesture styles: deictic, manipulative and semaphoric gestures, gesticulation and sign language. Note that sign language is considered a distinct category.

Deictic gestures involve pointing as a way to establish the identity or spatial location of an object within the context of the application domain. Manipulative gestures are defined as those whose intended purpose is to control some entity by applying “a tight relationship between the actual movements of the gesturing hand/arm with the entity being manipulated” [Quek et al. 2002]. Semaphoric gestures are defined to have a symbolic meaning, for example when a specific handshape communicates a specific command. Research on gesticulation focuses on the technical challenge of interpreting the gestures that accompany speech, in order to clarify the speaker's intent. Meanwhile, sign language appears to have been studied only in the context of automatic sign language recognition:

“Gestures used for sign languages are often considered independent of other gesture styles since they are linguistically based and are performed using a series of individual signs or gestures that combine to form grammatical structures for conversational style interfaces. [...] Although it is feasible to use various hand signs as a means of communicating commands as with semaphoric gestures, this is not the intention of the language gestures. Rather, because sign languages are grammatical and lexically complete, they are often compared to speech in terms of the processing required for their recognition. In addition, the applications that are intended for use with sign language are communication based rather than command based and most of the literature is focused on the difficult task of interpreting the signs as a meaningful string” [Karam 2005]

There is a large body of technical research papers on sign language recognition systems. However, this thesis focuses neither on the technical challenge of detecting gestures nor on the transcription of sign language as computer input. More significant for the topic at hand is that no research papers were found in which sign language was taken as a starting point for gesture interface design.

Numerous designs using gestural input already exist. One of the earliest demonstrations in an HCI context is “Put-that-there” [Bolt 1980]. It used deictic gestures in a multi-modal context: one-handed pointing combined with voice commands. “Two-Handed Gesture in Multi-Modal Natural Dialog” [Bolt and Herranz 1992] also explores gestures in a multi-modal context, in this case two-handed manipulative gestures augmented by voice commands and eye-tracking (fig. 3). The paper by Bolt and Herranz [1992] suggests that users were more likely to use two-handed gestures as the scale of the task increased, virtually mimicking real-life tasks. This multi-modal pantomiming was presumed to be intuitive and natural. The work could be considered a predecessor of the current Natural User Interface (NUI) movement.

Two-handed input is not limited to gestures, and a number of papers exist on the topic in a broader sense. [Buxton and Myers 1986], [Kabbash et al. 1994] and [Leganchuk et al. 1998] explore different designs for two-handed input in the context of navigation/selection tasks. The paper by Leganchuk et al. also discusses the cognitive benefits of certain designs. Finally, [Guiard 1987] gives a framework for abstractly modelling asymmetric two-handed action. Many gesture interface papers refer to it, so it is worth summarising here.

2.2.1 The Kinematic Chain as a model for asymmetric bi-manual actions

Guiard defines a kinematic chain as a model describing serially linked motors, where the preceding motor influences the motion of those that follow (fig. 4). This could for example mean shoulder, elbow and wrist: shoulder movement influences both elbow and wrist location and therefore movement, and similarly elbow movement influences wrist location and therefore movement.
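The idea of serially linked motors can be sketched as planar forward kinematics. Each joint angle is added to the orientation inherited from the joints before it. Moving the shoulder therefore relocates the elbow and wrist, while moving the wrist affects nothing upstream. The segment lengths and angles are made-up values, and the function name is hypothetical. Guiard's model is conceptual and does not prescribe this particular computation.

```python
import math

def chain_positions(lengths, angles):
    """Return the 2D end point of each segment in a serial chain.

    Each angle is relative to the previous segment, so a change early
    in the chain propagates to every later joint.
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = []
    for length, angle in zip(lengths, angles):
        heading += angle  # orientation accumulates along the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points

# Hypothetical upper arm, forearm and hand lengths (arbitrary units);
# the returned points are the elbow, wrist and fingertip positions.
arm = [3.0, 2.5, 1.0]
rest = chain_positions(arm, [0.0, 0.0, 0.0])
shoulder_moved = chain_positions(arm, [math.radians(30), 0.0, 0.0])
wrist_moved = chain_positions(arm, [0.0, 0.0, math.radians(30)])
```

Compare the results: rotating the shoulder moves all three end points, whereas rotating the wrist moves only the fingertip.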

In a more abstract sense, Guiard also argues that the left and right hand can be seen as two functional motors in a kinematic chain. In this context, each hand really means the whole kinematic chain from shoulder to fingertips. For right-handed people, the left hand precedes the right hand in the chain. This model foregoes the notion of a “dominant hand”, instead defining what we usually call the dominant hand as the final link in the kinematic chain. For example, a right-handed writer holds the paper with the left hand to set the reference frame for the right hand, and dynamically moves the paper around while writing (see fig. 5).

Fig. 3: Two hands, one gesture

Control thus flows from left to right in three ways. First, the left hand sets the reference frame for the right hand. Second, motion goes from coarse to fine-grained: the left hand performs the coarse movement and the right hand refines it. Third, the left hand has temporal precedence, which follows from the other two. The right hand cannot do its work until the left has set the reference frame, nor can it refine the movement of the left until the left hand has made its motions.
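The reference-frame idea behind fig. 5 can be sketched as a coordinate transform. The right hand writes in paper coordinates, and the left hand decides where the paper sits on the desk. The example is deliberately extreme: it assumes the left hand slides the paper back by exactly the distance written, which real writers only approximate. The names `to_world`, `stroke` and `frames` are hypothetical.

```python
import math

def to_world(point, frame):
    """Map a point from paper coordinates to desk (world) coordinates.

    frame = (dx, dy, theta): the paper's offset and rotation, which in
    this sketch is set by the left hand.
    """
    px, py = point
    dx, dy, theta = frame
    c, s = math.cos(theta), math.sin(theta)
    return (dx + c * px - s * py, dy + s * px + c * py)

# Fine movement: the right hand writes a straight line across the paper...
stroke = [(float(i), 0.0) for i in range(11)]
# ...coarse movement: the left hand slides the paper back by the same amount.
frames = [(-float(i), 0.0, 0.0) for i in range(11)]

# Where the right hand actually is on the desk at each step.
hand_positions = [to_world(p, f) for p, f in zip(stroke, frames)]
```

In this idealised case, the line on the paper is ten units long while the writing hand never leaves the origin. That matches the qualitative point of fig. 5: the written result and the hand's actual path differ because the left hand keeps moving the frame.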

At first glance, this kinematic chain model appears to overlap with the analysis of the ASL verbs of motion. These have a central and a secondary object handshape, the latter being the frame of reference for the former. However, the simultaneous expressiveness present in these verbs of motion does not appear to follow from the model, which is a linear chain. The model does suggest why so-called “bi-manual cooperative action” allows for more complex motions (and therefore gestures) than single-handed actions alone (fig. 4).

Fig. 5: Left: the result of right-handed handwriting. Right: the actual positions of the right hand during the writing process.

Source: [Guiard 1987]

Fig. 4: Abstract conceptualisation of the left hand (red circle), the right hand (green square) and the emergent complexity of their bimanual cooperation (blue triangle)

Finally, a to-be-published paper by Miguel A. Nacenta, Yemliha Kamber, Yizhou Qiang and Per Ola Kristensson reports three findings about user-created gestures. Users consider creating their own gestures to be faster than memorising predesigned ones. They are better at recalling and using them later. In general, they experience these gestures as “easier, more fun and less effortful” [Nacenta et al. 2013]. This ties into what is often an open question in gesture interface research: which gestures are most appropriate, and when? Perhaps this question is hard to answer because it is the wrong question to begin with. It implicitly assumes that gestures are “intuitive” and “natural”. At least for semaphoric gestures, this cannot be true, because symbols are inherently learned. This mistake closely resembles the misunderstanding, mentioned earlier, that sign language is a universal language. To use language as a metaphor: trying to find the best gestures for “start”, “stop”, “louder” and “softer” in a media player program is like looking for the human language with the best words for these four concepts. Yet what matters is the meaning of a word, not the form the word takes. Instead of focussing on the form, the “how to gesture”, we should perhaps first focus on “what to gesture”, and in which contexts we want to use gestures. Consensus and standardisation certainly have value. However, gesture interfaces are arguably not yet at the stage where these should be the main focus. We should therefore put more emphasis on the design reasoning behind gesture frameworks and platforms, and on how the gestural commands relate to each other in a broader context. Less emphasis should go to the specific motions of the gestures, which should ideally be easily configurable.
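One way to read this argument in software terms is to separate the set of commands (the “what”) from the gesture forms that trigger them (the “how”), and to let users edit the latter. The sketch below is hypothetical. The command names come from the media player example above. The gesture labels, the class design and the use of plain strings are assumptions, and gesture recognition itself is left out entirely.

```python
from enum import Enum, auto
from typing import Optional

class Command(Enum):
    """What to gesture: the commands a media player might expose."""
    START = auto()
    STOP = auto()
    LOUDER = auto()
    SOFTER = auto()

class GestureBindings:
    """How to gesture: a user-editable map from gesture labels to commands."""

    def __init__(self) -> None:
        self._bindings: dict[str, Command] = {}

    def bind(self, gesture: str, command: Command) -> None:
        # Binding an existing gesture again replaces its previous command.
        self._bindings[gesture] = command

    def interpret(self, gesture: str) -> Optional[Command]:
        # Gestures without a binding are ignored rather than guessed at.
        return self._bindings.get(gesture)

# Hypothetical gesture labels, as if produced by some recogniser.
bindings = GestureBindings()
bindings.bind("open palm", Command.STOP)
bindings.bind("thumb up", Command.LOUDER)
```

A user who prefers a different form can rebind it, for example with `bindings.bind("open palm", Command.START)`, and the rest of the application does not change. Which commands exist, and how they relate to each other, remains a design decision.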

2.3 Text- and graphical pointer-based interfaces in the context of language and metaphors

Sign language is placed in its own category of gesture interface design, defined by its linguistic nature. This might explain why gesture interface design has overlooked it, because language-based interaction in general is seen as outdated. The command line and similar text-based interfaces are generally considered obsolete. Only expert users in certain niches, such as programming, prefer them over graphical user interfaces and pointer-based input. Yet in those situations, the use of language still appears irreplaceable. Analysing why this is the case provides some ideas about how sign language can be useful for gesture interface research.

2.3.1 Language in contemporary computer interfaces

To start with, let us look at the presence of language in the modern PC. All operating systems with a graphical shell provide a kind of start menu that can be navigated with the mouse or the arrow keys. Yet all modern versions also have some form of “type to search” functionality (fig. 6). The browser still fundamentally relies on URLs, which are strings of text signifying the location of a web site. At the very least, it relies on typing a question into a search engine. Language lies at the foundation of all of our day-to-day software: web pages are built with HyperText Markup Language and often use JavaScript. Digging deeper, computer programs are built with programming languages. Why is language so hard to root out? The answer probably lies in its unique qualities for expressing and manipulating ideas and concepts:

“Language refines the expression of causal relationships. It hugely expands the range of reference of thought, and expands the capacity for planning and manipulation. It enables the indefinite memorialisation of more than could otherwise be retained by any human memory. These advantages, of memorialisation and fixity, that language brings are, of course, further vastly enhanced when language becomes written, enabling the contents of the mind to be fixed somewhere in external space. And in turn this further expands the possibilities for manipulation and instrumentalisation.” [Mcgilchrist 2009]

Language allows us to turn abstract thoughts into virtual “things” in our mind, which can then be virtually structured and stored. Humans who have been denied language tend to have little conception of past or future, always being in the present. They are also very limited in the types of memory available to them [Sacks 1990]. Furthermore, having a language allows a mind to manipulate thoughts in ways it could not before, allowing for complex, abstract conception:

“Language permits us to deal with things at a distance, to act on them without physically handling them. First, we can act on other people, or on objects through people … Second, we can manipulate symbols in ways impossible with the things they stand for, and so arrive at novel and even creative versions of reality … We can verbally rearrange situations which themselves would resist rearrangement … we can isolate features which in fact cannot be isolated … we can juxtapose objects, and events far separated in time and space … we can, if we will, turn the universe symbolically inside out.” [Sacks 1990]

Fig. 6: Type to search.

A quote by Stephen Fry illustrates this in a humorous context: “Hold the newsreader's nose squarely, waiter, or friendly milk will countermand my trousers.” This sentence is unlikely to describe anything that ever happened or will happen, yet that is precisely the point: a language can depict or refer to something that is not there. It also permits the “creation” of anything that can be expressed in words, without actually creating what the words refer to. This gives language great expressive power. A pure GUI cannot do this, because in this sense its greatest strength is also its greatest weakness. Its mode of interaction depends on the immediacy of interacting with what is seen, and an icon that is not there cannot be clicked on. The WIMP paradigm circumvents this problem with the “menu” part of the acronym. It offers pre-defined lists of options, or a form field that users can type in: essentially, it invokes language! Anyone who wants to design interfaces that move beyond the WIMP paradigm should consider what role language will play in that design.
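A type-to-search filter like the one in fig. 6 makes the contrast between clicking and typing concrete. The user does not have to see an item to select it; naming part of it is enough. The function below is a minimal sketch. The program names are invented, and the ranking rule (case-insensitive substring matching, prefix matches first) is an assumption rather than how any particular operating system implements it.

```python
def type_to_search(query: str, items: list[str]) -> list[str]:
    """Return the items whose name contains the query, ignoring case.

    Items that start with the query come first, followed by the other
    substring matches; each group keeps its original order.
    """
    q = query.casefold()
    matches = [item for item in items if q in item.casefold()]
    prefix = [item for item in matches if item.casefold().startswith(q)]
    rest = [item for item in matches if not item.casefold().startswith(q)]
    return prefix + rest

# Hypothetical start menu entries; in a real menu only some are visible.
programs = ["Calculator", "Calendar", "Paint", "Terminal", "Text Editor", "Mail"]
```

For instance, `type_to_search("t", programs)` ranks "Terminal" and "Text Editor" ahead of "Calculator" and "Paint". A menu, by contrast, can only show what it already lists.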

2.3.2 The importance of Metaphors

Alan Kay, one of the main designers of the Xerox Alto user interface that defined the WIMP paradigm, is known to be quite sceptical of how metaphors are used in UI design, calling them “user illusions” [Kay 1990]. This is understandable: for decades he has been trying to move computer interfaces beyond the desktop metaphor. In a recent interview, Kay described the paradigm as “a bicycle with training wheels, and nobody knows the training wheels are on it, because they don't know it's supposed to be a bike,” later adding: “[After thirty years it is] completely encrusted with jewels and rhinestones, because it has been decorated in a thousand different ways, features have been put on it, but it has still got the fucking training wheels!” [Internet Archive 2013]

What these “training wheels” might be will be discussed later. For now, note that they are themselves a metaphor. This shows how fundamental metaphors are in enabling us to grasp ideas, despite Kay's wariness of their usage. The following passage by Iain McGilchrist argues this more clearly:

“Language functions like money. It is only an intermediary. [...] To use a metaphor, language is the money of thought. [...] Metaphoric thinking is fundamental to our understanding of the world, because it is the only way in which understanding can reach outside the system of signs to life itself. It is what links language to life. [...] At the 'bottom' end, I am talking about the fact that every word, in and of itself, eventually has to lead us out of the web of language, to the lived world, ultimately to something that can only be pointed to, something that relates to our embodied existence. Even words such as 'virtual' or 'immaterial' take us back in their Latin derivation – sometimes by a very circuitous path – to the earthy realities of a man's strength (virtus), or the feel of a piece of wood (materia). Everything has to be expressed in terms of something else, and those something elses eventually have to come back to the body. To change the metaphor (and invoke the spirit of Wittgenstein) that is where one's spade reaches bedrock and is turned. There is nothing more fundamental in relation to which we can understand that. [...] That is why it is like the relation of money to goods in the real world. Money takes its value (at the 'bottom' end) from some real, possibly living, things – somebody's cows or chickens, somewhere – and it only really has value as and when it is translated back into real goods or services – food, clothes, belongings, car repairs – in the realm of daily life (at the 'top' end). In the meantime it can take part in numerous 'virtual' transactions with itself, the sort of things that go on within the enclosed monetary system.

Let me emphasise that the gap across which the metaphor carries us is one that language itself creates. Metaphor is language's cure for the ills entailed on us by language (much as, I believe, the true process of philosophy is to cure the ills entailed on us by philosophising). If the separation exists at the level of language, it does not at the level of experience. At that level the two parts of a metaphor are not similar; they are the same.” [Mcgilchrist 2009]

No interface design concept (or any concept in general) can truly make sense to us unless we can relate to it at an embodied level, and we do this through metaphors. An example in interface design is skeuomorphism: the stylistic choice to graphically design the interface to emulate real-world objects (fig. 7). This is a very literal metaphor: “this element functions like the object in or feature of the physical world being alluded to.” This method of explaining functionality by similarity has also often been likened to training wheels. It is currently going out of fashion in favour of “flat” designs (fig. 8). The presumption is that people are now familiar with computers and touch screen interfaces, so a button no longer needs to represent a physical button to convey how it is used. An obvious question is whether these skeuomorphs are the same “training wheels” Alan Kay referred to. This seems unlikely, if only because the first graphical user interfaces were in many ways as flat as contemporary interfaces (fig. 9). That flatness was due to technical limitations rather than a stylistic choice. More likely, these forms of skeuomorphism are the “jewels and rhinestones”. Removing them does not change the underlying problem; it just brings us back to the original undecorated bicycle, as figure 9 subtly illustrates.

A different argument for removing skeuomorphs is that such literal metaphors limit freedom of expression, because they supposedly tie perception and usage to the limitations of their real-life counterparts. By that logic, getting rid of them would liberate design possibilities. However, this fails to address what metaphors we are left with. We may be stuck with the desktop metaphor for a reason as banal as not having come up with a better one. Simply removing it will not do if no other metaphor connects the interface to our embodied experience. For that reason, it is worth investigating what metaphors Kay uses when describing the design of the Xerox Alto: what were the bicycle wheels he referred to? Since we are discussing the role of language in graphical and text-based user interfaces, this also gives us an opportunity to see what role language played in its design.

2.3.3 The metaphors underlying the design of the Xerox Alto

When describing the design reasoning behind the Xerox Alto [Kay 1990], Kay takes as his starting point the work of psychologist Jerome Bruner. Bruner concluded that our minds develop through at least three stages: enactive, iconic and symbolic. The three systems are good and bad at different things. Presumably because they evolved separately, they more often interfere with each other than work together (note, however, that sign language covers all three types at once, unlike vocal language). Because none of them is superior to the others, Kay suggested that the best strategy would be to “force gentle synergy between them in the user interface design [...] one should start with – be grounded – in the concrete, [...] and be carried into the more abstract.” This mentality is embodied in the slogan “Doing with Images makes Symbols.”

Fig. 7: Extreme skeuomorphism: the interface of the Redstair GEARcompressor AU-Plugin (OS X)

Fig. 8: An example of flat interface design: elements from the Flat UI Kit by DesignModo

Source: http://designmodo.github.io/Flat-UI/

Fig. 9: Something in between: icons from the Xerox Star interface

How this concept mapped to the design of the Xerox Alto interface is shown in table 1.

2.3.4 Language in contemporary computer interfaces, part II

Smalltalk, the designed manifestation of the symbolic mentality, was a programming language integrated into the GUI that would allow users to manipulate, deconstruct and reconstruct the software at a relatively deep level of the computer. Smalltalk played the role of language in the larger whole of the user interface, and it is very much centred around playing with embodied (virtual) metaphors. This concept was not adopted by Apple when it designed the interface for the first Macintosh, which was based on the Xerox PARC GUI, and therefore it was not used by those who copied the Macintosh afterwards (effectively everyone).

Instead of embedding a symbolic layer in the GUI, every desktop computer now comes with some form of the older command line interface (CLI), as it is still part of the core foundation upon which the graphical shell is built. However, the command line interface has all kinds of strange quirks that are just as much the result of history as of conscious design [Kernighan and Pike 1983].

Because the average user does not have to worry about the internal workings of the computer and approaches it from the outside, encountering the graphical shell first, their exposure to text-based interfaces will likely be limited to the browser's address bar, some form of search tool and writing texts. Text-based interfaces have not been abandoned by the average user; they are simply not picked up, because neither the CLI nor any other language-based form of interaction is a “first class citizen” of the GUI.

| Slogan | Interface element | Mentality | Function |
|---|---|---|---|
| Doing | Mouse | Enactive | Know where you are, manipulate |
| with Images | Icons, windows | Iconic | Recognize, compare, configure, concrete |
| makes Symbols | Smalltalk (a programming language integrated into the graphical user interface) | Symbolic | Tie together long chains of reasoning, abstract |

Tab. 1: Three Mental Stages

This, then, is likely what Kay refers to when he speaks of the desktop metaphor being limited by training wheels: the inability to grow beyond iconic and enactive interaction with the computer into a symbolic one without effectively leaving the GUI environment and diving into a lower level of the computer by using a programming language or a CLI.

It is important to note that the CLI is not the only possible language-based interface for a computer, and neither is Smalltalk. For example, a number of experimental command line interfaces with different approaches to their design have surfaced in recent years, notable examples being Xiki by Craig Muth⁴, Terminology by Carsten Haitzler⁵ and TermKit by Steven Wittens⁶.

Another intriguing text interface in a GUI context is the ACME text editor by Rob Pike [Pike 1994]. It has no icons, using text labels instead (fig. 10). However, any piece of text can be interpreted as an executable command by clicking on it, simultaneously giving text labels the functionality of buttons in a GUI and of text commands in a CLI. Combined with allowing the user to change labels on the fly, the result is the expressiveness of language in an otherwise pointer-based GUI environment.

Another example of mixing iconic and symbolic forms of interaction can be found in visual programming environments, like the Scratch programming language (fig. 11) or Pure Data (fig. 12). It is interesting to contrast these with recent interfaces for the creation of dynamic images that have been designed by Bret Victor (fig. 13). All three of these are examples of environments trying to embody Kay's “doing with images makes symbols” slogan, but the approaches are fundamentally different.

4 http://xiki.org/

5 http://www.enlightenment.org/p.php?p=about/terminology

6 http://acko.net/blog/on-termkit/

Fig. 12: The ACME text editor

Source: http://doc.cat-v.org/plan_9/4th_edition/papers/acme/

Fig. 11: Patch written in Pure Data

Source: Miller Puckette, Wikimedia Commons

[Figure content: a patch that filters white noise at 900 hertz, then fades it in and out every second, over the course of a half second.]

Fig. 10: “Code for a flying bat in Scratch”

Scratch and Pure Data first visualise the structure of language and let the user manipulate that: one drags and drops keywords and connects them to form “sentences” in a programming language. These “sentences” are then interpreted by the computer to generate the actual output of the program. The problem is that one still has to express what needs to be done in the form of a sentence before the computer can do it. In other words, one must conceptualise what one wants the computer to do (at this stage the thoughts do not need to be in the form of a language); these ideas then have to be translated into symbolic concepts, which are in turn expressed and manipulated through iconic means. This effectively requires two steps of translation between different modes of thinking.

Victor's interface (it does not seem to have a name yet) starts with the user creating and manipulating shapes, which is accompanied by sentences expressing how the computer interprets these actions. These sentences are stored as steps, recording how a drawing was made. The sentences/steps can then be manipulated by dragging words from datasets or other sentences on top of words in a sentence, effectively replacing static words with variable data, which can then be changed to alter the drawing dynamically. For example, in figure 13, in the sentence “Rotate rect from rect's top left by 11.4%”, the element “rect's top left” can be replaced by another anchor, like the circle's centre, changing the pivot around which the rectangle is rotated. Similarly, “11.4%” can be replaced by a dataset, effectively turning the image into a customised graph. This example program is only a data visualisation tool: it allows the user to start from a concrete example drawing and turn it into a general template to interpret and display a dataset. However, Victor has also created a variation that allows for dynamic input and the creation of interactive animations [Victor 2013]. This opens the possibility of, for example, live performances and possibly more general forms of programming.

Fig. 13: “Drawing Dynamic Visualisations” by Bret Victor
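The word-replacement mechanism can be illustrated with a minimal Python sketch, assuming a recorded step is a sentence with typed slots that hold either a constant or a dataset. The `RotateStep` class, its fields, and the choice to read “11.4%” as a fraction of a full turn are hypothetical; this is not how Victor's prototype is actually built.

```python
from dataclasses import dataclass, replace
from typing import List, Sequence, Tuple, Union

# A slot holds either a fixed number or a dataset (a sequence of numbers).
Amount = Union[float, Sequence[float]]


@dataclass(frozen=True)
class RotateStep:
    """A recorded step such as "Rotate rect from rect's top left by 11.4%".

    Hypothetical model for illustration only. `amount` is assumed to be a
    fraction of a full turn (0.114 stands for 11.4%).
    """
    shape: str
    pivot: str
    amount: Amount

    def sentence(self) -> str:
        # Render the step as the kind of sentence shown to the user.
        if isinstance(self.amount, (int, float)):
            how_much = f"{self.amount * 100:.1f}%"
        else:
            how_much = "each value in the dataset"
        return f"Rotate {self.shape} from {self.pivot} by {how_much}"

    def evaluate(self) -> List[Tuple[str, str, float]]:
        # A constant yields one rotation (in degrees); a dataset yields one
        # rotation per data value, which turns the drawing into a graph.
        if isinstance(self.amount, (int, float)):
            amounts = [self.amount]
        else:
            amounts = list(self.amount)
        return [(self.shape, self.pivot, a * 360.0) for a in amounts]


step = RotateStep("rect", "rect's top left", 0.114)
print(step.sentence())  # Rotate rect from rect's top left by 11.4%

# "Drag" another anchor onto the pivot words: same step, new pivot.
pivoted = replace(step, pivot="circle's centre")

# "Drag" a dataset onto "11.4%": one rotated rectangle per data value.
graph = replace(pivoted, amount=[0.1, 0.25, 0.5])
print(graph.sentence())
print(graph.evaluate())
```

Keeping each step immutable and producing edited copies mirrors the idea that the recorded steps stay a readable history of how the drawing was made, while individual words in them are swapped for other anchors or data.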

More importantly, in the interface Victor has designed, the mode of thinking and the mode of expression match: iconic manipulation to express iconic ideas, symbolic manipulation to express symbolic thinking. While we may lose some of the ability to directly and symbolically “turn the universe inside out” this way, Victor has argued that in many situations this is a far more straightforward and intuitive approach to instructing the computer what to do.

2.3.5 In conclusion, and relating this into sign language

As we have seen, language plays an important role in adding symbolic expressiveness to interfaces on top of enactive and iconic forms of interaction. Choosing the right metaphor as a foundation for the design matters, and many different metaphors have been tried as a way of incorporating language into interfaces over the years. All of them involve language as a way to manipulate symbols. However, the ability to manipulate is itself rooted in metaphor: the capability to grasp something, as the etymology of the word suggests. To cite Kay (emphasis mine):

“As I see it, we humans have extended ourselves in two main ways. The first to leap to anyone's mind is the creation of tools – physical tools like hammers and wheels and figurative mental tools like language and mathematics. To me, these are all extensions of gesture and grasp. Even mathematics, which is sometimes thought of as forbiddingly vague, is actually a way to take notions that are too abstract for our senses and make them into little [...]

At the moment, most gesture interfaces use either manipulative and deictic gestures, similar to how the mouse and other pointing devices have been used before, or semaphoric gestures triggering an action, similar to clicking a button in a GUI or starting a program in a CLI. These are, respectively, enactive and iconic ways of using gestures. What seems to be missing are designs using gestures in a symbolic context, although this is true for many GUIs as well. Sign language uses all three modes of thinking at once. The discussed text-interface designs show different ways of incorporating language into an interface, and combining these ideas with insights from sign language could provide a fruitful starting point.

3 “Hold That Thought” - Design prototype and user feedback

Recall that the aim of this thesis is to investigate whether sign language might provide valuable knowledge to the field of gesture interface design. The originally planned research method was to do research through design in collaboration with the Deaf, discussing the nature of sign language in a hands-on manner, and probing their tacit knowledge of gestures using small and simple prototypes inspired by these discussions. However, the members of the local Deaf community that I managed to get in contact with turned out to be hesitant to join the project, either having difficulty understanding what the project was about, not seeing the point of it, or simply not being interested in the topic.

An alternative approach was therefore chosen: to review the sign language literature and single out features specific to sign language that might be potential design openings. Of these, simultaneous expressiveness was selected. An interface prototype that tried to capture this ability without requiring the user to know sign language was designed and programmed as a proof of concept to demonstrate the feasibility of designs based on sign language. This prototype was then shown to various users, who gave their feedback on the concept and its implementation, leading to further iterations of the prototype.

3.1 Design Concept

3.1.1 Starting Metaphor

The starting point for the design was the simultaneous expressiveness of sign language. In the case of ASL verbs of motion, each hand has a shape morpheme as an object classifier, with up to five more morphemes defined by location and motion to signify the motion itself (fig. 1). The essence is that the hands represent something using handshapes (semaphoric gestures), and the motion of the hands (deictic/manipulative) signifies the relation between the two.

As discussed before, metaphors are important for our sense of understanding in general, and in this particular case they helped with looking beyond the “verb of motion” that was the starting point. The root metaphor is as follows: everything in the computer is an expression of an idea, a Thought (fig. 14a). Taking the expression “grasping an idea” literally, Thoughts can be held and manipulated by the hands (fig. 14b). By applying Thoughts to one another, one can then transform them or create new Thoughts (fig. 14c and 14d).

| Hand gesture | Mouse input | Action |
|---|---|---|
| “Grab” | Left click + drag | Pick up Thought underneath pointer |
| “Activate” | Double left click | Activate Thought underneath pointer |
| “Apply Thought(s) to other hand” | | Applies all held Thoughts in hand sequentially to all Thoughts held in the other |
| “Drop last Thought” | Right click + drag | Drops the last Thought that was picked up at the current spot. If dropped on a Thought, applies it to that Thought |
| “Drop all Thoughts” | Double right click | Drops all held Thoughts, arranged in the order they were picked up. If dropped on a Thought, applies all Thoughts to that Thought in sequence |
| “Put cursor here” | Right click with empty hand | Puts the cursor underneath the pointer. If on top of a text-Thought, attaches it to that Thought |
| “Grab” with both hands and pull apart | Shift + Click | Copy grabbed Thought (both hands will hold a copy, mouse will leave one copy). Also works with cursors |

Tab. 2: Hand gestures and mouse input

| Key | Effect |
|---|---|
| Enter | Detach cursor and add Thought to right hand |
| Caps Lock | “Enter” for left hand |
| Ctrl + Enter | Add Thought to hand, apply Thoughts in hand to other hand |
| Shift + Enter | Add new line to Thought without detaching the cursor |
| Tab / Shift + Tab | Jump cursor forward or backward from one text-Thought to another |
| Arrow keys | Move cursor within attached text-Thought |

Tab. 3: Keyboard input, special keys

| Command | Effect |
|---|---|
| open | Opens file named by text-Thought (only works for plain text, images and movies) |
| close | Closes file (turns it into a text-Thought with the name of the file) |
| play/pause/stop | Play/pause/stop playing movie |
| clear/del | Removes the target Thought |
| toupper/tolower | Sets targeted text(s) to uppercase/lowercase |
| split | Splits text into individual words |
| help | Creates a text Thought with instructions |

Tab. 4: Commands defined in the prototype and their effects.

In a more elaborate prototype one would expect additional commands similar to those available in a CLI, and perhaps specific commands for manipulating the GUI environment.
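How a command Thought acts on a text Thought can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the prototype's code (which was written in Processing): only the text commands from Tab. 4 are modelled (toupper, tolower, split, clear/del), and the `Thought` class, the `COMMANDS` table and the `apply`/`apply_all` functions are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Thought:
    """Hypothetical stand-in for a text Thought (files, images, movies omitted)."""
    text: str


# Hypothetical command table mirroring part of Tab. 4. Each command maps a
# target Thought to the Thoughts that replace it; an empty list removes it.
COMMANDS: Dict[str, Callable[[Thought], List[Thought]]] = {
    "toupper": lambda t: [Thought(t.text.upper())],
    "tolower": lambda t: [Thought(t.text.lower())],
    "split": lambda t: [Thought(word) for word in t.text.split()],
    "clear": lambda t: [],
    "del": lambda t: [],
}


def apply(held: Thought, target: Thought) -> Optional[List[Thought]]:
    """Drop `held` on `target`: if the held text names a command, run it on
    the target and return the result; otherwise return None (no effect)."""
    command = COMMANDS.get(held.text.strip())
    return None if command is None else command(target)


def apply_all(held: List[Thought], targets: List[Thought]) -> List[Thought]:
    """Apply each held Thought in turn to every target, in the spirit of the
    "Apply Thought(s) to other hand" gesture. Non-commands leave targets as-is."""
    for h in held:
        next_targets: List[Thought] = []
        for t in targets:
            result = apply(h, t)
            next_targets.extend([t] if result is None else result)
        targets = next_targets
    return targets


if __name__ == "__main__":
    words = apply_all([Thought("split"), Thought("toupper")],
                      [Thought("hold that thought")])
    print([w.text for w in words])  # ['HOLD', 'THAT', 'THOUGHT']
```

Because a command is just text looked up by name, the same Thought can act as a label, as input or as an instruction depending on where it is dropped, which is the GUI/CLI hybrid the design aims for.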

This metaphor takes inspiration from Kay's concept of Object Oriented Programming. Kay appears to have chosen the word “object” as a metaphor to illustrate the idea of concretely manipulating an idea: “doing with images makes symbols”. However, the object oriented metaphor might have primed our thinking too much toward the concrete, similar to how the desktop metaphor seems to have hindered us in moving beyond it. Furthermore, within computer science OOP is a loaded term with many unwanted connotations like class hierarchies, so dropping it gives us the freedom to design something else. The metaphor of manipulating ideas is not alien to most people (take, for example, the expressions “hold that thought”, “grasping an idea” or “take the expression”), so we do not need the “object” metaphor to understand the idea of manipulating thoughts, and can simply call the action of doing so manipulating Thoughts.

The metaphor of Thoughts allows us to bypass the potential issue of having to learn many specific gestures for specific tasks: instead of learning the gesture for an idea, you can literally pick one up and manipulate it. In the context of a computer this will often be a file or a program, but it can also be written text, a button referring to either, or something yet to be thought of. What it does not solve, however, is one of the problems of CLI interfaces: textual commands have to be learned before they can be used, whereas buttons in a GUI can display their functionality, as can the list of words in a menu. There are potential solutions to this, but they were not thought of until after the project ended; they are discussed in section 3.2.3.

Fig. 14: Anything in the interface is a Thought, and Thoughts can be grasped, held and applied to each other.

The little figure resembling a musical note is a text cursor, with the small dot meant as an anchor that allows a pointer, represented by a hand icon, to grab and manipulate it.

In a way, this idea of applying one Thought to another already exists in GUIs in the form of drag-and-drop, where one can drag and drop files, folders and programs on top of each other to trigger some effect. However, this design goes beyond that in two ways. First, it adds the ability to drag text on top of text (or any other Thought), with one assuming the role of command and the other that of input (fig. 14 and 15). This way, the interface becomes a hybrid between GUI and CLI.

The second difference is that each hand has its own pointer with the ability to grab, manipulate and apply Thoughts to other Thoughts, including those held by the other hand. Guiard's kinematic chain can emerge spontaneously in this situation: one hand is used to hold a Thought (setting the reference frame) and the other to manipulate it or apply a different Thought to it, and in principle the platform allows for what Guiard calls bi-manual “co-operation”.

It has been argued that, due to a historical quirk, the current default set-up of mouse and keyboard works better for left-handed people than for right-handed people in the context of two-handed input [MacKenzie and Guiard 2001]. Since both hands can grab Thoughts and apply them to each other in this interface, it is inherently ambidextrous.

To summarise the interface design:

• Thoughts can be text, pictures, movies, [...] distinction between windows, icons and menus in the WIMP paradigm by providing a higher level abstraction that they all fall under, similar to Objects in Smalltalk.

• To manipulate Thoughts, the environment has pointers, which are the hands of the user (using a gesture detection system) and/or the mouse. These are represented with hand icons (left or right, to distinguish which hand) to emphasize that they can hold and manipulate Thoughts. They can grab, hold, pull apart and apply Thoughts and cursors, and in the case of hands have a limited set of gestures available to them (see table 2).

• There is a text cursor through which a user can create new text-based Thoughts, or manipulate existing ones with the keyboard (see table 3). The text cursor can be picked up and moved, or, with a “cursor” gesture or a right mouse click, immediately put underneath the spot of the relevant pointer.

• Similar to Pike's ACME, text can be interpreted and executed by dragging a Thought on top of another Thought, provided the first represents a command. In this case it will be applied to the latter. Alternatively, Ctrl+Enter will activate Thoughts held in a hand, similar to a CLI command. This can be text (assuming the word represents a command, see table 4) or a button.

• As a result of the previous two points, text input is integrated with the GUI, unlike standard WIMP interfaces where it exists in parallel to the graphics environment and the interaction is indirect.

• Buttons are special Thoughts that consist of sequences of Thoughts saved as icons. They are functionally similar to scripts in CLI environments. These icons can be executed by dragging them onto other Thoughts, or by dragging Thoughts on top of them. Icons can be created by dragging Thoughts onto a “create icon” icon, or deleted by dragging the “trash” icon on top of an icon. The default “trash” and “create icon” icons do not affect each other. A pop-up should appear above the icon when hovering with a pointer, to show which Thoughts are applied when using it.
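The button behaviour described in the list above can be sketched as follows, as a minimal Python illustration rather than the prototype's implementation. Text Thoughts are plain strings, and the command table, the `Button` class and the `create_icon`/`drop` functions, as well as the example button name “shout”, are hypothetical. The sketch shows a button as a stored sequence of command Thoughts applied in order, and that it works “both ways”.

```python
from typing import Callable, Dict, List

# Hypothetical commands on text Thoughts (modelled here as plain strings).
COMMANDS: Dict[str, Callable[[str], List[str]]] = {
    "toupper": lambda text: [text.upper()],
    "split": lambda text: text.split(),
    "del": lambda text: [],
}


class Button:
    """A saved sequence of command Thoughts shown as an icon, akin to a CLI script."""

    def __init__(self, name: str, thoughts: List[str]) -> None:
        self.name = name
        self.thoughts = list(thoughts)

    def apply_to(self, target: str) -> List[str]:
        # Run the stored commands in order, feeding each command's output
        # into the next one.
        results = [target]
        for command_name in self.thoughts:
            command = COMMANDS[command_name]
            results = [out for text in results for out in command(text)]
        return results


def create_icon(name: str, held: List[str]) -> Button:
    """Stand-in for dragging held Thoughts onto the "create icon" icon."""
    return Button(name, held)


def drop(dragged: object, onto: object) -> List[str]:
    """Buttons work "both ways": dropping a button on a Thought and dropping
    a Thought on a button give the same result."""
    if isinstance(dragged, Button) and isinstance(onto, str):
        return dragged.apply_to(onto)
    if isinstance(onto, Button) and isinstance(dragged, str):
        return onto.apply_to(dragged)
    raise TypeError("expected one Button and one text Thought")


trash = create_icon("trash", ["del"])
shout = create_icon("shout", ["split", "toupper"])
print(drop(shout, "hold that thought"))  # ['HOLD', 'THAT', 'THOUGHT']
print(drop("hold that thought", shout))  # same result
print(drop(trash, "hold that thought"))  # [] (the Thought is removed)
```

Treating a button as nothing more than a list of Thoughts is what lets the default “trash” icon be the `del` command saved as an icon, and lets users build their own buttons from the same material.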

Fig. 17: Applying the trash button: the “del” command saved as an icon

3.2 Implementation, Set-Up and User Feedback

3.2.1 Implementation

The design concept described above emerged partially by synthesizing ideas from the theoretical exploration summarised in this paper, and partially by trying out multiple small prototypes.

Notably, floating text was part of the prototypes from the start, but originally had little to do with the design ideas mentioned, as these had not crystallised at that point. Instead, it was a consequence of the intended user group for the prototype: it could not be assumed that participants from the Deaf community spoke English, and I do not know sign language either. Having to rely on a translator would break the immediacy of the conversation, and also pull users out of the interface they might be playing with at that point. The ability to communicate within the interface through written text was considered a potential way to avoid these issues. While in the end no willing participants from the Deaf community were found, it did prove to be a valuable way of documenting the workshops in general. More importantly, it serendipitously led to the concept of a GUI where text input is an integral part of the interface.

In the end, a prototype that implemented most of the described design ideas was created. It did not include a graphical file manager, but did allow for the opening of files if the file-path was known.

3.2.2 User Feedback – Session Set-Up

During the project, prototypes implementing various ideas were tried out by various users (a list of the file names they could open was provided). The resulting discussions and user tests were then used to iterate on the design and refine the concept. The prototypes were written in the Processing framework. The Kinect was used as the gesture input device, using the PKinect library. The users were provided with a wireless keyboard and mouse, the latter as an alternative mode of input for the users to compare to gestures. Because the Kinect was not precise and reliable enough for gesture detection, a Wizard-of-Oz set-up was used where I had to press a specific button matching a semaphoric gesture. This had the benefit that users could “design” their own gestures for different types of commands. The upper-left corner would display the camera input of the Kinect, documenting the motion of the users as they tried out the system. The software framework allowed for easy recording of the on-screen activities, but not of sound. However, as mentioned before, since I also had access to the interface with my own keyboard and mouse, I could take over the system at any moment and take notes of the discussions and user feedback, using the interface to document itself.

Fig. 18: Two screenshots of a recorded discussion about multi-tasking and using two hands (due to a bug the hand icons were not saved in the image).

Fig. 19: Two screenshots of a recorded discussion about the role of buttons and pointers during a user test session (due to a bug the hand icons were not saved in the image).

3.2.3 User Feedback – Results

Due to the aim of the research question (exploring new gesture interface ideas based on insights from sign language), combined with the nature of the design process (involving many different design ideas combined into an experimental platform), doing quantitative user tests was considered inappropriate. Instead, the sessions were more of a qualitative exploration of the design and a discussion of the concept.

Generally, the users had some difficulty grasping the concept, partially because the prototype and its design were incomplete, partially because they were unfamiliar with using text input in a graphical environment, and partially because the Kinect turned out to be quite unreliable as a pointing device for precise motion. Most users had difficulty imagining concrete examples of how the ambidextrous interface would be useful in practice and where they would apply it (fig. 18), which fits with the earlier notion that this is a way of framing interaction that most users are not consciously familiar with.

Another common remark was that this type of interface would need to be taught to them: it would be unlikely for them to spontaneously discover which gesture triggered what and how these triggers worked together (fig. 19). This relates to one of the weaknesses of text interfaces compared to GUIs mentioned earlier: the inability to learn by exploring. One possible solution is hinted at by Bret Victor's prototypes: having the interface give textual feedback on what the user does, in a (programming) language that the user can also re-use as input to instruct the computer. Future work in this direction could implement and refine these ideas.

The versions that were tested lacked many simplifications that later versions would have, most of which were the result of these discussions. For example, buttons were originally all pre-programmed, and did not work “both ways” (dropped on a Thought and a Thought dropped on them). During a discussion (fig. 19) a user asked what the fundamental difference between buttons and Thoughts was, and why buttons did not work like other Thoughts. This idea was incorporated into the design, both simplifying the underlying code and providing the user with more customisation options. Similarly, early versions of the prototype contained “Thought bubbles” that would hold Thoughts for the left and right hands respectively (fig. 18 and 19). Visually similar to task bars in traditional desktop interfaces, these were shown at the bottom of the screen and listed the Thoughts held by the pointers. One could not “activate” or double-click a filename to open or close it, or drag and drop Thoughts on each other to trigger them: this had to be done through the Thought bubbles, using pre-programmed buttons.

All of this was later simplified, or in the case of “Thought bubbles” completely removed; Thoughts now simply move with the pointers that hold them (fig. 14 and 15). This made the relationship between making a grasping gesture with one hand and the associated pointer holding a Thought clearer, and the interface became more efficient to use in the process.

In conclusion, a large part of the user-test discussions was spent on these design flaws in the early prototypes, or on technical limitations such as the Kinect being an unreliable means of input. There are a few likely reasons for this. First, these issues limited the ability to freely interact and play with the interface as intended, while providing a concrete experience of their own to respond to. Second, while using two hands in complex, bi-manual ways is something we do all the time, this usually does not happen at a conscious, reflective level. Reflection might be easier if we give users various concrete bi-manual tasks to do, but the real difficulty might lie in not having a way to conceptualise these actions: the lack of a language to describe them. This again points to the likelihood that people fluent in sign languages (or, even better, those for whom sign language is a first language, and who thus think in sign) are better equipped to reflect on gesture-based designs.

4 Conclusions

As stated in the introduction, sign languages have generally been dismissed in gesture interface research outside of the development of sign language recognition systems, on the grounds of their linguistic nature. This thesis has been an attempt to explore whether this dismissal has been premature. Because direct contact with sign language users did not materialise, this exploration was reduced to studying sign language papers and the history of language in computer interfaces. From this, a design opening was extracted and a design prototype built. Design flaws in early versions and technical issues made it impossible to draw reliable conclusions about the design as a proof of concept. A more robust and polished version, ideally tested and co-designed with fluent users of sign language, would allow for more conclusive statements about the quality of the design idea.

As for the value of sign language users as an expert group, no empirically backed conclusions can be drawn, as no actual designing or testing was performed with them. In this sense I have not shown that sign language can add to research when trying to refine already existing paradigms. However, from the theoretical overview we can conclude that when one's inner monologue consists of a sign language, bi-manual gestures are inherently the medium through which reflection itself takes place. In this light, the potential value of sign language users for the design of gesture interfaces seems undeniable. Furthermore, if metaphors and language play an important role in designing new, powerful user interface paradigms (and I have argued in this thesis that they do), and we want to elevate gesture interfaces beyond mere enactive and iconic modes of interacting with the computer and add to them the enriching world of symbolic thought, then sign language is a good candidate for exploring new ideas. It covers all three modes at once, and can provide concrete examples of complex, real-world symbolic gesture usage from which to extract new metaphors for inspiring and designing new gesture interfaces.

![Tab. 1: Three Mental Stages Source: [Kay 1990]](https://thumb-eu.123doks.com/thumbv2/5dokorg/3950469.74046/19.892.94.728.131.318/tab-three-mental-stages-source-kay.webp)