Exploring users’ perception of chatbots in a bank environment

A critical view on chatbots and how to design for a positive user experience

Oskar Hansson

Interaktionsdesign Bachelor

22.5HP Spring 2018

Supervisor: Anne-Marie Hansen Author: Oskar Hansson

University username: af9106


Contact Information

Author: Oskar Hansson

E-mail: oskar-hansson@hotmail.com

Supervisor: Anne-Marie Hansen

E-mail: anne-marie.hansen@mau.se

Malmö University, School of Arts and Communication (K3)

Examiner:

Anuradha Venugopal Reddy

E-mail: anuradha.reddy@mau.se


Abstract

Chatbots have been around for decades but did not gain much attention until very recently. With new advancements in artificial intelligence and natural language processing, chatbots have very quickly gained the interest of commercial businesses. What seems to be lacking, though, is a user perspective on the subject of chatbots. This thesis explores how Swedbank customers aged 18 to 35 perceive Swedbank’s current chatbot service, Nina, and how they perceive Nina 2.0, the resulting concept that was developed based on empirical findings. The findings show, among other things, that chatbots with a clear and simple goal were positively received by users, and that interaction through pre-programmed answer buttons resulted in expressions of relief, as users did not have to worry about miscommunication with the chatbot.

Keywords: chatbot, banking, conversational theory, anthropomorphism, gamification


Acknowledgements

I would like to thank my supervisor, Anne-Marie Hansen, for guiding me through this thesis and providing helpful assistance. I would also like to thank all the people who participated in interviews and user tests, making this project possible.


Table of contents

Abstract
Acknowledgements
1 Introduction
1.1 Background
1.2 Delimitations
1.3 Target group
1.4 Purpose
1.5 Research question
2 Theory
2.1 Why do people use chatbots?
2.2 Conversational theory
2.2.1 Grice’s maxims
2.2.2 Turn taking
2.2.3 Threading
2.2.4 Conversational repair
2.3 Planned vs. Situated actions
2.4 Anthropomorphism
2.5 Gamification
3 Methods
3.1 Benchmarking
3.2 Ethnographic interviews
3.3 Think aloud-test
3.4 Brainstorming
3.5 Wizard of Oz
3.6 Role play
3.7 Prototyping
3.8 User testing
4 Design process
4.1 Benchmarking
4.1.1 SEB’s Aida
4.1.2 Nordea’s Nova
4.1.3 Bank of America’s Erica
4.2 Ethnographic interviews
4.2.1 Main use of Swedbank’s online services
4.2.2 Mobile first
4.2.3 Save money
4.2.4 Summary of ethnographic interviews
4.3 Think aloud-test of Nina
4.3.2 Quantity of information
4.3.3 Quality of information
4.3.4 Human touch
4.3.5 Problem solved?
4.3.6 The nature of the questions
4.3.7 Summary of think aloud-test
4.4 Brainstorming
4.4.1 Short term savings
4.4.2 Setting up a fixed savings goal
4.4.3 Advice for retirement plan
4.5 Wizard of Oz-pilot test
4.5.1 Modifications to the original method
4.5.2 Setup of the pilot test
4.5.3 Tools vs. information
4.5.4 Entertainment value
4.5.5 Access to more data
4.5.6 Framing the conversation
4.5.7 Preconceptions about communication
4.5.8 Chatbots vs. GUI
4.5.9 Update on position in process
4.5.10 Summary of Wizard of Oz-pilot test
4.6 Conceptualization
4.6.1 Nina 2.0
4.7 First prototype
4.7.1 Role
4.7.2 Look and feel
4.8 First user tests
4.8.1 Use of informal language
4.8.2 Predetermined answers
4.8.3 The budget
4.8.4 The quiz
4.8.5 Flow of information
4.8.6 Summary of first user test
4.9 Second prototype
4.10 Second user tests
4.11 Final words from user tests
5 Discussion
5.1 Ethics
5.2 Self critique and future work
6 Conclusion
7 References
7.1 Literature


1 Introduction

A chatterbot, or chatbot, is a “computer program designed to simulate conversation with human users, especially over the internet” (Oxford dictionaries, 2018). Chatbots have been around for decades but did not gain much attention until very recently. With new advancements in artificial intelligence and natural language processing, chatbots have very quickly gained the interest of commercial businesses. A recent study with over 300 participants, who worked in industries ranging from online retail and e-commerce to hospitality and marketing, showed that 60 % of them heard about chatbots for the first time in 2016 (Mindbowser, 2018). The same study also showed that 96 % of the participating companies believe that chatbots are here to stay and that 75 % of them were planning to build a chatbot for their business in 2017 (Mindbowser, 2018). This makes a lot of sense from a business perspective: chatbots can work twenty-four hours a day, every day, they require no salary beyond the cost of developing them, and they are always polite to customers. Implementing chatbots to handle tasks previously reserved for humans could potentially save thousands of dollars in salaries every month.

In recent years, banks in Sweden have taken a big step towards adopting artificial intelligence, and many banks now offer customer service through a chatbot powered by artificial intelligence and natural language processing. Swedish bank SEB has Aida, Nordea just launched its own chatbot in Norway called Nova, and Swedbank has a virtual assistant called Nina who handles questions from its customers (Hoikkala & Magnusson, 2017).

What seems to be lacking, though, is a user perspective on the subject of chatbots. While more and more businesses are developing chatbots to handle customer service and similar tasks in order to save money, there seems to be little research on how the end users perceive these chatbots and whether they add any value to the user experience of their services.

The aim of this thesis is to explore the utilization of Swedbank’s chatbot, Nina, and, through a user-centered perspective, to develop a design concept based on empirical research findings combined with academic theory in related topics.

1.1 Background

In 1950, Alan Turing presented his paper “Computing machinery and intelligence”. The paper proposed a hypothetical situation that is now called the Turing test. In the test, a human interrogator is placed in an isolated room and tasked with asking questions to two other participants, a human and a computer, who are placed in a different room. If the interrogator cannot tell which of the two other participants is human, the computer is said to have passed the Turing test (Turing, 2009). This was one of the first papers presented on the notion of artificial intelligence. The first chatbot, named ELIZA, was developed as early as 1966 (Weizenbaum, 1966). ELIZA used a simple pattern matching mechanism, looking for keywords in a phrase and matching them with an output in the style of a psychotherapist, fooling humans into believing she was a real therapist (Weizenbaum, 1966). This sparked a lot of interest in building chatbots that would perhaps one day pass the Turing test (Dale, 2016). In 1991, the Loebner prize was established, which annually awards the chatbot deemed most human through the use of the Turing test, the most recent winner being Mitsuku (Mitsuku, 2018). Today, chatbots help us book flights, find concert tickets, find restaurants and perform numerous other tasks (Chatbots, 2018). So far only text-based chatbots have been mentioned, but there are also voice-controlled bots such as Apple’s Siri, Microsoft’s Cortana and Amazon’s Alexa that we can talk to. Google recently showed a video of its artificial intelligence system calling a hair salon and making a booking for its client by talking to a human (Tech Insider, 2018). Chatbots and artificially intelligent systems have arguably come a long way since 1950, and with messaging apps like Facebook Messenger and WhatsApp recently becoming bigger than social media platforms, it looks like they are here to stay (Business insider, 2016).
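The keyword-matching mechanism described for ELIZA can be illustrated with a minimal sketch. The rules, replies and function name below are assumptions made for illustration only; they are not taken from Weizenbaum's original script, which was considerably more elaborate.

```python
import re

# Hypothetical keyword rules in the style of a psychotherapist.
# Each rule pairs a keyword pattern with a reply template.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
]
FALLBACK = "Please go on."  # used when no keyword is found


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose keyword pattern matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the captured part of the user's phrase in the reply.
            return template.format(*match.groups())
    return FALLBACK


print(respond("I need a holiday."))
print(respond("The weather is nice."))
```

The sketch shows why such a system can appear attentive without understanding anything: it only echoes fragments of the input back inside fixed templates.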

1.2 Delimitations

The work and research conducted in this thesis are limited to the context of Swedbank’s digital platform and its chatbot service, Nina. The findings made in this thesis may not hold for other implementations of chatbots in other businesses or even other banks. The findings may, however, serve as possible guidelines for future designs of chatbots.

1.3 Target group

To narrow down and frame the research project, the intended target group for the proposed design presented in section 4.6.1 is customers aged 18 to 35 of Swedbank and its local branches that share its digital platform.

1.4 Purpose

The aim of this thesis is to explore how Swedbank’s chatbot is perceived by users in the intended target group and to investigate whether there are any opportunities for improving the user experience by redesigning the current service. By using methods stemming from the field of interaction design, the goal is to find gaps between how Swedbank’s chatbot functions and what the users expect of the existing chatbot service. These gaps could then be seen as opportunities for redesign in order to improve the user experience for Swedbank’s customers.

1.5 Research question

How can Swedbank’s chatbot be redesigned using interaction design methods in order to improve the user experience for people aged 18-35?


2 Theory

Although the design solution that is suggested in section 4.6.1 relies heavily on empirical research, it is also inspired by the following sections containing a review of research in the field of chatbots, conversational theory, anthropomorphism and gamification.

2.1 Why do people use chatbots?

In a recent study from 2017, Brandtzaeg and Følstad investigated the question of why people use chatbots. The study took place in the US, in the form of a survey with 146 participants who matched the intended target group of people aged 16 to 55. The survey contained both closed and open questions, the most important one being: What is your main reason for using chatbots? From the answers, four main categories emerged: Productivity, Entertainment, Social/relational and Novelty/Curiosity (Brandtzaeg & Følstad, 2017). It is worth mentioning that some participants gave answers that placed them in two or more categories, meaning that the percentages given below add up to more than 100 %.

68 % of the participants gave answers indicating that productivity was the main reason for using chatbots. In their qualitative answers, these participants further indicated that ease of use, speed and convenience were their main motivations. The answers also mentioned the importance of getting answers quickly and of avoiding the hassle of calling a company, waiting in line and then trying to get the right information from a person, or of having to look through large amounts of text to find the right answer. In the productivity category, people also reported that their main motivation for using chatbots was the ease of obtaining help or accessing information, giving examples such as using chatbots to find news and weather information or to get travel advice (Brandtzaeg & Følstad, 2017).

20 % of the respondents reported that they use chatbots for entertainment purposes either in a positive way, because they can provide entertaining answers to a question, or in a more negative way, when people are bored and want someone to talk to in order to waste some time (Brandtzaeg & Følstad, 2017).

The third and fourth categories contained 12 % and 10 % of the participants respectively, who stated that they use chatbots either for social purposes or out of simple curiosity about the phenomenon (Brandtzaeg & Følstad, 2017).
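The overlap between categories can be made concrete by adding up the four reported shares. The snippet below uses only the percentages cited above; the variable names are illustrative, and the reading of the surplus as a lower bound on overlapping answers is an interpretation rather than a figure from the study.

```python
# Share of participants per category, in percent, as cited above
# from Brandtzaeg & Følstad (2017).
category_share = {
    "productivity": 68,
    "entertainment": 20,
    "social/relational": 12,
    "novelty/curiosity": 10,
}

total = sum(category_share.values())
# Anything above 100 can only come from participants counted in
# more than one category.
surplus = total - 100

print(f"Sum of category shares: {total} %")
print(f"Surplus over 100 %: {surplus} percentage points")
```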

Brandtzaeg and Følstad go on to discuss their findings and conclude that if the main reason for engaging with chatbots is productivity, usefulness is an important factor when designing a successful chatbot service (Brandtzaeg & Følstad, 2017, p. 338):


For chatbots to be successful in the studied user group, they must help users resolve a task or achieve a concrete goal in an effective and efficient manner; in other words, they need to be easy, fast, and convenient. Also, they need to fulfill a valued productivity goal, such as getting help or access to information on the fly.

They go on to state that while entertainment and social purposes motivated fewer participants, these are still important factors to take into account, since previous research in the area argues that good user experiences stem from a sense of community or from systems that support enjoyable social interactions (Brandtzaeg & Følstad, 2017, p. 389):

[…] it should be noted that entertainment and social motivations do not exclude productivity motivations. On the contrary, more than one third of the participants reporting entertainment or social motivations also reported productivity motivations. People want to get their jobs done, but many prefer to do so in a social and enjoyable manner.

2.2 Conversational theory

In order to understand what a good user experience between a chatbot system and a user can look like, inspiration can be taken from human-to-human conversations. The following sub-sections explain some basic rules of conversational theory that all humans subscribe to in order to communicate and understand each other. Conversation may seem to us an easy and natural activity, but in reality it is full of complex interactions that we are rarely aware of.

2.2.1 Grice’s maxims

In 1975, philosopher of language Paul Grice developed what he called the cooperative principle, which describes how people communicate with each other effectively. In short, the principle explains that in order to have effective communication between humans, there must be a mutual understanding of cooperation between them. He also developed some basic rules for effective conversation, which are known as Grice’s maxims:

Maxim of quality – Only say things that you know to be true

Maxim of quantity – Don’t say more or less than is needed

Maxim of relation – Only say things of relevance to the topic

Maxim of manner – Avoid ambiguity, obscurity of expression and get to the point

(Grice, 1975).

Since one of the main properties of chatbots is the ability to deliver more humanlike interactions with computer systems, it seems plausible that Grice’s maxims could also be applied to human-chatbot conversations in order to build effective communication and deliver a good user experience.

2.2.2 Turn taking

A perhaps obvious principle is that we take turns speaking in order to talk to each other effectively. If we did not, we would constantly talk over each other and miss important information because we were too busy talking ourselves (Google, 2016). In relation to chatbot interaction, the principle of turn taking can be used, for example, to ask follow-up questions, confirm an action before continuing the conversation or make corrections to the conversation.

2.2.3 Threading

Threading is an important principle of effective conversation, as it tells us that all elements of a conversation should come in so-called adjacency pairs. When one person speaks, the response from the other should be relevant to what the first person said. This keeps the whole conversation relevant and confirms that both parties understand each other. The most common form of an adjacency pair is the question-answer form, but it can take other forms as well; an example can be seen below:

Person 1: I lost my credit card the other night, I need to block it!

Person 2: Oh no, do it fast!

Person 2 confirms that the statement was heard and understood based on a mutual understanding of the context, and answers with a statement that relates to what person 1 just said. They can now continue to talk more about the credit card or talk about something entirely different, resulting in new adjacency pairs where one person confirms the other by giving statements that relate to what the other just said (Google, 2016).

2.2.4 Conversational repair

When a miscommunication happens in a dialogue, for example when the hearer does not understand the meaning of what the speaker has to say, a conversational repair is needed to get the conversation back on the intended path (Callejas, Griol & McTear, 2016). Conversational repair may also be needed when someone violates Grice’s maxims; an example is given below:

Person 1: Excuse me, do you know where the toilet is?

Person 2: Yes.

In the example, we can infer that person 1 is interested in knowing more than just whether person 2 knows where the toilet is. Clearly, person 1 is also interested in where it is. By just answering yes, person 2 violates Grice’s maxim of quantity by giving too little information to be cooperative. This then requires a repair in order to get the conversation back on track. Either party can repair a conversation, and a repair can be done both in and out of turn. Generally, the person making the mistake spots this early and is given the opportunity to repair the mistake before the recipient has a chance to answer, but in some cases, as in the previous example, it might be necessary for the other party to initiate a repair action (Callejas, Griol & McTear, 2016). Applied to human-chatbot conversations, it is important to have a strategy for handling these types of conversational errors in order for the conversation to run smoothly.
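One possible repair strategy for a chatbot can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of any bank's implementation: the intents, keywords, reply texts and the threshold of two failed attempts before handing over to a human are all hypothetical.

```python
import re
from typing import Optional, Tuple

# Hypothetical intents and keywords, chosen only for illustration.
INTENT_KEYWORDS = {
    "block_card": ("block", "lost", "stolen"),
    "savings": ("save", "savings"),
}

MAX_REPAIR_ATTEMPTS = 2  # assumed threshold before human handover


def detect_intent(message: str) -> Optional[str]:
    """Return the first intent with a keyword in the message, else None."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in words for keyword in keywords):
            return intent
    return None


def reply(message: str, failed_attempts: int) -> Tuple[str, int]:
    """Return the bot's reply and the updated number of failed attempts."""
    intent = detect_intent(message)
    if intent is not None:
        # Understanding succeeded, so the failure counter is reset.
        return f"Understood, I will help you with: {intent}.", 0
    failed_attempts += 1
    if failed_attempts >= MAX_REPAIR_ATTEMPTS:
        # Repeated failure: hand the repair over to a human.
        return "I still do not understand. Let me connect you to an advisor.", failed_attempts
    # First failure: the bot initiates the repair by asking for a rephrase.
    return "Sorry, I did not understand that. Could you rephrase?", failed_attempts
```

Here the chatbot initiates the repair itself when it fails to understand, and escalates instead of repeating the same request indefinitely.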

2.3 Planned vs. Situated actions

Anthropologist and professor Lucy Suchman is a prominent researcher on human-machine interaction. In her book Plans and situated actions: The problem of human-machine communication (1987), she presents two models of human intelligence and purposeful action, which she argues are relevant when designing intelligent machines. On the one hand, purposeful action can be seen as first setting up a plan for what you want to do and then following that plan in order to get the desired result. The plan is based on general principles and abstract theories, which are then applied and adapted to a given situation. On the other hand, purposeful action can be seen as setting up an objective, where the actions necessary to reach that objective depend entirely on the context, and any problems have to be solved ad hoc because there is simply no way of knowing beforehand what a given situation will look like. She calls these situated actions (Suchman, 1987). Suchman goes on to argue that for humans, all actions are in reality situated actions: however much we plan our actions beforehand, situations and contexts continuously change around us, and plans laid out beforehand are necessarily vague in order to accommodate any obstacles that may arise. She states that in the design of intelligent machines, however, planned actions have been the way to model machine intelligence, and suggests that we should instead focus on situated actions if we want to simulate human intelligence (Suchman, 1987).

Put in relation to modern-day chatbot systems, one could argue that both models of intelligence exist today. There are chatbots that guide you through a controlled flow of conversation in order to take you to a predetermined finish line (Dale, 2016), reminiscent of the theory of planned actions (Suchman, 1987). Then there are chatbots that you can interact with freely, like Mitsuku (Mitsuku, 2018), which do not know what the interaction will look like and have no preference for it either, as the chatbot does not provide the user with a clear purpose other than pure conversation, reminiscent of the idea of situated actions. It can also be argued, however, that these social chatbots constitute planned actions too, in the sense that they are equipped beforehand with a vocabulary intended to make them capable of answering any question you can think of; they therefore do not produce an answer ad hoc, but rather search through a database of sentences for a fitting answer.
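The first type of chatbot, which follows a controlled flow towards a predetermined end, can be sketched as a small scripted dialogue. The states, prompts and answer options below are hypothetical and only illustrate the idea of a planned conversation; they do not describe any existing chatbot.

```python
# Hypothetical scripted flow: each state has a prompt and a fixed set of
# answer options, each leading to the next state.
FLOW = {
    "start": {
        "prompt": "What would you like to do?",
        "options": {"Save money": "goal", "Something else": "end"},
    },
    "goal": {
        "prompt": "How long do you want to save for?",
        "options": {"Less than a year": "end", "More than a year": "end"},
    },
    "end": {"prompt": "Thank you, goodbye!", "options": {}},
}


def run_flow(choices):
    """Walk the scripted flow with the given choices; return the bot's lines."""
    state = "start"
    transcript = [FLOW[state]["prompt"]]
    for choice in choices:
        options = FLOW[state]["options"]
        if not options:
            break  # the predetermined finish line has been reached
        if choice not in options:
            # A planned flow cannot handle input outside its script,
            # so it steers the user back to the available options.
            transcript.append("Please pick one of: " + ", ".join(options))
            continue
        state = options[choice]
        transcript.append(FLOW[state]["prompt"])
    return transcript


for line in run_flow(["Tell me a joke", "Save money", "More than a year"]):
    print(line)
```

The plan is fixed before the conversation starts, which is what makes the flow predictable but also unable to adapt to unexpected input.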

2.4 Anthropomorphism

The concept of anthropomorphism presents the idea that humans have a tendency to attribute human traits, characteristics, emotions and intentions to non-human entities such as animals or robots (Oxford dictionaries, 2018).


Nass and Moon (2000) performed experimental studies indicating that humans tend to ethnically identify with computer agents, apply gender stereotypes to them, and apply social behaviors such as politeness and reciprocity towards them. In their own study they discard the idea of anthropomorphism, arguing that the individuals who took part in the experiments did not actually believe the computer to have human attributes (Nass & Moon, 2000). In the broader sense of the word as described by Oxford dictionaries (2018), however, these individuals did in fact attribute human qualities to non-human entities by being polite and applying gender stereotypes to the computer agents, which can then be described as a form of anthropomorphism.

This is also in line with what Brandtzaeg & Følstad (2017) found with regard to humans using chatbots for social and entertainment purposes. The concept of anthropomorphism could be used as a tool to engage users with a chatbot through social interaction.

2.5 Gamification

Gamification is defined as “The use of game design elements in non-game contexts” (Deterding, Dixon, Khaled & Nacke, 2011, p. 9). Rajat Paharia argues that gamification should be applied to amplify the intrinsic value of a product (Deterding, 2012). This means that the product or service being gamified should already have some intrinsic value to its users without the gamification elements added to it; the gamification element alone is not enough to add value. Paharia also presents some tools to take advantage of when adding game elements: goal setting, real-time feedback, transparency, mastery, and competition (Deterding, 2012).

Seeing as some people interact with chatbots for entertainment purposes (Brandtzaeg & Følstad, 2017), gamification elements could be seen as another way of engaging users of the chatbot by adding an entertaining aspect to the experience.


3 Methods

The methods presented in this section are taken from the field of interaction design and were aimed at gaining an understanding of the domain and exploring design opportunities. Methods were also chosen for ideation purposes and to test and validate ideas that were based on the findings from the exploration methods.

3.1 Benchmarking

Benchmarking is a method where the designer examines existing versions or prototypes of the product, as well as the biggest competitors to the product, in order to gain insights into what the technology is capable of and to find inspiration for new concepts (Cooper, Reinmann, Cronin, & Noessel, 2014). In order to gain an understanding of the field, several banks that had a chatbot service were investigated; see section 4.1.

3.2 Ethnographic interviews

Ethnographic research can be described as the gathering of information about a particular group of people and their behaviors, beliefs, perceptions and social interactions (Muratovski, 2015). During the research phase it was of interest to gain an understanding of how people currently use Swedbank’s online services in their daily lives for private banking, in order to see where a chatbot service could be a good fit for future design work. For this purpose, ethnographic research was conducted in the form of semi-structured interviews with six people who represented the target group. See section 4.2.

3.3 Think aloud-test

In a think aloud-test, a user is asked to voice their thoughts out loud while performing the tasks that are to be tested. This test can give insights into the users’ thoughts and feelings towards the tasks they are performing and the overall experience of a service or product (Rogers et al., 2011). This method was used to gain insights into the target group’s perception of Swedbank’s current chatbot system, Nina. The method can be criticized for moments of silence, where the user forgets to voice his or her thoughts out loud and valuable data is lost. To mitigate this, participants were continually encouraged to talk about the events and experiences. See section 4.3.

3.4 Brainstorming

The purpose of a brainstorm is to come up with new ideas and explore possibilities for design directions (IDEO, 2018). It is important not to be critical of any ideas, but rather to encourage people to produce as many ideas as possible and evaluate later which ones are viable and which ones are not (Cooper et al., 2014). This method was chosen for the purpose of producing ideas where the use of a chatbot could be a good fit in relation to private banking affairs. The method can be criticized when used alone, as it may not produce many ideas, and the lack of other group members also means a lack of inspiration from other people’s ideas. See section 4.4.

3.5 Wizard of Oz

The Wizard of Oz-method means that a human simulates part or all of a computerized system (Thies, Menon, Magapu, Subramony & O’Neill, 2017). The decision to use this method was quite easy. One of the key characteristics of a chatbot is the ability to provide a more humanlike interaction with a computerized system, and the founder of the method, John F. Kelly, used it when working on a natural language processing application (1984), so it seemed fitting to apply the method to this project. This method also offers a quick and cheap way to create an experience that can be re-shaped and tested several times, without the time it takes to create several different prototypes with different purposes. See section 4.5.

3.6 Role play

IDEO describes the role play method as a cheap and easy way of prototyping in order to test an experience without spending too much time on building prototypes (IDEO, 2018). Since one of the characteristics of a chatbot is the intention of acting human, the role play method seemed like an interesting way to extract information from the chatbot system’s point of view through the use of a human. The role play method was applied during a Wizard of Oz session to investigate the conversation dynamics between chatbot and user. See section 4.5.

3.7 Prototyping

A prototype can be seen as a manifestation of a design that lets stakeholders interact with it and test its validity through user testing (Rogers, Sharp & Preece, 2011). A prototype can be anything from a piece of paper to a complex piece of machinery, depending on which aspect of the design is up for evaluation (Rogers et al., 2011). Buchenau & Suri (2000) also explain the concept of experience prototyping as prototypes made for communicating whole experiences of a concept to an audience. In this project the developed prototypes were used both for communicating whole experiences of design concepts, as described by Buchenau & Suri (2000), and for testing the validity of smaller aspects of the design, such as which features to include and the language of the chatbot. See section 4.7.

3.8 User testing

A user test can be described as a structured session that is focused on specific features of a prototype that is tested by an evaluator, preferably someone representing the intended user (Goodman, Kuniavsky & Moed, 2012). The user tests the prototype and gives feedback on the positive and negative aspects of the prototype. Goodman et al. argue that user tests are not a good way to communicate whole experiences to an audience (2012). However, as mentioned in the previous section, the developed prototypes were deemed useful both for testing features of the proposed concept and for communicating the overall experience of the concept.


4 Design process

As mentioned in the introduction to chapter two, the emphasis of this project has leaned heavily towards empirical research, which is presented in this chapter. The design process has taken a user-centered approach in the sense that end-users have been involved in a majority of the activities presented in the forthcoming sections, both for exploratory methods such as interviews and for validation methods such as user testing. User-centered design is here interpreted as a design process in which the users have a large influence over the shaping of a product or service (Abras, Maloney-Krichmar & Preece, 2004). It should be noted that the term ‘users’ can be divided into the sub-categories primary, secondary and tertiary (Abras et al., 2004). While an effort was made to involve secondary users by contacting an advisor from Swedbank, no answer was received from the bank, so the sole focus has been on primary users.

The design process itself can be divided into three parts: research, ideation and design. The first part was used for exploring the design domain and users through methods such as ethnographic interviews and benchmarking, the second part for conceptualization and development of a design proposal, and the third part for testing prototypes of the developed design concept.

4.1 Benchmarking

4.1.1 SEB’s Aida

Skandinaviska Enskilda Banken (SEB) has a product similar to Swedbank’s service, which they call Aida. Aida presents herself as a digital assistant with artificial intelligence who is new at work and therefore limited in her responses, but constantly learning. A first impression was that Aida instantly seems more humanlike than Swedbank’s service, with a more conversation-oriented way of communicating. Aida also offers to connect you with a human by telephone when she does not know the answer to a question (SEB, 2018). Offering human takeover indicates that SEB is prepared to repair the conversation in case it breaks down (Callejas, Griol & McTear, 2016).

4.1.2 Nordea’s Nova

The Norwegian branch of Nordea bank has a chatbot named Nova, who can currently answer questions about life insurance and retirement funds. Nova also presents herself as a chatbot with limited capabilities but is more than happy to suggest solutions if she can’t help you herself. Unlike Aida, Nova also has an avatar in the shape of a full-figured woman with brown hair, glasses and a blue outfit, which adds to the feeling of talking to an intelligent agent and thus utilizes the concept of anthropomorphism (Nordea, 2018).


4.1.3 Bank of America’s Erica

The American bank Bank of America is soon to launch a new service called Erica, a virtual financial assistant that can help you with your banking needs. Erica can:

• View bills and schedule payments

• Transfer money between accounts

• Lock and unlock debit cards

• Send money to your friends

Unlike Nova and Aida, Erica does not provide general information about insurance or retirement; she actually helps you with your personal banking services, like sending money or paying bills (Bank of America, 2018). Erica gives an indication of what the technology is capable of doing and can serve as an inspiration for future design concepts.

4.2 Ethnographic interviews

Ethnographic interviews can be conducted in several different ways depending on what data you want to collect, for example as structured, semi-structured or in-depth interviews (Muratovski, 2015). In this case, semi-structured interviews were conducted, meaning that questions were prepared in advance but time was also set aside for discussing and exploring other relevant topics if they were deemed interesting (Muratovski, 2015). In an effort to collect more valuable data, the participants were asked to log in to their bank account during the interview in order to get inspiration and to probe their thoughts on the relevant topic.

Ethnographic interviews were conducted with six participants, three male and three female, aged between 24 and 31, with various occupations ranging from student to purchasing assistant. The interviews were conducted one-on-one on six separate occasions in the participants’ homes or, in one case, at a café where the participant felt comfortable conducting private banking business. The names used in the next sections are fictitious.

4.2.1 Main use of Swedbank’s online services

To get the interview started, the first question that was asked to all participants was:

“What services do you utilize whilst logged in to your bank account?”.

As expected, the first answer from all of the participants was that they paid their bills. The second most common answer, also given by all participants, was that they transfer money from their savings account to their spending account or the other way around.

Other services used included getting an overview of the previous month’s expenses, checking interest rates, setting up direct debits and setting up savings plans. An interesting topic that came up during this first question was an interest in investing in stocks and funds. Two people, Jasmine, 31, and Tim, 27, mentioned that they were interested in buying stocks but felt that they lacked either the knowledge or the self-confidence to do so. When asked to elaborate on this, Tim stated that since he had never bought any stocks himself, the whole concept seemed scary and alien, and he was afraid of making mistakes. Jasmine was asked why she was interested in stocks and answered that it was an investment for the future, in order to both save money and make it grow.

4.2.2 Mobile first

An interesting topic came up with regard to when and where people conduct their banking business. A majority answered that they almost exclusively used their smartphone to go about their errands, except for tasks that demanded the use of a desktop, such as setting up an invoice payment to a new company. The reason for choosing phone over desktop was related to the ease and mobility that smartphones provide today, which meant that the participants could check their bank account or pay a bill while waiting for the bus instead of setting aside time to log in on their desktop at home or at work. Before the interviews were conducted, an assumption was made that while checking your balance might be a suitable task for a smartphone, paying your bills could be perceived as a security-sensitive task that people might want to perform on a computer in the security of the home. This assumption proved to be wrong with the exception of one participant, Jasmine, who stated that she always used her home computer to pay her bills because “That’s the way I’ve always done, I know that it works”. The other participants, as stated above, used their smartphones and did not really care where they were when they conducted their bank business; it could just as easily be at home as on the bus or in the gym. This finding indicates that the participants are not afraid of using their smartphone to conduct sensitive banking business, which fits well with the use of a chatbot service, since this is a medium well suited for smartphones (Følstad & Brandtzæg, 2017).

4.2.3 Save money

The following questions covered topics such as what the participants thought was working or not working in their bank service, and what they wished they could do with their bank service that they cannot do today.

The questions generated a wide array of answers, ranging from easier contact with a personal bank agent to being able to get an extended BankID (a digital identification software), but overall there seemed to be an overarching pattern of wanting to save or invest money in some way. Anna, 27, said that she would like to have more counselling and easily available information from the bank on topics like saving money in funds or saving for retirement. Linus, 23, stated that he would like to see more fun and creative ways to save money, for instance by blocking your spending account for a certain amount of time or transferring a small amount of money to your savings account every time you make a purchase. Combined with the previous responses from Jasmine and Tim, four out of six participants expressed an interest in saving or investing their money in some way.

4.2.4 Summary of ethnographic interviews

In summary, the ethnographic interviews pointed to two relevant findings. First, the users mostly handle their banking business on their smartphone. Second, there seems to be a gap between the information and tools for saving and investing money that Swedbank provides and the users’ interest in doing so. This gap could be seen as a possible problem area that could be improved with the use of a chatbot service.

4.3 Think aloud-test of Nina

When the participants for the ethnographic interviews were contacted, they were also asked whether they knew that Swedbank had a chatbot on their website and, if so, whether they had used it at any point. None of the participants even knew that Swedbank had a chatbot service available to them. This was used as an opportunity to complement the findings from the interviews with insights into the participants’ first impression of the existing service and how it was perceived by the intended users. To understand how the participants perceived the current chatbot system, a think aloud-test was planned to take place after the ethnographic interviews.

4.3.1 Ability to understand questions

One task the participants were asked to perform was to ask the chatbot a random question. The inquiries were of varied nature and included questions such as “what should I think about when buying shares?” to “I am sending

an invoice to the USA and have a question about my IBAN-number”. A

general frustration among the participants was observed when the chatbot was unable to understand the question asked. Out of the six participants, the bot was only able to understand one on the first try. The other five participants had to either rephrase their question or the bot answered the question with an

21

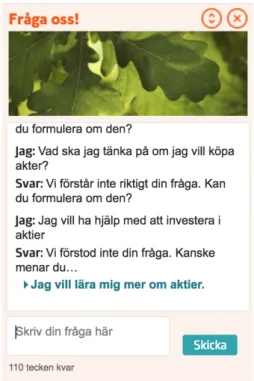

attempt to steer the conversation into a topic where it could give a more exhaustive answer see figure 4-1.

Figure 4-1. Nina fails to understand the question

It was also observed that the first questions were quite detailed and personal in their formulation, like the example above regarding the IBAN number, a number needed for international financial transactions. When the chatbot did not understand the first time, the questions were rephrased into shorter, more general questions with less complexity. The question about the IBAN number was a particularly disappointing encounter: Kim was not able to get a satisfactory answer from the chatbot at all after two attempts and stated the following:

“I want an answer from a human, I want to be sure that I get the help that I need, I don’t trust the chatbot to understand me correctly.”

What was interesting to observe here was the initial trust the participants had in the chatbot’s ability to answer complex questions. This faith quickly turned to disappointment when they discovered that the chatbot could not actually answer very detailed questions at all. This finding highlights the need to set the right expectations for the users, so that the chatbot does not disappoint them by failing to live up to standards that are too high for it to fulfill.

4.3.2 Quantity of information

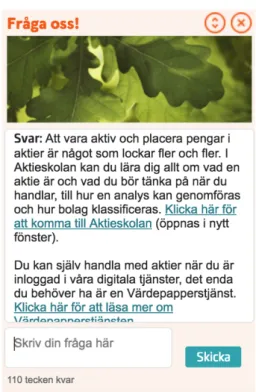

After all the participants had received an answer to a question, three out of six reacted to the answer, which filled the whole conversation box, indicating that it was a lot of information to hand out in one answer, see figure 4-2. One

22

participant, Jasmine even reacted to the speed with which the chatbot answered, which is instantly after the user presses the send button. The following quote is from Jasmine:

It felt a little arrogant, like it was mocking me, that it could answer a question with that amount of text in less than a millisecond, like it knew the answer to everything.

Figure 4-2. Ninas answer filling up the whole conversation box. The answer itself was also considered too general.

Given these observations, it is important to consider the medium used for channeling the information from Swedbank to the user. The amount of information and the speed with which it was delivered would likely not have been an issue if the user had clicked on a link on the website. When it is delivered through a chatbot, however, which communicates through a window of limited width and comes with certain preconceptions of how communication works in chats, the user is taken by surprise when the communication does not function the way he or she is used to. Previous research has also found that users may not read all of the information presented if it is delivered in too large quantities and too fast (Duijst, 2015).

An interesting side note here was that Jasmine ascribed intent to the chatbot system when she said that it felt like the system was mocking her, even though there was no indication that Jasmine was talking to a human, other than the fact that the medium itself is traditionally used for human-to-human communication. This relates to the concept of anthropomorphism described in section 2.4. It is an indication that it is possible to include more human characteristics in the design of the chatbot in order to connect better with humans. If the user can interpret some actions as bad intentions, he or she could also interpret some actions as good intentions, which would provide the user with a positive experience.

4.3.3 Quality of information

After reading through the answers given by the chatbot, many participants felt frustrated about the general, non-personal answer given by the chatbot (see figure 4-2). Jennie, who asked if she could get a loan for a car, said the following:

“This answer is irrelevant for me, I could have just as easily clicked on the link on the first page that said ‘car loan’ and gotten the exact same information.”

In general, there was a pattern of wanting to get more personalized answers from the chatbot, illustrated by the following statement from Anna, who asked for advice on investing in shares and funds:

“This answer is very general, I would like to be able to ask more specific questions based on my own individual situation.”

This can be seen as a violation of the maxim of relation (Grice, 1975) discussed in section 2.2.1, as the participants did not feel that the answer fully related to the specific question but merely touched upon the general topic of discussion. It seemed as if deeper knowledge about the participants could have provided them with more useful information and a more meaningful experience. This relates back to the expectations that are set for the chatbot before the answers are given. The bar is set rather high by not stating proper limitations for what the chatbot is able to answer, leaving users to expect a high-quality answer. When the chatbot fails to deliver this, the result is disappointment.

4.3.4 Human touch

As mentioned in section 4.3.2, the tendency for anthropomorphism was clear in some participants. One participant, Anna, even started with a “Hello” before asking her first question, even though she knew she was talking to a computer. In another case, Linus articulated the lack of humanness in the chatbot and stated that he was missing the small words usually used in human-to-human interaction, or an avatar or character with which to connect. A pattern could be observed in these findings: the participants were looking for ways to connect to the system in a more human and natural way, shown through their use of greetings and their comments about the lack of human aspects in the interaction. This indicates that there is a preconception about chatbot systems that includes more human characteristics than are present in Swedbank’s chatbot system today, which is something to take into account in the proposed design solution.

4.3.5 Problem solved?

In Anna’s scenario, she expressed a substantial amount of irritation when she noticed that the answer she got to her question ended by saying “Have a nice day”, consequently finishing the conversation in one turn without asking her if she had gotten the answers she needed. Anna articulated the following:

“I want to be the one who determines when the conversation is over, when I got the answers I need, now they have already finished the conversation without asking if I am satisfied with the answer. I feel like they threw me out of the chat”

This was an interesting finding and could be interpreted as a violation of either the maxim of quantity or the maxim of quality (Grice, 1975), as Anna felt that she had not gotten the quantity or quality of information she wanted. An important lesson from this observation is to make sure that users have reached their goals before shutting the door on them, in order to create a meaningful experience.

4.3.6 The nature of the questions

The last finding from the think aloud-test was related to the nature of the questions the participants asked the chatbot. All of the questions were of a personal nature and aimed at getting information or advice regarding the participants’ own individual situation, a majority of them relating to the topic of saving or investing money for the future. It is understandable, then, that frustration and irritation were among the feelings expressed when the chatbot answered with general statements that could be applied to anyone, and even with information copied and pasted from pages on the website. An important insight based on this finding is that the chatbot service should be able to handle information of an individual nature, in order to give answers that are more tailored to the individual asking the questions. This is of course also an ethical question: how much personal information should the system have access to? When this was discussed with the participants, the general trend was that they did not mind if the chatbot had access to all the information that was available in their bank account, as they considered the chatbot to already be a part of this system, as long as the chatbot service was not based on other messaging platforms such as Facebook Messenger or WhatsApp.

4.3.7 Summary of think aloud-test

A lot of interesting findings could be derived from the think aloud-test. Moments of silence sometimes arose, but when reminded to voice their thoughts, the participants delivered a lot of interesting and useful data to be used in the forthcoming design proposal. Below is a short bullet point list summarizing the findings:

• The chatbot’s ability to understand complex questions is limited; this should be taken into account in the future design proposal.

• The delivery and presentation of information is not fitted to the chatbot format.

• The content of the answer is in many cases too general to be of value to the user.

• The users are looking for ways to connect with the chatbot service in a more natural way than is possible today.

• No confirmation that the question has been answered results in frustrated users.

• The questions that were asked were of a personal nature, indicating the need for the chatbot to have access to more personal information.

The following statement by Linus concludes this subsection and captures the participants’ perception of Swedbank’s existing chatbot service in one sentence:

“It is just as hard to ask the right question as it is to get the right answer, I would rather just make a phone call to them.”

4.4 Brainstorming

The findings from ethnographic interviews indicated that there was support for a service that could help the user with financial situations that related to the topic of saving money in some way. The think aloud-test provided some information on where the interaction with the current chatbot failed to deliver a pleasant experience.

Based on these findings, three ideas were developed during a brainstorming session, each describing a scenario where the user was interested in saving money in some way. The purpose of the brainstorming session was to explore different situations where a user from the intended target group would be in need of a chatbot assistant that could provide financial assistance.

4.4.1 Short term savings

In the first scenario, the user wanted to set up a regular transaction to a short-term savings account in order to have a buffer for unexpected costs or more extravagant expenditures like a weekend holiday. The user could choose from three different ways of setting up this transaction: a fixed monthly or weekly rate, a percentage of monthly income, or a percentage transferred for every outgoing transaction from the connected bank account. With the last option and a percentage of 10 %, for example, a user who bought a pair of jeans for 1000 SEK would also transfer 100 SEK to a savings account.
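The three transfer options in this scenario can be illustrated with a minimal sketch. The function names and the monthly income figure are assumptions made for the illustration and are not part of the tested concept; only the jeans example (1000 SEK at 10 %) comes from the scenario itself.

```python
def fixed_rate_transfer(amount_sek: float) -> float:
    """Option 1: the same amount is moved every month or week."""
    return amount_sek


def income_share_transfer(monthly_income_sek: float, percent: float) -> float:
    """Option 2: a percentage of the monthly income is moved."""
    return monthly_income_sek * percent / 100


def per_purchase_transfer(purchase_sek: float, percent: float) -> float:
    """Option 3: a percentage of every outgoing transaction is moved."""
    return purchase_sek * percent / 100


# The example from the scenario: jeans for 1000 SEK with a 10 % rule.
print(per_purchase_transfer(1000, 10))   # 100.0

# Hypothetical monthly income of 25 000 SEK with the same percentage.
print(income_share_transfer(25_000, 10))  # 2500.0
```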

4.4.2 Setting up a fixed savings goal

In the second scenario, the user had a fixed savings goal for a holiday three months later and needed to save 15 000 SEK. The user would ask the chatbot to set up a savings goal in order to reach the 15 000 SEK in time for the holiday.
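As a rough illustration of the arithmetic behind such a savings goal, the following sketch computes the monthly amount needed. The function name and the optional parameter for money already saved are assumptions added for the example; the scenario only specifies the goal of 15 000 SEK and the three-month horizon.

```python
def monthly_saving_needed(goal_sek: float, months_left: int,
                          already_saved_sek: float = 0.0) -> float:
    """Return how much must be saved per month to reach the goal in time."""
    if months_left <= 0:
        raise ValueError("months_left must be positive")
    # Never ask the user to save a negative amount if the goal is already met.
    remaining = max(goal_sek - already_saved_sek, 0.0)
    return remaining / months_left


# The scenario from the text: 15 000 SEK in three months.
print(monthly_saving_needed(15_000, 3))  # 5000.0
```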

4.4.3 Advice for retirement plan

In the third scenario, the user was interested in advice on a retirement plan and was asked by the chatbot to fill in some numbers in order to get advice on how much money needed to be saved each month to have a decent retirement.

4.5 Wizard of Oz-pilot test

To test the overall user experience of the three concepts, and also to investigate how the communication works between the user and a chatbot, a Wizard of Oz pilot test was planned. Since the scenarios were only tested on one person, the amount of data is insufficient to represent a real Wizard of Oz test; for this reason, it is called a Wizard of Oz pilot test.

4.5.1 Modifications to the original method

In a traditional Wizard of Oz-test, the person testing the system is the only one who is observed and the only one who gives feedback on the system (Kelly, 1984). In this session, the person playing the chatbot was also allowed to make comments and give input on their role in the test in order to evaluate the conversation from the chatbot’s perspective. In light of this, the session can be seen as both a Wizard of Oz-test and a sort of role play session.

Testing the concepts through the Wizard of Oz-method and role playing was a quick and cheap way to make the concepts a bit more tangible, and it provided important insights into which direction the design concepts should take without putting too much time into building more high-fidelity prototypes.

4.5.2 Setup of the pilot test

For this test, all that was needed was a simple chat program (the communications application Slack was used) and some basic directions for the three scenarios for the person playing the chatbot. Two people participated in this test: one user and one person playing the role of the chatbot. The directions written for the person playing the chatbot were very loosely defined so that the person could communicate freely with the user, and were only used to let the person know which data to collect from the user. The user and the person playing the chatbot sat in different rooms. The test facilitator was present in the room with the user in order to take notes and document the process, while the person playing the chatbot was given a pen and paper to document thoughts and questions that arose during the session. The user was then asked to interact with the chatbot system, playing through the three scenarios described earlier. After each scenario, an informal discussion about the scenario and the interaction took place. The findings are presented in the coming sections.

4.5.3 Tools vs. information

Two of the three concepts that were tested (short term savings and setting up a fixed savings goal) focused heavily on giving users the practical tools to set up a healthy savings plan and less on being advisory. This turned out to be somewhat of a wrong turn in the design process since, as the Wizard of Oz-pilot test showed, the participant was more interested in receiving personal advice on healthy ways to save money than in the actual tools to do this. For instance, during the first scenario, the chatbot informed the user that it could transfer a percentage of their monthly income to their savings account, which was appreciated, but it failed to recommend how big a percentage of the monthly income is advisable to set aside, which the participant stated was a desirable feature.

4.5.4 Entertainment value

In the discussion after the first scenario had been tested, the user gave the following statement:

“I already know that it is good to save money, but it is so boring”

This points to an interesting insight: the chatbot experience could be made more entertaining in some way in order to encourage a healthy savings plan. This finding supports the idea of implementing the gamification elements mentioned in section 2.5 in order to provide a more entertaining experience.

4.5.5 Access to more data

Another feature that was desirable from the user’s point of view was that the chatbot should have more insight into weekly and monthly expenditures and be able to categorize these, in order to compare specific categories over time and give advice when the user has overspent or perhaps underspent in a category. An example of this could look like this:

“Hey XX, you have saved 100 SEK on coffee this month compared to last month, would you like to put this money in your savings account?”

or

“Hey XX, I’ve noticed your spending on take-out food is considerably high this week, consider buying some groceries instead and save some money by cooking your own meal!”

This comment from the participant is in line with previous findings in section 4.3.6 about the chatbot system having access to more information from the users in order to provide a more personal experience.
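The kind of category comparison requested by the participant could be sketched as follows. This is only an illustration: the function name, the data layout (spending per category in SEK) and the wording of the overspending message are assumptions, and the placeholder name “XX” is taken from the example messages above.

```python
def spending_insights(previous: dict[str, float],
                      current: dict[str, float],
                      name: str = "XX") -> list[str]:
    """Compare spending per category between two periods and suggest actions."""
    messages = []
    for category, before in previous.items():
        now = current.get(category, 0.0)
        difference = before - now
        if difference > 0:
            # The user spent less than before: offer to move the surplus.
            messages.append(
                f"Hey {name}, you have saved {difference:.0f} SEK on {category} "
                "this month compared to last month, would you like to put "
                "this money in your savings account?"
            )
        elif difference < 0:
            # The user spent more than before: point it out.
            messages.append(
                f"Hey {name}, your spending on {category} is "
                f"{-difference:.0f} SEK higher than last month."
            )
    return messages


# Hypothetical figures for two months.
last_month = {"coffee": 400, "take-out food": 500}
this_month = {"coffee": 300, "take-out food": 800}
for message in spending_insights(last_month, this_month):
    print(message)
```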

4.5.6 Framing the conversation

An observation was made during the test that the conversation had a tendency to stray from the intended conversational path (see figure 4-3). The user kept asking questions that led to side tracks, which resulted in scenarios that lasted longer than expected and confused the user as to the original purpose of the chatbot. While it was interesting to see how the conversation took shape and which questions the user asked, it also provided the insight that perhaps the chatbot should lead the conversation more and direct the flow towards the intended goal. Possible ways to do this are to inform the user of the chatbot’s limitations or to restrict the interactions with the system. This is in line with Duijst’s (2017) finding that users rated a chatbot with a clearer goal and a shorter task as more useful than a chatbot with a vaguer, more complex and longer task.

Figure 4-3. The user (red) asks multiple questions and strays from the intended conversation

A reason for the tendency to stray from the main goal of the conversation could be that the participant figured out early on that the chatbot system was faked, and therefore felt she could talk more freely and in a more human-like manner than if the chatbot system had been real. This was also noted by Hill, Ford & Farreras (2015), who found that humans use a richer and more complex vocabulary when talking to other humans compared to chatbots.

4.5.7 Preconceptions about communication

Another recurring comment from the participant was that she felt the system was slow and that she had to wait a long time for an answer. The reason for this is of course that the chatbot system was a human who had to type answers manually on a keyboard, which took some time compared to the speed with which real chatbots can answer. This frustrated the participant since she expected the chatbot to answer immediately, even though she knew by then that it was a human faking the system. This indicates that we have different expectations of human-chatbot conversations compared to human-human conversations. A chatbot should be able to answer fast, although, as shown in section 4.3.2, answering too fast can be detrimental to the experience. This was also an interesting finding regarding the Wizard of Oz method: in order to test what Houde & Hill (1997) refer to as the look and feel of a prototype, this method may not be optimal, as it does not accurately represent what it would be like to interact with a real chatbot system.

4.5.8 Chatbots vs. GUI

During the third scenario, which described a user wanting advice on a retirement plan and being asked to enter some numbers, the participant stated that the conversation was too long in comparison to what she got in return. The chatbot asked eight questions in total, which was too many in the participant’s opinion, and some of them were not understood by the participant, which increased the frustration even more. The participant suggested that this service would be better suited to a graphical user interface where she could see all the questions at once and thus see where she was in the process. This is an important insight since it tells us that not all features are suited to this type of text-based interaction. Services and features that involve many steps, such as registration forms or setting up a new bank account, are perhaps better suited to graphical user interfaces and would not benefit from a conversion to a chatbot. This further confirms Duijst’s (2017) finding that chatbots with longer and more complex tasks are rated less useful than chatbots that provide shorter tasks.

4.5.9 Update on position in process

Something that was positively received was the small words and informal phrases that the chatbot improvised into the scenarios, which communicated where the user was in the process. Comments like:

“Almost there, just a few more questions”

were appreciated. They not only indicated to the participant where she was in the process and how far she was from the finish line, but also gave more life to the interaction. This is an important insight to take further when developing a prototype. Since the user does not have a graphical interface to see where he or she is in the process, it is important to communicate this while chatting with the chatbot. The user’s comment that small informal talk made the interaction more lifelike and was appreciated is yet another finding that indicates the presence and importance of anthropomorphism in the interaction with a chatbot. This finding also relates back to section 4.3.4, where a participant in the think aloud-test asked for a more human touch to the chatbot system.

4.5.10 Summary of Wizard of Oz-pilot test

After the test session, it was clear that the first scenario, which described the short-term savings tool, was the most appreciated and generated the most positive comments from the user. Overall, the WoZ-pilot test provided interesting insights for the further development of the chatbot service. The person faking the chatbot system could not provide any insights to further inform the design process from the chatbot’s point of view but, taking a user’s point of view, also ranked the first scenario as the most promising one for a chatbot. One preconception was that the Wizard of Oz method would be a sufficient tool to represent a fairly high-fidelity prototype of a real chatbot system. This was not the case, as the time it took for the person playing the chatbot to type answers on the keyboard broke the illusion of talking to a chatbot. This was not anticipated before the pilot test was initiated and was taken into account in the development of the prototype. Below is a bullet point list with the main findings from the test session.

• The user indicated that personal advice was more important than financial tools

• Entertainment value can possibly increase engagement with the service

• Giving the chatbot more access to personal data in order to give better financial advice was desired

• The chatbot could be more active in directing the conversation so that it does not stray from the original purpose of the chatbot

• A chatbot should take advantage of the ability to give quick answers

• Longer, more complex tasks are better suited for graphical user interfaces

• Small talk is appreciated not only for giving life to the conversation, but also for informing the user about the progress of the task at hand

4.6 Conceptualization

After synthesis of the findings from the WoZ-pilot test, the first scenario, regarding short term savings, was the only one chosen for further development, as it was the most appreciated by both the user and the person faking the chatbot system. Based on the findings from the pilot test and the think aloud-test, combined with Duijst’s (2017) finding that simple tasks are perceived as more useful than complex tasks, the concept was developed further and is presented in the next section.

4.6.1 Nina 2.0

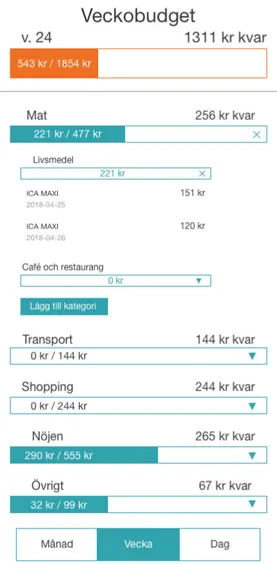

Nina 2.0 is a concept developed for customers of Swedbank aged between 18 and 35. It is aimed at helping the user set up a monthly budget in order to have a healthy financial situation, with a particular focus on having a savings buffer for unexpected expenditures. Nina 2.0’s primary function is to set up a budget for its user based on monthly income minus fixed expenses like rent, mortgage, subscriptions etc. Since Nina 2.0 is a part of Swedbank’s system, it can gather information directly from the user’s bank account and only needs a confirmation of whether the figures for income and fixed expenses are correct. When the numbers are confirmed, Nina recommends a budget divided into the categories food, transport, shopping, entertainment, miscellaneous and savings. The budget is based on your previous history of transactions in these categories, trimmed down a little in order to put aside some money for savings. The budget is also broken down and presented as a weekly and a daily budget to make it more manageable. The whole idea of the concept is that it is automatic, with Nina giving you advice on how you should spend your money for a healthy financial situation, but the service also includes options for handling your own budget. Another feature of Nina is the ability to provide insights on your budget: she gives advice if you are overspending and asks if you want to put aside extra money in your savings account if you are underspending in a particular category.

Nina also has an element of gamification to her: if the user has allowed her to send notifications, Nina gives the user weekly updates on the budget on Sundays. At this point Nina doesn’t present the budget straight away, but rather asks the user to guess how much he or she spent in a certain category that week, giving the user the opportunity to reflect upon the week and guess the right amount.

Nina has a name, an avatar and talks in a humanlike, informal way in order for users to get a better sense of connection to the service.

4.7 First prototype

From the concept presented in section 4.6.1, a prototype was developed in order to test both the interaction features and the overall experience of the concept. In their paper What do prototypes prototype?, Houde and Hill present a model with three dimensions of prototyping: role, look & feel and implementation. Role concerns testing the actual usefulness of the artifact; look & feel concerns the sensory experience, that is, what it looks and feels like to use the artifact; and implementation concerns questions about the technical components of the artifact (Houde & Hill, 1997). According to this model, the developed prototype positioned itself somewhere between role and look & feel, with an emphasis on role. However, since the prototype was created with a digital tool that allowed for interaction through a smartphone, which was the most commonly used means of interacting with the bank according to the ethnographic interviews in section 3.2.2, there was also an opportunity to test the actual look and feel of the concept, see figure 4-4. The prototype can also be referred to as an experience prototype, as its purpose was to communicate the experience of what it would be like to interact with the real product (Buchenau & Suri, 2000).

Figure 4-4. Model of which dimensions the prototype prototypes

4.7.1 Role

In total, three different scenarios were developed: the first meeting with the chatbot, in which a budget is set up; a second meeting, in which the budget is already set and the user initiates a check-up on the budget plan; and a third meeting, in which the chatbot gives the user a weekly update on the budget plan, including the elements of gamification presented in section 4.6.1. These three scenarios were developed in order to test the value that the concept might bring to the intended target group.

4.7.2 Look and feel

Based on the findings from the think-aloud test of the existing chatbot, together with the literature referenced in section 2, some guidelines were set with regard to the look and feel of the prototype:

• There needed to be check-ups to confirm that the user’s needs were being looked after

• In order not to bombard the user with too much information at once, the information needed to appear at reading speed

• What the chatbot can actually do needed to be presented up front, so that users would not form too high expectations

• Nina should have some human characteristics so that users feel a sense of connection

A limitation of the prototyping tool was that, in order for the conversation to run smoothly, the user was restricted to answering with predetermined chat bubbles instead of free text. Although this provided the users with an easy way of interacting, it was hypothesized that it would be criticized during the user tests because of the constraints it puts on the user. However, since all available conversations were predetermined and thought out beforehand, it also provided the opportunity to strictly follow the rules of conversational theory and Grice’s maxims mentioned in section 2.2, which meant that it was impossible for the user and the chatbot to misunderstand each other and break the conversational flow. There is of course also the risk that the user feels that none of the pre-programmed answers are suitable for taking the conversation in the desired direction, which is an argument in support of the theory of situated actions (Suchman, 1987).
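The predetermined chat bubbles can be thought of as a small state machine in which every reply button leads to exactly one known next message. The sketch below illustrates this idea only; the node names and the wording of the messages and replies are hypothetical and are not taken from the actual prototype script.

```python
# Illustrative sketch only: the dialogue content and node names are
# hypothetical, not the script used in the Nina 2.0 prototype.

DIALOGUE = {
    "greeting": {
        "bot": "Hi, I'm Nina! I can help you set up a monthly budget. Shall we start?",
        "replies": {"Yes, let's start": "confirm_income", "Not now": "goodbye"},
    },
    "confirm_income": {
        "bot": "I found your income and fixed expenses. Do the figures look right?",
        "replies": {"Yes, that is correct": "show_budget", "No, I want to change them": "edit_figures"},
    },
    "edit_figures": {
        "bot": "No problem. Which figure would you like to change?",
        "replies": {"Income": "confirm_income", "Fixed expenses": "confirm_income"},
    },
    "show_budget": {"bot": "Great, here is my budget suggestion for you.", "replies": {}},
    "goodbye": {"bot": "Okay, I'm here whenever you need me.", "replies": {}},
}


def available_replies(node_id):
    """Return the reply buttons offered to the user at this point."""
    return list(DIALOGUE[node_id]["replies"])


def step(node_id, choice):
    """Follow one reply button; anything else is rejected, as free text would be."""
    replies = DIALOGUE[node_id]["replies"]
    if choice not in replies:
        raise ValueError(f"{choice!r} is not one of the offered replies")
    return replies[choice]


def run(choices, start="greeting"):
    """Play through a list of button choices and return the final node and transcript."""
    node_id = start
    transcript = []
    for choice in choices:
        transcript.append(("bot", DIALOGUE[node_id]["bot"]))
        node_id = step(node_id, choice)
        transcript.append(("user", choice))
    transcript.append(("bot", DIALOGUE[node_id]["bot"]))
    return node_id, transcript


if __name__ == "__main__":
    final_node, transcript = run(["Yes, let's start", "Yes, that is correct"])
    for speaker, text in transcript:
        print(f"{speaker}: {text}")
```

Because every accepted input maps to one predefined transition, the chatbot cannot misinterpret the user. The trade-off is also visible: a user whose intention is not covered by the offered replies has no way to express it.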

4.8 First user tests

For the first user tests, six participants were recruited to test the three different scenarios presented in section 4.7.1, four female and two male, aged between