Designing transparent display experience through the use of kinetic interaction

Interaction Design Master’s Programme

School of Arts and Communication (K3)

Malmö University, Sweden

August 2017

A Master’s Thesis by

Author:

Rafael Rybczyński

Supervisor:

Susan Kozel

Examiner:

Clint Heyer

Thesis Project I, 15 credits, 2017

Acknowledgements

Over the course of this thesis project I have received support and encouragement from many people. First of all, I would like to thank my supervisor Susan Kozel for advising me to trust my own instinct.

Moreover, I would like to humbly thank all the participants, without whom this project would not have been possible. I also humbly thank Alvar, Sanna, and Susanne for shelter and feedback. For proofreading, my thanks go to Jan Felix Rybczyński and Sanna Minkkinen. Finally, my deepest gratitude is reserved for my father, Dr. med. Jerzy Antoni Rybczyński. Rest in peace. I wish you could have read this paper.

Author’s notes

During this study I was often asked: why transparent displays? What is the point? I would probably say that it goes back to my childhood, when I enjoyed my father reading novels to us as bedtime stories. A good example of these great stories was Scheerbart’s utopian architectural vision The Gray Cloth, which told of a great Swiss architect who travelled by airship. Wherever he went, he designed buildings made from brightly coloured glass, including the roof.

Modern architecture has always fascinated me. Even though I did not study architecture, I eventually chose to study interaction design in order to understand how closely this discipline goes hand in hand with architectural principles and thinking.

Abstract

This essay presents a study in which architecture meets new interaction design principles. The paper discusses how future transparent surfaces could become programmable kinetic user interfaces, usable as information and communication channels that simplify our everyday environment. Five methodologies were used: Cultural Probes, Research Through Design, Grounded Theory, the Star Life Cycle Model and Wizard of Oz. With them, consistent data was collected to design and iterate on a visionary interface prototype that combines freehand gestures captured through motion sensing, supported by RFID, with a see-through background in a building structure. The objective of this paper is to answer the main research question: how can people, through kinetic interaction, use organic interfaces on transparent surfaces?

Several possible uses were ideated, such as shared access for multiple users and collaborative interaction on both sides of the surface. The primary research question was answered through a final prototype combining a CV system with RFID for multiple and collaborative usages. User experiences and feedback suggest an array of applications in which a transparent interface with kinetic interaction can be applied to the interior and exterior, such as fridges, mirrors, doors, glass panels, alarm systems, games and home entertainment.

By today’s norms, screens in the shape of a square are obsolete, and support for new patterns, forms and materials is needed. Fieldwork concluded that kinetic interaction could flawlessly unite real-world conditions with computer-generated substance, and become the design environment for future interactions that communicate with the user. We no longer seek to be bound to stiffly shaped Graphical User Interfaces. Adding a transparent surface as background for such kinetic motion is the underlying paradigm for the content to be projected into any

List of abbreviations

2D - Two-dimensional image
3D - Three-dimensional image
AI - Artificial Intelligence
AR - Augmented Reality
AV - Audio/Video
CP - Cultural Probes
CPU - Central Processing Unit
CV - Computer Vision
DIY - Do-it-Yourself
EC - Electrochromic smart windows / Switchable glass
FOLED - Flexible organic light-emitting diodes
GUI - Graphical User Interface
HCI - Human-Computer Interaction
HOE - Holographic optical elements
HUD - Head-up Display
KOI - Kinetic Organic Interface
KUI - Kinetic User Interface
LCD - Liquid Crystal Display
LED - Light-emitting diodes
I/O - Input/Output
IR - Infrared
ITD - Interactive Transparent Display
MIT - Massachusetts Institute of Technology
MR - Mixed Reality
NUI - Natural User Interface
OLED - Organic light-emitting diodes
OUI - Organic User Interface
RAM - Random Access Memory
RFID - Radio frequency identification
RtD - Research through Design
RUI - Remote-use User Interface
SDK - Software Development Kit
SAR - Spatial Augmented Reality
TUI - Tangible User Interface
Ubicomp - Ubiquitous computing
UI - User Interface
VR - Virtual Reality

Keywords and Phrases

This report uses keywords and phrases that may require further clarification:

KOI is an acronym for Kinetic Organic Interface, an interface that can have any shape or form. This emerging class of KOIs employs kinetic motion to embody and communicate information to people (Parker et al., 2008).1

Multimodal interfaces provide users with greater expressive power, naturalness and flexibility by combining modalities such as body movements, hand gestures, touch, speech, pen, sight and sound to enrich human experience (Oviatt, 2002).

Programmable matter is one possible future scenario of continued progress in nanoscale technology. Its aim is to “create a physical artefact using programmable matter that will eventually be able to mimic the original object’s shape, movement, visual appearance, sound and tactile qualities”2, 3 (Goldstein et al., 2005).

Programmable physical architecture is a visionary concept for “future architecture where physical features of architectural elements and facades can be dynamically changed and reprogrammed according to people’s needs”4 (Rekimoto, 2012).

Switchable glass is also known as Electrochromic (EC) smart windows. EC windows can control the visible light and solar radiation entering buildings. Moreover, they can “impart energy efficiency as well as human comfort by having different transmittance levels depending on dynamic need.”5 Smart windows are currently being used in an increasing number of buildings (Granqvist, 2014).

1 Oviatt, S. (1999), “Ten Myths of Multimodal Interaction”, Communications of the ACM, Vol. 42, No. 11, November 1999

2 http://www.cs.cmu.edu/~./claytronics/

3 Goldstein, S. et al. (2005), “Programmable Matter”, Invisible Computing, Carnegie Mellon University, pp. 99-101

4 Rekimoto, J. et al. (2012), “Squama: Modular Visibility Control of Walls and Windows for Programmable Physical Architectures”, AVI 12, May 21-25, 2012, Capri Island, Italy, Copyright 2012 ACM 978-1-4503-1287-5/12/05

5 Granqvist, C.G. (2014), “Electrochromics for smart windows: Oxide-based thin films and devices”, Thin Solid Films 564, 2014 Elsevier B.V., pp. 1–38

“The most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it”

Table of contents

1. Introduction
2. Research Focus
3. Methodology
4. Literature Review and Related work
4.1. Living and adapting to emerging technologies in the home domain
4.2. Ubiquitous computing and the Internet of Things
4.3. Window – A static information medium in the peripheral background
4.4. Tangible User Interfaces
4.5. Vision and RFID sensors
4.6. Computer Vision, Depth-sensing cameras and dynamic environments
4.7. Multi-touch, NUIs, Surface Computing, OUIs
4.8. Gesture recognition
5. Summary
6. Exploratory Research & Field Studies
6.1. Brainstorming, set up of goals and requirements
6.2. The Scenario workshop
7. Lo-fi experiments – Project Nimetön
7.1. Paper mock-up
7.2. Prototyping Nimetön
7.3. Validation Phase
7.4. Evaluation Phase
7.5. Summary
8. Concept Development – Project Aikakone
8.1. Prototyping I
8.2. Validation & Evaluation Phase
8.2.1. Validation
8.2.2. Evaluation
8.3. Prototyping II
8.3.1. Design
8.4. Validation & Evaluation Phase
8.4.1. Validation
8.4.2. Evaluation
9. Results
9.1. Conclusion
9.2. Discussion
Appendices:
1) References
2) Figures
3) Arduino Code

1. INTRODUCTION

In a not too distant future, we are at the home of the Schuster family, who live with their two teenagers, a boy and a girl, in a state-of-the-art house with many transparent window panes interwoven into the programmable physical architecture. The house is built with emerging materials that embed useful functionality into a system that can respond to the residents’ needs while meeting the energy demands of the building. The pervasive technologies and sensors embedded in the structure respond dynamically to the inhabitants, for instance through window displays that serve as multimodal devices to control temperature, lighting, home entertainment, privacy and an information system, and that become visible only when needed. Each family member has a favourite thing to do at home and, thanks to the programmable physical architecture interfaces, benefits from the spacious surroundings in their social and cultural activities. For example, the girl likes to practise singing with a popular karaoke game on the home entertainment system. She can use the system in any room of the home on any surface, including window panes. The most convenient way to start the game is to stand in front of one of the large windows at a minimum distance of 3 metres and move her hands in the air. A depth sensor concealed in the wall, out of sight, recognises the girl’s gestures, and a switchable liquid crystal window glass changes the background from light to dark. A multimodal interface for human-machine interaction appears on the darkened transparent surface, and a menu pops up with a few augmented icons offering options such as home entertainment, Internet, lighting, temperature, cooling, privacy, and a reset button. Using kinetic gestures, the girl presses a few buttons, and a new widget appears that starts the karaoke game app.

Such a touch-free scenario is no longer far-fetched, yet it is still far from working as smoothly as described above. Any user of such a futuristic interface would have the right to ask: how would people use multimodal devices through kinetic interaction in their everyday activities? How would such an interactive interface ease daily routines? Would such an engaging multimodal interface make life more spacious and convenient by getting rid of cables, keyboards and conventional screens? Would it leave more time for social and cultural activities? Would such a smart home architectural system enable shareable and collaborative human-machine interaction? Moreover, approaching such a scenario as an interaction designer, what should be considered relevant to make the idea feasible, and how? These concerns are investigated in this essay, taking into consideration the balance between crafting materials and intervening in use contexts.

“Interaction design is about exploring possible futures.., and to design processes that are able to be curious and inquisitive, driven by what-if and divergence”6 (Löwgren et al., 2013).

Over the last forty years, computers have evolved and spread into every field of our personal and professional environment. Computers have become an integral part of our lives and, connected to a network, “have become a window through which we can be present in a place thousands of miles away”7 (Manovich, 1995). A computer combined with peripherals such as a mouse or a webcam and connected to a rectangular screen, television or computer display becomes a human-computer interface that gives the user the experience of being physically present somewhere else and the illusion of navigating through virtual spaces. The screen commonly has the shape of a rectangular frame, and as such has been used to “present visual information for centuries – from Renaissance painting to twentieth-century cinema”8 (Manovich, 1995). In the meantime, computers and their internal components, such as RAM, CPUs and motherboards, keep shrinking in size. Multi-touch technologies emerged concurrently, followed by the commercialisation of the Internet of Things, and mobile devices are increasingly replacing computers. Together these developments change how we perceive, access and process information exchanged between electronic devices and people. This transformation has set new standards and needs in a constantly changing, pervasive information environment. It concerns practices that were for a long time neglected in HCI design “because of the lack of diversity of input/output media, and too much bias towards graphical output at the expense of input from the real world”9 (Buxton, 1997). Owing to rapid development in sensing technology and ubiquitous computing, technological advances now make it possible to synthesise the artificial way of human-computer interaction through multi-touch technologies integrated into many devices and displays. Notably, the many computing trends that have become interwoven with our daily life during the last decade suggest that novel forms of interactive surfaces and displays will be introduced in the future.10 Hence the architectural framework and the field of kinetic interaction design leave much room to develop new interfaces for our built structures, since “ubiquitous, embedded systems, and the Internet of Things are just a few examples of how interactive technologies are increasingly integrated into our physical surroundings.”11

The film adaptation of Minority Report served as a forerunner in drawing popular attention to how technology might advance. It helped to introduce a transparent, tangible multi-touch data interface,12 showcasing how appealing it would be to use natural gestures, without a mouse or keyboard, on a real physical object. What was once initiated as a new direction away from dependence on interfaces displayed on peripheral hardware does not end here. The curious mind of an interaction designer “entails thinking through sketching and other tangible forms of mediation”13 (Löwgren, 2007). The same values may be shared with architecture, where the purpose is “the design of interactive systems that takes the architectural understanding of interactive technologies as one point of departure, and how such technologies might operate as architectural elements in the creation of interactive experience as another point of departure”14 (Wiberg, 2015).

This paper presents a study in which architecture engages interaction design principles. The concept is to let future transparent surfaces become programmable kinetic organic user interfaces, usable as information and communication channels that simplify our everyday environment. Such a future would allow people to use their expendable and ineffective architectural settings more efficiently, for more spatial comfort and easier social and cultural activities. My study investigates user experience in a context-aware environment that displays information on several perceptual levels. It does so through paper prototyping, use cases, storyboards that inspire participants, and recorded observations of such conceivable scenarios in home and office settings.

To realise the conceptual framework and facilitate user studies with participants, I experimented without any commercial see-through LCD displays or switchable glass. To assess different viewpoints during the exploration of such a fictional KOI, I created several lo-fi prototype display installations, which were iterated continually and set up in offices and private locations to mock up my visions.

Figure 1. Aikakone – Kinetic User Interface System (Source: author)

Apart from these enquiries, a transparent display as an artefact is in itself already a compelling, immersive interface that unites the outside sphere and the inside world for the user.

This research describes how participants were stimulated and engaged with a mix of sensors (RFID, PID, motion), computer graphics, hyper-reality, optical effects and games in order to test user experience with gestural and kinetic behaviour on a transparent surface (plexiglass). The surface stood in for an imagined Kinetic User Interface for which no complete working structure yet exists. Moreover, this paper explores requirements, identifies patterns and presents results on users’ perception observed in studies in the wild, examining how a touch-free interface could function comfortably, proportionally and aesthetically in modern architecture. The conclusions aim to answer research questions such as: How would such an interactive interface ease daily routines? Would such an engaging interface system make life more spacious and convenient? How would such a smart home automation system enable shareable and collaborative human-machine interaction?

I report on user studies that were not conducted in a lab setting but in home and office environments, namely a private condo and a design studio. Altogether, this essay analyses direct observation in the field, inspects recorded knowledge and evaluates data from various test phases with visual artists, students of interaction design, random people at office premises, and a family household with parents, their teenage children, grandparents and friends. Participants were between 10 and 76 years old.

The aim of this research is to contribute plausible new knowledge of how an original touch-free, gesture-enabled tangible user interface, woven into the fabric of physical architecture, can support “explicitly targeted use domains outside the office setting of the personal computer” (Redström, 2001).

The findings explored and discussed in this essay can help to understand potential new ubiquitous interface types to support future architects, engineers and others in the use of transparent programmable physical surfaces in home and office architecture.

2. RESEARCH FOCUS

My research examines one of the many factors that come into play when uniting kinetic interaction with physical objects. This paper focuses on identifying how people would use kinetic motion to manipulate matter through programmable sensing technologies embedded in a transparent flat surface in an architectural context. The ambition is to design a futuristic Kinetic Organic Interface that is an embedded system in programmable physical architecture. To build a framework on the subject in human-computer interaction, the central research question I intend to answer is:

How can people through kinetic interaction use organic user interfaces on transparent surfaces?

3. METHODOLOGY

In this study, the following five methods were applied: Cultural Probes, Research Through Design, Grounded Theory, the Star Life Cycle Model and Wizard of Oz. These five methodologies were chosen because I wanted to find out how my first ideas of a transparent screen would work as an interface with kinetic interaction, and how a design process would change them. Throughout the study, these data gathering techniques were applied to collect sufficient and relevant results capturing users’ experience and performance. Interviews, questionnaires and observations of participants fed into pilot studies with known interface types and into paper-prototyped ideas for future systems.

One of the first relevant discoveries, which emerged from using Cultural Probes and led to the first design, was that users would be keen to engage their senses on both sides of a transparent surface. CP is a practice for gathering information about people and their activities: participants receive a kit of materials and are asked to self-record their interactions with, and feelings about, the kit’s components. CP sets were distributed containing laser-cut transparent plexiglass boards, whiteboard markers, a task, questions on age and gender, and a simple structured question. These were given to visual artists, philologists, cultural producers and families with children to evoke inspirational data. The kits, which included a written fictional scenario of an interface display embedded into the architectural fabric of a building, were meant to yield new insights into how participants would use transparent flat surfaces through body language. As Gaver (2007) points out: “Designs for everyday life must be considered in terms of the many facets of experience they affect, including their aesthetics, emotional effects, genre, social niche, and cultural connotations.”15 Through this technique, inspirational notions and findings captured a broader range of possibilities than a single person’s approach would ever reveal. The results exposed a variety of values and activities showing how people imagined a transparent KOI, which were very useful in the early design process. Users, for example, were keen to know the temperature in order to get instant clothing advice, to control shades to regulate lighting, or to read messages from their loved ones. One user expressed interest in communicating on both sides of the transparent board, for example by playing a game that would require direct input from both sides of the screen. Further, the data proved helpful in creating personas and scenarios to better communicate the concept in further data gathering.

As a secondary methodology, Research through Design (RtD)16 was applied, which supported the

only about what is. Correspondingly, it helped in the design process to build on the findings from the CP and to foster further experiments that reframed, iterated and advanced problem statements and critiques, moving forward through micro-experiments with transparent surfaces and digital products, doing what is called the right thing. New data gathering sessions were initiated, based on prototyping and involving users with newly built interfaces (e.g. Nimetön, based on ideas gathered from the CP), to explore users’ reactions and performance on a transparent KOI, as well as functional, aesthetic and technical aspects. The RtD research model made it possible to focus on under-constrained problems, which became much clearer when participants contributed crucial insights towards the conceptual framework and connected it to the bigger picture, such as the product in its architectural context. Zimmerman et al. (2007) encouraged “inquiries focused on producing a contribution to knowledge”.17 During the design process, a few usability and user experience goals were identified by observing people’s reactions while they performed outlined tasks in kinetic interaction experiments testing gestural behaviour on transparent surfaces. Examples include grasping graphical icons “in the air” and moving them across the screen, or performing repeatable interactions by grabbing icons and putting them into visual “buckets”. These probes would support the concept of a touch-free architectural environment, provided the commands work smoothly and a lengthy “feeding” of interactions between human and computer can be created. The tests also confirmed that the faster users get a task done, the less tired or bored they become with it.

In interaction design, it is common to employ a qualitative analysis process that structures observational data around a theoretical framework. In this study, grounded theory was applied. Grounded theory aims at “a set of well-developed concepts related through statements of relationships, which together constitute an integrated framework that can be used to explain or predict phenomena”18 (Strauss & Corbin, 1998).

Moreover, according to Strauss and Corbin (1990), the research data shall be coded in the three steps explained hereafter: 1) Open coding, which through questioning and constant comparison enables “investigators to break through subjectivity and bias”; 2) Axial coding, which sets up and connects categories with sub-categories, with “the relationships tested against data”; 3) Selective coding, which assembles and refines all categories “to form a larger theoretical theme” and seeks cross-references in the literature to back the categories.

For example, I ran a few workshops with contributors in a home and office environment. All data was then transcribed and searched for recurring relationships regarding the desired functionality of the prototype and the use of content (e.g. games, grasping visual icons etc.), and compared with related works and literature. Take the following research question: How would such a smart home automation system enable shareable and collaborative human-machine interaction? The row with the open code would present a heap of data: collaborative interaction; smart home; shareable etc. The row with the axial code would identify relationships among open codes: playing a game together; enjoying team spirit; touch-free. The third row would include core values which, after much conveying and processing of data, would lead to the selective code: wanting shareable user interaction.
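The three-row coding table described above can be sketched as a small data structure. The following is only an illustration in Python, using the example codes named in the text; the class name `CodingTable` and its fields are hypothetical and were not part of the original analysis, which was carried out by hand.

```python
from dataclasses import dataclass, field


@dataclass
class CodingTable:
    """One research question coded in three rows (hypothetical helper)."""
    research_question: str
    open_codes: list[str] = field(default_factory=list)   # raw labels from transcripts
    axial_codes: list[str] = field(default_factory=list)  # relationships among open codes
    selective_code: str = ""                               # core theme

    def rows(self) -> list[tuple[str, str]]:
        """Return the table as (row label, content) pairs, top to bottom."""
        return [
            ("Open code", "; ".join(self.open_codes)),
            ("Axial code", "; ".join(self.axial_codes)),
            ("Selective code", self.selective_code),
        ]


# Example values taken from the workshop example in the text.
table = CodingTable(
    research_question=(
        "How would such a smart home automation system enable shareable "
        "and collaborative human-machine interaction?"
    ),
    open_codes=["collaborative interaction", "smart home", "shareable"],
    axial_codes=["playing a game together", "enjoying team spirit", "touch-free"],
    selective_code="wanting shareable user interaction",
)

for label, content in table.rows():
    print(f"{label}: {content}")
```

Keeping the three rows side by side for each research question makes it easy to trace a selective code back to the open codes it grew out of.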

4. LITERATURE REVIEW and RELATED WORK

To explore how to design an interface based on physical kinetic motion on a transparent surface (windows), this essay first requires a review of literature and related work. The review is divided into two parts. The first briefly examines how we live with and adapt to advanced technologies in the home and office domain, whereas the second presents knowledge on new types of technologies.

The theoretical findings in this summary reveal and consider relevant insights, best practices and academic state-of-the-art technology. Only a few of the most related works are pictured.

4.1. Living and adapting to emerging technologies in the home domain

Ethnographic studies of fifty households by Venkatesh et al. (2001) yielded several relevant observations on how families live with emerging information technologies in the Home of the Future. The study was divided into five conditions focused on the properties of physical, technical, and social and cultural spaces. Ultimately, they wanted to unravel “how prepared households were to accept Smart Home technologies that will be on the market in the next 3-7 years”.19 Regarding the architectural space, they revealed: “Despite these cutting edge accommodations, the interior spaces of these homes were only minimally designed for intensive IT use”.20 Concerning the social and cultural space, they identified that the use of IT depends heavily on the speed and reliability of the connection. Further, they recognised “parental concern about IT use focused on gaming, Internet content, and the potential anti-social aspects of overuse”.21 On the technological side, they discovered that programs were mostly made for individual use: “even though many software products support multiple users, the typical configuration of the PC in the home (i.e. the single keyboard, mouse, monitor set-up) does not optimally facilitate these interactions.”22 This could lead to “potentially negative consequences on their families”.23 In contrast, my use scenario workshop results and design support a user experience in which users collaborate and share interactions through such an interface. It can also be argued that a digital interface embedded within the materials of a wall or window could make life more spacious and convenient, as such a set-up would make the typical single PC with peripherals obsolete and engage more than one user.

4.2. Ubiquitous computing and the Internet of Things (IoT)

Weiser coined the expressions ubiquitous computing and calm technology. His concept of ubiquity (1993) includes, for example, the attribute that a useful device “is an invisible tool”. By this he meant that devices would “not intrude on your consciousness”, allowing the user to focus “on the task, not the tool”. Weiser’s vision of using components in modern architecture was meant to be appreciated for its “plain geometric forms emphasising layout, location and function within the structures themselves.”24 This has led, in recent years, to the development of the IoT, which allows access to computation everywhere. Wireless networks deliver widely available support for mobile use while being non-intrusive and as such invisible, integrated into the general ecology of the home or workplace, for instance in a desk, chair or display. Moreover, Weiser was strongly opposed to Virtual Reality and to any attempt to create a world inside the computer that requires people to wear special goggles. The qualities of Ubicomp, calm technology and IoT played a significant role in the conceptual schema for designing a display experience in a futuristic programmable physical architectural setting. Of the many associated works that take advantage of the ubiquitous computing framework, two stood out and inspired the present design research.

Figure 2. Raskar et al. (1998)

For example, Raskar et al. (1998) developed an interactive projection mapping procedure using real-time “computer vision techniques to dynamically extract per-pixel depth and reflectance information for the visible surfaces”25 to create optical illusions for people within a physical built environment. Raskar et al. intended to investigate how to interact and communicate with as many people as possible by projecting real-time images onto a spatially immersive display (SID) surface in a natural setting. Usually, SIDs are located in confined architectural spaces, for instance in a laboratory where users see and interact with remote collaborators using a CAVE-like system. The way the office of the future interface was imagined to work was very inspiring, but it seemed limited to closed, darkened building spaces. In contrast, the KOI is expected to work on an open and transparent surface, the usefulness of which is further investigated and explored in my prototypes.

Krietemeyer et al. (2015) designed a full-scale simulation environment and data processing framework supporting “state-of-art systems that address complex performance criteria of the environment and occupant response”26 in a responsive, dynamic electroactive daylighting setup. Krietemeyer et al.’s simulation environment measured energy demands against user needs and recorded user interactions through gestural control sensors using skeletal recognition. The team obtained inspiring results, including material compositions and pattern dynamics that define users’ preferences for visual effects. This refreshing work confirmed the importance of materials and of users’ desire for a visual setting, making such a scenario a core subject for further investigation in this study.

Figure 3. Krietemeyer et al. (2015)

4.3. Window – A static information medium in the peripheral background

Every day, windows admit light to give us the energy necessary to maintain life. Windows protect us from the elements and let us see and experience things happening outside that affect our lives. Essentially, windows are physical objects that are defined by physical theory. A window in the periphery of a building can be described as the oldest static information medium in existence. At the same time, watching interactions through a window can be described as a user experience similar to looking at a screen. Correspondingly, Manovich argues that using a screen coupled with a computer is like “looking through a screen – a flat rectangular surface positioned at some distance from the eyes” that has been used “to present visual information for centuries”. He refers to the screen as a frame of “two absolutely different spaces that somehow coexist”.27 Regarded as an artefact alone, a window is already a compelling, immersive interface that unites interaction for users between the internal sphere and the external world. For example, glass, being transparent, offers many opportunities for interaction, for instance simply writing a note on it with markers to signal a message to someone inside or outside a home.

In interaction design and the field of HCI, the window has been used and explored for various digital solutions. For example, in pursuit of the “window as an interface”, Rodenstein (1999) experimented with transparent displays to show a weather forecast. He remarked on the social aspects and on the practical value for users of learning whether to wear a t-shirt or a raincoat.28 My findings confirmed something similar, with the difference that my users wanted more practical and visual advice on which of the clothes they already owned to wear. MIT Senselab realised Rodenstein’s research by using it to design Eyestop29 - an interactive weather bus station combined with different display technologies (De Niederhausem, 2012). For example, the paradigm included an active environmental sensing node, powering itself through sunlight and collecting real-time information about the surrounding environment. My findings suggested that such features would be of interest as a user experience, as would a way to control the shades of the transparent surface. Another approach, the augmented home window concept, is a mixed reality display with the aid of a “real-time information channel” 30 for news and for staying in touch with people inside and outside the house (Ventä-Olkkonen et al., 2014). My findings yielded similar user feedback, though pointing rather towards a news ticker with personal notes, as already used by some social media apps. From the point of view that windows can support collaborative work through transparent displays, Li et al. (2014) propose a prototype design featuring a system that should visually enhance interactions on both sides of the screen, based on their observation that it “affords the ability for either person to directly interact with the workspace items.”31 Both Ventä-Olkkonen et al. and my first findings through CPs point to the same user wish to use the display from both sides, sparking interest in examining this more thoroughly in my prototype design.

Figure 4. De Niederhausem et al. “eyestop” (2012)

Hsu et al. (2014), who presented a screen enabled by nanoparticle scattering, state that “the ability to display graphics and texts on a transparent screen can enable many useful applications”.32 Seemingly, an optimal interface would be enabled through such nanotechnology. In my findings, too, users assumed that such a graphical display of content would be an ideal state for a user experience. There are many futuristic visions of how the near future will change through rapid prototyping and the use of transparent surfaces, glass and displays. For example, leading manufacturers of glass products around the world envision a wide range of possible applications presenting new interaction practices,33 developing new inventions that take different shapes and forms for home automation products, with materials steadily improving for the benefit of the human race. For example, Koike et al. (2010) introduced a new 3D interaction system using “2D transparent markers”34 accessible through mobile phones controlling a large wall display “to enable multiuser, non-contact, robust interaction without spoiling background images”.35 During the prototyping phase, the majority of users revealed that they would prefer to access the interface through mobile phones or wearables. For instance, the American company Corning Inc. produced an elegant commercial (2011)36 showing how they picture the future, with glass products seamlessly being a part of every inch of the smart home, office, and shopping mall. In their vision, devices would be usable across all sorts of technologies.

Figure 5. Li et al. (2014) Figure 6. Koike et al. (2010) Figure 7. Hsu et al., MIT (2014)

4.4. Tangible User interfaces

The idea that tangible user interfaces use ubiquitous qualities of the architectural ambience in transparent displays is not entirely new as a concept. It leans on Weiser’s model of ubiquitous computing, making “a computer so embedded, so fitting, so natural, that we use it without even thinking about it”, and on his vision of calm technology “when technology recedes into the background of our lives” (Weiser, 1996). Much inspired by Weiser’s concepts is Ishii, the patron of tangible interfaces, who together with Ullmer published a visionary essay on interfaces that will “allow users to “grasp & manipulate” bits in the centre of users’ attention by coupling the bits with everyday physical objects and architectural surfaces.” 37 Prior to this innovative essay, Ishii founded the Tangible Media Group at MIT, with the aim that TUIs give digital information and computation a solid physical framework, assisting progress through direct manipulation of particles. Together with many other scientists at MIT, he introduced novel use cases and digital solutions such as the URP, AmbientRoom, ComTouch, LumiTouch, metaDesk, SenseTable, SmartSkin and many more.

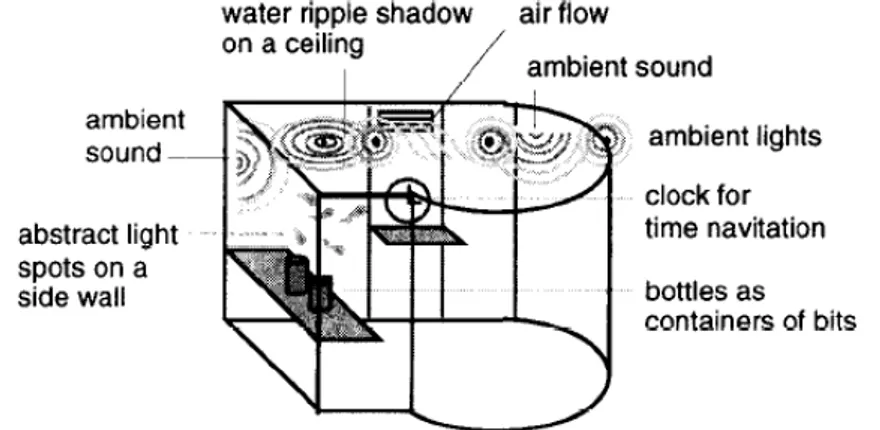

One avenue closely related to my research, and somewhat inspiring for the design of the KOI, is the concept of Ambient Displays, which proposed “a new approach to interfacing people with online digital information”. Its authors envisioned it in architectural space, placing digital information “on small rectangular windows” in the periphery of people’s attention. Moreover, this calm technology concept sought to “allow users to grasp and manipulate digital information with their hands at the centre of attention”. The AmbientRoom prototype put the user within an augmented environment “by providing subtle, cognitively background augmentations activities conducted within a room” (Wisneski et al., 1998), with ambient media such as light, sound, airflow, and physical motion used to analyse human activity awareness and behaviour. In my approach, for instance, scenarios were created to evaluate how people would envision using windows as a kinetic interface. They consisted of a few prototype layers and enabled a number of cognitive walkthroughs focusing on the cognitive aspects of interaction with the envisioned prototype system.

Figure 8. Wisneski et al. “AmbientRoom” (1998)

4.5. Vision and RFID sensors

Visual perception is a “fundamental human sensory capacity or sensation”.38 What plays a key role in the visual imagination is therefore how we view and see things and how they appear to be, for example according to Gibson’s (1979) considerations of “the way in which objects appear to the eye based on their spatial attributes”.39 In cognitive studies, Neisser (1976) revealed that perception is a “cyclic interaction with the world and an image is a single phase of that interaction”.40 In interaction design, understanding how to use various kinds of sensors, computer vision, and depth cameras to recreate such sensations is vital when creating new solutions for people. Kolko (2007), for example, called for “Making dialogue between technology and user invisible, i.e. reducing the limitations of communication through and with technology.” 41

The two sensor technologies suitable for this study are the vision sensor and the RFID sensor. Vision sensors detect and analyse renderable objects in their field of view, such as shapes, forms, contrast, and point clouds. RFID is a complementary technology to vision sensors that instead provides and traces identity information.

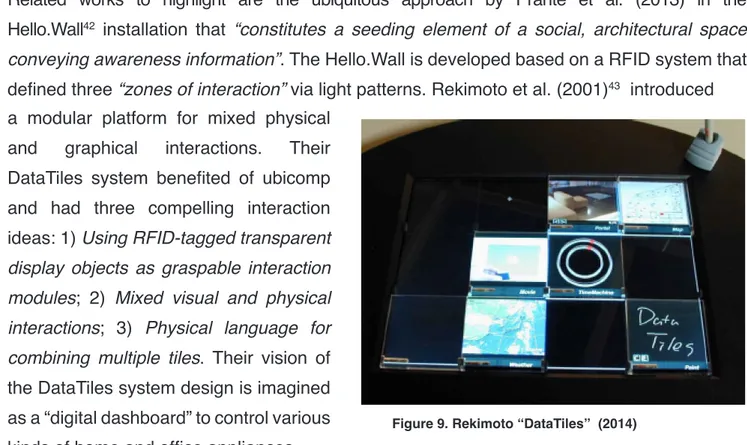

Related works to highlight are the ubiquitous approach by Prante et al. (2013) in the Hello.Wall42 installation, which “constitutes a seeding element of a social, architectural space conveying awareness information”. The Hello.Wall is based on an RFID system that defines three “zones of interaction” via light patterns. Rekimoto et al. (2001)43 introduced a modular platform for mixed physical and graphical interactions. Their DataTiles system benefited from ubicomp and had three compelling interaction ideas: 1) Using RFID-tagged transparent display objects as graspable interaction modules; 2) Mixed visual and physical interactions; 3) Physical language for combining multiple tiles. Their DataTiles system design is envisioned as a “digital dashboard” to control various kinds of home and office appliances.

In My Fridge by Schilling (2011)44 deals with the process of food management in a futuristic scenario where RFID tags are printable. The data stored on the RFID tags is put at the users’ disposal through an interactive terminal and is applied at the place where perishable goods are kept: the fridge. In the prototyping phase, my findings with test persons revealed an interest in accessing the visionary interface through mobile gadgets or RFID tech. Users further expressed a sense of wellbeing once they knew that a connection to the visionary interface could be made through their mobile devices.

Figure 10. Schilling “In My Fridge” (2011)

4.6. Computer Vision, depth-sensing cameras, and dynamic environments

Ballard and Brown (1982) defined Computer Vision (CV) as “the construction of explicit, meaningful descriptions of physical objects from images...Descriptions are a prerequisite for recognising, manipulating, and thinking about objects.”45 CV systems play a most important role, as they can acquire, communicate, and process sensor data to identify, for example, audiovisual content, gestures, bodies, objects and RFID tags. Since the mid-nineties, many new approaches have propelled the CV community, not least on account of the release of cheap depth-sensing cameras combined with sensors that enrich the use of motion through gestural interactions, adapting to objects, the anatomy of individuals, and their behavioural patterns. The first of this kind was Microsoft’s Kinect46, nowadays followed by new devices such as Leap Motion47, Asus48, and Orbbec49 amongst others.

The first prototype, Nimetön, was built using a 60x60 cm transparent plexiglass unit that was suspended from the ceiling and connected to a Leap Motion, a laptop and a projector, with the aim of investigating users’ gestural interactions on both sides of an augmented see-through screen. The testers received a set of instructions. The observations were meant to reveal whether such a setup would enable shareable and collaborative human-machine interaction, and whether such an engaging system would support a more spacious and convenient life without cables, keyboards and conventional screens. They also examined how users responded to shared displays in office settings, and how this influences their work processes and work culture. Based on my findings I would argue that fewer peripherals support a roomy lifestyle, but can at the same time be disadvantageous when there is, for instance, an “error” in the system and a user has a hard time fixing the problem. Issues with badly plugged or badly functioning cables are known as common trouble. In my approach, for instance, to test how users would respond in an office setting, a mock-up was created in which a few metal strips were attached to plexiglass, and interactions were recorded of users moving magnets on both sides to evaluate their mutual work progress and collaboration.

Rekimoto (2012)50 presented Squama - a modular visibility control platform that uses a depth camera to regulate and program walls and windows “to accommodate users’ changing needs”. The concept is a matured version of the previously described DataTiles system. Moreover, it is an upgraded adaptation that dynamically controls transparent surfaces to satisfy user needs, for instance privacy, and to “afford indoor comfort without completely blocking the outer view”. Both the DataTiles and Squama displays show a fascinating application that exhibits digital information and mixes in natural phenomena, giving people a glimpse of the state of the world around them in an architectural environment. Although there is much interest in hand gesture recognition systems and sensors, Freeman et al. (2012) pointed out concerns about the reliability of such procedures: “most methods either do not operate in real-time without specialised hardware or else are not appropriate for an unpredictable scene”.51 In recent years, however, a range of new gesture-recognising input devices has been introduced in the shape of handy small camera systems and software systems52 that enable better depth tracking to capture real-time body movements, as well as 3D camera-computers and 3D scanners53 for objects, people and structures. Rekimoto’s attempt to dynamically reshape real physical objects and entire transparent environments to communicate with the user is a convincing demonstration of new interface structures. These prototypes are highly inspirational examples for a programmable window system, and therefore this concept was investigated. One can envision, for instance, such flippable tiles on a transparent surface, connected to a depth sensor and changing into a darkened backdrop once a gesture is identified, as suitable for initiating a multimodal interface as described in the introduction scenario.

4.7. Multi-touch, NUIs, Surface Computing, and OUIs

NUIs are multi-touch technologies integrated into many devices and displays, often invisible to users, and capable of learning interactions, for example in mobile phones, copy machines and cash machines, amongst other devices. Buxton54 55 categorised multi-touch and gesture-based user interfaces into “three main segments: Kiosks, point-of-sale devices, and mobile devices.” According to Schöning et al. (2008), emerging solutions that support touch use “sensing techniques of light interruption, capacitive, camera-sensor optical based, and surface wave touch”.56 For example, SensorFrame is a multi-touch system that uses cameras to detect fingers and gestures, with the “ability to sense simultaneously the location of multiple points of contact” 57 (McAvinney, 1986). Buxton et al. (1999) demonstrated a 50-inch display using a direction-based gestural interaction feature that lets users, through simple finger body language, “cause animated images to play or stop”,58 enabling collaboration and discussion on designs through large-format electronic displays. A decade later he realised an interactive surface technology based on optical IR sensors embedded inside an LCD to enable multi-touch interaction, with a design approach bringing “capabilities of surface computing to thin displays” 59 (Buxton et al., 2009). Another project considered relevant is an optical multi-touch device60, a 2D frame with many smaller sensing modules that allows a full sensor to track hands and fingers via free-air gestural interaction (Moeller and Kerne, 2012).

Figure 13. Moeller & Kerne (2012)

Related to NUIs, but rather a subfield, is surface computing on multi-display environments. According to Wobbrock (2009), surface computing distinguishes itself in that “traditional input using the keyboard, mouse, and mouse-based widgets is no longer preferable; instead, interactive surfaces are typically controlled via multi-touch freehand gestures.”61 Notable projects include Microsoft’s LightSpace (2010) prototype62, which through depth cameras and a projector on the ceiling allows multimedia to be projected onto any solid surface, including the user’s body, turning architectural environments into interactive spaces. Another example is a ubiquitous display toolkit built around the Kinect that supports projection on any surface, enabling the “rapid development of multimedia-rich and multi-touch opportunities within a physical space”, usable for example on artefacts such as tables, beds and chairs (Hardy, 2012).63 64 At first sight, surface computing may be considered very close to the desired functionality of my prototype, but this system was developed for single-user interaction only and therefore limits the desired collaborative aspects as well as use from both sides of the screen. Analysing all these approaches was relevant for becoming aware of current touch technologies and for better understanding the broad possibilities available to users. The next step was to learn about displays on real-world objects that allow more realistic user interfaces.

Holman and Vertegaal (2008)65 profiled Organic User Interfaces (OUI), which capture a new philosophy promoting new materials, flexible shapes, and deformability. They explained that “an OUI is a computer that uses a non-planar display as a primary means of output, as well as input”. OUIs pursue three principles: input equals output, function equals form, and form follows flow. Instead of simulating objects and their motion on the screen, Parkes et al. (2008) propose KOIs as a new category of display devices that “attempt to dynamically reshape and reconfigure real physical objects, and perhaps entire environments to communicate to the user”.66 By definition, they assigned kinetic interaction design to the larger framework of OUI. Solutions that belong to this category include E-Ink (a paper-like display technology), Foldable Input Devices (double-sided foldable displays), flexible LEDs, and OLEDs (thin, flexible, transparent displays that are more efficient than LCDs). For example, Taptl (2015) developed an Interactive Transparent Display (ITD) suitable for indoor and outdoor conditions, aiming to help users with their daily needs (e.g. streaming cooking programmes) and to support a more spacious and convenient lifestyle (Figure 15). ITDs can serve as displays viewable from two sides and as touchscreen kiosks. WiFi is integrated, as are standard ports such as USB and HDMI. Based on the same concept, but mostly using LCD screens coupled with sensitive multi-touch functions, advertising agencies flooded the gadget market in 2012 with interactive transparent LCD screens offering immersive experiences through, for example, refrigerators68 and flat vertical stands. I would argue that Organic and Kinetic user interfaces and displays are likely to become the norm in the future. This chapter also provides insights into, and answers the question of, what kind of solutions are in place to use transparent surfaces efficiently.

4.8. Gesture recognition

The first framework for interpreting hand gestures synchronised with voice was introduced by McNeill (1996)69, who stipulated that “gesture and speech convey information about the same scenes, but each can include something that the other leaves out”. In his classification, he set out a method for collecting and coding gestures, dividing them into iconic, beat, metaphoric, and deictic gestures.70 Relatedly, sign language plays a crucial role in communication between people when voices cannot reach them. Deaf people use sign language as their principal method of communication. Hand poses represent the alphabets of sign languages in many different linguistic groups. Regarding HCI, Quek developed the elemental structure for a gesture vocabulary based on spatiotemporal vectors of static poses and dynamic gestures, suitable for translation into programmable data and signals for computers. Moreover, McNeill defined the basics that still apply today for coupling machines and humans through gestures: “Prospective vision research areas for HCI include human face recognition, facial expression interpretation, lip reading, head orientation detection, eye gaze tracking, three-dimensional finger pointing, hand tracking, hand gesture interpretation and body pose tracking.”71 Quek points out that gesture styles build on categories that originate in a broad range of information from varied disciplines such as semiotics, social and cultural anthropology, cognitive science and psycholinguistics.

However, the literature review has shown that many works within HCI address how machines couple with humans rather than how hand-gesture data should be analysed. For example, the novel system Videoplace tracks human gestures in a live video image, steering the “behaviour of graphic objects and creatures so that they appear to react to the movements of gesture to the participant’s image in real-time” (Krueger et al., 1985).72 Charade (Baudel et al., 1993), a system for remote control of objects using free-hand gestures, instead focuses on the advantages of hand gestures as a natural form of interaction for manipulating objects.

During my design process, for example, users repeatedly reacted to and manipulated information in ways that closely match McNeill’s classifications, such as beat and deictic gestures, while using the prototype. Other frequently recognised gestures were hand movements such as waving, pushing and swiping. During the iteration process, hand movements and gestures were recorded through the GRT gesture recognition toolkit. After the GRT, a cross-platform73 machine-learning library, was installed and connected to a Kinect sensor, gestures were spotted and learned by the system in conjunction with the transparent surface. As a final system, I imagined programming a KOI that depends mostly on pointing (deictic) gestures combined with hand movements only. Moreover, the imagined system should promote collaboration and allow multiple people to use it at the same time. RFID technology, for instance, provides such a control solution: once an RFID tag is scanned, additional people would be added to the system and be able to use the same interface through their gestures.
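The imagined multi-user control flow can be illustrated with a minimal sketch. It is not the prototype’s actual code: the class name `KoiSession`, the gesture labels and the tag identifiers are hypothetical, and the sketch assumes that every recognised gesture already arrives attributed to one RFID tag, which a real system would have to solve separately.

```python
from dataclasses import dataclass, field

# Hypothetical gesture labels, loosely following the gestures named in the text.
KNOWN_GESTURES = {"point", "wave", "push", "swipe"}


@dataclass
class KoiSession:
    """Tracks which RFID tags have joined the shared interface (illustrative only)."""
    active_tags: set = field(default_factory=set)
    log: list = field(default_factory=list)

    def scan_tag(self, tag_id: str) -> None:
        # Scanning a tag adds that person to the running session.
        self.active_tags.add(tag_id)

    def handle_gesture(self, tag_id: str, gesture: str) -> bool:
        # Accept a gesture only from a registered tag and only if it is a known label.
        accepted = tag_id in self.active_tags and gesture in KNOWN_GESTURES
        if accepted:
            self.log.append((tag_id, gesture))
        return accepted


session = KoiSession()
session.scan_tag("tag-sarah")                      # first user joins
first = session.handle_gesture("tag-sarah", "point")   # accepted
second = session.handle_gesture("tag-vicky", "wave")   # rejected: tag not scanned yet
session.scan_tag("tag-vicky")                      # second user joins the same session
third = session.handle_gesture("tag-vicky", "wave")    # now accepted
```

Keeping the set of registered tags separate from the gesture vocabulary mirrors the division of labour described above, with RFID supplying identity and the vision sensor supplying the gesture.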

5. SUMMARY

As Parkes et al. (2008) state in their essay about KOIs, “the entire real world, rather than a small computer screen, becomes the design environment for future interaction designers”.74 The idea appears promising due to recent developments in kinetic interaction design, architecture, and HCI engineering, amongst other fields. The related work suggests an interest in bringing computer interfaces into closer symbiosis with the natural world in a more dynamic architectural setting. At the same time, rapid prototyping efforts with new materials, as well as the availability on the market of more depth-sensing gadgets, multi-display environments, and switchable glass, are considered a positive sign. The literature review backs the validity of the idea that such an interface is useful, whether through transparent surfaces or walls in an architectural environment. Furthermore, the research provided more clues about what kinds of approaches, applications, materials and technology are presently available, and about what kinds of considerations, problems, and concerns are known. Additionally, user experiences from the design process of developing prototypes with freehand gestures and my own interface system are listed.

6. EXPLORATORY RESEARCH & FIELD STUDIES

6.1. Brainstorming, set up of goals and requirements

To kick off the exploratory research, a workshop was set up during the first week of this study with a small group of four interaction designers at Malmö University to activate interventions and prompt curiosity, ideating around the topic in order to set goals and requirements. To generate first leads and gain a better understanding, the participants of this brainstorming session were student colleagues. The questions were open-ended and unstructured, guided by a facilitator, and led to conversations and an exchange of practices around transparent surfaces and possible ways of interacting with such a compelling interface. In this context, various types of requirements were proposed and discussed, e.g. functional versus non-functional requirements, content, data, share-dealing application domain, natural lighting, operational environment, technical requirements, user needs, compatibility issues, characteristics of the intended user group and their abilities, and user and usability requirements for the proposed system. Further points to consider were peripheral awareness, usage benefits (both sides), challenges with transparent displays, factors of daylight, suitability as a weather forecast interface, holograms, augmented reality, virtual reality, and a good interface for collaborative communication. Another consideration was, for example, the user experience if the product is used from outside in a cold climate: goals are then needed relating to the display not freezing up or slowing down at sub-zero temperatures, and to the interface being operable by people wearing thick gloves. After collecting and sampling the primary research data, the first clues made it possible to start looking for relevant literature that would provide more grounds for learning about the subject. Photography and post-it notes were used for data collection.

During the following week, the decision was made to use the first methodology for a field study by producing a set of cultural probes to gather feedback and build awareness for this design project. In the University workshop, I laser-cut 5 mm thick transparent plexiglass sheets (24 cm wide x 19 cm high) to include in the kits. The CP sets were further equipped with whiteboard markers, a task (e.g. to write or paint on the board), a demographic inquiry, and a graphical flyer with a sender/reply option asking participants to answer only one question with a narrative, to enable a dialogue: “Imagine this sheet to be a computer screen interface accessible through hand gestures! How would you use it?” Furthermore, for inspiration, a set of implications that could relate to such usage was included on a piece of paper, listing terms such as content, input, output, devices, touch, kinetic, graphic, texture, form, message, game, art, sound, image and video. The probes were then given to 16 participants, comprising visual and street artists, philologists, cultural producers and families with children, to evoke inspirational data. Nine participants were living in a house in the countryside and seven in an apartment in the city; three were part of a design studio. The returned kits included self-recorded notes and revealed a variety of findings determining values, new ideas, hidden clues and activities relevant to the data gathering process. Follow-up interviews were conducted when the CPs were picked up.

The findings from participants living in the countryside (claiming to be closer to nature itself) differed from the ideas of people residing in the city. For example, one family with three children imagined that the sheet could be used as a “multimodal” display showing a clock, but also pop-up information on when buses would arrive or depart, weather updates for the day, and useful advice on which plants and vegetables could be planted or harvested. Also, 2 persons questioned what the point was, suggesting that such a product might simply be useless. One male participant liked the idea very much and felt that this way the house would always be kept safe and protected from theft and burglaries. Or at least, he said, such a “transparent computer” could instantly and ubiquitously trigger a silent alarm upon a burglary and contact the police by sending a live feed. 2 teenage girls came up with a social interaction scenario: to express their intentions, they drew images expressing their feelings on the other side of the sheet to communicate with each other.

Figure 18. Cultural Probes kit (Source: author)

Another 2 participants could well imagine such a display being used as a TV and multimedia (photo and video) display, whereas one other said they would use it as a screen for making art. For example, one of the designers liked the idea of using the transparent display as a kind of blueprint projector to pinpoint, in a 3D environment, sculptures that he would create with his hands on the other side of the screen. Overall, people liked playing with the thought that a computer could be made of transparent material, as well as using a computer without a mouse. People also felt comfortable with the idea of fewer cables. Participants had mixed feelings about using the interface with hand gestures versus touching the screen with their fingers. One argument they stated in the follow-up interviews was that by touching the screen they would be sure that the function they had in mind would be executed. On the other hand, they were not sure how reliable a touch-free interaction would be. But if it worked, it would be “cool” and user-friendly, as they could use the interface even from a short distance. The participants who received the probes were aged 12 to 70, with an average age of 40.

The notions and discoveries made through this methodology disclosed a wider range of possibilities than a personal approach would ever expose in the ideation process. To analyse the data from the probes, I used an affinity diagram to sort ideas and diaries, transcribing them onto cards. All notes were then organised into groups based on their natural relationships, and I considered how the groups affect each other. This method significantly helped in the interaction process to establish incentive requirements, to learn about the target group, and to understand what kind of means an interactive product based on kinetic user interaction could provide. This practice also encouraged the creation of personas to focus further field studies on users’ behaviour and tasks.
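The card-sorting step of an affinity diagram can be sketched in a few lines. This is only an illustration of the grouping idea: the cards paraphrase ideas mentioned in this chapter, while the theme labels and the function name `group_cards` are hypothetical and do not reproduce the groups actually formed in the study.

```python
from collections import defaultdict

# Illustrative cards only: (note, theme) pairs paraphrased from ideas in the text.
# The theme labels are hypothetical, not the groups actually used in the study.
cards = [
    ("show bus arrival and departure times", "information"),
    ("give weather updates for the day", "information"),
    ("trigger a silent alarm on burglary", "security"),
    ("use as TV and photo/video display", "media"),
    ("use as a screen for making art", "media"),
]


def group_cards(cards):
    """Group note cards by theme, keeping the order in which cards were added."""
    groups = defaultdict(list)
    for note, theme in cards:
        groups[theme].append(note)
    return dict(groups)


groups = group_cards(cards)
for theme, notes in groups.items():
    print(f"{theme}: {len(notes)} card(s)")
```

In practice the themes emerge from the cards during sorting rather than being assigned beforehand, so the fixed labels here are a simplification.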

Figure 19. Testing an imaginable

6.2. The Scenario workshop

To understand prospective users better while designing a futuristic product, and to apply a framework for observation in the field, the following types of user were created in a use scenario. This narrative was used to explore my design concept by making more concrete the users who will use my design and the types of things they will do with it. The story was employed in a workshop held in a townhouse used partly as a home and partly as an office. The participants were a family with three adults (aged 48-54) and four teenagers (aged 15-17).

Persona One:

Name: Sarah Gold
Age: 48
Occupation: HR-Manager
Status: Divorced
Location: London
Technical expertise: Intermediate

Persona Two:

Name: Vicky Gold
Age: 16
Occupation: Grammar school student
Status: Single
Location: London
Technical expertise: Expert

Sarah recently moved with her teenage daughter Vicky into a newly built residence of semi-detached houses with large windows, surrounded by a small garden with a view of the street. All houses are technologically advanced and equipped with a smart home automation system connected to EC windows to save energy, control lighting, regulate privacy towards the outdoors, and enable a home theatre entertainment system directly on the window plane. Sarah has just returned home from work. The digital interface of the smart home control system is ubiquitously integrated into the window surface and is activated by flick air gestures of the hand alone. Standing about 1.5 m away from the screen, Sarah lifts her hand and points toward the window, causing part of the surface to darken and a user-friendly interface to be projected. A horizontal, text-based information widget opens promptly, and in the news ticker Sarah discovers a personal message from her daughter Vicky, who is at the shop buying groceries to prepare dinner for them tonight. Being exhausted, Sarah wants to listen to her favourite music and sits down on the sofa to relax. She waves one hand towards the smart home interface sensor, and a panel with icons appears. She finds the home stereo and a few playlists and starts one. The music begins to play. Sarah enjoys the kinetic interaction experience that the smart home system provides. She no longer needs to walk around the house to press and authorise many different devices, as the smart home system ubiquitously unites everything needed in one spot, fading naturally away into the periphery of the architectural setting.

Figure 21. Personas: Vicky & Sarah Gold (Source: Creative Commons)

The above scenario was used to collect additional qualitative data from multiple viewpoints, and also to problematize existing settings against envisioned future settings (Rogers and Belloti, 1997).75 For example, imagine a residence with a smart home control system: how efficient and useful would such a system be in a home or office setting? Considering some of my initial user goals for the KOI, such as calm technology, usage benefits on both sides all year round, collaborative communication, and touch-free interaction, the next reasonable questions are: Does such technology appear to be central to the users' goals? What potential do the users see in this kind of interface design and advanced technology? How can people through kinetic interaction use digital interfaces on transparent surfaces?

All users agreed on two things. First, such an interface would be a perfect medium for dealing with matters regarding the weather. Second, if this NUI provided a consistent, immediate, and seamless response, the technology and its interactive user experience would have a chance of gaining wider popularity among users.

Moreover, the structured questions covered the participants' present preferences: How do they use their computers, mobile devices, preferred applications, and social media? How important are security and privacy measures? How do users explore and use content? What do people do first when starting their devices? How do people operate their computers, and how their mobile devices? One could argue that such a futuristic screen setup, embedded into the architectural environment, could eventually replace the stand-alone devices that are the norm today, such as a single computer with an external screen or a TV.

During the workshop, the participants were encouraged, based on the narrative, to envision how they would use this kind of product. The workshop included lo-fi paper prototypes with hand-drawn sketches pertaining to the use scenario. Drawings were also attached to the bathroom mirror, the kitchen fridge, doors, the window wall, and a stand in the middle of the office room. Each sketch included the leading research question: How can people through kinetic interaction use organic user interfaces on transparent surfaces? All participants received pens and were asked to write down their ideas, annotate the drawings, explain the possible use, and give rough usability feedback on each situation (findings are shown in Figure 22 below). The data was recorded in written notes and digital photographs while observing each participant imagining the scenario. After this procedure, semi-structured interviews and an open discussion were held with the participants about how they would perceive such a change in their environment.