IT 12 056

Degree project, 30 credits

November 2012

Large Scale Multimedia Messaging Service Center with Optimized Database Implementation

Erdem Aksu

Faculty of Science and Technology, UTH unit. Visiting address: Ångströmlaboratoriet, Lägerhyddsvägen 1, Hus 4, Plan 0. Postal address: Box 536, 751 21 Uppsala. Telephone: 018 - 471 30 03. Fax: 018 - 471 30 00. Website: http://www.teknat.uu.se/student

Abstract

The standardization forum 3GPP has specified the Multimedia Messaging Service (MMS) standard, including an MMS Relay-Server that allows users to send and receive messages with multimedia content such as text, images, audio and video. A large scale Multimedia Message Service Relay-Server implementation needs an efficient database solution. The database should be able to support storage and manipulation of data for millions of transactions. Consistency and availability should be ensured under heavy traffic load to prevent data loss and service delays. In this thesis, I present the implementation and extended evaluation of a store and forward mechanism for messages kept in the MMS Server, and I propose a solution that uses Berkeley DB as a back-end to the Mnesia DBMS to achieve higher storage efficiency. I show that it is feasible to replace the database back-end of Mnesia with Berkeley DB while remaining transparent to the application level and providing an efficient message store with higher throughput under heavy traffic.

Sponsor: Mobile Arts AB

Examiner: Lisa Kaati

Subject reviewer: Tore Risch

Supervisor: Martin Kjellin

Preface

I would like to give special thanks to my advisor, Martin Kjellin, for his support, advice and attentive guidance throughout this thesis work. I would also like to thank Lars Kari for being a valuable source of ideas and for his insightful comments that helped me accomplish this thesis. I would like to express my gratitude to Tore Risch, my reviewer at Uppsala University, for reviewing my work and sharing his worthwhile opinions, and also to Lisa Kaati, my examiner at Uppsala University, for her invaluable guidance that helped me complete this work. Furthermore, I would like to express my thanks to all my colleagues at Mobile Arts for their assistance, motivation and the opportunity they gave me to work on this thesis. Finally, my warmhearted thanks go to my adored mother and father. Their love and valuable support gave me the highest motivation and courage to achieve my goal. I am grateful to all the people who supported me. Without their help, it would not have been possible to successfully complete this study.

Contents

1 Introduction

1.1 Motivation and Problem Statement

1.2 Method

1.3 MMSC Development

1.4 Limitations

1.5 Alternative Approaches

1.6 Contributions

1.7 Thesis Structure

2 Background

2.1 Erlang/OTP

2.2 Mnesia

2.3 Berkeley DB

2.4 Port Drivers

2.5 Multimedia Message Service (MMS)

2.6 Store and Forward

2.7 MA Framework

2.8 Related Work

3 Design and Implementation

3.1 Transparency

3.2 Abstraction Level

3.3 Berkeley DB - Dets Interface

3.4 MMSC Design

3.4.1 MMSC Front-End

3.4.2 MMSC Back-End

4 Evaluation

4.1 Test Platform Setup

4.2 Functional Testing

4.2.1 Functions of Mnesia Exports

4.2.2 Functions of System Components

4.3.1 mnesia_meter

4.3.2 mnesia_tpcb

5 Conclusions and Future work

5.1 Discussion

5.2 Future Work

Appendices

A DB Driver Implementation

A.1 Erl_driver Usage

A.2 BDB Library Usage

B TPC-B Test Outputs

B.1 TPC-B on BDB Back-End

B.2 TPC-B on DETS Back-End

List of Figures

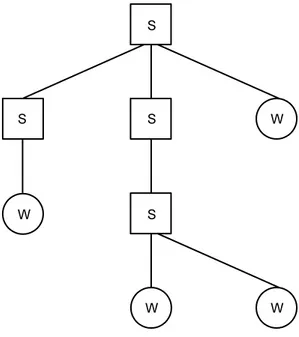

2.1 Supervision Tree . . . 10

2.2 Implementation of MMS

MInterface Using WAP Gateway [4] . . . 13

2.3 Implementation of MMS

MInterface Using HTTP Based Protocol Stack [4]

13

2.4 Example MMS Transaction Flow . . . 14

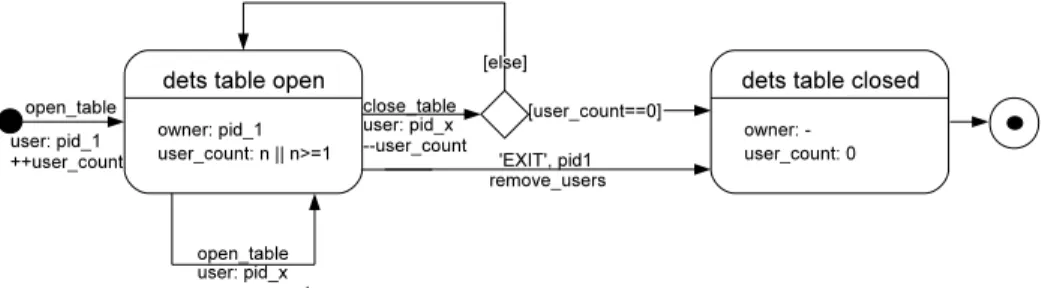

3.1 Dets Table States . . . 21

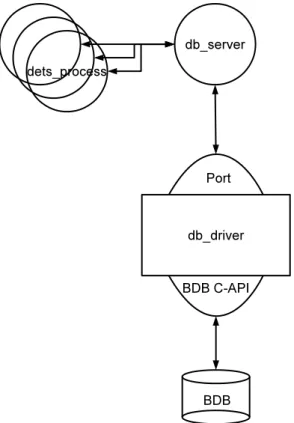

3.2 BDB-Erlang Communication . . . 22

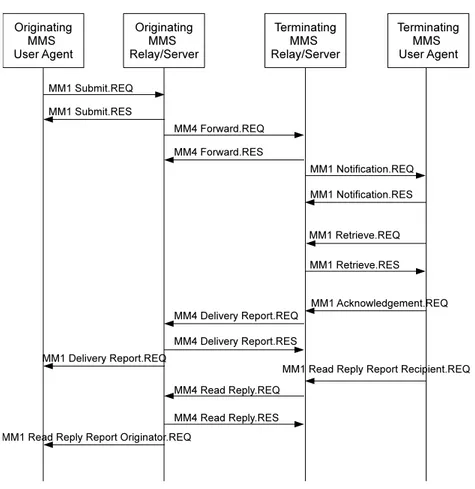

3.3 Transaction Call Flow of MMS . . . 23

3.4 Data Flow Diagram of MMSC . . . 24

3.5 Entity Relationship between mmsc subscriber and mmsc profile . . . 26

3.6 MMSC Back-End Modules and Interactions . . . 28

3.7 MM Notification Module State Machine . . . 34

4.1 Test Environment Setup . . . 36

4.2 Column Chart for mnesia meter Results . . . 40

List of Abbreviations

BDB: Berkeley DB

ESME: External Short Messaging Entity

GSM: Global System for Mobile Communications

IMSI: International Mobile Subscriber Identity

MA: Mobile Arts

MM: Multimedia Message

MMS: Multimedia Message Service

MMSC: Multimedia Message Service Center

MSISDN: Mobile Subscriber ISDN Number

SMS: Short Message Service

Chapter 1

Introduction

As the capabilities of mobile networks and mobile phones evolve, demand for the services provided by telecommunication systems increases. This increasing demand means that services must be provided to a rapidly growing number of users. Multimedia Messaging Service is a popular and ubiquitous service provided by mobile operators around the world. The standardization forum 3GPP specified the Multimedia Message Service (MMS) [6, 7], which allows service users to exchange a set of media types including text, image, audio, video and possible future media formats. Like the Short Message Service (SMS), MMS is a non real-time service that does not require real-time delivery of messages to recipients. Since the terminals of MMS are mobile phones, these devices might be turned off or might lack signal reception. This calls for additional delivery mechanisms, one of which brings the requirement to store multimedia messages before delivery. This thesis studies possible approaches to an efficient storage mechanism for a combined implementation of the MMS Relay-Server, in which the MMS Relay functionality is implemented on the front-end and supports services such as interaction with MMS Clients and messaging activities with other available messaging services, whereas the MMS Server functionality is implemented on the back-end and provides storage services for multimedia messages.

1.1 Motivation and Problem Statement

Scalability is a significant aspect of telecommunication systems, since the number of users of a service can grow arbitrarily. The number of users of a system differs from operator to operator, and parameters such as the population of the service area, culture, economy and legal regulations play an important role in the prevalence and popularity of the service. Efficiency is key to achieving scalability, because it increases the throughput of the system. An efficient MMS Relay-Server should handle incoming traffic by processing and transmitting messages. Since the transmission of messages depends on human interaction, the MMS Server requires persistent storage of messages under heavy traffic load. Furthermore, subscriber based information is required while processing messages, so a subscriber database is needed to keep user data. Given these persistent storage requirements, database access appears to be the bottleneck that affects the system throughput. Thus, the throughput of the MMS Relay-Server can be increased with an efficient storage back-end implementation.

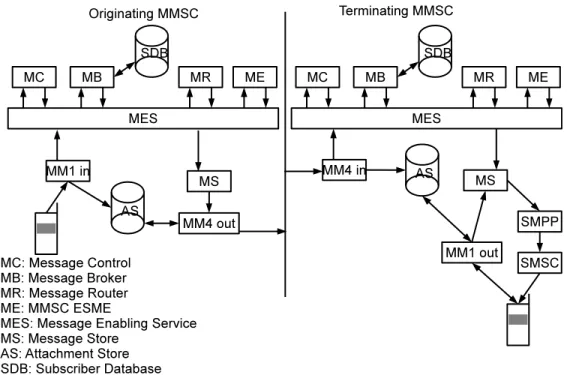

The Multimedia Message Service Center (MMSC) presented in this work combines MMS Relay and MMS Server and operates as a single entity. The MMSC is divided into two subordinate components, referred to as the front-end and the back-end. The MMSC front-end and MMSC back-end undertake the MMS Relay and MMS Server functionalities, respectively. A subscriber database for the MMSC did not need to be developed from scratch, but its data model had to be designed and implemented using an existing commercial subscriber database framework, which was provided to me by Mobile Arts AB, the company that supported and supervised this work. On the other hand, a new mechanism for storing and forwarding needed to be developed to handle the transmission of multimedia messages (MMs). Although MMS is a non real-time messaging service like SMS, MMS comes with a significantly different delivery mechanism than SMS. A subordinate application under the MMSC back-end needs to be developed to implement this delivery mechanism. This application requires an efficient database implementation, and it should be based on persistent copies. Since the time between arrival and delivery of an MM is arbitrary, the MMSC has to keep the MM without causing cumulative delays under continuous traffic. Persistent storage options bring the overhead of disk access, but disks provide the space required to store large amounts of data. To achieve efficiency, I investigate the persistent storage options that can ensure consistency for the MMSC back-end.

To carry out this thesis work, Erlang/OTP was chosen as the development platform. Erlang is distributed with the Open Telecom Platform (OTP), which includes the Mnesia DBMS. Mnesia is therefore ready to use by system developers, since it does not require any integration through external interfaces. Thus, I focus on the persistent storage options of the Mnesia DBMS.

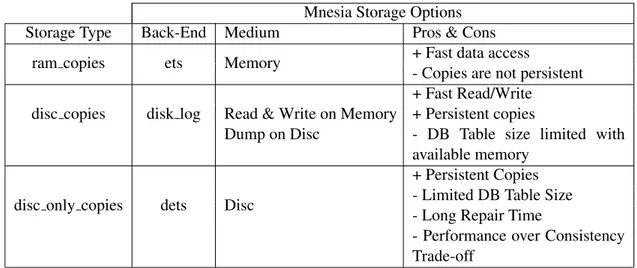

Mnesia provides a non-relational, key/value database service to Erlang developers, and it also makes it possible to choose which physical storage medium is used. Mnesia supports two disk based storage options and one memory based storage option. For any replica of a table on any given Erlang run-time system, which is called a "node" in the OTP context, the storage type is defined by providing the type and a node list as an option to the table creation function of Mnesia. The possible options are disc_copies, disc_only_copies and ram_copies. For persistent storage, Mnesia offers the disc_copies option, which is based on the logging facility provided in OTP, and the disc_only_copies option, which uses the disk based term storage module Dets.

The Mnesia disc_copies option dumps the logs directly to disk, but it also keeps all of the data in memory and always reads from memory. Thus the disc_copies option is much faster than disc_only_copies, but it limits the database size to the available memory of the system. To write to and read from disk, I needed to use the disc_only_copies option, which uses Dets tables.

| Storage Type | Back-End | Medium | Pros & Cons |
|---|---|---|---|
| ram_copies | ets | Memory | + Fast data access; - Copies are not persistent |
| disc_copies | disk_log | Read & write in memory, dump on disc | + Fast read/write; + Persistent copies; - DB table size limited by available memory |
| disc_only_copies | dets | Disc | + Persistent copies; - Limited DB table size; - Long repair time; - Performance over consistency trade-off |

Table 1.1: Mnesia Storage Options

The Dets module, which is used to implement disc_only_copies in Mnesia, provides key-value storage of Erlang data types by writing into DAT files on disk. A Dets table is a collection of Erlang tuples. A tuple is a data type that groups an arbitrary number of other terms. A record in a Dets table is identified by a specified element of the tuple, which serves as the key. The file size of a Dets table is limited to 2GB (4GB with 32 bit integer file offset [8]), and Mnesia offers table fragmentation in case of larger storage needs. Table fragmentation allows a table to be split into a specified number of parts. The table user accesses a fragmented table through the access module "mnesia_frag", which computes a hash value from the record key and finds the right fragment to read. The table access is then done as usual on the selected fragment. Dets tables must be opened before any operation is performed on them, and they should be closed properly. In case of an abnormal termination of the Erlang run-time system, a Dets table will not be closed properly, and the Dets module will then automatically repair the table, which takes a significant amount of time [11]. It is stated that Dets files must be opened with automatic repair disabled; otherwise Mnesia may assume that the tables were closed properly [9] and hence skip repairing the corrupted disc files. Dets uses a buddy algorithm to allocate space in the Dets file and keeps the space allocation scheme in memory. Since this data structure is lost if the Erlang virtual machine crashes, one reason for the long repair time is that the space allocation scheme of the disc file has to be rebuilt in memory during system recovery.
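
The fragment lookup described above can be illustrated with a small sketch. It is written in Python rather than Erlang, and it uses a plain modulo over a CRC32 checksum as a stand-in hash. The function name `fragment_for` and the fragment naming scheme are assumptions made for illustration; the hashing that mnesia_frag actually applies may differ.

```python
import zlib


def fragment_for(table, key, n_fragments):
    """Pick the fragment that holds `key` (simplified stand-in hashing)."""
    # Hash a stable textual form of the key so the result is repeatable.
    digest = zlib.crc32(repr(key).encode("utf-8"))
    index = digest % n_fragments
    # Illustrative naming: the first fragment keeps the table name,
    # the others get a numeric suffix.
    return table if index == 0 else f"{table}_frag{index + 1}"


# The same key always maps to the same fragment, so reads find what writes stored.
for msisdn in ("46701234567", "46707654321", "46700000001"):
    print(msisdn, "->", fragment_for("mm_store", msisdn, 4))
```

Because the fragment is derived from the key alone, the caller never needs to know how many records each fragment holds.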

When Dets tables are used for storing MMs in the store and forward mechanism, data is inserted and deleted for every traffic case. One expected drawback is that internal fragmentation in the allocation scheme grows when the buddy algorithm is used, unlike in the case of a dynamic allocation algorithm. Growth of this internal fragmentation results in a larger space allocation scheme being kept in memory.
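
To make the fragmentation concern concrete, the following Python sketch assumes a buddy scheme in which every request is rounded up to the next power of two. The message sizes are invented for illustration and are not measurements of Dets.

```python
def buddy_block_size(request_bytes):
    """Smallest power of two that can hold the request."""
    size = 1
    while size < request_bytes:
        size *= 2
    return size


def internal_fragmentation(requests):
    """Bytes allocated but left unused across all requests."""
    return sum(buddy_block_size(r) - r for r in requests)


# Hypothetical message sizes in bytes (illustrative, not measured data).
requests = [300, 1025, 4096, 70000]
for r in requests:
    print(r, "->", buddy_block_size(r))
print("unused bytes:", internal_fragmentation(requests))
```

A request that is only slightly larger than a power of two wastes almost half of its block, which is why frequent inserts and deletes of variably sized messages are a plausible worst case for this scheme.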

Mnesia leaves the decision between ensured consistency and performance to system designers through configuration options. The use of Dets in Mnesia can be configured to select the strategy for data dumps. Mnesia can dump transactions directly to disk to improve performance, but system failures may then result in data inconsistency. Alternatively, temporary transaction logs can be used to dump transactions to prevent inconsistency, but this strategy reduces dump speed. The Mnesia configuration of the MMSC uses the dump_log_update_in_place strategy, which is also the default behavior for Dets tables.

1.2 Method

In this work I propose an efficient database solution for the MMSC that replaces the Mnesia back-end, so that the MMSC back-end can use an alternative storage medium while the Mnesia front-end is kept unchanged. This solution makes it possible to test the MMSC both with the current Mnesia back-end and with the newly developed back-end without changing the MMSC implementation. As discussed above, the target back-end to replace is the Dets module.

In this section, I analyze how to integrate a new back-end into Mnesia and which database system should replace Dets. Since the current Mnesia front-end is to be kept, using other database management systems through alternative Erlang interfaces defined specifically for those systems is not a viable way to reach my goal, mostly because such interfaces bring extra overhead. Interfacing with an external DBMS can become the bottleneck of database access.

The interface to the selected database should be placed at a single position, so that Mnesia can call this interface to perform operations. Mnesia functions use the callbacks of the dets module to perform consistent storage operations. One idea is to replace the dets calls with calls to the interface module of the new back-end. The drawback of this approach is that Mnesia does not access dets through one medium; instead, Mnesia modules use dets callbacks directly. Rather than routing dets calls to another module or interface, it is more feasible to keep the same dets callback functions and route table operations inside dets. The dets module already offers a good framework to start with, and its callback functions are ready. With the current dets module, the only modification needed is to redirect disc accesses to the new back-end. Mnesia starts the server in the dets module that handles database operations. By making changes in the server code, it is possible to keep all Mnesia functionality and hide the new back-end from Mnesia. Since the dets callbacks are also kept, a dets user will not notice any change in behavior. With these changes, the server loop of any opened dets table forwards operations to the interface of the new back-end, which accesses the external database.
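
The idea of keeping the caller-facing operations and redirecting only the storage access can be sketched as follows. This is a Python illustration of the design, not the Erlang code of the dets module; the class names `TableServer`, `DictBackend` and `FileBackend` and their methods are hypothetical.

```python
import json
import os
import tempfile


class DictBackend:
    """In-memory stand-in for the original storage."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)

    def delete(self, key):
        self._data.pop(key, None)


class FileBackend:
    """Disk based stand-in for an embedded key-value store (one JSON file)."""

    def __init__(self, path):
        self._path = path
        if not os.path.exists(path):
            self._save({})

    def _load(self):
        with open(self._path) as handle:
            return json.load(handle)

    def _save(self, data):
        with open(self._path, "w") as handle:
            json.dump(data, handle)

    def put(self, key, value):
        data = self._load()
        data[key] = value
        self._save(data)

    def get(self, key):
        return self._load().get(key)

    def delete(self, key):
        data = self._load()
        data.pop(key, None)
        self._save(data)


class TableServer:
    """Keeps the caller-facing operations fixed and forwards them to a back-end."""

    def __init__(self, backend):
        self._backend = backend

    def insert(self, key, value):
        self._backend.put(key, value)

    def lookup(self, key):
        return self._backend.get(key)

    def delete(self, key):
        self._backend.delete(key)


def run_traffic(server):
    """Caller code is identical regardless of the back-end in use."""
    server.insert("msg-1", {"to": "46701234567", "size": 2048})
    found = server.lookup("msg-1")
    server.delete("msg-1")
    return found, server.lookup("msg-1")


path = os.path.join(tempfile.mkdtemp(), "table.json")
print(run_traffic(TableServer(DictBackend())))
print(run_traffic(TableServer(FileBackend(path))))
```

Both calls print the same result, which is the sense in which the change of back-end stays invisible to the code above the server.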

This newly introduced interface should map forwarded dets operations to external database operations. To ease the mapping and to support similar behavior, the external database should support operations similar to those of dets, which operate on keys and values. For this reason a key-value store is a more suitable choice than a relational database management system as the external back-end. There are many key-value stores offering different features for a variety of purposes. The objective in my case is to place an on-disc storage back-end behind the dets module and to compensate for the weak points of dets. An embedded store on local disk is a viable replacement for dets since it does not bring communication overhead. Berkeley DB (BDB) provides these features with its persistent disk storage; it is a reliable system that is used commercially, and it is well documented. Berkeley DB meets the storage capacity needs and supports a hash table based access method like dets, a B+ Tree access method, which I use in my configuration, and the recno access method, which is built on B+ Tree and is able to store sequentially ordered data values [17]. I do not use the recno type in this thesis work, but it is a promising solution for dets to support ordered_set tables. BDB takes a different approach from Mnesia to dumping updates to disk, which can improve consistency.

BDB's approach to persistent storage addresses the weak points of dets. It uses write-ahead logging while dumping transactions to disc. With this approach, BDB writes a log entry containing the state before and the state after to the log file for any data change that is going to be made to the database. This log entry is guaranteed to be flushed to disk before any changed data page is written to disk. When the application commits the transaction, BDB writes the data pages in memory to disk. A similar safety mechanism in Mnesia is obtained by setting the "dump_log_update_in_place" configuration parameter to false. The significant difference between the two approaches is that Mnesia dumps the transaction log to temporary copies of the original database files instead of keeping a log file, and then renames the temporary files. Since dets files are organized as linear hash tables, a write operation requires a key lookup, whereas BDB write-ahead logs are written sequentially and data pages are written by random access to memory [11].
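
The logging order described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Berkeley DB code: the class `WalStore` is hypothetical, the data lives only in a dictionary, and transactions, checkpoints and torn log records are ignored.

```python
import json
import os
import tempfile


class WalStore:
    """Toy key-value store that logs every change before applying it."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()

    def _replay(self):
        # Recovery: rebuild the state by re-applying the "after" values in order.
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path) as log:
            for line in log:
                entry = json.loads(line)
                self._apply(entry["key"], entry["after"])

    def _apply(self, key, value):
        if value is None:
            self.data.pop(key, None)
        else:
            self.data[key] = value

    def put(self, key, value):
        entry = {"key": key, "before": self.data.get(key), "after": value}
        # 1. Append the log entry sequentially and force it to disk.
        with open(self.log_path, "a") as log:
            log.write(json.dumps(entry) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # 2. Only then change the data itself.
        self._apply(key, value)

    def delete(self, key):
        self.put(key, None)


path = os.path.join(tempfile.mkdtemp(), "wal.log")
store = WalStore(path)
store.put("msg-1", "stored")
store.put("msg-1", "delivered")
store.put("msg-2", "stored")
store.delete("msg-2")

# Simulate a crash: discard the in-memory state and recover from the log alone.
recovered = WalStore(path)
print(recovered.data)
```

The log is only ever appended to, so writing it is sequential, while the recovered state depends on nothing except the log's contents.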

1.3 MMSC Development

The major task in developing an MMSC back-end is to design and implement a message store application that undertakes the storage of messages and their transmission to recipients. The message store described in this work should not be confused with a configurable network based storage such as a message inbox. Instead, the message store is a temporary storage used in the store and forward mechanism that keeps MMs until delivery to the recipient. The storage that provides a subscriber inbox is specified in 3GPP TS 23.140, where it is called MMBox [7], and it is out of the scope of this work.

MMSC front-end development is not the focus of this thesis work, since it is in the scope of another project. As an MMS Relay-Server, the MMSC uses various protocols, specified in 3GPP TS 23.140 [7], to interconnect with external entities. Of these protocols, MM1, MM4 and MM7 were in the scope of the MMSC front-end project [18]. The front-end was not ready to be used during my development, but I had the opportunity to use the MM1 and MM4 protocol stacks, which were needed to run the traffic cases that are in the scope of this thesis. I implemented the rest of the front-end components so that they merely simulate the job they are supposed to do.

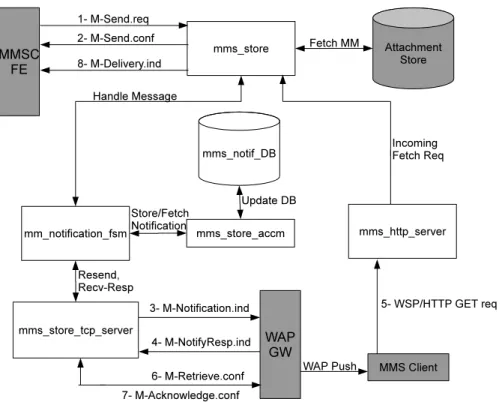

Upon receiving an MM, the message is stored and an MM1 notification message is pushed to the recipient. The WAP Push [3] mechanism over SMPP [2] is used for notification messages. Once the subscriber has been notified by receiving an SMS, the MM can be downloaded to the terminal over HTTP. This traffic case can get complicated when notification delivery fails, and various actions can be taken on the traffic depending on the reason for the failure. Handling of MM traffic with storage is implemented in the same way as in the SMSC product of MA, with similar scheduling, queueing and bearing mechanisms. These mechanisms and the message store together compose the so called mms_store application, which is the back-end of the MMSC.
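
The traffic case above (store, notify, retry on failure, remove after download) can be sketched in Python as follows. This is a simplified model, not the mms_store application: the class `MessageStore`, the retry limit and the policy of dropping a message after the last failed attempt are all assumptions made for illustration.

```python
from collections import deque


class MessageStore:
    """Toy store and forward back-end: keep each MM until it is fetched."""

    def __init__(self, send_notification, max_attempts=3):
        self._send = send_notification   # callable(recipient, msg_id) -> bool
        self._max_attempts = max_attempts
        self._messages = {}              # msg_id -> (recipient, content)
        self._retry_queue = deque()      # (msg_id, attempts already made)

    def submit(self, msg_id, recipient, content):
        # Store first, then notify, so the MM survives a failed notification.
        self._messages[msg_id] = (recipient, content)
        self._notify(msg_id, attempts=0)

    def _notify(self, msg_id, attempts):
        recipient, _ = self._messages[msg_id]
        if self._send(recipient, msg_id):
            return
        if attempts + 1 < self._max_attempts:
            self._retry_queue.append((msg_id, attempts + 1))
        else:
            # Assumed policy: give up and drop the MM after the last attempt.
            del self._messages[msg_id]

    def run_retries(self):
        # One scheduling round: retry every notification queued so far.
        for _ in range(len(self._retry_queue)):
            msg_id, attempts = self._retry_queue.popleft()
            if msg_id in self._messages:
                self._notify(msg_id, attempts)

    def retrieve(self, msg_id):
        # The recipient downloads the MM; the temporary copy is then removed.
        _, content = self._messages.pop(msg_id)
        return content


# Hypothetical notifier: the first attempt fails (handset unreachable),
# the second one succeeds.
outcomes = iter([False, True])
store = MessageStore(lambda recipient, msg_id: next(outcomes))
store.submit("mm-1", "46701234567", b"image bytes")
store.run_retries()
print(store.retrieve("mm-1"))
```

Storing before notifying is the essential ordering: whatever happens to the notification, the message itself is already safe in the store.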

1.4 Limitations

This thesis focuses on MMSC back-end development and on investigating efficient storage back-end alternatives for the MMSC. Since the MMSC combines MMS Relay and MMS Server functionalities, the services and capabilities of MMS Relay and MMS Server are divided into the MMSC front-end and back-end logical entities. Communication between front-end and back-end is done via a local Erlang application that implements an MA proprietary communication protocol. Although MMSC front-end development is out of scope, the subscriber database resides at the front-end, so I develop a prototype of the MMSC front-end that is capable of simulating traffic.

Transcoding between media formats of multimedia message content is excluded from the scope of this thesis because its complexity would increase the amount of work as well as the amount of code. According to the system needs, I limit the possible database options by excluding relational database management systems; the database options analyzed in this work therefore include only key-value based database management systems.

1.5 Alternative Approaches

To achieve the goal of this thesis, the work can be separated into two major tasks. The first is to integrate an external DBMS into the Mnesia back-end as a persistent and consistent storage option that is an alternative to the Dets storage currently used by Mnesia, and to evaluate the alternative back-end. The second task is to develop the MMSC back-end for multimedia message storage and to evaluate the system with the alternative Mnesia implementation.

There are alternative ways to accomplish the first task, which I did not choose for various reasons. The main difference would have been selecting a DBMS other than BDB. I investigated some non-relational databases such as CouchDB and Scalaris. If I had chosen CouchDB to replace dets, I would have needed to implement an HTTP client for the JSON API of CouchDB. I could have taken the same approach with Scalaris, or I could have used the Java or Python APIs provided by Scalaris. The APIs of these databases ease scalability for distributed systems, but they do not bring performance advantages for local data storage since they add communication overhead, whereas I can use dynamically linked shared libraries to communicate with BDB. Another issue with Scalaris is that it does not support persistent storage. Without persistent storage, data consistency would be hard to preserve in the MMSC system setup, since the MMSC will not be running on a distribution topology suitable for the Paxos protocol, which Scalaris uses to ensure consistency. Considering the interfacing options with back-ends and the consistency concerns, and taking into account that BDB has good documentation and support as well as broader usage in practice, I eliminated these databases. Detailed information about CouchDB and Scalaris is presented in the related work section 2.8.

Another very promising way to interface with an external database would have been Mnesiaex. The Mnesiaex project introduces a new behavior that can be patched into Mnesia [10]. It provides an extensible storage API that helps to integrate another database system as a back-end to Mnesia. Mnesiaex would offer a better structure at the Mnesia back-end since it brings a new replica type that can be used during database table creation. I could not use Mnesiaex because it is not supported by all Erlang distributions and it would make the MMSC dependent on a project at an early stage of development. Additionally, it does not provide the level of transparency that I aim to achieve. More information about these databases and my reasons for not selecting them is given in the related work section 2.8 of the second chapter.

For my second task, the development of the MMSC back-end, I followed the requirements given to me by MA, and thus I did not consider alternative development approaches. This does not mean that I arrived at the definitive system design for the MMSC, only that there were no competing paths to choose between. Instead, the system design evolved through iterative development cycles.

1.6 Contributions

This work shows the feasibility of replacing Dets at the Mnesia back-end with a robust key-value database by extending Erlang with linked-in drivers in order to use the API of an external database management system. Berkeley DB, which is an embedded database, replaces Erlang's term storage without bringing communication overhead. I evaluate the performance of the BDB back-end by comparing it to the original Mnesia with the Dets back-end, using disc_only_copies as the disk storage option. My evaluation shows that the BDB back-end outperforms Dets in terms of transactions per second (TPS), with an average improvement of ≈ 90% based on the results of TPC-B benchmarking.

The MMSC back-end and the MM store application are ready to be integrated with the MMSC front-end, since the implementation is modular and compatible with the Mobile Arts framework. Functional tests show that the store and forward mechanism works as expected when the system runs in interaction with an SMS Center, where MM Notification messages are delivered through the SMS Center to MMS clients. The resulting MMSC is able to operate on both database back-ends, with Dets and BDB tables.

1.7 Thesis Structure

The thesis continues with chapter 2, which gives background information on the programming language, the database management systems, system concepts and related work. The system design and its implementation are presented in chapter 3, followed by the evaluation of the work in chapter 4. Chapter 5 discusses conclusions and future work.

Chapter 2

Background

2.1 Erlang/OTP

Erlang is an open source, functional programming language and run-time environment. One of the major features of Erlang is its support for concurrency independent of the operating system. Concurrency is provided by light-weight Erlang processes, which do not share memory and communicate by message passing. Fast context switching between processes and the ease of developing deadlock-free code let users experience almost linear speedup on multicore machines [14].

Erlang comes with OTP (Open Telecom Platform), which comprises a large number of libraries. OTP libraries provide a wide range of solutions for any system that needs fault tolerance, concurrency, storage, data structures or more specialized servers, protocol stacks and applications. Concurrency support and the OTP libraries make Erlang/OTP a strong development platform for distributed systems, especially those that require soft real-time properties.

In Erlang, functions are declared inside modules. A module can also contain attributes to declare exported functions, data types, module imports and macro definitions. A module can be imported by other modules, and its exported functions can be called from outside. OTP defines the application behavior, which helps to combine a specific functionality into one component that can be started and stopped. Erlang applications are defined in application resource files, which list the modules introduced by the application. One of these modules is the application callback module, which exports the start and stop functions of the application. Applications can be re-used in different systems by loading them. In this thesis, any application refers to an Erlang application.

OTP sets design principles for structuring the behaviors of Erlang processes. The proposed structure is a hierarchy between processes according to their roles, called a supervision tree. Supervision trees are composed of workers and supervisors. A worker is a process that does the computations and all the real work that is intended to be done. A supervisor process, on the other hand, is responsible for monitoring the worker processes under it as well as lower level supervisor processes. A supervisor starts its child processes and can restart a child process if it dies unexpectedly. Figure 2.1 illustrates an example supervision tree. Supervisors can be configured with a restart strategy. The strategies are one_for_one, one_for_all, rest_for_one and simple_one_for_one. With the one_for_one strategy, the supervisor restarts only the affected child process. If the one_for_all option is set, the supervisor terminates all child processes when any child process terminates and then restarts all of them. If the rest_for_one option is set, the supervisor terminates only the child processes that were started after the affected child process and restarts all terminated processes. The last option, simple_one_for_one, is a strategy for supervising dynamically created instances of processes that run the same code. Supervisors can also be configured with a maximum restart frequency, which prevents too frequent restarts of the same code.
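
The difference between the restart strategies can be illustrated by computing which children are restarted when one of them terminates. The sketch below is Python, not OTP code; the function `children_to_restart` and the child names are hypothetical, the strategy names follow OTP (one_for_one, one_for_all, rest_for_one), and simple_one_for_one is left out because it concerns dynamically added children.

```python
def children_to_restart(strategy, children, failed):
    """Return the children a supervisor restarts when `failed` terminates.

    `children` is listed in start order.
    """
    position = children.index(failed)
    if strategy == "one_for_one":
        # Only the affected child.
        return [failed]
    if strategy == "one_for_all":
        # Every child, regardless of which one failed.
        return list(children)
    if strategy == "rest_for_one":
        # The affected child and every child started after it.
        return children[position:]
    raise ValueError(f"unknown strategy: {strategy}")


# Hypothetical children, in the order the supervisor started them.
children = ["db_driver", "message_store", "notifier"]
for strategy in ("one_for_one", "one_for_all", "rest_for_one"):
    print(strategy, children_to_restart(strategy, children, "message_store"))
```

The start order matters only for the third strategy, which is useful when later children depend on earlier ones.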

Figure 2.1: Supervision Tree

2.2 Mnesia

Mnesia is the distributed database management system that comes with OTP. Like Erlang, Mnesia supports the development of systems that have soft real-time requirements. Mnesia is designed to meet the high availability and reliability requirements of telecommunication systems [9].

Mnesia is a key-value database with fast real-time key lookups. Mnesia allows distributed data as well as distributed replicas of database tables. The transaction manager ensures the atomicity, consistency, isolation and durability (ACID) properties on distributed tables and keeps table locations transparent to the programmer. Mnesia also allows dirty operations, which are performed on tables without transactions.

Mnesia provides three kinds of storage options, each using a different back-end. From another point of view, Mnesia is a DBMS built on three storage modules provided in OTP: ets, dets and disk_log, where dets and disk_log are based on disk and ets is based on memory. Ets and dets are Erlang's term storage mechanisms, which store a collection of objects with a defined key in a table. Mnesia introduced the second disk based implementation using disk_log, which is more efficient than dets during disk access. With disk_log, tables are kept in memory and dumped to disk at once, which gives faster disk access than the dets module can provide. In addition, applications access the tables much faster since the data is kept in memory.

In Erlang, compound data structures can be defined as records, which can contain other Erlang data types, in other words Erlang terms. Erlang records are essentially tuples comprising a fixed number of terms, where the first element is the record name and the remaining terms are the values of the record attributes. Mnesia tables consist of Erlang record instances. Each record in a table is identified by a tuple of table name and key, called the object identifier. With this data model, Mnesia lets programmers define relations between tables: any attribute of a record can contain an object identifier that acts as a foreign key pointing to another table.
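
The data model described above can be mimicked in Python to show how an object identifier works as a foreign key. This is an illustration only: the record definitions, field names and the `read` helper are hypothetical, and nested dictionaries stand in for Mnesia tables.

```python
from collections import namedtuple

# Hypothetical record definitions; the field names are illustrative only.
Profile = namedtuple("profile", ["name", "max_message_size"])
Subscriber = namedtuple("subscriber", ["msisdn", "profile_oid"])


def as_erlang_style_tuple(record):
    """A record is a tuple whose first element is the record name."""
    return (type(record).__name__,) + tuple(record)


# Tables keyed by the first attribute of each record.
tables = {
    "profile": {"basic": Profile("basic", 300000)},
    "subscriber": {
        "46701234567": Subscriber("46701234567", ("profile", "basic")),
    },
}


def read(oid):
    """Resolve an object identifier, which is a (table, key) pair."""
    table, key = oid
    return tables[table][key]


sub = read(("subscriber", "46701234567"))
print(as_erlang_style_tuple(sub))
# Following the foreign key stored in the subscriber record:
print(read(sub.profile_oid))
```

The subscriber record does not embed the profile; it only stores the pair that identifies it, so many subscribers can share one profile record.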

2.3 Berkeley DB

Berkeley DB is an embedded database supplying persistent storage for key-value pairs. Berkeley DB can be linked directly into applications and allows concurrent access to the storage [17]. Transactions support the atomicity, consistency, isolation and durability (ACID) properties. Berkeley DB supplies APIs for many programming languages, of which C, C++ and Java are the most common and best documented. There is also an Erlang API created by the EDTK Project [16], which is not used in this work.

Berkeley DB lets programmer to decide on datatype of keys and values and all datatypes

defined in C are supported by Berkeley DB. Hash, B+tree and Recno are the three access

methods supported by Berkeley DB. Hash and B+tree access methods supports key lookup

by exact match where B+tree also support range lookups. Recno is built up on B+tree, this

access method can be used in applications where there is a need for storing records

sequen-tially and searching in records in order. Berkeley DB supports keys up tp 2

32Bytes long

and tables up to 2

48bytes (256 petabytes). [17]

2.4 Port Drivers

Port drivers are operating system shared libraries to which Erlang processes can connect via Erlang ports. They are also called Erlang linked-in drivers. Using these drivers is the fastest way for Erlang to call C code that is not part of the emulator. Port drivers can carry data flow in both directions. Loading a shared library copies the program into memory once, and every program that loads the library uses the same copy. In cooperation with Erlang this behavior gains importance, since each process uses the same instance of the library in memory. This sharing does not improve performance by itself, but it prevents a possible bottleneck during interconnection.

One drawback of using port drivers is that code replacement becomes harder. Erlang supports code replacement on a running system, but for linked-in drivers unloading and loading require more care. If the code of a port driver needs to be replaced, all users of the driver must unload it before the new code is loaded, since two instances of the code cannot be used in the system by different users. For highly available databases, the code replacement problem needs to be eased. One design solution is to let only a single process load and unload the driver. In such a design, the driver's user process does not have to wait for other processes to unload, and there is no need to force-kill other users. On the other hand, routing all traffic through a single process can cause a bottleneck. The possible approaches to code replacement are therefore: using a single process to load the driver, using multiple processes and waiting for all of them to unload, or force-killing all users for an instant unload. None of these approaches meets the requirements of every system. Since no generic solution fits all systems, the best approach may be a hybrid driver reload mechanism in which user count, unload timeout, buffer size and force-kill conditions can be configured.
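The single-owner design can be sketched with the standard `erl_ddll` module and `open_port/2`. This is only a sketch under assumptions: the module name `drv_owner` is hypothetical, `Dir` and `Name` are strings naming a driver directory and a driver that must exist for `open/2` to succeed, and error handling is reduced to returning a readable message.

```erlang
-module(drv_owner).
-export([open/2, close/2]).

%% Load the shared library and open one port towards it.
%% Dir is the directory holding the driver, Name its name (both strings).
open(Dir, Name) ->
    case erl_ddll:load_driver(Dir, Name) of
        ok ->
            {ok, open_port({spawn_driver, Name}, [binary])};
        {error, Reason} ->
            {error, erl_ddll:format_error(Reason)}
    end.

%% Close the port first, then drop this process's reference to the driver.
close(Port, Name) ->
    true = port_close(Port),
    erl_ddll:unload_driver(Name).
```

If only one process ever calls `open/2` and `close/2`, a reload never has to wait for other users, at the cost of funnelling all driver traffic through that process.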

2.5 Multimedia Message Service (MMS)

Multimedia Message Service provides a non-real-time messaging service to subscribers with a rich set of media content. MMS standardizes messaging with multimedia content between terminals that are capable of supporting a number of defined media formats. The high-level requirements of MMS include a consistent way of messaging, while it aims to integrate the different media formats currently in use and to provide support for evolving technologies. Access to MMS should be available to users independently of the network system they use. MMS defines a minimum set of formats to ensure interoperability between networks and terminals that support these formats [6].

Two major entities in MMS are the MMS Client and the MMS Proxy-Relay. The MMS Client is the WAP-enabled user terminal that acts as the end-point in the MMS service. The MMS Proxy-Relay is a server that interacts with MMS Clients and provides messaging services through other messaging systems. Multimedia messages are sent through the MMS M interface, implemented either in the WAP architecture or using an HTTP-based protocol stack [4]. Figures 2.2 and 2.3 illustrate the MMS M interface implemented using a WAP Gateway and an HTTP-based stack. Over the WAP WSP or WAP HTTP transport protocols, the payloads are encapsulated in protocol data units (PDUs) defined in the "Multimedia Messaging Service Encapsulation Protocol" document [5].

Figure 2.2: Implementation of MMS M Interface Using WAP Gateway [4]

Figure 2.3: Implementation of MMS M Interface Using HTTP Based Protocol Stack [4]

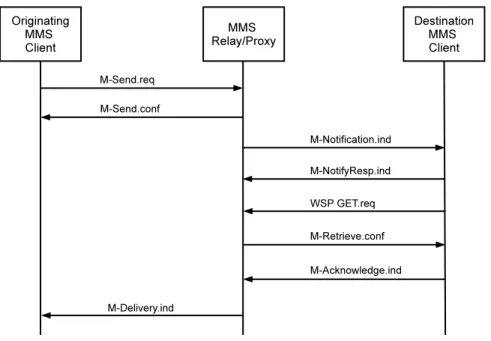

A basic example of WAP-encapsulated data flow in MMS is illustrated in Figure 2.4, which shows the PDUs used in the transactions. The MMS Client sends a message to the MMS Proxy-Relay with an M-Send.req PDU. When the MMS Proxy-Relay receives the send request, it sends an M-Send.conf PDU back to the MMS Client, including the status of the operation. The MMS Proxy-Relay then sends an M-Notification.ind PDU to notify the target MMS Client about the new message. The M-Notification.ind PDU does not include the message body but the URI of the message, so the target MMS Client can fetch the message later. When the notification is received, the target MMS Client responds with an M-NotifyResp.ind to acknowledge the transaction to the MMS Proxy-Relay.

To retrieve the message, the MMS Client requests the MM by sending a WSP/HTTP GET.req request including the URI received in the notification PDU. The MMS Proxy-Relay then responds with an M-Retrieve.conf PDU including the message body. After delivery of the MM is accomplished, the target MMS Client confirms the delivery with an M-Acknowledge.ind PDU. The last transaction shown in Figure 2.4, between the MMS Proxy-Relay and the originating MMS Client, is delivery reporting. An M-Delivery.ind PDU is sent to the originating MMS Client from the MMS Proxy-Relay if a delivery report was requested and the target MMS Client did not explicitly deny delivery reporting. No acknowledgement message is sent after a delivery report is received. After that, another transaction can be started. Other major PDUs not shown in the example figure include M-Forward.req and M-Forward.conf, used for MM forwarding, and M-Read-Rec.ind and M-Read-Orig.ind, used for notifying the sender that the target MMS Client has handled the MM.
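The transaction flow described above can be written down as plain data. The sketch below lists the PDUs in the order given in the text, with sender and receiver roles; the module name `mms_flow` and the role atoms are hypothetical, and the code models only the sequence, not the PDU contents.

```erlang
-module(mms_flow).
-export([steps/0, reply_to/1]).

%% {PDU, From, To} in the order described in the text.
steps() ->
    [{'M-Send.req',         originator, relay},
     {'M-Send.conf',        relay,      originator},
     {'M-Notification.ind', relay,      target},
     {'M-NotifyResp.ind',   target,     relay},
     {'WSP/HTTP GET.req',   target,     relay},
     {'M-Retrieve.conf',    relay,      target},
     {'M-Acknowledge.ind',  target,     relay},
     {'M-Delivery.ind',     relay,      originator}].

%% The PDU that answers a given PDU; delivery reports are not acknowledged.
reply_to('M-Send.req')         -> 'M-Send.conf';
reply_to('M-Notification.ind') -> 'M-NotifyResp.ind';
reply_to('WSP/HTTP GET.req')   -> 'M-Retrieve.conf';
reply_to('M-Retrieve.conf')    -> 'M-Acknowledge.ind';
reply_to(_)                    -> none.
```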

Figure 2.4: Example MMS Transaction Flow

2.6 Store and Forward

Store and forward is a technique used in networks that provide non-real-time services such as the short message service (SMS). With this technique, the transferred data is kept in an intermediate entity and sent to the destination later. The intermediate entity keeps the data and ensures its integrity until delivery, or until another configured condition such as a timeout occurs. Store and forward may be used for a number of reasons, such as delays during transmission, errors causing delivery failures, or the absence of the subscriber, which is common in mobile networks where an end-to-end connection is not always possible. Store and forward requires a scheduling mechanism to retry sending data as scheduled and a notification mechanism to trigger a resend on notification. On a per-subscriber basis, store and forward should keep the messages targeted to the subscriber in order and invoke resend events according to the built-in mechanisms.
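The per-subscriber ordering and the notification-triggered resend can be sketched with a map of FIFO queues. This is a simplified in-memory illustration, not the mechanism implemented in this thesis; the module name `sf_sketch` and its functions are hypothetical, and scheduling and timeouts are left out.

```erlang
-module(sf_sketch).
-export([new/0, store/3, on_notify/2]).

%% The store maps each subscriber to a FIFO queue of pending messages.
new() -> #{}.

%% Keep a message for a subscriber, preserving arrival order.
store(Sub, Msg, Store) ->
    Q = maps:get(Sub, Store, queue:new()),
    Store#{Sub => queue:in(Msg, Q)}.

%% On notification (for example, the subscriber became reachable),
%% hand back all pending messages in order and forget them.
on_notify(Sub, Store) ->
    case maps:take(Sub, Store) of
        {Q, Rest} -> {queue:to_list(Q), Rest};
        error     -> {[], Store}
    end.
```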

2.7 MA Framework

This thesis work was supported by and carried out at Mobile Arts (MA) AB. MA holds proprietary rights to the MMSC and its subsystems, so "MA" is used to denote the company's specific tools, applications or code used in this work. MA has developed its own framework, written almost completely in Erlang, which is used by its products. The framework supplies applications and libraries that developers can build their systems on. Even though Erlang/OTP supplies many specific solutions for telecom applications, the MA Framework supplies applications one level higher that meet system needs and standardize core services and tools across all MA products. The MA Framework guided my design decisions, eased the implementation phase with build and debug tools, and helped the evaluation phase with a variety of test tools.

2.8 Related Work

Although there is no single best answer, since different kinds of systems have different requirements, finding the best-fitting database management system for distributed and concurrent systems has been a popular topic of discussion. Because studies and practical applications of database management systems cover a broad range, I narrowed the scope of this survey with two criteria. To the best of my knowledge, my goal in this work, and thus the approach I chose, has not been part of a previous study. This is because I chose a very specific solution that would fit Mobile Arts' product range with backward compatibility support. Considering my aim, the first criterion for a database solution was support for key-value storage. The main reason was that I would not need relational algebra to manage my data, since the data would be stored and retrieved by primary key. The second criterion was that the study or application should focus on an alternative solution to Mnesia.

Erlang Driver Toolkit (EDTK) [16] provides a code generator for creating prototypes of driver extensions for Erlang. Although EDTK does not directly provide a solution to my problem, an Erlang driver for Berkeley DB is presented with that work. The driver exposes the BDB C API functions through Erlang linked-in drivers, although EDTK supports generating both port drivers and linked-in drivers. If the Mnesia front-end is not needed and an alternative approach is required for persistent storage in an Erlang system, the BDB driver created with EDTK would be a good option to consider.

The Mnesiaex [10] project aims to provide an external back-end to Mnesia by defining a new storage type, "external_copies", as an alternative to "ram_copies", "disc_copies" and "disc_only_copies". Mnesiaex provides a quite reasonable solution: it keeps the advantages of Mnesia, such as distribution and transaction support, and adds a generic way to integrate an external database system as a back-end. The back-end change is almost transparent once the new database is in place, requiring a code change only where database tables are created. One example usage is the tcerl project in Cardell's thesis work [15], which uses Tokyo Cabinet as the database back-end. Cardell mentions ACID issues with Mnesiaex, stating that Mnesiaex fails to check the value returned from the back-end and commits a transaction assuming that it has also been committed at the back-end, which breaks the atomicity and consistency properties. In addition, the Mnesiaex approach would make the MMSC back-end dependent on an external project, which would prevent bringing the solution to production at Mobile Arts.

Scalaris [13] is another key-value storage system implemented in Erlang. Scalaris offers highly scalable, distributed and transactional data storage. It does not use persistent storage and keeps data in memory. As a newer feature, Tokyo Cabinet DBM, which manages file-based key-value databases, was introduced as a back-end to Scalaris. This approach does not provide persistent data storage for Scalaris either, but it increases the storage capacity by using disk storage instead of memory. Scalaris implements consistency with the Paxos protocol for recovery of inconsistent data. A Scalaris deployment has a ring topology of nodes holding database replicas, and each node takes the role of an acceptor in the Paxos protocol. Consistency is preserved by forming a quorum from a majority of replicas, and data is accepted based on the availability of instances in the quorum. Scalaris can offer high performance together with data consistency in some configurations, but such configurations are hardly feasible when establishing an MMS Center, since distribution there is usually done for redundancy and the internal network offers limited possibilities for distributing hardware. Persistence of data is also needed, since all nodes can crash together, for example when faulty code is executed on all nodes. Instead, I would like to use distribution for load balancing and redundancy.

CouchDB [12] is a document-based database server built on Erlang/OTP. A CouchDB server hosts databases that store documents. Documents are uniquely identified within a database, and the CouchDB server provides access to them through a RESTful JSON API over HTTP. This API offers benefits such as a standard representation of data and access through standard HTTP methods, and thus language independence. CouchDB does not implement a lock mechanism, but it allows concurrent reads with multi-version concurrency control (MVCC). Data is stored on disk and updates are done by editing the old version of a document in the database. If concurrent editing happens, the update gets a conflict error and the changes are reapplied after the latest version of the document has been read from the database. While CouchDB offers quite valuable features through its API for systems such as those providing web services, it does not provide an advantage for the system I develop, since I want to store Erlang records on local disk, and interfacing another Erlang system through a RESTful HTTP API would bring extra overhead. Additionally, the MVCC mechanism might be a performance drawback when many Erlang processes try to update the data concurrently. I should add, however, that this is an initial estimate made while selecting a database back-end that suits my system's needs; CouchDB performance would need to be compared with the performance of the BDB back-end to confirm it.

Chapter 3

Design and Implementation

I present MMSC, an MMS Relay-Server developed on Erlang/OTP that uses Berkeley DB placed underneath Mnesia. The MMSC implementation takes the MA Framework as its basis, and the framework eases development and debugging. Both the MA Framework and the MMSC developed on top of it use Mnesia as the database back-end. The main challenge is to keep the database back-end replacement transparent to the application level, to ensure backward compatibility with the framework.

The architectural requirements of this system are mostly shaped by the MMSC front-end structure requested by MA. Many elements comprising the MMSC are supplied by the MA Framework, and these elements interact with each other in relationships defined by a set of rules. New elements needed specifically for the MMSC front-end and back-end require new relationships and rules to be defined. Since I used Erlang/OTP as the development platform, any application mentioned in this thesis refers to a system component with the application features defined in OTP. Supervision tree structures are built into the applications, and OTP behaviors are used to implement supervisors, servers and finite state machines. I did not follow the OTP-defined build and release handling tools; instead, I used the build scripts and boot scripts of the MA Framework.

The most significant architectural decision follows from a behavioral requirement of the MMSC: servers behave as an originating MMSC or a terminating MMSC according to their roles in traffic cases. The system design and the decisions taken are explained in detail in the following sections, where the design is presented in parts according to independent contexts.

3.1 Transparency

The database back-end replacement can have side effects on system behavior if the back-end is poorly abstracted. The MMSC front-end and many other applications running on the same system use the same database, and it is not possible to adjust them to preserve their behavior under a new back-end. Many applications are already in commercial use, and I want all applications using the database to support both the old and the new back-end. The new store and forward mechanism should be developed as usual, so the change made at the back-end should not affect my design decisions at the application level. Transparency of the back-end replacement is crucial for the feasibility of integrating it into MA systems.

Keeping the change transparent also makes it possible to test the new back-end in existing MA systems that have been running commercially for years and can therefore be considered robust and stable with their current back-ends. Testing on existing systems makes it possible to compare the behaviors of different back-ends. MA's SMSC (Short Message Service Center) is used to test Mnesia with the integrated Berkeley DB back-end. The SMSC was chosen because its store and forward mechanism, which uses Mnesia, implements similar behavior, and because it is used during functional tests of the MMSC, where notification messages are sent through the SMSC to terminals.

3.2 Abstraction Level

To meet the transparency requirement, the crucial decision concerns the abstraction level of the Berkeley DB integration. For easier integration, I wanted to place BDB at the highest possible level. Developers interface with Mnesia through the functions it exports. These functions are the only part I must not change, and they provide the interface to any back-end of Mnesia. Keeping the same interface to Mnesia, calls to the database can be forwarded to Berkeley DB: the exported Mnesia functions can be reimplemented so that operations are executed on BDB with the same arguments. This would be the highest level at which to abstract the back-end while keeping transparency. The drawback of this approach is that I would lose all Mnesia features, which would limit the system. First of all, distribution would be impossible to support with such an abstraction. Besides distribution of databases, I would lose the "ram_copies" and "disc_copies" options, which are very useful and heavily used in MA systems. Since my higher priority is to provide a better solution for consistent copies of stored data, placing the abstraction level deeper, where Mnesia directs disc writes to dets tables, seems much better. This way no Mnesia functionality is lost and distribution of tables can still be supported.

Dets tables are managed by the dets module, and Mnesia is not the only user of disc-based term storage in a system. Developers can use dets without Mnesia, and users can manually access the dets tables behind Mnesia tables through the dets module. The same transparency problem appears here, so I needed to keep the dets interface as it is. By reimplementing dets internal functions, it is possible to redirect dets calls to Berkeley DB. The abstraction is therefore placed at the dets level: dets functions are reimplemented so that dets does not perform operations on files itself but directs them to the Berkeley DB interface.
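The dets interface that has to stay unchanged can be illustrated with a few standard calls. The sketch below uses only the stock OTP `dets` module; the module name `dets_demo`, the table name and the stored object are hypothetical. With the redesign described above, code like this would keep working as written while the storage underneath changes.

```erlang
-module(dets_demo).
-export([run/1]).

%% Open a table backed by File, store one object, read it back, close.
run(File) ->
    {ok, Tab} = dets:open_file(demo_tab, [{file, File}, {type, set}]),
    ok = dets:insert(Tab, {msg1, <<"payload">>}),
    Result = dets:lookup(Tab, msg1),
    ok = dets:close(Tab),
    Result.
```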

3.3 Berkeley DB - Dets Interface

Each dets table is managed by one dets process, a server that receives operations for the table associated with that table's database file. Upon receiving an open file operation, the dets module invokes the dets_server module, which keeps track of opened dets tables and their users. The server module checks its registry to find out whether the table is already open. If it is not, it invokes the dets_sup module, a supervisor that starts child dets processes with the simple_one_for_one strategy. The initialized dets process takes ownership of the dets table, and the process id of the owner process is returned to the dets_server module. If the table was already open, dets_server does not invoke the supervisor; instead it asks the owner process to add a new user to the table and records the new user in its registry. Table close operations are handled by dets_server similarly: the table is not closed completely until all of its users have closed it. With the last user's close call, dets_server invokes the owner process, which closes the table and terminates itself. The state machine for dets tables is given in Figure 3.1.

Figure 3.1: Dets Table States

The owner process is a server that loops handling operations directed to the table it manages. I kept the structure of the dets callbacks and redirected all operations that the owner dets process receives to the Berkeley DB back-end. Interaction between Berkeley DB and dets is done using port drivers; the communication flow is illustrated in Figure 3.2. Whenever a dets child process is started to open a table, it invokes the db_server module, a server that manages open tables and open ports towards Berkeley DB. When db_server receives a database operation, including open file and close, it creates a package containing the table, the operation and the arguments, and sends the package in binary format through the port to db_driver. The db_driver is the shared library implemented in C; code templates for erl_driver are given in Appendix A. The driver unpacks the received package and translates the operation into a Berkeley DB operation, which it performs through the Berkeley DB C API. These API functions are called by db_driver according to the translations of Mnesia operations. Berkeley DB performs the requested operation and the result is returned to db_server, which translates it into Erlang's internal notation. The expected successful or unsuccessful result is then constructed and returned to the dets process.
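The packaging step can be sketched as below. The actual binary layout used between db_server and db_driver is not given in the text, so this sketch simply assumes Erlang's external term format; the module name `db_pkg` and both function names are hypothetical.

```erlang
-module(db_pkg).
-export([encode/3, decode/1]).

%% Pack table, operation and arguments into one binary, suitable for
%% sending through a port (assumed layout: external term format).
encode(Table, Op, Args) when is_atom(Op), is_list(Args) ->
    term_to_binary({Table, Op, Args}).

%% Inverse of encode/3; fails if the binary has another shape.
decode(Bin) when is_binary(Bin) ->
    {_Table, _Op, _Args} = binary_to_term(Bin).
```

A package built this way could be handed to an open port with `port_command/2`; the receiving side must of course agree on the same layout.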

Figure 3.2: BDB-Erlang Communication

3.4 MMSC Design

The MMSC front-end is designed to handle the basic traffic case in which a multimedia message travels from the originating user agent to the terminating user agent. Although providing service for other messaging services is out of the scope of this thesis, MM forwarding is still used, since my architecture includes two logical MMSCs involved in one traffic case. These logical entities are called the Originating MMSC, of which the originating MMS Client is a registered subscriber, and the Terminating MMSC, of which the terminating MMS Client is a subscriber. This logical role separation allows messaging behaviors to be customized according to the client roles (originating, terminating) in a traffic case. An example transaction flow for such a traffic case is shown in Figure 3.3.

Figure 3.3: Transaction Call Flow of MMS

The MMSC front-end includes a subscriber database where such client-based customizations and data can be kept. The behavior of the MMSC front-end corresponds to the role of the MMS Relay, and it is composed of several components, each with a specific role in processing messages. Packets received by the MMSC front-end are routed through these components by the Message Enabling Server (MES) application, and MES ensures that each packet is routed and processed properly. The MMSC back-end, on the other hand, provides the functionality of the MMS Server, which should provide storage services and operational support for the MMS service [4]. The MMSC back-end includes the attachment store database and the store and forward mechanism to support the MMS Server requirements. The data flow over a system of originating and terminating MMSCs is illustrated in Figure 3.4.

Figure 3.4: Data Flow Diagram of MMSC

In the next two sections I describe the MMSC front-end and back-end with their subordinate applications, database applications and defined data models. The database model implemented in this thesis splits record definitions into two levels: application level and database level. Database-level records wrap application-level records, which makes it easier to apply application-level changes without recreating database tables. To wrap application-level records, Mnesia's activity function is used to access tables. The activity function takes an access module as an argument, which implements the mnesia_access behavior for the corresponding Mnesia callbacks. While the wrapping into database records is done in the reimplemented access module callbacks, some constraints are also implemented there for operations on tables with foreign keys.
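The idea of wrapping can be sketched independently of Mnesia. In the sketch below the database-level record `db_rec`, its fields and the module name are hypothetical, since the actual record definitions are not shown in the text. The table only ever sees the fixed-shape wrapper, so the application-level record can gain or lose attributes without changing the table definition.

```erlang
-module(wrap_sketch).
-export([wrap/2, unwrap/1]).

%% Hypothetical database-level record: fixed shape, opaque payload.
-record(db_rec, {key, data}).

%% Wrap an application-level record before it is written.
wrap(Key, AppRec) ->
    #db_rec{key = Key, data = AppRec}.

%% Unwrap after reading, giving the application its own record back.
unwrap(#db_rec{data = AppRec}) ->
    AppRec.
```

In the design described above, calls like these would sit inside the access module passed to `mnesia:activity/4`, so application code never handles the wrapper directly.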

3.4.1 MMSC Front-End

Upon receiving an MM1 submit request packet, MM1_in, the interface module to the MMSC front-end, transforms the WAP-encapsulated message into MMS abstract message types according to the mappings given in the MMS Encapsulation Protocol [5]. After the transformation, it forwards the decoded full packet to the Attachment Store (AS) and forwards the packet without multimedia content to MES. The multimedia content is removed to get rid of the heavy payload and to prevent unnecessary copies of attachments being carried through the system. MES routes the message through the Message Control application. Message Control performs initial checks on the received message and accepts it if it is a valid MM1 Submit request or possibly an MM4 Forward request. The second destination of the message is the Message Broker module. Message Broker has the interface to the Subscriber Database (SDB), and it fetches the subscriber data of the originating or terminating user, depending on whether it is acting in the originating or the terminating MMSC.

SDB Configuration

SDB is an application in the MA Framework, so I do not have to implement such a database application again, but I need to design the data relationships for MMSC subscribers. The data structures used in Mnesia are Erlang records, and the data model described in this work is presented in Erlang record syntax. Records in Erlang are defined as in Listing 3.1 and are accessed or modified with #<RECORDNAME>{<ATTRIBUTENAME> = <VALUE>}.

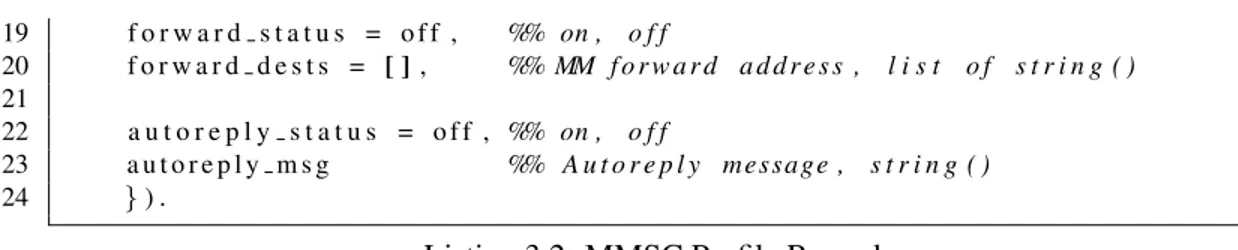

I defined new data structures to be kept by the SDB application. The subscriber data model is represented by the application-level Erlang records #mmsc_subscriber{}, as in Listing 3.1, and #mmsc_profile{}, as in Listing 3.2.

    -record(mmsc_subscriber,
            {msisdn,                    %% key; MSISDN, string()
             imsi,                      %% key; IMSI, string() | undefined
             name = "",                 %% Subscriber name, string()
             timestamp,                 %% Subscriber data timestamp
             subscriber_info = "",      %% Subscriber info, string()
             language,                  %% User language, atom()
             charging_type,             %% prepaid | prepaid2 | postpaid
             status = true,             %% Enable/disable all subscr functionality,
                                        %% true | false
             autoreply = true,          %% flag, bool()
             clone_status = true,       %% flag, bool()
             email_copy_status = true,  %% flag, bool()
             forwarding_status = true,  %% flag, bool()
             mm_box_status = false,     %% For future considerations
             profile                    %% Current profile,
                                        %% string() | ?PROFILE() | undefined
            }).

Listing 3.1: MMSC Subscriber Record

    -record(mmsc_profile,
            {id,                        %% key; {MSISDN, Name}, {string(), string()}
             msisdn,                    %% Group index

             screen_status = off,       %% Control white/black lists,
                                        %% off | white | black | all | disall
             white_list = [],           %% White list, list of string()
             black_list = [],           %% Black list, list of string()

             %% On originating
             delivery_report = off,     %% on, off
             read_reply_report = off,   %% on, off
             orig_email_list = [],
             orig_clone_list = [],
             %% On terminating
             delivery_status = on,      %% on, off
             term_email_list = [],
             term_clone_list = [],
             forward_status = off,      %% on, off
             forward_dests = [],        %% MM forward address, list of string()

             autoreply_status = off,    %% on, off
             autoreply_msg              %% Autoreply message, string()
            }).

Listing 3.2: MMSC Profile Record

The subscriber database holds one #mmsc_subscriber{} record for each user of the MMSC. Records are identified by MSISDN as the key, and tables are indexed by imsi. The attributes of #mmsc_subscriber{} include basic information about the MMSC user and basic functionality settings such as autoreply, clone_status, email_copy_status, forwarding_status and mm_box_status. These functionalities are configured in detail in another record called #mmsc_profile{}. The profile record is addressed from the #mmsc_subscriber{} record by a foreign key. Although Mnesia is not a relational database, a relationship is defined between the subscriber and profile tables, as in Figure 3.5.

Figure 3.5: Entity Relationship between mmsc_subscriber and mmsc_profile

The Message Broker application generates copies of the multimedia message according to the subscriber's configuration if the subscriber's status is set to true in the #mmsc_subscriber{} record. For the originating subscriber, it checks the delivery_report and read_reply_report status and generates email copies and clones for the addresses configured in orig_email_list and orig_clone_list of the originating subscriber's #mmsc_profile{} record. For the terminating subscriber, Message Broker checks delivery_status of the #mmsc_profile{} record and, if it is "on", creates email and clone copies according to the term_email_list and term_clone_list configurations. If forward_status is "on", it creates a copy for each destination configured in the forward_dests field of the #mmsc_profile{} record. If the terminating side has set autoreply_status to "on", Message Broker creates the reply message with the text configured in the autoreply_msg field of the #mmsc_profile{} record. Message Broker uses default settings if no profile record is configured for the subscriber.
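The terminating-side rules above can be sketched as a pure function from a profile to a list of copies to generate. The record below is a reduced, hypothetical subset of the profile record, the module name and the `{Kind, Address}` result shape are assumptions, and the sketch assumes that forwarding is evaluated independently of delivery_status, which the text does not state explicitly.

```erlang
-module(broker_sketch).
-export([terminating_copies/1, demo/0]).

%% Reduced, hypothetical subset of the profile record.
-record(profile, {delivery_status = on,
                  term_email_list = [],
                  term_clone_list = [],
                  forward_status  = off,
                  forward_dests   = []}).

%% Email and clone copies are created only when delivery_status is on.
terminating_copies(#profile{delivery_status = on} = P) ->
    [{email, A} || A <- P#profile.term_email_list] ++
    [{clone, A} || A <- P#profile.term_clone_list] ++
    forward_copies(P);
terminating_copies(P) ->
    forward_copies(P).

%% One forwarded copy per configured destination when forwarding is on.
forward_copies(#profile{forward_status = on, forward_dests = Ds}) ->
    [{forward, D} || D <- Ds];
forward_copies(_) ->
    [].

demo() ->
    terminating_copies(#profile{term_email_list = ["a@example.org"],
                                forward_status  = on,
                                forward_dests   = ["46701"]}).
```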

MES delivers the MM and its copies to the Message Router application, where all instances of the message are routed through the corresponding gateways. Since the system design in this work is supposed to support only mobile-originated to mobile-terminated traffic, Message Router only simulates this behavior and routes the multimedia message to the MM4_out or MM1_out modules. The next destination configured in MES is the MMSC ESME application. ESME stands for External Short Messaging Entity, and it interconnects with applications to send and receive messages. The name ESME is kept for historical reasons, since similar functionality is supplied by the SMSC ESME application in MA's SMSC product. MMSC ESME converts MM PDUs from MM1 to MM4 and vice versa according to the mappings defined in 3GPP TS 23.140 [7]. Lastly, MES directs the message to the Message Store application, which runs in interaction with the MMSC back-end.

3.4.2 MMSC Back-End

The MMSC back-end takes on the behavior of an MMS Server, supplying the storage and operational services of the MMSC. It is composed of a database called the Attachment Store and the Message Store application, which provides the store and forward mechanism. The Message Store application includes a state machine, a notification database, a TCP server and an HTTP server to support the store and forward service (Figure 3.6). The MMS Store application responds to M-Send.req with an M-Send.conf PDU, and for the received send request a notification PDU, M-Notification.ind, is sent to the MMS Client through the WAP Gateway. In the successful case, the MMS back-end receives M-NotifyResp.ind and keeps the MM until it is retrieved. If the notification could not be sent, MMS Store can queue the notification messages for that subscriber and try to resend them later. The MMS Client retrieves the MM with a WSP/HTTP GET request to the URL supplied in the notification message. This URL is set by MMS Store and includes the reference identifier of the MM. Upon arrival of the WSP/HTTP GET request, MMS Store fetches the MM's media content from the Attachment Store and constructs M-Retrieve.conf. M-Retrieve.conf is sent through the WAP Gateway, and the transaction is finished when M-Acknowledge.ind is received from the MMS Client.

Attachment Store

The Attachment Store is first accessed by the MMSC front-end packet handlers, the MM1_in and MM4_in components, to store the multimedia payload of a received PDU. When the MM is going to be forwarded by MM4_out or sent by MM1_out, the content is fetched back from the Attachment Store by these components. The originating MSISDN, together with a one-time generated reference, forms the database key of the stored MM content. The database key is then returned to MES together with the MM without the content.

The Attachment Store compresses the data and stores the copy in a consistent database for retrieval when the MM is fetched by the destination user agent or forwarded by the MMS Proxy-Relay. Data is kept in the "content" attribute of the "attachment_store" record, and this record also defines the database table (Listing 3.3). The one-time generated reference value is then sent to MES in the "ref" attribute of the #mes_data{} record (Listing 3.4). MES carries #mes_data{} all the way, and system components can get and set #mes_data{} when they receive it. Carrying #mes_data{} between components allows them to communicate and to keep information specific to the traffic case until the end of the traffic. To be able to retrieve the attachments, the mm1_out and mm4_out components build the database key by combining the originating address from the MM with the ref value from #mes_data{}.
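The key construction and compression steps can be sketched as follows. A plain map stands in for the database table, `make_ref/0` stands in for the one-time generated reference, and the standard `zlib` module is assumed for compression (the text does not name the compression method); the module and function names are hypothetical.

```erlang
-module(as_sketch).
-export([store/3, fetch/3]).

%% Same shape as the attachment store record in Listing 3.3.
-record(attachment_store, {key, msisdn, content}).

%% Store compressed content under {Ref, Msisdn}; return Ref for the caller
%% to carry along (in the text, inside the ref attribute of mes_data).
store(Msisdn, Content, Db) ->
    Ref = make_ref(),
    Rec = #attachment_store{key     = {Ref, Msisdn},
                            msisdn  = Msisdn,
                            content = zlib:compress(Content)},
    {Ref, Db#{Rec#attachment_store.key => Rec}}.

%% Rebuild the key from the reference and the originating address,
%% then return the uncompressed content.
fetch(Ref, Msisdn, Db) ->
    #attachment_store{content = C} = maps:get({Ref, Msisdn}, Db),
    zlib:uncompress(C).
```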

Figure 3.6: MMSC Back-End Modules and Interactions

    -record(attachment_store,
            {key,      %% key = {ref, msisdn}
             msisdn,
             content   %% data
            }).

Listing 3.3: Attachment Store Data Representation

    -record(mes_data,
            {pdu,
             ref,
             options = [],
             fsm,
             eip       %% send_request or process_request
            }).
