Faculty of Technology and Society

Department of Computer Science and Media Technology

Bachelor Thesis 15 hp, First Level

Are You OK App

A smartphone alarm application for runners and cyclists

using GPS-inactivity in fall detection

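Swedish title: Are You OK App: En smartphone larm-applikation för löpare och cyklister som använder GPS-inaktivitet som härlett fall i fall-detektion (A smartphone alarm application for runners and cyclists that uses GPS-inactivity as an inferred fall in fall detection)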

Kasper Kold Pedersen

Peder Nilsson

Bachelor's degree 180 hp

Main field: Computer Science

Program: Application development & Systems development

Final seminar: 2020-06-03

Supervisor: José Maria Font Fernandez

Examiner: Nancy Russo

Abstract

This thesis describes how a smartphone-based alarm application can be constructed to provide safer trips for cyclists and runners. By monitoring and evaluating GPS data from the mobile device over time, the proposed application prototype, named Are You OK App (AYOKA), automatically sends SMS messages and initiates phone calls to contacts in a user-configured list when a fall is detected. In this project, falls are inferred from GPS-inactivity, defined here as the user’s geolocation not having changed within a selected time interval. Detection of GPS-inactivity, combined with a lack of response from the user, triggers the alarm features of the application. The project also exemplifies how a cloud data sharing flow, which uses Microsoft Azure as a platform, can significantly enhance the data gathering capabilities of the application. By utilizing an IoT Hub, Stream Analytics and an Azure SQL database, the prototype demonstrates how the gathered data can be centralized and, in future research, potentially be used for monitoring and analytical purposes. The method of detection performed relatively well for cyclists and runners, since these activities involve continuously changing geographical coordinates, which makes GPS tracking effective. Because the prototype focuses on detecting GPS-inactivity, it is argued that it could also be used in emergency scenarios other than falls, such as being hit by a car. One disadvantage discussed is the high degree of reliance on user participation to distinguish a fall from a voluntary pause. To detect falls during physical activity that takes place in one location, it would be necessary to incorporate data from accelerometer and gyroscope sensors into the current fall detection functionality.
This thesis suggests that the prototype, including the cloud data sharing flow, can serve as a framework for future smartphone-based fall detection systems that use streamed sensor data.

Keywords: Fall detection, GPS, Android, IoT Hub, Azure, Stream Analytics, Smartphone, Emergency system, Cloud storage, Xamarin

Acknowledgments

We would like to express our immense appreciation to Professor José Maria Font Fernandez at the Department of Computer Science and Media Technology, Malmö University, for his patient guidance, enthusiastic encouragement and assistance in keeping our progress on schedule. We would also like to thank our examiner, Senior Lecturer Nancy Russo at the Internet of Things and People Research Centre, Malmö University, for her clear and insightful critique of our work, and not least her approachability. Our gratitude is also extended to CGI Malmö, in particular Tony Jönsson, Director for Consulting Services Bi & Analytics, and Maria Grace, Senior App Developer. We sincerely thank you for giving us the opportunity to collaborate in developing the prototype. Your invaluable guidance and inspiration have allowed us not only to grow as developers, but also to unleash our imagination and creativity.

Sammanfattning (Summary)

This thesis describes how a smartphone-based alarm application that provides safer trips for cyclists and runners can be constructed. By monitoring and evaluating GPS data from the phone over time, the proposed application prototype, named Are You OK App (AYOKA), automatically sends SMS messages and initiates phone calls to contacts in a user-configured list when the user has suffered a fall. In this project, falls are inferred from GPS-inactivity (when the user's geographical coordinates are unchanged within a selected time interval). Detection of GPS-inactivity, combined with the user not having responded, triggers the alarm function of the application. The project also exemplifies how implementing a flow that shares data in a cloud, using Microsoft Azure as a platform, can considerably improve the application's data gathering. By using an IoT Hub, Stream Analytics and an Azure SQL database, the prototype shows how gathered data can be centralized and potentially used in future research on monitoring and analysis. The tested prototype demonstrates an improved emergency and safety system that can work in many different contexts. The method of detection works relatively well with the focus on cyclists and runners, since these activities involve the practitioner moving from place to place, which in turn makes GPS tracking effective. One of the disadvantages discussed is the high degree of user interaction needed to distinguish a fall from a voluntary pause. To enable detection of falls during physical activity that takes place in one and the same geographical location, it would be necessary to use data from the accelerometer and gyroscope in the detection. The thesis suggests that the prototype, including the cloud data sharing flow, can serve as a framework for future smartphone systems in which fall detection uses streamed sensor data from the device.

Keywords: Fall, GPS, Android, IoT Hub, Azure, Stream Analytics, Smartphone, Emergency system, Cloud storage, Xamarin

Contents

Abbreviations
1. Introduction
1.1 Problem motivation and hypothesis
1.2 Research questions
1.3 Collaboration
1.4 Research methods
1.4.1 Literature review
1.4.2 Design and Creation
1.5 Presented contributions
1.6 Structure of the thesis
2. Methods
2.1 Literature review
2.2 Design and Creation
2.3 System development method
2.3.1 System requirement elicitation
2.4 Requirements
2.4.1 Functional requirements
2.4.2 User requirements
2.5 Hardware
2.6 Software
2.7 Evaluation and testing
3. Literature review
3.1 Defining a fall
3.2 Methods of detecting falls
3.3 Smartphone-based fall detection systems
4. Results
4.1 Requirements testing
4.2 Cloud data sharing flow
4.3 Summary of results
5. Are You OK App (AYOKA)
5.1 Use case scenario
5.2 Description of the prototype
5.2.1 Check and Alarm Functionality (CAF)
5.2.3 User dialogue and GUI
6. Analysis and discussion
6.1 Advantages and disadvantages of inferring falls from GPS
6.2 The proposed data flow as a generic framework
6.3 Limitations regarding voice messages
7. Conclusion
8. Further research

Abbreviations

CGI Conseillers en gestion et informatique [1] - a Canadian global IT company (in English, the acronym stands for Consultants to Governments and Industry)

GPS Global Positioning System

GUI Graphical User Interface

IDE Integrated Development Environment

IoT Internet of Things

IT Information Technology

JSON JavaScript Object Notation

.NET Open source developer platform created by Microsoft [2]

NuGet Package manager that produces and consumes packages for .NET [3]

OS Operating System

UUID Universally Unique Identifier

SMS Short Message Service - text messages from mobile phones

SQL Structured Query Language

XAML Extensible Application Markup Language

1. Introduction

According to the World Health Organization (WHO), falls are the second largest cause of unintentional injury and death worldwide [4]. A study estimated that on a global scale, around 700 000 deaths in 2017 were directly due to falls [5]. However, this figure does not account for the much greater number of disabilities, reduced life expectancy and reduced quality of life caused by fall incidents. It is, therefore, no surprise that the demand for alarm systems specifically for fall detection continues to rise within the healthcare industry [6].

The risk of serious injury from a fall increases with age [4]. Those above the age of 65 are at the greatest risk, which explains why existing research within the field of fall detection tends to focus on this demographic group. Studies thus far have primarily explored how sensors and software applications can collect, evaluate and monitor the daily movements of the elderly and, in case of a fall, report to a medical center [7]–[21]. While a principal focus on people above 65 is logical, a fall poses a risk of injury and death to all demographics. For example, people with active lifestyles, such as cyclists and runners, have received less scientific attention, despite also having a heightened risk of experiencing a serious fall.

The great potential of using smartphone technology in fall detection and emergency systems is well established [22]–[24]. In contrast to other fall detection approaches, such as camera-based systems or separate wearable devices, smartphones have the benefit of being a common personal item that most people in developed societies already carry with them for most of the day. Moreover, all smartphones today come with built-in movement sensors, such as accelerometers and gyroscopes, as well as a Global Positioning System (GPS) receiver. A fall detection application on a smartphone that is able to run as a background service could, therefore, be seen as more easily available, inconspicuous and unobtrusive to the user [25].

1.1 Problem motivation and hypothesis

Existing fall detection research and applications predominantly focus on elderly people falling at home in their everyday lives. This project explores how to extend the applicability of this technology and enhance safety for other demographics.

The findings of Winters and Branion [26], which show that insurance claims data drastically underestimate cycling crashes and falls, suggest that more innovation in the surveillance of cycling safety incidents is needed. Furthermore, the IT company Consultants to Governments and Industry [1], better known as CGI, in Malmö has expressed interest in exploring how to develop a mobile application that enhances personal safety during on-the-move activities, such as cycling and running. In collaboration with CGI, this project aims to explore the potential of using mobile technology to provide a tool that can gather accurate safety incident data from people with active lifestyles. As will become clear later in this thesis, a fall can be defined and detected in a variety of ways. In this specific project, however, falls are inferred from GPS data. Falls are detected by comparing the GPS values measured by a smartphone at a time interval set in the prototype mobile application. If no significant change in the GPS values is found since the previous measurement, the application requests input from the user to confirm that they are safe, which prevents the application from raising the alarm. This is referred to as an inferred fall and/or GPS-inactivity throughout this thesis. As with all fall detection, this is an inference based on a selected method, and other methods could have been chosen instead. The decision to use GPS data for detecting falls was taken during a collaborative requirements elicitation process with CGI, because GPS was an essential requirement from them. This decision also seems reasonable for the purpose of this project, given that cycling and running are normally outdoor on-the-move activities, where frequent tracking of GPS data is expected to be a relevant indicator of a fall. It is also worth stating that the presented prototype deals not only with the actual detection of falls but with the alarm system as a whole, including the flow and storage of data when a fall has been detected. These aspects were important requirements from CGI as well.
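The comparison of two consecutive GPS measurements described above can be illustrated with a minimal sketch. It is written in Python purely for illustration (the prototype itself is built in C#), and it is not the prototype's actual code. The names `GpsFix`, `haversine_m` and `is_gps_inactive`, as well as the 10 metre threshold for a "significant" change, are assumptions introduced here.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass(frozen=True)
class GpsFix:
    """One GPS measurement (hypothetical structure, not taken from the prototype)."""
    latitude: float   # degrees
    longitude: float  # degrees
    timestamp: float  # seconds since an arbitrary epoch


def haversine_m(a: GpsFix, b: GpsFix) -> float:
    """Great-circle distance in metres between two fixes (haversine formula)."""
    phi1, phi2 = math.radians(a.latitude), math.radians(b.latitude)
    dphi = phi2 - phi1
    dlmb = math.radians(b.longitude - a.longitude)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def is_gps_inactive(previous: GpsFix, current: GpsFix, min_movement_m: float = 10.0) -> bool:
    """True when the position has not changed significantly since the previous check.

    The 10 m default is an assumed threshold, chosen only to absorb GPS jitter.
    """
    return haversine_m(previous, current) < min_movement_m
```

In such a design, the application would call `is_gps_inactive` once per configured time interval and open the user dialogue only when it returns true. A distance threshold rather than strict equality of coordinates is one way to tolerate the small position drift that GPS receivers report even when stationary.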

1.2 Research questions

RQ1: On the basis of existing fall detection research, and in collaboration with CGI, how can an application for mobile devices be developed for cyclists and runners that automatically will contact friends, relatives and medical centers in scenarios where the user is in need of help, but for whatever reason is unable to use the mobile device themselves?

RQ2: How can Azure technologies be implemented in the proposed application to enhance data gathering capabilities?

1.3 Collaboration

This project is conducted in collaboration with the global software consulting company CGI. CGI in Malmö initially suggested the idea for the specific prototype developed in this project. They envisioned an activity alarm system application for smartphones that can ensure help if the user suffers a fall while on the move. The application was required to notify persons from a preconfigured contact list in case of a fall or accident, to increase the chance of getting help to the user. Moreover, CGI is interested in exploring the flow of data between the application and a cloud-based platform for storing, centralizing and monitoring purposes. CGI’s desire to develop such a prototype illustrates that there is a demand for this type of application within the software industry. To allow for greater efficiency in the collaboration, CGI’s preferred programming language, C# [27], and cloud service, Azure Cloud Platform [28], including Azure IoT Hub [29], Stream Analytics [30] and Azure SQL Database [31], are selected. The choice to develop the prototype to work primarily with Android phones is grounded in the same rationale. Requirements elicitation is done in close collaboration, and the process is described in Section 2.3.1.

1.4 Research methods

The chosen methods to answer our research questions are literature review, followed by design and creation, using an agile [32] system development method.

1.4.1 Literature review

To establish the academic base upon which this project stands [33], a review of relevant previous research literature is carried out. Knowledge about previous works within this field of research is crucial in order to establish what is already known, as well as to gain knowledge of technologies that can be used to create an application of this kind. During the process, related research papers focusing on fall definitions, methods of detection, sensor types, and existing smartphone-based fall detection systems are iteratively consulted and reviewed.

1.4.2 Design and Creation

Design and creation, as described by Oates [33], is the chosen research method for this project and provides an instantiation of a prototype that answers the research questions stated in Section 1.2. This method is well documented and suitable for creating IT artifacts [33]. This project's adaptations are explained further in Section 2, together with a specification of the system development method. There are disadvantages to this method. One is the challenge of verifying that the developed IT artifact is valid research and not an ordinary product delivery from an industry perspective. Another is the difficulty of reproducing and generalizing the scientific results [33].

1.5 Presented contributions

This project provides an example of how Azure technology can be applied to make on-the-move activities safer for a user. The example is presented through a developed and tested prototype of a mobile application for Android smartphones named Are You OK App (AYOKA). AYOKA includes a cloud sharing network. The prototype is based on previous research and is tested by the authors and a small group of students. It is evaluated by the authors and collaborators in relation to the tests and the research questions. The cloud data sharing network provides further knowledge about gathering and logging data from on-the-move activities that could potentially be of use to municipalities, city planners, insurance companies and other stakeholders, helping them locate areas where falls and safety incidents involving cyclists and runners occur more frequently.

1.6 Structure of the thesis

Section 2 gives a more elaborate description of the methods applied in this project, followed by a list of requirements and an introduction to the technologies used. Section 3 contains the literature review, followed by the Results section (4), which summarizes the findings from testing the prototype. Section 5 describes the developed application prototype and its various parts, after which Section 6 analyzes and discusses the results. The thesis rounds off with some concluding remarks in Section 7 and, lastly, Section 8 outlines possible future research that could be pursued in light of the findings of this thesis.

2. Methods

This section elaborates on the chosen research methodologies briefly introduced in Section 1.4.

2.1 Literature review

In order to create a sound scientific foundation for this project, a review of the existing research literature and trends within the field of smartphone-based fall detection and alarm/emergency systems is required. Reviewing what research has already been done makes it easier to identify potential gaps in scientific knowledge about this particular field of research. At the same time, one avoids falling into the trap of merely repeating other researchers’ work. The process also allows for inspirational inputs and ideas by adding to one's own understanding of the scientific methods, tools and technologies that researchers within the same field have utilized. The process and structure of the literature review conducted in this thesis are based on the general principles and guidelines described in Doing a Literature Review by Hart [34] and in Researching Information Systems and Computing by Oates [33].

Hart breaks down the literature review into a two-phase four-stage process. Phase one is a systematic search of literature about topics and methods related to one’s research question. In this phase, a selection of keywords is determined to define the scope of the search.

Phase two is the actual reviewing stage of the literature review. Hart breaks it down into three stages. First, one must read carefully with the intention of extracting themes relevant to one’s research question; this is the analysis stage. Second, Hart proposes that in order to critically evaluate previous work and find weaknesses and/or gaps, one must classify these extracted themes into categories such as methods, theories, data, etc. Third, one synthesizes the existing knowledge within the specific research field, which entails writing sections on the basis of the identified themes and incorporating essential takeaways from the sources [34]. Hart stresses that conducting a literature review is iterative in nature. It is not uncommon to move back and forth between these phases and processes, as the researcher over time expands his or her knowledge of the research field: “Within the searching state (Phase One) you will move from trying to find everything to focusing on what is relevant to your own work” [34].

Oates specifies the different activities that constitute a literature review: searching, obtaining, assessing, reading, critically evaluating, and writing a critical review [33]. Oates's account resembles a hands-on practical guide with proposed shortlists for the researcher to use, rather than a theoretical framework. The research student is guided linearly from the early searching activity to the actual writing of the review. It is useful as a checklist and for breaking down the steps needed to conduct a literature review. However, it lacks some important complexities, especially the iterative element that Hart emphasizes. In this project, exactly that element has proved important. For example, in the initial phase much effort was put into assessing and evaluating research on different definitions and categorizations of falls, as well as on the technical workings of sensors used to detect falls, not just in smartphones but also in wearable devices. As the project progressed, it became clearer which method of detection the prototype would apply, and that sensors such as the accelerometer and gyroscope were not the primary area of concern. Instead, it became necessary to return to searching, obtaining, assessing, reading, and evaluating sources on smartphone-based emergency systems. The prototyping process (Design and Creation) revealed that the project is less concerned with accelerometer and gyroscope data than initially thought, and more concerned with GPS data and how emergency systems are designed.

2.2 Design and Creation

This project implements the design and creation methodology as a learning-by-making process, as described by Oates [33]. This process uses five iterative steps similar to the ones used in Design Science as described by Vaishnavi, Kuechler and Petter [35], where every step builds a deeper understanding on the previous one, and even more so when some steps are iterated. This enhances the possibilities for learning via making. The five steps are awareness, suggestion, development, evaluation, and conclusion. These are explained in the context of this project in more detail below, and constitute the general framework of the process.

This project uses the prototyping approach, described by Oates [33] as well, meaning that this project is deliberately disregarding any waterfall processes. Instead, an iterative approach is preferred, which is expected to improve the design and functionality of the prototype with every iteration, and ultimately answer the research questions and meet the criteria of our collaborator.

Awareness

In this step, the project gains knowledge through the literature review and gathers data from interviews with the collaborator, who describes the need for and relevance of the initial application. This initiates an iterative process of system requirement analysis, described in Section 2.3.1. The system development method, which governs the development of the artifact and is distinct from the research methodology that governs the research in this thesis, also starts in this step; it is described in Section 2.3. In short, this step recognizes the problem.

Suggestion

Based on the previous step, a first suggestion of a user interface with essential functions is created in the form of a low-fidelity paper prototype [36] and iterated back to the client for feedback and further data gathering. A first UML sketch of classes and functions is also created, to start off discussions and continue data gathering.

Development

Functionality is implemented using the Visual Studio IDE, Xamarin.Forms, the programming language C#, and Azure cloud services, and is then iterated back to the Awareness and Suggestion steps for feedback. New knowledge is acquired along the way until the development process has reached a stable state and the product is ready to be evaluated.

Evaluation

According to March and Smith [37] and their definition of the research activities Build and Evaluate, “building an artifact demonstrates feasibility” and should be evaluated. Developing criteria and assessing the artifact performance against those criteria are, according to March and Smith, the definition of an evaluation.

The system developed in this project is evaluated against the requirements established through the system development method and the system requirement elicitation described in Section 2.3. New knowledge is gained during iterations, and the application is evaluated by a small group of testers consisting of students at Malmö University and by the representatives from the collaborator.

Conclusion

The results are presented and discussed in relation to the requirements, the expected results, the research questions, and the findings from the evaluation with the collaborator. Together, these constitute the knowledge gained from this project.

2.3 System development method

This project’s system development method takes a prototyping approach as described by Oates [33] and involves four phases: analysis, design, implementation and testing, carried out in an agile, iterative manner. This entails that the implementation is made step by step and that each phase can be revisited when needed. The method is chosen for its iterative nature, which corresponds well with the overall research methodology, into which it is integrated and iteratively adapted.

2.3.1 System requirement elicitation

A simplified system requirement elicitation based on guidelines from Tsui et al. [38] is conducted iteratively throughout the development process to analyse, define, prototype, review, and agree upon the requirements.

Requirement elicitation is done together with the collaborator in order to find what implementations are sufficient in the application according to stated requirements from the collaborator, time and size of project, knowledge and experience of researchers, and research questions. The iterative flow of that process is described in Figure 1.

Figure 1. Requirements process [38]

2.4 Requirements

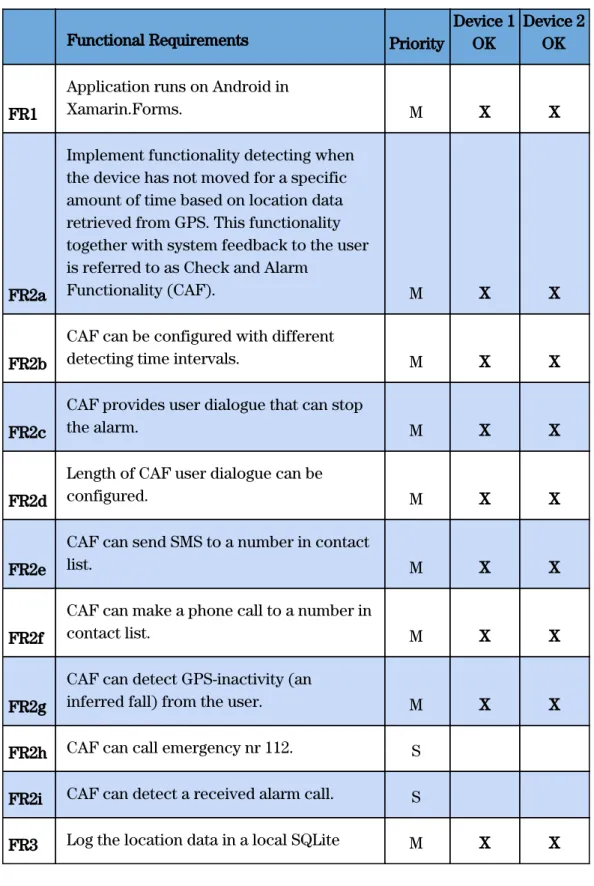

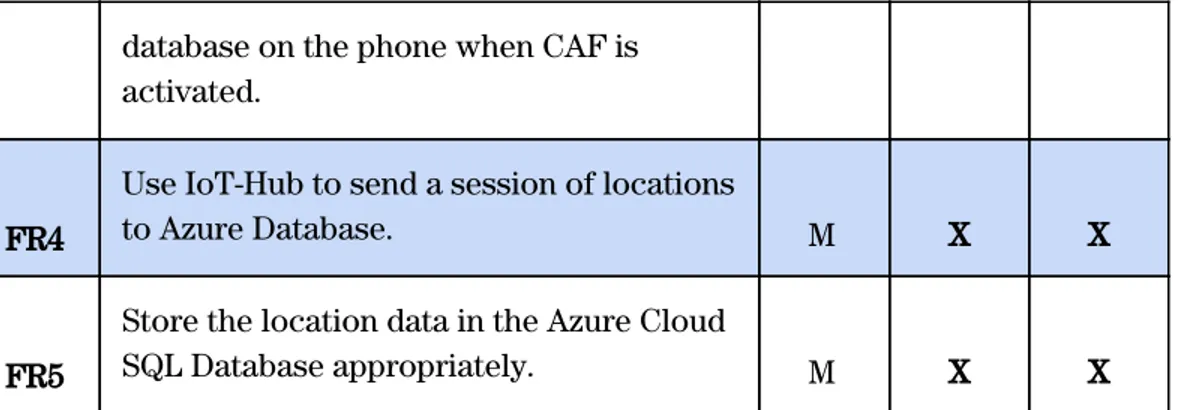

The following requirements, divided into Functional requirements (FR) and User requirements (UR), were discovered when performing the requirement analysis described above. Requirements are prioritized according to MoSCoW [39], where (M) is Must have, (S) is Should have, (C) is Could have and (W) is Won't have (this time). Requirements FR2c and FR2h, together with UR1, UR4, UR5 and UR6, are initiated by the authors.

2.4.1 Functional requirements

FR1. Application runs on Android in Xamarin.Forms. (M)

FR2.

FR2a. Implement functionality that detects when the device has not changed GPS coordinates for a specific amount of time, based on location data retrieved from GPS (an inferred fall). This functionality, together with system feedback to the user, is referred to as Check and Alarm Functionality (CAF). (M)

FR2b. CAF can be configured with different time intervals. (M)

FR2c. CAF provides a user dialogue that can stop the alarm. (M)

FR2d. Length of CAF user dialogue can be configured. (M)

FR2e. CAF can send an SMS to a number in the contact list. (M)

FR2f. CAF can make a phone call to a number in the contact list. (M)

FR2g. CAF can detect GPS-inactivity / inferred fall from a user. (M)

FR2h. CAF can call the European emergency number 112. (S)

FR2i. CAF can detect when a contact has received an alarm call. (C)

FR3. Log the location data in a local SQLite database on the phone when CAF is activated. (M)

FR4. Use IoT Hub and Stream Analytics to send a session of locations to an Azure SQL Database. (M)

FR5. Store the data in an Azure SQL Database. (M)
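The interplay of requirements FR2a to FR2g can be sketched as a single decision step that runs once per check interval. The sketch below is a Python illustration only, not the prototype's C# implementation. `CafConfig`, `run_check`, the default intervals, the callback parameters, and the choice to send the SMS before placing the call to each contact are all assumptions introduced for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CafConfig:
    """Hypothetical CAF settings; the default values are illustrative only."""
    check_interval_s: int = 60    # FR2b: time between GPS checks
    dialogue_timeout_s: int = 30  # FR2d: how long the user dialogue stays open
    contacts: List[str] = field(default_factory=list)


def run_check(
    gps_inactive: bool,
    user_confirmed_ok: Callable[[int], bool],
    send_sms: Callable[[str], None],
    place_call: Callable[[str], None],
    config: CafConfig,
) -> str:
    """Perform one CAF check and return its outcome: 'moving', 'dismissed' or 'alarm'."""
    if not gps_inactive:
        return "moving"  # FR2a/FR2g: position changed, nothing to do
    # FR2c: ask the user; the callback returns True if they answer within the timeout.
    if user_confirmed_ok(config.dialogue_timeout_s):
        return "dismissed"
    # No response: alert every configured contact (FR2e, FR2f).
    for number in config.contacts:
        send_sms(number)
        place_call(number)
    return "alarm"
```

Passing the dialogue, SMS and call operations in as callbacks keeps the decision logic independent of the platform, so that it can be exercised without a phone. A caller would invoke `run_check` every `check_interval_s` seconds while CAF is active.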

2.4.2 User requirements

UR1. The user can configure the alarm user dialogue with a selected time interval. (M)

UR2. The user can activate CAF. (M)

UR3. The user can add, save and delete contacts to and from a list. (M)

UR4. The user can get feedback from the system on status for CAF. (M)

UR5. In dialogue: The user can choose to continue activity/continue CAF (press button “I'm OK”). (M)

UR6. The user can answer “I'm OK” in a dialogue prompting user when the alarm in CAF is triggered. (M)

UR7. The user can deactivate CAF. (M)

UR8. Contacts receive an SMS from the system on alarm. (M)

UR9. Contacts receive a phone call from the system on alarm. (M)

2.5 Hardware

The smartphones used in this project for development and testing are Samsung Galaxy A505FN (Device 1) with the operating system (OS) Android version 10 and Huawei P20 Pro (Device 2) with OS Android version 9.

2.6 Software

The collaborator of this project has several requirements regarding software. C# is required as the main programming language, together with Xamarin.Forms, so that the application can be built for Android with the possibility of supporting iOS in the future. Xamarin comes in different versions: Xamarin Native and Xamarin.Forms can both be used for mobile application development. Xamarin.Forms enables the sharing of both logic and Graphical User Interface (GUI) between Android and iOS. Xamarin Native shares the logic, but the GUI is programmed separately for each platform, which is more time consuming. Visual Studio is the integrated development environment (IDE) and the standard development environment from Microsoft for Xamarin. For that reason, it is used in this project together with the following:

● MVVM software architecture

This project applies the Model-View-ViewModel design pattern [40], [41]. In this pattern, the user interface code is separated into three categories: Model, View and ViewModel. Classes in Model represent the actual data from a service, database, etc. The classes in View are the visual representations (GUI) of the app. These classes are commonly referred to as “pages” in Xamarin. The ViewModel classes handle the connection between View and Model, as well as the connection with native parts of the application, which in this project means the Android part.

● NuGet package manager provides extra functionality in .NET and, within this project, in Xamarin.Forms. Packages can be added from third-party libraries or from other Microsoft libraries [42] that are not initially installed in Visual Studio. The NuGet Package Manager is an open-source package manager from Microsoft [3] for the Microsoft development platform that manages these libraries and comes preinstalled.

● Xamarin.Essentials is a cross-platform NuGet package offering access via shared code to a variety of functionalities. This project uses the package's Text-to-Speech [43] functionality to generate synthetic speech from text. The package also supports geolocation, but this project uses the Android LocationManager [44] to locate a device.

● Sqlite-net-pcl 1.6.292 [45] is a Portable Class Library (PCL) chosen by the authors as a lightweight open-source library for easy SQLite database storage in this project’s Xamarin.Forms application. It is installed as a NuGet package in Xamarin.Forms. SQLite-net produces different PCLs that also work with .NET and Mono applications. Sqlite-net-pcl 1.6.292 uses SQLitePCLRaw in order to enable platform-independent versions of SQLite.

● Microsoft.Azure.Devices.Client [46] is a NuGet package that provides methods to connect from client devices to an Azure IoT Hub and to manage instances of the IoT Hub service and Provisioning service from back-end .NET applications.

● Json.NET [47] is another NuGet package, and a well documented open-source high-performance framework for .NET that makes it easy to create, parse and modify JSON. It is used to serialize the message with geolocations from our prototype and smartphone, preparing them for being sent to an Azure IoT Hub.

● Xam.Plugins.Messaging [48] is a NuGet package that handles text messaging via SMS and phone calls in the prototype of this project. The AutoDial method included in this package also enables an application to initiate phone calls automatically, bypassing the native dialer on the device.

● Azure cloud storage from the cloud service provider Microsoft Azure [49] is a requirement from the collaborator. Cloud hosting makes it possible to scale a service cost-effectively, with just the resources needed, from a strategically chosen location. Security and reliability measures are already taken care of and are included in the package. There are different types of cloud computing, such as IaaS (Infrastructure as a Service), PaaS (Platform as a Service), serverless computing, and SaaS (Software as a Service). This project will use SaaS, which delivers a service online, on demand, and on a subscription basis.

● Azure IoT Hub

Another requirement from the collaborator is to use an IoT Hub [29] in the communication with the Azure data storage. Hosted in the cloud, the IoT Hub in this project is used as a central message hub for device-to-cloud telemetry data, and it passes on the incoming data from the devices to an Azure Stream Analytics service for further distribution. The IoT Hub simplifies the process of defining message routes to other Azure services, without the need to write any additional code. It can also be used in cloud-to-device communication to reliably send commands and notifications to connected devices and to track message delivery with acknowledgment receipts. In case of lost connectivity, messages can be resent automatically.

● Azure Stream Analytics is a flexible, production-ready end-to-end analytics pipeline with rapid scalability and elastic capacity to build robust streaming data pipelines and analyze large amounts of events at subsecond latencies. The service makes it possible to run complex analytics, production-ready in minutes with familiar SQL syntax and extensible with JavaScript and C# custom code [30]. In this project, the service is used for inserting GPS data from the IoT Hub correctly into a table of an Azure SQL database.

2.7 Evaluation and testing

As explained in section 2.2, evaluation and testing against the requirements, the research questions and the collaborator’s requirements are part of the whole research process and are carried out in an exploratory testing manner [50] from sprint to sprint. The final evaluation is made when all requirements that can be met within the timeframe of the project have been met.

Functional requirements are tested within the project group together with two representatives from the collaborator. User requirements are tested by the authors and by a test group of four students from Malmö University. The GPS functionality is tested by the authors, who alternate between running or biking and taking breaks. The evaluation is made by the authors together with the collaborators, using the information from the requirements testing.

3. Literature review

A diverse set of literature and documentation has been consulted during the course of this project. Much of it consists of relevant journal articles and conference papers on fall detection and emergency apps found by searching through the online databases ACM Digital Library and IEEE Xplore. Other sources have taken the form of studies showing the global significance of fall incidents [5], technical documentation on the use of Xamarin [51], the Model-View-ViewModel-architecture (MVVM) [40] and a few books on software engineering processes [38] and research methodologies [33], [34].

3.1 Defining a fall

While most people have an intuitive, experience-based understanding of what a fall is, it is more complex to find an exact scientific definition. Noury et al. [16] describe a fall as “the rapid change from the upright/sitting position to the reclining or almost lengthened position, but it is not a controlled movement, like lying down (...)”. Yu [9] explains that a fall for the elderly belongs to one of four types: fall from sleeping, fall from sitting, fall from walking or standing on the floor, or fall from standing on some kind of tool or support. Rungnapakan et al. [52] studied four other types: Non-Movement (Sit or Stand), Constantly Moving (Walk or Run), Change of Movement (Sit-Stand, Stand-Sit) and Falling Movement (Walk-Fall on the buttocks, Walk-Fall on the back, Run-Fall on the back, Hop-Fall on the back). However, over a dozen other definitions exist [53], and in order to define, recognize or prevent a fall there are many parameters to consider.

3.2 Methods of detecting falls

A fall can involve the whole body, but some parts can signify a fall more than others. Inactivity occurring just afterwards might also signify that a fall has occurred. Yu [13] divides fall detection methods into three groups: wearable-based, with the help of a posture device and a motion device; ambience-based, with the help of a posture device and a presence device, usually involving a pressure sensor; and camera-based, with the help of inactivity detection, shape change analysis and 3D head motion analysis.

Zhang and Sawchuk [14] implement context awareness in fall detection by using motion sensors for the actual fall, together with physiological sensors measuring blood pressure and respiration, as well as adding GPS. Xu et al. [54] use machine learning and convolutional neural networks to train on and recognize human activities such as walking, jogging, sitting, standing, and going upstairs and downstairs from smartphone data. Several survey articles on fall detection methods and principles exist. Noury [16] and, in 2013, Mubashir [6] call for a common scientific definition of falls and fall detection. Noury also asks for an agreement on a protocol for evaluating fall detection systems. Mubashir and Pannurat et al. [55] mention the lack of a publicly available real-life dataset on fall detection for benchmarking. According to Mozaffari et al. [56], fall detection systems consist of three main stages, which they define as prediction, prevention and detection. They review the use of layers such as Cloud, Fog and Edge in these stages, and suggest their use in a fall diagnosis system. The Edge layer is closest to the sensors and handles contextual data and the smaller computations used in prevention and detection of falls. The Cloud layer is used for the more complex computations of the prediction stage, and serves as the main storage that handles, monitors and analyses the other layers. The Fog layer is situated in proximity to the local network and serves as a bridge between Edge and Cloud for smaller computations. Besides also asking for the aforementioned publicly available dataset, Ren and Peng [20] provide useful taxonomies within fall detection and prevention. Figure 2 presents their categories of sensors and hardware used in fall detection, and Figure 3 presents how the same authors sort the methods used to interpret and process data from the detecting hardware.

Figure 2. A fall detection and fall prevention taxonomy from a sensor apparatus aspect according to Ren and Peng [20].

Figure 3. Fall detection and fall prevention taxonomy from the perspective of an analytical algorithm according to Ren and Peng [20].

3.3 Smartphone-based fall detection systems

As the global popularity and prevalence of smartphones have continued to rise for a decade and a half, more and more research in personal emergency/alert and fall detection systems is adopting smartphone technology as an inexpensive and accessible way of deploying their systems [12]. Already in 2015, Casilari et al. [12] identified 73 research projects that included experimental results with various mobile-based fall detection systems, and this list only included the Android-based projects. The vast majority of the projects are conducted with elderly people in mind as the intended users. Some systems aim to predict or prevent falls, while others focus on responding to incidents when they have already happened. An important common feature of fall detection systems in smartphones is, naturally, to give some sort of response, either when a fall has been detected or when there is a perceived heightened risk of an imminent fall. These responses can be either local or remote. The local responses often include features such as showing an alarm display on the phone, combined with a sound/vibrational alarm to notify people who might be in close proximity to the user. Another local feature is to leave the user a window of time to break off the alarm, in case of a false positive. Remote responses notify emergency personnel, remote monitoring users, relatives/friends of the user, etc. Wireless communication technologies such as Wi-Fi, 3G/4G and Bluetooth, as well as SMS and/or automated phone calls, have been used to monitor and notify relevant contacts, other nearby users of the platform, or local emergency centers [12]. Alert messages sent from a local smartphone often include personal preconfigured text and voice messages, GPS location data, timestamps, and sensor data from the phone [9], [57]. Several different approaches to handling and transmitting this data have been taken.
Some prototypes have focused on logging and evaluating the data directly on the phone, which can be sufficient if the project focus is entirely on local responses [7]. Most systems, however, transmit data to remote monitoring users. While some projects rely solely on the delivery of SMS, email, and/or phone calls [58], more sophisticated and large-scale systems transmit data to a remote central server [59]. The use of a central server makes for better monitoring of the user’s activities and enhances the scalability of the fall detection system.

Well-known constraints of smartphone-based fall detection systems are the limited battery life of smartphones and their limited computing resources [12]. Most commercial smartphones in circulation today still need to be charged at least once a day [60], and it is important to keep the energy consumption of an emergency app to a minimum. Otherwise, the intended users might not deem it feasible to run the app on their device. This problem has been addressed by Mutohar et al. [61], who suggest implementing an energy-efficient solution that utilizes the software-based significant motion sensor in Android systems when gathering and storing location data. This virtual sensor uses the hardware sensors in the smartphone to detect significant motion, such as walking, biking, or driving in a car. By distinguishing between significant motion events and inactivity, such as when the phone is lying still on a table, the phone can notify relevant apps more strategically, and conserve power whenever possible. This sensor is available to developers in Android API level 18 and onwards [62].

The literature review shows the complexity of fall detection systems, and the variety in the applied methods of detecting/measuring, alarming and data handling. There seems to be no clear consensus in the field of research regarding a common definition of a fall. Instead, research projects tend to each define their own interpretation that best fits their chosen method of detection, prediction or prevention. However, it is clear that smartphones play an increasingly prominent role in fall detection, and that ways to reduce the significance of their constraints or limitations are being found, for instance by applying significant motion sensor technology to ensure low power consumption and address the problem of battery life. Incorporating a layer of cloud computing in the systems is also becoming more and more common, along with more advanced ways of data analysis and machine learning. It seems clear that implementing a cloud layer, which enables more complex and centralized use of the gathered data, has indeed been advancing the field of fall detection systems in recent years. This underpins the chosen approach of this project, where the Azure platform is utilized. The insights gained from studying related research literature serve to place this project in a larger context. They also played a role in the decision to detect falls from GPS data by inferring a fall from GPS-inactivity in CAF and AYOKA.

4. Results

This section presents the findings from the evaluation of AYOKA against the requirements stated in section 2.4, together with the geolocation tests made by the authors.

4.1 Requirements testing

This section presents testing results in accordance with the requirements stated in section 2.4. All requirements are tested on two devices specified in section 2.5. The test results from the Functional requirements are exhibited in Table 1-2 and results from the User requirements tests are presented in Table 3.

AYOKA presents satisfying results in all requirements tests with the priority (M) on both devices when tested by the authors, the test group and the collaborators. The requirements FR2h and FR2i fail, which is explained in section 4.3, Summary of results. FR2g is tested by the authors during biking and running; see Table 2.

Table 1. Test results Functional Requirements

| ID | Functional Requirements | Priority | Device 1 OK | Device 2 OK |
|---|---|---|---|---|
| FR1 | Application runs on Android in Xamarin.Forms. | M | X | X |
| FR2a | Implement functionality detecting when the device has not moved for a specific amount of time based on location data retrieved from GPS. This functionality together with system feedback to the user is referred to as Check and Alarm Functionality (CAF). | M | X | X |
| FR2b | CAF can be configured with different detecting time intervals. | M | X | X |
| FR2c | CAF provides user dialogue that can stop the alarm. | M | X | X |
| FR2d | Length of CAF user dialogue can be configured. | M | X | X |
| FR2e | CAF can send SMS to a number in contact list. | M | X | X |
| FR2f | CAF can make a phone call to a number in contact list. | M | X | X |
| FR2g | CAF can detect GPS-inactivity (an inferred fall) from the user. | M | X | X |
| FR2h | CAF can call emergency nr 112. | S | | |
| FR2i | CAF can detect a received alarm call. | S | | |
| FR3 | Log the location data in a local SQLite database on the phone when CAF is activated. | M | X | X |
| FR4 | Use IoT-Hub to send a session of locations to Azure Database. | M | X | X |
| FR5 | Store the location data in the Azure Cloud SQL Database appropriately. | M | X | X |

Table 2. Results of testing FR2g: Identifying an inferred fall (GPS-inactivity) through Geolocation when biking and running.

| Activity | Fall detected | Device 1 OK | Device 2 OK |
|---|---|---|---|
| Biking | X | X | X |
| Running | X | X | X |

Table 3. Test results User Requirements.

| ID | User Requirements | Priority | Device 1 OK | Device 2 OK |
|---|---|---|---|---|
| UR1 | The user can configure the “alarm user dialogue” with a selected time interval. | M | X | X |
| UR2 | The user can activate the “CAF”. | M | X | X |
| UR3 | The user can add, save and delete contacts to and from a list. | M | X | X |
| UR4 | The user can get feedback from the system on status for CAF. | M | X | X |
| UR5 | In dialogue: The user can choose to continue activity/continue CAF. | M | X | X |
| UR6 | The user can answer "I'm OK" in a dialogue asking if the user is ok when the alarm in CAF is triggered. | M | X | X |
| UR7 | The user can choose to deactivate CAF. | M | X | X |
| UR8 | User’s contacts in contact list receive an SMS from user’s phone on alarm. | M | X | X |
| UR9 | User’s contact in contact list receives a phone call from user’s phone on alarm. | M | X | X |

4.2 Cloud data sharing flow

AYOKA successfully uploads all data from every session from Device 1 and Device 2 via the IoT Hub to the Azure cloud storage. Please see Table 1 and test results for FR4-6. Sometimes there is a minor delay before all data is gathered. Some data points that were created later arrive before ones that were created earlier, but no data is lost, and since every data point carries an identifier and a logged timestamp, this does not raise concerns at this stage.
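Since every data point carries an identifier and a logged timestamp, the order in which the points were created can be restored after arrival. The following is a minimal sketch in Python of that idea, not the code used in AYOKA; the field names `geo_id`, `session_id` and `logged_at` are hypothetical and not taken from the actual database schema.

```python
from datetime import datetime


def restore_order(rows):
    """Sort rows by session and creation timestamp instead of arrival order."""
    return sorted(
        rows,
        key=lambda r: (r["session_id"], datetime.fromisoformat(r["logged_at"])),
    )


# Rows listed in arrival order; the second row was created before the first.
arrived = [
    {"geo_id": "b", "session_id": 1, "logged_at": "2020-05-01T10:02:00"},
    {"geo_id": "a", "session_id": 1, "logged_at": "2020-05-01T10:01:00"},
    {"geo_id": "c", "session_id": 1, "logged_at": "2020-05-01T10:03:00"},
]
ordered = restore_order(arrived)
```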

4.3 Summary of results

The testing showed that AYOKA satisfactorily detects falls based on GPS-inactivity during cycling and running, as intended within the scope of this project. AYOKA delivers satisfying results on almost all stated requirements with the highest priority (M), with a reservation on FR2f. The reservation concerns the ability to make a phone call to a contact in the contact list: the prototype can make the call but cannot deliver the message. AYOKA fails to fulfill the two requirements FR2h and FR2i, which have the lower priority Should have (S). The prototype is capable of acknowledging whether an alarm call was answered by either an answering machine or by a person, but fails to deliver the voice message generated from text by the Text-to-Speech functionality. This is due to technical limitations in accessing the sound stream of the outgoing voice call. Consequently, FR2h is not fulfilled, because AYOKA is at this time not able to deliver the voice message in a practical way. Furthermore, it was decided not to proceed with the possible implementation of calls to the emergency number 112 in Europe. False positives occur occasionally when strolling at low speed and when stopping for a red light at a pedestrian crosswalk. This is outside the scope of this paper, but it is an interesting observation that could be useful in future work. The prototype successfully implements Azure technology to store the measured GPS data from the device in the cloud, using an IoT Hub to handle incoming data in JSON format from the application installed on a smartphone. A configured Stream Analytics job takes data from the IoT Hub as input and inserts the data correctly into a table in an Azure SQL database (requirements FR4-7). The data makes it possible to track the route of the user, and shows where GPS-inactivity (which in this project is inferred as a fall) has been detected by AYOKA. This flow of data is described in more detail in the following section.

5. Are You OK App (AYOKA)

This section presents the prototype Are You OK App (AYOKA) - a smartphone-based activity alarm system application. Included is a use case scenario describing the intended context, screenshots of the GUI and a description of the cloud data sharing flow that AYOKA is using.

5.1 Use case scenario

In case of a crash or fall happening to the runner or cyclist, a mobile application (the prototype) installed on the user’s smartphone automatically initializes phone calls and sends preconfigured text messages from the device to the user’s close ones, as well as the nearest emergency responders. This ensures that people are notified that the user is in serious need of help and are given the user’s current location, even if the person is unconscious or in some other way unable to make phone calls themselves. Furthermore, geolocation data from the device is sent to a Microsoft Azure database via an IoT Hub, ensuring precise data on the current location as well as the route taken by the user. The collected data can be used to notify remote monitoring users of a user in need of help.

5.2 Description of the prototype

The mobile application prototype AYOKA is developed in Xamarin.Forms and consists of three major parts: the Check and Alarm Functionality (CAF), the Cloud data sharing flow, and the user aspects concerning GUI and user dialogue.

5.2.1 Check and Alarm Functionality (CAF)

CAF is made up of the inferred fall/ GPS-inactivity detection and the alarm functionality. The fall detection is a background service implemented in the native Android part of the Xamarin.Forms prototype. It monitors the geolocation of the user and saves it locally on the phone in a SQLite database until a user's inactivity sets off the alarm of CAF, or the service is deactivated by the user. GPS-inactivity, which is the selected method to infer a fall in this project, is defined as when a user has not changed geolocation for a certain interval. The user can configure the length of the intervals between CAF’s comparison of the latest geolocation value with the previous value.
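The comparison of the latest geolocation with the previous one can be sketched as follows. This is a minimal illustration in Python rather than the C# code of the prototype. It assumes that two positions count as unchanged when they lie within a small distance tolerance of each other; the tolerance parameter `tolerance_m` and the function names are assumptions introduced here, since the thesis does not state how equality of positions is decided.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two coordinates in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_gps_inactive(previous, latest, tolerance_m=10.0):
    """Return True when the latest fix lies within tolerance_m of the previous one.

    previous and latest are (latitude, longitude) tuples sampled one check
    interval apart. tolerance_m is an assumed value that absorbs GPS jitter.
    """
    return haversine_m(*previous, *latest) <= tolerance_m


# Two fixes taken one check interval apart at the same spot.
still = is_gps_inactive((55.6050, 13.0038), (55.6050, 13.0038))
# Two fixes roughly 111 m apart (0.001 degrees of latitude).
moving = is_gps_inactive((55.6050, 13.0038), (55.6060, 13.0038))
```

With a tolerance of zero the check reduces to a strict comparison of coordinates, which in practice would rarely report inactivity because consecutive GPS fixes fluctuate slightly even when the device is at rest.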

The alarm functionality consists of two parts: the alarm user dialogue and the actual alarm. Please see the alarm user dialogue in Picture 5-7 and the flowchart in Figure 5. The alarm user dialogue starts when the GPS-inactivity threshold is reached. A timer in the application is started and a dialogue is displayed to the user, showing a countdown in seconds of the user-selected or default timer interval and asking the user to confirm that all is OK. If the user confirms, the application and CAF return to the state of checking for GPS-inactivity. If no confirmation is given, CAF raises the alarm. CAF utilizes the native text messaging and phone dialing functions of the smartphone to send out SMS messages to all the phone numbers from the user’s contact list in the app (Picture 3), as well as to initialize phone calls to the contacts. The SMS message has one part that can be edited in the GUI by the user, and another part that is added by the code of the application, containing the latest registered geolocation with time, date and an http-link to Google maps (Picture 1).
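How the two parts of the SMS could be combined is sketched below in Python. The sketch is illustrative only: the default wording, the function name `build_alarm_sms` and the exact form of the link are assumptions. The link follows the publicly documented Google Maps URL scheme with a `query` parameter, which is not necessarily the format used in AYOKA.

```python
from datetime import datetime

DEFAULT_TEXT = "I may need help."  # hypothetical default wording


def build_alarm_sms(user_text, latitude, longitude, fix_time):
    """Combine the user-editable text with the app-generated location part."""
    link = (
        "https://www.google.com/maps/search/?api=1"
        f"&query={latitude:.6f},{longitude:.6f}"
    )
    stamp = fix_time.strftime("%Y-%m-%d %H:%M:%S")
    # Fall back to the default text when the user has not written a message.
    return f"{user_text or DEFAULT_TEXT}\nLast known position at {stamp}: {link}"


message = build_alarm_sms("", 55.6050, 13.0038, datetime(2020, 5, 1, 10, 3, 0))
```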

5.2.2 Cloud data sharing flow

The cloud data sharing flow is used in AYOKA when the latest session of saved geolocations is formatted and serialized in JSON, before being transmitted to the IoT Hub, parsed in Stream Analytics (Picture 1) and inserted as rows into a table in the Azure SQL database. A session in this context is defined as a list of geolocation objects that are sequentially added to a local SQLite database on the device. Geolocation objects are only saved to the local database when CAF is active. In this way, a session holds all geolocations gathered from the activation of CAF until the alarm sets off or CAF is deactivated, which ends the session. Transmission of data from the device to the cloud occurs in two situations: when CAF is deactivated, and when CAF alarms after a detected fall where the user failed to confirm that all is OK. The transmission of data on deactivation could be argued to pose a potential privacy issue, since it gathers more data from the user than what is strictly necessary for safety reasons. However, the choice to transmit on deactivation is meant only for the purpose of testing the prototype, because it saves time by enabling testing of the cloud data sharing flow without actually waiting for the detection of a fall. If the prototype should be made publicly available at a later stage, this function should be left out, to avoid gathering more data from the user than what is needed. Examples of the information stored in one table from one device are presented below in two tables, with the column geoId repeated in both to enhance readability (Table 4 and 5). As shown in the tables, a geolocation object holds a geolocationId (auto incremented newsequentialid), a device id, a session id, the user’s longitude, the user’s latitude, time, date, and whether a fall was detected or not. The connection to the Azure cloud is handled by Microsoft’s Azure library for Xamarin, installed through the NuGet package manager.
This flow is demonstrated in Figure 4 below. The prototype demonstrates that this works according to the expectations of our collaborators.
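The serialization step at the start of this flow can be illustrated with a short sketch. It is written in Python with the standard `json` module instead of the Json.NET library used in the prototype, and the class and field names are assumptions based on the columns listed above. The identifier is left out of the payload on the assumption that the database assigns it on insertion.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class GeoLocation:
    device_id: str
    session_id: int
    latitude: float
    longitude: float
    date: str
    time: str
    fall_detected: bool


def serialize_session(session):
    """Serialize a list of GeoLocation objects into one JSON array string."""
    return json.dumps([asdict(g) for g in session])


# A session of two logged positions; the last one marks the inferred fall.
session = [
    GeoLocation("device-1", 7, 55.6050, 13.0038, "2020-05-01", "10:01:00", False),
    GeoLocation("device-1", 7, 55.6050, 13.0038, "2020-05-01", "10:03:00", True),
]
payload = serialize_session(session)
```

Sending the whole session as one JSON array keeps the device-side logic simple, while a query on the receiving side can turn each array element into one row.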

AYOKA is a participatory sensing system rather than an opportunistic one, both of which are described by Mutohar et al. [61]. An opportunistic system decides by itself when to collect data, whereas with AYOKA the user decides when to activate or deactivate the monitoring service that collects their location data. This type of system requires more interaction with the app from the user, but on the other hand avoids collecting data from the user when this is not required.

Figure 4. AYOKA Cloud data sharing flow

Picture 1: This SELECT query in Stream Analytics retrieves the information from a session of one or several GeoLocation objects that are serialized with JSON and inserted into the Azure SQL database.

Table 4.

Example of information sent and stored from one device in the actual Geolocation Table in the Azure SQL database. These are the four first columns.

Table 5.

Continuation from Table 4: Example of information sent and stored from one device in the actual Geolocation Table in the Azure SQL database. These are the three last columns with the column geoId repeated in the beginning to match the information in Table 4 .

5.2.3 User dialogue and GUI

The user aspect includes all feedback to the user from the system and all user inputs to the system, including the possible configurations a user can make (see Picture 1-8). The possible user configurations are: add and delete contacts, select the number of seconds for the alarm user dialogue, and select the time between logged geolocations (Picture 4-5). The implementation of the alarm user dialogue is described with the flowchart below in Figure 5 and shown in the GUI in Picture 6-8.

Figure 5 below shows a flowchart diagram describing the implementation of the alarm user dialogue. It is part of CAF, and is also visualized in the GUI in Picture 4-8. When the user activates CAF from the user interface (Picture 2), detection, by way of comparing recent geolocations, will commence. If an inferred fall is detected, an alarm screen is shown for a number of seconds, awaiting input from the user (Picture 6). If no input is received, the application will start assembling a preconfigured message to send via SMS to all the existing contacts in the contact list (Picture 4). The app will then consecutively initialize phone calls to the contacts.
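The decision logic of this flow can be summarized in a small sketch. It is a simplified Python illustration with hypothetical names, not the implementation in the prototype: it models only the outcome of the dialogue and the order of the alarm actions, and leaves out timers, the GUI and the actual sending.

```python
from enum import Enum


class Outcome(Enum):
    KEEP_MONITORING = "keep monitoring"
    ALARM = "alarm"


def resolve_dialogue(countdown_s, confirmed_after_s=None):
    """Decide what happens once GPS-inactivity has opened the alarm dialogue.

    countdown_s is the configured length of the dialogue in seconds.
    confirmed_after_s is the number of seconds until the user confirmed
    that all is OK, or None if no input was received.
    """
    if confirmed_after_s is not None and confirmed_after_s <= countdown_s:
        return Outcome.KEEP_MONITORING
    return Outcome.ALARM


def alarm_actions(contacts):
    """List the actions in order: an SMS to every contact, then the calls."""
    return [("sms", c) for c in contacts] + [("call", c) for c in contacts]


confirmed = resolve_dialogue(30, confirmed_after_s=12)
timed_out = resolve_dialogue(30)
actions = alarm_actions(["Contact A", "Contact B"])
```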

Below in Picture 2 is an example of an SMS sent from AYOKA on alarm. The message is partly constructed before a training session. If no message is constructed by the user the app uses a default message.

Picture 2: Automated SMS

The GUI of AYOKA is designed with a tabbed page structure and is kept plain and simple to not distract the user, as shown in Picture 3 . Picture 4 shows when CAF is activated. The saved geolocation objects are shown in a list, and there is also system feedback showing the remaining time to the next check.

Picture 3: Home screen - CAF not activated. Picture 4: Home screen - CAF activated with Geolocation, feedback and timestamp.

Picture 5 shows the configuration page, where a user can add a contact, extend the interval between checks for GPS-inactivity (1, 2, 3, 4 or 5 minutes), and configure the duration of the alarm user dialogue (10 - 60 seconds). It also has an edit field for writing the text message that is sent to contacts, along with some additional text and a link to Google maps with the latest coordinates. Picture 6 shows how a contact can be edited, saved or deleted.

Picture 5: Configuration screen. Picture 6: Add or Edit a Contact
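The value ranges offered on the configuration page can be expressed as a small settings object that rejects values outside those ranges. The following Python sketch is an illustration under assumptions: the class name `CafSettings` and the default values are hypothetical, while the permitted ranges are the ones stated above.

```python
from dataclasses import dataclass

ALLOWED_CHECK_MINUTES = (1, 2, 3, 4, 5)  # interval between GPS-inactivity checks
MIN_DIALOGUE_S, MAX_DIALOGUE_S = 10, 60  # duration of the alarm user dialogue


@dataclass(frozen=True)
class CafSettings:
    check_interval_min: int = 1  # assumed default, not stated in the thesis
    dialogue_seconds: int = 30   # assumed default, not stated in the thesis

    def __post_init__(self):
        # Reject values outside the ranges offered on the configuration page.
        if self.check_interval_min not in ALLOWED_CHECK_MINUTES:
            raise ValueError("check interval must be 1 to 5 minutes")
        if not MIN_DIALOGUE_S <= self.dialogue_seconds <= MAX_DIALOGUE_S:
            raise ValueError("dialogue duration must be 10 to 60 seconds")


settings = CafSettings(check_interval_min=2, dialogue_seconds=45)
```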

Picture 7-8 show the alarm user dialogue, where a user can stop CAF from alarming and continue monitoring. The system feedback is shown. If the user is not able to confirm that all is OK, CAF alarms (Picture 9).

Picture 7: User dialogue started. Picture 8: User confirmed all is ok.

6. Analysis and discussion

6.1 Advantages and disadvantages of inferring falls from GPS

Findings from the literature review show that there are various ways to define a fall, in the same way that different types of equipment and methods are employed to detect falls. The knowledge gained from the literature study, in combination with lessons gradually learned from developing the prototype and from the requirements elicitation in collaboration with CGI, ultimately led to the decision to infer a fall by evaluating GPS data. This resulted in the development of the Check and Alarm Functionality (CAF). The testing of the prototype confirmed that checking for GPS-inactivity can indeed be a promising method of fall detection during running or cycling, because the user normally is on the move at all times. However, in the developed prototype shorter stops are made permissible by adjusting the detection interval or by a user input during the alarm dialogue.

It is potentially both an advantage and a disadvantage of the prototype that it is not able to discern between different emergency situations. As GPS-inactivity could entail many other user scenarios besides a fall, it is likely that the app could also be useful in other scenarios that require an emergency response, for instance in the event of a traffic accident, such as being hit by a car, or a technical breakdown of the bike. Another potential safety-related scenario where the application could be of use is when navigating precarious neighborhoods, by providing comfort to individuals who may feel insecure when traveling within certain areas. They can set it for the time they anticipate it would take to navigate said area, and if the journey exceeds the expected time and no input is received from the user, it would trigger the alarm and notify the contact list in the same way as if it were a fall. This could not only provide an added sense of comfort and ease to the user, but also be a functional way to alert people in case of finding oneself in a compromising situation, such as an abduction. On the other hand, the prototype requires more interaction from the user, because it does not take other sensors than GPS into account and therefore cannot distinguish between, for instance, being hit by a car and stopping to chat with a friend, thereby putting extra work on the user to confirm that they are OK. Realizing this, CAF could also be seen as a foundation for incorporating an additional method/algorithm of detection in AYOKA, such as one based on accelerometer and/or gyroscope data, which has been beyond the scope of this project. One study that has utilized accelerometer and gyroscope sensor data seems to suggest that realistic crashes and falls during cycling are difficult to test, and that the fall algorithm as a result is also difficult to validate [63].
The GPS-approach taken in this project can be seen as having the benefit of avoiding some of these resource-demanding hurdles with crash testing that seem to be a limitation with other types of smartphone-based fall detection for cyclists.

![Figure 1. Requirements process [38]](https://thumb-eu.123doks.com/thumbv2/5dokorg/4056614.83845/16.918.157.740.135.444/figure-requirements-process.webp)

![Figure 2. A fall detection and fall prevention taxonomy from a sensor apparatus aspect according to Ren and Peng [20].](https://thumb-eu.123doks.com/thumbv2/5dokorg/4056614.83845/23.918.124.751.141.574/figure-detection-prevention-taxonomy-sensor-apparatus-aspect-according.webp)