Mälardalen University Press Dissertations No. 203

HEAD-MOUNTED PROJECTION DISPLAY TO SUPPORT AND IMPROVE MOTION CAPTURE ACTING

Daniel Kade

2016

School of Innovation, Design and Engineering

Academic dissertation

which, for the award of the degree of Doctor of Technology in Computer Science at the School of Innovation, Design and Engineering, will be publicly defended on Tuesday, 30 August 2016, at 09:15 in Lambda, Mälardalen University, Västerås. Faculty opponent: Professor Morten Fjeld, Chalmers University of Technology

Copyright © Daniel Kade, 2016

ISBN 978-91-7485-275-2 ISSN 1651-4238

Abstract

Current and future animations strive for realistic motion to create an illusion of authenticity and believability. Motion capture is a technology widely used to support this process: motion capture actors enrich the movements of digital avatars with suitable and believable motions and emotions.

Acting for motion capture, as it is performed today, takes place in a challenging work environment for actors and directors. Short preparation times, minimalistic scenery, limited information about characters and the performance, and the need to memorize movements and spatial positions require trained actors who can rely heavily on their acting and imagination skills. In many cases, these circumstances lead to performances with unnatural motions, such as stiff-looking and emotionless movements, and to less believable characters. To compensate for this, time-consuming repetitions of performances or post-processing of motion capture recordings are needed.

To improve this situation, we explore the possibilities of acting support and immersion through an interactive system that supports motion capture actors during their performances. We use an approach that combines research methods from interaction design and computer science. We first identify the challenges actors face in motion capture and suggest possible concepts to support them. We then explore initial prototypes built to support actors during their performance in a motion capture studio. The insights from these initial prototypes led to the design exploration and development of a mixed reality head-mounted projection display that shows virtual scenery to the actors and provides real-time acting support. Next, we describe our mixed reality application and our findings on how hardware and software prototypes can be designed as acting support that is usable in a motion capture environment. A working prototype, which makes it possible to evaluate actors' experiences and performances, was built as a proof-of-concept.

Additionally, we explored the use of our mixed reality prototype in other fields and investigated its applicability to computer games and as an industrial simulator application. Finally, we conducted user studies with traditionally trained theatre and TV actors, experienced motion capture actors and experts to evaluate their experiences with our prototype. The results of these user studies indicate that our application makes it easier for motion capture actors to get into the demanded moods and to understand the acting scenario. Furthermore, we present a prototype that complies with the requirements of a motion capture environment, has the potential to improve motion capture acting results and supports actors in their performances.

Swedish Summary / Sammanfattning

The illusion of authentic and believable animations is pursued through realistic movements in both today's and tomorrow's animation. Motion capture is widely used to support this pursuit. Motion capture actors enrich digital avatars with believable movements, body language and emotions.

Today, the working environment for motion capture acting poses a challenge for actors and directors. Short preparation times, non-existent scenery, limited background information about characters and scenes, as well as limited rehearsal of movements and spatial positions, require well-trained actors who rely on their acting and imagination skills. In many cases, these circumstances can lead to less believable characters and to unnatural movements that look stiff and emotionless. To compensate for this, time-consuming repetitions or post-processing of motion capture recordings are needed.

To improve this situation, we have explored the possibilities of immersive acting support through an interactive system that supports motion capture actors during preparation and recording. We combined research methods from interaction design and computer science. In our research, we first identified the challenges actors face in motion capture and proposed design concepts to address these challenges. We then designed, developed and investigated the first prototypes for motion capture acting support in a motion capture studio. Insights from the initial prototypes and studies led to the design of a head-mounted projection display for mixed reality that makes it possible to show virtual environments in real time. We describe our mixed reality application and our findings on how hardware and software prototypes can be designed as usable acting support in a motion capture environment. To evaluate acting and actors' experience of our design, we implemented a working proof-of-concept prototype.

We also investigated the possibility of using our mixed reality prototype in other areas: its usability for computer games and as a simulator for an industrial application.

Finally, we evaluated our prototype through user studies with trained theatre and TV actors as well as experienced motion capture actors and experts. The results of these user studies show that our prototype makes it easier for motion capture actors to get into the mood and understand the scene they are to perform. Our prototype meets the requirements of a motion capture environment, supports motion capture actors and has the potential to improve the results of motion capture takes.

German Summary / Zusammenfassung

Current and future animations strive for realistic movements in order to create an illusion of authentic and believable animations. One technology frequently used to support this process is motion capture. Motion capture actors are therefore employed to enrich the movements of digital avatars with suitable and believable movements and emotions.

Motion capture acting, as carried out today, is a demanding work environment for actors and directors. Short preparation times, minimalistic scenery, limited information about characters and the performance, as well as memorizing movements and spatial positions, require trained actors who are able to rely heavily on their acting and imagination skills. In many cases, these circumstances can lead to performances with unnatural movements, such as stiff-looking and emotionless movements, and to less believable characters. To compensate for this, time-consuming repetitions of the performances or post-processing of the motion capture recordings are needed.

We therefore investigate the possibilities of acting support and immersion through an interactive system that supports motion capture actors during their performances. We use an approach that combines research methods from interaction design and computer science. For our research, we first identify the challenges that motion capture actors face and propose possible concepts to support the actors. We then developed and investigated initial prototypes built to support actors during their acting in a motion capture studio. The resulting insights from these first prototypes led to design explorations and the development of a head-mounted mixed reality projection display that makes it possible to show virtual scenery to the actors and to support them in real time. We then describe our mixed reality application and our findings on how hardware and software prototypes can be developed as acting support so that they are usable in a motion capture environment. A functional prototype that makes it possible to evaluate the actors' experiences and performances was built as a proof-of-concept.

Additionally, we explored the possibility of using our mixed reality prototype in other areas and investigated its applicability to computer games and as a simulator for industrial applications.

Finally, we conducted user studies with traditionally trained theatre and TV actors, experienced motion capture actors and experts to evaluate the experiences with our prototype. The results of these user studies show that our application makes it easier for motion capture actors to get into the demanded moods and to understand the acting scenario. Furthermore, we present a prototype that meets the requirements of a motion capture environment, has the potential to improve motion capture acting results and supports motion capture actors during their acting.

Acknowledgements

First, I would like to express my sincere gratitude to my supervisors, Prof. Dr. Oğuzhan Özcan and Assoc. Prof. Dr. Rikard Lindell, who have guided and encouraged me throughout my studies. I have learned a lot from their advice, feedback and guidance. Without their support this thesis would not have been possible.

I would also like to thank Professor Dr. Dr. Gordana Dodig-Crnkovic for her help and supervision during my Licentiate thesis as well as her valuable feedback and collaboration on research articles.

Moreover, I would like to thank Oğuzhan for his efforts in giving me the opportunity to be an industrial PhD student, to participate in a larger research project and to study a semester abroad at Koç University in Istanbul. This exchange opened the way for multiple subsequent research visits to Istanbul. Here, I would especially like to thank Hakan Ürey and his team for their support and collaboration. Furthermore, I would like to thank the guys from Design Lab at Koç University who have made my research visits a very enjoyable time.

Then, I want to thank my co-authors, Kaan Akşit, Markus Wallmyr, Tobias Holstein and Jiaying Du for their research cooperation and fellowship during our research projects.

I am also grateful to Ivica Crnkovic, Anna-Lena Carlsson and Radu Dobrin for reviewing my proposals and giving me valuable feedback for my theses.

At this point I would also like to thank the staff of the ITS-EASY school and IDT administration who have made things much easier. Here, I would like to especially thank Radu Dobrin, Kristina Lundqvist, Malin Rosqvist, Gunnar Widforss, Carola Ryttersson and Susanne Fronnå for their support.

Moreover, I would like to thank Afshin Ameri and Batu Akan for their support and for making teaching, lab supervisions and lunch breaks even more enjoyable.

Another thank you goes to Ragnar Tengstrand, Jan Frohm, Daniel Forsström, Claudio Redavid, Robert Gustavsson, David Reypka and Patrick Sjöö who have helped to further develop and explore prototype ideas included in this thesis.

Certainly, there are many more people who made my time at MdH enjoyable and therefore I would like to thank all ITS-EASY students, PhD students from MdH, staff members, teachers and friends for the great time and their support.

As an industrial PhD student, I was able to get insights into industrial projects and corporate research. Therefore, I would like to thank Imagination Studios and Motion Control for their support and the possibility to collaborate on their research projects. In this respect, I would like to thank Christer Gerdtman from Motion Control for his guidance and for giving me the opportunity to participate in interesting research projects.

This work has been supported by the Swedish Knowledge Foundation (KKS), Mälardalen University, Imagination Studios and Motion Control AB i Västerås through the PhD school ITS-EASY. This support has made it possible for me to go through my PhD education and therefore I would like to also express my gratitude.

My time as a PhD student has been interesting, fun and rewarding. I also met many interesting people and new friends. Therefore, once again, thank you all for your support, guidance and friendship.

Daniel Kade
Västerås, August 2016

List of Publications

Papers Included in the PhD Thesis

(The included articles have been reformatted to comply with the PhD thesis layout.)

Paper A An Immersive Motion Capture Environment. Daniel Kade, Oğuzhan Özcan and Rikard Lindell. Proceedings of the ICCGMAT 2013 International Conference on Computer Games, Multimedia and Allied Technology, vol. 73, pp. 500-506, Zurich, Switzerland, January 2013.

Paper B Towards Stanislavski-based Principles for Motion Capture Acting in Animation and Computer Games. Daniel Kade, Oğuzhan Özcan and Rikard Lindell. CONFIA 2013 International Conference in Illustration & Animation, Porto, Portugal, November 2013.

Paper C Head-worn Mixed Reality Projection Display Application. Kaan Akşit, Daniel Kade, Oğuzhan Özcan and Hakan Ürey. ACE 2014, Advances in Computer Entertainment Technology, Funchal, Portugal, November 2014.

Paper D Head-mounted mixed reality projection display for games production and entertainment. Daniel Kade, Kaan Akşit, Hakan Ürey and Oğuzhan Özcan. Personal and Ubiquitous Computing (2015), Volume 19, Issue 3, pp. 509-521, DOI: 10.1007/s00779-015-0847-y.

Paper E Supporting Acting Performances Through Mixed Reality and Virtual Environments. Daniel Kade, Rikard Lindell, Hakan Ürey and Oğuzhan Özcan. Proceedings of Software and Emerging Technologies for Education, Culture, Entertainment, and Commerce (SETECEC) 2016, ISBN 978.88.96.471.40.1, DOI 10.978.8896471/401. Blue Herons Editions (Italy), Venice, Italy, March 2016. (Best Paper Award)

Paper F Low-cost mixed reality simulator for industrial vehicle environments. Daniel Kade, Markus Wallmyr, Tobias Holstein, Rikard Lindell, Hakan

¨Urey and O˘guzhan ¨Ozcan. HCIi 2016, Toronto, Canada, July 2016. Paper G Evaluation of a Mixed Reality Projection Display to Support Motion

Capture Acting. Daniel Kade, Rikard Lindell, Hakan ¨Urey and O˘guzhan

¨Ozcan. MobiQuitous 2016, Hiroshima, Japan, November 2016. (Paper submitted)

Licentiate Thesis

Towards Immersive Motion Capture Acting: Design, Exploration and Development of an Augmented System Solution. Daniel Kade. Licentiate Thesis, ISBN 978-91-7485-161-8, ISSN 1651-9256, October 2014.

(A licentiate degree is a Swedish graduate degree halfway between M.Sc. and Ph.D.)

Related Publications not Included in the Thesis

1. Ethics of Virtual Reality Applications in Computer Game Production. Daniel Kade. 2015. In Philosophies 2016, Basel, Switzerland, vol. 1, no. 1, pp. 73-86, doi: 10.3390/philosophies1010073.

2. Acting 2.0: When Entertainment Technology Helps Actors to Perform. Daniel Kade, Rikard Lindell, Hakan Ürey and Oğuzhan Özcan. In Proceedings of the 12th Conference on Advances in Computer Entertainment Technology (ACE '15), Iskandar, Malaysia, November 2015.

Unrelated Publications not Included in the Thesis

1. The Effects of Perceived USB-delay for Sensor and Embedded System Development. Jiaying Du, Daniel Kade, Christer Gerdtman, Oğuzhan Özcan, Maria Lindén. 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC'16), Orlando, USA, August 2016.


Author's Contribution

1. Paper A: Main researcher and author was the author of this thesis.

2. Paper B: Main researcher and author was the author of this thesis.

3. Paper C: This contribution was made in collaboration with the Koç University Micro Optics Lab, where the lab provided the initial hardware topology of a stripped-down laser projector. In cooperation, we created a wearable solution and shaped its design to be more comfortable and useful for general uses. Software and digital content were provided by the author of this thesis. This paper was written in shared collaboration with the first author (50% first author, 50% author of this thesis).

4. Paper D: Main researcher and author was the author of this thesis.

5. Paper E: Main researcher and author was the author of this thesis.

6. Paper F: The development, research and writing of the paper were performed in a shared collaboration of the first three authors (40% author of this thesis, 30% second author, 30% third author).


Term Descriptions

Interaction Design

Actor We consider an 'actor' as someone who is acting for a motion capture shoot. This 'actor' can have different skills and need not have received acting training of any sort.

Application Hereby we mean software designed to support performing specific tasks.

Expert An expert is a user who is using or testing our prototypes, designs and systems. This user has specific skills or knowledge.

Life-like Movements Movements that resemble suitable and believable movements, creating a sense of realism of a 3D character.

Stakeholder Stakeholders are persons involved or considered within the design of a solution. In our research, stakeholders are mainly actors, directors, motion capture staff and researchers.

System A system combines software and hardware with their interactions and components to act as a whole.

Tester A tester is someone who is testing our prototypes, designs and systems. This user can but does not need to have specific skills.

User A user is someone who is using or testing our prototypes, designs and systems. This person can but does not need to have specific skills.


Computer Science

AR Augmented Reality: An augmented reality superimposes digitally created content onto the real world. This allows a person using AR to see both the real and the digital world. Usually AR uses some means of connecting real world objects with the augmented reality.

AV Augmented Virtuality: Augmented virtuality aims at including and merging real world objects into the virtual world. Here, physical objects or people can interact with, or be seen in, the virtual environment in real time, either as themselves or as digital representations.

Avatar An avatar is usually the representation of a person or character in a digital or virtual environment. In our definition an avatar is simply a digital character.

CAVE Cave Automatic Virtual Environment: A CAVE is a virtual environment showing digital content on multiple or all six walls of a cube-shaped room.

DOF Degrees of Freedom: We use the DOF description from computer graphics and animation to describe possible movements and rotations around different axes.

HD High Definition

HDMI High-Definition Multimedia Interface

HMD Head-mounted Display

HMPD Head-mounted Projection Display

LED Light Emitting Diode

MHL Mobile High-definition Link

MR Mixed Reality: Mixed reality merges the real world with the virtual world. This can be achieved with different means and technologies for different purposes. Milgram's Taxonomy [1] uses MR as an umbrella term ranging from VR to AR, Augmented Virtuality (AV) and the real world.

VR Virtual Reality: A virtual reality creates a reality that is not real. The vision of a person is in many cases occluded by digitally created content or the person is placed in a virtual reality environment. Synonyms are: virtual world, virtual environment, and cyberspace.

Contents

I

Thesis

1

1 Introduction 3 1.1 Introduction . . . 3 1.2 Motivation . . . 5 1.3 Thesis Outline . . . 5 1.4 Related Work . . . 6 2 Research Description 13 2.1 Research Questions . . . 132.2 Proposed Solution to the Research Problem . . . 14

2.3 Why not VR? . . . 16

2.4 Identified Scientific Challenges . . . 16

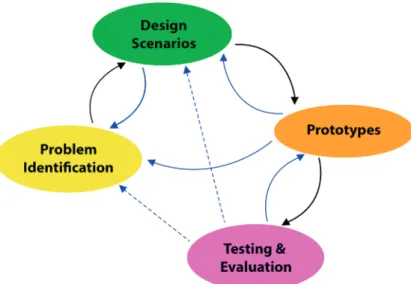

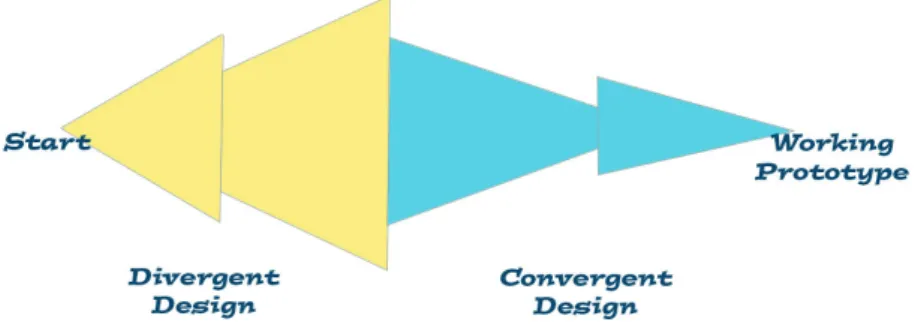

2.5 Research Methods . . . 17

2.6 Expected Results . . . 23

3 Thesis Contributions 25 4 Approach, Prototype and Findings 29 4.1 Part I: Identifiying the Problem Setting . . . 29

4.2 Technology Review . . . 30

4.2.1 Virtuality Continuum . . . 30

4.2.2 Projection Mapping . . . 31

4.2.3 Augmented Reality . . . 32

4.2.4 Mixed Reality Installations . . . 33

4.3 Personas . . . 34

4.4 Improved Motion Capture Process . . . 37

4.5 Part II: Designing Scenarios and Ideas . . . 39 xv


xvi Contents

4.6 Initial Design Concepts . . . 39

4.7 Part III: Building Prototypes . . . 40

4.8 Initial Prototype Explorations . . . 40

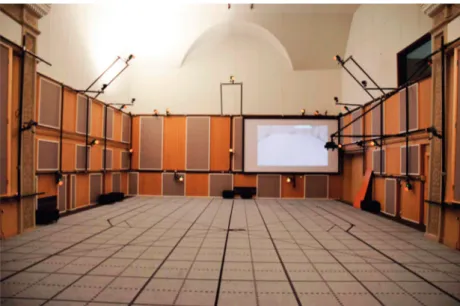

4.8.1 Screens Around an Actor . . . 41

4.8.2 Using a Motion Capture System . . . 42

4.8.3 Portable Screen . . . 43

4.8.4 Using a Pico Projector . . . 44

4.8.5 Design Decisions . . . 45

4.8.6 Prototype Evolution . . . 46

4.9 Head-mounted Projection Display Prototypes . . . 47

4.9.1 Technology Categorization of our Prototype . . . 51

4.9.2 Software . . . 52

4.10 Use of Reflective Materials in Optical Motion Capture? . . . . 53

4.11 Importance of Sound and Other Senses . . . 55

4.12 User Experiences . . . 56

5 Additional Findings 59 5.1 MR Prototype for Other Use Cases . . . 59

5.1.1 Gaming . . . 59

5.1.2 Industrial Simulator . . . 60

5.2 Gap Between Design and Engineering . . . 62

6 Conclusions 63 6.1 Conclusion . . . 63 6.2 Future Work . . . 64 Bibliography 67

II

Included Papers

75

7 Paper A: An Immersive Motion Capture Environment 77 7.1 Introduction . . . 797.2 Current State-of-the-Art . . . 80

7.2.1 Visualization . . . 80

7.2.2 Tracking . . . 82

7.2.3 Interaction . . . 82

7.2.4 Combined Research Areas . . . 84

7.3 Conducted Research . . . 85 Contents xvii 7.4 Findings . . . 86 7.5 Open Issues . . . 88 7.6 Future Solution . . . 89 7.7 Conclusion . . . 91 Bibliography . . . 93 8 Paper B: Towards Stanislavski-based Principles for Motion Capture Acting in Animation and Computer Games 97 8.1 Introduction . . . 99

8.2 What is acting? . . . 99

8.3 Which principles should we support in motion capture? . . . . 101

8.4 What is the nature of motion capture actors? . . . 104

8.5 Do we need to adapt major acting techniques in motion capture actor training? . . . 106

8.6 What motion capture actors think and need? . . . 107

8.7 How to improve current motion capture structures? . . . 109

8.8 Conclusion . . . 111

Bibliography . . . 113

9 Paper C: Head-worn Mixed Reality Projection Display Application 115 9.1 Introduction . . . 117

9.2 State-of-the-Art . . . 119

9.3 Head-worn Projection Display . . . 122

9.3.1 Hardware Description . . . 122

9.3.2 Software Description . . . 127

9.4 Application of the Prototype in Motion Capture . . . 129

9.5 Functionality Test . . . 130

9.6 Conclusion . . . 131

9.7 Future Improvements . . . 132

Bibliography . . . 133

10 Paper D: Head-mounted Mixed Reality Projection Display for Games Pro-duction and Entertainment 137 10.1 Introduction . . . 139

10.2 State-of-the-Art . . . 140


10.3.1 Hardware Description
10.3.2 Software Description
10.4 Application of the Prototype in Motion Capture
10.5 Functionality Tests
10.6 Prototype Improvements
10.7 Using the Prototype in Gaming and Entertainment
10.8 Conducted User Tests within a Gaming Application
10.8.1 User Test Method
10.8.2 Results
10.9 Conclusion
10.10 Future Improvements
Bibliography

11 Paper E: Supporting Acting Performances Through Mixed Reality and Virtual Environments
11.1 Introduction
11.2 Related Works
11.3 Prototype Description
11.4 User Tests
11.4.1 Procedure
11.4.2 Description of Acting Scenes
11.4.3 Card Sorting
11.5 Discussion and Conclusion
11.6 Lessons Learned
11.7 Future Work
Bibliography

12 Paper F: Low-cost Mixed Reality Simulator for Industrial Vehicle Environments
12.1 Introduction
12.2 Related Work
12.3 Simulator Setup
12.3.1 Head-worn Projection Display
12.3.2 Projection Room
12.3.3 Architecture and Software Description
12.4 Functionality Test
12.5 Conclusion
Bibliography

13 Paper G: Evaluation of a Mixed Reality Projection Display to Support Motion Capture Acting
13.1 Introduction
13.2 Related Work
13.3 Our Concept of Supporting Motion Capture Acting
13.4 Prototype Description
13.5 User Tests
13.5.1 Procedure
13.5.2 Description of Acting Scenes
13.6 Evaluation Results
13.6.1 Card Sorting
13.6.2 Interviews
13.6.3 Observation
13.7 Discussion and Conclusion
13.8 Future Work
Bibliography


I

Thesis


Chapter 1

Introduction

1.1 Introduction

Today's video games are becoming more and more realistic [2], not only because of hardware and software innovations, but also because of the use of highly realistic animations of humans, animals, objects and environments in these games. For instance, cinematic elements shown during gameplay almost feel like watching a movie. It is to a large extent thanks to motion capture that we perceive motions in a gaming environment as more real or life-like than in older video games which did not use this technology. To create this sense of realism, human motions are recorded from skilled performers and then mapped to virtual avatars. Motion capture actors play an important role in creating more believable and life-like avatars. Therefore, recorded motions of actors, stuntmen and athletes help bring virtual characters closer to realism.

Motion capture technology is used in various application areas, e.g. for medical, training, and entertainment purposes. In this work we focus especially on motion capture for animations, mainly used in computer games. Here, captured movements from human actors are mapped onto virtual characters, so-called avatars. The avatars then perform these movements during gameplay or in short video clips telling the story line, so-called in-game cut scenes.

This research started with the goal of exploring solutions to support a motion capture studio in its daily work. Our research investigations started with three needs: quick responses to customer and actor needs, scenery changes and the need to improve motion capture procedures.


To get a better understanding of these needs and the involved scenario, we conducted initial observations and interviews with 18 actors, 10 directors and 4 motion capture operators. The collected data showed that the current acting environment in a motion capture studio is challenging to act in, even for skilled actors. Performers for motion capture need to rely heavily on their movement or acting skills and need to be able to imagine the environment. This is mainly because actors are performing for a virtual environment, which is neither visible nor perceptible while acting. Objects, obstacles, other virtual characters or events have to be memorized and are not yet visualized in an efficient way to the actors while acting. Short preparation times, shaping movements and characteristics of an avatar on the shoot day, being able to do improvisational acting and to put oneself in desired acting roles and moods quickly are further challenges that motion capture actors are facing [3].

For this doctoral thesis, the main goal was to explore ways to support actors within a motion capture environment. To achieve this, we first investigated how motion capture actors can be supported and which technologies and computational artefacts could be applied in a motion capture studio. We then explored different approaches, which led to the development of a working prototype. Our prototype is a head-mounted mixed reality projector and was used to conduct user tests investigating the actors' experiences of our acting support application and its applicability for motion capture.

Literature studies have shown that there seems to be no sufficient out-of-the-box solution, usable in a motion capture environment, to support actors with their variety of performance tasks. Therefore, we address the scientific and practical challenges of building a system that supports actors and works in a motion capture environment. This includes hardware, software, and ergonomic challenges. The construction of digital artefacts, the resulting findings and a usability evaluation of our mixed reality prototype as a gaming and simulator application provide additional knowledge from our work. Moreover, we investigated and evaluated the user experiences with the built prototypes.

Results of our evaluations with motion capture stakeholders and our working prototype indicated that our application helps actors to get into the demanded acting moods and to understand the acting scenario perceptibly faster. This means that less time is needed for explaining the acting scenario, compared to just discussing the scenario, as is commonly done in motion capture at the beginning of a scene or a shoot.


1.2 Motivation

The industrial motivation for the research presented in this thesis is to improve motion capture acting to enable actors to perform more efficiently and to improve the quality of acting results.

Academic motivations for our research are in the areas of interaction design and ubiquitous computation. Here, the interests lay in understanding the modalities of interaction between the users and interactive artefacts designed for a motion capture environment. Moreover, having a guideline on how interactive systems can be developed, as well as identifying user experiences in the specific area of motion capture, were of interest.

My personal motivation for this research is to build upon the above-mentioned interests and the interest to create immersive virtual environments. Furthermore, a personal interest in computer games and in exploring their use in other applications was a motivation for this research.

The overall motivation of this thesis results in a vision:

Through an immersive environment, actors will visually and emotionally perceive their acting scenery while acting for motion capture shoots.

1.3 Thesis Outline

The thesis consists of two main parts: Part I is the thesis itself and Part II holds the included papers. Part I is divided into six chapters. Chapter 1 provides an introduction to the thesis, explains the motivation and gives an overview of the related work. Chapter 2 describes the research of the thesis and addresses research questions, methods, challenges and expected results. Thereafter, chapter 3 lists the research contributions of this thesis. Chapter 4 provides a closer description of the approach that we have chosen for this thesis, the developed prototypes and some findings made during our research. Then, in chapter 5, we describe extra research that we performed with our mixed reality prototype and how it could be used for other applications such as gaming and a mixed reality simulator. Finally, chapter 6 concludes the thesis work and gives an outline for future work.

4 Chapter 1. Introduction

To get a better understanding of these needs and the involved scenario, we conducted initial observations and interviews with 18 actors, 10 directors and 4 motion capture operators.This collected data, showed that the current acting environment in a motion capture studio is challenging to act in, even for skilled actors. Performers for motion capture need to highly rely on their movement or acting skills and need to be able to imagine the environment. This is mainly because actors are performing for a virtual environment, which is neither vis-ible nor perceptvis-ible while acting. Objects, obstacles, other virtual characters or events have to be memorized and are not yet visualized in an efficient way to the actors while acting. Short preparation times, shaping movements and characteristics of an avatar on the shoot day, being able to do improvisational acting and to put oneself in desired acting roles and moods quickly are further challenges that motion capture actors are facing [3].

For this doctoral thesis, the main goal was to explore ways to support actors within a motion capture environment. Yet to achieve this, we first investigated how motion capture actors can be supported and which technologies and com-putational artefacts could be applied in a motion capture studio. Thus, we explore different approaches, which led to the development of a working pro-totype. Our prototype is a head-mounted mixed reality projector and was used to conduct user tests investigating the actors’ experiences of our acting support application and its applicability for motion capture.

Literature studies have shown that there seems to be no sufficient out-of-the-box solution, usable in a motion capture environment, to support actors with their variety of performance tasks. Therefore, we address the scientific and practical challenges of which system can be built that supports actors and works in a motion capture environment. This includes hardware, software, and ergonomic challenges. The construction of digital artefacts, the resulting findings and a usability evaluation of our mixed reality prototype as a gaming and simulator application provide extra knowledge from our work. Moreover, we investigated and evaluated the user experiences with the built prototypes.

Results of our evaluations with motion capture stakeholders and our working prototype indicated that our application helps actors to get into the demanded acting moods and to understand the acting scenario perceptibly faster. This also means that less time is needed for explaining the acting scenario, compared to just discussing the scenario, as is commonly done in motion capture at the beginning of a scene or a shoot.


1.2 Motivation

The industrial motivation for the research presented in this thesis is to improve motion capture acting to enable actors to perform more efficiently and to improve the quality of acting results.

Academic motivations for our research are in the areas of interaction design and ubiquitous computation. Here, the interest lay in understanding the modalities of interaction between users and interactive artefacts designed for a motion capture environment. Moreover, having a guideline on how interactive systems can be developed, as well as identifying user experiences in the specific area of motion capture, were of interest.

My personal motivation for this research is to build upon the above-mentioned interests and the interest in creating immersive virtual environments. Furthermore, a personal interest in computer games and in exploring their use in other applications was a motivation for this research.

The overall motivation of this thesis results in a vision:

Through an immersive environment, actors will visually and emotionally perceive their acting scenery while acting for motion capture shoots.

1.3 Thesis Outline

The thesis consists of two main parts: Part I is the thesis part and Part II holds the included papers. Part I is divided into six chapters. Chapter 1 provides an introduction to the thesis, explains the motivation and gives an overview of the related work. Chapter 2 describes the research of the thesis and addresses research questions, methods, challenges and expected results. Thereafter, chapter 3 lists the research contributions of this thesis. Chapter 4 provides a closer description of the approach that we have chosen for this thesis, the developed prototypes and some findings from our research. Then, in chapter 5, we describe extra research that we performed with our mixed reality prototype and how it could be used for other applications, such as gaming and a mixed reality simulator application. Finally, chapter 6 concludes the thesis work and gives an outline for future work.