Academy for Innovation, Design and Technology

Västerås, Sweden

Thesis for the Degree of Bachelor of Science in Engineering - Computer Network Engineering

DVA333 – 15 credits

REPLACING VIRTUAL MACHINES AND

HYPERVISORS WITH CONTAINER SOLUTIONS

Tara Alndawi

tai16002@student.mdh.se

Examiner:

Mohammad Ashjaei

Mälardalens Högskola, Västerås, Sweden

Supervisor: Svetlana Girs

Mälardalens Högskola, Västerås, Sweden

Company supervisor: Jacob Wedin

jacob.wedin@SAABgroup.com


Abstract

We live in a world that is constantly evolving, where new technologies and innovations are continually being introduced. This progress results partly in the development of new technologies and partly in the improvement of existing ones. Docker containers are one of these new technologies: a virtualization method that has become a hot topic around the world because it is said to be a better alternative to today's virtual machines (VMs). One aspect that has contributed to this claim is that, unlike virtual machines, containers isolate processes from each other rather than virtualizing an entire operating system. The company SAAB AB wants to be at the forefront of today's technology and is interested in investigating the possibilities of container technology. The purpose of this thesis work is partly to investigate whether the container solution is in fact an alternative to traditional VMs and what differences there are between the two methods. This is done through an in-depth literature study of comparative studies between containers and VMs. The results of the comparative studies showed that containers are in fact a better alternative than VMs in certain aspects, such as performance and scalability, and are worth considering for the company. Thus, in the second part of this thesis work, a proof of concept implementation was made by recreating a part of the company's subsystem TactiCall in containers, to ensure that this transition is possible for the concrete use case and that the container solution works as intended. This work has succeeded in highlighting the benefits of containers and in showing, through a proof of concept, that there is an opportunity for the company to transition from VMs to containers.


Table of Contents

1. Introduction

1.1. Problem formulation

2. Background

2.1. Virtualization

2.1.1. Hypervisor-based Virtualization

2.1.2. Container-based Virtualization

2.2. Docker

2.2.1. The Docker Engine

2.2.2. Dockerfile

2.2.3. Docker Compose

2.3. Red Hat Enterprise Linux (RHEL)

2.4. TactiCall

2.5. VMware Workstation

2.6. Trivial File Transfer Protocol (TFTP)

2.7. Secure Shell Protocol (SSH)

2.8. Dynamic Host Configuration Protocol (DHCP)

3. Method

3.1. Literature Study

3.2. Proof of Concept Implementation

4. Ethical and Societal Considerations

4.1. Ethical Considerations

4.2. Societal Considerations

5. Containers vs VMs – Literature Study

5.1. Performance

5.2. Portability

5.3. Security

6. From A Virtual Machine to Containers - Proof of concept implementation

6.1. Phase 1: Functionality recreation

6.1.1. RHEL deployment Server

6.1.2. TFTP Server

6.1.3. DHCP Server

6.1.4. SSH Server

6.2. Phase 2: Docker Setup

6.2.1. Create Dockerfile and docker-compose.yml

6.2.2. Testing – functionality

6.3. Phase 3: Docker in Offline Environment

6.3.1. Final functionality test

7. Conclusions

References

Appendices

Figures

Figure 1: Virtualization with type 1.

Figure 2: Virtualization with type 2.

Figure 3: Container-based virtualization.

Figure 4: Communication between Docker client and Docker daemon.

Figure 5: Example of a Dockerfile.

Figure 6: The building process of Docker containers.

Figure 7: Connections in TactiCall.

Figure 8: System development research process.

Figure 10: Number of requests processed within 600 seconds.

Figure 11: Time taken to process a request.

Figure 12: Scalability comparison between the container and the VM.

Figure 13: Resources and operational overhead for the VM vs Docker Swarm in a distributed system.

Figure 14: The phases into which the implementation is divided.

Figure 15: Network configuration.

Figure 16: Install tftp-server.

Figure 17: tftp-server.service file.

Figure 18: Start and enable TFTP server.

Figure 19: TFTP permissions and allow TFTP traffic.

Figure 20: TFTP server status.

Figure 21: Install DHCP server.

Figure 22: Allow DHCP traffic.

Figure 23: DHCP server status.

Figure 24: SSH server setup.

Figure 25: SSH server status.

Figure 26: Start time containers vs VM.

Figure 27: Stop time containers vs VM.


1. Introduction

The IT world is constantly evolving, and there is no doubt that part of this development has taken place thanks to virtualization [1]. Virtualization is one of the most beneficial techniques to have emerged; it has taken great strides and benefits businesses enormously. Virtualization creates a virtual version of something instead of having it in a physical environment, for example a virtual version of a network, application or server. This makes it possible to divide a physical server into several virtual servers that share the physical resources. Each individual server can then run its own operating system and programs. The technology has become so popular mostly because of hardware consolidation: virtualization reduces the need for physical hardware while increasing its availability, and it also significantly simplifies administration. With virtualization, several physical machines can be replaced with one that runs these machines virtually. This brings great benefits for businesses, as IT costs are reduced and time is saved while efficiency, flexibility and resource utilization increase. These benefits have created a great deal of interest in the market, where businesses want to stay constantly up to date on the most efficient and cost-effective virtualization solutions available. Virtualization has now been around for several years, and its most common form is hypervisor-based virtualization, in which virtual machines (VMs) are used. This was the first method on the market that enabled virtualization and it is still the most widely used; however, it is no longer the only method that provides virtualization capabilities. Container-based virtualization is another type of virtualization that has created a great deal of curiosity and interest in the IT market. Thus, the focus of this thesis work is the move of an existing system from a VM to a container solution and an investigation of the challenges and opportunities this creates. This report introduces virtualization and focuses in particular on a transition from virtual machines to a container solution.

1.1. Problem formulation

The purpose of this work is to investigate, in a general way, a migration from hypervisor-based virtualization to container-based virtualization. This purpose serves a concrete use case for SAAB AB. SAAB is a Swedish aerospace and defence company that provides services, products and solutions for military defence and civil security [2]. SAAB aims to always be one step ahead in development and wants to explore the challenges and opportunities of transitioning from a hypervisor-based solution to a container-based solution. The purpose of such a transition is, among other things, to reduce the current resource usage and costs and, at the same time, to save administration time. The result of this work should give SAAB a fair overall view of the benefits of the transition in general and, for their system in particular, an answer to how one of their existing VM solutions can be moved into a container solution and what opportunities and challenges this will involve. In order to achieve the overall goal of the thesis work, the following research questions will be answered.

• What are the differences between containers and VMs in terms of performance, security and portability?

• In terms of scalability, are containers a better option than VMs?

• Given the company applications, how can the functions of the system be recreated to run in a container in the same way as they do on the virtual machine?


To answer the questions above, a literature study will first be conducted to investigate the pros and cons of the two solutions and the feasibility of the move. Next, a proof of concept implementation will be done to see how the transition can be made for a specific application and its requirements. The implementation will start by recreating one of the company's existing telecommunication systems, TactiCall. TactiCall handles multilevel secure communication within armed forces [3]. This system runs on two different virtual machines, one used for management and the other for deployment. SAAB is interested in moving this system to a container solution, as these two virtual machines take up unnecessary space and resources and need to be scaled down. The management machine is mostly used for the user interface; the system's functionality lies in the deployment machine. Therefore, this work will focus on transitioning the deployment machine from a traditional virtual machine to a container solution. The idea is to see whether it is possible to run the TactiCall system in a container solution instead of on top of two different virtual machines as it does today.


2. Background

Along with the growth of the internet, the need for servers and data centers has increased in recent years, as more and more companies want their own websites in order to reach their customers and expand their businesses [1]. The larger a company becomes, the more equipment is needed, which means that big companies might have several hundreds or even thousands of servers on their premises. This increase in hardware also raises the costs of maintenance, hardware, power and cooling, as well as the time it takes for the staff to maintain these machines. Virtualization is a good candidate technique to solve the scalability issues and decrease the cost. The remainder of this section explains what virtualization is and how it works, and gives the information needed to understand the two virtualization methods that are relevant to this work, namely hypervisor-based virtualization and container-based virtualization.

2.1. Virtualization

Virtualization is the technology that enables the creation of several virtual machines on one and the same physical machine. Virtualization allows a large reduction in the number of servers and, among other things, reduces the size of data centers, the maintenance of the servers and the costs of hardware, power, cooling and administration [4]. A VM is software that mimics a physical computer system and its functionality by using resources such as the processor, memory, bandwidth and storage space of the physical machine on which it is installed. The physical hardware is called the host, and the virtual machines created on the host are called guests. Several VMs can run on top of a single physical machine, so a way to allocate hardware resources to these VMs is needed. The hypervisor fills this role: it makes the hardware resources available to the VMs and distributes them among the VMs so that virtualization works.

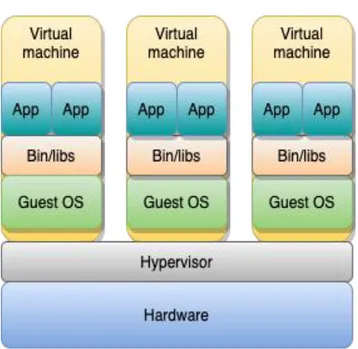

2.1.1. Hypervisor-based Virtualization

Hypervisor-based virtualization uses a hypervisor to segment one system into several systems [1] [4]. This segmentation is done by creating several VMs on the host. Each VM can then have its own guest operating system (OS) and be run separately. There are two types of hypervisor-based virtualization, type 1 and type 2. Type 1, also called a bare-metal hypervisor, runs directly on the host's hardware (see Figure 1). The host's OS and the hypervisor are combined into a single layer, which can be called the hypervisor layer. This creates a direct connection to the hardware, so the hypervisor can communicate directly with the hardware components of the host. Through this composition, a system can utilize the host's resources significantly better, in terms of performance and scalability, than it can in type 2. Type 2, also known as a hosted hypervisor, separates the host's OS and the hypervisor into different layers (see Figure 2). All VMs run via the host's OS and not directly on the hardware as in type 1. In type 2, an OS is installed on the host's hardware, and a hypervisor is then installed on top of that layer and becomes the software that is used for virtualization.


Figure 1: Virtualization with type 1.


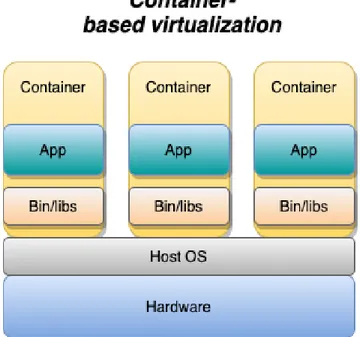

2.1.2. Container-based Virtualization

Container-based virtualization provides OS-level virtualization and therefore uses no hypervisor [1]. This is the big difference between containers and traditional virtual machines: containers virtualize at the OS level instead of at the hardware level (see Figure 3). All containers created on one and the same physical host share the same host OS. This makes it possible to run several applications at the same time without having to run several operating systems, as in the traditional VM method. Containers package the software in such a way that several containers can run on the same OS. These containers therefore run on the same OS kernel and are still kept isolated from each other. The software does not require access to the entire OS to work, because each container holds the resources and libraries that its software needs. Several different applications, services and databases can be run on the same physical machine by placing them in different containers that are isolated from each other.

Figure 3: Container-based virtualization.

2.2. Docker

To build and run these containers, some type of engine that manages them is needed. Docker is a software application that uses its own containerization engine to enable containers [5]. This technology has been developed by Docker, Inc. Docker was originally created to run on Linux but can also run on Windows and Mac.

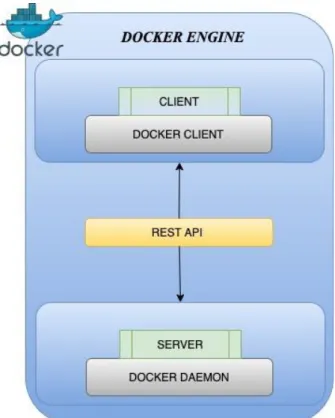

2.2.1. The Docker Engine

Unlike traditional VMs, Docker does not use a hypervisor. Instead, it has a Docker Engine layer that handles everything that drives these containers [6]. Docker Engine is thus the core of Docker, which creates and maintains containers. There are two versions of Docker Engine, Docker Engine Community and Docker Engine Enterprise. Docker Engine Community is open source and thus free to use, while Docker Engine Enterprise comes with extra costs as it offers more advanced features. Docker Engine Community will be used in this work. Docker Engine is a client-server application whose architecture consists of a Docker client that talks to a Docker daemon [5]. The Docker daemon does the heavy work of creating and managing Docker objects, and the client communicates with it through a REST Application Programming Interface (API). The REST API provides a way to tell the Docker daemon what to do with Docker objects such as images, plugins, networks, containers and volumes. A daemon can also communicate with other daemons when needed. The Docker client is the main way for a user to interact with Docker. When a user executes a command in the Docker client, that command is sent to the Docker daemon via the REST API for execution (see Figure 4).

Figure 4: Communication between Docker client and Docker daemon.
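The client-to-daemon flow described above can be illustrated with a small Python sketch. It only shows how a few CLI commands correspond to Docker Engine REST API endpoints; it does not contact a daemon. The helper name `to_request` is hypothetical, and real clients normally prefix the path with an API version, which is left out here for brevity.

```python
# Illustrative mapping from a few Docker CLI commands to the Docker Engine
# REST API endpoints they correspond to. Nothing is sent to a daemon here.
CLI_TO_API = {
    "docker version": ("GET", "/version"),
    "docker ps": ("GET", "/containers/json"),
    "docker images": ("GET", "/images/json"),
}


def to_request(command: str) -> str:
    """Return the HTTP request line a client would send for a CLI command.

    `to_request` is a hypothetical helper used only for this illustration.
    """
    method, path = CLI_TO_API[command]
    return f"{method} {path} HTTP/1.1"


for command in CLI_TO_API:
    print(command, "->", to_request(command))
```

On a Linux host the daemon typically listens on a local Unix socket, so the request line produced here would be written to that socket rather than to a TCP connection.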

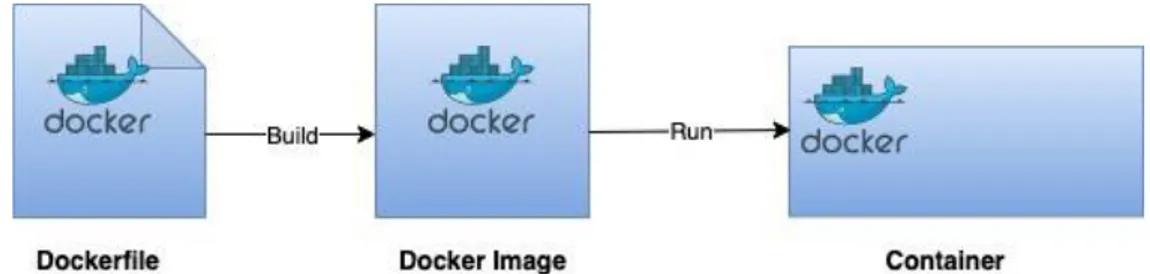

2.2.2. Dockerfile

A Dockerfile is used by Docker to build images, from which containers are then created [7]. A Dockerfile is a text file that contains instructions on how to assemble an image. Docker reads the instructions from top to bottom; see Figure 5, where Docker starts by reading the instruction FROM ubuntu:20.04.

Figure 5: Example of a Dockerfile.
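To make the top-to-bottom reading order concrete, the following Python sketch splits a Dockerfile into its instructions in the order they appear. Only the first instruction, FROM ubuntu:20.04, comes from the text; the remaining lines of the sample Dockerfile and the function name `parse_instructions` are assumptions made for illustration. The sketch ignores line continuations, which a real Dockerfile may contain.

```python
# Hypothetical Dockerfile content; only the FROM line is taken from the text.
DOCKERFILE = """\
# base image
FROM ubuntu:20.04
RUN apt-get update
COPY . /app
CMD ["/bin/bash"]
"""


def parse_instructions(text):
    """Return (INSTRUCTION, arguments) pairs in the order they appear."""
    steps = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        keyword, _, args = line.partition(" ")
        steps.append((keyword.upper(), args.strip()))
    return steps


for number, (keyword, args) in enumerate(parse_instructions(DOCKERFILE), start=1):
    print(f"step {number}: {keyword} {args}")
```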

2.2.3. Docker Compose

Docker Compose is an orchestration tool used with Docker to define and run multiple containers together [8]. The advantage of Docker Compose is that all services can be divided into their own containers instead of being placed in one and the same container. The idea is to create a multi-container solution, which makes the containers more manageable and easier to build and maintain, because each service is maintained separately in its own container instead of inside a single large container. A YAML file is used to build these multi-container applications; this file specifies the configuration needed to run the desired services in containers. The services specified in the YAML file are based on Dockerfiles, so it is important to understand that Docker Compose is not a replacement for the Dockerfile. Images must first be built and published using a Dockerfile before they can be run as multiple containers with Docker Compose (see Figure 6).

Figure 6: The building process of Docker containers.
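As a rough illustration of how a Compose file relates services to Dockerfiles, the Python sketch below writes a minimal Compose-style YAML document from a dictionary. The service names, build directories and port mappings are hypothetical and are not taken from the actual TactiCall setup; each `build` entry is assumed to point to a directory that contains a Dockerfile.

```python
# Hypothetical services; each "build" path is assumed to hold a Dockerfile.
SERVICES = {
    "tftp": {"build": "./tftp", "ports": ["69:69/udp"]},
    "ssh": {"build": "./ssh", "ports": ["2222:22"]},
}


def to_compose_yaml(services):
    """Render a minimal Compose-style YAML document as text."""
    lines = ["services:"]
    for name, config in services.items():
        lines.append(f"  {name}:")
        lines.append(f"    build: {config['build']}")
        lines.append("    ports:")
        for port in config["ports"]:
            lines.append(f'      - "{port}"')
    return "\n".join(lines) + "\n"


print(to_compose_yaml(SERVICES))
```

Keeping one service per entry mirrors the multi-container idea: each service can be rebuilt from its own Dockerfile without touching the others.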

2.3. Red Hat Enterprise Linux (RHEL)

Docker technology uses the Linux kernel and its features to run different processes separately. In this thesis, RHEL has been chosen as the Linux distribution, as it is intended for large companies and offers them support [9]. RHEL provides several benefits that are in the interest of SAAB, including life-cycle management, which is an important part of the business. RHEL divides the life cycle into phases that are designed to reduce the changes that occur in the system when large releases are implemented, which increases predictability and availability. RHEL version 8.3, which is used in this thesis, offers a 10-year life cycle in which minor releases are planned every six months instead of large releases at longer intervals. The system then has time to adapt a little at a time, without the major changes that large releases may bring.

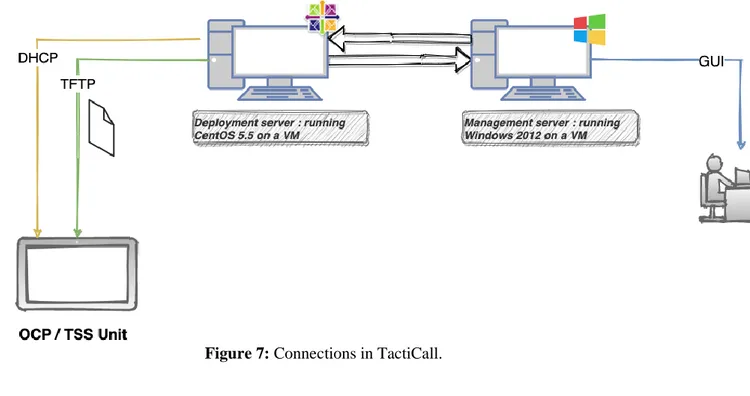

2.4. TactiCall

TactiCall is an integrated naval communication system that handles multilevel secure communication within armed forces [3]. This system runs on two different virtual machines, one used for management and the other for deployment (see Figure 7). The deployment server is the part of TactiCall that is of interest here: the objective of this thesis is to recreate its functionality on a RHEL 8.3 server and then build it into containers. The management machine is a Windows Server machine that will also be installed in VMware. This machine is already configured by the company; the only configuration I will do on it is to set the correct IP address within the network so that it can access the server. The management machine will point to the server and will be used mostly as a GUI (graphical user interface) for TactiCall. It is important to note that the management machine will not be containerized like the deployment server. As previously mentioned, TactiCall is a communication system, and it involves, among other things, physical telephone units called OCPs. These OCPs come with a GUI to facilitate the use of the telephony. There are also TSS units, which are of the same type as the OCPs but smaller. The main task of the deployment server is to provide addresses to these units through DHCP and to give them access to TFTP directories, so that they can download the files they need to function, such as application software and operating system software.

Figure 7: Connections in TactiCall.

2.5. VMware Workstation

VMware Workstation is virtualization software that enables virtual machines with different types of operating systems to run on one computer [10]. In VMware Workstation it is possible to create several instances and thus test and run different types of operating systems without affecting the host and its operating system. There is other software that can run virtual machines, such as VirtualBox, but a licensed version of VMware Workstation is already available on the lab computer provided by the company. A RHEL 8.3 server will be installed and configured in VMware Workstation in order to first recreate the functionality of TactiCall and then move that functionality into containers. In short, VMware Workstation will be the experimental environment for the implementation.

2.6. Trivial File Transfer Protocol (TFTP)

TFTP is a file transfer protocol that uses UDP to transfer files over a local network [11, pp.38]. The protocol does not use any authentication for the file transfer and, because of this security limitation, it is most often used to transfer boot files and configuration files within a local network. TFTP will be used in this system to enable file transfer between the deployment server, the management server and the communication units such as OCP and TSS.
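The simplicity of TFTP, including the absence of any authentication field, is visible in the layout of a read request. The Python sketch below builds such a request following the packet format in RFC 1350: a two-byte opcode, the file name, a zero byte, the transfer mode and a final zero byte. The file name `boot.img` and the function name `build_rrq` are hypothetical.

```python
import struct

OPCODE_RRQ = 1  # read request opcode defined in RFC 1350


def build_rrq(filename: str, mode: str = "octet") -> bytes:
    """Build a TFTP read request: opcode, filename, NUL, mode, NUL."""
    return (
        struct.pack("!H", OPCODE_RRQ)  # two-byte opcode in network byte order
        + filename.encode("ascii")
        + b"\x00"
        + mode.encode("ascii")
        + b"\x00"
    )


packet = build_rrq("boot.img")  # hypothetical file name
print(packet)
```

A client would send these bytes in a UDP datagram to port 69 on the server; note that the packet carries no user name or password.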

2.7. Secure Shell Protocol (SSH)

SSH is a protocol developed for, among other things, secure remote connections over a network [12, pp.72]. The deployment server will occasionally need to be accessed remotely, and SSH is therefore configured on it.

2.8. Dynamic Host Configuration Protocol (DHCP)

DHCP is a protocol that handles the assignment of IP addresses to the devices in a network [12, pp.356]. This assignment is based on the parameters set by the administrator. DHCP handles the assignment of both static and dynamic IP addresses in a network. DHCP will be configured on the deployment server to provide IP addresses to the OCP and TSS units so that they can connect to the deployment server.
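The difference between static and dynamic assignment can be sketched in a few lines of Python. This is a simplified model of the address selection only, not of the DHCP message exchange; the class name `LeasePool`, the subnet and the MAC addresses are all hypothetical.

```python
import ipaddress


class LeasePool:
    """Toy address pool: static reservations first, then dynamic leases."""

    def __init__(self, network, reservations=None):
        self.network = ipaddress.ip_network(network)
        self.reservations = dict(reservations or {})  # MAC -> fixed address
        self.leases = {}  # MAC -> dynamically assigned address

    def request(self, mac):
        if mac in self.reservations:  # static assignment set by the administrator
            return self.reservations[mac]
        if mac in self.leases:  # the device keeps its existing lease
            return self.leases[mac]
        taken = set(self.reservations.values()) | set(self.leases.values())
        for host in self.network.hosts():  # first free address in the subnet
            address = str(host)
            if address not in taken:
                self.leases[mac] = address
                return address
        raise RuntimeError("address pool exhausted")


# Hypothetical subnet and device addresses.
pool = LeasePool("192.0.2.0/29", {"aa:aa:aa:aa:aa:01": "192.0.2.1"})
print(pool.request("aa:aa:aa:aa:aa:01"))  # reserved (static) address
print(pool.request("bb:bb:bb:bb:bb:02"))  # first free (dynamic) address
```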


3. Method

The thesis work will be performed in two phases.

3.1. Literature Study

To gather information and knowledge about container-based virtualization, an in-depth literature study was done on the topic and its surrounding areas. Surrounding areas are those that need to be understood in order to perform the thesis work, such as virtualization in general, various container-based solutions and hypervisor-based virtualization. To get an overview of the best approach to recreating TactiCall's deployment server in Docker containers, information was collected from the official Docker documentation. Peer-reviewed reports and studies of the subject area and its surrounding areas were studied in depth to get a clearer picture of what is important to keep in mind when comparing the two techniques. These reports and studies also form the basis for answering the questions formulated for this thesis.

Interest in technology is very large around the world, and there are constant discussions about IT and computer system solutions. The Internet is full of expertise in the IT field, and this has been used to see how other people have perceived the subject area and how they have gone about solving similar tasks. It has also been very helpful in troubleshooting and at times when additional information was needed to complete a certain task. The study therefore also included a number of websites, e.g., Stack Overflow, GitHub and Quora, as well as various technical blogs where people have shared their work and the problems they have encountered with similar work. Säfsten and Gustavsson write in their book “Forskningsmetodik för ingenjörer och andra problemlösare” that a good foundation for research always starts with the researcher creating an in-depth understanding of the problem area in question in order to then handle it in a suitable scientific way [13, pp.112]. Thus, an in-depth review of relevant literature was conducted first to gain an increased understanding and qualitative data. The final goal here was to see whether containers are in fact a better solution and whether it therefore makes sense to adopt them.

3.2. Proof of Concept Implementation

This part of the thesis is the practical part, in which a proof of concept is carried out. In this step, system development is used as the method. System development is a research methodology used in engineering and applied data science [14]. The method is, as the name suggests, used to develop a system. It is about proving the usefulness of a solution that is based, among other things, on new solutions or techniques. Figure 8 shows the main steps that this method follows. In this case, TactiCall's deployment server will first be moved from an old server to the latest version of RHEL. The next step is to test this development and see that it meets the system requirements, and then to develop it further so that its services run in containers. The final goal here was to see whether the switch can be made for this particular system with its specific features, requirements and shortcomings. Several tests have been performed to check the functional requirements.


4. Ethical and Societal Considerations

4.1. Ethical Considerations

In this work, the implementation will be done on physical hardware from which all information that is sensitive to SAAB has been deleted. It is extremely important to understand that SAAB delivers products ranging from military defence to civil security, and that information of this type is sensitive and has a major impact on several parties. It is thus my responsibility to follow the ethics of duty. In the book “Forskningsmetodik för ingenjörer och andra problemlösare”, Säfsten and Gustavsson explain that the ethics of duty is a guide to which actions are right to perform in relation to the duties one has [13, pp.242]. According to Säfsten and Gustavsson, this formulation of duty ethics applies to, among others, citizens, employees and various professional groups. This means that each job comes with duties and obligations, and it is extremely important that the person performing the job follows them. Together with SAAB, I have completed and will continue to complete internal safety training that gives me more knowledge about how to handle this work based on my responsibility and duty to the company. Some may also consider it an ethical issue that SAAB deals with military products. The results of this work will be published publicly, which may affect passive parties, here meaning container and VM providers. If the results are taken seriously by businesses that are considering switching from the traditional VM solution to a container solution, they can influence those businesses' decisions. For example, results showing that containers are a better virtualization solution can have a positive impact on container providers. It is important to understand that this work has been done transparently and that there is no intention to influence any passive party in any way. The purpose of this work is to compare the two virtualization methods in order to provide the best conditions for SAAB. The work is driven by curiosity and focuses on exploring the challenges and opportunities that may come with moving from VMs to containers.

4.2. Societal Considerations

Costs such as energy consumption are part of the discussion when choosing a virtualization method. Global warming is a hot topic all around the world, as is the question of what steps must be taken to reduce environmental impact. A transition from traditional VMs to containers may reduce energy consumption, as containers are said to consume less energy than hypervisor-based virtualization [15]. If the result of this work backs up this claim, it may affect energy consumption, perhaps not drastically, but it would still be an improvement. Costs for the company may also decrease with the transition from VMs to containers, as hardware resources, space and energy consumption are reduced.


5. Containers vs VMs – Literature Study

Container-based virtualization is a fairly recent method compared with the hypervisor-based method, which has been around for a while. The container-based solution has undoubtedly awakened great interest around the world and has become a hot topic. Many questions have been asked about whether containers will replace VMs, whether they are faster, perform better, are more secure, etc. This has resulted in several comparative studies between these two technologies [16] [17] [18] [19]. The company is interested in aspects such as performance, security and scalability, and in whether containers are better than VMs in these respects. A comparison between the two methods is therefore in the company's interest, to get a clearer picture of which method suits the company best. Comparative studies of these aspects will give a clearer picture of whether containers are a better solution than VMs. The comparative studies will also answer the first two questions in the problem formulation of this thesis. The studies are based on cases that are relevant to the company's interest.

5.1. Performance

When talking about performance, several factors are involved, such as overhead, scalability, and CPU, memory, disk and bandwidth performance.

In a comparative study [20], the authors compared the performance and scalability of Linux containers and virtual machines running the same application by measuring overhead and scalability. Overhead is a parameter that shows how many resources the system uses to perform a task, e.g., memory, bandwidth and computation time. It is calculated by measuring the latency and data flow that occur when a task is performed. Latency is the time interval from when an event occurs in the system until the system actually responds to it. Scalability is of interest in this study because it is important to know how the system reacts in the event of a failure, where it quickly needs to scale up or down to maintain its functionality.

The performance comparison was done using a Joomla application running on a front-end application server and a PostgreSQL database running on a back-end server. Joomla is an open-source content management system that uses PHP to build websites and applications. The database was used to store and retrieve data from the Joomla application, which was filled with test data. JMeter, a tool that measures and analyzes the performance of web applications, was used to stress test the application by sending as many messages as possible to the Joomla application within 10 minutes. Messages were sent until the servers began to experience performance disruptions. The authors concluded that the Docker container could process significantly more messages in 400 seconds than the virtual machine, namely three times as many (see Figure 9). Moreover, the virtual machine took much longer than the container to process a single request. According to the authors, this was because the virtual machine was less consistent in its performance than Docker, even though it uses physical memory. They believe that when the load on the server in the virtual machine increased, the physical memory alone was no longer sufficient and the host started to swap memory between physical memory and the hard disk (see Figure 10).

To test the scalability of the system, JMeter was used again to trigger scaling by pushing CPU utilization above 80%. The results showed that the container took much less time than the virtual machine to adapt to this change and scale up. The virtual machine took about 3 minutes to scale up while the container took 8 seconds. This means that the container scaled about 22 times faster than the virtual machine (see Figure 11).
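The scaling factor quoted above can be checked with a short calculation. The sketch below assumes only the rounded durations given in the text (about 3 minutes for the virtual machine, 8 seconds for the container); the function and variable names are illustrative and do not come from the study.

```python
def speedup(slow_seconds: float, fast_seconds: float) -> float:
    """Return how many times shorter the fast duration is than the slow one."""
    if fast_seconds <= 0:
        raise ValueError("fast_seconds must be positive")
    return slow_seconds / fast_seconds


# Rounded values as quoted in the text (assumption: "about 3 minutes" = 180 s).
vm_scale_up_s = 3 * 60
container_scale_up_s = 8

ratio = speedup(vm_scale_up_s, container_scale_up_s)
print(f"The container scaled up about {ratio:.1f} times faster than the VM")
```

With these rounded inputs the ratio is 22.5, which matches the factor of roughly 22 reported above.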

Figure 9: Number of requests processed within 600 seconds (Figure borrowed from [20, p. 345]).


Figure 11: Scalability comparison between the container and the VM (Figure borrowed from [20, p. 345]).

S. Soltesz et al. [21] conducted a similar comparative study between container-based and hypervisor-based virtualization techniques. The study examined the conditions under which a container solution would be more appropriate than a hypervisor. It showed that where performance and scalability are important, the container-based solution is the more suitable alternative. This is because the container-based system turned out to perform twice as well in terms of disk, CPU, bandwidth and memory performance, and also scaled up significantly faster than the hypervisor-based system.

N. Naik [22] compared the performance of a cloud-based distributed system in Docker containers and in virtual machines. In this study, a distributed system containerized with Docker Swarm was compared with a virtualized distributed system. The containerized distributed system was simulated using Docker Swarm, Redis and nginx. Docker Swarm is the orchestration tool built into Docker that groups several Docker daemons into a cluster of nodes. Nginx is an HTTP web server. Redis stands for Remote Dictionary Server and is an open-source, in-memory key-value database, cache and message broker. The Docker Swarm nodes were set up in VirtualBox on Mac OS X. The virtualized distributed system was simulated in a similar way: an identical environment with the same number of nodes was set up, using VirtualBox, Ubuntu, nginx and Redis, to give a fair comparison between the two techniques. The results showed that the containerized distributed system used significantly fewer resources and had less overhead than the virtualized system (see Figure 12). The author states that this may be due to the architecture of the respective systems. Docker Swarm has features that facilitate operation as a distributed system, whereas virtual machines are independent of each other and isolated, so extra resources are required to coordinate them as a distributed system.

Figure 12: Resources and operational overhead for the VM vs Docker Swarm in a distributed system (Figure borrowed from [22, p. 7]).

P. Sharma et al. [23] compared the performance of containers and virtual machines in large data center environments. The aim was to investigate what can cause performance interference for the two techniques. Performance and interference were measured for CPU, memory, disk and network workloads. The results showed that in multi-tenant scenarios, containers are affected by performance interference more than virtual machines, because containers share the same OS kernel. In this respect, VMs outperform containers. However, it was also found that containers have soft limits on resource use. Soft limits mean that applications are allowed to use resources beyond those originally allocated to them. This is beneficial when some applications under-utilize their resources while others need more to function. This is not possible for VMs, which have hard limits: only the allocated resources can be used. Containers are thus more efficient in scenarios where overcommitment is important, because they utilize resources more efficiently.
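The difference between hard and soft limits described above can be illustrated with a toy model. This is only a sketch under simplifying assumptions: resources are a single number (CPU cores), spare capacity is handed out in list order, and the function names are hypothetical. It is not how a hypervisor or the kernel actually schedules resources, and it is not taken from [23].

```python
def grant_hard(demands, allocations):
    """Hard limits: each application gets at most its own allocation."""
    return [min(d, a) for d, a in zip(demands, allocations)]


def grant_soft(demands, allocations):
    """Soft limits: allocation left unused by one application is lent to others."""
    granted = grant_hard(demands, allocations)
    spare = sum(allocations) - sum(granted)
    for i, demand in enumerate(demands):
        extra = min(demand - granted[i], spare)
        granted[i] += extra
        spare -= extra
    return granted


# Two applications, each allocated 2 CPU cores (illustrative numbers).
allocations = [2.0, 2.0]
demands = [1.0, 3.5]  # the first under-utilizes, the second wants more

print("hard limits:", grant_hard(demands, allocations))
print("soft limits:", grant_soft(demands, allocations))
```

In this example the second application is capped at its 2 allocated cores under hard limits, while under soft limits it can also use the core that the first application leaves idle.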

5.2. Portability

In the context of virtualization, portability is the ability to move an application from one host environment to another [24]. Such a move may involve moving an application to another operating system, for example from Windows to Linux, or to a different version of the same operating system, for example from Ubuntu 16.04 to 18.04. In the easiest case, the application is moved to the other environment without any changes. In some cases the move is more difficult, and some files or source code need to be modified for the application to work as usual. In the worst case, the application cannot be moved at all because its functionality is not supported in the other environment. Today's businesses have a great interest in being able to move their applications between environments when desired. Originally, portability was achieved by configuring systems so that they were independent of any specific operating system or tool, and then saving the configuration in plain text files so that it could be deployed elsewhere. Virtual machines became another way of achieving portability, as applications can simply be run on a virtual machine. This means, for example, that an application that originally could only run on Linux can be run on a Windows server with the help of a VM. The limitation of this solution is that deploying the application depends on a specific guest operating system.

With Docker containers, high portability can be achieved because containers do not rely on VMs and applications do not need to be modified when they are moved to another environment [25]. Docker follows a so-called "Build once, run everywhere" concept: the application is built and placed in a Docker image, which can then be saved and shipped to another environment. In the new environment, the image only needs to be loaded and run. This is possible because the application's configuration files and binaries are stored inside the container and are independent of variables outside it. In short, with containers the application only needs to be built once and placed inside an image in order to run on any host environment, as long as the host supports Docker and belongs to the same operating system family. For example, an application can be built in a Docker container on a RHEL 8.3 server and then moved to an Ubuntu 20.04 server without any modification, because it is based on the container image. Before containers, it was much more difficult to move an application from one Linux distribution to another without building new packages for it. For example, the RPM packages used by a RHEL server could not be installed on an Ubuntu server, which uses Debian packages. Containers thus simplify this process.

However, Docker containers come with a limitation: they do not support porting across different operating systems [24] [25]. A containerized application running on Windows cannot be moved to Linux, or vice versa. In this particular respect, Docker containers are less portable than VMs, since a Windows-based VM can run on a Linux host and vice versa. It is also not possible to move a Docker containerized application to macOS or Android. To improve portability, Docker launched LinuxKit in 2017, a tool that enables the creation of what Docker calls the "Linux subsystem". The Linux subsystem offers what might be considered maximum portability, but only for Linux applications: with LinuxKit, a Linux-based application can run in a container on a host that actually runs another type of operating system. So far, it is not possible to run a Windows application in a container running a Linux operating system.

5.3. Security

When the security of VMs and containers is compared, containers are considered to be more vulnerable [22]. This is due to a number of factors, one of which is isolation, where virtual machines are known to be stronger.

Kernel exploitation: Both VMs and containers isolate applications from each other, which means that if one application fails, the other applications are not affected [26]. The difference is that containers share the host's kernel and operating system and thus share the same resources. If one container is compromised, all other containers on the same host are exposed. A single small bug in the host's kernel that is exploited from within a container can result in large data loss, as all other containers on the host risk being affected. Unlike containers, VMs do not share the same operating system, and the host's kernel remains isolated. In order to damage the host kernel, an application must first get through the kernel of the VM and then break through the hypervisor layer to reach the host kernel. This is much more difficult than with containers, which all share the same host OS and thus interact with it directly. The isolation does not allow any direct interaction between an application and the hardware, so a failure of or an attack on a guest does not affect the host's kernel. Several measures can be taken to counter attacks that result from the weaker isolation of containers. Such measures include keeping only mutually trusted containers on the same machine and ensuring that root privileges are not used by untrusted containers. Turning off communication between containers that do not need to communicate with each other reduces the risk of attacks and their impact. It is also important to install only what is necessary, so that malicious software is not accidentally downloaded to the system.

Denial of Service (DoS) Attacks: Weak isolation is a factor that can also lead to a DoS attack. By gaining access to multiple processes on the host kernel, an attacker can easily perform a DoS attack [27]. Thus, containers are more vulnerable to DoS attacks than VMs. Another factor that makes containers more vulnerable to DoS attacks is their resource limits. By default, Docker does not enforce resource limits, so a container may use as many resources as the host allows. If a container breaks out, it can take control of the host's resources and lock them away from the other processes, which results in a DoS as those processes are starved of resources. One solution is to allocate a fair share of resources such as disk I/O, memory and CPU to each container. This avoids scenarios in which a single container takes over all resources, along with the attacks that exploit them.

Infected Images: As previously mentioned, containers are built from images. Images can be created by the user or downloaded from Docker's official registry, Docker Hub [26]. Docker Hub offers several million images that can be downloaded and deployed directly. The problem is that Docker does not authenticate these downloaded images, which means that an attacker may have filled an image with malicious code to gain access to the system. Another vulnerability arises when outdated images are used. One solution is for the user who downloads an image to verify it manually before it is imported and run in the system.

Deployment knowledge: Another reason why VMs are considered safer is that they have been in deployment for a longer time, so their attack surface and vulnerabilities are well understood. Container solutions are relatively new, more like unknown ground, and are thus less predictable when it comes to the attack surface.

The conclusion from the comparative studies is that containers appear to be a better alternative than VMs in terms of performance and scalability, and are even more portable. The scenarios in which containers are more portable than VMs are those that involve Linux. TactiCall's deployment server is always intended to run on Linux-based distributions and is thus not affected by the limitation that containers have when porting to other operating systems. In terms of security, isolation is a factor where VMs are considered safer, as applications are completely separated from each other and from the OS kernel, while containers share the same kernel. Container security can be strengthened by identifying the risks early in the creation process and taking action to counteract them. Containers can be made safer by keeping the system up to date, exposing only the necessary ports and setting the correct permissions. Containers are definitely worth a try for the company, as they come with several advantages that can benefit the company and its IT systems.


6. From A Virtual Machine to Containers - Proof of concept implementation

The comparative study has shown that containers come with several advantages and that it is worthwhile for SAAB to test whether containers can be run on one of its subsystems, namely TactiCall. Only one part of the TactiCall system is tested, the deployment server. One of the concerns in this work is that the deployment server is an old server running CentOS 5.5, a version released in 2007. It is not favorable for the company to run containers on the current deployment server, partly because its life cycle is coming to an end: it is expected to be supported until June 2024. Another factor is that SAAB has the vision to always be at the forefront of today's technology, and running containers on an old server does not fit that vision. The server was delivered to the company and put directly into operation. The question is therefore whether this server and its functionality can be recreated on a RHEL 8.3 server, which is the latest version of RHEL at the time of writing. Unless the functionality of the server can be recreated, containers should not be created. In this thesis, SAAB's current deployment server is called TactiCall's deployment server, while the server created for this work is called the RHEL deployment server, as it is based on RHEL.

The requirements for this thesis are based on the functionality of TactiCall's deployment server. One requirement is that the RHEL deployment server can communicate with the other part of TactiCall, namely the management server. The management server is the GUI part of the system: it shows the user the information retrieved from the server and allows the user to perform necessary tasks. Another requirement is that the RHEL deployment server can assign addresses to specific devices based on their physical address and allow these devices to retrieve the necessary boot files at startup. This means that specific services and protocols must be present on the RHEL deployment server for it to work like TactiCall's deployment server. A further concern is the file structure on TactiCall's deployment server, which must be recreated on the RHEL deployment server for file management to work. These requirements can only be met once TactiCall's deployment server has been recreated. It is therefore necessary to first investigate what this server actually does, how it is built up and what services it uses. The next step is to recreate the server's functionality, after which containers can be created. In short, the main question is whether TactiCall's deployment server can be recreated from scratch, moving from CentOS 5.5 to a RHEL 8.3 server, and verified to work as desired.

Another concern regarding containers is networking. Docker containers come with several networking options, and the question is what limitations and challenges arise when containers are deployed in the company's network. This concern stems from the fact that the services no longer run directly on the host but inside multiple containers. The question is what challenges arise when communication takes place between these containers and the host, and between the containers and the outside network.

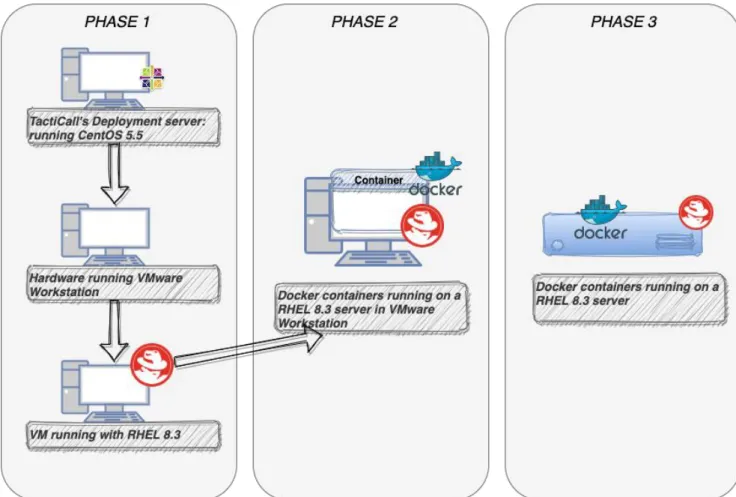

The implementation part of this thesis project was divided into three phases, which facilitate reaching the objective of this work: to recreate TactiCall's functionality in containers (see Figure 13).


Phase 1 will be about recreating TactiCall's functionality in a RHEL 8.3 server and testing that it works as intended.

Phase 2 is the phase where Docker, more specifically Docker Compose, will be set up and tested. This is done on the same virtual machine as in Phase 1.

Phase 3 will be about deploying the container in the company's offline network environment.

Figure 13: The phases into which the implementation is divided.

6.1. Phase 1: Functionality recreation

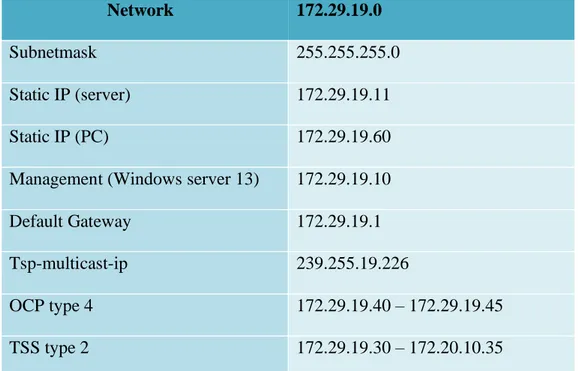

Since the company's network information must be kept hidden from the public, an experimental environment was set up in VMware Workstation, running on a computer with Windows OS, in which a RHEL 8.3 server was installed (see Figure 14). The challenge in this phase was to figure out which parts constitute the functionality of TactiCall's deployment server and to recreate them on the RHEL 8.3 server. The RHEL 8.3 server was configured to handle DHCP, TFTP and SSH services. These services appear to be the building blocks of TactiCall's deployment server and are critical for recreating its functionality.


| Parameter | Value |
| --- | --- |
| Network | 172.29.19.0 |
| Subnet mask | 255.255.255.0 |
| Static IP (server) | 172.29.19.11 |
| Static IP (PC) | 172.29.19.60 |
| Management (Windows server 13) | 172.29.19.10 |
| Default Gateway | 172.29.19.1 |
| Tsp-multicast-ip | 239.255.19.226 |
| OCP type 4 | 172.29.19.40 – 172.29.19.45 |
| TSS type 2 | 172.29.19.30 – 172.29.19.35 |

Figure 14: Network configuration.
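The addressing plan in Figure 14 can be sanity-checked with Python's standard ipaddress module. The sketch below is illustrative only: the dictionary keys and helper names are made up for this example, and only addresses listed in Figure 14 are used.

```python
import ipaddress

# Network and subnet mask as listed in Figure 14.
network = ipaddress.ip_network("172.29.19.0/255.255.255.0")

# Static addresses from Figure 14 (the labels are shortened for this example).
hosts = {
    "deployment server": "172.29.19.11",
    "PC": "172.29.19.60",
    "management server": "172.29.19.10",
    "default gateway": "172.29.19.1",
}


def outside_network(addresses, net):
    """Return the names whose address does not belong to the given network."""
    return [name for name, addr in addresses.items()
            if ipaddress.ip_address(addr) not in net]


def expand_range(first, last):
    """List every address from first to last, inclusive."""
    start = int(ipaddress.ip_address(first))
    end = int(ipaddress.ip_address(last))
    return [str(ipaddress.ip_address(n)) for n in range(start, end + 1)]


ocp_addresses = expand_range("172.29.19.40", "172.29.19.45")
multicast_ok = ipaddress.ip_address("239.255.19.226").is_multicast

print("hosts outside the network:", outside_network(hosts, network))
print("OCP addresses:", ocp_addresses)
print("multicast address valid:", multicast_ok)
```

A check of this kind is a cheap way to catch a mistyped octet before the values are copied into the DHCP configuration.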

6.1.1. RHEL deployment Server

Initially, the company required the containers to be built on the latest Ubuntu version, which at the time was Ubuntu 20.04. Ubuntu is a free, open-source Linux distribution based on Debian. The company's current deployment server comes in Ubuntu version 16.04. It would therefore have been possible to import this server into VMware and, with internet access, update it to version 20.04. This is done in two steps, first from 16.04 to 18.04 and then from 18.04 to 20.04, to minimize the changes that major releases can cause in the system. The advantage of this approach is that I would not have had to recreate the functionality of the deployment server and could have proceeded directly to building containers. However, this plan was changed: the company decided that it was more interested in a container solution on the latest RHEL version, which at the time was 8.3. The choice of RHEL 8.3 is based on the benefits presented in section 2.3 Red Hat Enterprise Linux. The change in the company's requirements for the Linux distribution also changed the implementation steps: the functionality of the deployment server must first be recreated and tested before it is possible to proceed with building containers.

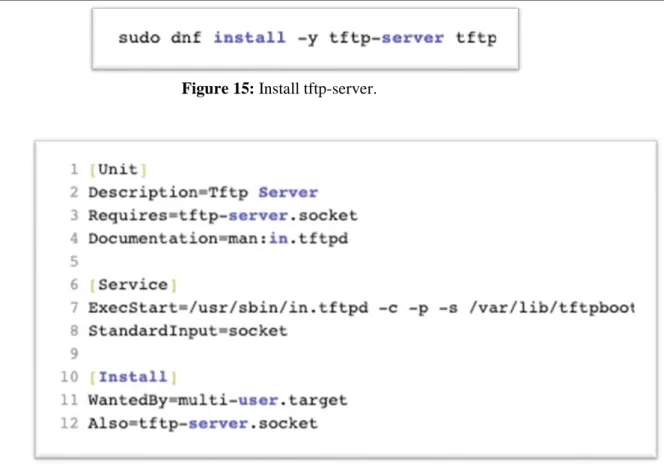

6.1.2. TFTP Server

The TFTP server package is available for download from the RHEL BaseOS repository using the dnf command (see Figure 15). Linux distributions based on the Red Hat Package Manager (RPM) use a software package manager such as dnf to update, install and remove packages. After the TFTP server package is installed, the /etc/systemd/system/tftp-server.service file is edited to contain the values shown in Figure 16.


Figure 15: Install tftp-server.

Figure 16: tftp-server.service file.

The ExecStart=/usr/sbin/in.tftpd -c -p -s /var/lib/tftpboot line is used to run the TFTP server daemon. The -c option allows new files to be created. Creating new files through TFTP can cause permission issues on the files and directories, and the -p option is used to resolve these issues. The -s option sets the TFTP server's root directory, which in this case is the default /var/lib/tftpboot. After the file has been changed to the correct parameters, the TFTP server can be started and enabled with the commands shown in Figure 17. To be able to download and upload files through the TFTP server, the correct permissions must be set on the /var/lib/tftpboot directory and TFTP traffic must be allowed through the firewall (see Figure 18). The last step is to verify that the TFTP server daemon is up and running (see Figure 19). In order for TFTP to work as intended, a script was created to set up the necessary directories and the links between them (see appendix A). A user called Tacticall was also created to access the file structure created by the script. Note that the script must be run as root to avoid issues with the permissions required by certain commands. The script creates the same file structure, with the same permissions, as TactiCall's deployment server appeared to have.
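To make the role of the TFTP server more concrete, the sketch below builds the read request (RRQ) that a client sends when it asks for a file, following the packet layout in RFC 1350: a two-byte opcode, the file name, a zero byte, the transfer mode and a final zero byte. The file name boot.img is a hypothetical placeholder, not a file from TactiCall, and the helper names are made up for this example. The code only constructs and parses the packet; it does not send anything on the network.

```python
import struct

OP_RRQ = 1  # opcode for a read request in RFC 1350


def build_rrq(filename: str, mode: str = "octet") -> bytes:
    """Build a TFTP read request: opcode, filename, NUL, mode, NUL."""
    return (struct.pack("!H", OP_RRQ)
            + filename.encode("ascii") + b"\x00"
            + mode.encode("ascii") + b"\x00")


def parse_rrq(packet: bytes):
    """Return (filename, mode) from a read request, or raise ValueError."""
    (opcode,) = struct.unpack("!H", packet[:2])
    if opcode != OP_RRQ:
        raise ValueError("not a read request")
    filename, mode, _ = packet[2:].split(b"\x00")
    return filename.decode("ascii"), mode.decode("ascii")


# Hypothetical boot file name, used only for illustration.
request = build_rrq("boot.img")
print(request)
print(parse_rrq(request))
```

The server resolves the requested name relative to the root directory given with the -s option, which is why the directory structure and permissions under /var/lib/tftpboot matter.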


Figure 18: TFTP permissions and allow TFTP Traffic.

Figure 19: TFTP Server status.

6.1.3. DHCP Server

Like the TFTP server, the DHCP server is installed from a package in the RHEL BaseOS repository using the dnf command (see Figure 20). The DHCP server runs according to the configuration in the /etc/dhcp/dhcpd.conf file, which specifies the network and the addresses that the DHCP server serves (see appendix B). After the changes are made in the dhcpd.conf file, the DHCP server can be enabled and started. DHCP traffic must also be allowed through the firewall (see Figure 21). The DHCP server is up and running, listening on interface ens160, which is activated on the server and has the static IP address 172.29.19.11 (see Figure 22).
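Assigning a fixed address to a device based on its physical (MAC) address is expressed in ISC dhcpd configuration files with a host declaration containing hardware ethernet and fixed-address statements. The sketch below renders such a declaration from Python. It does not reproduce the configuration in appendix B: the host name and the MAC address (taken from the range reserved for documentation) are assumptions made for illustration, and the IP address is simply the first one in the OCP range of Figure 14.

```python
def host_declaration(name: str, mac: str, ip: str) -> str:
    """Render one dhcpd host block that pins an IP address to a MAC address."""
    return (f"host {name} {{\n"
            f"  hardware ethernet {mac};\n"
            f"  fixed-address {ip};\n"
            f"}}\n")


# Hypothetical device: name and MAC address are placeholders.
stanza = host_declaration("ocp1", "00:00:5e:00:53:01", "172.29.19.40")
print(stanza)
```

One declaration of this kind per device keeps the mapping between physical address and IP address explicit and easy to review.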

Figure 20: Install DHCP Server.


Figure 22: DHCP Server Status.

6.1.4. SSH Server

Installing an SSH server is as simple as downloading the package from the RHEL BaseOS repository, allowing SSH traffic through the firewall, and enabling and starting the daemon (see Figure 23). The SSH daemon is up and running, listening on port 22 (see Figure 24). This phase was then tested by connecting an OCP to the server and verifying that it obtained an IP address within the range configured in the dhcpd.conf file. The device could also access the necessary files via TFTP and was able to boot up and start as desired.


Figure 24: SSH Server Status.

6.2. Phase 2: Docker Setup

The choice to build containers with Docker was made in dialogue with the company. The choice was between Docker and Kubernetes, which orchestrates containers across clusters. In this task a single node is containerized, so Kubernetes was ruled out. Kubernetes can be of interest when the company wants to move several systems to containers and build a cluster of them. This choice was made at an early stage, before the Linux distribution was changed from Ubuntu 20.04 to RHEL 8.3. For several reasons, Red Hat has decided to remove the Docker container engine, together with all docker commands, from RHEL 8. Instead, RHEL offers tools such as podman and buildah to build and run containers without a container daemon. These tools aim to be compatible with Docker images and functionality. However, an equivalent to docker-compose does not yet exist for these tools. Although Docker is not supported by RHEL 8, it can still be downloaded from other sources such as an external repository.

Docker is available in two versions, Docker Community Edition (CE) and Docker Enterprise Edition (EE) [28]. Both versions come with the same core functionality and offer roughly equivalent features for building containers. However, Docker EE offers more support, flexibility and security for enterprises that require them. This work is primarily a proof of concept for testing Docker, so Docker CE is considered appropriate. Downloading Docker CE requires that RHEL 8 is up to date, that an internet connection is available and that commands are run with sudo or with root privileges. It is important that RHEL's container tools podman and buildah are removed before Docker is installed, because they conflict with Docker and prevent it from working as intended.

To install Docker CE correctly for it to work on a RHEL 8.3 server, the following steps were performed:

1) Install Docker CE Official Package Repository
2) Install Containerd.io
3) Install Docker CE
4) Install Docker Compose


6.2.1. Create Dockerfile and docker-compose.yml

As previously mentioned in section 2.2.2 Dockerfile, a Dockerfile is used by Docker to build images, from which containers are created, while Docker Compose is an orchestration tool used by Docker to run multiple containers simultaneously. Docker Compose uses a YAML file to define these containers. TFTP and DHCP are the services that form the building blocks of TactiCall's deployment server and must each be deployed in its own container. Appendix C contains the Dockerfile that was used to create the Docker image, and appendix D contains the YAML file used by Docker Compose to run the TFTP and DHCP services in multiple containers.

6.2.2. Testing – functionality

After the files had been created, the next step was to build and run the containers with their services. To test that the RHEL server with its containers meets the functionality requirements, an OCP unit was connected to the server, and it was verified that it received an IP address from the DHCP container and could retrieve its boot files from the TFTP container. The end goal of this phase was to recreate the functionality of TactiCall's deployment server on a RHEL deployment server where the services run in containers. This phase has thus shown two things: that it is possible to recreate the functionality of TactiCall's deployment server, and that it is possible to run its services in containers on this server.

6.3. Phase 3: Docker in Offline Environment

So far, it has been confirmed that the functionality of TactiCall's deployment server can be recreated on a RHEL server and that its services can run in multiple Docker containers. However, phase 1 and phase 2 were carried out on virtual machines, and the purpose of this work is to replace virtual machines with containers. This phase therefore repeats the steps of phases 1 and 2 on a physical server that is integrated in the company's network. TactiCall's deployment server was first recreated, and its services were then placed inside Docker containers. Apart from being done on a physical server, this phase differs from phase 2 in that the installation of all packages had to be done offline, as the company's network is not connected to the internet. An advantage mentioned earlier is the portability that containers offer within the same OS family. To rebuild the containers, all that was really required was to install Docker and Docker Compose offline and then import the images created in phase 2. Based on the images from phase 2, the containers could thus be rebuilt with a single simple build command, which again highlights the benefits of container portability. Briefly, the following steps were taken offline:

• Recreate TactiCall's deployment server in the physical server
• Uninstall podman, buildah and other RHEL tools for containers
• Install Docker Offline
• Install Docker Compose Offline
• Build from Images


6.3.1. Final functionality test

Testing in this phase determines whether the proof of concept is successful. Up to this phase, it has been possible to create a functional recreation of TactiCall's deployment server, and this recreation has also worked with multiple containers running on it. On the physical RHEL server, Docker was successfully installed, and it was verified that a TFTP and a DHCP container were running on the server. The server was configured to access the corporate network with the correct IP address and parameters. Testing took place in the same way as in phase 1 and phase 2: an OCP was connected to the server to verify that it receives an IP address from the DHCP container and can retrieve its boot files from the TFTP container. However, the first test did not go as expected, as the OCP unit did not get an IP address from the DHCP container. What differs between the TFTP container and the DHCP container is that TFTP uses multicast while DHCP uses broadcast. The problem arises from the way the Docker network is set up. A container can use several types of networks, such as bridge, overlay, macvlan and host networks. When Docker is installed, it creates a default bridge network that each new container automatically joins unless otherwise specified. It is important to choose the right network for successful communication between the host and the containers. For DHCP broadcast to work, the Docker containers were configured in host network mode, which means that the containers are not assigned IP addresses of their own but are attached directly to the host's network. The motivation is that the host is within the corporate network, and the containers should also be within this network so that it is possible to communicate with them.

Another thing that needed to be considered is port exposure. It is important to publish the ports to be used, as Docker by default does not expose the ports of a container unless specified. When ports are published, Docker creates a firewall rule that maps the host port to the container port. For example, the option -p 8080:80/udp maps port 8080 on the host to UDP port 80 in the container. After the correct network parameters had been set, communication took place as desired. The OCP unit receives an address via DHCP and can retrieve its boot files from the TFTP container. This is a proof of concept that TactiCall's deployment server can be recreated on a RHEL server of the latest version and run as a container solution instead of today's hypervisor-based solution.
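The port mapping syntax mentioned above can be made explicit with a small parser. This is a minimal sketch that handles only the short HOST:CONTAINER[/PROTOCOL] form; the real option also accepts an IP address prefix and port ranges, which are deliberately left out. The class and function names are hypothetical and not part of Docker.

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host_port: int
    container_port: int
    protocol: str


def parse_port_mapping(spec: str) -> PortMapping:
    """Parse 'HOST:CONTAINER[/PROTO]'; the protocol defaults to tcp."""
    ports, _, protocol = spec.partition("/")
    host, sep, container = ports.partition(":")
    if not sep:
        raise ValueError(f"expected HOST:CONTAINER, got {spec!r}")
    return PortMapping(int(host), int(container), protocol or "tcp")


mapping = parse_port_mapping("8080:80/udp")
print(mapping)
```

Such mappings apply to bridge-type networks. In host network mode the container shares the host's network stack, so its ports are reachable on the host directly and published port mappings have no effect.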

Measurements were made to see the difference in start and stop times between the containers and the virtual machine. Start and stop times are the times it takes for each solution to start and to shut down, respectively. Five measurements were made for each parameter, and Figure 25 shows the average results of these runs. Both containers were significantly faster to start up and shut down than the virtual machine. One reason is that a virtual machine first needs to boot its own copy of the OS, unlike a container, which can be started with a single command. Another factor that affects start and stop times is size: the containers are only a few megabytes in size, while virtual machines are several gigabytes. This makes containers faster to start and shut down. CPU usage was also measured, in percent (see Figure 26). The results showed that the two containers together use 45% less CPU than the virtual machine running the same two services.

Figure 25: Start/Stop time containers vs VM.

6.3.2. Discussion

The first goal of this section has been to reach a successful proof of concept showing that it is possible to recreate the functionality of TactiCall's old deployment server on a RHEL deployment server of the latest version. This has been important in order to be able to continue the work of testing the container solution for this deployment. The container solution has been implemented and tested. The results show that it is possible to recreate the functionality of TactiCall's old deployment server on a new RHEL server with a container solution. The network for each container must be specified correctly for communication to be possible between the containers and the outside network.

The two research questions about the differences between containers and VMs in terms of performance, security, portability and scalability have been answered. The literature study on the benefits and drawbacks of VMs and containers shows that container solutions come with significant performance improvements and seem to be a better alternative than VMs for scalability and portability. Containers are more portable than VMs when porting within the same type of OS family. The performed measurements show that containers start and stop significantly faster and use less CPU resources than VMs, which supports the conclusions drawn earlier from the literature study. Developing a prototype that runs a container-based solution with the same functionality as the old hypervisor-based prototype proves that the migration of the system is possible. This answers the last research question on how the company applications can be recreated to run in a container in the same way as they do on the virtual machine.

However, only a part of TactiCall was considered in the current thesis work. In order to draw a fair conclusion as to whether the company should migrate from VMs to containers, the entire TactiCall system should be migrated, and a corresponding performance analysis should be made. This thesis work serves as a first step in this bigger work. Moreover, it is also recommended that the company identify the security vulnerabilities for TactiCall.

Containers come with several advantages but lose out on security compared to VMs. Security is an aspect that plays a major role for a company like SAAB that works with military products. It is therefore recommended that the company investigate what vulnerabilities there may be for TactiCall with containers and how it can be secured. A worthwhile solution for the company to look at in order to increase security in containers is to combine the container solution and the VM solution. Inside a virtual machine, it is possible to run multiple containers at the same time and take advantage of their benefits while keeping them isolated with the help of the VM's isolation. This will strengthen the security of the containers, as OS isolation is the major security problem for containers. Because containers are relatively small in size and do not have much overhead, this solution should not impair the current performance of the system.

7. Conclusions

The main goal of this thesis work was to look at containers as a possible solution for SAAB’s TactiCall system, which currently uses VMs as its virtualization method. The work started with a literature study needed to get an overall picture of the pros and cons of each solution and whether it is worthwhile for the company to test the container solution. This was answered with the help of several comparative studies between containers and VMs. The conclusion of these comparative studies is that containers outperform VMs in scalability and in parameters such as overhead, latency, bandwidth, memory, CPU and disk usage. A weakness that arises with containers is the isolation, as all containers share the same host OS kernel, which creates vulnerabilities and makes this solution less secure compared to VMs. On the other hand, sharing the OS kernel does make containers better in CPU and resource utilization compared to VMs. When it comes to portability, containers are more portable when moving applications between different environments of the same type of OS family, e.g., from Ubuntu to RHEL. The final conclusion from the comparative studies is that the container solution is a worthwhile solution for the company to test, as it comes with several advantages over VMs.

The next part of the work was to make a proof of concept that it is possible to recreate the functionality of the old deployment server of SAAB’s TactiCall system on a RHEL server of the latest version. The reason for this was that TactiCall's current deployment server is an old CentOS 5.5 system that has reached end of life and needs to be replaced in the near future. Testing the container solution on an old server would not be ideal for the company or for the very concept of utilizing today's new technologies. The conclusion of the whole work is that there is no doubt that the container solution comes with several benefits and is here to stay. These are benefits that SAAB is interested in for developing and expanding the utilization of their system. This thesis work can be seen as a first step in transitioning towards container solutions. As the next possible step, SAAB AB should move all the components of the system and do the necessary performance tests. Only then can a conclusion be drawn about which solution is more suitable for the company. This thesis has partly proven that, in general, the container solution outperforms the VM solution in certain aspects such as scalability, resource and CPU utilization, and performance. It has also been proven that a transition from TactiCall's deployment server to a newer RHEL server running its services in containers is possible.

![Figure 11: Scalability comparison between the container and the VM (Figure borrowed from [20, pp.345])](https://thumb-eu.123doks.com/thumbv2/5dokorg/4443408.107669/19.892.162.678.199.512/figure-scalability-comparison-container-vm-figure-borrowed-pp.webp)

![Figure 12: Resources and operational overhead for the VM vs Docker Swarm in a distributed system (Figure borrowed from [22, pp.7])](https://thumb-eu.123doks.com/thumbv2/5dokorg/4443408.107669/20.892.108.852.181.488/figure-resources-operational-overhead-docker-distributed-figure-borrowed.webp)