Mälardalen University Press Dissertations No. 166

PLANNING AND SEQUENCING THROUGH MULTIMODAL INTERACTION FOR ROBOT PROGRAMMING

Batu Akan

2014

School of Innovation, Design and Engineering

Academic dissertation

which, for the degree of Doctor of Technology in Computer Science at the School of Innovation, Design and Engineering, will be publicly defended on Monday, 8 December 2014, at 09.15 in Gamma, Mälardalen University, Västerås.

Faculty opponent: Professor Bengt Lennartson, Chalmers University of Technology

School of Innovation, Design and Engineering

Copyright © Batu Akan, 2014

ISBN 978-91-7485-175-5 ISSN 1651-4238


Abstract

Over the past few decades, the use of industrial robots has increased both the efficiency and the competitiveness of several sectors. Despite this, robot automation investments are in many cases considered technically challenging. In addition, for most small and medium-sized enterprises (SMEs) this process is associated with high costs. Because SMEs' product lines change continuously, reprogramming costs are likely to exceed installation costs by a large margin. Furthermore, traditional methods for programming industrial robots are too complex for most technicians or manufacturing engineers, so assistance from a robot programming expert is often needed. The hypothesis is that, to make industrial robots more common within the SME sector, the robots should be reprogrammable by technicians or manufacturing engineers rather than by robot programming experts.

In this thesis, a novel system for task-level programming is proposed. The user interacts with an industrial robot by giving instructions in a structured natural language and by selecting objects through an augmented reality interface. The proposed system consists of two parts: (i) a multimodal framework that provides a natural language interface through which the user interacts, and that performs modality fusion and semantic analysis; and (ii) a symbolic planner, POPStar, that creates a time-efficient plan based on the user's instructions. The ultimate goal of this thesis is to make programming a robot as easy as working together with a colleague.

This thesis mainly addresses two issues. The first is a general framework for designing and developing multimodal interfaces. The proposed framework performs natural language understanding, multimodal integration and semantic analysis in an incremental pipeline. It also includes a novel multimodal grammar language, which is used for multimodal representation and for generating semantic meaning. Such a framework makes interaction with a robot easier and more natural. The proposed language architecture makes it possible to manipulate, pick or place objects in a scene through high-level commands. Interacting through simple voice commands and gestures enables the manufacturing engineer to focus on the task itself rather than on programming the robot.

The second issue stems from an inherent characteristic of natural-language communication: instructions given by a user are often vague and may require other actions to be taken before the conditions for applying them are met. To solve this problem, a symbolic planner, POPStar, based on a partial order planner (POP), is proposed. The system takes landmarks extracted from user instructions as input and creates a sequence of actions that operates the robotic cell with minimal makespan. The planner exploits the partial ordering of POP to execute actions in parallel, and it employs a best-first search algorithm to find the sequence of actions that leads to a minimal makespan. It can also handle robots with multiple grippers, parallel machines, and scheduling for multiple product types.



Summary (Sammanfattning)

The use of industrial robots in recent decades has increased efficiency and competitiveness in several sectors. Despite this, investments in robot automation are in many cases considered technically challenging. Moreover, for most small and medium-sized enterprises (SMEs), this process is associated with high costs. Because these companies' product lines change constantly, reprogramming costs are likely to exceed installation costs by a large margin. It is also known that traditional programming methods are considered too complex for the users of these systems, in other words technicians or manufacturing engineers. The hypothesis is that, to make industrial robots more common in the SME sector, the robots should be reprogrammable by technicians or manufacturing engineers rather than by robot programming experts.

This thesis proposes a new system based on task-level programming. The user interacts with an industrial robot by giving instructions in a structured natural language and by selecting objects through an augmented reality interface. The proposed system consists of two parts: (i) a multimodal framework that also contains a natural language interface through which the user interacts, and that performs fusion of different modalities and semantic analysis; and (ii) a symbolic planning algorithm, POPStar, that creates a time-efficient plan from the user's instructions. The main goal of this thesis is to bring robot programming to a stage where working together with the robot is as easy as working with a colleague.

This thesis addresses two questions. The first concerns the development of a framework for designing and developing multimodal interfaces. The general framework proposed in this thesis is designed to perform natural language understanding, multimodal integration and semantic analysis in an incremental pipeline. It also includes a new multimodal language, used for the multimodal representation of information and the generation of semantically correct sentences. The multimodal framework makes interaction with the industrial robot simpler and more natural. The proposed language architecture makes it possible to manipulate, pick up or place objects in a scene through high-level commands. Interaction through simple voice commands and gestures allows technicians or manufacturing engineers to focus on the task itself rather than on programming the industrial robot.

The second question stems from the inherent characteristics of communication in natural language: instructions from users are often vague and may require other actions to be taken before the conditions for applying the user's instructions are met. To solve this problem, a symbolic planner, POPStar, based on a partial order planner (POP), is proposed. The system takes as input landmarks extracted from what the user says or gestures. It then creates a plan, as a sequence of actions, for controlling the robot cell with minimal makespan. The proposed planning algorithm exploits POP's ability to handle partial plans in order to work in parallel, and acts as a best-first search algorithm over the sequences that lead to a minimal makespan. The planning algorithm can also handle robots with multiple grippers, cells containing parallel machines, and scheduling for multiple product types.


Acknowledgments

My journey in Sweden has been a long one, but I have known my co-supervisor Baran Çürüklü for even longer. We have discussed many things, from cameras, guitars, whiskey and Japanese kitchen knives to why the pipes of buildings in Sweden are inside rather than outside, but most importantly lots and lots of research. Lots of questions and ideas went around the room in heated discussions, which I enjoyed very much (most of the time). I could not have written this thesis without his support; in fact, I would not even be here writing these lines.

Many thanks go to my supervisors Lars Asplund and Baran Çürüklü for teaching me a lot of new stuff, for guidance and support, for all the fruitful discussions, and for the company during conference trips. Last but not least, I would like to thank Mikael Ekström for his feedback on this thesis, as well as Stefan Cedergren and Daniel Sundmark for reviewing the PhD proposal.

Many thanks go to Fredrik Ekstrand, Carl Ahlberg, Jörgen Lidholm, Leo Hatvani, Nikola Petrovič and Stefan (Bob) Bygde for all the funny stuff, the humor and the support, and for sharing the office space with me, where working is both fruitful and fun. I owe many thanks to Afshin Ameri for helping me as co-author, co-developer and friend, so thank you, Afshin. I wish to thank the people at IDT: Carola Ryttersson, Malin Åshuvud, Jenny Hägglund, Ingrid Andersson, Susanne Fronnå and Sofia Jäderén, for making life at the department easier for all of us. I would like to thank many more people at this department, Adnan and Aida Čaušević, Aneta Vulgarakis, Antonio Ciccheti, Cristina Seceleanu, Dag Nyström (now I know why the birds sing), Farhang Nemati, Giacomo Spampinato, Hüseyin Aysan, Jagadish Suryadeva, Josip Maraš, Juraj Feljan, Kathrin Dannmann, Luka Lednički, Mikael Åsberg, Daniel Kade, Saad Mubeen, Moris Benham, Radu Dobrin, Séverine Sentilles, Svetlana Girs, Thomas Nolte, Tiberiu Seceleanu and Yue Lu, for all the fun coffee breaks, lunches, parties, whispering sessions and crazy ideas, such as having meta printers that could print printers for printing anything.

I don't know where I would be if it were not for Ingemar Reyier, Johan Ernlund and Anders Thunell. Thank you for helping me with the many technical and theoretical challenges I have faced.

Along the way I picked up lots of new and precious friends, both in and outside the university environment, without whom I believe I could not have continued. Thank you Burak Tunca, Cihan Kökler and Cem Hizli. Thank you to Fanny Ängvall and Anton Janhager for keeping me from going insane in Västerås.

Finally, I would like to express my gratitude to my parents Nimet Ersoy and Mehmet Akan, as well as to my sister Banu Akan, for their unconditional love and support throughout my life.

This project was funded by Robotdalen, VINNOVA, Sparbanksstiftelsen Nya and the EU European Regional Development Fund.

Thank you all!!

Batu Akan
Västerås, December 2014

List of Publications

Papers included in the thesis¹

Paper A: Object Selection Using a Spatial Language for Flexible Assembly, Batu Akan, Baran Çürüklü, Giacomo Spampinato, Lars Asplund, In Proceedings of the 14th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA'09), pp. 1-6, Mallorca, Spain, September 2009.

Paper B: A General Framework for Incremental Processing of Multimodal Inputs, Afshin Ameri E., Batu Akan, Baran Çürüklü, Lars Asplund, In Proceedings of the 13th International Conference on Multimodal Interaction (ICMI'11), pp. 225-228, Alicante, Spain, November 2011.

Paper C: Intuitive Industrial Robot Programming Through Incremental Multi-modal Language and Augmented Reality, Batu Akan, Afshin Ameri E., Baran Çürüklü, Lars Asplund, In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA'11), pp. 3934-3939, Shanghai, China, May 2011.

Paper D: Scheduling for Multiple Type Objects Using POPStar Planner, Batu Akan, Afshin Ameri E., Baran Çürüklü, In Proceedings of the 19th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA'14), pp. 1-7, Barcelona, Spain, September 2014.

Paper E: Towards Creation of Robot Programs Through User Interaction, Batu Akan, Afshin Ameri E., Baran Çürüklü, to be submitted as a journal paper.

¹ The included articles have been reformatted to comply with the PhD thesis layout.


Other relevant publications

Licentiate Thesis

• Human Robot Interaction Solutions for Intuitive Industrial Robot Programming, Batu Akan, Licentiate Thesis, ISBN 978-91-7485-060-4, Mälardalen University Press, March 2012.

Conferences and Workshops

• Scheduling POP-Star for Automatic Creation of Robot Cell Programs, Batu Akan, Afshin Ameri E., Baran Çürüklü, Lars Asplund, 18th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA 2013), IEEE, Cagliari, Italy, September 2013.

• Augmented Reality Meets Industry: Interactive Robot Programming, Afshin Ameri E., Batu Akan, Baran Çürüklü, SIGRAD, Svenska Lokalavdelningen av Eurographics, pp. 55-58, Västerås, Sweden, 2010.

• Incremental Multimodal Interface for Human-Robot Interaction, Afshin Ameri E., Batu Akan, Baran Çürüklü, 15th IEEE International Conference on Emerging Technologies and Factory Automation, pp. 1-4, Bilbao, Spain, September 2010.

• Towards Industrial Robots with Human Like Moral Responsibilities, Baran Çürüklü, Gordana Dodig-Crnkovic, Batu Akan, 5th ACM/IEEE International Conference on Human-Robot Interaction, pp. 85-86, Osaka, Japan, March 2010.

• Towards Robust Human Robot Collaboration in Industrial Environments, Batu Akan, Baran Çürüklü, Giacomo Spampinato, Lars Asplund, 5th ACM/IEEE International Conference on Human-Robot Interaction, pp. 71-72, Osaka, Japan, March 2010.

• Object Selection Using a Spatial Language for Flexible Assembly, Batu Akan, Baran Çürüklü, Giacomo Spampinato, Lars Asplund, SWAR, pp. 1-2, Västerås, September 2009.

• Interacting with Industrial Robots Through a Multimodal Language and Sensory Systems, Batu Akan, Baran Çürüklü, Lars Asplund, 39th International Symposium on Robotics, pp. 66-69, Seoul, Korea, October 2008.

• Gesture Recognition Using Evolution Strategy Neural Network, Johan Hägg, Batu Akan, Baran Çürüklü, Lars Asplund, ETFA 2008, pp. 245-248, IEEE, Hamburg, Germany, September 2008.


Contents

I

Thesis

1

1 Introduction 3

1.1 Outline of thesis . . . 5

2 Background 7 2.1 Human-Robot Interaction (HRI) . . . 7

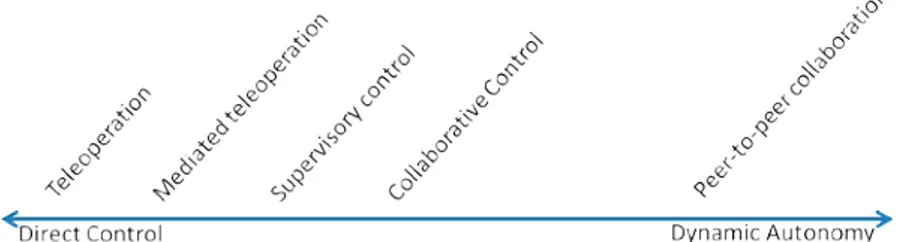

2.1.1 Levels of Autonomy . . . 8

2.1.2 Nature of Information Exchange . . . 10

2.1.3 Structure of the Team . . . 11

2.1.4 Adaptation, Learning and Training . . . 11

2.1.5 Task Shaping . . . 12

2.2 Robot Programming Systems . . . 13

2.2.1 Manual Programming Systems . . . 14

2.2.2 Automatic Programming Systems . . . 16

2.3 Multimodal Interaction . . . 19

2.4 Symbolic Planning . . . 20

2.4.1 Planning with State-space Search . . . 20

2.4.2 Partially Ordered Planners . . . 21

2.5 Summary of Verification for Growth Process . . . 22

3 Research Goals and Methodology 25 3.1 Research Goal . . . 25 3.2 Research Subgoals . . . 26 3.2.1 Research subgoal 1. . . 26 3.2.2 Research subgoal 2. . . 27 3.2.3 Research subgoal 3. . . 28 3.3 Research Methodology . . . 29 xiii


4 Related Work

4.1 Programming Industrial Robots

4.2 Multimodal Approach

4.3 Augmented Reality

4.4 Scheduling

4.5 Planning

5 Results

5.1 Contributions

5.1.1 Object-Based Programming Scheme

5.1.2 General Multimodal Framework

5.1.3 POPStar Planner

5.1.4 Simulation Environment

5.2 Overview of Papers

5.2.1 Paper A

5.2.2 Paper B

5.2.3 Paper C

5.2.4 Paper D

5.2.5 Paper E

6 Conclusions and Future Work

6.1 Conclusions

6.2 Future Work

6.2.1 Multimodal Framework

6.2.2 POPStar

Bibliography

II Included Papers

7 Paper A: Object Selection using a Spatial Language for Flexible Assembly

7.1 Introduction

7.2 Architecture

7.2.1 Speech Recognition

7.2.2 Visual Simulation Environment and High Level Movement Functions

7.2.3 Spatial Terms

7.2.4 Knowledge Base and Reasoning System

7.3 Experimental Results

7.4 Discussion

Bibliography

8 Paper B: A General Framework for Incremental Processing of Multimodal Inputs

8.1 Introduction

8.2 Background

8.3 Architecture

8.3.1 COLD Language

8.3.2 Incremental Multimodal Parsing

8.3.3 Modality Fusion

8.3.4 Semantic Analysis

8.4 Results

8.5 Conclusion

Bibliography

9 Paper C: Intuitive Industrial Robot Programming Through Incremental Multimodal Language and Augmented Reality

9.1 Introduction

9.2 Architecture

9.2.1 Augmented and Virtual Reality environments

9.2.2 Reasoning System

9.2.3 Multimodal language

9.3 Experimental Results

9.3.1 Experiment 1

9.3.2 Experiment 2

9.4 Conclusion

Bibliography

10 Paper D: Scheduling for Multiple Type Objects Using POPStar Planner

10.1 Introduction

10.2 Background

10.3 POPStar

10.3.1 Partial Order Planner (POP)


10.4 Results

10.4.1 Single Object Case

10.4.2 Multiple Part Types

10.4.3 Changing Part Types

10.5 Conclusion

Bibliography

11 Paper E: Towards Creation of Robot Programs Through User Interaction

11.1 Introduction

11.2 Related Work

11.3 Architecture

11.3.1 Multimodal Framework

11.3.2 POPStar

11.4 Results

11.4.1 The Single Object Scenario

11.4.2 Multiple Object Types

11.4.3 Changing Object Types

11.5 Discussions

Bibliography

List of Figures

2.1 Levels of autonomy with emphasis on human interaction.

2.2 Categories of robot programming systems

2.3 A screenshot of the ABB Robot Studio [1]

2.4 Lego Mindstorms programming environment

7.1 Block diagram of the proposed system.

7.2 Example showing weak spatial relations.

7.3 A view from the top of the table, overlaid with Gaussian kernels representing the left, right, behind and in-front regions for the red object.

7.4 Screenshot of the simulator where the robot is asked to pick and place all the blue objects on the conveyor belt.

7.5 Layout of the objects

8.1 Sample COLD code

8.2 Different components involved in parsing.

8.3 Output of the system while parsing the sentence “put it here” and a click.

8.4 AR UI. (a) Blue objects are highlighted after the user says “pickup a blue object”. (b) While the robot is holding an object, the user says “put”, and all empty locations are highlighted.

9.1 Hardware and software components of the system.

9.2 Screenshot of the system. Yellow lines represent the path to be taken by the robot, and the red cubes represent a gripper action, either grip or release.

9.3 Screenshots from the augmented and virtual reality operating modes of the system.


9.4 A sample of the 3MG language.

9.5 Multimodal parser and its subsystems in our speech/mouse setup.

9.6 Experimental setup consisting of.

9.7 Setup for experiment 2, where the users were asked to build stacks of wooden blocks.

9.8 Setup for experiment 2, where the users were asked to sort the numbered wooden blocks in ascending order.

10.1 Landmarks graph together with ordering constraints.

10.2 Two consecutive stages of the plan, including a state change.

10.3 A sample plan demonstrating the idle machine times $t_m$ and idle object times $t_o$, as well as the last action done by the robot $t_r$.

10.4 Different landmark topologies.

10.5 Gantt charts for (a) a robot cell that produces a single object type, (b) a robot cell that produces two types of parts, and (c) a cell where production changes between different part types. Rows represent machines $m_1$ to $m_M$ and the actions of the robot and grippers $r_1G_1$ and $r_1G_2$. Bars represent particular objects being processed in a machine.

11.1 Overview of the system components, including the interaction components, the multimodal framework and the POPStar planner. The arrows show the direction of information flow. The information received from the user is processed by the multimodal framework. Based on specific recognition events, the landmarks are passed on to the POPStar planner.

11.2 Landmarks graph together with ordering constraints. Vertical processing steps represent handling an object through different landmarks. Horizontal processing steps represent handling different objects through the same landmark.

11.3 Two consecutive stages of the plan: (a) before the change has occurred, and (b) after the change has occurred.

11.4 A Gantt chart for a sample plan. G1 and G2 depict the grippers of the robot. m1 through m3 are the machines in the cell. The idle machine times are shown by $t_m$ and the idle object times by $t_o$. The time for the last action performed by the robot is represented by $t_r$.

11.5 Different landmark topologies. (a) Two different products are produced simultaneously. (b) Two different products are produced in the cell one after the other. (c) A second product type is introduced while there is an ongoing activity; when Type 2 has been produced, the cell goes back to Type 1.

11.6 A COLD code snippet that presents the pickup command.

11.7 Screenshot showing the augmented reality interface seen by the user. The input palette holds nine objects, numbered individually from 1 to 9. Further up in the figure, the black and white AR tag is shown. The yellow line overlays the path of the robot, which helps the user understand the robot’s movements. In this specific case the robot will approach the input palette from the left in order to pick up object 1.

11.8 Actions defined in the planning domain, consisting of the jog, pickup, load and process action schemas. The pickup and load actions are overloaded to take different numbers of parameters.

11.9 Landmarks generated for a simple machine tending example. The robot picks up the object Obj1 from the input palette I and loads it into a machine that processes stage one. Later, Obj1 is placed on the output palette O.

11.10 Gantt charts for (a) a robot cell that produces a single object type, (b) a robot cell that produces two types of object, and (c) a cell where production changes between different object types. Rows represent machines $m_1$ to $m_M$ and the actions of the robot and grippers $r_1G_1$ and $r_1G_2$. Bars represent particular objects being processed in a machine.

11.11 User’s commands for the multiple object types case. The left column shows the instructions given by the user. The right column shows the landmarks generated from the corresponding instructions.


List of Tables

7.1 List of commands to control the robot.


I

Thesis


Chapter 1

Introduction

Robots have become more powerful and intelligent over the last decades. Companies focused on large-scale production, such as those in the automotive industry, have been using industrial robots for machine tending and for joining and welding metal sheets for several decades. In many cases, an investment in industrial robots is therefore seen as a vital step that strengthens a company’s position in the market through increased productivity. However, robots are not commonly found in small and medium-sized enterprises (SMEs) due to a number of key factors, e.g., low-volume production, the need for continuous reprogramming, and the layout of SME shop floors.

Even though the hardware costs of industrial robots have decreased, integration and programming costs make them unattractive to many SMEs. Unlike large-scale production industries, many SMEs deal with low-volume production and continuously changing product types. From a programming point of view, no matter how simple the production process is, one has to rely on a professional programmer to integrate and reprogram the robot cell for a new product. The company must either set up a dedicated software department responsible for programming the robots or outsource this work. Maintaining a software department or hiring a consultant from an integrator company is costly for SMEs as well as for larger companies. Furthermore, reconfiguring a robot cell is a time-consuming process even for skilled engineers, and it may be difficult to find experts when they are needed. More specifically, an SME may be forced to rely on a limited number of experts or integrators located in the vicinity of the company. This is a real challenge, since in Sweden many integrators try to serve only a limited geographic region close to their own location, e.g., they try to avoid projects that would require their experts to stay overnight. It is plausible that similar problems exist in other countries with high labor costs.

There are additional challenges as well. An industrial robot must be placed in a cell that occupies valuable workspace and may operate only a couple of hours a day. Compared with large industries, SME shop floors are often less structured, which makes it even more challenging to deploy robots across various SMEs. Under these circumstances, it is hard to motivate an SME, which is constantly under pressure, to make a risky investment in robot automation. Together, these issues lead to high costs, limited flexibility, and reduced productivity.

To make industrial robots more attractive in the SME sector, these issues have to be resolved. For SMEs with frequently changing applications, it is typically too expensive to employ a professional programmer or technician; therefore, in our view, a human-robot interaction solution is needed. A high-level language that hides the low-level programming details from the user will enable a technician or manufacturing engineer who knows the manufacturing process to program the robot easily at task level and to let the robot switch between previously programmed tasks.

The goal of this thesis is to provide tools and methods that make task-level robot programming easier and accessible to a wider range of users. This primary goal can be divided into several subgoals:

1. Enabling the system to understand the user’s intentions

2. Giving proper feedback to the user, confirming that the user’s intentions have been understood correctly

3. Generating a complete robot program, based on the user’s instructions, that utilizes the resources in a robot cell optimally.

The driving idea behind this thesis is to bring robot programming to a higher level. Rather than mapping instructions to individual robot commands, the goal is to provide a complete framework in which most of the planning and scheduling is taken care of by the proposed robot programming system. Ideally, the user’s part in programming is to give a brief summary, in natural language, of the order in which the machines are to be used, while all the low-level details are determined by the system. The whole process of programming would then be as easy as teaching the task to a new member of the work team.
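To make the division of labor concrete, the following is a minimal sketch, assuming a hypothetical `Landmark` record and a hypothetical `expand_instruction` helper (neither is taken from the actual system), of how a brief user instruction, namely the order of machines an object must visit, could be expanded into an ordered list of goals. The palette names `I` and `O` follow the machine tending example of Figure 11.9; the machine name `M1` is illustrative. This is not POPStar: it only produces the per-object ordering, whereas interleaving several objects, assigning grippers and scheduling machines would be the planner’s job.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Landmark:
    """One abstract goal the robot must achieve for an object (hypothetical model)."""
    action: str    # e.g. "pickup", "load", "place"
    obj: str       # object identifier, e.g. "Obj1"
    location: str  # palette or machine name


def expand_instruction(obj: str, machine_order: list[str],
                       input_palette: str = "I",
                       output_palette: str = "O") -> list[Landmark]:
    """Expand a user's machine order into an ordered list of landmarks.

    The user only states which machines the object visits and in which order;
    the pickup from the input palette and the final placement on the output
    palette are filled in automatically.
    """
    steps = [Landmark("pickup", obj, input_palette)]
    for machine in machine_order:
        steps.append(Landmark("load", obj, machine))
    steps.append(Landmark("place", obj, output_palette))
    return steps


if __name__ == "__main__":
    # Single-machine example in the spirit of Figure 11.9.
    for lm in expand_instruction("Obj1", ["M1"]):
        print(lm.action, lm.obj, lm.location)
```

Keeping the landmarks as plain, ordered goals rather than concrete motions is what leaves the planner free to decide when each step is actually executed.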

This doctoral thesis presents a novel system that supports easy, high-level programming of industrial robots. The proposed system includes:

• A multimodal, incremental framework for rapid development of multimodal interfaces;

• A simulator that checks and verifies robot code and also provides visual feedback to the user;

• The POPStar planner, based on a partial order planner, which plans and schedules the operations of the machines and robots in the cell based on the user’s instructions.

1.1 Outline of thesis

The remainder of this thesis consists of two main parts. The first part contains six chapters: Chapter 2 introduces human-robot interaction (HRI) and methods used for programming robots, as well as technical concepts that are used throughout the thesis. Chapter 3 formulates the main research goal, derives the research subgoals, and describes the research method used. Chapter 4 surveys related work. Chapter 5 presents the research results in line with the research goals, and finally Chapter 6 concludes and summarizes the thesis and gives directions for possible future work. The second part of the thesis is a collection of four peer-reviewed conference and workshop papers.


Chapter 2

Background

This chapter introduces important technical concepts that are used throughout the thesis. It provides a general introduction to human-robot interaction and a general overview of methods for programming robots, followed by multimodal interaction and symbolic planning.

2.1 Human-Robot Interaction (HRI)

Robots are artificial agents with capacities of perception and action in the physical world. As robot technology develops and robots start moving out of the research laboratories into the real world, the interaction between robots and humans becomes more important. Human-robot interaction (HRI) is the field of study that tries to understand, design and evaluate robot systems for use by or with humans [2].

Communication of any sort between humans and robots can be regarded as interaction. Communication can be of many different forms. However, the distance between the human and the robot alters the nature of communication. Communication, and thus interaction, can be divided into the following two categories: proximate interaction and remote interaction [2]. In proximate interaction, the user and the robot share the same environment, e.g., the user may be located in the robot cell during the programming phase. In remote interaction, the user and the robot can be spatially and temporally separated from each other, e.g., controlling Mars rovers implies that the user and the rovers are both temporally and spatially separated from each other. In most

Chapter 2

Background

This chapter introduces important technical concepts that are used thoughout the thesis. It provides a general introduction to human-robot interaction, a general overview of methods for programming robots. Followed by multi modal interaction and symbolic planning.

2.1 Human-Robot Interaction (HRI)

Robots are artificial agents with capacities of perception and action in the physical world. As robot technology develops and the robots start moving out of the research laboratories in to the real world, the interaction between robots and humans becomes more important. Human-robot interaction (HRI) is the field of study that tries to understand, design and evaluate robot systems for use by or with humans [2].

Communication of any sort between humans and robots can be regarded as interaction. Communication can take many different forms; however, the distance between the human and the robot alters its nature. Communication, and thus interaction, can be divided into two categories: proximate interaction and remote interaction [2]. In proximate interaction, the user and the robot share the same environment, e.g., the user may be located in the robot cell during the programming phase. In remote interaction, the user and the robot can be spatially and temporally separated from each other, e.g., controlling Mars rovers implies that the user and the rovers are separated both in space and in time. In most cases, remote interaction is limited to spatial separation alone, e.g., in the case of teleoperated surgical robots. This division helps to distinguish between applications that require mobility, physical manipulation or social interaction. For example, teleoperation and telemanipulation use remote interaction to control mobile robots and to manipulate objects that are not in the user's immediate surroundings, whereas proximate interaction, for instance with a mobile service robot, requires social interaction [2].
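As an illustration only, the proximate/remote division described above can be written as a small classifier. This is a minimal sketch, assuming that each setting is described by two boolean flags (`spatially_separated`, `temporally_separated`) and that any separation at all makes the interaction remote; these names, the `classify` function and the example settings are hypothetical and not part of the cited taxonomy [2].

```python
from dataclasses import dataclass
from enum import Enum


class InteractionKind(Enum):
    PROXIMATE = "proximate"
    REMOTE = "remote"


@dataclass(frozen=True)
class Setting:
    """A human-robot setting described by how user and robot are separated (hypothetical model)."""
    name: str
    spatially_separated: bool   # user and robot are in different places
    temporally_separated: bool  # commands and feedback are delayed in time


def classify(setting: Setting) -> InteractionKind:
    # Proximate interaction: user and robot share the same environment.
    # Assumption: any spatial or temporal separation makes the interaction remote.
    if setting.spatially_separated or setting.temporally_separated:
        return InteractionKind.REMOTE
    return InteractionKind.PROXIMATE


# Example settings taken from the text above.
SETTINGS = [
    Setting("programming in the robot cell", False, False),
    Setting("teleoperated surgery", True, False),
    Setting("Mars rover control", True, True),
]

for s in SETTINGS:
    print(f"{s.name}: {classify(s).value}")
```

Teleoperated surgery is classified as remote even though only spatial separation is present, which matches the observation that remote interaction is in most cases limited to spatial separation.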

In social interaction, robots and humans interact as peers or companions; the important factor, however, is that social interaction often requires close proximity.

While the distance between the robot and the user alters the nature of communication, it does not define the level or quality of the interaction. The designer attempts to understand and shape this interaction process in the hope of making it more beneficial for the user. From the designer's point of view, the following five attributes can be altered to affect the interaction process [2]:

• Level and behavior of autonomy
• Nature of the information exchange
• Structure of the team
• Adaptation, learning and training of users and the robot
• Shape of the task

2.1.1 Levels of Autonomy

Robots that can perform the desired tasks in an unstructured environment without human intervention are autonomous [2]. From an operational point of view, the amount of time during which a robot can be left without supervision is an important characteristic of autonomy. A robot with high autonomy can be left alone for longer periods of time, whereas a robot with lower autonomy needs continuous supervision and user control. Autonomy, however, is not the end goal in the field of HRI but only a means to support productive interaction. Therefore, in human-centered applications the notion of levels of autonomy (LOA) gains importance. Although many LOA scales exist, the following one, proposed by Sheridan and Verplank [3], is the most cited [2]:

1. Computer offers no assistance; human does it all.
2. Computer offers a complete set of action alternatives.
3. Computer narrows the selection down to a few choices.
4. Computer suggests a single action.
5. Computer executes that action if the user approves.
6. Computer allows the human limited time to veto before automatic execution.
7. Computer executes automatically, then necessarily informs the human.
8. Computer informs the human after automatic execution only if the human asks.
9. Computer informs the human after automatic execution only if it decides to.
10. Computer decides everything and acts autonomously, ignoring the human.

Note that these scales may not always be applicable to the whole problem domain; they are more beneficial when applied to individual subtasks.
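To make the scale concrete, the ten levels can be encoded as an ordered enumeration so that a system can reason about who carries out an action and whether the human is kept informed. The sketch below is one possible reading of the Sheridan-Verplank scale, assuming that level 5 is the first level at which the computer executes the action; the identifier names, the helper predicates and the per-subtask assignment are hypothetical illustrations, not part of [3]. Following the note above, a level is attached to each subtask rather than to the robot as a whole.

```python
from enum import IntEnum


class LOA(IntEnum):
    """Sheridan-Verplank levels of autonomy, 1 (manual) to 10 (fully autonomous).

    Member names are hypothetical shorthand for the ten levels listed in the text.
    """
    NO_ASSISTANCE = 1
    OFFERS_ALL_ALTERNATIVES = 2
    NARROWS_CHOICES = 3
    SUGGESTS_ONE_ACTION = 4
    EXECUTES_IF_APPROVED = 5
    VETO_WINDOW = 6
    EXECUTES_THEN_INFORMS = 7
    INFORMS_IF_ASKED = 8
    INFORMS_IF_IT_DECIDES = 9
    FULLY_AUTONOMOUS = 10


def computer_executes(level: LOA) -> bool:
    # Assumption: from level 5 upward the computer, not the human, carries out the action.
    return level >= LOA.EXECUTES_IF_APPROVED


def needs_explicit_approval(level: LOA) -> bool:
    # Only at level 5 does execution wait for the human to approve.
    return level == LOA.EXECUTES_IF_APPROVED


def human_always_informed(level: LOA) -> bool:
    # Up to level 7 the human acts, approves, can veto, or is always informed.
    return level <= LOA.EXECUTES_THEN_INFORMS


# Hypothetical subtasks of one robot job, each assigned its own level.
SUBTASK_LOA = {
    "select object to pick": LOA.SUGGESTS_ONE_ACTION,
    "plan motion": LOA.EXECUTES_IF_APPROVED,
    "execute grasp": LOA.EXECUTES_THEN_INFORMS,
}

for task, level in SUBTASK_LOA.items():
    print(f"{task}: level {int(level)}, computer executes={computer_executes(level)}")
```

Using an ordered `IntEnum` lets comparisons such as `level >= LOA.EXECUTES_IF_APPROVED` follow directly from the ordering of the scale.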

The scale proposed by Sheridan and Verplank helps to determine how autonomous a robot is under certain circumstances; however, it does not help to evaluate the level of interaction between the user and the robot from an HRI point of view. This can be illustrated by the following example: a service robot should exhibit different levels of autonomy during the programming phase and the execution phase. A different perspective on autonomy, based on the level of interaction, is presented in Figure 2.1. It should be noted that the ends of this scale do not indicate less versus more autonomy; rather, each end poses its own challenge. On the direct control side of the scale, the challenge is to develop a user interface that minimizes the operator's cognitive load. At the other end of the scale, the issue is to create robots with the appropriate cognitive skills to interact naturally and efficiently, so as to achieve peer-to-peer collaboration with a human [2]. In addition to full autonomy at the subtask level, peer-to-peer collaboration also requires robots with social skills for seamless HRI; it is therefore often considered more difficult to achieve than full autonomy alone.

Figure 2.3: A screenshot of the ABB Robot Studio [1]