Västerås, Sweden

Thesis for the Bachelor’s Degree in Computer Science 15.0 credits

AUTOMATED CHECKING OF

PROGRAMMING ASSIGNMENTS

USING STATIC ANALYSIS

Kenneth Sterner

ksr15001@student.mdh.se

Examiner: Björn Lisper

Mälardalen University, Västerås, Sweden

Supervisor: Jean Malm

Mälardalen University, Västerås, Sweden

Abstract

Computer science and software engineering education usually includes programming courses in which students write code that is graded. These assignments are corrected through manual code review by teachers or course assistants. The large number of assignments motivates us to find ways to automatically correct certain parts of them. One way to ensure that certain requirements on written code are fulfilled is static analysis, which analyzes code without executing it. We use Clang-tidy and the Clang Static Analyzer, existing static analysis tools for C/C++, and extend their capabilities to automate requirement checking based on existing assignments, such as prohibiting certain language constructs and ensuring that certain function signatures match the ones given in the instructions. We evaluate our forked version of the Clang tooling on actual student hand-ins to show that the tool is capable of automating some aspects that would otherwise require manual code review. We were able to find several errors, even in assignments that were considered complete. However, the Clang Static Analyzer also failed to find a memory leak, which leads us to conclude that despite its benefits, static analysis is best used as a complement that assists in finding errors.

Table of Contents

1. Introduction

1.1. Problem Formulation

2. Background

3. Related Work

4. Method

5. Ethical and Societal Considerations

6. Developing and evaluating the checkers

6.1. Clang-tidy

6.2. Clang Static Analyzer

6.3. Developing the checkers

6.4. Evaluating the checkers

7. Results

8. Discussion

8.1. Future Work

9. Conclusions

References

Appendix A Acknowledgements

1. Introduction

Programming courses are a staple of computer science and software engineering curricula. These courses usually contain practical programming assignments that complement the theoretical aspects of the course. Currently, the correctness of these assignments is checked through manual code review by teachers or course assistants, which consumes time that could instead be spent on giving students more valuable feedback. This issue becomes more pressing as the Massive Open Online Course (MOOC) format grows in popularity, since manual checking does not scale well to a high number of students. The intended audience of this report is mainly course instructors who are interested in applying static analysis to assist in grading programming assignments.

At Mälardalens Högskola (MDH), many of the programming courses, in particular the introductory programming courses, have assignments with specific requirements that aim to encourage good coding style and software engineering practices. For example, the use of global variables and goto statements is explicitly prohibited, and the return value of memory allocation functions such as malloc() should always be checked for NULL.

Previous studies on automatic checking of programming assignments relied on the output of running the program or on comparison with reference solutions (or some variation thereof). However, one potential method that has not been studied is how an existing static analysis tool could be used to find errors when correcting assignments.

Some of the courses at MDH use runtime testing with test cases to check the correctness of programs, which is a type of dynamic analysis. Static analysis, which examines programs without executing them, could also be used for this purpose. The advantage of static analysis is that it acts on the source code level, which makes it possible to verify many of the requirements listed in the assignment instructions, since they only require syntactic verification; this is currently done manually by teaching staff.

To showcase the viability of using static analysis for this purpose, we extended Clang, a C/C++ compiler that is distributed with several additional tools that perform static analysis. Clang was chosen because most programming courses at MDH, in particular the introductory programming courses, use C as the programming language. The modified version of Clang was then evaluated by running it on assignments submitted by students. We were able to find several errors in the assignments, despite some of them having been corrected previously. Static analysis can be a useful tool when correcting assignments, but it should be used with care, since static analyzers are not flawless and can miss certain kinds of errors.

1.1. Problem Formulation

The purpose of this study is to investigate solutions for automatically verifying the correctness of students’ programming assignments with regard to the assignment instructions using static analysis.

The motivation is that many of the requirements in the assignments that are currently checked manually by teaching staff can be formalized and checked statically, which both reduces the time spent by teaching staff and reduces the risk of human error.

The main research goal can thus be formulated as follows: how can an existing static analysis tool such as Clang-tidy be used to check student assignments, and how viable is that tool for this purpose with regard to its capabilities and accuracy? The following requirements are based on existing assignment instructions and are expected to be implemented:

• Check for usage of specific constructs in certain contexts (for example, function f contains a loop, which also contains an if statement).

```c
void f(int x)
{
    for (int i = 0; i < 10; i++)
        if (x > 0) {
            /* ... */
        }
}
```

The rationale behind checking this is that the assignments generally have a specific solution in mind, in which the calculations are performed in a certain way. Note that variations such as writing loops in different ways (while, for, do) have to be accounted for. Different levels of granularity should also be considered, since some assignments are more specific than others and can afford to make more assumptions.

• Check that function declarations fulfill certain predicates (correct arguments and types, including passing by reference instead of passing by value). As an example, a restriction could be imposed that structs are always passed by reference (that is, through a pointer) rather than by value in function declarations.

• Prohibit certain constructs, such as goto statements.

• Check that data structures fulfill certain predicates (in other words, that they contain the data necessary to meet the specifications). This is a very specific checker that is useful when an assignment expects a specific form of a struct declaration. As an example, a data structure representing a point in a coordinate system could be required to have the following form (naming and the possibility of typedefs aside):

```c
struct p { float x; float y; };
```

• Check return values for proper error handling, especially the return values of dynamic memory allocation functions.

```c
char *ptr = malloc(n);
if (!ptr) {
    printf("Error message");
    exit(EXIT_FAILURE);
}
```

The above is one way of aborting a program when malloc fails, but it is not the only one: the malloc call could, for example, be placed in the if condition itself, which the checker also needs to handle.
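The variations mentioned in the requirements above can be made concrete with student-style code. The following is a minimal sketch with hypothetical function names (not taken from any assignment): the same sum computed with a for, a while and a do-while loop, and the malloc NULL check written either as a separate statement or inlined in the if condition. A checker for either requirement would have to accept every variant. To keep the functions callable from a test, the allocation functions return NULL instead of calling exit(EXIT_FAILURE) as in the example above.

```c
#include <stdio.h>
#include <stdlib.h>

/* Three equivalent ways of summing 0 + 1 + ... + (n - 1). */
int sum_for(int n)
{
    int sum = 0;
    for (int i = 0; i < n; i++)
        sum += i;
    return sum;
}

int sum_while(int n)
{
    int sum = 0;
    int i = 0;
    while (i < n) {
        sum += i;
        i++;
    }
    return sum;
}

int sum_do(int n)
{
    int sum = 0;
    int i = 0;
    if (n <= 0)  /* a do-while body always runs once, so guard it */
        return 0;
    do {
        sum += i;
        i++;
    } while (i < n);
    return sum;
}

/* The malloc result checked in a separate statement... */
char *alloc_separate_check(size_t n)
{
    char *ptr = malloc(n);
    if (!ptr) {
        fprintf(stderr, "Error message\n");
        return NULL;  /* the original example calls exit(EXIT_FAILURE) */
    }
    return ptr;
}

/* ...and with the call inlined in the if condition. */
char *alloc_inline_check(size_t n)
{
    char *ptr;
    if ((ptr = malloc(n)) == NULL) {
        fprintf(stderr, "Error message\n");
        return NULL;
    }
    return ptr;
}
```

The do-while version needs an extra guard because its body always runs at least once, so even "equivalent" loops are not structurally identical in the AST.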

2. Background

Many properties of programs can be examined without actually executing the program; in fact, many aspects of programs that are important when checking student assignments can only be properly verified on the source code, since executing the program only tells us whether the code behaves as expected, while the source code tells us how the code works. Automated analysis of source code is known as static program analysis (from here on simply “static analysis”). Examples of static analysis tools include “linting” tools that examine the source code to find potential sources of errors, such as unsafe programming constructs (e.g. implicit fallthrough in switch statements in C). Most modern compilers have built-in support for many of these checks, but in modern contexts linting usually implies a more thorough analysis than what the compiler provides, and it can also be applied to interpreted languages such as Python that do not have a separate compilation phase.

The opposite of static analysis is dynamic analysis, which is performed while executing the program. Examples of dynamic analysis tools for C/C++ are the tools provided by Valgrind [1], a framework for developing such tools. The best known of these tools is Memcheck, which finds memory-related bugs such as uses of undefined (uninitialized) memory. Valgrind uses dynamic binary instrumentation, which adds analysis code to the program that tracks additional information during execution. This code is added to the machine-level instructions at runtime, which avoids having to recompile the program to add debugging symbols (as is usually required for general-purpose debuggers).
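As an illustration of the implicit fallthrough mentioned above, here is a small hypothetical C function in which the missing break after case 'A' makes control continue into case 'B'. The code compiles and runs, which is exactly why such mistakes are easy to miss; Clang reports this case when its -Wimplicit-fallthrough warning is enabled.

```c
/* Map a grade letter to points. Because case 'A' lacks a break,
 * 'A' falls through into case 'B' and ends up with 4 points, not 5. */
int grade_points(char grade)
{
    int points = 0;
    switch (grade) {
    case 'A':
        points = 5;  /* missing break: implicit fallthrough */
    case 'B':
        points = 4;
        break;
    case 'C':
        points = 3;
        break;
    default:
        points = 0;
        break;
    }
    return points;
}
```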

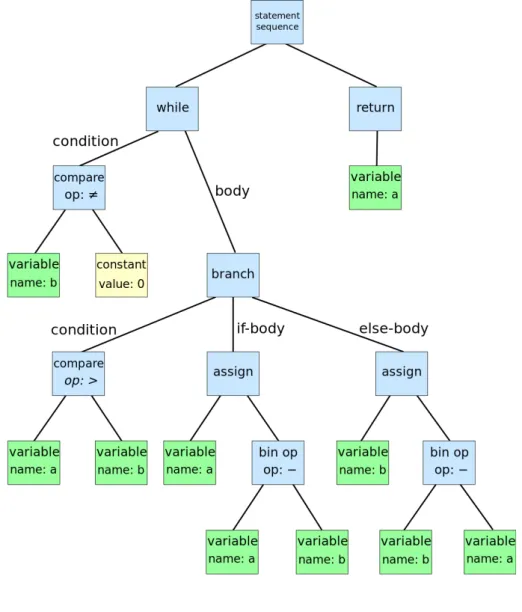

The abstract syntax tree (AST) is a representation of the source code that is produced during compilation, after parsing. It differs from the parse tree (or concrete syntax tree) in that syntactic information that is visible in the source code and important for parsing, such as parentheses (denoting higher operator precedence), is excluded, since the tree structure itself conveys the same information implicitly (subtrees are evaluated first). See figure 1 for an example of an abstract syntax tree.
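A minimal sketch of how an AST can represent expressions without parentheses, assuming a tiny hypothetical expression language with integer literals, addition and multiplication. In (2 + 3) * 4 the parentheses only decide that the addition node becomes a child of the multiplication node; there is no node kind for the parentheses themselves, and evaluating subtrees first gives the intended result.

```c
#include <stddef.h>

/* Leaves are integer literals, inner nodes are binary operators.
 * Note that there is no node kind for parentheses. */
enum node_kind { NODE_LITERAL, NODE_ADD, NODE_MUL };

struct node {
    enum node_kind kind;
    int value;               /* used only by NODE_LITERAL */
    const struct node *lhs;  /* used only by operator nodes */
    const struct node *rhs;
};

/* Subtrees are evaluated first, so the shape of the tree alone
 * determines the order of evaluation. */
int eval(const struct node *n)
{
    switch (n->kind) {
    case NODE_LITERAL:
        return n->value;
    case NODE_ADD:
        return eval(n->lhs) + eval(n->rhs);
    case NODE_MUL:
        return eval(n->lhs) * eval(n->rhs);
    }
    return 0; /* not reached for well-formed trees */
}

/* Tree for (2 + 3) * 4: the addition is a subtree of the multiplication. */
int eval_example(void)
{
    struct node two = {NODE_LITERAL, 2, NULL, NULL};
    struct node three = {NODE_LITERAL, 3, NULL, NULL};
    struct node four = {NODE_LITERAL, 4, NULL, NULL};
    struct node sum = {NODE_ADD, 0, &two, &three};
    struct node product = {NODE_MUL, 0, &sum, &four};
    return eval(&product);
}
```

Building the tree for 2 + 3 * 4 instead, with the multiplication as a child of the addition, evaluates to 14: the two expressions differ only in the shape of the tree.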

Clang is an open source C/C++ compiler that is part of the LLVM project, a compiler infrastructure with a modular design and a codebase that is easy to understand relative to its size; these qualities make it suitable for extension [2]. Clang is designed first and foremost as a library, which makes it possible to reuse individual components across different parts of the compiler without necessarily understanding how the rest of the code works. Clang aims to be compatible with GCC (the GNU Compiler Collection), another open source compiler, which includes supporting nonstandard extensions that GCC provides. This means that it is possible to benefit from the internal design of Clang without giving up existing functionality when switching from GCC.

Clang-tidy¹ is a tool that is part of Clang and serves as the project’s linting tool. Linting tools (or linters) are static analysis tools that find bugs and other possible sources of errors in source code, similarly to compiler warnings. In practice, their warnings usually complement the existing compiler warnings for the language they target and are more extensive or cover more specialized cases, such as coding style violations. Linters exist for most popular programming languages and are especially useful for interpreted languages that lack a compilation phase (and therefore compiler warnings). In the case of Clang, its modularity makes it easy to extend its functionality by editing the source code, in particular by adding checkers that diagnose programming errors. Clang-tidy works on the AST level, which is efficient for simple checks but limited when it comes to more advanced tasks such as determining the possible values of a variable.

The Clang Static Analyzer² (sometimes shortened to Clang SA) is a more advanced tool than Clang-tidy that also belongs to the Clang toolchain. Clang SA performs path-sensitive analysis [3]; that is, it considers every possible path the program may take, based on branching (e.g. if-else statements and conditional loops). Internally, this is accomplished using a control-flow graph (CFG), a graph that represents the possible branches of the source code. The nodes of the graph represent blocks of code without branches, while the edges represent the branches between them. The paths themselves are represented in an exploded graph, which keeps track of the effects of previously evaluated statements [4]. This advanced functionality comes at a cost, however: the analysis is comparatively slower than AST analysis, with potentially exponential time complexity. Additionally, false positives may occur due to imprecision in the analysis.
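To illustrate why path-sensitive analysis can take exponential time, the sketch below builds a toy control-flow graph (a hypothetical representation, not Clang's CFG classes) for a sequence of k independent if-else statements and counts the entry-to-exit paths by exploring every branch. Each additional if-else doubles the number of paths, so exhaustive exploration has to consider 2^k paths. This is a simplification: the real analyzer works on the exploded graph and bounds how much it explores.

```c
#define MAX_NODES 64
#define MAX_SUCCS 2

/* Each node is a basic block with at most two successors. */
struct cfg {
    int num_nodes;
    int num_succs[MAX_NODES];
    int succs[MAX_NODES][MAX_SUCCS];
};

static void add_edge(struct cfg *g, int from, int to)
{
    g->succs[from][g->num_succs[from]++] = to;
}

/* Count distinct paths from `node` to `exit_node` by following every
 * branch (the graph must be acyclic). */
long count_paths(const struct cfg *g, int node, int exit_node)
{
    if (node == exit_node)
        return 1;
    long total = 0;
    for (int i = 0; i < g->num_succs[node]; i++)
        total += count_paths(g, g->succs[node][i], exit_node);
    return total;
}

/* Build k if-else statements in sequence. Each is a diamond
 * (condition -> then/else -> join) and each join block is the condition
 * of the next one. Returns the exit block. Requires 3 * k + 1 <= MAX_NODES. */
int build_if_chain(struct cfg *g, int k)
{
    int node = 0;  /* entry block, also the first condition */
    g->num_nodes = 1;
    g->num_succs[0] = 0;
    for (int i = 0; i < k; i++) {
        int then_blk = g->num_nodes++;
        int else_blk = g->num_nodes++;
        int join = g->num_nodes++;
        g->num_succs[then_blk] = 0;
        g->num_succs[else_blk] = 0;
        g->num_succs[join] = 0;
        add_edge(g, node, then_blk);
        add_edge(g, node, else_blk);
        add_edge(g, then_blk, join);
        add_edge(g, else_blk, join);
        node = join;
    }
    return node;
}
```

For example, ten if-else statements give a graph of only 31 basic blocks but 1024 distinct paths from entry to exit.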

MOOC describes courses with open access on the web and potentially thousands of participants. Following the trend of integrating technology in education, MOOCs are rising in popularity, and much research in recent years has focused on their methods and merits [5].

¹ https://clang.llvm.org/extra/clang-tidy/

² https://clang-analyzer.llvm.org/

Figure 1: An example of an abstract syntax tree representing pseudocode for the Euclidean algorithm. Image taken from the Wikipedia article on abstract syntax trees.

3. Related Work

[6] describes both static and dynamic approaches to automated assessment of student assignments. Although the most common approach is cited as testing and comparing program outputs (a type of dynamic analysis), and static analysis is said to have been used mostly for historical reasons, the authors also state that static analysis provides many benefits that dynamic analysis cannot. One benefit mentioned that is relevant to our study is the ability to detect errors such as dead code and redundant conditionals, which is similar to our use case.

One previous study that focuses on automatic assessment of Java programs in an elementary programming course [7] uses dynamic analysis. The actual evaluation is based on comparing the output of students’ programs with that of a reference implementation, and a score is given based on the accuracy. A survey of student satisfaction performed at the end of the study resulted in positive feedback, owing to the instant feedback the students received. The authors concluded that the program was helpful for students learning a new programming language.

On the static analysis side, [8] is a study that aims to automate grading of assignments using static analysis; however, it places less emphasis on error checking and more on the grading itself, along with adherence to coding style guidelines such as indentation, use of goto statements and line length. [9] uses an alternative approach: the authors implement a graph specification language that targets Java and combines information from the AST and the Java System Dependency Graph (a graph that shows information about data flow in a Java program) to find specific solution patterns, similar to the first type of check presented in the problem formulation but more in-depth. This method resulted in occasional false positives depending on the usage, but the overall results were accurate.

In [10], the authors use finite state machines with datapaths (FSMD), a Turing-complete mathematical model of computer programs, and convert both the student assignments and a reference solution into this model, after which they are checked for equivalence. However, this approach also requires that a reference solution is provided. The paper also highlights an important aspect of static analysis: while comparing program output shows whether a program works, it does not tell us anything about how it is done, which is especially important in an introductory programming course, where a good coding style is necessary to pass in addition to a functioning program. It is also mentioned that static analysis avoids some drawbacks of dynamic analysis when it comes to automation, such as nontermination when running student programs.

In general, our work differs from previous studies in that we focus entirely on finding errors in students’ assignments using static analysis, for which no prior studies were found. We are not interested in automated grading, especially since the grading in the courses we are developing checkers for is either pass or fail, with nothing in between. Some of the aforementioned solutions also rely on comparison with a reference solution, whereas we are only interested in checking for violations of the lab instructions.

4. Method

The first step was to search online research databases for previous research on static analysis being used for automatic checking of programming assignments. We did not find any studies using static analysis for this specific purpose; previous studies relied on dynamic methods such as running the program, or emphasized other aspects such as grading.

To study the potential of static analysis for assignment checking in practice, an implementation was developed as a proof-of-concept. Since the study focuses on programs developed in C (being the language used in many of the courses at MDH, including the introductory programming courses), we chose Clang as the tool for developing the implementation. Clang features extensible tooling that can be used to perform static analysis, including Clang-tidy, a tool that can inspect the abstract syntax tree of C/C++ programs. This suits our intended use case well since most of the expected requirements should be decidable on the AST level.

We contacted the course examiners for the introductory programming courses for input on requirements and for instructions from previous instances of the course. We discussed which requirements would be feasible to implement given the scope of the project as well as the limitations of the analysis tool. The implementation was developed based on these requirements, which are listed in the problem formulation.

Our method for testing the quality of the implementation was to run it on student assignments and collect data. Note that the assignments used for testing were completed assignments from previous years and were therefore expected to be free of errors, since they had been corrected and approved. The property we were first and foremost interested in was accuracy: do any errors slip by, and are there any false positives? Determining this requires manually checking the code, so additional care is necessary here. We also considered performance, since Clang checkers can be slow (especially when using Clang SA), but since the assignment source files are small, we did not expect performance to be an issue. Another aspect we considered was the usability of name matching in assignments compared to matching without names, since precise naming was not a hard requirement in the assignments.

We collected the results of these observations and drew our conclusions based on them. We also considered the warnings of built-in checkers.

5. Ethical and Societal Considerations

Since actual student assignments were used, some additional legal work was necessary in order to conduct the evaluation. We ensured that the original authors of the code were given credit and that their names were not used for any data collection purposes.

On a larger scale, automating correction in this fashion raises some ethical issues regarding the responsibility of the user: the tool is intended only as a complement to manual correction, and its output should not be treated as an absolute decision.

6. Developing and evaluating the checkers

6.1. Clang-tidy

Clang-tidy is distributed as part of the Clang source code. Clang is a front end for LLVM, a modular compiler infrastructure that can work with different front ends (to support different programming languages) and different back ends (to generate instructions for different instruction sets), which makes it easy to extend. Clang also follows this philosophy by using a library-based design that makes it easy to develop tools based on Clang without necessarily going through the compilation phase.

Extending Clang-tidy with custom checkers is done by editing the source code directly and recompiling it. Clang-tidy checkers are divided into matcher and check functions. Matchers select nodes of interest in the AST using a DSL (domain-specific language) in which predicates on the AST are formed by composing function calls. Matched nodes can be bound to identifiers so that they can later be retrieved and further processed by the corresponding check. In simple cases the check will most likely just emit a diagnostic warning, similar to the ones that compilers emit, but more advanced checks might need a more thorough analysis of the matched node, resulting in additional traversal of the tree. An example of a simple checker that warns against the use of global variables is shown below.

```cpp
#include "AssignmentGlobalsCheck.h"  // the check's class declaration
#include "clang/ASTMatchers/ASTMatchFinder.h"

using namespace clang::ast_matchers;

namespace clang::tidy::misc {
// Select variable declarations with global storage and bind them to "var".
void AssignmentGlobalsCheck::registerMatchers(MatchFinder *Finder) {
  Finder->addMatcher(varDecl(hasGlobalStorage()).bind("var"), this);
}

// Invoked for every match: fetch the bound node and emit a warning.
void AssignmentGlobalsCheck::check(const MatchFinder::MatchResult &Result) {
  const auto *MatchedDecl = Result.Nodes.getNodeAs<VarDecl>("var");
  diag(MatchedDecl->getLocation(), "%0 is global or static") << MatchedDecl;
}
} // namespace clang::tidy::misc
```

Matchers are divided into different categories: varDecl is a node matcher that finds all variable declarations, while hasGlobalStorage is a narrowing matcher that constrains the results to variables with global storage duration. Note that this is not limited to variables with global scope but also includes static variables declared inside functions. bind attaches an identifier to the matched node so that it can be retrieved in the callback function check.
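The difference between global scope and global storage duration can be seen in a short C example with hypothetical names. Both call_count and total live for the entire run of the program, so a matcher built from varDecl(hasGlobalStorage()) reports both, while the parameter step has automatic storage and is not reported.

```c
/* A file-scope global: has global storage duration. */
int call_count = 0;

int running_total(int step)
{
    /* A static local: only visible inside this function, but it also has
     * global storage duration and keeps its value between calls. */
    static int total = 0;
    call_count++;
    total += step;  /* `step` has automatic storage duration */
    return total;
}
```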

check is the callback function that is invoked on every successful match. getNodeAs is used to access the bound node through its identifier. Note that the bound nodes are stored without their static type, so check has no knowledge of the node’s type and must request a dynamic cast to a user-provided type (in this case VarDecl). diag emits a warning when called with a source code location and a format string.
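The division of labour between matchers and checks can be modelled in a few lines of plain C. The sketch below is a deliberately simplified, hypothetical stand-in for Clang's AST and ASTMatchers library, not its API: declarations are plain records, a matcher is a predicate, and a check is a callback that runs once per match and emits a diagnostic in the style of the checker shown above.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical, heavily simplified stand-in for a variable declaration node. */
struct var_decl {
    const char *name;
    int line;
    bool global_storage;  /* true for file-scope globals and static locals */
};

typedef bool (*matcher_fn)(const struct var_decl *decl);
typedef void (*check_fn)(const struct var_decl *decl);

/* The "matcher": a predicate that selects nodes of interest. */
bool has_global_storage(const struct var_decl *decl)
{
    return decl->global_storage;
}

/* The "check": invoked once for every matched node. */
void warn_global(const struct var_decl *decl)
{
    printf("line %d: '%s' is global or static\n", decl->line, decl->name);
}

/* Run the matcher over all nodes, call the check on each match and
 * return the number of matches. */
size_t match_and_check(const struct var_decl *decls, size_t n,
                       matcher_fn matches, check_fn check)
{
    size_t count = 0;
    for (size_t i = 0; i < n; i++) {
        if (matches(&decls[i])) {
            check(&decls[i]);
            count++;
        }
    }
    return count;
}
```

Keeping selection and reporting separate is what lets the same predicate be reused by different checks, which is the same motivation behind Clang-tidy's split between registerMatchers and check.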

6.2. Clang Static Analyzer

Just like Clang-tidy, the Clang Static Analyzer is distributed as part of the Clang source code. Clang SA performs path-sensitive analysis; that is, it considers every possible branch the program may take. This is done using symbolic execution, which is similar to executing a program, but instead of concrete values, symbolic values are used, together with the constraints on what those values may be. For example, consider the following code:

```c
x = malloc(n);
if (x != NULL) {
    /* ... */
} else {
    /* ... */
}
```

Here, there is no way to know the value of x, since malloc may return NULL instead of a valid pointer, and therefore there is no way to know which branch is taken. However, by exploring both branches with a different set of constraints on x in each, we can draw conclusions based on the possible values of x, since we know that x is a valid (non-NULL) pointer in the then-branch of the conditional, while it is guaranteed to be NULL in the else-branch.

Extending Clang SA is somewhat similar to extending Clang-tidy in that it uses an event callback model; however, since Clang SA performs data flow analysis, the events are more general and refer to stages of execution instead (calling a function, executing a statement, memory being deallocated when going out of scope, etc.). The matcher-callback model is eschewed in favor of more freedom in the implementation, which also results in a steeper initial learning curve.
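The forking of states at the branch in the malloc example can be sketched with a toy model, assuming that the only thing tracked is whether x may be NULL. This is a drastic simplification of Clang SA's constraint tracking, and all names are hypothetical. After x = malloc(n) the value is unconstrained; assuming the condition x != NULL to be true or false yields two states with opposite constraints, and a dereference is only safe in the state where x is known to be non-NULL.

```c
#include <stdbool.h>

/* What one path knows about the pointer x. */
enum ptr_constraint { PTR_UNKNOWN, PTR_NONNULL, PTR_NULL };

struct path_state {
    enum ptr_constraint x;
};

/* After `x = malloc(n)` the value is symbolic: it may or may not be NULL. */
struct path_state after_malloc(void)
{
    struct path_state s = { PTR_UNKNOWN };
    return s;
}

/* Assume the condition `x != NULL` evaluates to `cond_true` on this path.
 * Returns false if that contradicts what the path already knows
 * (an infeasible branch); otherwise writes the constrained state. */
bool assume_x_not_null(struct path_state in, bool cond_true,
                       struct path_state *out)
{
    enum ptr_constraint wanted = cond_true ? PTR_NONNULL : PTR_NULL;
    if (in.x != PTR_UNKNOWN && in.x != wanted)
        return false;
    out->x = wanted;
    return true;
}

/* A dereference may hit NULL unless the path has established otherwise. */
bool deref_may_be_null(struct path_state s)
{
    return s.x != PTR_NONNULL;
}
```

Exploring both results of assume_x_not_null corresponds to following both edges of the branch; a state that makes a branch infeasible simply stops that path.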

6.3. Developing the checkers

The checkers were mostly developed using Clang-tidy, since most of them are simple enough that AST analysis is sufficient. Especially straightforward were the checkers for goto statements and variables with global storage, since they require no further processing and the matchers are built in, with little or no narrowing needed. Initially, the struct and function declaration checkers were implemented as separate checkers that were modified on a case-by-case basis, i.e. hard-coded for specific assignments. However, this made it impossible to switch between assignments without recompiling: unlike the other checkers, which apply to all assignments (since they simply enforce a coding standard), declarations vary between assignments, and the checkers would therefore produce error messages on every assignment they did not apply to unless the source code was recompiled (which is slow due to the size of the LLVM codebase and unsuitable for correcting different assignments in succession). We solved this by merging the struct and function checkers into a generalized declaration checker that reads the actual declaration information at runtime from an optionally provided JSON³ file. LLVM provides its own JSON library, which made it possible to add this feature without introducing any external dependencies.
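The idea behind the generalized declaration checker can be sketched independently of Clang. In the minimal C sketch below, which is an assumption about the approach and not the thesis implementation, the expected declaration is a hard-coded array standing in for the per-assignment JSON file, types are compared as strings rather than as Clang AST types, and names are compared only on request, since naming was not a hard requirement. A second function expresses the pass-by-reference rule for struct parameters.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* One struct field or function parameter: its type spelled as text, and its name. */
struct decl_item {
    const char *type;
    const char *name;
};

/* Compare a declaration found in student code with the expected one.
 * Types must match in order; names are compared only if check_names is set. */
bool decl_matches(const struct decl_item *expected, size_t n_expected,
                  const struct decl_item *actual, size_t n_actual,
                  bool check_names)
{
    if (n_expected != n_actual)
        return false;
    for (size_t i = 0; i < n_expected; i++) {
        if (strcmp(expected[i].type, actual[i].type) != 0)
            return false;
        if (check_names && strcmp(expected[i].name, actual[i].name) != 0)
            return false;
    }
    return true;
}

/* Pass-by-reference rule: a parameter whose type names a struct must be a
 * pointer. Typedef names for structs are not recognized by this text test. */
bool struct_param_by_pointer(const struct decl_item *param)
{
    bool is_struct = strncmp(param->type, "struct ", 7) == 0;
    bool is_pointer = strchr(param->type, '*') != NULL;
    return !is_struct || is_pointer;
}
```

Comparing types as text is fragile (a typedef for the struct would slip through), which is one reason to compare the types in the AST instead, as the Clang-based checker can.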

The allocation checker, which ensures that the return value of malloc/calloc is checked for NULL, was originally implemented as a Clang-tidy checker. Although such a check is theoretically possible at the AST level, the result suffered from the inherent limitations of AST-level analysis. The implementation had a bug where only the first occurrence of each type of error was found, and it was notoriously difficult to debug due to its complexity. Additionally, the limitations of syntactic analysis made it difficult to account for all the possible ways in which the return value can be checked for NULL before the pointer is used. Therefore, we decided to implement this checker using Clang SA instead. Although this version is more difficult to understand, since Clang SA is a considerably more advanced tool, it pays off in the end by being more concise and requiring no explicit handling of different edge cases, since the analyzer itself keeps track of value information.

³ JavaScript Object Notation; a data format that is based on the JavaScript object syntax but is used by many different

Implementing the allocation return checker involves keeping track of function calls (to detect calls to malloc/calloc) as well as of dereferences of pointers that may be NULL; conveniently, Clang SA already supports both events, the latter being represented by ImplicitNullDerefEvent⁴. Symbolic values for pointers are represented by memory regions, which abstract the memory that the pointer can point to; a region has a starting location (which the pointer points to) and a (most likely unknown) range. Determining whether a dereference was performed on a dynamic memory region is achieved by keeping track of all symbolic values representing dynamically allocated memory regions and checking whether the dereferenced memory region belongs to any of them. Note that some extra work is necessary to handle memory offsets, such as in array indexing, since p[5] does not refer to the same memory location as *p (the latter being equivalent to p[0]); fortunately, a method is already provided to get the base of a memory region, which reduces the problem to checking equivalence of memory locations.
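The base-region idea can be illustrated with a toy representation of memory regions (hypothetical types, not Clang SA's region classes): a region is identified by the allocation it belongs to plus an element offset, so *p and p[5] have different offsets but the same base. Reducing a region to its base before looking it up among the tracked allocations is what makes both dereferences count as uses of the malloc result.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_TRACKED 32

/* A region: the allocation it belongs to plus an element offset. */
struct region {
    int base_id;  /* identifies the allocation (the base region) */
    long offset;  /* element offset within that allocation */
};

/* Base regions returned by malloc/calloc. */
struct tracker {
    int ids[MAX_TRACKED];
    size_t count;
};

void track_allocation(struct tracker *t, int base_id)
{
    if (t->count < MAX_TRACKED)
        t->ids[t->count++] = base_id;
}

/* Analogous to taking the base of a region: drop the offset. */
int base_of(struct region r)
{
    return r.base_id;
}

/* True if the dereferenced region lies in a tracked dynamic allocation. */
bool is_dynamic_region(const struct tracker *t, struct region r)
{
    for (size_t i = 0; i < t->count; i++)
        if (t->ids[i] == base_of(r))
            return true;
    return false;
}
```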

6.4. Evaluating the checkers

The Clang fork was evaluated on a set of previously submitted exercises from two courses: DVA117 and DVA127. These courses were considered suitable for the study because they are taken by a large number of students, which motivates automation, and because their content is fundamental enough that formalizing the requirements is easy. One of our initial ideas was to test the fork as part of the grading in the course by letting teaching assistants use the program and comparing the time spent, to see whether there is a notable improvement in time and ease of use. This idea was abandoned, mainly due to time constraints, but also due to open questions about how the program should be used and distributed (since the program requires installation on the host machine). Since we instead evaluated previously corrected assignments, we did not expect to find many errors, but at the very least the evaluation allows us to find errors in the Clang fork and potential areas of improvement.

We acquired assignments from eight students in total, seven of them from DVA127 and one from DVA117. The DVA127 set contains two assignments per student, while the DVA117 set contains seven assignments per student. Note that the assignments vary in length, particularly the early assignments in DVA117.

The small number of assignments used for evaluation stems from the need to avoid legal issues regarding third parties being given access to them, which meant that we had to request permission from the students themselves. This was done by posting requests⁵ on the course pages within the learning platform. After approval was given, all existing versions of the code submissions were collected, anonymised and passed along to the author for evaluation, along with basic information about the submission result.

The evaluation was performed by running our forked version of Clang-tidy on all of the assignment source code files and comparing the output with any errors found when manually checking the code, to see whether the analyzer missed anything, along with any other observations. In addition to the checkers developed as part of this study, several built-in checks⁶ were enabled. Clang-tidy can also run Clang SA checks⁷, which were enabled as well (including the aforementioned custom checker that ensures NULL checking of memory allocations), which means that everything can be done in one pass without having to combine the tools ourselves. Checks are enabled or disabled using globs, which are passed as a command-line option. We performed some initial test runs on the assignments to determine a suitable set of checks that finds erroneous code without being too pedantic and cluttering the output with superfluous error messages. We arrived at the following checks:

⁴ https://clang.llvm.org/doxygen/classclang_1_1ento_1_1CheckerDocumentation.html

⁵ Done by the supervisor of the thesis, due to access and availability reasons. The anonymised code files are archived in a restricted online repository. Access for the purpose of verifying the experiments can be requested

⁶ https://clang.llvm.org/extra/clang-tidy/checks/list.html

⁷ https://clang.llvm.org/docs/analyzer/checkers.html

Included checks:

• misc-assignment-*

• misc-unused-parameters

• misc-no-recursion

• clang-analyzer-*

• clang-diagnostic-*

• bugprone*

• performance*

• readability*

• portability*

Excluded checks:

• -bugprone-narrowing-conversions

• -bugprone-reserved-identifier

• -clang-analyzer-security.insecureAPI.DeprecatedOrUnsafeBufferHandling

• -clang-analyzer-security.insecureAPI.strcpy

• -readability-isolate-declaration

• -readability-braces-around-statements

• -readability-else-after-return

• -readability-non-const-parameter

• -readability-magic-numbers

Note that each asterisk is a wildcard that matches any check whose name starts with the prefix before it. This provides a concise way to enable many Clang checks at once, since checks are grouped into categories and checks in the same category share a common prefix. Exclusions are applied after the inclusions to remove any unwanted checks that were included.
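The selection rule can be sketched as follows, assuming, as we read the Clang-tidy documentation, that the globs are processed in order and that the last matching glob decides whether a check is enabled. The matcher supports only the * wildcard and is an illustration, not Clang-tidy's own implementation.

```c
#include <stdbool.h>
#include <stddef.h>

/* Match `name` against `pattern`, where '*' matches any sequence of characters. */
bool glob_match(const char *pattern, const char *name)
{
    if (*pattern == '\0')
        return *name == '\0';
    if (*pattern == '*')
        return glob_match(pattern + 1, name) ||
               (*name != '\0' && glob_match(pattern, name + 1));
    return *pattern == *name && glob_match(pattern + 1, name + 1);
}

/* Process the globs in order: a plain glob enables matching checks and a
 * glob starting with '-' disables them, so later globs take precedence. */
bool check_enabled(const char *const *globs, size_t n, const char *check)
{
    bool enabled = false;
    for (size_t i = 0; i < n; i++) {
        bool negative = globs[i][0] == '-';
        const char *pattern = negative ? globs[i] + 1 : globs[i];
        if (glob_match(pattern, check))
            enabled = !negative;
    }
    return enabled;
}
```

With the globs bugprone*, readability* and -readability-magic-numbers, readability-misleading-indentation stays enabled while readability-magic-numbers is disabled, because the exclusion comes later in the list.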

misc-assignment-* is the prefix used for the Clang-tidy checks developed as part of this project, which have already been described. clang-analyzer-* enables all Clang SA checks, which might seem drastic but resulted in only a few error messages. clang-diagnostic-* enables checks that are normally only part of the compiler diagnostics; one of them checks for unused variables. This also requires that additional compiler flags are passed to Clang-tidy (we use -Wall and -Wextra). misc-unused-parameters covers a similar use case, but specifically for function parameters. misc-no-recursion ensures that no recursion is used, including mutual recursion; one issue that has occurred in previous instances of DVA117 is that the main() function was called from other parts of the code. performance* contains several checks related to performance, although most of these only apply to C++ rather than C. bugprone* covers various common mistakes, such as integer division where a floating-point value is expected. portability* makes sure that no platform-specific libraries are used. In short, we include most of the available checks, aside from codebase-specific checks.
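Two hypothetical student-style fragments illustrate what the recursion and integer-division checks target. is_even and is_odd never call themselves directly, yet the call graph contains the cycle is_even → is_odd → is_even, which is the mutual recursion that misc-no-recursion is described as covering. average_truncated performs the division in int arithmetic before converting the result to double, the kind of mistake bugprone* is described as catching; the exact diagnostics are not shown here.

```c
#include <stdbool.h>

bool is_odd(unsigned n);

/* Mutual recursion: a cycle in the call graph, not a direct self-call. */
bool is_even(unsigned n)
{
    return n == 0 ? true : is_odd(n - 1);
}

bool is_odd(unsigned n)
{
    return n == 0 ? false : is_even(n - 1);
}

/* sum / count is computed in int arithmetic, so the fraction is lost
 * before the result is converted to double. */
double average_truncated(int sum, int count)
{
    return sum / count;
}

/* Converting one operand first gives the intended floating-point result. */
double average(int sum, int count)
{
    return (double)sum / count;
}
```

For example, average_truncated(7, 2) returns 3.0, while average(7, 2) returns 3.5.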

The excluded checks are mostly excluded because they are too pedantic for an introductory programming course, and including them makes it harder to find the more important mistakes. The various readability checks are highly subjective and warn about multiple declarations in a single statement (readability-isolate-declaration), missing braces in if-else statements (readability-braces-around-statements) and else branches following a return statement in the if branch (readability-else-after-return). readability-non-const-parameter recommends that pointer parameters whose pointed-to data is not modified by the function should be const-qualified, which sounds tempting, but is probably excessive to demand in an introductory programming course. readability-magic-numbers advises using named constants instead of numeric literals, which is good advice in principle, but it also reacts to literals in ordinary variable assignments, which makes it excessive. The security check clang-analyzer-security.insecureAPI.strcpy considers strcpy outdated due to its inherent insecurity (unbounded copying), and clang-analyzer-security.insecureAPI.DeprecatedOrUnsafeBufferHandling advises against scanf for the same reason. bugprone-narrowing-conversions warns about implicit conversions such as from size_t to int. bugprone-reserved-identifier warns against _CRT_SECURE_NO_WARNINGS, which is sometimes defined when using MSVC to silence warnings against the (normally considered unsafe) scanf function in C. Since these are introductory programming courses, scanf is considered the canonical way to accept user input due to its ease of use.
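For illustration, the following hypothetical C fragment is written in a style common in introductory courses and contains several constructs that the excluded checks would be expected to report, even though we consider them acceptable at this level. The comments name the excluded check we would expect to fire; the fragment is not taken from any student submission.

```c
#define _CRT_SECURE_NO_WARNINGS /* bugprone-reserved-identifier */
#include <string.h>

/* readability-non-const-parameter: `values` is only read, so it could be
 * declared as const int *. */
int count_above(int *values, int n, int limit)
{
    int i, count = 0; /* readability-isolate-declaration */
    for (i = 0; i < n; i++)
        if (values[i] > limit) /* readability-braces-around-statements */
            count++;
    return count;
}

/* Returns -1, 0 or 1 depending on the sign of x. */
int sign(int x)
{
    if (x < 0)
        return -1;
    else /* readability-else-after-return */
        return x > 0;
}

/* clang-analyzer-security.insecureAPI.strcpy: unbounded copy; the caller
 * must guarantee that dest is large enough to hold src. */
void set_name(char *dest, const char *src)
{
    strcpy(dest, src);
}
```

All three functions behave correctly, which is why we consider these warnings noise rather than errors for novice programmers.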

7. Results

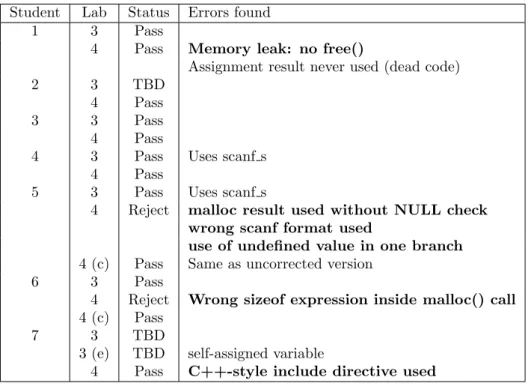

In total, the extended Clang fork was evaluated on 35 .c files written by students in two different introductory courses. Tables 1 and 2 show the results of the evaluation.

| Student | Lab | Status | Errors found |
|---|---|---|---|
| 1 | 3 | Pass | |
| 1 | 4 | Pass | Memory leak: no free(); assignment result never used (dead code) |
| 2 | 3 | TBD | |
| 2 | 4 | Pass | |
| 3 | 3 | Pass | |
| 3 | 4 | Pass | |
| 4 | 3 | Pass | Uses scanf_s |
| 4 | 4 | Pass | |
| 5 | 3 | Pass | Uses scanf_s |
| 5 | 4 | Reject | malloc result used without NULL check; wrong scanf format used; use of undefined value in one branch |
| 5 | 4 (c) | Pass | Same as uncorrected version |
| 6 | 3 | Pass | |
| 6 | 4 | Reject | Wrong sizeof expression inside malloc() call |
| 6 | 4 (c) | Pass | |
| 7 | 3 | TBD | |
| 7 | 3 (e) | TBD | Self-assigned variable |
| 7 | 4 | Pass | C++-style include directive used |

Table 1: Results for the DVA127 course. Bold text indicates errors the author considers important. Labs marked with (c) are corrected versions of a previous submission; a number is added if multiple revisions exist. Labs marked with (e) are extended versions submitted as part of the examination process of the course. The statuses are Pass (ready for the formal examination), TBD (to be determined, i.e. handed in but not yet corrected) and Reject (needs correction and resubmission before the examination).

| Student | Lab | Status | Errors found |
|---|---|---|---|
| 1 | 1.2-1 | Pass | |
| 1 | 1.3-2 | Pass | Uses scanf_s |
| 1 | 2.1-1 | Pass | |
| 1 | 2.2-1 | Pass | |
| 1 | 2.3-1 | Pass | |
| 1 | 3.1 | Pass | Parse failure due to umlauts in identifiers |
| 1 | 3.2 | Pass | Parse failure due to umlauts in identifiers |
| 1 | 4.1 | Pass | |
| 1 | 4.2 | Pass | Uses gets(); no return value in one branch |
| 1 | 5.1 | Pass | |
| 1 | 5.2 | Pass | Parse failure due to umlauts in identifiers; uses gets(); declared but unused variable |
| 1 | 5.3 | Pass | |
| 1 | 6.1 | Pass | Uses gets() |
| 1 | 7 | Reject | Allocation functions used without including stdlib.h; return used without a value despite one being expected; incompatible pointer type assignment; uses gets(); use of undefined function; unused variable; passing struct to free() rather than its address |
| 1 | 7 (c1) | Reject | Allocation functions used without including stdlib.h; return used without a value despite one being expected; uses gets(); unused variable; passing struct to free() rather than its address |
| 1 | 7 (c2) | TBD | Same as previous; additional warning against assignment in condition |

Table 2: Results for the DVA117 assignment evaluation. Note that labs 1-5 are generally small stand-alone programs, whereas lab 7 is an extension of the program developed in lab 6.

Note that some errors that appeared in the output were considered insignificant and purposely omitted from the tables. For example, comparing the return value of strlen() (which is of type size_t) with an int causes warnings, but as previously mentioned, the courses are introductory programming courses and there are more important things to focus on than different integer types.

The built-in checks were useful in finding many errors, ranging from minor to severe. While scanf() is considered the "canonical" way to read user input in these courses, some students use scanf_s() or gets() instead. The former is not portable, since it is an MSVC extension, while the latter is deprecated and was removed outright in the C11 standard. Some examples of "dead code" were found, such as unused results of assignments and variables that are declared but never used. While these are not inherently dangerous, they can sometimes hint at where underlying issues in the code can be found.
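Where a whole line of text has to be read, a bounded and portable alternative to gets() is fgets(), which is part of standard C. The sketch below (the helper name read_line is hypothetical) reads at most size - 1 characters and strips the trailing newline; it is one possible approach and not something the courses prescribe.

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Reads one line from `stream` into `buf` (capacity `size`), removing the
 * trailing newline if present. Unlike gets(), fgets() never writes more
 * than `size` bytes, so an overlong line is truncated instead of
 * overflowing the buffer. Returns false on end of file or read error. */
bool read_line(char *buf, size_t size, FILE *stream)
{
    if (size == 0 || fgets(buf, (int)size, stream) == NULL)
        return false;
    buf[strcspn(buf, "\n")] = '\0';
    return true;
}
```

If a line is longer than the buffer, the remaining characters stay in the stream and are returned by the next call.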

Among the more severe errors found with the built-in checks was one instance of a memory leak due to a missing call to free(). There were also some instances of functions not returning a value in certain situations despite having a non-void return type, which results in undefined behavior if the caller uses the returned value. Similarly, there were a few instances of values being undefined under certain conditions (depending on branching in the code). These errors can be difficult to find manually, since it is not always clear whether all branches of the code behave as expected. Clang-tidy also found issues with pointers that compilers generally do not report by default, such as suspicious sizeof expressions inside malloc() calls due to type mismatches.
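The sizeof mismatch mentioned above can be illustrated with a small hypothetical example. Writing sizeof(struct point *) instead of sizeof(struct point) allocates room for pointers rather than structs; a common idiom that avoids this class of error is to take the size from the pointer being assigned, as in sizeof *p. The struct and function names are ours, not from the evaluated assignments.

```c
#include <stddef.h>
#include <stdlib.h>

struct point {
    double x;
    double y;
};

/* Allocates an array of n zero-initialized points, or returns NULL.
 *
 * The kind of mistake reported in the evaluation looks like this:
 *     struct point *p = malloc(n * sizeof(struct point *));
 * which allocates space for n pointers (8 bytes each on typical 64-bit
 * platforms) instead of n structs (16 bytes each there). */
struct point *make_points(size_t n)
{
    /* Taking the size from *p keeps it in sync with the pointer's type. */
    struct point *p = malloc(n * sizeof *p);
    if (p == NULL)
        return NULL;
    for (size_t i = 0; i < n; i++) {
        p[i].x = 0.0;
        p[i].y = 0.0;
    }
    return p;
}
```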

Regarding the checks developed as part of the study, the results mostly matched our expectations. None of the assignments contained any goto statements or global variables, which prevents us from drawing any significant conclusions about those checks. This does not make them redundant, since they should (in theory) help prevent the use of these constructs, but their reliability and usefulness cannot be determined at this point. The experimental function/struct checks were used on the labs in DVA127, but all of the assignments matched the required signatures with regard to types, meaning that no errors were found. Some name mismatches occurred: student 1 in DVA127 capitalized the first letter of the identifiers, while student 3 named one function differently in lab 3. These are not considered errors, since the naming is not a strict requirement. The unnamed counterparts of these checks functioned as expected, but this raises the question of whether identifier naming should be enforced to make the error messages clearer, since identifying functions and structs based solely on their types makes it more difficult to tell where the error is. The first mismatch could also be handled by case-insensitive matching, although that raises the possibility of extending the matching further to support other variants of the same function name, such as underscores instead of camelCase.
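The relaxed name matching suggested above could, for example, ignore both letter case and underscores when comparing a student's identifier with the one in the instructions. The following is a minimal sketch of such a comparison; names_match is a hypothetical helper and not part of the checks developed in this study.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>

/* Compares two identifiers while ignoring letter case and underscores,
 * so that e.g. "printMenu", "PrintMenu" and "print_menu" are treated as
 * the same name. */
bool names_match(const char *a, const char *b)
{
    assert(a != NULL && b != NULL);
    for (;;) {
        /* Skip underscores in both names before comparing characters. */
        while (*a == '_')
            a++;
        while (*b == '_')
            b++;
        if (*a == '\0' || *b == '\0')
            return *a == *b; /* equal only if both ended together */
        if (tolower((unsigned char)*a) != tolower((unsigned char)*b))
            return false;
        a++;
        b++;
    }
}
```

Ignoring underscores entirely is a deliberate simplification: it also treats names such as is_ok and iso_k as equal, which seems acceptable when the goal is only to relate a student's function to the one described in the instructions.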

Finally, the check developed for Clang SA that enforces NULL checks on the results of memory allocation functions managed to find the only case of an unchecked return value from malloc(). Further testing would be necessary to determine how reliable it is, and whether it triggers any false positives or fails in certain situations.

Interestingly, some assignments marked as Pass contained important errors, which speaks in favor of using static analysis in programming courses. Considering the number of assignments that are corrected during the courses, it is not difficult to believe that some mistakes go unnoticed. If static analysis is used, less effort needs to be spent on finding these errors, and more can be spent on other issues as well as on feedback.

One thing that stood out was that the memory leak checker (a built-in check in Clang SA) was unable to catch a memory leak in the lab 4 assignment from student 5: the program contains no call to free(), yet Clang-tidy gave no warning. Isolating the issue, we find that it is surprisingly easy to "fool" Clang-tidy:

Left (with a for loop):

```c
#include <stdlib.h>

int main(void)
{
    int *q = malloc(sizeof(int) * 10);
    if (q == NULL)
        return EXIT_FAILURE;
    for (int i = 0; i < 10; i++)
        q[i] = 0;
    return EXIT_SUCCESS; /* q is never freed */
}
```

Right (without the loop):

```c
#include <stdlib.h>

int main(void)
{
    int *q = malloc(sizeof(int) * 10);
    if (q == NULL)
        return EXIT_FAILURE;
    return EXIT_SUCCESS; /* q is never freed */
}
```

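Since free() is not called on the pointer returned by malloc() before the program terminates, both programs contain memory leaks. However, for some reason the for loop in the code on the left prevents Clang SA from finding any memory leak errors. While finding memory leaks in general can be difficult, it is not clear why Clang SA fails in this particular case. One plausible explanation is that Clang SA by default only simulates a loop for a small, fixed number of iterations (four, unless configured otherwise) and stops exploring paths that exceed this limit; since this loop always runs ten times, no analyzed path reaches the final return statement where the leak would be detected.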

We also considered the possibility of performance being an issue due to the usage of Clang SA, but this turned out to be a non-issue due to the small size of the source files of the assignments despite including most of the checks available among Clang-tidy and Clang SA.

8. Discussion

The evaluation shows that static analysis, while not perfect, is a viable tool for finding certain errors in students’ assignments in programming courses. Especially notable is that we were able to find errors in assignments that were considered correct. Extending an existing static analyzer provides the opportunity to tailor the behavior according to existing guidelines for the course assignments, though it should also be mentioned that even the built-in checks were useful in finding errors.

However, one should not rely too heavily on static analysis for correctness. As our results showed, even a state-of-the-art static analyzer like Clang SA was unable to find a (supposedly simple) memory leak. Our recommendation is that static analysis should be used to complement existing error-finding methods, and that its users should be aware of the limitations of their tools.

It should also be noted that the error messages from Clang-tidy are not always self-explanatory, and those who wish to use a static analyzer should familiarize themselves with the basics of how the tool works. In the case of Clang SA, path-sensitive checks sometimes print additional notes describing which path through the code was taken, which can be confusing to those unfamiliar with path-sensitive analysis. Despite these shortcomings, we believe that the initial investment in learning these tools pays off in the end.

Another issue we found is that Clang-tidy uses the filename extension to determine whether a file is a C or C++ file, with no apparent command-line option to change this. Some students use the .cpp extension for C source files, most likely because Visual Studio generates it by default. This was resolved temporarily by renaming the .cpp files to .c, which makes the parser fail if any C++ constructs are encountered; this is the desired behavior, since C++-exclusive features are not allowed. Since a wrapper script was used to run Clang-tidy, one way to support both extensions while achieving the same result would be to let the script temporarily rename such files.
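The renaming step in such a wrapper could look like the following C sketch, which only computes the name to use (lab7.cpp becomes lab7.c) and leaves the actual copying or renaming, for example with the standard rename() function, to the caller. The function name c_source_name is hypothetical; the wrapper script used in this study is not reproduced here.

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Writes the name under which `path` should be passed to Clang-tidy into
 * `out` (capacity `out_size`): a trailing ".cpp" is replaced by ".c" so
 * that the file is parsed as C, and any other name is copied unchanged.
 * Returns false if the result does not fit in `out`. */
bool c_source_name(const char *path, char *out, size_t out_size)
{
    const char *suffix = ".cpp";
    size_t len = strlen(path);
    size_t suffix_len = strlen(suffix);
    size_t keep = len;

    if (len >= suffix_len && strcmp(path + len - suffix_len, suffix) == 0)
        keep = len - suffix_len;

    /* Copy the first `keep` characters and append ".c" if we cut ".cpp". */
    int written = snprintf(out, out_size, "%.*s%s", (int)keep, path,
                           keep == len ? "" : ".c");
    return written >= 0 && (size_t)written < out_size;
}
```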

8.1. Future Work

There are many different ways to expand upon the basic ideas presented in this paper. We only covered the use of static analysis, but in practice, this method could be combined with the aforementioned textual output checking, or with test cases when applicable. A larger study could be conducted where both methods complement each other; a comparison could also be performed to see which one is more useful in finding errors, perhaps in relation to the time spent on implementing them.

The study was also limited in scope; as mentioned earlier, we initially planned to incorporate static analysis in the courses themselves by letting the teaching staff apply the tool. Such a study could also compare the time taken with that of manual correction, to find out whether there is a noticeable difference in time spent on correcting. This would also result in a much larger data set than in this study.

One issue with using LLVM for the implementation is that changes are made by modifying the original source code, which makes it necessary to maintain a separate fork of LLVM. The LLVM codebase is also large and has long compile times, which makes both development and deployment harder; the latter is especially apparent when distributing the program, since the source code needs to be compiled on each computer. Depending on the needs, a different tool could be chosen instead, such as C AST walkers available in other languages. This seems reasonable for the Clang-tidy checkers, but would also limit the program to syntactic checks. Many programming courses also use languages other than C/C++, and similar implementations for those languages would require completely different frameworks. Finally, we used JSON files to adapt the checkers to different assignments without requiring recompilation, but the JSON format also imposes some limitations. In our case, function signatures and struct members require exact matches, but one might wish to formulate more general predicates, such as requiring a function to take at least two integer parameters while still allowing additional parameters without causing an error.
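The difference between exact matching and such a general predicate can be sketched in C as follows. The signature representation (return type and parameter types as spelled strings) and the function names are hypothetical simplifications; the actual checkers operate on the Clang AST and read their expected signatures from JSON.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified view of a function signature: the spelled
 * return type and parameter types. */
struct signature {
    const char *return_type;
    const char *const *param_types;
    size_t param_count;
};

/* Exact matching: same return type and the same parameter types in the
 * same order. */
bool signature_equals(const struct signature *a, const struct signature *b)
{
    if (strcmp(a->return_type, b->return_type) != 0 ||
        a->param_count != b->param_count)
        return false;
    for (size_t i = 0; i < a->param_count; i++)
        if (strcmp(a->param_types[i], b->param_types[i]) != 0)
            return false;
    return true;
}

/* A more general predicate: the function takes at least `min` parameters
 * of type `type`, in any position, and other parameters are allowed. */
bool has_at_least_params_of_type(const struct signature *s, const char *type,
                                 size_t min)
{
    size_t found = 0;
    for (size_t i = 0; i < s->param_count; i++)
        if (strcmp(s->param_types[i], type) == 0)
            found++;
    return found >= min;
}
```

A student function taking (int, const char *, int) fails the exact match against an expected (int, int) signature but satisfies "at least two int parameters". Comparing spelled type names is itself a simplification; a real checker would compare canonical types from the AST so that typedefs do not cause spurious mismatches.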

At one point we considered the possibility of a DSL (domain-specific language) acting as a front end to Clang AST matchers: a simple predicate language from which code for AST matchers would be generated and then compiled as usual. The advantage of this approach would be that teaching assistants could create additional checkers without having to study the LLVM source code. The idea was abandoned for several reasons. Firstly, creating a front end that is guaranteed to produce correct AST matcher code that compiles without errors is difficult, considering that matchers can only be composed in certain ways. Secondly, adding new checks to Clang-tidy requires either manually modifying several files correctly or using an included Python script that does the same; either way, this introduces an additional potential source of bugs that could remove the benefits the front end aimed to provide in the first place. Finally, as mentioned before, LLVM has built-in support for handling JSON data at runtime, which removes the need for recompilation altogether.

9. Conclusions

The upward trend of MOOCs with large numbers of participants makes automation tempting, since less time spent on correcting means more time can be spent on providing valuable feedback to the students. The programming courses at MDH contain several requirements that are decidable at the AST level, making them prime candidates for static analysis. Previous studies focused mostly on other aspects of grading in programming courses, and none focused on using an existing static analysis tool for this purpose. We extended Clang-tidy as well as Clang SA to account for requirements in existing introductory courses at MDH, and evaluated the implementation by running it on student assignments from these courses.

The evaluation was performed on a mixture of completed and ungraded assignments. We found errors in the assignments, ranging from minor to significant. Especially significant was that some of the completed assignments contained notable errors, which could have been caught if static analysis had been used. In most cases the analyzer behaved as expected, but it did fail to find one memory leak. This leads us to conclude that static analysis is a good complement to manual checking, despite the initial learning curve.

References

[1] N. Nethercote and J. Seward, "Valgrind: a framework for heavyweight dynamic binary instrumentation," ACM SIGPLAN Notices, vol. 42, no. 6, pp. 89–100, 2007.

[2] L. Voufo, M. Zalewski, and A. Lumsdaine, "ConceptClang: an implementation of C++ concepts in Clang," in Proceedings of the Seventh ACM SIGPLAN Workshop on Generic Programming, 2011, pp. 71–82.

[3] T. Kremenek, "Finding software bugs with the Clang Static Analyzer," Apple Inc., 2008.

[4] A. Dergachev. (2019) Developing the Clang Static Analyzer. [Online]. Available: https://www.youtube.com/watch?v=g0Mqx1niUi0

[5] J. Goopio and C. Cheung, "The MOOC dropout phenomenon and retention strategies," Journal of Teaching in Travel & Tourism, vol. 0, no. 0, pp. 1–21, 2020. [Online]. Available: https://doi.org/10.1080/15313220.2020.1809050

[6] K. M. Ala-Mutka, "A survey of automated assessment approaches for programming assignments," Computer Science Education, vol. 15, no. 2, pp. 83–102, 2005. [Online]. Available: https://doi.org/10.1080/08993400500150747

[7] H. Kitaya and U. Inoue, "An online automated scoring system for Java programming assignments," International Journal of Information and Education Technology, vol. 6, no. 4, p. 275, 2016.

[8] S. A. Mengel and V. Yerramilli, "A case study of the static analysis of the quality of novice student programs," SIGCSE Bull., vol. 31, no. 1, pp. 78–82, Mar. 1999. [Online]. Available: https://doi-org.ep.bib.mdh.se/10.1145/384266.299689

[9] C. Kollmann and M. Goedicke, "A specification language for static analysis of student exercises," in 2008 23rd IEEE/ACM International Conference on Automated Software Engineering, 2008, pp. 355–358.

[10] K. K. Sharma, K. Banerjee, and C. Mandal, "A scheme for automated evaluation of programming assignments using FSMD based equivalence checking," in Proceedings of the 6th IBM Collaborative Academia Research Exchange Conference (I-CARE) on I-CARE 2014, ser. I-CARE 2014. New York, NY, USA: Association for Computing Machinery, 2014, pp. 1–4. [Online]. Available: https://doi-org.ep.bib.mdh.se/10.1145/2662117.2662127

A. Acknowledgements

I would like to offer my thanks to examiner Björn Lisper for making this project possible and getting the supervisor involved, as well as to the supervisor himself, Jean Malm, for coming up with the idea for the thesis project and ensuring it was not only educational but also interesting. I would also like to thank the students who allowed their assignments to be used as part of this study, giving us a data set to work with.