ARTIFICIAL INTELLIGENCE FOR NON-CONTACT-BASED DRIVER HEALTH MONITORING 2021 ISBN 978-91-7485-499-2 Address: P.O. Box 883, SE-721 23 Västerås, Sweden

Address: P.O. Box 325, SE-631 05 Eskilstuna, Sweden

Hamidur Rahman

degree in Electronics and Telecommunication Engineering (ETE), he continued his master’s studies at Blekinge Institute of Technology (BTH), Sweden, and studied Electrical Engineering (EE) with an emphasis on digital image processing, computer vision and signal processing. During that time, he wrote his master’s thesis on 3D Structure Detection Implementing Forward Camera Motion. In February 2015, he joined Mälardalen University, Sweden, as a research assistant in the department of Innovation, Design and Engineering (IDT). He started his doctoral studies in November 2015 in the same department. He has conducted research and developed methods for non-contact vision-based solutions for driver health monitoring using Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL). During his PhD studies, he has worked on several research projects, such as SafeDriver: A Real-Time Driver’s State Monitoring and Prediction System; INVIP: Indoor navigation for visually impaired using deep learning; Into DeeP: Deep learning for industrial applications; and HR R-peak detection quality index analysis: Non-contact based heart rate R-peak detection using facial image sequence, and has published articles in international journals and conferences. Hamidur would like to continue his research in the area of AI, ML and DL, especially for vision-based applications.

Mälardalen University Press Dissertations No. 330

ARTIFICIAL INTELLIGENCE FOR NON-CONTACT-BASED

DRIVER HEALTH MONITORING

Hamidur Rahman 2021

School of Innovation, Design and Engineering


Copyright © Hamidur Rahman, 2021 ISBN 978-91-7485-499-2

ISSN 1651-4238

Printed by E-Print AB, Stockholm, Sweden


Mälardalen University Press Dissertations No. 330

ARTIFICIAL INTELLIGENCE FOR NON-CONTACT-BASED DRIVER HEALTH MONITORING

Hamidur Rahman

Academic dissertation

which, for the degree of Doctor of Technology (teknologie doktorsexamen) in Computer Science at the School of Innovation, Design and Engineering, will be publicly defended on Wednesday, 7 April 2021, at 13.15 in Delta and digitally via Zoom, Mälardalen University, Västerås.

Faculty opponent: Docent Hasan Fleyeh, Högskolan Dalarna (Dalarna University)

School of Innovation, Design and Engineering


Abstract

In clinical situations, a patient’s physical state is often monitored by sensors attached to the patient, and medical staff are alerted if the patient’s status changes in an undesirable or life-threatening direction. However, in unsupervised situations, such as when driving a vehicle, connecting sensors to the driver is often troublesome and wired sensors may not produce sufficient quality due to factors such as movement and electrical disturbance. Using a camera as a non-contact sensor to extract physiological parameters based on video images offers a new paradigm for monitoring a driver’s health and mental state. Due to the advanced technical features in modern vehicles, driving is now faster, safer and more comfortable than before. To enhance transport security (i.e. to avoid unexpected traffic accidents), it is necessary to consider a vehicle driver as a part of the traffic environment and thus monitor the driver’s health and mental state. Such a monitoring system is commonly developed based on two approaches: driving behaviour-based and physiological parameters-based.

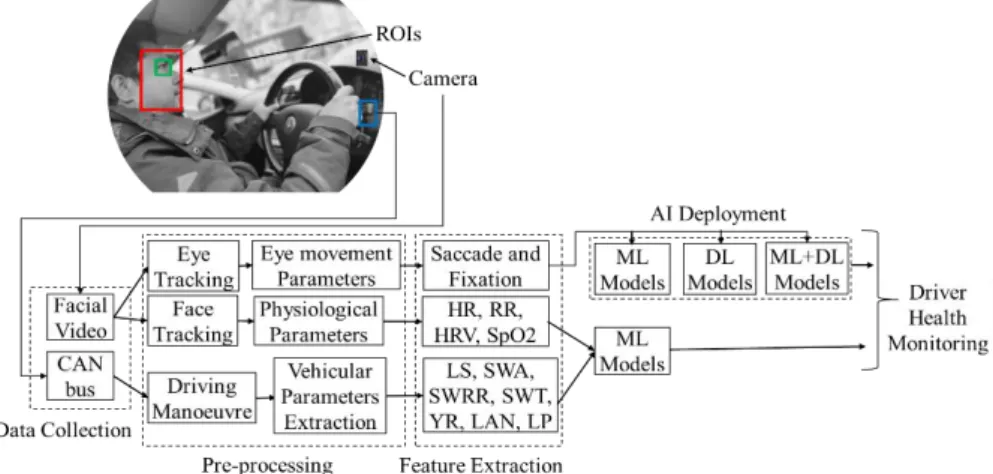

This research work demonstrates a non-contact approach that classifies a driver’s cognitive load based on physiological parameters obtained through a camera system and vehicular data collected from controller area networks (CAN), using image processing, computer vision, machine learning (ML) and deep learning (DL). In this research, a camera is used as a non-contact sensor and pervasive approach for measuring and monitoring the physiological parameters. The contribution of this research study is four-fold: 1) Feature extraction approach to extract physiological parameters (i.e. heart rate [HR], respiration rate [RR], inter-beat interval [IBI], heart rate variability [HRV] and oxygen saturation [SpO2]) using a camera system in several challenging conditions (i.e. illumination, motion, vibration and movement); 2) Feature extraction based on eye-movement parameters (i.e. saccade and fixation); 3) Identification of key vehicular parameters and extraction of useful features from lateral speed (LS), steering wheel angle (SWA), steering wheel reversal rate (SWRR), steering wheel torque (SWT), yaw rate (YR), lanex (LAN) and lateral position (LP); 4) Investigation of ML and DL algorithms for a driver’s cognitive load classification. Here, ML algorithms (i.e. logistic regression [LR], linear discriminant analysis [LDA], support vector machine [SVM], neural networks [NN], k-nearest neighbours [k-NN], decision tree [DT]) and DL algorithms (i.e. convolutional neural networks [CNN], long short-term memory [LSTM] networks and autoencoders [AE]) are used.

One of the major contributions of this research work is that physiological parameters were extracted using a camera. According to the results, feature extraction based on physiological parameters using a camera achieved the highest correlation coefficient of .96 for both HR and SpO2 compared to a reference system. The Bland-Altman plots showed 95% agreement between the camera and the reference wired sensors. For IBI, the achieved quality index was 97.5% considering a 100 ms R-peak error. The correlation coefficients for 13 eye-movement features between the non-contact approach and the reference eye-tracking system ranged from .82 to .95.
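As an illustration of how agreement figures of this kind are typically computed, the following minimal sketch calculates a Pearson correlation coefficient and Bland-Altman limits of agreement between two paired measurement series. It is not the thesis implementation: the function names, the synthetic heart-rate values and the noise level are assumptions made for the example, and the 1.96 standard deviation limits are the conventional Bland-Altman choice rather than a setting confirmed by the text.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bland_altman(x, y):
    """Return bias, lower limit, upper limit and the fraction of paired
    differences that fall inside the 95% limits of agreement."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    bias = sum(diffs) / n
    sd = math.sqrt(sum((d - bias) ** 2 for d in diffs) / (n - 1))
    lower, upper = bias - 1.96 * sd, bias + 1.96 * sd
    within = sum(lower <= d <= upper for d in diffs) / n
    return bias, lower, upper, within


# Synthetic data (assumption): a reference HR series in beats per minute and
# a camera-based estimate that equals the reference plus Gaussian noise.
random.seed(0)
reference_hr = [random.uniform(55, 95) for _ in range(200)]
camera_hr = [r + random.gauss(0, 2.0) for r in reference_hr]

r = pearson_r(camera_hr, reference_hr)
bias, lower, upper, within = bland_altman(camera_hr, reference_hr)
print(f"r = {r:.2f}, bias = {bias:.2f} bpm, "
      f"limits = [{lower:.2f}, {upper:.2f}] bpm, within = {within:.0%}")
```

With real recordings, `reference_hr` would hold the wired sensor values and `camera_hr` the camera-based estimates for the same time windows.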

For cognitive load classification using both the physiological and vehicular parameters, two separate studies were conducted: Study 1 with the 1-back task and Study 2 with the 2-back task. The highest average accuracy achieved in terms of cognitive load classification was 94% for Study 1 and 82% for Study 2 using LR considering the HRV parameter. The highest average classification accuracy of cognitive load was 92% using SVM considering saccade and fixation parameters. In both cases, 10-fold cross-validation was used for validation. The classification accuracies using CNN, LSTM and autoencoder were 91%, 90%, and 90.3%, respectively.
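The validation scheme described above can be sketched as follows: a minimal 10-fold cross-validation loop around a small logistic regression classifier trained by gradient descent. This is only an illustrative sketch under stated assumptions. The two synthetic features stand in for HRV-like inputs, the helper names (`k_fold_indices`, `train_logistic`, `cross_validate`) and the learning rate and epoch count are hypothetical, and the text does not state whether the original folds were stratified or how features were scaled.

```python
import math
import random


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and split them into k disjoint folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.1, epochs=200):
    """Fit weights and bias with batch gradient descent on the log-loss."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, xi):
    """Predict class 1 when the linear score is non-negative."""
    return 1 if sum(wj * xj for wj, xj in zip(w, xi)) + b >= 0 else 0


def cross_validate(X, y, k=10):
    """Return the mean accuracy and the per-fold accuracies."""
    accuracies = []
    for test_idx in k_fold_indices(len(X), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        w, b = train_logistic([X[i] for i in train_idx],
                              [y[i] for i in train_idx])
        correct = sum(predict(w, b, X[i]) == y[i] for i in test_idx)
        accuracies.append(correct / len(test_idx))
    return sum(accuracies) / k, accuracies


# Synthetic two-class data (assumption): label 0 and label 1 stand in for
# low and high cognitive load, each with two Gaussian features.
rng = random.Random(42)
X, y = [], []
for label, centre in ((0, -1.5), (1, 1.5)):
    for _ in range(100):
        X.append([rng.gauss(centre, 1.0), rng.gauss(centre, 1.0)])
        y.append(label)

mean_acc, fold_accs = cross_validate(X, y, k=10)
print(f"mean accuracy over {len(fold_accs)} folds: {mean_acc:.2f}")
```

Each sample is held out exactly once, so the reported average reflects performance on data not seen during training in that fold.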

This research study shows that such a non-contact-based approach using ML, DL, image processing and computer vision is suitable for monitoring a driver’s cognitive state.


Summary

Modern vehicles have been equipped with advanced technical functions to make them faster, safer and more comfortable. However, to increase transport security and safety, it is necessary to take the driver’s physical health and mental state into account and to adapt the necessary vehicle functions or act accordingly, for example by making it possible to control speed according to the situation.

The two most common approaches in the literature for monitoring a driver’s physical health and mental state are based on driving behaviour and on physiological parameters, respectively. The approach based on physiological parameters is one of the reliable approaches that give a more accurate indication of physical and mental health. For this, however, sensors are often attached to the human body, which is often troublesome and uncomfortable and prone to introducing measurement disturbances in dynamic situations such as driving. To eliminate such drawbacks, vision-based physiological parameter extraction offers a new frame of reference for monitoring a driver’s physical and mental status. This doctoral thesis presents an intelligent non-contact approach for monitoring a driver’s cognitive load based on two different types of parameters, namely vision-based physiological parameters and vehicular parameters.

The contribution of this research study is four-fold: 1) it implements non-contact camera-based methods for extracting physiological parameters under several challenging conditions, namely illumination, motion, vibration and movement; 2) it implements a non-contact method for extracting vehicular parameters (for example lateral speed and steering wheel reversal rate) from a driving simulator; 3) it implements a non-contact method for extracting eye-movement parameters (that is, saccade, which is a fast, jerky eye movement, and visual fixation); 4) it classifies a driver’s cognitive load using various machine learning algorithms and, in particular, deep learning algorithms.

The results show that the proposed non-contact camera-based approach has an accuracy of 95% compared to wired sensors. In terms of cognitive load classification, the highest average accuracy achieved is 94% using machine learning algorithms, namely logistic regression. On the other hand, the use of deep learning, namely convolutional neural networks, achieved an average accuracy of 91%. As a proof of concept, this research shows that the camera-based non-contact approach using image processing, computer vision and machine learning (especially deep learning) has enormous potential for monitoring a driver’s health and mental state.


Acknowledgment

All praises to Allah and His blessing for the completion of this thesis. It would have been very difficult to finish this PhD thesis without the unconditional support and inspiration of several great individuals who influenced and assisted me during this journey. I would like to acknowledge them!

First and foremost, I wish to express my sincere appreciation to my supervisor, Associate Professor Mobyen Uddin Ahmed, who convincingly guided and encouraged me to be professional and do the right thing even when the road got tough. Without his persistent help, selfless time and care, the goal of this thesis would not have been realized. I appreciate his guidance through each stage of the process. I am also indebted to my co-supervisors, Professor Shahina Begum and Professor Peter Funk, for their invaluable knowledge and advice, valuable time and guidelines. They have always provided suggestions, comments and feedback throughout my doctoral studies. I would like to thank them for their support in different situations, for guiding me whenever I was deadlocked on a problem, and for inspiring my interest in the development of innovative technologies.

Thanks to the Swedish Knowledge Foundation (KKS), Volvo Car Corporation (VCC), Toyota Motor Europe (TME), The Swedish National Road and Transport Research Institute (VTI), Karolinska Institute (KI), Autoliv, Senseair, Anpassarna and Prevas for their support of the research projects in this thesis. I would like to acknowledge the contribution of all the members of the SafeDriver project: Anna Anund (VTI), Bo Svanberg (VCC), Bertil Hök (Senseair) and Johan Karlsson (Autoliv). Thank you all for your opinions and suggestions on different occasions during the meetings. Special thanks to Johan Karlsson for the camera support for data collection. I am also grateful to all the members of the projects Vehicle Driver Monitoring (VDM), Indoor Navigation for Visual Impairment Persons using Computer Vision and Machine learning (INVIP), SimuSafe (the project has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement No 723386), intoDEEP, FutureE, AporC and SALUTOGEN, where I had the opportunity to work with many good people, from whom I have learned a lot. I wish to express my deepest gratitude to all of the test subjects in the data collection experiments for their time and cooperation.

I am thankful to the professors at Mälardalen University, including Professor Maria Lindén, Professor Mikael Sjödin, Professor Mikael Ekström, Professor Moris Behnam, Professor Ning Xiong, Professor Thomas Nolte, Associate Professor Damir Isovic and others. I have learned a lot from them during meetings, lectures and seminars. Special thanks to Professor Maria Lindén for her comments and feedback regarding this thesis.

I would like to thank my group mates and colleagues Dr. Shaibal Barua, Mir Riyanul Islam, Sharmin Sultana and MD Aquif Rahman for their support. In particular, I would like to recognize the invaluable assistance that Shaibal Barua provided during my study. I thank my course mates Ashalata, Filip, Hans, Husni, LanAnh, Mirgita, Nandinbaatar and others for their cooperation. I would like to show my gratitude to my friends and colleagues in the department of IDT: Fredrik Ekstrand, Nikola Petrovic, Ivan Tomasic, Arash Ghareh Baghi, Miguel Leon Ortiz, Elaine Åstrand, Anna Åkerberg, Carl Ahlberg, Hossein Fotouhi, Maryam Vahabi, Per Hellström, Sara Abbaspour, Nesredin Mahmud, Abu Naser Masud, Shahriar Hasan and others.

I would like to express my gratitude to all my family members, relatives and friends who supported and encouraged me in completing my PhD thesis. I would like to pay special regards to my brother Mizanur Rahman, who is my mentor and friend and who has helped me a lot through his guidance. I also want to acknowledge my in-laws for their endless love, blessings and inspiration.

Finally, I wish to acknowledge the support and great love of my wife Shamima, who kept me going. This work would not have been possible without her infinite support and encouragement. I am blessed to have my little son, Abrian, who has brought tremendous love to our life.

Hamidur Rahman April, 2021 Västerås, Sweden

List of Publications

This dissertation is based on the following papers, which are referred to in the text by their letters.

A. Hamidur Rahman, Shaibal Barua, Shahina Begum, “Intelligent Driver Monitoring Based on Physiological Sensor Signal: Application Using Camera”, In Proceedings of the IEEE 18th Int. Conf. on Intelligent Transport System (ITSC2015), Spain, 2015.

B. Hamidur Rahman, Mobyen Uddin Ahmed, Shahina Begum, “Non-Contact Physiological Parameters Extraction using Camera”, In Proceedings of the 1st Workshop on Embedded Sensor Systems for Health through Internet of Things (ESS-H IoT), Italy, 2015.

C. Hamidur Rahman, Shahina Begum, Mobyen Uddin Ahmed, “Non-contact Heart Rate Monitoring Using Lab Colour Space”, In Proceedings of the 13th International Conference on Wearable, Micro & Nano Technologies for Personalized Health (pHealth2016), Greece, 2016.

D. Mobyen Uddin Ahmed, Hamidur Rahman, Shahina Begum, “Quality Index Analysis on Camera-based R-peak Identification Considering Movements and Light Illumination”, 15th International Conference on Wearable, Micro & Nano technologies for Personalized Health (pHealth2018), Norway, June 2018.

E. Hamidur Rahman, Mobyen Uddin Ahmed, Shahina Begum, “Non-contact Physiological Parameters Extraction using Facial Video considering Illumination, Motion, Movement and Vibration”, IEEE Transactions on Biomedical Engineering, May 2019.

F. Hamidur Rahman, Mobyen Uddin Ahmed, Shaibal Barua and Shahina Begum, “Classification of Driver’s Cognitive Load using Non-contact based Physiological and Vehicular Parameters”, International Journal of Biomedical Signal Processing and Control (BSPC), November 2019.

G. Hamidur Rahman, Mobyen Uddin Ahmed, Shaibal Barua, Peter Funk and Shahina Begum, “Driver’s Cognitive Load Classification based on Eye Movement through Facial Image using Machine Learning”, submitted to International Journal of Applied Intelligence, February 2021.

Additional publications, not included in this dissertation:

i. Mobyen Uddin Ahmed, Shahina Begum, Rikard Gestlöf, Hamidur Rahman, Johannes Sörman, “Machine Learning for Cognitive Load Classification – a Case Study on Contact-free Approach”, 16th International Conference on Artificial Intelligence Applications and Innovations (AIAI 2020), August 2020.

ii. Mobyen Uddin Ahmed, Mohammed Ghaith Altarabichi, Shahina Begum, Fredrik Ginsberg, Robert Glaes, Magnus Östgren, Hamidur Rahman, Magnus Sörensen, “A Vision-based Indoor Navigation System for Individuals with Visual Impairment”, International Journal of Artificial Intelligence (IJAI), May 2020.

iii. Hamidur Rahman, Mobyen Uddin Ahmed, Shahina Begum, “Deep Learning-based Person Identification using Facial Images”, The 4th EAI International Conference on IoT Technologies for HealthCare (HealthyIOT'17).

iv. Hamidur Rahman, Mobyen Uddin Ahmed, Shahina Begum, “Vision Based Remote Heart Rate Variability Monitoring Using Camera”, The 4th EAI International Conference on IoT Technologies for HealthCare (HealthyIOT'17).

v. Hamidur Rahman, Shaibal Barua, Mobyen Uddin Ahmed, Shahina Begum, Bertil Hök, “A Case-Based Classification for Drivers’ Alcohol Detection Using Physiological Signals”, The 3rd EAI International Conference on IoT Technologies for HealthCare (HealthyIoT'16).

vi. Hamidur Rahman, Shankar Iyer, Caroline Meusburger, Kolja Dobrovoljski, Mihaela Stoycheva, Vukan Turkulov, Shahina Begum, Mobyen Uddin Ahmed, “SmartMirror: An Embedded Non-contact System for Health Monitoring at Home”, The 3rd EAI International Conference on IoT Technologies for HealthCare (HealthyIoT'16).

vii. Hamidur Rahman, Shahina Begum, Mobyen Uddin Ahmed, Peter Funk, “Real Time Heart Rate Monitoring From Facial RGB Colour Video Using Webcam”, The 29th Annual Workshop of the Swedish Artificial Intelligence Society (SAIS 2016).

viii. Hamidur Rahman, Shahina Begum, Mobyen Uddin Ahmed, “Driver Monitoring in the Context of Autonomous Vehicle”, The 13th Scandinavian Conference on Artificial Intelligence (SCAI 2015).

Publications in other domains:

1. Ivan Tomasic, Ilker Erdem, Hamidur Rahman, Alf Andersson, Peter Funk, “Sources of Variation Analysis in Fixtures for Sheet Metal Assembly Process”, Swedish Production Symposium 2016 (SPS 2016).

2. Alf Andersson, Ilker Erdem, Peter Funk, Hamidur Rahman, Henrik Kihlman, Kristofer Bengtsson, Petter Falkman, Johan Torstensson, Johan Carlsson, Michael Scheffler, Stefan Bauer, Joachim Paul, Lars Lindkvist, Per Nyqvist, “Inline Process Control – a concept study of efficient in-line process control and process adjustment with respect to product geometry”, Swedish Production Symposium 2016 (SPS 2016).

3. Hamidur Rahman, Shahina Begum, Mobyen Uddin Ahmed, “Ins and Outs of Big Data: A Review”, The 3rd EAI International Conference on IoT Technologies for HealthCare (HealthyIoT'16).

4. Hamidur Rahman, Johan Sandberg, Lennart Eriksson, Mohammad Heidari, Jan Arwald, Peter Eriksson, Shahina Begum, Maria Lindén, Mobyen Uddin Ahmed, “Falling Angel – a Wrist Worn Fall Detection System Using k-NN Algorithm”, The 3rd EAI International Conference on IoT Technologies for HealthCare (HealthyIoT'16).

Contents

PART 1 ... 1

THESIS ... 1

1 Introduction ... 3

1.1. Motivation and Goal ... 6

1.2. Problem Formulation ... 7

1.3. Research Questions ... 7

1.4. Research Contributions ... 8

1.5. Thesis Outline ... 10

2 Background and Related Works ... 13

2.1. Non-Contact Camera System ... 13

2.2. Parameters Extraction ... 14

2.3. Driver Health Monitoring Systems ... 19

3 Materials and Methods ... 23

3.1. Research Methodology ... 23

3.2. Data Acquisition ... 24

3.3. Ethical Issue ... 24

3.4. Methods ... 26

3.4. Evaluation Methods ... 34

4 Cognitive Load Classification... 41

4.1. Physiological Parameters ... 41

4.2. Vehicular Features ... 44

4.3. Eye-Movement Features ... 44

4.4. Cognitive Load Classification ... 45

5 Results and Evaluation ... 49

5.1. Physiological Parameters Extraction ... 49

5.2. Eye-Movement Parameters ... 52

5.3. Cognitive Load Classification ... 54

6 Summary of Included Papers ... 57

6.1. Paper A ... 57

6.2. Paper B ... 58

6.3. Paper C ... 59

6.4. Paper D ... 60

6.5. Paper E ... 61

6.6. Paper F ... 63

6.7. Paper G ... 64

7 Discussion, Conclusions and Future Work ... 67

7.1. Discussion of RQs ... 67

7.2. Conclusions and Future Work ... 73

REFERENCES ... 75

APPENDICES ... 85

PART II ... 121

INCLUDED PAPERS ... 121

List of Figures

Figure 1:A brief overview of the non-contact-based approach for cognitive

load classification. ... 5

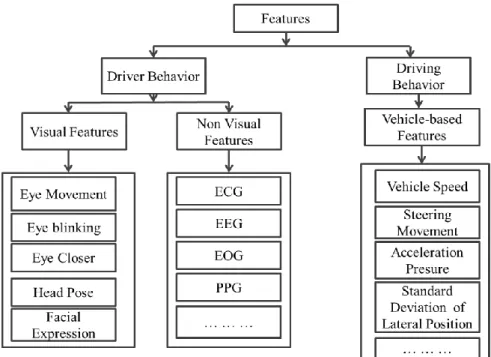

Figure 2: Overview of useful features for driver monitoring systems. ... 20

Figure 3: Intelligent driver monitoring system [82]. ... 22

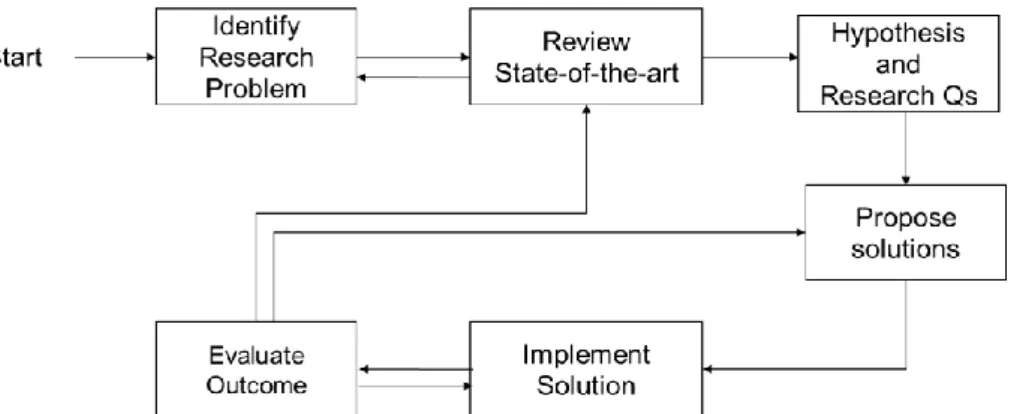

Figure 4: Overview of research methodology. ... 23

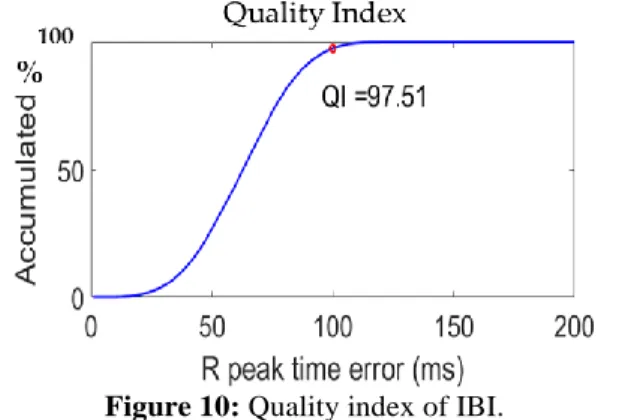

Figure 5: Identify the corners of Facial ROI. ... 26

Figure 6: Geometrical formulas for ROI correction. ... 27

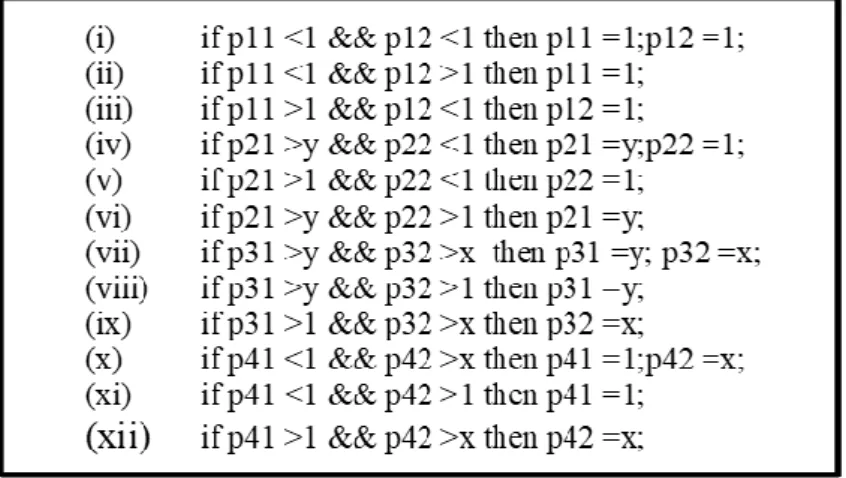

Figure 7:Scatter plot and correlation coefficient of HR between the camera and cStress system. ... 36

Figure 8: Bland Altman plot for HR. ... 37

Figure 9: Poincare’ plot for IBI. ... 37

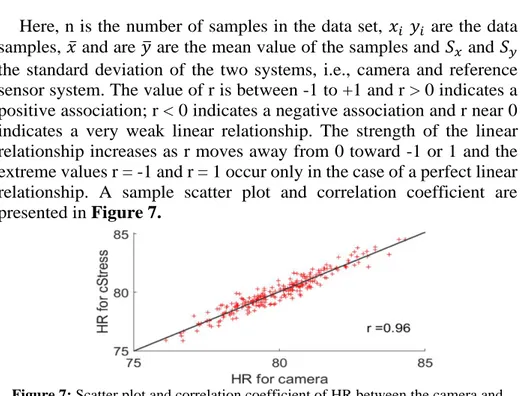

Figure 10: Quality index of IBI. ... 38

Figure 11: Representation of colour matrix considering ROI. ... 42

Figure 12: Representation of colour signals considering colour matrix. ... 42

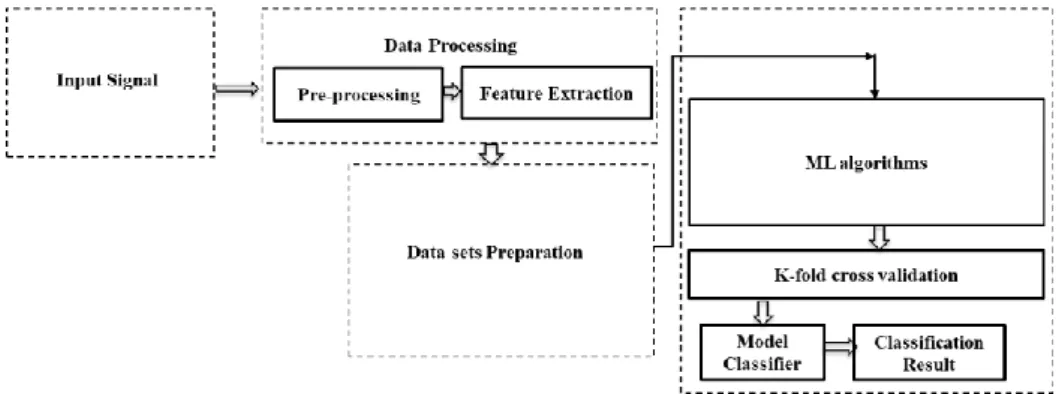

Figure 13: Block diagram of the cognitive load classification scheme for ML. ... 45

Figure 14: Block diagram of the cognitive load classification for ML+DL. 46 Figure 15: Average cumulative percentage of all 30 test subjects [Paper G]. ... 53

Figure 16: Correlation coefficients between the features extracted between eye-tracking and camera systems [Paper G]. ... 53

Figure 17: Research contribution timeline. ... 57

List of Figures

Figure 1:A brief overview of the non-contact-based approach for cognitive load classification. ... 5Figure 2: Overview of useful features for driver monitoring systems. ... 20

Figure 3: Intelligent driver monitoring system [82]. ... 22

Figure 4: Overview of research methodology. ... 23

Figure 5: Identify the corners of Facial ROI. ... 26

Figure 6: Geometrical formulas for ROI correction. ... 27

Figure 7:Scatter plot and correlation coefficient of HR between the camera and cStress system. ... 36

Figure 8: Bland Altman plot for HR. ... 37

Figure 9: Poincare’ plot for IBI. ... 37

Figure 10: Quality index of IBI. ... 38

Figure 11: Representation of colour matrix considering ROI. ... 42

Figure 12: Representation of colour signals considering colour matrix. ... 42

Figure 13: Block diagram of the cognitive load classification scheme for ML. ... 45

Figure 14: Block diagram of the cognitive load classification for ML+DL. 46 Figure 15: Average cumulative percentage of all 30 test subjects [Paper G]. ... 53

Figure 16: Correlation coefficients between the features extracted between eye-tracking and camera systems [Paper G]. ... 53

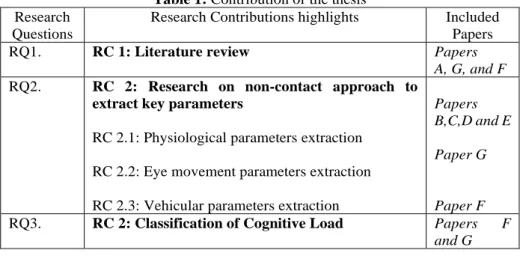

List of Tables

Table 1: Contribution of the thesis ... 10

Table 2: Average QI of R-peak time difference considering different conditions. ... 52

Table 3: Cognitive load classification for LR using physiological and vehicular parameters ... 54

Table 4: The Sensitivity, Specificity, Precision, F1-score, and Accuracy for ML and DL classifiers for both eyeT and camera data. ... 55

List of Abbreviations

AE Auto Encoder

BN Bayesian Network

CAN Controller Area Network

CCD Charge-Coupled Device

CFR Car From Right

CNN Convolutional Neural Network

DL Deep Learning

DT Decision Tree

ECG Electrocardiography

EEG Electroencephalogram

eyeT Eye Tracking

FPS Frames Per Second

GSR Galvanic Skin Response

HCL High Cognitive Load

HE Hidden Exit

HR Heart Rate

HRV Heart Rate Variability

IBI Inter-Beat Interval

IVA Independent Vector Analysis

KLT Kanade-Lucas-Tomasi

LAN lanex

LCL Low Cognitive Load

LDA Linear Discriminant Analysis

LR Logistic Regression

LS Lateral Speed

LSTM Long Short-Term Memory

MCCA Multiset Canonical Correlation Analysis

ME Mean Error

ML Machine Learning

NLMS Normalized Least Mean Squares

QI Quality Index

RMSE Root Mean Squared Error

ROI Region of Interest

RR Respiration Rate

SDE Standard Deviation of Error

SpO2 Oxygen Saturation

STD Standard Deviation

SVM Support Vector Machine

SW Side Wind

SWA Steering Wheel Angle

SWRR Steering Wheel Reversal Rate

SWT Steering Wheel Torque

VCA Visual Cue Adaptation

YR Yaw Rate

PART 1

THESIS

CHAPTER 1

Introduction

This chapter presents an introduction together with the motivation and goal, problem formulation, research questions and research contributions. An outline of the thesis is also presented here. As vehicles (e.g. cars) become more autonomous (autonomous levels 1–4), it is important to ensure that the driver is prepared to handle any upcoming driving situation if needed [1]. A driver's cognitive load is considered a good indication of whether the driver is alert or distracted. However, determining cognitive load is challenging, and wired sensor solutions, including electroencephalography (EEG), are not readily accepted by automobile companies and drivers [2]. Recent developments in artificial intelligence (AI) and digital image processing, together with decreasing hardware prices, have enabled the creation of new non-contact solutions to monitor the driver’s state. Several physiological parameters can be extracted from facial image sequences using non-contact approaches that are currently being explored in driver monitoring research [2]. Several vehicular parameters, calculated from driving manoeuvres, can also be used to monitor the driver’s cognitive load [3].

Traditional contact-based sensors are used to monitor humans’ physiological parameters, such as HR, RR, IBI, HRV, SpO2 and eye movement. These sensors are attached to the human body and the parameters are monitored simultaneously. The development of wireless sensors has further improved the monitoring of physiological parameters. Wireless sensors, such as CardioSecur (https://www.cardiosecur.com/) and Alivecor (https://www.alivecor.com/), work in a similar way to regular sensors by measuring the electrical potential of the heart through the skin; the measurements are saved in a smartphone application and uploaded to a computer through Bluetooth or cloud technologies. Although these sensors provide excellent signals in lab conditions where subjects are supervised and controlled, they can often be troublesome and inconvenient in unsupervised or uncontrolled scenarios (e.g. driving a car). Additionally, electrical interference might occur when a sensor is attached to the human body. Extracting physiological parameters from video images, using a camera as a non-contact sensor, offers a new paradigm for monitoring the driver’s health and mental state.

In recent decades, there has been increasing interest in low-cost, non-contact and pervasive methods for measuring physiological parameters. These parameters can be obtained from the variation in facial skin colour caused by blood circulation, and they are subsequently quantified and compared with corresponding reference measurements. Many researchers have found that the driver’s cognitive load can be classified using HRV, where the HRV features are extracted from the IBI measured with an electrocardiogram (ECG) sensor [4-6]. Eye movement is another physiological parameter that is used to monitor the driver’s cognitive load [7]. Saccade and fixation are two eye-movement parameters that are extracted from the sequence of eye pupil positions. The significance of eye movements with regard to the perception of and attention to the visual world has been acknowledged in the literature [8]. Vehicular parameters are also used to classify the driver’s cognitive load. For example, a driver’s cognitive load can be identified using the steering wheel reversal rate (SWRR) [3]. Similarly, the authors in [9] used several vehicular parameters, such as steering angle, steering torque and brake stroke, to detect a driver’s cognitive load and concluded that vehicular parameters are influenced by the driver’s cognitive load.
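To make the step from IBI to HRV features concrete, the following minimal Python sketch computes a few widely used time-domain HRV measures (mean HR, SDNN, RMSSD and pNN50) from a list of inter-beat intervals. The function name, the selection of features and the example values are assumptions chosen for illustration; they are not taken from the cited studies and do not represent the feature set used in this thesis.

```python
import math
import statistics


def hrv_time_domain(ibi_ms):
    """Compute common time-domain HRV features from inter-beat intervals in ms.

    Illustrative helper (hypothetical name); expects at least three intervals.
    """
    if len(ibi_ms) < 3:
        raise ValueError("need at least three inter-beat intervals")
    # Differences between successive intervals drive RMSSD and pNN50.
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    return {
        # Mean heart rate in beats per minute (60000 ms per minute).
        "mean_hr_bpm": 60000.0 / statistics.fmean(ibi_ms),
        # SDNN: sample standard deviation of the intervals.
        "sdnn_ms": statistics.stdev(ibi_ms),
        # RMSSD: root mean square of successive differences.
        "rmssd_ms": math.sqrt(statistics.fmean([d * d for d in diffs])),
        # pNN50: fraction of successive differences larger than 50 ms.
        "pnn50": sum(abs(d) > 50.0 for d in diffs) / len(diffs),
    }


# Made-up intervals, for demonstration only.
features = hrv_time_domain([800, 810, 790, 860, 805])
print(features)
```

Features of this kind could then serve as inputs to a classifier. Whether the intervals come from an ECG sensor or from a camera-based estimate does not change the computation itself.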

In this thesis, a non-contact-based approach has been implemented to classify the driver’s cognitive load. An overview of the driver’s cognitive load classification based on the non-contact approach is presented in Figure 1.

Figure 1: A brief overview of the non-contact-based approach for cognitive load classification.

First, physiological parameters, such as HR, HRV, RR, and SpO2, were extracted from the sequence of facial images, both in a normal sitting position in a lab environment and during driving in a simulator. Several challenging factors, such as illumination, motion, movement and vibration, were considered during the extraction of physiological parameters. Here, signal processing methods (i.e. Fast Fourier Transform [FFT], Independent Component Analysis [ICA] and Principal Component Analysis [PCA]) were investigated on the colour channels of the collected video recordings. Next, the eye-movement parameters saccade and fixation were extracted from the eye pupil positions detected in the driver’s facial images. Finally, several vehicular parameters, such as the lateral speed, steering wheel angle, steering wheel torque, yaw rate, lane departure and lateral position, were calculated based on the driving manoeuvre. Based on the extracted features, several ML algorithms, such as SVM, LR, LDA, NN, k-NN, and DT, were deployed to classify the driver’s cognitive load. To take advantage of automatic feature extraction from raw eye-movement data and classification of cognitive load, three deep learning (DL) algorithms (CNN, LSTM and AE) were deployed. The results show that a driver’s cognitive load can be successfully classified using ML, DL and their combined approach for the selected physiological and vehicular parameters.
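The frequency-domain idea behind camera-based HR extraction can be illustrated with a short Python sketch: given a one-dimensional trace (for instance, the mean value of one colour channel over the facial ROI in each frame), the heart rate is estimated as the strongest spectral peak within a plausible band. This is a simplified sketch under stated assumptions, not the pipeline used in this thesis: the function name, the 0.7–4.0 Hz band and the synthetic trace are illustrative, and a naive discrete Fourier transform replaces a library FFT so that the example needs only the standard library. The ICA and PCA steps are omitted.

```python
import cmath
import math


def estimate_hr_bpm(signal, fps, lo_hz=0.7, hi_hz=4.0):
    """Estimate heart rate as the dominant spectral peak within [lo_hz, hi_hz].

    Illustrative helper (hypothetical name); the band limits are assumptions.
    """
    n = len(signal)
    mean = sum(signal) / n
    centred = [s - mean for s in signal]  # remove the DC component
    best_freq, best_power = None, -1.0
    for k in range(1, n // 2 + 1):  # positive-frequency DFT bins
        freq = k * fps / n
        if not lo_hz <= freq <= hi_hz:
            continue
        # Naive DFT coefficient for bin k (an FFT would give the same value).
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centred))
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = freq, power
    if best_freq is None:
        raise ValueError("signal too short to resolve the heart-rate band")
    return 60.0 * best_freq


# Synthetic 10 s trace at 30 FPS: a 1.2 Hz pulse plus a slow 0.1 Hz drift
# standing in for illumination change. Values are made up for demonstration.
fps = 30
trace = [math.sin(2 * math.pi * 1.2 * t / fps)
         + 0.5 * math.sin(2 * math.pi * 0.1 * t / fps)
         for t in range(10 * fps)]
hr = estimate_hr_bpm(trace, fps)
print(round(hr, 1))  # close to 72 beats per minute for this synthetic input
```

Restricting the search to a physiological band is what keeps the slow drift from being mistaken for the pulse. With real recordings, motion and vibration introduce components inside the band as well, which is one reason source-separation methods such as ICA and PCA are investigated alongside the FFT.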

Physiological parameters: Physiological parameters or vital signs are measurements of the most basic functions of the human body, which are generally useful in detecting or monitoring medical problems or the
