MÄLARDALEN UNIVERSITY

SCHOOL OF INNOVATION, DESIGN AND ENGINEERING

VÄSTERÅS, SWEDEN

Thesis for the Degree of Master of Science (60 credits) in Computer

Science with Specialization in Software Engineering

USING AUTONOMOUS AGENTS FOR SOFTWARE TESTING BASED ON JADE

Adlet Nyussupov

adlet.nyussupov@gmail.com

Examiner:

Daniel Sundmark

Mälardalen University, Västerås, Sweden

Supervisor: Eduard Enoiu and Mirgita Frasheri

Mälardalen University, Västerås, Sweden

Company Supervisor: None

Adlet Nyussupov

Using Distributed Agents for Testing Java Code


Abstract

This thesis describes the development of a multiagent testing application (MTA) that uses an agent-based approach to address challenges in the regression testing domain: reducing the complexity of testing, reducing time consumption, increasing efficiency, and automating the testing process. These challenges relate to effectiveness and cost, which can be measured by the code coverage achieved and the number of test cases created. A multiagent approach is proposed because it supports autonomous behaviour and optimizes data processing in a heterogeneous environment. In addition, the agent-based approach provides flexible design methods for building multitask applications and executing tasks in parallel. However, these advantages need to be investigated in the regression testing domain under realistic scenarios. Therefore, a hypothesis was formulated to investigate the efficiency of the MTA approach, with an experiment as the main research method. The thesis includes a comparative analysis between the MTA and well-known test case generation tools (EvoSuite and JUnitTools) to identify differences in efficiency and code coverage achieved. The comparison showed advantages of the MTA in the regression testing context due to its level of code coverage and number of test cases. The outcome of the thesis is a step toward solving the aforementioned problems in regression testing and shows some advantages of the multiagent approach in this context.


Table of Contents

1. Introduction

2. Background

3. Problem Formulation

4. Methodology

4.1. Experiment Research Method

4.2. Tools for Creating the MTA

4.2.1. JADE Framework

4.2.2. JaCoCo Tools

4.2.3. Spoon Library

5. Related Work

6. Creation of an Autonomous Agent Based Application for Testing Java Code

6.1. High-level Design of the MTA

6.1.1. Basic Structure of the MTA

6.1.2. Activity Diagram of Agents

6.2. Low-Level Design of MTA

6.2.1. The Class Diagram of the MTA

6.2.2. Low-Level Design of the User Interface

6.2.3. Test Case Generation of the MTA

7. Comparative Analysis of the MTA against Other Test Case Generation Tools

7.1. Planning

7.2. Operation

7.3. Analysis and interpretation

8. Discussion

9. Conclusions

10. Future Work

11. References

12. Appendix A: Code Implementation of the MTA

12.1. GUI Class Code Implementation

12.2. Constants Class Code Implementation

12.3. Agent_i Class Code Implementation

12.4. MainAgent Class Code Implementation


1. Introduction

Software testing is an increasingly important research domain [1]. The growing size and complexity of current software systems create challenges for test automation [2]. Test automation is used to improve the testing process and reduce the complexity of test case scheduling. In particular, automation is often used in the regression testing domain [3], because regression testing requires many resources to validate software after modifications. Its key challenges are reducing scheduling complexity, reducing the time consumed by executing test cases, and automating test execution. The cost-effectiveness of regression testing depends on the characteristics of the test cases. In this thesis work, we consider the number of test cases as a measure of efficiency and code coverage as a measure of effectiveness. To improve these characteristics, autonomous test entities (autonomous agents) are used [3]. An agent can perform laborious tasks, such as gathering information from various sources, resolving inconsistencies in the retrieved information, processing information within a heterogeneous environment, and filtering out irrelevant information. These features are useful for building applications of varying complexity.

The thesis work comprises 12 sections. Sections 1-3 introduce the challenges of autonomous regression testing, give the background, and formulate the problem. Section 4 presents the methodology and describes the tools used to build the autonomous multiagent application: the Java Agent Development Framework (JADE), the Java Code Coverage Tools (JaCoCo), and the Spoon library. Section 5 reviews related work. Section 6 describes the MTA implementation and provides high-level and low-level designs, including structural, activity, and class diagrams with descriptions, to show how the autonomous agents are implemented. Section 7 presents a comparative analysis based on the experiment research method. During the experiment, three types of execution data (execution time, number of test cases, and code coverage) were collected and analysed. Sections 8-11 contain the discussion, the conclusions, future work, and the references. Section 12 (Appendix A) presents code samples of the MTA implementation.

In this thesis work, we aim to increase the effectiveness and decrease the cost of regression testing using

agent-based approaches and tools.


2. Background

Regression testing is one of the most researched sub-fields of software testing [4]. Its main goal is to ensure that newly added changes in software do not affect parts that were not meant to change. The growing complexity of modern software poses new challenges for regression testing. The key challenge is generating and selecting test cases for the software under test (SUT) in order to identify errors in a cost-effective way. Test case generation is time consuming and involves considerable human resources (testing staff). Regression testing is also difficult in large software products that have many dependencies and are highly heterogeneous. A further problem is automation, which is needed when a large number of similar testing activities must be performed, for instance when test generation is repeated for every function of the SUT. Done manually, this activity requires a lot of effort because testing staff must compare the results of each generation step to obtain an optimal set of test cases; done by a machine, it requires far fewer resources and much less time. Another problem is the precise selection of test cases, in other words, removing test cases that have different input values but produce the same results. This matters because redundant test cases can cause confusion in future testing and reduce the overall effectiveness of testing. Overloading the testing platform and adding unnecessary complexity are examples of the negative results of imprecise test case selection.

Standard regression testing tools in most cases rely on a centralized approach in which the test cases for the SUT are stored in several containers but executed all together. This can degrade testing performance, because executing all test cases at once reduces the flexibility of testing and increases the time needed for test case selection. Test case selection influences code coverage, and increasing code coverage is one of the main improvement goals of the testing process. In regression testing, the code coverage of the unmodified parts of the software should not change. To check this property, all tests must be run every time a new software part is implemented, which brings back the same problem of cost and effectiveness. Such complicated tasks require a lot of hardware, software, and human resources.


3. Problem Formulation

In this thesis work, cost-effectiveness measures are considered as the aspects of regression testing that need to be improved. In particular, we focus on the number of test cases and code coverage. Reducing the number of test cases and increasing the code coverage percentage of the SUT are the main challenges that the thesis investigates. Because the thesis also involves test case generation, several other problems are studied, including:

− reducing the time needed to generate and select optimal test cases that achieve an appropriate level of code coverage;

− adapting test case generation to complex SUTs;

− reducing errors during test case generation.

Additionally, a key challenge of the thesis is to investigate how collaborative agents can be used to improve code coverage and the number of test cases, which serve as measures of effectiveness and cost. To this end, we need to design collaboration mechanisms that agents can use to offer help to and request help from one another. By introducing agents, we try to make software testing easier and to optimize the effectiveness and cost metrics of regression testing through the autonomous behaviour of the agents.


4. Methodology

The methodology of the thesis is based on the following steps:

1. Review and analyze the literature under the study.

2. Develop a multiagent testing application (MTA) using the information from the literature

review.

3. Formulate the hypothesis and conduct the experiment research for identifying the effectiveness

of the MTA.

4. Reach a conclusion and show the outcomes.

The literature review analyses works related to automation, regression testing, the multiagent approach, and other topics from different sources in the given research domain. The development of the MTA is based on the methods and instruments identified in the literature review and includes creating and describing the high-level and low-level designs. Null and alternative hypotheses are formulated in order to check the effectiveness of the MTA using the experiment research method. Conclusions are then drawn from the results of the experiment, and the outcomes of the research are presented. The main goal is to assess the efficiency of using agents for test case selection in regression testing compared with other approaches. The research is carried out within the thesis work programme of Mälardalen University.

4.1. Experiment Research Method

Experiments are used when we want to provide a high level of control of the situation and manipulate

behaviour precisely, directly and systematically. The formal experiment has the following definitions:

1. Independent variables - all variables in an experiment that are controlled and manipulated.

2. Dependent variables - all variables that are used under the study in order to see the effect of the

changes in the independent variables.

3. Treatment - one particular value of a factor. It is usually an independent variable.

4. Factor - group of independent variables that are controlled and manipulated.

5. Subjects - people who apply the treatment.

6. Objects - instances that are used during the study.

An experiment involves quantitative analysis of data using descriptive statistics and hypothesis testing methods. Descriptive statistics cover the presentation and numerical processing of data, for example plots that show the mean of the collected data. Hypothesis testing aims to reject the null hypothesis in favour of the alternative hypothesis. It is based on a variety of statistical tests, such as Shapiro-Wilk, ANOVA (ANalysis Of VAriance), and Kruskal-Wallis.

Within the scope of the thesis work, a technology-oriented experiment is prepared, in which different treatments are applied to objects. In particular, three test case generation tools are applied to the same SUT classes in order to assess the effectiveness of the MTA against similar tools. The comparative analysis collects and analyses three types of data (execution time, number of test cases, and code coverage) for the MTA and two third-party testing tools (EvoSuite and JUnitTools).

4.2. Tools for Creating the MTA

The main requirement of the MTA is to generate and select test cases that achieve an appropriate level of code coverage within the regression testing context. To implement these functions, we need external tools that allow us to design agents, obtain code coverage information, and manipulate the code of the SUT class. The following tools are selected based on the literature review:

− JADE (Java Agent Development Framework);

− JaCoCo (Java Code Coverage Tools);


− Spoon library.

The JADE framework is selected for implementing the autonomous agents and their functions. The JaCoCo library is used to obtain code coverage information. Test case generation and source code analysis are based on the Spoon source code analysis and transformation library.

4.2.1.

JADE Framework

The widely used JADE framework [5] is selected since it provides flexible and effective developing

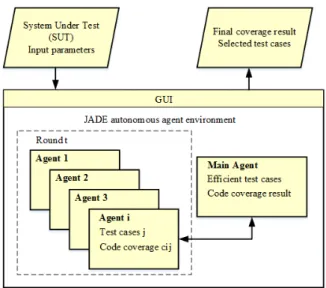

tools (Figure 1).

Fig. 1: Overview JADE structure [6]

It incorporates libraries that contain classes, a set of graphical utilities for monitoring agent activity, and an agent runtime. Agents in JADE can provide different levels of abstraction and can be simple (purely reactive) or complex (cognitive). The framework includes one main agent container and secondary agent containers [7] that can be distributed across different hosts. Communication between agents is based on the Agent Communication Language (ACL) [8]. The message format includes the following fields: sender, receiver, content, and communicative act. The sender and receiver are the agents that communicate with each other. The communicative act expresses the intention of the agent and is defined by the Foundation for Intelligent Physical Agents (FIPA) specifications [9], which contain 22 communicative acts. The content field contains the actual information being shared.
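To make the message format concrete, the following is a minimal, dependency-free sketch of the four fields named above. It is not the JADE API (in JADE, messages are instances of `jade.lang.acl.ACLMessage`); the names `MessageSketch`, `ActSketch`, and `reply` are hypothetical and chosen only for illustration, and the enum lists just a small subset of the FIPA communicative acts.

```java
import java.util.Objects;

/** Small subset of the FIPA communicative acts, enough for a request/reply exchange. */
enum ActSketch { REQUEST, INFORM, AGREE, REFUSE }

/** Hypothetical model of the four message fields; a sketch, not the JADE class. */
final class MessageSketch {
    final String sender;    // name of the agent that sends the message
    final String receiver;  // name of the agent that should receive it
    final ActSketch act;    // communicative act: the sender's intention
    final String content;   // actual information being shared

    MessageSketch(String sender, String receiver, ActSketch act, String content) {
        this.sender = Objects.requireNonNull(sender, "sender");
        this.receiver = Objects.requireNonNull(receiver, "receiver");
        this.act = Objects.requireNonNull(act, "act");
        this.content = Objects.requireNonNull(content, "content");
    }

    /** Creates a reply by swapping sender and receiver; act and content are new. */
    MessageSketch reply(ActSketch replyAct, String replyContent) {
        return new MessageSketch(receiver, sender, replyAct, replyContent);
    }
}
```

For example, a main agent could send a `REQUEST` whose content carries its current code coverage, and an agent could answer with `request.reply(ActSketch.INFORM, ...)`.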

Agents in JADE have the following properties:

− Reactivity: agents can react to a dynamically changing environment.

− Social ability: agents can communicate with each other using a specific communication language.

− Autonomy: agents can perform their own tasks without human interaction.

− Proactiveness: agents can conduct tasks without an external request.

4.2.2. JaCoCo Tools

JaCoCo [10] is a code coverage library for the Java language that provides a variety of methods for code coverage analysis. Unlike other tools such as Cobertura [11] and EMMA [12], JaCoCo supports the current Java version and is actively maintained by its authors. It is lightweight and flexible enough for building a testing environment in different usage scenarios. JaCoCo can be integrated into any testing software through a flexible application programming interface (API).

JaCoCo features:

− Supports different code coverage criteria: instructions, methods, lines, branches, complexity

and types.


− Uses Java byte code manipulation and provides offline instrumentation without actual

execution of source file.

− Supports several report formats (HTML, CSV and XML).

− Allows different integration scenarios including custom class loaders.

− Suitable for large-scale testing due to minimal runtime overhead.

− Supports regression tests and provides completed functional test coverage.

Instruction coverage in JaCoCo reports how much of the byte code was executed during testing. Branch coverage provides coverage information for every condition (“if” or “switch” statements). It has three states (no coverage, partial coverage, and full coverage) that depend on which branches of the conditional statements were executed. Cyclomatic complexity [13] is the minimum number of paths that can, in combination, generate all possible paths through a method. Line coverage is calculated for every line of code and, like branch coverage, has three states: no coverage, partial coverage, and full coverage. Method coverage reports how many methods were executed at least once at runtime.
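The three coverage states can be illustrated with a small sketch that derives a state and a coverage ratio from the numbers of covered and missed items (for example, the branches of one line). This is a plain Java model under our own assumptions, not the JaCoCo API: the class `CounterSketch`, its `Status` values, and the extra `EMPTY` state for "nothing to cover" are hypothetical.

```java
/** Hypothetical stand-in for a coverage counter; a sketch, not the JaCoCo API. */
final class CounterSketch {
    enum Status { EMPTY, NO_COVERAGE, PARTIAL_COVERAGE, FULL_COVERAGE }

    final int covered; // number of items (instructions, branches, ...) that were executed
    final int missed;  // number of items that were never executed

    CounterSketch(int covered, int missed) {
        if (covered < 0 || missed < 0) {
            throw new IllegalArgumentException("counts must be non-negative");
        }
        this.covered = covered;
        this.missed = missed;
    }

    /** Fraction of covered items in [0, 1]; 0 when there is nothing to cover (assumption). */
    double coveredRatio() {
        int total = covered + missed;
        return total == 0 ? 0.0 : (double) covered / total;
    }

    /** Maps the two counts to one of the three states described in the text. */
    Status status() {
        if (covered + missed == 0) return Status.EMPTY;
        if (covered == 0) return Status.NO_COVERAGE;
        if (missed == 0) return Status.FULL_COVERAGE;
        return Status.PARTIAL_COVERAGE;
    }
}
```

For instance, an `if` statement with four branches of which three were executed yields a ratio of 0.75 and the partial coverage state.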

4.2.3. Spoon Library

Spoon [14] is an open source library for Java that supports analysis and transformation of source code. Code transformation is very useful in the regression testing context because it allows all the statements in the code to be analysed in order to generate proper test cases. Spoon provides a Java metamodel that represents the abstract syntax tree (AST) of a Java project. This model can be transformed for different purposes and the changes then applied to the real AST of the project. This is useful when some code creation process needs to be automated based on the type of the elements. The code can thus be transformed easily, without writing a comprehensive Java parser by hand.

Spoon features:

− Code analysis: querying source code elements (counting methods, finding elements by annotation, getting inputs, return statements, etc.)

− Code generation: creating samples of code, classes, methods, variables, inputs etc.

− Code transformation: automated refactoring, modifying semantic of the code, instrumenting

code.
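The kind of information that the code analysis feature yields for test generation (method names, input types, and return types) can be sketched without any external dependency. Note that the sketch below deliberately uses a different technique: it inspects a compiled class with the standard `java.lang.reflect` API, whereas Spoon analyses source code through its AST metamodel. The classes `SampleSut` and `SutInspector` are hypothetical.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical SUT class used only for this illustration. */
final class SampleSut {
    public int add(int a, int b) { return a + b; }
    public boolean isPositive(double x) { return x > 0; }
    private void reset() { }
}

final class SutInspector {
    /** Returns one entry per public declared method: "name(paramTypes) -> returnType". */
    static List<String> publicMethodSignatures(Class<?> sut) {
        List<String> signatures = new ArrayList<>();
        for (Method m : sut.getDeclaredMethods()) {
            // Skip non-public and compiler-generated methods.
            if (!Modifier.isPublic(m.getModifiers()) || m.isSynthetic()) continue;
            StringBuilder sb = new StringBuilder(m.getName()).append('(');
            Class<?>[] params = m.getParameterTypes();
            for (int i = 0; i < params.length; i++) {
                if (i > 0) sb.append(", ");
                sb.append(params[i].getSimpleName());
            }
            sb.append(") -> ").append(m.getReturnType().getSimpleName());
            signatures.add(sb.toString());
        }
        Collections.sort(signatures); // getDeclaredMethods() has no guaranteed order
        return signatures;
    }
}
```

A test generator could use such signatures to decide which input values to create for each method. Reflection cannot see statements inside a method body, which is one reason a source-level library such as Spoon is the better fit for the analysis described here.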


5. Related Work

Many related works describe the benefits of agent technology within software development and testing processes. The related works are grouped into:

− works that describe agent theory and the implementation of agents in testing;

− works that describe agents from an autonomy perspective for testing purposes;

− works that describe agent-based test case generation;

− works that describe agents for optimizing testing in the web domain;

− works that describe agents for improving testing in object-oriented development;

− works that describe agents for model-based testing.

The basic concepts of agents in testing are provided in different sources. Paper [15] describes agent technology from the viewpoint of rational agents and presents the “Belief – Desire – Intention” (BDI) design of agents. The authors move from theory toward practice and consider the implementation of BDI agents. Paper [16] reviews testing methods and techniques for multi-agent systems. The authors consider testing at different levels: unit, agent, group, system, and acceptance. The testing information was gathered from the related literature and grouped according to these levels. In addition, paper [17] extends agent theory in software testing and introduces the concept of agent-oriented software testing.

Autonomous agents are widely used for testing purposes. Paper [18] presents a theory of agent autonomy derived from software architecture. The authors consider the problems of defining the autonomy of agents and its dimensions. Special emphasis is placed on cognitive agents, which can improve overall autonomy. In paper [19], autonomous agent technology is applied to web testing. The results show improvements in regression testing, web testing, and web usability. In paper [20], agent-oriented software engineering is used to construct autonomous software testing systems. Distributed and testing agents are created for prototype software in order to assess existing systems using different testing methods. Paper [21] provides an automotive test generation approach that uses an agent-based genetic algorithm. The agents are built on the JADE platform and use its key features. The agent simulation conducted in the paper shows positive results regarding the feasibility of the approach. Intelligent agents are implemented for collaborative testing of service-based systems in paper [22]. The paper proposes a test agent model that also includes the agents' knowledge, events, actions, etc. The results of its experiment show that agents have the potential to improve collaborative testing, test automation, cost reduction, and flexibility. Paper [23] discusses the functions and behaviour of collaborative autonomous agents. Attention is given to the interactions between the agents and their delegation of tasks. The model provides three states: idle, execute, and interact. According to the results of the paper, the proposed methods can improve overall collaboration. In addition, an automated agent-based framework is provided for testing MMORPG (massively multiplayer online role-playing game) video games [24]. The framework allows testing to be conducted through a graphical user interface (GUI).

The agent-oriented approach is also used for test case generation. In paper [25], a multiagent test environment is developed for test generation and evaluation of web services, including web services based on BPEL (Business Process Execution Language for Web Services). Test characteristics in the agent environment are included in the agent ontology, which ensures proper execution of the test tasks. Paper [21], mentioned above, builds its genetic test generation agents on the JADE platform and demonstrates the feasibility of the approach through simulation. An agent-based approach that includes test case generation is proposed for regression testing in [26]. The authors provide algorithms for a monitor agent and a test case generation agent. The purpose of the agents is to improve effectiveness and cost during regression testing. In paper [3], the authors conducted a systematic review of agent-based test case generation for regression testing. The review identifies the advantages of using agents in software test case generation and the need for agents in regression testing.


Many agent methods are proposed for web testing. In paper [19], agent technology is applied to the web testing domain. The authors consider automatic web testing with autonomous agents, which brings improvements in regression testing and web testing. A cooperative agent approach is provided for quality assurance within the web environment [27]. These types of agents are intended to support web applications built based on Lehman’s theory of software evolution. A mobile agent that supports web service testing is developed in paper [28]. This agent improves the trustworthiness of web services and increases the effectiveness of the verification and validation processes. In paper [29], agents are used for security testing in web environments. Mobile agents are provided in order to improve system support and maintenance, and the paper also includes a study of security testing. In addition, a mobile agent-based tool that supports web service testing is provided in paper [30]. The authors propose the design and development of a web service-oriented testing platform that addresses the stated technical challenges related to web services.

Agents for improving testing in object-oriented development are proposed in different sources. In paper [31], agents are used for testing object-oriented software. The authors developed an integrated multi-agent framework that includes well-known testing techniques such as mutation testing and capability testing, and they use agents to build an automated software testing environment. In paper [32], the authors consider the agent-oriented paradigm as an extension of the object-oriented paradigm. They provide a test agent-oriented system that was implemented using the JADE platform.

In addition, the agent-based approach is used in model-based and simulation testing. Paper [33] provides 3D agent-based modelling techniques for testing the efficacy of platform and train passenger boarding. The agent-based modelling aims to mitigate financial risks in the design of new train concepts. Paper [34] proposes agent-based simulation to address challenges in testing robotic software for human-robot interaction. The agents use a two-tiered model-based test generation process, and the authors introduce BDI agents for test generation. The results demonstrate that the agents can fully automate the production of effective test suites for the verification process.

Overall, the related works were reviewed and different multi-agent-based testing approaches were identified. The results show the benefits of using agents for effective testing, including autonomous actions and the development of agents using JADE.


6. Creation of an Autonomous Agent Based Application for Testing Java Code

The development of the multiagent testing application (MTA) includes creating high-level and low-level designs. The high-level design is elaborated in subsection 6.1. It contains the basic structure and the activity diagram of the MTA. The low-level design is elaborated in subsection 6.2. It includes the class diagram and the low-level implementation of the graphical user interface (GUI).

6.1. High-level Design of the MTA

High-level design of MTA includes basic structure of entire application and activity diagrams of main

and other agents.

6.1.1. Basic Structure of the MTA

The structure of MTA is represented in Figure 2.

Fig. 2. Structure of agent based testing application

As can be seen from the structure, the MTA is built on independent agents that perform their tasks within the JADE environment. The MTA takes the system under test (SUT) and input parameters as inputs, and outputs the final code coverage results and the selected test cases. The main agent communicates with all agents by sending unique requests. Every agent i in {a1, ..., ai, ..., an}, where n is the number of agents, generates a test case j and obtains a code coverage cij (Figure 2). As soon as agent i has code coverage results, either positive (code coverage is improved) or negative (code coverage is not improved), it sends them to the main agent. The main agent collects only positive test cases, in other words, efficient test cases that can improve the code coverage of the SUT. The number of agents n is adaptable and changes dynamically based on the structure of the SUT. In order to increase the number of generated test cases for the SUT, the MTA allows rounds to be set. Agent i is recreated by the MTA and generates new test cases in every round t in {r1, ..., rt, ..., rm}, where m is the number of rounds. The final results are presented by the MTA in the graphical user interface (GUI) and include code coverage information based on the selected test cases.
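The selection scheme described above can be summarised in a short sketch. It rests on several simplifying assumptions that are ours and not part of the MTA: code coverage is modelled as a set of covered line numbers, the agents run sequentially in a loop instead of in parallel JADE threads, and the names `RoundSelectionSketch`, `TestCase`, and `select` are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.BiFunction;

final class RoundSelectionSketch {

    /** A generated test case with the SUT lines it covers (hypothetical representation). */
    static final class TestCase {
        final String name;
        final Set<Integer> coveredLines;

        TestCase(String name, Set<Integer> coveredLines) {
            this.name = name;
            this.coveredLines = coveredLines;
        }
    }

    /**
     * Runs up to m rounds with n agents each. generator.apply(round, agent) plays the
     * role of agent i generating a test case; only "positive" test cases, i.e. those
     * that cover at least one new line, are kept.
     */
    static List<TestCase> select(int n, int m, int totalLines, double requiredCoverage,
                                 BiFunction<Integer, Integer, TestCase> generator) {
        List<TestCase> selected = new ArrayList<>();
        Set<Integer> covered = new HashSet<>();
        for (int round = 1; round <= m; round++) {
            for (int agent = 1; agent <= n; agent++) {
                TestCase candidate = generator.apply(round, agent);
                // addAll returns true only if the candidate covers a new line.
                if (covered.addAll(candidate.coveredLines)) {
                    selected.add(candidate);
                }
                if ((double) covered.size() / totalLines >= requiredCoverage) {
                    return selected; // required coverage reached: stop early
                }
            }
        }
        return selected; // all rounds executed
    }
}
```

With n = 2 agents and m = 2 rounds, a generator whose second test case in the first round only repeats already covered lines would lead to three selected test cases out of four generated ones.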

6.1.2. Activity Diagram of Agents

The activity diagrams of the main agent and agent i are shown in Figure 3. The two agents start their activities independently because of the asynchronous architecture of JADE. The main agent starts with initialization and accepts additional manual test cases if the user provides them. The next step is executing the manual test cases and obtaining code coverage results. If the results satisfy the given requirement, the main agent stops its activity and shuts down the agent platform (the JADE platform that contains all agents). Otherwise, it starts a new round t. If the user does not provide manual test cases, the main agent skips the aforementioned actions and starts a new round. The number of rounds, m, is


predefined by the user of the MTA. To avoid synchronization problems between the agents, the main agent waits until all agents i have the status “ready” and then sends them a request for help that includes code coverage information. The main agent then waits for a reply from any agent i. Its next steps depend on the type of reply. If agent i cannot help to improve code coverage, the main agent checks three conditions: whether all agents i have been executed, whether the code coverage satisfies the requirement, and whether all rounds t have been executed. Based on these conditions, the main agent decides whether to shut down the platform or start the next round. If agent i can help to improve the code coverage, it sends its own (generated) test case to the main agent. The main agent accepts the received test case, puts it into the data store (a database that contains all selected test cases), and checks the same three conditions with the same actions. If the code coverage of agent i is equal to that of the main agent, the test case of agent i can still potentially be positive and improve the code coverage of the SUT. This is possible because code coverage is measured with several metrics: the main agent might have better coverage in some metrics and worse in others, and similarly for agent i. In this case, the actual coverage of the SUT would differ despite identical overall code coverage figures. Therefore, the main agent sends its own test cases from the data store to agent i to check this assumption.

Fig. 3. Activity diagram of the main agent and agent i
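The decision that the main agent takes after each reply can be sketched as a small function over the three conditions. The text does not fix the order in which the conditions are evaluated, so the order below is an assumption, as are the names `MainAgentDecisionSketch`, `Decision`, and `decide`.

```java
/** Hypothetical sketch of the main agent's decision after receiving a reply. */
final class MainAgentDecisionSketch {
    enum Decision { SHUT_DOWN, NEXT_ROUND, WAIT_FOR_REPLIES }

    static Decision decide(int repliesReceived, int agentCount,
                           double coverage, double requiredCoverage,
                           int currentRound, int totalRounds) {
        // Condition: the code coverage satisfies the requirement.
        if (coverage >= requiredCoverage) return Decision.SHUT_DOWN;
        // Condition: not all agents i of this round have been executed yet.
        if (repliesReceived < agentCount) return Decision.WAIT_FOR_REPLIES;
        // Condition: all rounds t have been executed.
        if (currentRound >= totalRounds) return Decision.SHUT_DOWN;
        // All agents replied, coverage is still too low, and rounds remain.
        return Decision.NEXT_ROUND;
    }
}
```

For example, with three agents, a required coverage of 0.8, and two rounds, `decide(3, 3, 0.5, 0.8, 1, 2)` starts the next round, while the same call in round 2 shuts the platform down.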

Agent i starts with initialization and generates a new test case for the SUT. Agent i then sets its status to “ready” and waits for a request for help from the main agent. When the request is received, agent i executes its own (generated) test case and compares its own code coverage data with the data sent by the main agent. If the result is positive, agent i sends the generated test case to the main agent, replies that it can help to improve code coverage, and then deletes itself. If the result is negative, agent i replies that it cannot help to improve code coverage and deletes itself. If the result is undefined, in other words the code coverage is equal, agent i waits for test cases from the main agent. It merges its own test case with the received test cases, executes the merged set, and checks whether the code coverage was improved. Based on the result, agent i replies whether the code coverage was improved or not.
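The three-way comparison performed by agent i can be sketched as follows. The sketch assumes that coverage is represented as a set of covered line numbers and that the overall figure being compared is simply the size of that set; the MTA itself works with several JaCoCo metrics, so this is a simplification. The names `AgentReplySketch`, `Reply`, and `compare` are hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical sketch of how agent i could decide its reply to the main agent. */
final class AgentReplySketch {
    enum Reply { CAN_HELP, CANNOT_HELP }

    /**
     * ownLines:  lines covered by the test case generated by agent i.
     * mainLines: lines covered by the test cases selected by the main agent.
     */
    static Reply compare(Set<Integer> ownLines, Set<Integer> mainLines) {
        if (ownLines.size() > mainLines.size()) return Reply.CAN_HELP;    // positive result
        if (ownLines.size() < mainLines.size()) return Reply.CANNOT_HELP; // negative result
        // Undefined result (equal overall coverage): merge the test cases of both
        // agents and check whether the merged coverage exceeds the main agent's.
        Set<Integer> merged = new HashSet<>(mainLines);
        merged.addAll(ownLines);
        return merged.size() > mainLines.size() ? Reply.CAN_HELP : Reply.CANNOT_HELP;
    }
}
```

The undefined case shows why equal overall figures are not conclusive: two test cases that each cover two lines may cover different lines, so the merged set can still improve the coverage of the SUT.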


Overall, each of the use cases runs in its own thread, which implies that every agent also has its own thread. The agents communicate by exchanging messages that include code coverage information and test cases. The selected test cases and the final code coverage information are kept in the data store.

6.2. Low-Level Design of MTA

The low-level design of the MTA is represented by the class diagram and the low-level design of the user interface. The implementation of the MTA can be found in the GitHub repository [35].

6.2.1. The Class Diagram of the MTA

Class diagram shows internal structure and functionality of application including classes, methods,

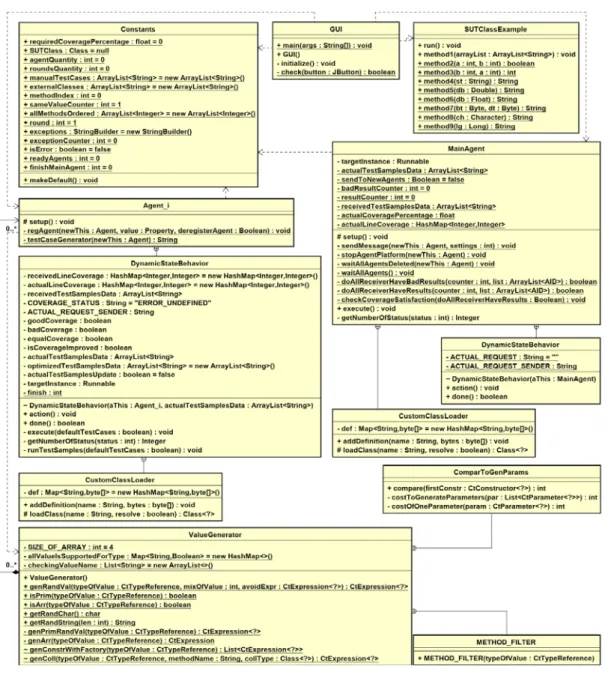

variables, etc. Class diagram of the MTA is shown on the Figure 4.

Fig. 4. Class diagram of multiagent testing application

The class diagram comprises the following classes: GUI (Graphical User Interface), Constants, MainAgent, Agent_i, ValueGenerator and other inner classes. All the classes depend on the standard Java libraries, and some of them also depend on the JaCoCo and Spoon libraries. The MTA starts from the GUI class, which implements the user interface. The code of the GUI class is shown in Appendix A1. The main methods of the GUI class are listed in Table 1.

TABLE 1

THE MAIN METHODS OF GUI CLASS

| Method | Description |
| --- | --- |
| main | Primary method that starts the MTA. |
| initialize | Implements user interface elements. Allows selecting the SUT class, adding the rounds quantity, adding the required code coverage percentage, auto-filling all input values, starting testing and stopping testing. Uses the Spoon library instances for observing the inner structure of the SUT class. |
| check | Allows starting testing only when all required input values are set in the user interface. |

The Constants class contains variables that are necessary for passing information between the GUI, MainAgent and Agent_i classes. It has a single method, “makeDefault”, which is called before testing and resets the values of its variables. The code of the Constants class is shown in Appendix A2. The main variables of the Constants class are shown in Table 2.

TABLE 2

THE MAIN VARIABLES OF CONSTANTS CLASS

| Variable | Description |
| --- | --- |
| requiredCoveragePercentage | Contains input values of required code coverage for the SUT class. |
| SUTClass | Contains input SUT class. |
| agentQuantity | Contains quantity of agents that are created for testing. This value is always the same as the quantity of methods of the SUT class. |
| roundsQuantity | Contains input values that express testing rounds quantity. |
| manualTestCases | Contains input manual test cases. |
| externalClasses | Contains names of external SUT classes. Agents use this information in order to find external classes in the same folder where the SUT class is located and then make proper compilation and testing. |
| methodIndex | Contains method index that belongs to the SUT class. Agents i use the actual index for test case generation for the specific method of the SUT class. |
| allMethodsOrdered | Contains all methods quantity that are necessary for agents creation. |
| round | Contains input values that express rounds quantity. |
| readyAgents | Contains ready agents quantity that is used by the main agent in order to avoid thread errors. |

The Agent_i and MainAgent classes include special variables and methods that the agents need in order to act and to communicate with one another. These classes use instances of the JADE library, which allows initializing an agent and setting its behaviour. Furthermore, each of them has a DynamicStateBehaviour inner class that implements the unique behaviour of the agent. The code of the MainAgent and Agent_i classes is shown in Appendix A3 and A4. The main methods of the Agent_i class are listed in Table 3:

TABLE 3

THE MAIN METHODS OF AGENT_I CLASS

| Method | Description |
| --- | --- |
| setup | Primary method that initializes the main agent. It accepts manual test cases and activates behaviour using DynamicStateBehaviour inner class. |
| testCaseGenerator | Generates test cases for one method of the SUT class using ValueGenerator class. |

The main methods and variables of the DynamicStateBehaviour inner class of the Agent_i class are

shown in Table 4.

TABLE 4

THE MAIN METHODS AND VARIABLES OF DYNAMICSTATEBEHAVIOUR INNER CLASS OF THE AGENT_I CLASS

| Variable | Description |
| --- | --- |
| receivedLineCoverage | Contains line coverage value that is received from the main agent. |
| actualLineCoverage | Contains line coverage value that is gained from the execution of the generated test case of the agent i. |
| receivedTestSamplesData | Contains test samples data that is received from the main agent. |
| goodCoverage, badCoverage, equalCoverage | Logic values that represent results of the comparison analysis of the received and actual code coverage data of an agent i and the main agent. |
| isCoverageImproved | Logic value that shows the code coverage improvement after the execution of the merged test samples data of agent i and main agent. |
| actualTestSamplesData | Contains generated test case of an agent i. |
| optimizedTestSamplesData | Contains merged test cases of an agent i and the main agent. |
| targetInstance | Instance of SUT class that implements runnable interface of Java class. The instance is used for testing SUT class. |
| finish | Variable that is used for finishing the behaviour of an agent i. |

| Method | Description |
| --- | --- |
| action | Main method of the Agent_i class that implements communication between an agent i and the main agent. |
| done | Finishes the agents communication. |
| execute | Uses the JaCoCo library instances for executing test cases and gaining the code coverage information from the SUT class. During the execution, it uses external and inner classes that are specified in the SUT class. |

Main methods and variables of the MainAgent class are shown in Table 5.

TABLE 5

THE MAIN METHODS AND VARIABLES OF THE MAIN AGENT CLASS

| Variable | Description |
| --- | --- |
| targetInstance | Instance of SUT class that implements runnable interface of Java class. The instance is used for testing SUT class. |
| actualTestSamplesData | Contains manual test cases of the main agent. |
| badResultCounter | Contains a number of negative results that are received from the agent i. |
| resultCounter | Contains number of overall results that are received from the agent i. |
| receivedTestSamplesData | Contains test case that is received from the agent i. |
| actualCoveragePercentage | Contains actual code coverage data that is gained from execution of the manual test cases of the main agent. |
| actualLineCoverage | Contains line code coverage value that is gained from execution of the manual test cases of the main agent. |

| Method | Description |
| --- | --- |
| setup | Main method of the MainAgent class that implements communication between the main agent and agent i. |
| stopAgentPlatform | Terminates agents platform after finishing the testing. |
| waitAllAgentsDeleted | If all agents i have negative results, the main agent activates this method and waits until all agents delete themselves. |
| waitAllAgents | Waits for all agents before starting the testing in order to avoid thread errors. |
| checkCoverageSatisfaction | Checks code coverage satisfaction to the given requirement at the end of every round. |
| execute | Uses the JaCoCo library instances for executing test cases and gaining the code coverage information from the SUT class. During the execution, it uses external and inner classes that are specified in the SUT class. |
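The idea behind “waitAllAgents”, where the main agent starts testing only after every agent i has reported that it is ready, can be sketched with a standard `java.util.concurrent.CountDownLatch`. This is a substitute technique chosen for illustration: according to Table 2 the MTA itself tracks readiness with the “readyAgents” counter of the Constants class. The class `ReadyBarrier` and its methods are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

/** Hypothetical start-up barrier: the main agent proceeds only after every agent i is ready. */
final class ReadyBarrier {
    private final CountDownLatch ready;

    ReadyBarrier(int agentQuantity) {
        this.ready = new CountDownLatch(agentQuantity);
    }

    /** Called by each agent i once it has generated its test case and set the status "ready". */
    void markReady() {
        ready.countDown();
    }

    /** Called by the main agent; returns false on timeout or if the waiting thread is interrupted. */
    boolean waitAllAgents(long timeout, TimeUnit unit) {
        try {
            return ready.await(timeout, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return false;
        }
    }

    /** Number of agents that have not reported "ready" yet. */
    long pendingAgents() {
        return ready.getCount();
    }

    public static void main(String[] args) {
        int agentQuantity = 4; // one agent per SUT method
        ReadyBarrier barrier = new ReadyBarrier(agentQuantity);
        for (int i = 0; i < agentQuantity; i++) {
            new Thread(barrier::markReady, "agent-" + i).start();
        }
        boolean allReady = barrier.waitAllAgents(5, TimeUnit.SECONDS);
        System.out.println(allReady ? "all agents ready" : "timed out");
    }
}
```

A latch makes the waiting explicit and avoids polling a shared counter, at the cost of fixing the number of agents when the barrier is created.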

The DynamicStateBehaviour inner class of the MainAgent class has the same methods as the inner class of the Agent_i class and implements similar communication functions. The inner classes of the custom class loader are needed for loading the bytecode of the SUTClass and passing it to the instances of the JaCoCo library.

During the operation of the agents i, test cases are generated using the ValueGenerator class. This class is based on the Spoon library and uses different methods for generating random values, depending on the input types of the SUT class methods. In addition, it has auxiliary inner classes that help to generate values. The code of the ValueGenerator class is shown in Appendix A5. The main methods of the ValueGenerator class are listed in Table 6.

TABLE 6

THE MAIN METHODS OF THE VALUEGENERATOR CLASS

| Method | Description |
| --- | --- |
| genRandVal | Generates random values that depend on input type of the SUT class methods. It invokes other methods inside itself. |
| isPrim | Checks if the value is a primitive. |
| isArr | Checks if the value is an array. |
| getRandChar | Returns random Java Character class value. |
| getRandString | Returns random Java String class value. |
| genPrimRandVal | Generates a primitive random value. |
| genArr | Generates an array. |
| genColl | Generates a collection. |

The code of all the classes is shown in Appendix A.

6.2.2. Low-Level Design of the User Interface

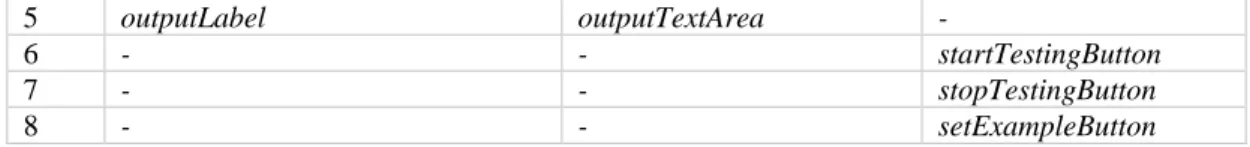

All the elements and functions of the GUI are implemented in the GUI class. The GUI class has a frame, buttons, fields, labels and other instances needed to realize the user interface. All the instances have properties such as size, location and name. Buttons and fields are connected to Java's ActionListener interface, which allows actions to be attached to user input. In the case of the MTA, actions are performed with the computer mouse and keyboard. The frame instance represents the main window of the MTA. It contains the auxiliary instances listed in Table 7.

TABLE 7

List of the auxiliary instances

| Type | Label | Field, text pane or text area | Button |
| --- | --- | --- | --- |
| 1 | sutClassLabel | sutClassTextPane | sutClassSelectButton |
| 2 | requiredCoveragePercentageLabel | coveragePercTextField | coveragePercButton |
| 3 | roundsQuantityLabel | roundsTextField | roundsButton |
| 5 | outputLabel | outputTextArea | - |
| 6 | - | - | startTestingButton |
| 7 | - | - | stopTestingButton |
| 8 | - | - | setExampleButton |

The graphical representation of the MTA's GUI is shown in Figure 5.

Fig. 5. Screen of graphical user interface of the MTA.

There are eight types of instances. The instances of one type share common functionality and belong to one group. Type one is in charge of adding the SUT class to the MTA. The required code coverage percentage is handled by instances of type two. Type three instances set the rounds quantity. Manual test cases are processed by type four. Type five shows information in the output text area of the user interface. Types six and seven start and stop agent testing. Type eight instances set all the example properties of the user interface.

The button “sutClassSelectButton” activates the following sequence of key actions:

1. An instance of the JFileChooser class is created. It shows a modal frame in the user interface for choosing files from the file system. The instance only allows choosing files that have the “.class” extension.

2. If a file is selected, an instance of the URLClassLoader class is created. It loads the SUT class into the Java runtime. The loaded class is passed to the “SUTClass” variable of the Constants class.

3. An array of File instances (File[]) is created. All files that have the “.class” extension are loaded into this array and passed to the “files” variable of the Constants class.

4. Then instances of the Spoon library are created. The Spoon instances access the list of external classes used inside the selected SUTClass and put it into the “externalClasses” variable of the Constants class.
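Steps 1 to 3 above can be sketched with standard Java classes only. The helper class `SutSelection` and its method names are hypothetical and do not reproduce the code of the GUI class. The sketch also assumes that the SUT class lives in the default package, so that its class name equals the file name without the extension. The Spoon inspection of step 4 is omitted.

```java
import java.io.File;
import java.net.URL;
import java.net.URLClassLoader;
import javax.swing.filechooser.FileNameExtensionFilter;

/** Hypothetical helpers mirroring steps 1 to 3; not the code of the GUI class itself. */
final class SutSelection {

    /** Filter for a JFileChooser that only offers files with the ".class" extension. */
    static FileNameExtensionFilter classFileFilter() {
        return new FileNameExtensionFilter("Java class files", "class");
    }

    /** All ".class" files in the folder of the SUT (empty array if the folder cannot be read). */
    static File[] listClassFiles(File folder) {
        File[] files = folder.listFiles((dir, name) -> name.endsWith(".class"));
        return files == null ? new File[0] : files;
    }

    /**
     * Loads the selected class into the running JVM.
     * Assumption: the class is in the default package, so its name equals the file name.
     */
    static Class<?> loadSut(File classFile) throws Exception {
        String className = classFile.getName().replaceFirst("\\.class$", "");
        URL folderUrl = classFile.getAbsoluteFile().getParentFile().toURI().toURL();
        // The loader is deliberately left open: closing it would prevent lazy loading
        // of the external classes that are located in the same folder.
        URLClassLoader loader = new URLClassLoader(new URL[] { folderUrl });
        return loader.loadClass(className);
    }
}
```

With Swing, the filter would typically be installed through `chooser.setFileFilter(SutSelection.classFileFilter())` together with `chooser.setAcceptAllFileFilterUsed(false)` before the dialog is shown.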

The “coveragePercButton” passes the entered required code coverage value to the “requiredCoveragePercentage” variable of the Constants class. The “roundsButton” passes the entered quantity of testing rounds to the “roundsQuantity” variable of the Constants class. The manual test cases are passed to the “manualTestCases” variable of the Constants class using the “manualTestCasesButton”. The “setExampleButton” puts example values of rounds, required code coverage, the SUTClassExample class and manual test cases into the corresponding variables of the Constants class.

The main functionality of the GUI class starts when the “startTestingButton” is activated. The button is available only when the method “check” returns true, in other words, when all the required fields are set. It starts the following sequence of key activities:

1. The method “makeDefault” of the Constants class is called.

2. Instances of the Spoon library are created. The instances inspect all methods inside the SUT class and pass these values into the “allMethodsOrdered” variable of the Constants class.

3. Then instances of the JADE library are created. The JADE runtime is started using an instance of the Runtime class. An AgentController class instance starts the agent container and creates the agents i using the Agent_i class and a single main agent using the MainAgent class. The value of i depends on the quantity of methods inside the SUT class. For instance, if the SUT class has 4 methods, 4 agents i are created.
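The rule in step 3, one agent i per method of the SUT class, can be sketched as follows. The MTA inspects the SUT with Spoon and creates the agents through JADE. The sketch below replaces both with plain Java reflection to stay self-contained, so the class `AgentPlanner`, the sample SUT and the naming scheme “Agent_1”, “Agent_2”, ... are assumptions made for illustration.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical planner: derives one agent name per declared method of the SUT class. */
final class AgentPlanner {

    /** Returns names such as "Agent_1", "Agent_2", ... (the naming scheme is an assumption). */
    static List<String> agentNames(Class<?> sutClass) {
        List<String> names = new ArrayList<>();
        for (Method method : sutClass.getDeclaredMethods()) {
            if (method.isSynthetic()) {
                continue; // skip compiler-generated methods
            }
            names.add("Agent_" + (names.size() + 1));
        }
        return names;
    }

    /** Example SUT with four methods, so four agents are planned. */
    static final class Sample {
        void reset() { }
        void setNumber(int n) { }
        int getNumber() { return 0; }
        String describe() { return "sample"; }
    }

    public static void main(String[] args) {
        System.out.println(agentNames(Sample.class)); // [Agent_1, Agent_2, Agent_3, Agent_4]
    }
}
```

Each name would then be handed to the agent platform together with the index of the method that the agent is responsible for.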

The “stopTestingButton” terminates the agent container and deletes all the agents.

6.2.3. Test Case Generation of the MTA

One of the key features of the MTA is random test case generation for the SUT classes. The ValueGenerator class provides this feature. The agents i of the MTA generate random test cases based on the inputs of the methods of the SUT classes. In particular, the generation depends on the input types. For instance, if a method has “int” or “String” input types, random numbers or text are generated. However, only an invocation is generated (i.e. for the method “setNumber(int n)” the MTA generates “setNumber(232324)”, which increases the coverage). The generated test case does not contain assertions like “assertEquals(232324, setNumber(232324))”.

The random generation supports the following input types:

− Boolean

− Character

− Byte

− Short

− Integer

− Long

− Float

− Double

− all types of Arrays

− Java objects.
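Type-driven generation of an invocation without assertions can be illustrated with a minimal sketch. It uses Java reflection and covers only a few of the listed input types, whereas the ValueGenerator class of the MTA is based on Spoon and supports more. The class `RandomInvocation`, its methods and the fixed string length of eight characters are assumptions for illustration.

```java
import java.lang.reflect.Method;
import java.util.Random;
import java.util.StringJoiner;

/** Hypothetical random input generator; the MTA's ValueGenerator is based on Spoon instead. */
final class RandomInvocation {

    /** Returns Java source text of a random literal for a few supported parameter types. */
    static String randomLiteral(Class<?> type, Random random) {
        if (type == int.class || type == Integer.class) {
            return Integer.toString(random.nextInt());
        }
        if (type == long.class || type == Long.class) {
            return random.nextLong() + "L";
        }
        if (type == boolean.class || type == Boolean.class) {
            return Boolean.toString(random.nextBoolean());
        }
        if (type == double.class || type == Double.class) {
            return Double.toString(random.nextDouble());
        }
        if (type == char.class || type == Character.class) {
            return "'" + (char) ('a' + random.nextInt(26)) + "'";
        }
        if (type == String.class) {
            StringBuilder text = new StringBuilder("\"");
            for (int i = 0; i < 8; i++) {
                text.append((char) ('a' + random.nextInt(26)));
            }
            return text.append('"').toString();
        }
        return "null"; // arrays and other object types are not handled in this sketch
    }

    /** Builds an invocation without assertions, for example "setNumber(232324)". */
    static String invocation(Method method, Random random) {
        StringJoiner arguments = new StringJoiner(", ", method.getName() + "(", ")");
        for (Class<?> parameterType : method.getParameterTypes()) {
            arguments.add(randomLiteral(parameterType, random));
        }
        return arguments.toString();
    }
}
```

Because only the call is generated, such a test case contributes to coverage but cannot detect a wrong return value. This matches the limitation stated above.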

7. Comparative Analysis of the MTA against Other Test Case Generation Tools

The comparative analysis is conducted as an experiment. The following subsections cover the planning, operation and analysis of the experiment.

7.1. Planning

In order to conduct the experiment based on statistical analysis the null and alternative hypotheses are

formulated:

− H0. The MTA has the same efficiency in the regression testing context as other test generation tools.

− H1. The MTA has better efficiency in the regression testing context than other test generation tools.

− H2. The MTA has worse efficiency in the regression testing context than other test generation tools.

Experiment has the following variables and samples:

− Independent variables are MTA, EvoSuite and JUnit-Tools. They form one factor - test

generation tools.

− Dependent variables are execution time, number of test cases and code coverage.

− Objects are SUT classes.

− Population consists of Java classes.

The sample consists of 15 SUT classes that were selected using the simple random sampling method. The population consists of simplified Java classes that have more than one conditional statement. The experiment design type is one factor with more than two treatments. The blocking design principle is used in order to avoid undesired effects in the study. In particular, the treatments had default and fixed settings during the entire experiment.

The MTA has not passed comprehensive verification. Because of that, some SUT classes caused errors during the experiment and were modified or replaced in the sample list. This is a threat to validity and can influence the results of the experiment. However, the same SUT classes are used for all treatments, and therefore the influence is potentially insignificant. Apart from this, there are additional threats to the validity of the experiment, the most significant of which are the low statistical power of the samples and the random heterogeneity of the objects. The heterogeneity of the objects is high due to the high complexity of the population. Therefore, it is a considerable challenge to make the samples fully representative. In order to match the population, it would be necessary to execute a huge number of SUT classes from different sources, including real projects from open and closed repositories. Every new SUT class can potentially cause an error in the MTA. These technical barriers reduce the precision of the study and complicate it. Therefore, matching the population is an external validity threat.

The experiment is carried out on one desktop computer with fixed performance. All the executions are performed under the same conditions.

The MTA is compared against well-known open source testing tools, namely EvoSuite [36] and JUnit-Tools [37]. EvoSuite is a search-based tool that uses a genetic approach and automatically generates test cases for a given SUT class or an entire system. The generated test cases are evolved using search operators (crossover, selection and mutation), which yield better solutions with respect to the optimization goal. EvoSuite can also generate JUnit assertions. JUnit-Tools is an extension of the Eclipse IDE (Integrated Development Environment). It can generate unit test elements based on the existing code of the SUT class. Similar to EvoSuite, it can generate assertions that have actual and expected parameters, and it can generate test cases for the entire system under test.

7.2. Operation

During the operation process, the inputs in the form of SUT classes were collected. The structures of these classes vary from simple to complex. They consist of different types of statements and conditions. The goal of the operation is to measure three types of data: execution time in seconds, number of test cases and code coverage percentage. The quantitative data gained during the experiment are shown in Table 8.

TABLE 8

The quantitative data of the experiment (the MTA, EvoSuite and JUnit-Tools columns are the independent variables)

| N | Samples (SUT classes) | Dependent variables | MTA | EvoSuite | JUnit-Tools |
| --- | --- | --- | --- | --- | --- |
| 1 | BinaryConverter | Execution time (sec.) | 22.44 | 85.99 | 5.34 |
| | | Number of test cases | 4 | 8 | 3 |
| | | Code coverage (%) | 79.70 | 83.60 | 53.90 |
| 2 | BubbleSort | Execution time (sec.) | 5.70 | 83.31 | 4.48 |
| | | Number of test cases | 1 | 2 | 1 |
| | | Code coverage (%) | 98.10 | 98.10 | 54.70 |
| 3 | Counter | Execution time (sec.) | 12.31 | 117.50 | 15.53 |
| | | Number of test cases | 3 | 8 | 1 |
| | | Code coverage (%) | 73.40 | 98.40 | 67.20 |
| 4 | CreateASet | Execution time (sec.) | 23.34 | 88.94 | 3.42 |
| | | Number of test cases | 3 | 8 | 3 |
| | | Code coverage (%) | 83.60 | 97.40 | 38.80 |
| 5 | EightQueens | Execution time (sec.) | 52.59 | 297.19 | 18.05 |
| | | Number of test cases | 13 | 43 | 4 |
| | | Code coverage (%) | 59.00 | 95.00 | 14.90 |
| 6 | IntListVer2 | Execution time (sec.) | 17.61 | 157.26 | 3.82 |
| | | Number of test cases | 7 | 25 | 5 |
| | | Code coverage (%) | 58.50 | 98.90 | 55.50 |
| 7 | IntListVer3 | Execution time (sec.) | 32.83 | 97.77 | 4.12 |
| | | Number of test cases | 7 | 32 | 10 |
| | | Code coverage (%) | 45.70 | 99.20 | 53.50 |
| 8 | LeapYear | Execution time (sec.) | 19.00 | 29.42 | 5.47 |
| | | Number of test cases | 3 | 8 | 2 |
| | | Code coverage (%) | 79.60 | 98.10 | 64.80 |
| 9 | Life | Execution time (sec.) | 37.23 | 219.75 | 8.85 |
| | | Number of test cases | 7 | 20 | 3 |
| | | Code coverage (%) | 94.00 | 100.00 | 18.00 |
| 10 | MineSweeper | Execution time (sec.) | 20.01 | 79.00 | 7.77 |
| | | Number of test cases | 2 | 5 | 2 |
| | | Code coverage (%) | 17.80 | 17.80 | 4.10 |
| 11 | PalindromeCheck | Execution time (sec.) | 12.42 | 86.53 | 4.40 |
| | | Number of test cases | 2 | 6 | 2 |
| | | Code coverage (%) | 66.70 | 91.10 | 13.30 |
| 12 | Prime | Execution time (sec.) | 24.85 | 173.25 | 3.74 |
| | | Number of test cases | 4 | 7 | 2 |
| | | Code coverage (%) | 97.90 | 97.90 | 59.60 |
| 13 | PrimeEx | Execution time (sec.) | 80.09 | 198.45 | 6.78 |
| | | Number of test cases | 6 | 19 | 5 |
| | | Code coverage (%) | 93.00 | 97.00 | 32.70 |
| 14 | Triangle | Execution time (sec.) | 12.52 | 83.91 | 8.05 |
| | | Number of test cases | 3 | 13 | 1 |
| | | Code coverage (%) | 90.50 | 93.70 | 19.00 |
| 15 | UnsortedHashSet<E> | Execution time (sec.) | 26.62 | 82.96 | 27.42 |
| | | Number of test cases | 4 | 13 | 5 |
| | | Code coverage (%) | 25.40 | 100.00 | 31.40 |

Overall, the 15 SUT classes were passed through the MTA, EvoSuite and JUnit-Tools in order to collect the data on execution time and number of test cases. The coverage information was obtained manually by executing the generated test cases in the JUnit extension of the Eclipse IDE.

7.3. Analysis and interpretation

In order to analyse the execution data and test the null hypothesis, parametric and non-parametric tests are used. Choosing between them requires checking the normality of the distribution of each data set. This is done with the Shapiro-Wilk normality test. All the tests are conducted in RStudio, which provides statistical computing and graphics in the R language. The normality test gives a p-value. The p-value indicates whether the data distribution is normal and therefore whether a parametric or a non-parametric test should be selected. All the p-values are shown in Table 9:

TABLE 9

The results of Shapiro-Wilk normality test

| Data | MTA | EvoSuite | JUnit-Tools |
| --- | --- | --- | --- |
| Execution time (sec.) | p-value = 0.005987 | p-value = 0.02328 | p-value = 0.0005912 |
| Number of test cases | p-value = 0.01364 | p-value = 0.01629 | p-value = 0.00584 |
| Code coverage (%) | p-value = 0.07796 | p-value = 1.171e-06 | p-value = 0.1503 |

These data are also represented graphically in order to check visual distribution (Figure 6):

Fig. 6. Normal plots of the given data

As can be seen from Table 9, almost all the p-values are below 0.05, implying that the distributions of the data differ from the normal distribution. Only the code coverage data of the MTA and JUnit-Tools have p-values that are consistent with a normal distribution. The graphical representation supports this analysis and similarly shows that many of the data deviate from the normal line (Figure 6). Therefore, the ANOVA (ANalysis Of VAriance) parametric test is used for the coverage percentage data. The execution time and number of test cases data are analysed with the Kruskal-Wallis non-parametric test. The ANOVA test is based on the total variability of the data and the partition of the variability with respect to different components. The Kruskal-Wallis test is an alternative to the one-factor analysis of variance. The results of the Kruskal-Wallis and ANOVA tests are shown in Table 10.

TABLE 10

The results of the Kruskal-Wallis and ANOVA tests

| Data of the MTA, EvoSuite and JUnitTools | P-values |
| --- | --- |
| Execution time (sec.) | 3.291e-08 (Kruskal-Wallis test) |
| Number of test cases | 5.573e-05 (Kruskal-Wallis test) |
| Code coverage (%) | 5.79e-07 (ANOVA test) |
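For readers who want to see what the Kruskal-Wallis test computes, the following sketch implements the H statistic with average ranks and the usual tie correction. With three treatments the statistic has two degrees of freedom, and the upper tail of the chi-square approximation then has the closed form exp(-H/2). The class `KruskalWallis` is hypothetical and is not the R code used in the thesis. It also assumes that the input contains no NaN values and that not all values are identical.

```java
import java.util.Arrays;

/** Sketch of the Kruskal-Wallis H test; the p-value helper is valid for three groups only. */
final class KruskalWallis {

    /** H statistic with average ranks for ties and the usual tie correction. */
    static double hStatistic(double[]... groups) {
        int n = 0;
        for (double[] group : groups) {
            n += group.length;
        }
        double[] pooled = new double[n];
        int position = 0;
        for (double[] group : groups) {
            System.arraycopy(group, 0, pooled, position, group.length);
            position += group.length;
        }
        Arrays.sort(pooled);

        // Sum of (t^3 - t) over every run of t tied values in the pooled sample.
        double tieTerm = 0.0;
        for (int i = 0; i < n; ) {
            int j = i;
            while (j < n && pooled[j] == pooled[i]) {
                j++;
            }
            double t = j - i;
            tieTerm += t * t * t - t;
            i = j;
        }

        double weightedRankSums = 0.0;
        for (double[] group : groups) {
            double rankSum = 0.0;
            for (double value : group) {
                rankSum += averageRank(pooled, value);
            }
            weightedRankSums += rankSum * rankSum / group.length;
        }
        double h = 12.0 / (n * (n + 1.0)) * weightedRankSums - 3.0 * (n + 1.0);
        return h / (1.0 - tieTerm / ((double) n * n * n - n));
    }

    /** Average 1-based rank of a value that is known to occur in the sorted pooled sample. */
    private static double averageRank(double[] sorted, double value) {
        int first = 0;
        while (sorted[first] != value) {
            first++;
        }
        int last = first;
        while (last + 1 < sorted.length && sorted[last + 1] == value) {
            last++;
        }
        return (first + last) / 2.0 + 1.0;
    }

    /** Chi-square upper tail for two degrees of freedom: P(X > h) = exp(-h / 2). */
    static double pValueThreeGroups(double h) {
        return Math.exp(-h / 2.0);
    }
}
```

For the toy groups {1, 2, 3}, {4, 5, 6} and {7, 8, 9} the rank sums are 6, 15 and 24, which gives H = 7.2 and a p-value of exp(-3.6), roughly 0.027.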

The tests are applied for all three tools` each type of data. The results of tests show that all p-values are

less than the significance level 0.05. It means that there are significant differences between the

independent variables. Therefore, the H

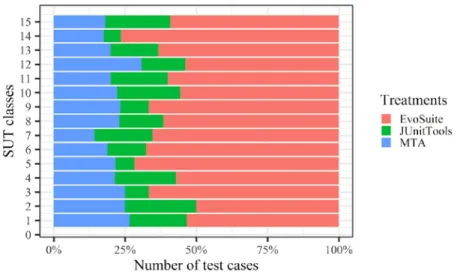

0hypothesis can be rejected. In order to visualize the data of the

treatments and check alternative hypothesis the descriptive statistics are used (Figure 7, 8 and 9).

Fig. 7. Bar chart of the execution time data

As can be seen from the bar chart in Figure 7, EvoSuite takes more time during the execution of the test cases than the other tools. The lowest time belongs to JUnit-Tools. The MTA lies in between.

The bar chart in Figure 8 shows that JUnit-Tools generates the minimum number of test cases and EvoSuite generates the maximum number of test cases. The MTA lies in between.

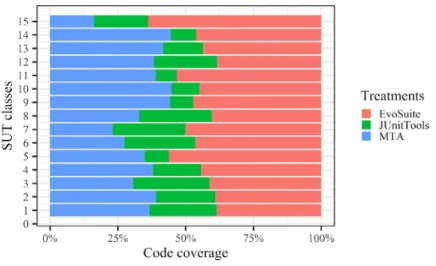

Fig. 9. Bar chart of the code coverage data
