Västerås, Sweden

Thesis for the Degree of Bachelor of Science in Computer Science

15.0 credits

DESIGNING A HUMAN CENTERED INTERFACE FOR A NOVEL AGRICULTURAL MULTI-AGENT CONTROL SYSTEM

Fredrik Arvidsson

fad16001@student.mdh.se

Examiner: Sasikumar Punnekkat

Mälardalen University, Västerås, Sweden

Supervisors: Afshin Ameri

Mälardalen University, Västerås, Sweden

Rikard Lindell

Mälardalen University, Västerås, Sweden

1 Abstract

The subject of this report is the Command and Control System (CaCS), a component whose purpose is to simplify the planning, scheduling and surveying of work done on a farm in a goal-oriented way. The CaCS is part of a larger project, the Aggregate Farming in the Cloud platform (AFarCloud), whose purpose is to simplify the use of contemporary technology to increase the efficiency of farms. AFarCloud is an EU project spanning 2018 to 2020, and as such the CaCS is still in its infancy. Since the intended users of AFarCloud and the CaCS are small to medium-sized agricultural businesses, the interface of the CaCS should be constructed so that it is useful and easy to learn. To live up to those standards, live interviews, prototype evaluations and a comparison with similar software were performed, and the results were compared with the International Standard on Human-Centered Design for Interactive Systems (ISO 9241-210). The results indicate that a modular interface, where only the information relevant to the individual user's farm is displayed, is preferable for increasing the usability of the CaCS. Furthermore, icons and explanatory text must be designed with the users' mental models in mind in order to improve learnability and avoid confusion.

Table of Contents

1 Abstract

2 Introduction

3 Background

3.1 Acronyms and Terminology

3.2 AFarCloud

3.2.1 The Command and Control System

3.2.2 User needs and requirements

3.2.3 Responsibilities and functionalities

3.2.4 ERP

3.2.5 GCS

3.2.6 The User Interface

4 Related Work

5 Problem Formulation

6 Method

6.1 Sketching

6.2 PACT

6.2.1 People

6.2.2 Activities

6.2.3 Context

6.2.4 Technologies

6.3 Prototyping

6.3.1 Low fidelity prototype

6.3.2 Exploratory test and Usability testing

6.3.3 High fidelity prototype

6.3.4 Assessment Test

7 Design Process

7.1 Pilot study

7.2 PACT analysis

7.2.1 People

7.2.2 Activities

7.2.3 Context

7.2.4 Technology

7.3 Sketching

7.4 Additional research

7.5 First low fidelity prototype

7.6 First Evaluation

7.7 Second low fidelity prototype

7.8 Second Evaluation

7.9 Final research

7.10 High-fidelity prototype

7.11 Final Evaluation

7.11.1 First part: mental models

7.11.2 Customization and moving panels

7.11.3 Third part: Recovering from errors

7.11.4 Further comments

8 Results

10 Conclusions and future work

References

11 Appendix A

12 Appendix B

2 Introduction

The purpose of this thesis is to lay the foundations for the graphical user interface (UI) of a mission planning component named the Command and Control System (CaCS), which is part of an agricultural computing platform named Aggregate Farming in the Cloud (AFarCloud). AFarCloud will be the product of a three-year EU project that covers the planning, supervising and operating of (semi-)autonomous vehicles, robots and equipment within agricultural management.

Farming has come a long way since the days when man and beast worked the land together and laid the outcome in the hands of divine powers. These days, the cutting edge of agricultural technologies involves automated systems capable of analyzing, predicting and planning at a scale that humans are unable to keep up with.

But the cutting edge is far from commonplace. In their study of the adoption of Smart Farming technologies among European farmers, Kernecker et al. [1] state that the size of the farm and the economic unit value of the crops grown there are strong indicators of adoption, and that, in addition to economic barriers, a major obstacle to adoption is the usability of the technologies themselves. This sentiment is echoed by several other researchers within agricultural computing, such as Fountas [2], Nikkilä [3] and Sorensen [4][5], whose articles are discussed in the Related Work section of this thesis.

Since issues related to usability and user interface design are a common hindrance to the adoption of software such as the CaCS, I performed user studies and evaluated prototypes in an attempt to find the causes of such issues among our intended users. At the same time, I researched what sort of information a user interface for the CaCS must be able to convey and how it can be presented in a learnable way, by examining the technological aspects that could be involved in the development of the CaCS. Together, this allows me to answer the following research question:

“What domain-specific knowledge related to agriculture is important for developing a UI for the CaCS, and how can human-centered design aid in making the CaCS design more learnable?” For more information about the complexities involved in agriculture and in the development of Smart Farming technologies such as the CaCS and AFarCloud, please refer to the Background section.

3 Background

This section explains the acronyms and terminology used in this thesis and provides more detailed information about the Command and Control System and the Aggregate Farming in the Cloud platform.

3.1

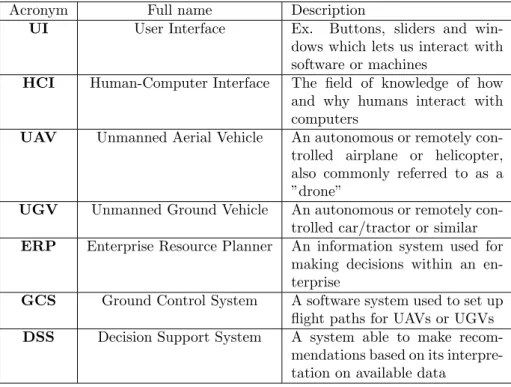

Acronyms and Terminology

The following tables list the acronyms and terms used within this thesis, as well as their meaning in this context.

| Acronym | Full name | Description |
|---|---|---|
| UI | User Interface | E.g. buttons, sliders and windows that let us interact with software or machines |
| HCI | Human-Computer Interaction | The field of knowledge concerned with how and why humans interact with computers |
| UAV | Unmanned Aerial Vehicle | An autonomous or remotely controlled airplane or helicopter, also commonly referred to as a “drone” |
| UGV | Unmanned Ground Vehicle | An autonomous or remotely controlled car, tractor or similar vehicle |
| ERP | Enterprise Resource Planner | An information system used for making decisions within an enterprise |
| GCS | Ground Control System | A software system used to set up flight paths or routes for UAVs or UGVs |
| DSS | Decision Support System | A system able to make recommendations based on its interpretation of available data |

Table 1: Commonly occurring acronyms in this text

| Terminology | Description |
|---|---|
| Signifiers | Signs and signals that tell a user what interactions are possible with an object |
| Accessibility | Ease of use for users with the widest range of capabilities |
| Usability | The extent to which a service, system or product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use |
| Effectiveness | The accuracy and completeness with which users achieve specified goals |
| Efficiency | Resources expended in relation to effectiveness |
| Satisfaction | Freedom from discomfort and positive attitudes towards the use of the product |
| Learnability | Initial usability and/or improvement of usability over time, such as a learning curve |
| Mission | The collective goals to be reached within a specified time period, such as pressing all hay-bales on the farm and placing specific amounts of them on specific fields |
| Goal | A desired outcome, such as having placed 10 hay-bales on a specific field |
| Task | A single or continuous process that is part of reaching a goal, such as pressing hay-bales |
| Action | An atomic component of a task, such as turning a handle or pressing a button |
| Agent | An individual, robot, drone or machine capable of pursuing goals |
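The hierarchy defined in the terminology table (a mission consists of goals, goals are reached through tasks, tasks consist of actions, and agents carry them out) can be sketched as a simple data model. The Python sketch below is illustrative only: the class layout, the `agent` and `period` fields and the `unassigned_tasks` helper are hypothetical and are not taken from the AFarCloud documentation.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Action:
    description: str  # atomic step, e.g. "press a button"


@dataclass
class Task:
    description: str  # single or continuous process, e.g. "press hay-bales"
    actions: List[Action] = field(default_factory=list)
    agent: Optional[str] = None  # hypothetical: the agent (person, robot, drone) assigned to the task


@dataclass
class Goal:
    description: str  # desired outcome, e.g. "10 hay-bales placed on field 3"
    tasks: List[Task] = field(default_factory=list)


@dataclass
class Mission:
    name: str
    period: str  # hypothetical: the time period within which the goals must be reached
    goals: List[Goal] = field(default_factory=list)

    def unassigned_tasks(self) -> List[Task]:
        """Tasks that no agent has been assigned to yet."""
        return [task for goal in self.goals for task in goal.tasks if task.agent is None]


# Example based on the hay-bale mission described in the table.
mission = Mission(
    name="Hay harvest",
    period="week 34",
    goals=[
        Goal("All hay-bales on the farm pressed",
             [Task("Press hay-bales", [Action("Start the baler")], agent="Tractor 1")]),
        Goal("10 hay-bales placed on field 3",
             [Task("Transport hay-bales to field 3")]),
    ],
)
print([task.description for task in mission.unassigned_tasks()])
# → ['Transport hay-bales to field 3']
```

A planning interface could use a query such as `unassigned_tasks` to flag work that still lacks an agent before a mission is started.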

3.2 AFarCloud

AFarCloud, short for “Aggregate Farming in the Cloud”, is an EU project aimed at increasing productivity for small to medium-sized farming businesses by providing cost-efficient solutions. As described on the project website [6]: “AFarCloud will provide a distributed platform for autonomous farming that will allow the integration and cooperation of agriculture Cyber Physical Systems in real-time in order to increase efficiency, productivity, animal health, food quality and reduce farm labor costs. This platform will be integrated with farm management software and will support monitoring and decision-making solutions based on big data and real time data mining techniques.

The AFarCloud project also aims to make farming robots accessible to more users by enabling farming vehicles to work in a cooperative mesh, thus opening up new applications and ensuring re-usability, as heterogeneous standard vehicles can combine their capabilities in order to lift farmer revenue and reduce labor costs.”

While there already exist services capable of performing some of the functions AFarCloud sets out to provide, such as analyzing agricultural data collected by UAVs, during my research I have not found any system that claims to be capable of both farm management activities and drone/robot automation. Nor have I found any GCS that combines mission planning of UAVs (drones) with (semi-)automated ground vehicles, robots, sensor readings and personnel. I therefore believe that what AFarCloud sets out to do is novel.

AFarCloud is an important project because Earth's population is still growing while the amount of available farmland remains largely stagnant. For that reason, farming must be made more efficient in order to keep the human population fed. AFarCloud aims to aid in that process by providing tools both for planning activities on the farm and for managing data regarding the status of the farm. However, collecting data does not help the farmer unless the data is also presented in a way that is both easily understood and relevant to their needs and requirements. Such a transfer of information happens through the UI. My research is therefore relevant: the needs and requirements of the farmers are not yet fully understood, so they must be researched, and any design decisions made must be evaluated.

3.2.1 The Command and Control System

As previously mentioned, AFarCloud will provide a platform capable of data analysis and decision support. It is a complex system consisting of many components that work together to provide a holistic solution. The Command and Control System will have responsibilities related to planning and supervising the activities of autonomous and semi-autonomous actors. Because of these roles, the Command and Control System will need a user interface that can convey information gathered and processed by other components in the AFarCloud platform, allow the user to make direct decisions regarding the activities of actors and, to some extent, allow AI-aided scheduling of activities.

In theory, one could imagine the following situation:

The farm manager sits down at the office desk, turns on the screen and logs into the AFarCloud software, where (s)he is greeted with a summary of how well the farm is doing as well as the results of last night's data analysis. The decision support system reminds the manager that ten of the cows are expected to calve this week. Within two clicks, the manager is able to ask AFarCloud to generate health reports for the livestock in preparation for the veterinary visit. Then (s)he continues to read the checklist for the day's tasks in the CaCS to make sure that there are enough personnel and that all the machines are in working condition. After checking off the list, (s)he decides that the potato field needs to be sprayed with pesticides and sees that only the tractors whose inner wheel spacing matches the potato rows are now highlighted, so that (s)he does not accidentally pick a tractor that would run over the potatoes. After adding the task, assigning a worker to the tractor and updating the schedules, (s)he starts the mission.

While the farm manager surveys, through the CaCS interface, how the tractors begin to move towards the fields, a handful of drones start their daily schedules to fly over the fields. As the day progresses, they will come back to replace their batteries and upload the collected data for analysis, and then continue their mission until all goals have been met.

This is a scenario that I envision for the CaCS based on what I have learned of its purpose.
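The highlighting step in this scenario is essentially a filter over the available agents. The Python sketch below shows one way it could work, assuming that “matches the potato rows” can be approximated as the tractor's track width (the centre-to-centre distance between its left and right wheels) being a whole multiple of the row spacing, so that the wheels run in the furrows. The `Tractor` type, the tolerance and all numbers are hypothetical and not taken from the AFarCloud documentation.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Tractor:
    name: str
    track_width_cm: float  # hypothetical attribute: centre-to-centre distance between left and right wheels


def fits_rows(track_width_cm: float, row_spacing_cm: float, tolerance_cm: float = 3.0) -> bool:
    """Assumed rule: the wheels stay in the furrows if the track width is a whole multiple of the row spacing."""
    rows_spanned = round(track_width_cm / row_spacing_cm)
    return rows_spanned >= 1 and abs(track_width_cm - rows_spanned * row_spacing_cm) <= tolerance_cm


def tractors_to_highlight(fleet: List[Tractor], row_spacing_cm: float) -> List[str]:
    """Names of the tractors a planning UI would highlight for work on a field with this row spacing."""
    return [t.name for t in fleet if fits_rows(t.track_width_cm, row_spacing_cm)]


fleet = [Tractor("Tractor A", 150), Tractor("Tractor B", 180), Tractor("Tractor C", 225)]
print(tractors_to_highlight(fleet, row_spacing_cm=75))  # → ['Tractor A', 'Tractor C']
```

Filtering rather than merely warning means the interface prevents the mistake instead of reporting it afterwards, which matches the intent of the scenario.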

3.2.2 User needs and requirements

The documentation for the AFarCloud project currently lists a total of 33 identified unique needs, which range from weather forecasting to 3D scans of a cow's paralumbar fossa. Because the farms differ in the type of crops or livestock they produce, there is little overlap among needs. When it comes to crops or plants, the type of plant grown and the way it is grown dictate the needs. There are of course similarities: plants need water, sunlight and nutrition. But the way these needs manifest for the farmers differs. For example, a greenhouse owner wants to regulate water use by keeping track of when the plants need water, controlling irrigation and the environmental conditions in the greenhouse automatically through feedback from various sensors. Similarly, an outdoor grower of the same plant also cares about the amount of water the plants get, but in that case the worry is that the plants will not get enough water from rainfall alone, and the grower would benefit from weather predictions in order to plan ahead, since watering several hundred acres of fields is usually more demanding than watering a greenhouse. So while both cases involve regulating the irrigation of plants, the needs differ and therefore require different technological implementations.
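The greenhouse case describes closed-loop control: sensor readings feed back into the decision to irrigate. A minimal sketch of such a loop is an on/off controller with hysteresis, shown below in Python. It assumes a single soil-moisture sensor reporting percentages; the thresholds are hypothetical, and nothing here is taken from the AFarCloud documentation.

```python
from typing import List


def valve_should_open(moisture_pct: float, valve_open: bool,
                      low_pct: float = 30.0, high_pct: float = 45.0) -> bool:
    """On/off control with hysteresis: open below low_pct, close above high_pct,
    and otherwise keep the current state so the valve does not toggle rapidly."""
    if moisture_pct < low_pct:
        return True
    if moisture_pct > high_pct:
        return False
    return valve_open


def simulate(readings: List[float], valve_open: bool = False) -> List[bool]:
    """Feed a sequence of soil-moisture readings through the controller and record the valve state."""
    states = []
    for reading in readings:
        valve_open = valve_should_open(reading, valve_open)
        states.append(valve_open)
    return states


print(simulate([40, 28, 35, 44, 46, 38]))
# → [False, True, True, True, False, False]
```

The gap between the two thresholds keeps the valve from switching at every small fluctuation around a single set point. An outdoor grower would instead need weather forecasts as an input to the decision, which is one reason the two cases call for different implementations.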

Based on the information that was shared with me, my conjecture is that for the Command and Control System to be viable for our intended users, raw data should most likely be hidden away. Instead, its meaning could be communicated through a Decision Support System by means of eXplainable Artificial Intelligence (XAI), in order to convey what is happening instead of leaving the interpretation to the user. Although I did not perform user tests on XAI, I believe that it is worth pursuing in future research on this subject.

3.2.3 Responsibilities and functionalities

Given the information from the AFarCloud website [6] and the needs listed in the project's documentation, what kind of software are AFarCloud and the CaCS? To answer this, I compared this information to the definition of Agricultural Information Systems by Sorensen et al. [7], which can be seen in Figure 1 below.

Based on this definition and on the descriptions and requirements in the AFarCloud project documentation, I believe that the responsibilities of AFarCloud would fit within an Enterprise Resource Planning system (ERP), with the CaCS performing a subset of the functionalities of an Information System (IS). What complicates things is that these definitions are themselves rather vague, so I further identified the duties of the CaCS as also including those of a Ground Control System (GCS), since it will be scheduling and possibly exerting control over unmanned or semi-automated vehicles.

3.2.4 ERP

ERP systems are developed to keep track of statistics, inventory and bookkeeping related to running a specific business. Since agriculture is a complex enterprise, these systems are useful for keeping track of large amounts of data on the farm, and data analysis is a popular service among farm managers who use them. An example of agricultural ERP software is Farm Wizard - Beef Manager [8], which cattle ranchers use to keep track of and monitor parameters such as the health, meat yield and lineage of their cattle, with the aim of raising healthier and beefier animals.

Figure 1: Concept of management information systems, by Sorensen [7]

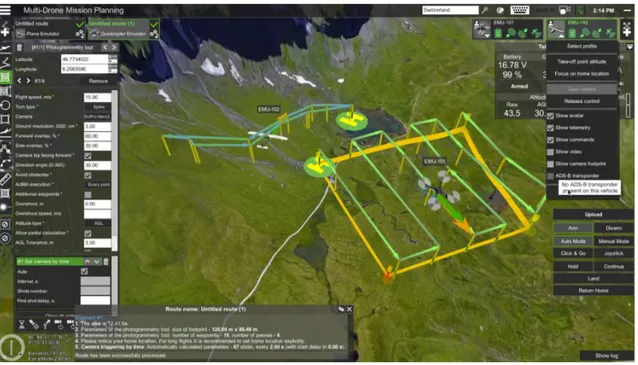

3.2.5 GCS

A ground control station is used to direct traffic in order to plan efficient routes, avoid congestion or collisions and act as a communication hub. Several commercial software packages act as ground control stations for drones, in the sense that they can be used to plan and carry out automated tasks such as photography or photogrammetry (a camera-based 3D scanning technique).

3.2.6 The User Interface

The user interface of the Command and Control System should facilitate the scheduling and supervision of activities on the farm. In practice, this means assigning agents to perform tasks at a given location and at a given time. The decision will ultimately be made by the farmer, but a Decision Support System (DSS) will be available to guide them through parts of this process. This could be done by providing the farmer with all relevant data, but a study by Kernecker et al. [1] has shown that such a solution would quickly overburden them with information. Furthermore, since one of the goals of the CaCS is to enable a single user to control multiple UAVs, the cognitive load of the user must be managed for this to be possible [9]. However, there must always be an option for an expert user to ask for more data in order to make their own decisions. The challenge in creating a user interface for the Command and Control System is thus to create an easily learnable interface that can still be expanded for the expert user and that allows for different Levels Of Autonomy (LoA).

4 Related Work

This thesis has used a qualitative approach to measuring the success of the selected design choices, and the methodology was based on ISO 9241-210: Ergonomics of human-system interaction, Part 210: Human-centred design for interactive systems. As such, I have modelled my design space around the needs and requirements of the farmer to the extent that I was able to define them, while at the same time exploring the possible responsibilities and functionalities of the CaCS.

Human-centered design focuses on the needs and requirements of the user, and the intended user group of the AFarCloud project was European farmers who run small to medium-sized businesses. Several studies have attempted to uncover more information about this population or a subset thereof. Hennessy et al. [10] analyzed data gathered from nearly 900 Irish farmers and discovered that the adoption of computers in agricultural businesses was positively associated with the size of the farm and the type of products it produces, with large farms and dairy farms being the most common types of farms among computer adopters. The only purely negative association was with age. The positive association between size and adoption was also found by Kernecker et al. [1], who surveyed the adoption of Smart Farming Technologies (SFT) among nearly 290 farmers across 7 EU countries. Furthermore, their statistics showed that adoption versus non-adoption was significantly related to the country in which the survey had been performed, with the extremes being the UK, with 30 adopters out of 34, and Serbia, with 5 adopters out of 36. One of the most common reasons for non-adoption was that SFTs were considered too complex to use, while a common complaint among adopters was that the data produced by their SFTs was too complicated to interpret. Regarding the differences in adoption rates, one clue might lie in the 2016 OECD report “Skills Matter” [11], which shows that levels of education and assessed problem-solving skills vary widely between European countries. For example, the proportion of senior citizens in Sweden with a tertiary education was roughly three times higher than that of their Italian counterparts, but when the age group of 25 to 34 year olds was compared, Sweden was ahead by only a few percent. However, the assessment of computer skills and problem solving was not divided into age groups, which means we cannot tell whether those numbers would be more balanced if different age groups were accounted for. Given that farmers are an aging group [12], this divide in education level and problem-solving skills might give a clue as to why non-adoption was high in certain EU countries.
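Because the two national samples in Kernecker et al. [1] differ slightly in size, comparing adoption rates rather than raw counts makes the contrast between the UK and Serbia clearer. The arithmetic below uses only the counts quoted above:

```latex
r_{\mathrm{UK}} = \frac{30}{34} \approx 0.88, \qquad
r_{\mathrm{Serbia}} = \frac{5}{36} \approx 0.14, \qquad
\frac{r_{\mathrm{UK}}}{r_{\mathrm{Serbia}}} = \frac{30 \cdot 36}{34 \cdot 5} = \frac{1080}{170} \approx 6.4
```

In these samples, the adoption rate in the UK was therefore roughly six times that in Serbia.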

Regarding the demography of farmers, a study done in the United States by Bowen, Nicol and Conway [13], focusing primarily on farmers with less than 10 years of farming experience, shows that 18 out of 20 had a tertiary education. While this study was done in the United States, a trade report [14] by the United States Department of Agriculture comparing U.S. and EU agriculture shows enough similarities between EU and U.S. farming that I consider this work relevant.

A major exception that skews the statistics is Romania, which accounts for nearly one third of all farms smaller than 5 acres within Europe; 9 out of 10 farms in Romania are smaller than 5 acres [15]. Even apart from Romania's large number of small farms, there are huge differences between the member states of the EU, as can be seen in the Eurostat Farm Structure Survey from 2016 [16].

Further on the topic of adoption of computer software in agricultural businesses, Nikkilä et al. [3] mention in their paper on the architecture of a Farm Management Information System that localization and usability issues were major factors in non-adoption. Usability issues were also mentioned by Sorensen et al. [5] and Fountas et al. [2]. These factors were important to consider in relation to the intended user base.

Addressing issues related to both adoption and non-adoption is important. In favor of adopting Information Systems in agriculture, Gallo et al. [17] showed that automating the operational monitoring of agricultural and forestry tasks freed up time for the farm manager while delivering equivalent data. According to them, roughly 70% of the farm manager's time would otherwise be spent on data-collecting activities, leaving little time for evaluation, control and coordination tasks. For smaller, family-run companies, they stated that collecting data was seldom even an option, since the farmers were generally too busy with their daily tasks to do so.

Regarding usability issues for both adopters and non-adopters, it is regarded as an important design principle to communicate with users in terms they understand. As Kernecker et al. [1] stated, even adopters sometimes had issues understanding the data gathered by their Smart Farming Systems. While there are different ways of solving this issue, such as offering support or data analysis services, one concept I touched upon was Explainable Artificial Intelligence (XAI). As explained by Herrera et al. [18], an XAI can have many purposes, but an ideal XAI would always be capable of explaining its reasoning to a user, not just its conclusions. Such a system could have beneficial effects on human trust in AI decisions and therefore increase adoption. While the subject of XAI is still mostly theoretical due to its complexity, other subjects such as Collaborative Robotics and Adaptive Autonomy were also of interest, since the CaCS should enable interaction between a farm manager and multiple agents in the field.

Using Sheridan's [19] revised scale of Levels Of Automation (LOA), Ronkainen [20] writes about design considerations for ISOBUS¹ class 3 machinery systems from a Human-Machine Interaction perspective. Ronkainen brings up two major difficulties in designing for safety in an ISOBUS system. The first is the lack of standard interfaces and control elements for ISOBUS terminals, as seen in Figure 2.

Figure 2: Artistic rendition of four different ISOBUS terminals

The second is that, according to Risk Homeostasis Theory, human operators tend to maintain a constant level of risk regardless of what safety measures are in place. For example, if a crane operator is given a system that stops the load before it hits an obstacle, the operator will soon drive the crane at full speed towards obstacles, relying on the safety system to stop it before an accident happens. At the same time, the operator's situational awareness decreases, since avoiding obstacles is no longer solely their responsibility. In the context of the CaCS, this means that when designing for ISOBUS terminals, perhaps only a subset of the CaCS features should be available, and that even for a desktop version the designers would have to consider how the LOA could affect the farm manager's risk-taking and how this should be communicated to the user. Once responsibility is moved from the user to the field agents, there must be clear guidelines as to what the agents are authorized to do and where the user's responsibilities lie. Since the CaCS should be able to aid with planning, it is of interest to consider to what extent this feature might function and how that affects the user interface. One model for adaptive autonomy for collaborative agents was proposed by Frasheri [21], which suggests that collaboration could be handled by considering factors such as an agent's energy level, knowledge, equipment, abilities, tools, perceived environment risk, perceived collaborator risk, task progress, task trade-off and own performance. While such a model can result in efficient execution of tasks, how would it be explained to the user, and what influence would the user have to alter these parameters?
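One way to approach the question of explainability is to keep the decision rule transparent enough that the UI can show why an agent chose to help. The Python sketch below is a hypothetical weighted sum over a handful of the factors listed above; it is not the model proposed by Frasheri [21], and the factor names, weights and the 0 to 1 scale are assumptions made for illustration.

```python
from typing import Dict, List, Mapping, Tuple

# Hypothetical weights for a subset of the factors listed by Frasheri [21].
# A negative weight means the factor lowers the agent's willingness to collaborate.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "energy_level": 0.3,
    "equipment_match": 0.3,
    "task_progress": 0.2,
    "environment_risk": -0.2,
}


def willingness_to_help(factors: Mapping[str, float],
                        weights: Mapping[str, float] = DEFAULT_WEIGHTS) -> Tuple[float, Dict[str, float]]:
    """Return the total score and each factor's contribution (factors are assumed to be scaled to 0..1)."""
    contributions = {name: weight * factors[name] for name, weight in weights.items()}
    return sum(contributions.values()), contributions


def explain(contributions: Mapping[str, float]) -> List[str]:
    """List the factors from most to least influential, so the UI can show the reasoning, not just the result."""
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return [f"{name}: {value:+.2f}" for name, value in ranked]


score, parts = willingness_to_help(
    {"energy_level": 0.9, "equipment_match": 1.0, "task_progress": 0.5, "environment_risk": 0.8}
)
print(round(score, 2))  # → 0.51
print(explain(parts))
# → ['equipment_match: +0.30', 'energy_level: +0.27', 'environment_risk: -0.16', 'task_progress: +0.10']
```

Because each contribution is reported separately, the interface could show the dominant reasons behind a decision, and exposing the weights (for example as sliders) would be one possible answer to how much influence the user should have over the parameters.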

An alternative to a graphical interface was suggested by Çürüklü et al. [22], who performed a simulation to evaluate the use of a set of instructions based on natural language in human-robot collaboration. This is an important reminder that user interfaces do not have to be restricted to graphical user interfaces.

On the topic of robots, Ramin Shamshiri et al. [23] present a comprehensive list of agricultural robots, their duties and the technologies involved in their functions. In their conclusion they mention that some of the challenges in utilizing robots for precision agriculture and digital farming lie in developing simple controls and multi-robot systems. Furthermore, they state that some form of human-robot collaboration might be necessary to solve challenges for agricultural robots that cannot yet be automated. In relation to the CaCS, this means that the role(s) of the user(s) should be the subject of further research, since it is unclear whether N human operators will be able to perform the same duties as M workers by controlling M robots, where N < M.

In the field of aviation it is common to use cognitive load as a measure of the usability of a system. Goodrich and Cummings [9] approached the question of controlling multiple military drones from a human factors perspective. Their study showed that the LOA of various duties was the deciding factor. Based on the original Sheridan-Verplank scale [24], they mapped the relation between the automation of Motion Control², Navigation³ and Mission Management⁴ and listed the maximum number of drones that can be operated at once, based on results from the research they had studied. While one paper estimated that a single operator might operate twelve drones at once, this was based on motion control and navigation being fully automated and mission management working at an LOA of 4 or 5. This is equivalent to a drone asking for permission to perform tasks while on auto-pilot. However, consider a case provided by Ramin Shamshiri et al. [23], in which a human has to mark fruits that a robot's fruit-identification function has not identified. This might be classified as an LOA of 1, since the robot would not even be aware of those fruits. According to Goodrich and Cummings, this case is also covered, and the maximum number of UAVs per operator would then be only two. In separate studies, Joseph et al. [25] and Cummings et al. [26] state that a factor in the successful operation of multiple agents is an appropriate level of trust in the automated system by the operator: too much trust means that faulty decisions are overlooked, while too little trust means that efficiency is degraded.
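The Sheridan-Verplank scale referenced above has ten levels, and the commonly cited summary of them maps naturally onto an enumeration. The Python sketch below encodes that summary; the `ui_behaviour` mapping from a level to what a CaCS-like interface would need to show is a hypothetical illustration and is not taken from Goodrich and Cummings [9] or the AFarCloud documentation.

```python
from enum import IntEnum


class LOA(IntEnum):
    """Sheridan-Verplank levels of automation (1 = fully manual, 10 = fully autonomous)."""
    NO_ASSISTANCE = 1            # human makes all decisions and takes all actions
    OFFERS_ALL_ALTERNATIVES = 2  # computer offers a complete set of alternatives
    NARROWS_ALTERNATIVES = 3     # computer narrows the selection down to a few
    SUGGESTS_ONE = 4             # computer suggests one alternative
    EXECUTES_IF_APPROVED = 5     # computer executes the suggestion if the human approves
    VETO_WINDOW = 6              # human has a restricted time to veto before execution
    EXECUTES_THEN_INFORMS = 7    # computer executes, then necessarily informs the human
    INFORMS_IF_ASKED = 8         # computer informs the human only if asked
    INFORMS_IF_IT_DECIDES = 9    # computer informs the human only if it decides to
    FULLY_AUTONOMOUS = 10        # computer decides and acts autonomously, ignoring the human


def needs_operator_input(level: LOA) -> bool:
    """True if nothing happens until the operator acts, chooses or approves."""
    return level <= LOA.EXECUTES_IF_APPROVED


def ui_behaviour(level: LOA) -> str:
    """Hypothetical mapping from a level to what the operator's interface must provide."""
    if needs_operator_input(level):
        return "wait for operator decision"
    if level == LOA.VETO_WINDOW:
        return "show countdown with veto button"
    if level == LOA.EXECUTES_THEN_INFORMS:
        return "notify operator after execution"
    return "log only"


# Mission management at LOA 5: the drone asks for permission before acting.
print(ui_behaviour(LOA(5)))  # → wait for operator decision
```

Levels 1 to 5 demand the operator's attention for every decision, which is one way to read why lower levels of automation limit how many drones a single operator can handle.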

Although faulty automated decisions cause errors, Amida et al. [27] write that 67% of UAV accidents can be traced directly or indirectly to human factors issues, and that 24% of those accidents can be directly attributed to human factors issues related to interface design and the design of ground control stations, which amounts to roughly 16% of all UAV accidents (0.67 × 0.24 ≈ 0.16). By following the ISO 9241-210 recommendations for human-centered design, Amida et al. created a new interface, which they tested on pilots, and used the NASA Task Load Index to show that human-centered design can lead to better usability in the context of GCS.

² Piloting the vehicle remotely

³ E.g. setting up routes and no-fly zones

⁴ E.g. taking photographs

Last, but not least, a research article by Schaeffer and Lindell [28] about automation in control rooms reminded me of the importance of not assuming too much about things I know little about. They began their work with the same assumption that I had, namely that monitoring automatic processes is an inherently dull job that has to be made more interesting by a designer in order to prevent accidents, but found that this assumption was far from the truth. Thanks to this reminder, I believe I saved myself from attempting to solve problems that never existed. Satisfied that this area of research was considered important enough to be pursued, and armed with more knowledge about the intended users, I set out to learn more about how to design the interface for the CaCS.

5 Problem Formulation

Existing ERP software often makes use of satellite maps and provides the ability to draw waypoints and fields on top of the map and to specify actions to be taken at those locations. Drone GCS software can operate on geospatial information such as fields and waypoints. ERP works with information concerning farm management, whereas GCS works with information concerning drone piloting. The challenge lies in merging the functionalities of these different kinds of software while keeping the information flow minimal enough that an untrained novice can still learn the system in a reasonable amount of time. As mentioned by Kernecker [1], Fountas [2] and Sorensen [5], usability issues are cited as one of the primary hindrances to the adoption of smart farming software. This means that for the CaCS to be useful to farmers, it must have a UI that enables good usability and learnability.

To address this problem, I have asked myself the question: “What domain-specific knowledge related to agriculture is important, with regard to signifiers and desired features, for developing a user interface for the CaCS, and how can human-centered design aid in making the CaCS design more learnable?”

Because the intended users are European farmers in general, while I only had access to local Swedish farmers, this work is subject to the following limitations:

1. The needs of the local farmers are only a subset of the needs of European farmers.
2. Culture and governance differ among European countries.
3. Levels of education and technology adoption differ between EU countries.

As such, I needed to weigh my own findings against existing research in the same field and to study existing ERP and GCS software.

6 Method

Since the purpose of this thesis was to develop a design document for the UI of the mission planner, dubbed the “Command and Control System”, I worked with Human-Computer Interaction methodologies as defined by ISO 9241-210 [29], which states that: “Once the need for developing a system, product or service has been identified, and the decision has been made to use human-centred development, four linked human-centred design activities shall take place during the design of any interactive system”:

1. understanding and specifying the context of use

2. specifying the user requirements

3. producing design solutions

4. evaluating the design

Clause 6 in ISO 9241-210 describes this as an iterative, non-linear process where each activity is informed by the output of the other activities. Based on my interpretation of this, I carried out the following activities, one for each of the four design activities above:

1. Performing a People, Activities, Context and Technologies (PACT) study, complemented by further research.

2. Performing interviews, researching articles and reading the documentation of the AFarCloud project.

3. Producing sketches and then low- as well as high-fidelity prototypes.

4. Discussing the sketches with my supervisors and performing ”think out loud” usability testing with the intended user group.

6.1 Sketching

Sketching and prototyping are an important part of design. Greenberg [30] explains this with an analogy to hill climbing, a kind of search optimization problem. We would like to have a great design, but we are working blindly, and an exhaustive search of the design space is infeasible since it consists of every possible combination of design choices. Our only heuristic is what our users tell us about the software. As such, we need to iterate often and adjust in reaction to their feedback; anything else would be equivalent to a random walk. After enough iterations, we should in theory find a good enough design. Sketches allow us to perform fast iterations, which gives us a better chance of finding a good design. A common problem in search optimization is “premature convergence”, meaning that we get stuck on a premature solution that we cannot escape from. In software design this can be remedied by allowing radical changes between prototypes in the early stages of development. This matters because once a design has been chosen for a final product, it generally becomes too costly to overhaul it completely. Instead, we would at that point favor small changes that are easier to implement and do not risk alienating the existing userbase.
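Greenberg's hill-climbing analogy can be made concrete with a toy search. The Python sketch below is only an illustration under stated assumptions: the one-dimensional list of design scores is invented, with a local optimum at design 3 and the global optimum at design 15. Plain hill climbing from design 0 converges prematurely, while climbing from several very different starting points (the analogue of radical changes between early prototypes) escapes the local optimum.

```python
import random
from typing import Iterable, List

# Invented "design space" for illustration only: index = design variant,
# value = how well users respond to it (higher is better).
SCORES: List[int] = [1, 2, 3, 4, 3, 2, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 9, 8, 7, 6]


def score(design: int) -> int:
    return SCORES[design]


def neighbours(design: int) -> List[int]:
    """Small, incremental changes to a design: one step left or right."""
    return [d for d in (design - 1, design + 1) if 0 <= d < len(SCORES)]


def hill_climb(start: int, max_steps: int = 1000) -> int:
    """Greedy hill climbing: keep taking the best small change while it helps."""
    current = start
    for _ in range(max_steps):
        best = max(neighbours(current), key=score)
        if score(best) <= score(current):
            break  # no small change improves the design: a (possibly local) optimum
        current = best
    return current


def multi_start_climb(starts: Iterable[int]) -> int:
    """Radical early changes: climb from several very different starting designs."""
    return max((hill_climb(s) for s in starts), key=score)


if __name__ == "__main__":
    print(hill_climb(0))  # → 3, a local optimum (the global optimum is design 15)
    rng = random.Random(42)
    starts = [rng.randrange(len(SCORES)) for _ in range(5)]
    print(multi_start_climb(starts))
```

Restarting from diverse designs is only one way to avoid premature convergence; it is used here because it mirrors the advice to allow radical changes early and small changes late.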

6.2 PACT

Having a human-centric approach to design is important for several reasons. It ensures that software fits the needs of the users instead of hypothetical needs conjured by the designer's intellect. It takes social and self-identity aspects into consideration, making sure that the user feels comfortable using and recommending the product from an ethical perspective rather than just using it out of necessity (such design also strengthens the brand of the producer of the product). It also promotes the safety and well-being of the user by considering the working environment and common requirements that the user might have. A common methodology for human-centric design is the PACT model. Using PACT, I gained further insight into the professional and personal needs of a subset of the intended users of the AFarCloud platform: for what tasks they will be using the software, where they will be using it and how it fits in with the other technology they already use. I gained important information related to this through a combination of interviews, academic research and documentation for the AFarCloud project.

6.2.1 People

The people aspect of PACT looks at the humans who will be using our software. An example of a people-focused question is:

”Mental models”:

Do farmers have conceptual models that relate to our software? For example, are they used to viewing maps of their own land for some reason? Do these maps have information or visual design that differs from our intended design?

6.2.2 Activities

To our users the software is just a tool, so it should be a good tool that helps them with their needs instead of forcing them to perform tasks that we have invented for them. For example, we could ask ourselves: ”What are they doing?”

We know what our software wants to accomplish, but what do the farmers want to accomplish? By studying activities, we can break them down into smaller components such as tasks and actions.

6.2.3 Context

The context aspect can be further broken down into subcategories. Some questions that can be asked from a contextual viewpoint are:

”What is the physical context?”

Will the planner be used both in the office and in the field? If so, should there be a ”slimmed down” mobile version?

”What is the social context?”

Can the personnel on the farm work autonomously once a mission has been planned, or will they require continuous communication?

When looking at the context there is also the issue of ergonomics: for example, is the environment noisy or quiet? A noisy environment might require louder warning signals or stronger visual cues.

6.2.4 Technologies

A modern farm already involves a lot of technology. One question that can be asked is, for example: ”Will our software need to communicate with special devices?”

For example, do they have any devices on board tractors that we will have to communicate with?

6.3 Prototyping

A prototype can be seen as a middle ground between a sketch and a finished product. The more work we put into the prototype, the better we can evaluate our design, but at the cost of time. For this reason we want to make sure that what we are prototyping is actually worth pursuing, which is why it is common to develop both low- and high-fidelity prototypes.

6.3.1 Low fidelity prototype

A low fidelity prototype is a conceptual prototype where the focus lies on the way the user interacts with it rather than representing a ready product. In my case the prototype consisted of a digital document containing representations of different states for the UI.

6.3.2 Exploratory test and Usability testing

My first intention was to evaluate the users' mental model of the software by showing them various states and asking them how they interpreted various icons and how they would go about performing certain tasks. The purpose of the exercise was to find out which icons were familiar to them and how they would interpret visual constructs such as objects grouped together by color coding or separators. This information turned out to be of great importance and made me seek out more specific information about design principles, as well as journals I had not previously considered.

6.3.3 High fidelity prototype

The purpose of the high-fidelity prototype is to simulate the Command and Control system interface as accurately as possible. The design choices that I had committed myself to were present in the high-fidelity prototype to the extent of my capabilities. The high-fidelity prototype was especially important since it was my last chance to test my design choices; any design elements added after that would be untested and purely speculative.

6.3.4 Assessment Test

By creating an interactive prototype I was able to evaluate how users would interact with a modular desktop environment in general and the implementation done with the Weifen Luo DockPanel Suite5 in particular. This was important since the decision to create a modular solution was the result of previous testing and research.

7 Design Process

The design process consisted of wireframe prototyping, a PACT analysis and the implementation of both low- and high-fidelity prototypes. Throughout the prototyping process, the design guidelines were evaluated to make sure that they were beneficial when applied to our work.

7.1 Pilot study

During the pilot study I searched for and read documentation that I believed was relevant to designing the user interface, including the following books on design:

• ”Rocket Surgery Made Easy” [31] by Steve Krug, for learning practical usability testing.

• ”Sketching User Experiences” [30] by Bill Buxton, Saul Greenberg, Sheelagh Carpendale and Nicolai Marquardt, for learning more about the design process and how to structure design work.

• ”The Design of Everyday Things” [32] by Don Norman, for learning more about how humans go about solving problems when faced with a new product.

• ”100 Things Every Designer Needs To Know About People” [33] by Susan M. Weinschenk, for practical information about how humans learn and process information.

• ”Universal Principles of Design” [34] by William Lidwell, for learning about design principles so that I could select the ones on which to base my design.

In addition to this, I read articles on drone ground control systems and multi-agent collaboration, since those subjects were central to the role of the CaCS. Because it was my understanding that project AFarCloud should be able to present information to aid users in making decisions for their farms, I also looked into commercially available Enterprise Resource Planning (ERP) software. Although the CaCS should handle UGVs in addition to UAVs, the latter are more economically viable in many use cases and also more commonplace than robots in commercial agriculture; I therefore decided to learn specifically about commercial drone GCS. For a table of surveyed ERP and GCS software, see Appendix A.

7.2 PACT analysis

7.2.1 People:

While AFarCloud has stakeholders within research groups and technology developers, the main group of users are those who manage or work on a farm. Since the Command and Control system is intended to be usable both for planning and surveying missions there were several factors to consider:

• Most users have a need for mobile solutions for some or all activities due to the nature of their jobs.

• There will be a requirement for localized language support for the user interface since the users read and write in many different languages.

• The intended users are divided into several age groups, but a majority is above the age of 55.

• There might be users with decreased eyesight due to age.

• The intended users have varying levels of computer experience, although few could be considered experts.

Due to the many differences between users, the user interface of the Command and Control system needs to provide both great flexibility and learnability in order to be useful to the majority of users. Because of this, there need to be several layers of complexity, with the more advanced layers available only to those who have the technical know-how to make sense of the information provided by the AFarCloud system. As such, the interface could benefit from letting users customize it, for example through a modular interface where panels containing specific information can be stacked on top of each other in tabs or moved across the screen. In addition to this, optional automated planning through AI assistance could be beneficial for simplifying tasks.

7.2.2 Activities:

The purpose of the Command and Control system is to provide data-driven planning and surveillance for a multitude of tasks. These are some of the aspects related to the different activities:

• Activities can have environmental prerequisites, such as time-of-day, humidity, temperature, wind conditions. In some cases tasks can even be planned based on conditions found in neighboring countries, such as the case with airborne pests.

• Activities can depend on the execution of previous activities when planning long-term missions, for example when drivers maneuver semi-autonomous tractors on public roads and then switch to auto-steering once on the field.

• Activities can involve rented equipment and machinery, making it difficult to track equipment in advance.

• In some cultures it is common to trade services with other farmers, which involves external actors which cannot be as easily scheduled due to having their own farm to take care of as well.

• There are cultural and regional differences in grazing policies, making it sometimes difficult to predict where roaming cattle might be on a given date.

• Harvesting might not be done per field, but rather require a weight quota of produce to be harvested.

These are just a few examples of the complexity of generalized farming activities. ”Farming” is only a blanket term and may involve as many specializations and levels of technical understanding as computer science. As such, planning activities at the field level is not only an NP-hard problem; one may also not know far in advance exactly which actors will be available for each activity. In fact, planning could potentially be used to decide which actors are needed, rather than using the available actors to determine the order of actions to perform.

7.2.3 Context:

The contexts under which the activities are performed are also highly individual, both depending on the nature of the farm and how the farm itself is organized. These are some of the possible contexts:

• Activities can be performed alone or in a group.

• Tractors are often noisy, making audible warnings less effective.

• When performing a mission in a tractor, coordination over cellphone is common for larger farms.

• Farmers may be specialists or have a manager position as part of a greater organizational structure.

• Farm work can vary in intensity, making seasonal workers commonplace and extending the workdays to longer than 13 hours at times.

• Farmlands can be vast, making current drone regulations a potential hindrance for efficient automated agricultural systems.

• Farming activities can take place in very different climates, some of which make the use of drones complicated due to strong winds or low temperatures.

Because the contexts in which farming activities are carried out vary so much, it is difficult to generalize solutions. For example, given the conditions in a tractor it might be desirable to deliver warnings through haptic feedback such as vibrations, or through exaggerated visual feedback, while in other cases auditory feedback might be more desirable, for example when a user in a multi-screen setup is focusing on a different screen than the one raising the alert. The organizational setup of the farm can also be a challenge when deciding what kind of data to display to the user. An advanced pilot/operator might want detailed information about actors, as seen in Figure 3, while another operator's job might be to make decisions based on the economic viability of crops/livestock/produce. Our task is to make sure the desired data is available to the person who needs it, but to hide or abstract away information that would only complicate their job and decrease the usability and learnability of the Command and Control system. Furthermore, it might not be desirable to allow every user to access every kind of data, for example because too much irrelevant information can make the interface more difficult to navigate. This is further complicated by the fact that the information a user needs can depend both on their role and on the task at hand. For example, a seasonal farmhand might need to access information about their tasks and nothing else. But if they go from a task related to caring for livestock (which might involve information about the health status of individual animals) to driving a tractor later in the day, should the livestock information remain available for the rest of the workday or only for the duration of that specialized task? There are pros and cons to either solution, and as such it is one of the questions that must be answered at a later stage of the development of the interface.

Figure 3: A screenshot taken from a demonstration of the UGCS software, showing a menu for photography settings on the left
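The open question about the seasonal farmhand can be made concrete by treating the two options as visibility policies. The following Python sketch is purely illustrative: the `Assignment` record, the information category names and the policy strings are hypothetical and not part of the AFarCloud design. It only shows how a ”task-scoped” and a ”day-scoped” policy would differ for the same worker.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Assignment:
    """Hypothetical task assignment: which information categories a task needs, and when."""
    task: str
    info_categories: FrozenSet[str]
    start: datetime
    end: datetime


def visible_categories(assignments: List[Assignment], now: datetime, policy: str) -> Set[str]:
    """Information categories a worker may see at `now` under a given policy.

    "task": only categories needed by a task that is in progress at `now`.
    "day":  categories needed by any task assigned on the same calendar day.
    """
    visible: Set[str] = set()
    for a in assignments:
        if policy == "task":
            active = a.start <= now < a.end
        elif policy == "day":
            active = a.start.date() == now.date()
        else:
            raise ValueError(f"unknown policy: {policy!r}")
        if active:
            visible |= a.info_categories
    return visible


if __name__ == "__main__":
    # Hypothetical schedule: livestock care in the morning, tractor work in the afternoon.
    schedule = [
        Assignment("feed cattle", frozenset({"livestock_health"}),
                   datetime(2019, 5, 6, 7), datetime(2019, 5, 6, 10)),
        Assignment("drive tractor", frozenset({"field_map", "tractor_route"}),
                   datetime(2019, 5, 6, 13), datetime(2019, 5, 6, 17)),
    ]
    afternoon = datetime(2019, 5, 6, 14)
    print(sorted(visible_categories(schedule, afternoon, "task")))
    # → ['field_map', 'tractor_route']
    print(sorted(visible_categories(schedule, afternoon, "day")))
    # → ['field_map', 'livestock_health', 'tractor_route']
```

Keeping the policy as an explicit parameter reflects that the choice is still open: the same assignment data can be filtered either way once the question has been answered.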

7.2.4 Technology:

Farming involves tools of varying technological advancement. Some tools have not changed much since ancient times while others are going through rapid iterations of improvement and/or change. These are some of the technologies used in contemporary farming:

• ISOBUS is a standard protocol for onboard computer systems used in tractors, supported by nearly one thousand manufacturers. ISOBUS terminals are often small and robust.

• Headsets that isolate the user from the noise of the tractor's engine are sometimes used for communicating in the field.

• Tractors in themselves are meant to pull, push or carry attachments. The attachments a tractor is equipped with determines its role.

• For remote farms Low-Powered Wide-Area Networks (LPWAN) are suitable due to their low energy consumption which makes them useful for connected field sensors and other battery operated objects.

There are great differences in technological equipment between farms. While ISOBUS terminals such as the Mueller BASIC terminal can be retrofitted into older tractors for roughly €1,000, a new high-tech tractor such as the Fendt 1050 Vario can easily cost over €300,000. Similarly, there is a big difference between a mechanical milking machine and a computerized, data-gathering milking robot. The former is attached to the cow by a worker and has to be maintained by the same worker, while the latter lets the cow “milk itself” in an automatic booth that cleans itself afterwards, logs information about the visit and updates the cow's records. In the end, small farms generally do not produce enough goods to make the most modern equipment cost-efficient. As such, since AFarCloud has small to medium-sized businesses as its intended user group, there have to be solutions both for those who can afford expensive robots and need to improve the efficiency of their workflow and for those who need smart, small-scale solutions at a low cost.

7.3 Sketching

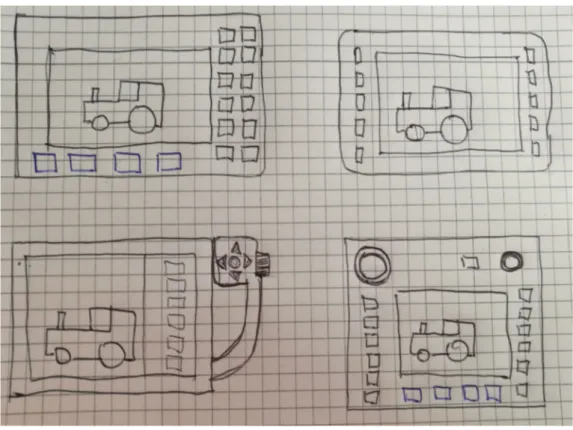

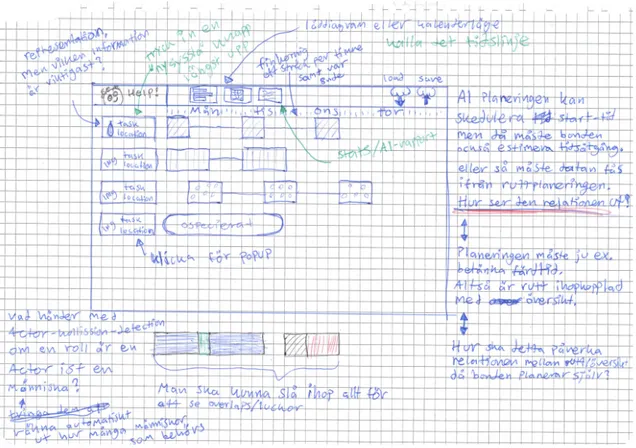

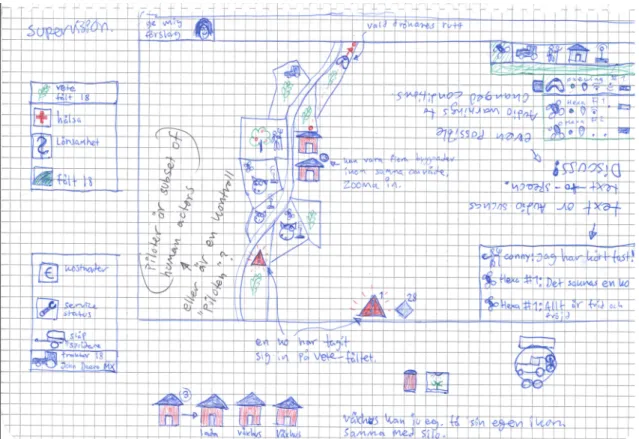

My design space began quite broad, yet I felt as if I was missing information. Putting my thoughts and ideas down on paper made it easier to visualize how they could be implemented, and how to design for various hardware such as ISOBUS terminals, desktop computers, smartphones and tablets. This phase of the design process is called divergence. While sketching is an activity I engaged in throughout the entire process, during this phase it was also my only means of visual design. Some sketches from before the first prototype can be seen in figures 4, 5 and 6.

Figure 4: A sketch imagining a three screen workstation and the problem of simultaneous planning and supervision

The sketches above represent quick iterations that allowed me to get a clearer picture of what an interface would look like once placed on a screen. For example, in figure 4 I imagined that the windows projected on the various screens could be controlled by a panel listing the screens, represented by icons looking like monitors, phones or terminals. This would allow a user to drag icons representing subroutines such as schedules, inventory or maps onto that panel and in that way change what was visualized on those devices.

During this phase there were many uncertainties that I could not even begin to resolve, such as whether a task should belong to a person or a machine. There were rational reasons for having a task belong to a machine: the machine has the software and hardware through which the task can be completed (and logged), so if, for example, two workers exchanged tasks, the system would log progress based on the machines regardless of who operated them. However, the users favored a model that focused on the humans carrying out the tasks with the help of machines.
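The human-centred model the users preferred does not necessarily lose the logging advantage of the machine-centred one. As a hypothetical Python sketch (the `Task` and `LogEntry` types and their fields are illustrative assumptions, not part of the CaCS), a task can be owned by a person while every log entry records both the worker and the machine used:

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class LogEntry:
    worker: str
    machine: Optional[str]  # None for manual work without a machine
    progress: float         # fraction of the task completed in this entry


@dataclass
class Task:
    """Hypothetical task owned by a person; machines are recorded per log entry."""
    name: str
    assignee: str
    log: List[LogEntry] = field(default_factory=list)

    def record(self, machine: Optional[str], progress: float) -> None:
        self.log.append(LogEntry(self.assignee, machine, progress))

    def reassign(self, new_assignee: str) -> None:
        # Earlier log entries keep the worker who actually did the work.
        self.assignee = new_assignee

    def progress(self) -> float:
        return min(1.0, sum(entry.progress for entry in self.log))


if __name__ == "__main__":
    task = Task("plough field 3", "Anna")
    task.record("Tractor A", 0.5)
    task.reassign("Erik")  # two workers exchange tasks mid-way
    task.record("Tractor B", 0.5)
    print(task.progress())  # → 1.0
```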

7.4 Additional research

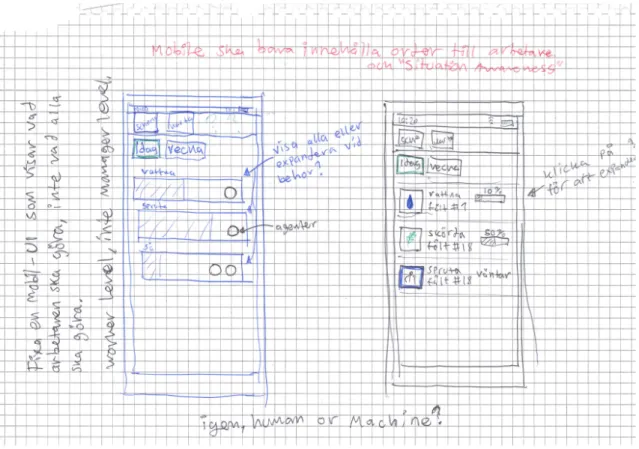

Before I began production of the first low fidelity prototype, I met with my supervisor to get approval and explain my decisions. At this point I explained that one of my ideas was to have an AI tool motivate its automatic planning suggestions to the user. One basis for this was that Kernecker et al. [1] had interviewed experts from countries with both high and low adoption of Smart Farming Techniques, and concluded that those from low-adoption countries had problems understanding the software at all, while those from high-adoption countries had problems interpreting the data (the latter is also supported by Wolfert et al. [35] in their article ”Big Data in Smart Farming - A Review”). This led me to think that those problems could not be solved by simplified text within the interface alone; any communication between the DSS and the human would need to be translated into a form the user can understand. This led to a suggestion to find out more about Explainable Artificial Intelligence, Adaptive Autonomy and Collaborative Robotics. In addition to this, I looked briefly into ”chatbots” as a possible means of communicating with the Decision Support System that I had come to understand would be part of AFarCloud. The sketches that I based my first low fidelity prototype on can be seen below in figures 7 and 8.

Figure 7: A sketch showing ideas for a map layout and various menus

Figure 8: A sketch showing ideas for a map layout as well as warnings and detailed information windows

7.5 First low fidelity prototype

For my first low fidelity prototype I wanted to explore both high level planning, as seen in figure 9, and low level planning as seen in figure 10.

The software I had evaluated had its navigation menus either at the top or on the left side. Some used pure text while others used a combination of icons and text. I decided to use a combination of icons and descriptive text based on the design principle of ”recognition over recall”, which states that it is easier for a person to navigate an interface by recognizing images and layouts than by recalling the meaning of commands. Since the CaCS will involve robots and drones, introducing these subjects may also introduce unfamiliar drone- and robot-specific domain language and imagery. It is therefore better to give users descriptions they can relate to, together with generic images, since they might otherwise have to actively parse the meaning of this new terminology, which would worsen the learnability of the CaCS.

7.6 First Evaluation

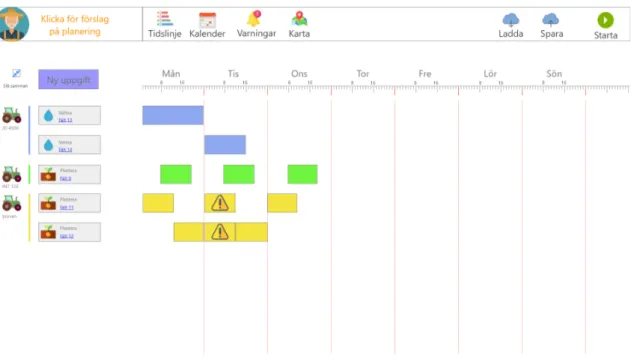

Both low fidelity tests focused on evaluating the mental models and domain-specific language of the farmers. To do this I showed them interactive slides that I had created in Adobe XD and asked them to guess the purpose or meaning of various symbols and figures. Some examples can be seen in figure 11 and figure 12 below.

My intent had been to color-code the schedules associated with the respective tractors, but during the user testing I found out that the user associated the colors themselves with the tasks, such as blue for watering and green and yellow for planting. I had not consciously chosen the colors to match the symbols, but the user read meaning into the colors themselves. I decided to keep the colors for the second prototype to verify whether different users would interpret them similarly.

When asked to identify the meaning of the triangular symbols on the yellow boxes, the user concluded that there could be something wrong with the field, or something that the driver must pay extra attention to while performing the task. The icons were in fact meant to signify that one machine had been issued tasks on two different fields at the same time. The script used for this user testing can be seen in Appendix B.

Figure 13: An image depicting fields on a farm, populated by icons, and with a visualization of a route for a tractor

Several scenes depicted a map overview of the farm. This scene in particular showed the route of a tractor. The user identified the visual information as a route but commented that it was unnaturally straight, and furthermore expressed that he (when working on other people's farms) would want to see the actual entrances to the field marked on the map, as they can be hard to spot. This was unexpected information that I found valuable.

Furthermore, in previous scenes the user had initially identified the symbols in the top right corner of the map as a drag-and-drop feature for placing symbols on the map, whereas I intended the feature for selecting and filtering information related to actors and types of fields. I had previously considered a placement feature but discarded it: the map and its filters need to be available while overseeing a mission, so supporting placement as well would require either two different map views or the ability to toggle between supervision and placement within the map view, both of which I considered potentially confusing to the user.

Other comments on the map overview included that fields should be color coded in order to be more easily identified, and that fields in reality are often divided into plots for growing different crops. It was also mentioned that many tasks on a farm have a short duration and are not field-specific but rather location-specific, such as moving a hay bale from one location to another. I also asked the user what sort of information he thought was valuable when viewing the specific tractors in the top-right corner. The user replied that the Wi-Fi symbol felt useless and that the function of the tractor and the person driving it would be more helpful. I also concluded that I had made parts of the design too abstract, such as the color-coding of the tractors' schedules, and decided to make the information clearer and more informative.

7.7 Second low fidelity prototype

The second prototype mainly incorporated changes based on the feedback from the first evaluation. In addition, I read some articles from the Nielsen Norman Group, which I consider a credible source due to the scientific contributions of Don Norman and Jakob Nielsen in the field of User Experience. The articles that influenced my work the most were one that led me to the OECD report on adult skills [36], one on designing for a broad userbase [37] and one on usability testing [38].

7.8 Second Evaluation

Because of the addition and removal of some icons and visual features, small changes were made to the script. Another difference in the methodology was that this evaluation was performed with two users at once, since they were a couple who managed the farm together and stated that in a real-world situation they would go through any new farm software together. In figure 14 I verified that the icons used to signify the scheduling conflict were not enough by themselves to convey the nature of the conflict; in fact, they seemed to confuse the users. They must either be accompanied by text, like everything else, or at least display additional information when the user hovers over them with the mouse cursor. Figure 15 shows another case of miscommunication through icons: when asked the purpose of the padlock symbols, one user answered that they prevented others from entering or changing information in those panels, while the other user was confused and opted out of guessing. My intention had been to communicate that a panel could be locked to avoid closing or minimizing it by mistake.

Figure 14: An image depicting color-coded schedules and icons representing a time-conflict, second version

In an open discussion about smart farming before the evaluation, the users mentioned that one of the limiting factors for getting a drone was the laws and regulations, which would limit its usefulness because of the size of their farms. Furthermore, they commented that when animals are involved it is very difficult to make plans in advance, since something could happen that completely changes the priorities of the day, for example a cow getting hurt while grazing or an animal catching an illness. The script used for this user testing can be seen in Appendix B.

7.9 Final research

While analyzing the results of the second evaluation, I believed that the overall design direction pointed towards some sort of modular interface, to suit the unique structure of each farm and to avoid cluttering the workspace of the CaCS. However, I wanted to do some final research to see whether I could find evidence for or against such a decision, as it would be a big change compared to the current design. In my research I focused on what had been done specifically with agricultural ERP, and this time I found a different term that I had overlooked: ”Farm Management Information System”. Once I searched for the phrase in the school's library system, I found a wealth of information and ended up selecting several of those articles for my thesis. In these newfound articles I found many similar projects, among them other EU projects, which supported the ideas of simplicity [1], modular interfaces [5] and user customization [2] for the UI itself. Other information that I found useful was an evaluation of time and economic savings, which spoke strongly in favor of the AFarCloud project as a whole, and ethnographic research that challenged my perspective of the prospective farmer. Statistically speaking, 32% of European farmers are aged 65 or older, while roughly 11% are younger than 40 [12]. My initial assumption had been that the software should cater strongly to the elderly, but given the new information about the likelihood of older farmers adopting computers [10], I decided to loosen my self-imposed restrictions on accessibility for the elderly, while not abandoning them completely.

7.10 High-fidelity prototype

The high-fidelity prototype was based on existing code, and as such I had to divide my time between studying the existing codebase and implementing my changes within the framework at hand. Doing so allowed me to create features that I would otherwise not have been able to create within the limited time frame.

The interface uses a library called Weifen Luo DockPanels [39], which allowed me to create drag-and-droppable panels which can be stacked on top of each other or made to fit specific regions of the screen. For a description of the interface see figure 16.

Figure 16: A screenshot of the user interface of the high-fidelity prototype, with notes written in red text

A further explanation of the notes made in figure 16:

| Terminology | Description |
|---|---|
| Menu Strip | A Windows menu where every function available to the user is found, ordered into different categories. |
| Main Toolbar | A panel where common functions are found and represented by icons for quick access. |
| Panel | A surface on which controls and displays are fixed. |
| Tab | Holds a description of a panel; commonly used to navigate when more than one panel occupies the same space. |
| Transition Area Picker | An abstract organization tool that places a dragged⁶ panel in a position relative to the targeted⁷ panel. |
| Transitioning Area | The area that becomes highlighted to preview where a dragged panel will end up, based on which Transition Area Picker element it is being dragged over. |

Table 3: A table of interface terminology occurring in this section
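To illustrate how a Transition Area Picker can map the element under the pointer to a Transitioning Area, here is a minimal Python sketch. It does not reproduce the Weifen Luo DockPanel Suite (a C# library) or its API; the `Rect` type, the element names and the rule that a side drop takes half of the target panel are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: int
    y: int
    width: int
    height: int


def transitioning_area(target: Rect, picker_element: str) -> Rect:
    """Preview rectangle for a panel dragged over one element of the picker.

    Assumption: side elements dock the panel into half of the target panel;
    the centre element stacks it as a tab occupying the same space.
    """
    half_w, half_h = target.width // 2, target.height // 2
    if picker_element == "left":
        return Rect(target.x, target.y, half_w, target.height)
    if picker_element == "right":
        return Rect(target.x + target.width - half_w, target.y, half_w, target.height)
    if picker_element == "top":
        return Rect(target.x, target.y, target.width, half_h)
    if picker_element == "bottom":
        return Rect(target.x, target.y + target.height - half_h, target.width, half_h)
    if picker_element == "center":
        return target  # stacked as a new tab in the same space
    raise ValueError(f"unknown picker element: {picker_element!r}")


if __name__ == "__main__":
    map_panel = Rect(0, 0, 800, 600)
    print(transitioning_area(map_panel, "right"))
    # → Rect(x=400, y=0, width=400, height=600)
```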

In the final version of this prototype, I decided to keep the Main Toolbar for ease of access. Its function is to open or focus panels that have been closed, while pressing the ”ALT” key brings up a Menu Strip, which could be reserved for advanced users or used to add further customization to the Main Toolbar or the panels being shown. The purpose of this prototype was to test how well users could navigate among several panels, whether they would take the initiative to move the panels to suit their personal taste, and whether they could recover from errors such as accidentally hiding or closing a panel.
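The error-recovery behaviour described above, where the Main Toolbar reopens or focuses a panel, can be sketched as a small state model. This is a hypothetical Python illustration, not the prototype's code: the panel states, the `Workspace` class, the default dock name and the idea of remembering a closed panel's dock position are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Workspace:
    """Hypothetical model of dockable panels; states are "open", "hidden" or "closed"."""
    state: Dict[str, str] = field(default_factory=dict)
    dock: Dict[str, str] = field(default_factory=dict)  # last dock position (assumption: kept on close)
    focused: Optional[str] = None

    def open(self, panel: str, dock: str) -> None:
        self.state[panel] = "open"
        self.dock[panel] = dock
        self.focused = panel

    def hide(self, panel: str) -> None:
        self.state[panel] = "hidden"
        if self.focused == panel:
            self.focused = None

    def close(self, panel: str) -> None:
        self.state[panel] = "closed"  # the remembered dock position is deliberately kept
        if self.focused == panel:
            self.focused = None

    def toolbar_click(self, panel: str, default_dock: str = "document") -> None:
        """Main Toolbar button: bring back a closed or hidden panel, then focus it."""
        if self.state.get(panel) != "open":
            self.state[panel] = "open"
            self.dock.setdefault(panel, default_dock)
        self.focused = panel


if __name__ == "__main__":
    ws = Workspace()
    ws.open("Karta", "right")
    ws.close("Karta")          # the user closes the map panel by mistake
    ws.toolbar_click("Karta")  # one click restores it where it was
    print(ws.state["Karta"], ws.dock["Karta"], ws.focused)  # → open right Karta
```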

⁶ As in drag-and-drop functionality.

7.11 Final Evaluation

The high-fidelity prototype was evaluated with four different users, and each user performed the test twice, with roughly one hour between tests, in order to see how much they could remember from the first session. The test consisted of three parts: identifying the meaning of buttons and icons, rearranging the docking panels and recovering from errors. See figure 16 for a brief description of the user interface and figure 17 below for an image of the initial state of the high-fidelity prototype.

7.11.1 First part: Mental models

In the first part, I evaluated the users' mental models of the content of three of the docking panels: "Inventarie" (Inventory), "Detaljerad Information" (Detailed Information) and "Karta" (Map). These are the results of the first test:

• 4 out of 4 users identified the intended purpose of the "Inventarie" panel as a list/tree of resources.

• 3 out of 4 users identified the intended purpose of the "Detaljerad Information" panel; 1 user identified it as a "Help" panel.

• 4 out of 4 users understood the intended purpose of the "Karta" panel.

However, when the users were asked to identify the purposes of the icons in the map-interaction panel, shown in figure 18, the results revealed poor usability in the design. With 4 users and 7 icons per test, there were 28 cases in total. Of those 28 cases, only 7 were correctly identified, three of them by a single user.

These are the results of the second test:

• 4 out of 4 users identified the intended purpose of the "Inventarie" panel as a list/tree of resources.

• 4 out of 4 users identified the intended purpose of the "Detaljerad Information" panel.

• 4 out of 4 users understood the intended purpose of the "Karta" panel.

In the second test, 23 out of 28 cases were correctly identified. Of the 5 incorrect answers, 4 could be attributed to uncertainty about the functions of the view buttons at the bottom of the map-interaction panel, shown in figure 18.
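The improvement between the two sessions can be expressed as the share of correctly identified cases out of the 28 user/icon combinations. The short Python sketch restates only the counts reported above (7 and 23 correct cases); the function and constant names are illustrative. It yields 25 % for the first test and about 82 % for the second.

```python
from fractions import Fraction

# Counts from the icon-identification task (figure 18):
# 4 users x 7 icons = 28 cases per test session.
USERS = 4
ICONS = 7
CORRECT = {"first test": 7, "second test": 23}


def identification_rate(correct: int, users: int = USERS, icons: int = ICONS) -> Fraction:
    """Share of correctly identified user/icon cases in one session."""
    cases = users * icons
    if not 0 <= correct <= cases:
        raise ValueError(f"correct must be between 0 and {cases}")
    return Fraction(correct, cases)


if __name__ == "__main__":
    for session, correct in CORRECT.items():
        rate = identification_rate(correct)
        print(f"{session}: {correct}/{USERS * ICONS} = {float(rate):.1%}")
```

With only four users, these percentages describe this small sample rather than a general success rate.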

7.11.2 Second part: Customization and moving panels

In the second part, I evaluated how the users interacted with the movable panels when asked to perform specific tasks.

These are the results of the first test:

The first task was to identify the panel named "Fält" (Field). 3 out of 4 users instead pointed to the text "Fält" in the "Inventarie" panel. This was most likely because they did not share my mental model of programs or interface design. Although this was of minor importance for the evaluation itself, it was a good reminder to always communicate with users in a language they understand.

While dragging the "Fält" panel on top of the "Detaljerad Information" panel, the users were asked to stop, keep holding the mouse button, and describe what they thought the function of the Transitioning Area Picker that appeared was. Two of the users, A and B, explained it in terms of a "drag and drop" function, and the same users were able to guess that it had to do with where the panel would be positioned. User A also recognized that the highlighted area (the Transitioning Area) of the target panel indicated where the dragged panel would end up.

When asked to move the same panel again and place it so that it would share the space of the "Karta" panel and be placed on top, user A did so in the intended manner. User D had considerable difficulty performing the task but managed to complete it.

These are the results of the second test:

3 out of 4 users identified the panel named "Fält"; the fourth user pointed to the text "Fält" in the "Inventarie" panel.

When asked to describe the function of the Transitioning Area Picker, 3 out of 4 users could correctly describe its general purpose. Two users now recognized that the highlighted area of the target panel indicated where the dragged panel would end up.

When asked to perform the third task, two users managed to do so on the first attempt. User D still struggled but managed to complete the task.

7.11.3 Third part: Recovering from errors

This part evaluated whether and how the users could restore the appearance of the interface after closing a panel or pressing its "auto-hide" button.

These are the results of the first test:

When asked to restore a closed "Karta" panel, 3 out of 4 users identified the map symbol on the Main Toolbar as the easiest way to do so. The fourth user stated that the keyboard shortcut "Ctrl + Z" should have restored the panel, but also identified the map symbol on the Main Toolbar as a viable option.

When asked to identify what happened when they pressed the "ALT" key, none of the users associated it with displaying or navigating a Windows Menu Strip, as described in the Windows design guidelines for keyboard navigation [40]. All users had difficulty identifying what had changed on the screen. Once the Menu Strip had been identified, 3 out of 4 users picked the intended option on the first attempt. When asked about their mental model of the "auto-hide" button, 2 out of 4 users stated that it would prevent them from closing the panel. Although the intended action was to hide the panel, I had the same mental model the first time I saw the button.

When asked to press the "auto-hide" button of the "Inventarie" panel, one of the users correctly recognized that the panel had become hidden, while the others thought it had been closed. Therefore, when asked to restore the panel, only the user who had recognized that it was hidden actively looked for a new way to restore it, while the others turned to the Main Toolbar or the Menu Strip.

When asked where on the screen the tab of the hidden panel would appear if the "Planering" (Planning) panel were hidden, 3 out of 4 users expected it to be placed either at the bottom or in the lower left corner of the window. The fourth user believed that it would be placed in the same position as the hidden "Inventarie" panel.

These are the results of the second test:

When asked to restore a closed map panel, 3 out of 4 users used the Main Toolbar; the last user used the Menu Strip.

When asked how to open the Menu Strip, 3 out of 4 users pointed to the "ALT" key. This task differed from the one in the first test: its purpose was instead to test how the users interacted with the keyboard. The fourth user could not identify the "ALT" key at first but recognized that it was "one of the bottom left keys".

When asked to restore the hidden "Inventarie" panel, 2 out of 4 users remembered the procedure from the first session. The remaining two users had forgotten that, after focusing the hidden panel, they had to press the "auto-hide" button again in order to restore it.
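The recovery procedures tested in this part can be summarised as a small state machine for a single docking panel. This is a hedged sketch: the states, actions and transition table (`PanelState`, `Action`, `TRANSITIONS`, `step`, `run`) are hypothetical, and the assumption that using the Main Toolbar on an auto-hidden panel slides it out (rather than docking it) is not documented behaviour of the prototype. The sketch captures the step that two users forgot: focusing a hidden panel only shows it temporarily, and a second press of the "auto-hide" button is needed to dock it again.

```python
from enum import Enum, auto
from typing import Iterable


class PanelState(Enum):
    DOCKED = auto()    # visible and pinned in the layout
    HIDDEN = auto()    # auto-hidden: collapsed to a tab at the window edge
    SLID_OUT = auto()  # auto-hidden panel temporarily shown after focusing its tab
    CLOSED = auto()    # removed from the layout


class Action(Enum):
    CLOSE = auto()             # the panel's close button
    TOGGLE_AUTO_HIDE = auto()  # the panel's "auto-hide" button
    FOCUS_TAB = auto()         # clicking the tab of a hidden panel
    LOSE_FOCUS = auto()        # clicking somewhere else in the window
    TOOLBAR = auto()           # the panel's icon on the Main Toolbar


# Hypothetical transition table; (state, action) pairs not listed leave the state unchanged.
TRANSITIONS = {
    (PanelState.DOCKED, Action.TOGGLE_AUTO_HIDE): PanelState.HIDDEN,
    (PanelState.HIDDEN, Action.FOCUS_TAB): PanelState.SLID_OUT,
    (PanelState.HIDDEN, Action.TOOLBAR): PanelState.SLID_OUT,  # assumption
    (PanelState.SLID_OUT, Action.TOGGLE_AUTO_HIDE): PanelState.DOCKED,
    (PanelState.SLID_OUT, Action.LOSE_FOCUS): PanelState.HIDDEN,
    (PanelState.CLOSED, Action.TOOLBAR): PanelState.DOCKED,
}


def step(state: PanelState, action: Action) -> PanelState:
    """Return the panel state after one user action."""
    if action is Action.CLOSE and state is not PanelState.CLOSED:
        return PanelState.CLOSED
    return TRANSITIONS.get((state, action), state)


def run(actions: Iterable[Action], state: PanelState = PanelState.DOCKED) -> PanelState:
    """Apply a sequence of user actions, starting from a docked panel by default."""
    for action in actions:
        state = step(state, action)
    return state


if __name__ == "__main__":
    # Restoring an auto-hidden panel: focus its tab, then press "auto-hide" again.
    restored = run([Action.TOGGLE_AUTO_HIDE, Action.FOCUS_TAB, Action.TOGGLE_AUTO_HIDE])
    # Forgetting the second press leaves the panel hidden once focus moves elsewhere.
    forgotten = run([Action.TOGGLE_AUTO_HIDE, Action.FOCUS_TAB, Action.LOSE_FOCUS])
    print(restored, forgotten)
```

Modelling the panel this way makes the asymmetry visible: a closed panel returns with a single Main Toolbar click, whereas a hidden panel requires two separate actions, which is consistent with the users who forgot the second step.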

All users identified where the tab would appear if the "Planering" panel were hidden, either its exact position or its general area.

7.11.4 Further comments

One user asked whether livestock could be removed from the inventory if it was of no use to them.

Three users commented that the "selection button" in the map-interaction panel seemed superfluous, given that they could already click on objects on the map.

Every user was positive about the movable panels, stating that they appreciated being able to customize the appearance of the program.

The tested version of the software had English descriptions in its Menu Strip, unlike the Swedish labels used elsewhere in the interface, which could be considered to invalidate the results of the task that involved it.

![Figure 1: Concept of management information systems, by Sorensen [7]](https://thumb-eu.123doks.com/thumbv2/5dokorg/4543026.115515/10.892.169.707.143.491/figure-concept-management-information-systems-sorensen.webp)