Västerås, Sweden

Thesis for the Degree of Master of Science in Computer Science with Specialization in Software Engineering 15.0 credits

A SYSTEMATIC MAPPING STUDY ON THE USE OF SOFTWARE BASED AI ALGORITHMS TO IMPLEMENT SAFETY USING COMMUNICATION TECHNOLOGIES

Vlasjov Prifti

vpi18001@student.mdh.se

Examiner: Federico Ciccozzi

Mälardalen University, Västerås, Sweden

Supervisor: Sara Abbaspour Asadollah

Mälardalen University, Västerås, Sweden

Company supervisors: Rafia Inam, Alberto Hata

Ericsson, Stockholm, Sweden

Abstract

The recent development of Artificial Intelligence (AI) has greatly increased the interest of researchers and practitioners in applying its techniques and software based AI algorithms to everyday life. Domains such as automotive, health care and aerospace are benefiting from the use of AI. One of the most important aspects that technology aims to deliver is safety. Thus, efforts to use AI techniques to address safety issues are currently progressing.

Communication technologies have been an essential part of society for many years. Cellular and non-cellular communication are embracing AI as well, since they face the same problems, such as observing environmental variations, learning and planning. Furthermore, many software based AI algorithms are trained in the cloud, where these communication technologies play a vital role.

This thesis investigates the existing research on the use of software based AI algorithms to implement safety using different communication technologies. For this purpose, a systematic mapping study is conducted to summarize recent publication trends and current gaps in the field. The outcomes of this study help researchers by presenting new challenges for further research, and help practitioners find new methods or tools to apply in industry.

Table of Contents

1 Introduction

1.1 Motivation

1.2 Problem Formulation

1.3 Expected Outcome

1.4 Thesis outline

2 Background and related work

2.1 Safety in Software Engineering

2.2 AI for Software Engineering

2.2.1 Artificial Intelligence

2.2.2 Machine Learning

2.3 Communication technologies

2.3.1 Telecommunications and cellular communication

2.3.2 Non-cellular communication

2.4 Related Work

3 Method

3.1 Definition of research questions

3.2 Identification of search string and source selection

3.2.1 Search string

3.2.2 Source selection

3.3 Performing the search

3.4 Study Selection Criteria

3.4.1 Inclusion Criteria

3.4.2 Exclusion Criteria

3.5 Performing the selection

3.6 Classification schemes definition

3.7 Data extraction

3.8 Data analysis

3.9 Threats to validity

4 Results of the field map and discussion

5 Conclusions and future work

5.1 Future work

References

Appendix A Primary studies

Appendix B Classification of primary studies

List of Figures

1 Workflow of the research method process

2 Workflow of the search and selection process

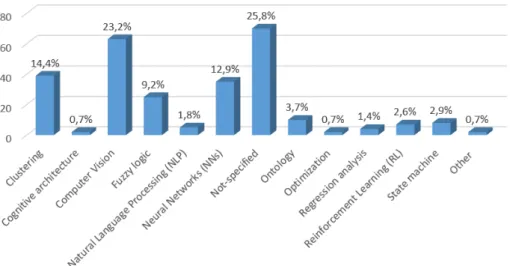

3 Distribution of studies for software based AI algorithms

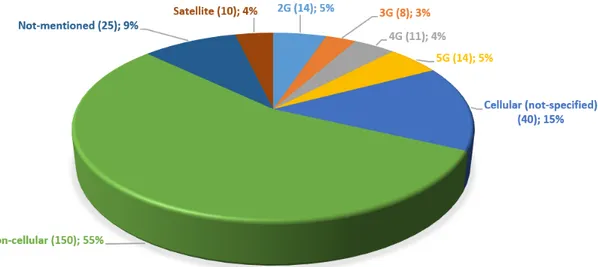

4 Distribution of studies for communication technologies

5 Distribution of studies for application domains

6 The relation between categories of communication technologies, software based AI algorithms and number of relevant publications

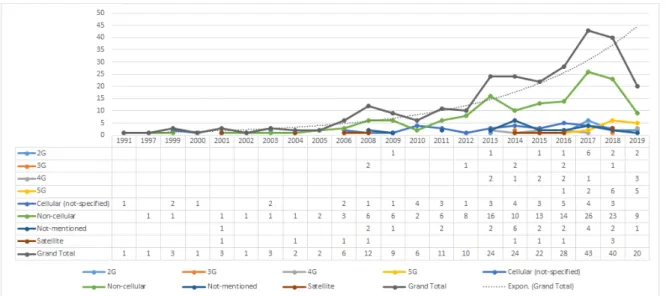

7 Distribution of publications with respect of time regarding the communication technologies

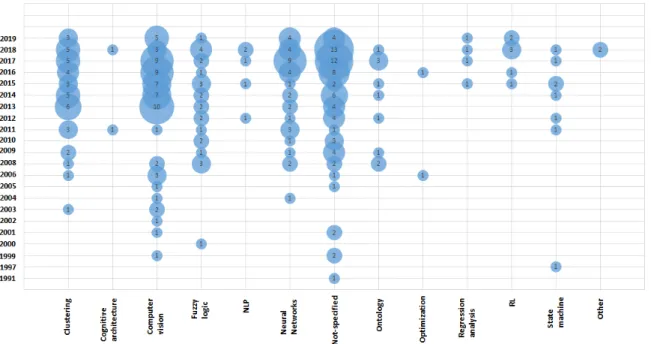

8 The relation between categories of software based AI algorithms, year of publications and number of relevant publications

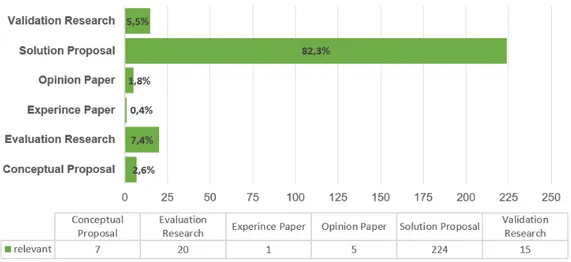

9 Distribution of studies regarding the types of research contribution

10 Distribution of studies regarding the research types

List of Tables

1 Composition of search string

2 Search string

3 Search in the digital libraries

4 Search results

5 Data extraction form

6 Primary studies regarding the communication technologies classification

7 Primary studies regarding the software based AI algorithms classification

1 Introduction

One of the latest trends in the software industry is making Artificial Intelligence (AI) an integral part of Software Engineering (SE). AI is the science of making machines able to reach the same level of intelligence as humans [1]. By combining a set of learning and problem-solving skills, the machine together with the human mind can achieve the desired result when performing an activity. Recent real-world results in this field have greatly increased software engineers' attention towards AI. Some activities performed by software require human-like capabilities, which is exactly what AI tries to provide. This is why the adoption of AI techniques in SE seems imminent. But what good is this development without a visible impact on people?

With the major advances in AI and the smart solutions they enable, humankind is trying to apply AI algorithms in different domains to improve everyday life [2]. This improvement would be of little value if safety were not taken into consideration. Therefore, AI is increasingly used as a tool to address safety issues.

According to researchers, one of the factors that has boosted the demand for AI and machine learning (ML) algorithms is the rapid development of the industrial Internet of Things (iIoT) [3]. IoT is a very broad topic, but in this context it is important to highlight that iIoT systems work with large amounts of data, which can be processed by AI- or ML-driven techniques. Turning to communication networks, cellular and non-cellular communication have improved considerably over the years and become an irreplaceable part of society. More recently, with attention shifting towards intelligent communication networks, AI/ML algorithms are required to handle large amounts of data at the network and user level so that providers can offer higher quality and a wider variety of services [4]. Furthermore, many of these algorithms and techniques are trained in the cloud and sometimes executed from there, where cellular networks play an important role.

1.1 Motivation

Every day, software engineers face complex problems when designing, implementing, building and finally testing software systems. In facing these challenges, software engineers have benefited greatly from AI, as their work is assisted by methods and algorithms emerging from the AI community. In practice, the most relevant software based AI algorithms used to solve software issues fall into three categories: fuzzy and probabilistic reasoning; computational search and optimization; and classification, learning and prediction [5].

On the one hand, communication technologies are increasingly adopting AI. Some of the challenges in cellular networks, such as observing environmental variations, learning under uncertainty or planning a response, are the same obstacles that AI can help overcome. This creates a link between AI and cellular networks, where cellular networks maintain the communication with the environment while adopting AI [2]. On the other hand, ensuring safety remains a pressing challenge that technology increasingly faces. To bring the above-mentioned concepts together, this thesis studies the application of software based AI algorithms to provide safety in different domains over communication technologies. The thesis provides a comprehensive overview and analysis of the existing literature, because it is essential to assess the current state of this problem. The main purpose is to identify the current research gaps where AI is used to provide safety using communication technologies, by identifying and categorizing the types of software based AI algorithms and communication technologies used. Furthermore, the potential application domains where these technologies are applied are discussed as well.

1.2 Problem Formulation

The main goal of this master thesis is to perform a detailed systematic mapping study on the use of software based AI algorithms and their applicability in ensuring safety in various real-life domains, such as health care, automotive, aerospace and manufacturing, considering the role of communication. The thesis aims to answer the research questions elaborated in Section 3.1. These answers are expected to shed light on the current state of the art and, hopefully, support future work on this topic.

1.3 Expected Outcome

By performing this systematic mapping study, several objectives are expected to be accomplished. After the final step of the thesis, analyzing the results, the detailed research questions are expected to be properly answered. Firstly, identifying the gaps in the use of software based AI algorithms and communication technologies within the field of safety is an essential objective. Secondly, another expected outcome is determining the types of software based AI algorithms and the domains where they are mostly used, together with the communication technologies. Moreover, the classification obtained from this work and the identified gaps are expected to open new challenges for researchers and practitioners. Finally, since this work is conducted in collaboration with Ericsson AB and the results will be reported back to the company, the outcome will be of practical benefit to the company.

1.4 Thesis outline

The thesis is structured as follows. Section 2 presents the background of the topic, giving a detailed overview of the major concepts used throughout the work, and ends with a review of related work for comparison. Section 3 explains the method and conducts the systematic mapping study, starting with the definition of the research questions and ending with the threats to validity. Section 4 presents the outcomes of the study alongside a discussion of each research question. Finally, Section 5 concludes the thesis and offers some insights into possible future work.

2 Background and related work

This section presents a general overview of the core notions. Safety and AI in relation to software engineering are described in detail, followed by communication technologies. The section ends with a discussion of similar works and how they compare to this thesis, composing the related work.

2.1 Safety in Software Engineering

Among the most important factors for achieving successful outcomes in different domains is establishing a considerably high degree of safety. Safety is defined as the absence of catastrophic failures affecting an environment or its users [6]. Other definitions describe safety as the degree to which accidental harm is prevented, reduced and reacted to [7]. Safety can usually be divided into three categories: health safety, property safety and environmental safety.

From a software engineering perspective, safety is a system property [8]. Numerous safety-critical systems depend on software to achieve their goals. These systems span many application domains, ranging from automotive and autonomous vehicles to medicine, health care, factories and, most recently, IoT. However, depending on how software influences a system, it can either improve or degrade the system's safety. To develop safe software systems, the following steps are required [9]:

• acknowledging the hazards of the system

• determining how different software components of the system impact the hazards

• defining safety requirements for the software components

• developing software engineering techniques or algorithms to satisfy the safety requirements
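The steps above can be tracked with a simple traceability record. The following Python sketch is purely illustrative and is not taken from [9]: the `Hazard` class, its fields and the `untraced_hazards` helper are hypothetical names. It covers only the first three steps, flagging hazards that are not yet linked to a software component or to a safety requirement.

```python
from dataclasses import dataclass, field


@dataclass
class Hazard:
    """Hypothetical traceability record for one system hazard (step 1)."""
    hazard_id: str
    description: str
    # Step 2: software components whose behaviour can affect this hazard.
    components: list = field(default_factory=list)
    # Step 3: safety requirements derived for those components.
    requirements: list = field(default_factory=list)


def untraced_hazards(hazards):
    """Return the IDs of hazards still missing a component link or a safety requirement."""
    return [h.hazard_id for h in hazards if not h.components or not h.requirements]


if __name__ == "__main__":
    hazards = [
        Hazard("H1", "Vehicle fails to brake for an obstacle",
               components=["obstacle_detector"],
               requirements=["SR1: brake when an obstacle is within stopping distance"]),
        Hazard("H2", "Loss of the communication link", components=["network_client"]),
    ]
    print(untraced_hazards(hazards))  # ['H2']
```

Step 4 would then attach to each requirement the technique or algorithm, for instance a software based AI algorithm, that is intended to satisfy it.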

Because of the considerable advances in AI in recent years, this master thesis considers software based AI algorithms for implementing the safety requirements.

2.2 AI for Software Engineering

AI has long been of high importance in Software Engineering, with researchers trying to find ways to apply it for many years [10]. According to [11], the main goal of AI in SE is automatic programming, where software engineers would obtain an automatically generated program that meets their needs. While this is yet to be achieved, AI techniques have proven to work in several areas of SE, particularly as software systems have become much more complex in terms of the requirements they must satisfy. Some of these applications are presented below.

Firstly, software based AI algorithms for real-world problems that are fuzzy or probabilistic have been adapted to a field of engineering called Probabilistic SE [5]. Bayesian probabilistic models are particularly useful for assessing software reliability and predicting software development effort, since they show how decisions are revised as beliefs are updated with new evidence. Another area of SE where AI is useful is Search Based Software Engineering (SBSE). The goal of SBSE is to express software engineering problems as optimisation problems, which can then be tackled with computational search. This approach works for different software phases, such as requirements, design, testing and maintenance.
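To make the SBSE idea concrete, the sketch below expresses a small test-suite selection problem as an optimisation problem and solves it with hill climbing, one of the simplest computational search techniques. The fitness function (requirements covered minus a weighted cost penalty), the `weight` value, the function names and the example data are all assumptions chosen for illustration; they are not taken from [5].

```python
import random


def fitness(selection, coverage, costs, weight=0.1):
    """Requirements covered by the selected tests minus a weighted cost penalty."""
    covered = set()
    for chosen, reqs in zip(selection, coverage):
        if chosen:
            covered |= reqs
    cost = sum(c for chosen, c in zip(selection, costs) if chosen)
    return len(covered) - weight * cost


def hill_climb(coverage, costs, iterations=200, seed=0):
    """Flip one test in or out at a time, keeping the change if fitness does not drop."""
    rng = random.Random(seed)
    current = [rng.random() < 0.5 for _ in coverage]
    best_fit = fitness(current, coverage, costs)
    for _ in range(iterations):
        candidate = current.copy()
        i = rng.randrange(len(candidate))
        candidate[i] = not candidate[i]
        cand_fit = fitness(candidate, coverage, costs)
        if cand_fit >= best_fit:  # accept improvements and sideways moves
            current, best_fit = candidate, cand_fit
    return current, best_fit


if __name__ == "__main__":
    # Hypothetical test suite: requirements covered by each test, and its execution cost.
    coverage = [{"r1", "r2"}, {"r2"}, {"r3"}, {"r1", "r3"}]
    costs = [1, 1, 1, 1]
    selection, score = hill_climb(coverage, costs)
    print(selection, round(score, 2))
```

Hill climbing is used here only because it is short; on larger problems it can get stuck in local optima, which is why SBSE also relies on techniques such as genetic algorithms and simulated annealing.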

Furthermore, software engineers use AI to help deliver a quality architecture based on requirements models [12]. AI algorithms define a goodness function over quality attributes that aid in building the architecture, including complexity, modularity, modifiability, clarity and reusability.
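One common way to build such a goodness function is a weighted combination of attribute scores. The sketch below assumes that each quality attribute has already been scored in [0, 1] with higher meaning better (so complexity is scored inversely), and that the architect supplies the weights. The function name, weights, scores and candidate names are hypothetical and do not come from [12].

```python
def goodness(scores, weights):
    """Weighted average of quality-attribute scores in [0, 1]; higher is better."""
    total_weight = sum(weights.values())
    return sum(weights[attr] * scores[attr] for attr in weights) / total_weight


if __name__ == "__main__":
    # Equal weights for the five attributes named in the text (an assumption).
    attributes = ("complexity", "modularity", "modifiability", "clarity", "reusability")
    weights = {a: 1.0 for a in attributes}
    # Hypothetical scores for two candidate architectures; a less complex design
    # receives a higher "complexity" score.
    candidates = {
        "layered": {"complexity": 0.8, "modularity": 0.6, "modifiability": 0.7,
                    "clarity": 0.9, "reusability": 0.5},
        "microservices": {"complexity": 0.4, "modularity": 0.9, "modifiability": 0.8,
                          "clarity": 0.6, "reusability": 0.6},
    }
    best = max(candidates, key=lambda name: goodness(candidates[name], weights))
    print(best)  # layered
```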

AI has recently become part of project planning as well, mainly for predicting software cost. With basic machine learning (ML) reasoning and learning-based algorithms, thorough software project predictions can be made.
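As a minimal example of learning-based prediction, the sketch below fits an ordinary least-squares line that predicts effort from software size. The single size feature (thousands of lines of code, KLOC), the person-month unit, the `fit_line` name and the data points are hypothetical; practical effort models usually use more features and far more data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    # Hypothetical past projects: size in KLOC and effort in person-months.
    sizes = [10, 20, 30, 40]
    efforts = [25, 45, 65, 85]
    slope, intercept = fit_line(sizes, efforts)
    print(slope, intercept)        # 2.0 5.0
    print(intercept + slope * 50)  # 105.0, the predicted effort for a 50 KLOC project
```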

2.2.1 Artificial Intelligence

Defining AI without first taking a look at intelligence itself would not be adequate. Historically, the study of intelligence began with the earliest philosophical inquiries in India and Greece and went on to become a main topic of computer science in the mid-1950s [13]. The numerous attempts to derive a precise definition of intelligence never reached agreement, leaving researchers to define it within the context of their respective fields.

The breakthrough in the theory of computation by John von Neumann et al. [14] pushed the limits of the time and brought computers and intelligence together through new attributes such as classification, reasoning, pattern recognition and learning from experience, which later became the fundamentals of AI techniques. Hence, AI can be defined as the science of making machines perform tasks that primarily demand human intelligence [15]. Studying the knowledge involved in performing human tasks gave researchers the chance to focus on how to represent this knowledge by creating new paradigms. However, the integral assumption that has guided AI research is that cognitive processes can be cast in terms of the theory of computation. As a field that builds upon mathematics, computer science, engineering, etc., AI has technical goals that are divided into sub-groups [16], mainly consisting of the following challenges:

• reasoning

• knowledge representation

• learning

• planning

• natural language processing

• perception

Moreover, the above-mentioned challenges have brought a set of approaches and tools aimed at shaping and modelling the problems that AI faces. The main approaches are statistical methods and computational intelligence, while the tools draw on fields ranging from mathematics and probability to economics, and include search and mathematical optimization, artificial neural networks and progress evaluation.

2.2.2 Machine Learning

According to researchers, Machine Learning (ML) is considered a subset of AI [17]. An early definition of ML comes from Arthur Samuel in 1959, who stated that ML gives computers the ability to learn without being explicitly programmed. Two of the fundamentals of AI, computational theory and pattern recognition, contributed to the emergence of ML [18]. The difference, however, is that ML extends this study towards building predictive algorithms. These algorithms learn from specific data samples, known as training data, and thereby acquire decision-making abilities (although, as explained below, this is not always the case for unsupervised learning).

ML is usually needed when it is impractical to develop conventional algorithms that perform well on a given task. Examples of such tasks include computer vision, email filtering, ranking and optical character recognition. Depending on the kind of feedback available to the learning system, ML approaches were initially divided into three categories, although a few more approaches that do not belong to any of these categories were added later [19]:

• supervised learning - the machine is given a set of examples consisting of inputs and expected outputs, and aims to learn a rule that maps inputs to outputs.

• unsupervised learning - the machine should be able to find patterns in the input data itself, without labelled examples of expected outputs.

• reinforcement learning - the machine should perform a certain task in a dynamic environment, interactively exploring the state space in order to maximize a cumulative reward.
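The unsupervised case can be illustrated with k-means clustering, which groups unlabelled points around k centroids without any expected outputs. The one-dimensional version below is a simplified sketch: the `kmeans_1d` name, the naive initialisation (the first k points), the iteration cap and the sample values are assumptions for illustration.

```python
def kmeans_1d(points, k, iterations=100):
    """Cluster 1-D points into k groups by alternating assignment and centroid update."""
    centroids = list(points[:k])  # naive initialisation: the first k points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        # Assignment step: each point joins its nearest centroid.
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if empty).
        new = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new == centroids:  # converged: assignments no longer change the centroids
            break
        centroids = new
    return centroids


if __name__ == "__main__":
    print(kmeans_1d([1, 2, 3, 10, 11, 12], k=2))  # [2.0, 11.0]
```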

ML is closely related to statistical computing and mathematical optimization: to the former because both study how to make predictions, and to the latter because it contributes methods and theory to the field. Finally, ML is also applied in data analytics, where complex predictive algorithms are used to observe trends in the data; this is widely known as predictive analytics.

2.3 Communication technologies

Communication has been one of the most important tools for humankind to exchange information. Having developed continuously through the years and split into many branches, communication is a very broad concept. Within the scope of this thesis, communication technologies such as cellular communication, non-cellular communication, satellite communication and vehicular communication are considered. By contrast, the communication between the internal components of a system, i.e., the way they interact with each other, is not considered.

2.3.1 Telecommunications and cellular communication

Telecommunications is defined as the technology that enables long-range communication [20]. Any exchange of information, including signals, messages or sounds, transmitted by optical, wired/wireless or radio systems is considered telecommunication. These transmissions are split into communication channels using so-called multiplexers. Telecommunication can be divided into mechanical and electrical communication, and has evolved from the former to the latter through the use of electrical systems. From the first human attempts to deliver messages with simple methods like smoke signals or pigeons, to the revolution of wireless communication, telecommunication has come a long way. Nowadays, telecommunications play a crucial role in many sectors of modern society; banking, sales, payments and travel are among the everyday activities that rely on them. Telecommunication networks aim to send and receive information between the user and the network through three main technologies: (1) transmission, (2) switching and (3) signaling. This information takes the form of voice or data, and users can connect to the network through multiple devices, one of which is the cellular telephone.
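Multiplexing can take several forms, for example time-division or frequency-division multiplexing. The sketch below illustrates only time-division multiplexing (TDM), in which each channel receives a fixed time slot in turn within every frame. The function names are hypothetical, and the integer samples stand in for real signal values.

```python
def tdm_multiplex(channels):
    """Interleave equal-length channels so that each gets one time slot per frame."""
    length = len(channels[0])
    if any(len(ch) != length for ch in channels):
        raise ValueError("all channels must carry the same number of samples")
    return [ch[t] for t in range(length) for ch in channels]


def tdm_demultiplex(stream, n_channels):
    """Recover each channel by taking every n-th sample, starting at its slot index."""
    return [stream[slot::n_channels] for slot in range(n_channels)]


if __name__ == "__main__":
    channels = [[1, 2], [3, 4], [5, 6]]  # three channels, two samples each
    stream = tdm_multiplex(channels)
    print(stream)                      # [1, 3, 5, 2, 4, 6]
    print(tdm_demultiplex(stream, 3))  # [[1, 2], [3, 4], [5, 6]]
```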

Satellite communication, for instance, could be considered part of telecommunication technology, since it amplifies radio telecommunication signals via a series of interconnected units called transponders [21]. Furthermore, satellite communication enables a communication channel between a transmitter and a receiver located in different parts of the planet.

Cellular networks, or mobile networks, are a modern type of telecommunication network that requires the same kind of signaling and uses wireless communication systems [22]. The network is formed by so-called cells placed in different areas, where each cell has a fixed-location transceiver (a device that receives and sends out information), also known as a base station (BS). Base stations provide the cell with the exchange of information such as data and voice. Neighboring cells do not use the same frequencies, in order to prevent interference and provide better service quality. Working together, these cells provide coverage over a wider geographical area, so that a large number of portable and fixed transceivers, such as mobile phones, laptops and tablets, can communicate with each other. Even if the transceivers are on the move between cells, they remain in the network thanks to handover between base stations. A few advantages of cellular networks are [23]:
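The rule that neighboring cells do not share frequencies can be viewed as a graph-coloring problem, with cells as vertices, neighboring cells as edges, and frequency channels as colors. The greedy sketch below is an illustrative assumption, not a description of how operators actually plan frequencies; the `assign_frequencies` name, the cell names, the adjacency map and the integer channel indices are hypothetical.

```python
def assign_frequencies(adjacency):
    """Greedily give each cell the lowest channel index not used by an assigned neighbour."""
    assignment = {}
    for cell in sorted(adjacency):
        used = {assignment[n] for n in adjacency[cell] if n in assignment}
        channel = 0
        while channel in used:
            channel += 1
        assignment[cell] = channel
    return assignment


if __name__ == "__main__":
    # Hypothetical layout: cell A borders B, C and D; B and C also border each other.
    adjacency = {"A": ["B", "C", "D"], "B": ["A", "C"], "C": ["A", "B"], "D": ["A"]}
    print(assign_frequencies(adjacency))  # {'A': 0, 'B': 1, 'C': 2, 'D': 1}
```

Cells B and D end up on the same channel because they are not neighbors, which is the frequency reuse that lets a cellular network serve a large area with a limited set of frequencies.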

• transceivers use less power due to the cell towers being close to each other

• higher capacity than a single large transmitter

Over the years, the evolution of cellular networks has been tremendous since the first wireless cellular networks appeared in the 1980s [24]. The first type of mobile communication, called the first generation (1G), provided an analogue telecommunication standard. Voice calls were analogue, while digital signalling connected the radio towers to the rest of the system.

Then came digital cellular networks: the second-generation (2G) technology called Global System for Mobile Communications (GSM). Part of GSM technology was General Packet Radio Service (GPRS), which became the most popular mobile data service, sending and receiving data at 14 kbps and 40 kbps, respectively. At the beginning of the new century, still within 2G, GSM technology was enhanced with Enhanced Data rates for GSM Evolution (EDGE), which achieved data transmission rates of up to 135 kbps.

3G was first introduced in 2001, aiming to standardize the network protocol. It provided full data service, making video/audio streaming up to four times faster than 2G. New features such as data roaming, which gives people access around the world, and video conferencing finally became available.

The first 4G standard, developed in Sweden, was influenced and enhanced by Long Term Evolution (LTE). It offers high-quality video streaming and mobile web access at speeds of up to 1 Gbps, enabling HD video and online multiplayer gaming. Moreover, the voice service over LTE, which provides better call quality, is known as Voice over LTE (VoLTE).

2.3.2 Non-cellular communication

Like cellular communication, non-cellular communication uses wireless technologies. Both are part of low-power wide-area networks (LPWANs) [25]. The main difference is that cellular communication is a standard technology used for long-range communication and provided by mobile network operators, while non-cellular communication refers to short-distance solutions that operate with radio frequencies [26]. Even though short-range communication may seem like a downgrade, its advantage is that transmission occurs at a very high bandwidth with a low power consumption rate. Non-cellular communication includes various technologies such as Wi-Fi, Bluetooth, Dedicated Short-Range Communications (DSRC) and Radio-Frequency Identification (RFID). Types of vehicular communication [27], such as vehicle-to-vehicle (V2V) communication or vehicular ad-hoc networks (VANETs), currently rely mostly on non-cellular communication, but researchers expect them to gradually adopt cellular technologies.

2.4 Related Work

At the beginning of the new century, a vast amount of literature on AI and its applicability to software engineering was published. Over the past years, however, attention has turned towards how to use software based AI algorithms in real-life problems so that humanity can benefit from the technology. The main concepts examined by this thesis appear in the literature as evaluation studies such as case studies, experiments or surveys.

Even though there is a considerable amount of work in the research area, to the best of the author's knowledge there is no literature review or systematic mapping study that fully addresses the problem, making this thesis the first attempt to perform a systematic mapping study on the use of software based AI algorithms and their influence on providing safety using cellular networks. Nevertheless, a few studies, selected here for comparison with this thesis, describe safety and the use of algorithms in some specific domains only.

To begin with, the authors of [28] perform a systematic mapping study on the existing solutions providing safety for mobile robotic software systems (MRSs), approaching the topic from a software engineering perspective. Through the systematic mapping study, they explore how safety is categorized and managed, and give an overall update on the methods and techniques that address safety in MRSs with a clear classification framework. The authors also focus on highlighting the emerging challenges of this field.

Both studies in [29] and [30] conduct systematic mapping studies within the field of healthcare, showing how AI supports medical solutions. The authors of the first study narrow their focus to the impact that a particular technique, artificial neural networks (ANNs), has on decision-making tools for treating cancer, while in the second, Joeky T Senders et al. observe the application of different ML models in neurosurgery. Both studies show that AI can enhance human decision-making in healthcare.

Finally, a systematic mapping study on safety in the automotive field is presented in [31]. The work reviews the different Convolutional Neural Network (CNN) models used to increase safety and security in modern 5G-enabled intelligent transportation systems (ITS).

3 Method

The scientific method chosen for this master thesis to perform a detailed study of the existing literature in the research area is a systematic mapping study. According to [32], a systematic mapping study aims to give a general overview of the literature on a specific research topic by evaluating the type and quantity of the published work. It concerns the part of the literature that covers the topic and where or when this literature is published [33]. Furthermore, this kind of study categorizes the relevant work within the area of interest according to a predefined classification.
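The categorization step can be pictured as tallying, for one facet of the classification scheme, how many primary studies fall into each category. In the sketch below, the `map_studies` name, the scheme and the study IDs are hypothetical placeholders, not the classification or results of this study; a study may belong to more than one category, and categories outside the predefined scheme are rejected.

```python
from collections import Counter


def map_studies(classification, scheme):
    """Count primary studies per category of a predefined classification facet."""
    counts = Counter({category: 0 for category in scheme})
    for study_id, categories in classification.items():
        for category in categories:
            if category not in scheme:
                raise ValueError(f"{study_id}: '{category}' is not in the classification scheme")
            counts[category] += 1
    return counts


if __name__ == "__main__":
    scheme = ("supervised learning", "unsupervised learning", "fuzzy logic", "computer vision")
    # Hypothetical primary studies; a study may fall into more than one category.
    classification = {
        "S1": ["supervised learning"],
        "S2": ["supervised learning", "fuzzy logic"],
        "S3": ["computer vision"],
    }
    counts = map_studies(classification, scheme)
    print(counts["supervised learning"], counts["unsupervised learning"])  # 2 0
```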

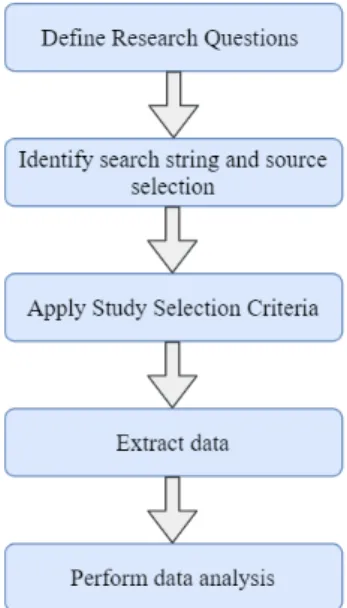

Researchers conduct systematic mapping studies following different guidelines, and each requires a rigorous process with well-defined steps. This systematic mapping study follows the guidelines in [34]. The process consists of five main steps, as displayed in Figure 1:

Figure 1: Workflow of the research method process

3.1 Definition of research questions

The goal of this systematic mapping study is to examine the use of software based AI algorithms to implement safety using communication technologies. This leads to the following research questions:

• RQ1a - What types of software based AI algorithms are used to implement safety?

• RQ1b - What kinds of communication technologies are mostly used to implement safety?

• RQ1c - What are the main application domains where these software algorithms are applied in order to provide safety?

– Objective: This three-part question aims to identify the types of software based AI algorithms, communication technologies and the domains where they are used to implement safety.

• RQ2 - What are the current research gaps in the use of AI to implement safety using communication technologies?

– Objective: The goal is to identify the gaps in the current research on the use of software based AI algorithms and communication technologies.

• RQ3a - What is the publication trend over time in terms of cellular and non-cellular communications?

• RQ3b - What is the publication trend over time in terms of software based AI algorithms?

– Objective: This two-part question aims to illustrate the current state of the topic and establish a better understanding of future trends.

• RQ4 - What type of research contributions are mainly presented in the studies?

– Objective: This research question aims to identify new methods, tools or techniques that might be further developed by researchers in this area.

• RQ5 - Which are the main research types being employed in the studies?

– Objective: This research question aims to understand the importance of the research approach and what it represents.

• RQ6 - What is the distribution of publications in terms of academic and industrial affiliation?

– Objective: The goal is to know to what extent academia and industry have published in this area and to establish a better understanding of possible joint work.

The answers to these research questions provide the outcomes of this master thesis, discussed in Section 4.

3.2 Identification of search string and source selection

This subsection discusses the identification of the search string and the selection of the database sources to which it is applied. Selecting a comprehensive search string at this stage makes it easier to perform the search and find the primary studies. As for the source selection, the main digital libraries were considered.

3.2.1 Search string

To create the search string, the Population, Intervention, Comparison and Outcomes (PICO) method proposed by Kitchenham and Charters [35] is applied. The PICO criteria for systematic mapping studies in software engineering are defined as follows:

• POPULATION: It refers to a specific role, category or application area in software engineering

• INTERVENTION: It refers to a methodology, tool or technology

• COMPARISON: It refers to a methodology, tool or technology with which the intervention is being compared

• OUTCOME: It refers to factors of importance to practitioners

For this systematic mapping study, PICO is defined as follows:

• POPULATION: Software based AI algorithms and safety

• INTERVENTION: Communication technologies

• COMPARISON: No empirical comparison is made, therefore not applicable

• OUTCOME: A classification of the primary studies, which indicates the role of software based AI algorithms into implementing safety using communication technologies

The keywords identified by this method are: software based AI algorithms, safety and communication technologies. These keywords are grouped into sets, and their synonyms and other related terms are then used to formulate the search string.

• Set 1: Scope the search for "safety"

• Set 2: Terms related to INTERVENTION are searched for

• Set 3: Terms related to the classification of the algorithms are searched for

Based on PICO and the research questions, the search string shown in Table 1 is composed. The composition uses the logical operators AND and OR, the main keywords and their synonyms, and terms from the respective fields on which the later classification is based. Note that the search string may not be applied in exactly the same form in every digital library, depending on each library's syntax.

3.2.2 Source selection

In order to find the relevant studies on this topic, four online scientific digital libraries were chosen: IEEE Xplore Digital Library², ACM Digital Library³, Scopus⁴, and Web of Science⁵. According to P. Brereton et al. [36], these libraries are valuable sources in software engineering when performing literature reviews or systematic mapping studies.

| PICO element | Search terms |
|---|---|
| POPULATION: Software based AI algorithms and safety | (safety) AND ("Artificial intelligen*" OR "Machine learning" OR "regression analysis" OR "supervis* learning" OR "unsupervis*learning" OR "clustering algorithm" OR "fuzzy logic" OR "image process*" OR "deep learning" OR "computer vision" OR "neural network" OR "augmented reality" OR "virtual reality" OR "speech recognition" OR "image recognition" OR ontology OR "state machine" OR "cognitive architecture" OR "language processing") |
| INTERVENTION: Cellular networks | (communicat* OR cellular OR 2g OR 3g OR 4g OR 5g OR gsm) |

Table 1: Composition of search string
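To illustrate how the keyword sets of Table 1 combine into the single boolean string of Table 2, the following Python sketch joins the synonyms of each set with OR and the sets with AND. The helper names are hypothetical (the thesis composed the string by hand), and the terms are copied from the tables as printed.

```python
# Hypothetical helpers mirroring the structure of the search string; illustration only.
SAFETY_TERMS = ["safety"]

# Terms copied from the tables; phrases keep their double quotes.
AI_TERMS = [
    '"Artificial intelligen*"', '"Machine learning"', '"regression analysis"',
    '"supervis* learning"', '"unsupervis*learning"', '"clustering algorithm"',
    '"fuzzy logic"', '"image process*"', '"deep learning"', '"computer vision"',
    '"neural network"', '"augmented reality"', '"virtual reality"',
    '"speech recognition"', '"image recognition"', "ontology",
    '"state machine"', '"cognitive architecture"', '"language processing"',
]

COMM_TERMS = ["communicat*", "cellular", "2g", "3g", "4g", "5g", "gsm"]


def or_group(terms):
    """Join the synonyms of one set with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_search_string(safety, ai_terms, comm_terms):
    """Combine the three OR-groups with AND, nesting them as in the final string."""
    population = f"({or_group(safety)} AND {or_group(ai_terms)})"
    return f"({population} AND {or_group(comm_terms)})"


if __name__ == "__main__":
    print(build_search_string(SAFETY_TERMS, AI_TERMS, COMM_TERMS))
```

Keeping each set as a list makes it easy to add a synonym in one place and regenerate the whole string before adapting it to each library.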

3.3 Performing the search

Derived from the PICO method explained in 3.2.1, Table 2 presents the final form of the search string. It consists of three main parts joined to each other by the logical AND, with the terms within each part joined by the logical OR.

² IEEE Xplore Digital Library [Online]. Available: https://ieeexplore.ieee.org/Xplore/home.jsp

³ ACM Digital Library [Online]. Available: https://dl.acm.org/

⁴ Scopus [Online]. Available: https://www.scopus.com/

(((safety) AND ("Artificial intelligen*" OR "Machine learning" OR "regression analysis" OR "supervis* learning" OR "unsupervis*learning" OR "clustering algorithm" OR "fuzzy logic" OR "image process*" OR "deep learning" OR "computer vision" OR "neural network" OR "augmented reality" OR "virtual reality" OR "speech recognition" OR "image recognition" OR ontology OR "state machine" OR "cognitive architecture" OR "language processing")) AND (communicat* OR cellular OR 2g OR 3g OR 4g OR 5g OR gsm))

Table 2: Search string

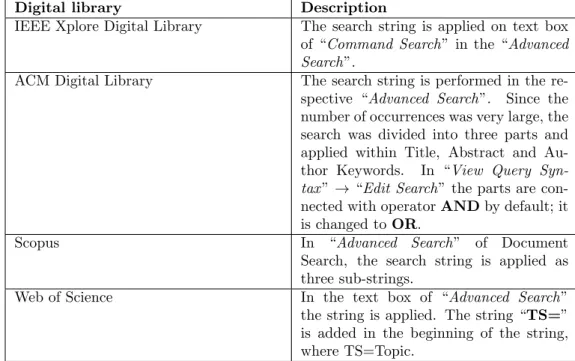

This search string is used as the input to the online digital libraries to perform the search. Typically, a small adaptation of the main search string to the syntax of each library was needed. Table 3 describes how the search string is applied in each of these digital libraries.

| Digital library | Description |
|---|---|
| IEEE Xplore Digital Library | The search string is entered in the “Command Search” text box of the “Advanced Search”. |
| ACM Digital Library | The search string is run in the respective “Advanced Search”. Since the number of occurrences was very large, the search was divided into three parts, applied to Title, Abstract and Author Keywords. In “View Query Syntax” → “Edit Search”, the parts are connected with the operator AND by default; this was changed to OR. |
| Scopus | In the “Advanced Search” of Document Search, the search string is applied as three sub-strings. |
| Web of Science | The string is entered in the “Advanced Search” text box, with “TS=” (TS = Topic) added at its beginning. |

Table 3: Search in the digital libraries
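The adaptations in Table 3 can be expressed as small string transformations. The Python sketch below is illustrative only: `adapt_for_web_of_science` follows the `TS=` prefix described for Web of Science, while the field labels passed to the hypothetical `combine_fields` helper are placeholders, not the exact ACM Digital Library query syntax. The docstring explains why switching the connecting operator from AND to OR matters.

```python
def adapt_for_web_of_science(query):
    """Prefix the string with the Topic field tag, as described for Web of Science."""
    return "TS=" + query


def combine_fields(query, fields, operator="OR"):
    """Apply the same query to several fields and connect the parts.

    With operator="AND" a study must match the query in every field;
    with "OR" a match in any single field is enough, which is why the
    default AND was changed to OR. Field labels here are placeholders.
    """
    parts = [f"{field}:{query}" for field in fields]
    return f" {operator} ".join(parts)


if __name__ == "__main__":
    q = "((safety) AND (communicat* OR 5g))"  # shortened query, for illustration
    print(adapt_for_web_of_science(q))
    print(combine_fields(q, ["Title", "Abstract", "Keywords"]))
```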

At the end of this step, the search in the four digital libraries returned a considerable number of studies, 6473 in total. Table 4 shows the number of studies obtained from each database.

| Digital library | Search results |
|---|---|
| IEEE Xplore Digital Library | 2205 |
| ACM Digital Library | 184 |
| Scopus | 3125 |
| Web of Science | 959 |
| Total | 6473 |

Table 4: Search results per digital library
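Because the four libraries index overlapping sets of publications, the 6473 results in Table 4 contain duplicates, which are removed at the start of the selection (section 3.5). The thesis performed that step with EndNote; the Python sketch below does not reproduce EndNote's matching rules but illustrates one plausible approach, assuming each exported record is a dictionary with a `title` and an optional `doi`.

```python
import re


def normalize_title(title):
    """Lowercase the title and keep only letters and digits."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def remove_duplicates(records):
    """Keep the first record for each DOI or normalized title (assumed matching rule)."""
    seen = set()
    unique = []
    for record in records:
        keys = {("title", normalize_title(record["title"]))}
        doi = (record.get("doi") or "").strip().lower()
        if doi:
            keys.add(("doi", doi))
        if keys & seen:
            continue  # shares a DOI or normalized title with an earlier record
        seen |= keys
        unique.append(record)
    return unique
```

Matching on either key catches records exported without a DOI by one library and with a DOI by another. Applied to the real exports, the thesis reports 1669 removed duplicates, leaving 6473 - 1669 = 4804 studies.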

3.4 Study Selection Criteria

The study selection criteria step is used to determine the relevant studies matching the goal of the systematic mapping study. According to [37], applying the selection criteria is one of the longest and most time-consuming steps of the process. These criteria are divided into inclusion and exclusion criteria, described in the following subsections. For a study to be classified as relevant, it must meet all the inclusion criteria and none of the exclusion criteria. However, since these criteria are first applied to the Title-Abstract-Keywords (T-A-K), an additional sub-step for finding the relevant studies was added during the execution of the mapping study.

3.4.1 Inclusion Criteria

The following criteria determine when a study is included:

IC1: Studies implementing safety.

IC2: Studies presenting software based AI algorithms in different application domains.

IC3: Studies proposing the use of communication technologies.

3.4.2 Exclusion Criteria

The following criteria determine when a study is excluded:

EC1: Studies that are duplicate of other studies.

EC2: Studies that are considered secondary studies to other ones.

EC3: Studies that are not peer-reviewed, short papers (4 pages or fewer), editorials, tutorial papers, patents and poster papers.

EC4: Studies that are not written in English.

EC5: Studies that are not available in full text.

3.5 Performing the selection

Performing the selection is the most challenging part of the systematic mapping study. After the total set of studies is obtained from the automatic search, the selection of the relevant studies begins with the removal of duplicates. Given the large number of studies, duplicates were identified and removed with EndNote⁶, a reference management tool that is widely used in research and was provided free of charge by the university. After the citations from each digital library were exported to EndNote, 1669 duplicates were removed, leaving 4804 studies. The selection process then continues with the inclusion and exclusion criteria described in 3.4, which are applied to the remaining 4804 studies. When applying these criteria, the following method was introduced:

The studies are evaluated by T-A-K reading. However, T-A-K reading did not always lead to a clear decision. Because of limited domain knowledge or the way an abstract was written, it was sometimes almost impossible to determine whether a study was relevant. This revealed the need for another sub-step: unclear studies were marked as not-clear and later reviewed by full-text skimming. The following labels were used for selecting the primary studies:

• a study would be marked as relevant (R) if it meets all the inclusion criteria and none of the exclusion criteria.

• a study would be marked as not-relevant (NR) if it fails to meet at least one of the inclusion criteria or meets at least one of the exclusion criteria.

• a study would be marked as not-clear (NC) if there are uncertainties during T-A-K reading.
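The three labels above amount to a small decision rule. The following Python sketch encodes it under one stated assumption: a study whose T-A-K reading is unclear is labeled NC before any criterion is evaluated, and receives its final R or NR label only after full-text skimming. The function and parameter names are hypothetical.

```python
from enum import Enum


class Label(Enum):
    R = "relevant"
    NR = "not-relevant"
    NC = "not-clear"


def label_study(inclusion, exclusion, clear=True):
    """Label one study from its criteria assessment.

    inclusion, exclusion: dicts mapping a criterion id (e.g. "IC1", "EC3")
    to True when the study meets that criterion.
    clear: False when T-A-K reading leaves the decision uncertain.
    """
    if not clear:
        return Label.NC  # resolved later by full-text skimming
    if all(inclusion.values()) and not any(exclusion.values()):
        return Label.R
    return Label.NR
```

After full-text skimming, an NC study would be relabeled by calling the same function with `clear=True`. With the counts reported below, 15 of the 85 NC studies became R, so 257 of the final 272 R studies were already labeled R during T-A-K reading.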

After applying the inclusion and exclusion criteria to the studies that were clear enough to be labeled as relevant or not, the process continued with the full-text skimming of the NC studies. Of the 85 NC studies, 15 were considered R after full-text skimming, bringing the mapping study to a final number of 272 R studies.

One of the sub-steps of the selection process described in the guidelines is snowballing. According to [38], snowballing is a method of identifying additional relevant studies starting from the primary ones already obtained. There are two types: forward snowballing, which looks for new studies among the papers that cite a given paper, and backward snowballing, which looks into its reference list. Considering the fairly large number of papers obtained with the criteria described above, it was decided not to use snowballing in this systematic mapping study.
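Although snowballing was not used in this study, the two directions described above can be illustrated with a short Python sketch. It assumes a hypothetical citation graph stored as two dictionaries: `references[p]` lists the papers that `p` cites (backward direction) and `citations[p]` lists the papers that cite `p` (forward direction). One iteration only yields candidates; each would still have to pass the selection criteria.

```python
def snowball_once(seeds, references, citations):
    """Return (backward, forward) candidate sets for one snowballing iteration."""
    seeds = set(seeds)
    backward, forward = set(), set()
    for paper in seeds:
        backward.update(references.get(paper, ()))  # papers cited by `paper`
        forward.update(citations.get(paper, ()))    # papers citing `paper`
    # Papers already in the seed set are not new candidates.
    return backward - seeds, forward - seeds
```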

To better visualize the selection process, Figure 2 presents a detailed flow with the number of studies at each sub-step.

Figure 2: Workflow of the search and selection process

3.6 Classification schemes definition

The next step of this systematic mapping study is to establish how the primary studies are classified. For this purpose, classification schemes are defined that address the research questions discussed in 3.1. As described in 3.7, the classification is composed of facets. In addition to general publication data such as title, author names, reference type and year, five main facets are added: software based AI algorithms, communication technologies, application domains, types of research contribution and research types.

For the last two classification schemes, types of research contribution and research types, the categorizations presented in [33] and [39] are used as a basis and adapted to this thesis. For the software based AI algorithms and communication technologies classification schemes, an initial categorization was conceived based on knowledge acquired in discussions with experts. To refine these categorizations and to establish a new classification scheme for the application domains, the keywording method proposed in [33] is used. The keywording method is typically executed in two steps, reading and clustering.

• Reading: during this step, the abstracts and keywords of the selected primary studies were read again, looking for sets of keywords representing the application domains, communication types and software based AI algorithms used to implement safety. For the application domains, the keywords were mostly derived from the studies' keywords rather than from their abstracts.

• Clustering: after reading the studies and deriving a set of keywords, the keywords themselves were grouped into clusters, which were used to create the classifications. The clusters obtained with the keywording method are found in Appendix C. The classifications obtained after performing the keywording method are presented below. Note that a relevant study can belong to only one data item under each category, meaning that all categories are single-valued.

Software based AI algorithms classification

Based on the initial categorization and the subsequent keywording, the classification was shaped as follows. A short description is given for each data item of this facet.

• Clustering - an unsupervised learning method that aims at building a natural classification used to group the data into clusters, without having the target features in the training data [40].

• Cognitive architecture - aims to use research from cognitive psychology to create artificial computational processes that behave like humans [41].

• Computer Vision - aims at automating visual perception, with tasks ranging from low-level vision, such as noise removal or edge sharpening, to high-level vision, such as segmenting images or interpreting a scene [42]. In this classification, the primary studies that use image processing, Virtual Reality or Augmented Reality are categorized as computer vision.

• Fuzzy logic - solves a problem through a set of If-Then rules that take into account the uncertainties in the inputs and outputs by imitating human decision-making [43].

• Natural Language Processing (NLP) - aims at improving the ability of a computer program to understand human language.

• Neural Networks (NNs) - refers to a set of algorithms designed to recognize patterns by interpreting and classifying data, simulating the connections between neurons [44].

• Ontology - refers to a formal and structural way of representing concepts, relations or attributes in a domain [45].

• Optimization - refers to an iterative procedure that starts from a guessed solution and aims to reach, ideally, the optimal solution of a problem [46].

• Regression analysis - studies the relationship between dependent and independent variables by modeling a function that makes predictions, iteratively adjusting the function parameters to map a given set of inputs to outputs [47].

• Reinforcement Learning (RL) - aims at performing a certain task in a dynamic environment by maximizing a reward function while interactively exploring the state space.

• State machine - represents a computational model that performs predefined actions depending on a series of events that it faces [48].

• Not-specified - contains the primary studies that mention the use of AI or ML but do not specify a particular technique.

• Other - contains the primary studies that use a technique not listed above.

Communication technology classification

Based on the initial categorization for communication and the keywording process, the final classification is:

• 2G
• 3G
• 4G
• 5G
• Cellular (not-specified)
• Non-cellular
• Satellite
• Not-mentioned

Application domain classification

Based on keywording, the classification scheme derived for the domains in which safety is provided by applying software based AI algorithms is:

• Agriculture
• Aerospace
• Automotive
• Construction
• Education
• Factory
• Health care
• Marine
• Mining
• Robotics
• Surveillance
• Telecommunication
• Other
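The reading and clustering steps that produced this classification were performed manually. As a rough illustration of the clustering step only, the Python sketch below assigns each study to the single domain cluster sharing the most keywords with it, which also enforces the single-value rule. The keyword sets and the helper name are hypothetical examples, not the clusters listed in Appendix C.

```python
# Hypothetical keyword clusters; the actual clusters are listed in Appendix C.
DOMAIN_CLUSTERS = {
    "Automotive": {"vehicle", "v2x", "intelligent transportation system", "driver"},
    "Health care": {"patient", "hospital", "medical"},
    "Aerospace": {"aircraft", "avionics", "flight"},
}


def assign_domain(keywords, clusters=DOMAIN_CLUSTERS, default="Other"):
    """Return the single domain whose cluster shares the most keywords with a study.

    Ties keep the cluster listed first; studies matching no cluster fall
    into `default`, mirroring the "Other" category.
    """
    normalized = {keyword.strip().lower() for keyword in keywords}
    best, best_hits = default, 0
    for domain, cluster in clusters.items():
        hits = len(normalized & cluster)
        if hits > best_hits:
            best, best_hits = domain, hits
    return best
```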

Types of research contribution classification

Defining a classification framework for the types of research contribution helps researchers identify where new methods, techniques or tools for implementing safety could be developed in the future. To categorize this facet, the classification scheme presented and defined by Petersen et al. in [33] is used. This scheme is widely adopted by researchers when conducting systematic mapping studies. The facet consists of the following data items:

• Model - refers to the studies that present information and abstractions to be used in implementing safety by software based AI algorithms.

• Method - refers to general concepts and detailed working procedures that address specific concerns about implementing safety using software based AI algorithms.

• Tool - refers to any kind of tool or prototype that can be employed in the current models.

• Open Item - refers to the remaining studies that do not belong to any of the above-mentioned categories.

Research types classification

Following [49], the research types classification is included because it is important to understand the research approach of a study and the value it represents. To categorize the research types, the classification scheme proposed by Wieringa et al. in [39] is applied. Its data items are:

• Validation Research - investigates novel techniques that have not yet been implemented in practice; investigations include experiments, prototyping, simulations, etc.

• Evaluation Research - evaluates an implemented solution in practice considering the benefits and drawbacks of this solution; it includes case studies, field experiments etc.

• Solution Proposal - refers to a novel proposed solution for a problem or a significant extension to an existing one.

• Conceptual Proposal - offers a new way of looking at existing topics by structuring them into conceptual frameworks or taxonomies.

• Experience Paper - reflects the experience of the authors, explaining to what extent something has been done in practice.

• Opinion Paper - refers to studies that mostly express the opinion of the authors on certain methods.

3.7 Data extraction

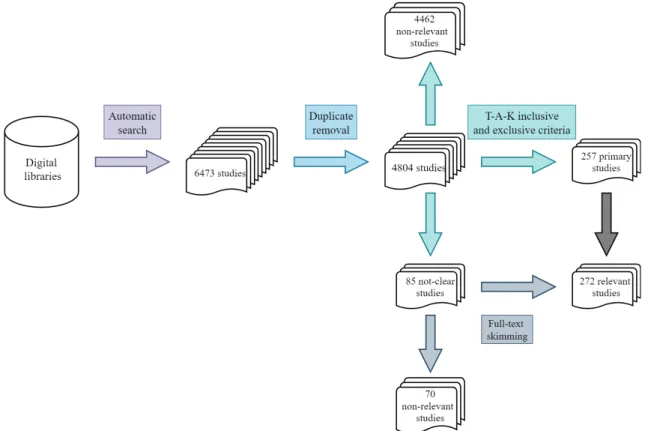

To extract the data from the selected primary studies, a data extraction form is created. The form is derived from the classification schemes and the research questions and is shown in Table 5; it lists the data items, their respective values and the RQs from which they are derived. For this step of the systematic mapping study process, Microsoft Excel is used to organize the data into spreadsheets.

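| Category | Data item | Value | RQ |
|---|---|---|---|
| General | Study ID | Integer | |
| General | Title | Name of the study | |
| General | Abstract | Abstract of the study | |
| General | Keywords | Set of keywords | |
| General | Author | Set of authors | |
| General | Author affiliation | Type of affiliation | RQ6 |
| General | Pages | Integer | |
| General | Reference type | Type | |
| General | Year of publication | Calendar year | RQ3a, RQ3b |
| General | Database | Name of the database | |
| Specific | Software based AI algorithm | What kind of algorithm is used | RQ1a, RQ2, RQ3b |
| Specific | Communication technology | Which type of communication is used | RQ1b, RQ2, RQ3a |
| Specific | Application domains | What is the application domain of the study | RQ1c |
| Specific | Research contribution | Type | RQ4 |
| Specific | Research type | Type | RQ5 |

Table 5: Data extraction form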
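To make the form concrete, the following Python sketch models one row of the data extraction form as a dataclass and rejects values outside the classification schemes of subsection 3.6, reflecting the rule that every facet is single-valued. The field names are hypothetical, and only some facets are validated to keep the example short; the thesis itself organized the data in Excel spreadsheets rather than code.

```python
from dataclasses import dataclass
from typing import List

# Category sets copied from the classification schemes in 3.6 and the RQ6 affiliations.
ALGORITHMS = {
    "Clustering", "Cognitive architecture", "Computer vision", "Fuzzy logic",
    "Natural Language Processing", "Neural networks", "Ontology", "Optimization",
    "Regression analysis", "Reinforcement learning", "State machine",
    "Not-specified", "Other",
}
COMMUNICATION = {
    "2G", "3G", "4G", "5G", "Cellular (not-specified)", "Non-cellular",
    "Satellite", "Not-mentioned",
}
DOMAINS = {
    "Agriculture", "Aerospace", "Automotive", "Construction", "Education",
    "Factory", "Health care", "Marine", "Mining", "Robotics", "Surveillance",
    "Telecommunication", "Other",
}
RESEARCH_TYPES = {
    "Validation Research", "Evaluation Research", "Solution Proposal",
    "Conceptual Proposal", "Experience Paper", "Opinion Paper",
}
AFFILIATIONS = {"Academia", "Industry", "Both"}


@dataclass
class ExtractionRecord:
    """One row of the data extraction form (hypothetical field names)."""
    study_id: int
    title: str
    abstract: str
    keywords: List[str]
    authors: List[str]
    affiliation: str
    pages: int
    reference_type: str
    year: int
    database: str
    algorithm: str
    communication: str
    domain: str
    contribution: str
    research_type: str

    def __post_init__(self):
        # Every facet is single-valued: the value must be exactly one known category.
        checks = {
            "algorithm": (self.algorithm, ALGORITHMS),
            "communication": (self.communication, COMMUNICATION),
            "domain": (self.domain, DOMAINS),
            "research_type": (self.research_type, RESEARCH_TYPES),
            "affiliation": (self.affiliation, AFFILIATIONS),
        }
        for name, (value, allowed) in checks.items():
            if value not in allowed:
                raise ValueError(f"{name}: {value!r} is not a category of this facet")
```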

3.8 Data analysis

Data analysis is the last step of the systematic mapping study process. After the extracted data is classified according to the classification schemes derived from the research questions and the keywords, the map of the field is produced. By plotting figures such as bubble charts, pie charts or graphs and analyzing the results, data analysis aims to answer the research questions presented in subsection 3.1.
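The counts and percentages behind the pie and bar charts reduce to a frequency count over one facet. A minimal Python sketch, assuming each extracted record is a dictionary keyed by facet name (an assumption, since the thesis worked in Excel):

```python
from collections import Counter


def facet_distribution(records, facet):
    """Count studies per category of one facet and add the percentage of the total.

    Returns {category: (count, percentage)} ordered from most to least frequent.
    """
    counts = Counter(record[facet] for record in records)
    total = len(records)
    return {
        category: (n, round(100 * n / total, 1))
        for category, n in counts.most_common()
    }
```

For example, 150 non-cellular studies out of 272 correspond to `round(100 * 150 / 272, 1)`, that is 55.1%, consistent with the ≈55% reported for Figure 4.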

3.9 Threats to validity

When conducting a systematic mapping study, there are usually some issues that might jeopardize the validity of the work. This subsection describes how these threats to validity are mitigated, following the guidelines of Wohlin et al. in [50].

• Construct validity: Construct validity in systematic mapping studies concerns the validity of the extracted data with respect to the research questions. For this systematic mapping study, the guidelines in [34] were followed so that the selected primary studies reliably represent the research questions. First, the search string was formulated using the PICO method. Then, the most common digital libraries in software engineering were chosen to perform the automatic search, giving the study more depth. Furthermore, the studies were screened under rigorous inclusion and exclusion criteria. Considering all of the above, it is believed that few, if any, relevant studies were left out of the research.

• Internal validity: Internal validity concerns conditions that may influence the study and distort the causal relationships it investigates. To mitigate this threat, a predefined protocol with an exact data extraction form was used. Regarding the data analysis, since only descriptive statistics are employed, the threats are minimal.

• External validity: Threats to external validity concern the extent to which the outcomes of the systematic mapping study can be generalized. In this research, the most serious threat to external validity is not covering the whole scope of software based AI algorithms that use communication technologies to provide safety. This threat is mitigated by a well-established search string and the automatic search. A well-defined set of inclusion and exclusion criteria also supports the external validity of the study. However, since the selection relied on T-A-K reading and some abstracts contained incomplete information, some relevant studies might have been wrongly excluded.

To further minimize this threat, only publications written in English (the language most widely used in scientific publications) were chosen, and only peer-reviewed studies were accepted.

• Conclusion validity: Conclusion validity concerns whether the study can be repeated in the future with the same results. The relationship between the extracted and synthesized data and the outcomes of the study may affect its repeatability.

To mitigate this threat, all the steps leading to the data analysis, including the search string, automatic search and selection criteria, are documented in detail so that researchers can replicate the study. The data extraction form is documented as well, reducing bias in that process. The same reasoning applies to the classification scheme, whose framework is well-defined and has the respective references.

4 Results of the field map and discussion

This section presents the results obtained from analyzing the primary studies. The full list of the primary studies is found in Appendix A. The section is organized as follows:

• Each subsection corresponds to a research question to be answered

• The outcomes of the research questions are illustrated in the form of charts and/or graphs

• Each plotted figure is followed by the related discussion

Results of RQ1(a-c)

The first research question of this thesis has three parts. It aims at identifying the types of software based AI algorithms, communication technologies and application domains. For each sub-question, a single figure presents the number of primary studies in each class.

What types of software based AI algorithms are used to implement safety?

Figure 3 illustrates the number of studies using software based AI algorithms to provide safety. The bar chart shows that the most discussed algorithm is computer vision, with 63 studies. However, this cannot be stated with certainty, because almost 26% of the studies do not clearly specify the type of algorithm they use. Clustering and neural networks are also frequently presented, in 14,4% and 12,9% of the studies respectively. On the other hand, cognitive architecture and optimization are the least used algorithms, with only 2 mentions each.

What kind of communication technologies are mostly used to implement safety?

This part of the subsection depicts the publication trends with respect to the communication technologies used in the primary studies. As defined earlier, the communication technology classification includes 2G, 3G, 4G, 5G, cellular (not-specified), non-cellular, satellite and not-mentioned.

Figure 4: Distribution of studies for communication technologies

The pie chart in Figure 4 presents, for each category, the number of relevant primary studies and their percentage. The results show that the dominant technologies are the non-cellular ones, with 150 studies, more than half of the total primary publications (≈55%). Cellular communications, on the other hand, are mentioned in just under a third of the studies (≈32%), with a total of 87 publications. 2G (mainly through GSM technology) and the recently booming 5G are among the most discussed categories, with 5% each. The least addressed communication category in the publications is 3G, with only 3%.

Which are the main application domains where these software based AI algorithms find applicability in order to provide safety?

Figure 5 depicts a 3-D clustered bar chart presenting the number of publications and the percentage of relevant studies addressing each application domain in which the software based AI algorithms are applied. The chart shows an uneven distribution of the publications. The majority focus on the Automotive domain, mostly within the field of Intelligent Transportation Systems (ITS), with 152 publications (≈56%). Although Automotive was expected to be the most discussed domain, this number and the gap to the other domains are quite high, considering that the application domain classification contains 12 other classes. The second most discussed domain is Telecommunication (9,2%); however, the difference from the next one, Aerospace (8,5%), is nearly negligible, only 2 publications. Health care, observed in 7,5% of the studies, is another domain that attracts attention, indicating that AI techniques are gaining ground in medicine and health care as well. The application domains with the least recognition are Education and Agriculture.

Figure 5: Distribution of studies for application domains

What are the current research gaps in the use of AI to implement safety using communication technologies?

This subsection investigates the relation, in terms of publications, between the use of software based AI algorithms and communication technologies to provide safety. To better picture this relation, Figure 6 shows a bubble chart represented as a two-axis scatter plot, with the software based AI algorithms on the x-axis and the communication technologies on the y-axis.

Figure 6: The relation between categories of communication technologies, software based AI algorithms and number of relevant publications

At each intersection between an algorithm category and a communication category, a bubble whose size reflects the number of primary studies for that pair is shown. The biggest bubble, with a size of 35, belongs to the pair “Not-specified - Non-cellular”. This was expected, considering the large number of publications using non-cellular communication and the many publications that do not specify a particular algorithm.

The combination of computer vision and non-cellular communication has the second largest bubble, with 29 publications. Although computer vision is discussed together with almost all communication technologies, there is a gap for 4G combined with computer vision, with no publications addressing this pair. Close behind, clustering and non-cellular communication are used together in 28 publications.
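Gaps such as the missing 4G and computer vision pair correspond to empty cells in the algorithm-by-communication cross-tabulation behind the bubble chart. A minimal Python sketch, again assuming records are dictionaries keyed by facet name (hypothetical field names), that builds the cross-tabulation and lists the empty pairs:

```python
from collections import Counter
from itertools import product


def cross_tab(records, row_facet, col_facet):
    """Count studies for every (row category, column category) pair."""
    return Counter((record[row_facet], record[col_facet]) for record in records)


def empty_pairs(records, row_facet, col_facet, row_categories, col_categories):
    """List category pairs without any publication, i.e. candidate research gaps."""
    counts = cross_tab(records, row_facet, col_facet)
    return [
        pair for pair in product(row_categories, col_categories)
        if counts[pair] == 0
    ]
```

The bubble sizes in the chart are the non-zero values of `cross_tab`; `empty_pairs` simply enumerates the full grid so that missing combinations become explicit.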

What is the publication trend with respect to time in terms of cellular and non-cellular communications?

This subsection targets the publication trends over time in terms of communication technologies. The total number of relevant publications for each communication technology is plotted against the publication year of every study, and the outcomes are displayed in the line chart in Figure 7. Each marked line represents a different communication technology according to the classification in 3.6, while the dotted line, derived from the total number of primary studies (“Grand Total” in the figure), represents the trend of all publications over the years.

Figure 7: Distribution of publications with respect to time regarding the communication technologies

The chart shows that the first mention of a particular communication technology, a cellular one, dates back to 1991. Between 1991 and 2008, publications are rare and not significant in number. In 2008, however, a total of 12 publications appeared, covering more than half of the categories and mostly addressing non-cellular communication. The graph also shows that the first study mentioning the use of 3G with AI to implement safety was published in this year. After a few slow years following the 2008 boost, the big step came in 2013: with 24 publications in total, the number doubled that of 2008, the highest up to that moment. From then on the trend moved upwards, reaching its peak in 2017 with 43 relevant studies. The first year in which every category had at least 1 publication is 2016. The figure shows a growing interest of the research community in this topic. Finally, the significant drop from 2018 to 2019 can be explained: since the search in the digital libraries was performed in early 2020, not all publications from 2019 were found. This happens because many studies take a considerable amount of time to appear in journal issues or conference proceedings.
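The observations above (peak year, first year in which every category has a publication) can be read off a year-by-category count table. The Python sketch below assumes hypothetical records with `year` and `communication` keys; it illustrates the computation and is not the tooling used in the thesis.

```python
from collections import Counter, defaultdict


def yearly_counts(records):
    """Map each publication year to a Counter of communication categories."""
    by_year = defaultdict(Counter)
    for record in records:
        by_year[record["year"]][record["communication"]] += 1
    return by_year


def peak_year(by_year):
    """Year with the most publications overall (the 'Grand Total' line)."""
    return max(by_year, key=lambda year: sum(by_year[year].values()))


def first_year_covering(by_year, categories):
    """Earliest year in which every category has at least one publication, else None."""
    for year in sorted(by_year):
        if all(by_year[year][category] > 0 for category in categories):
            return year
    return None
```

A `Counter` returns 0 for a missing category without inserting it, so the coverage check does not alter the table.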

What is the publication trend with respect to time in terms of software based AI algorithms?

Figure 8 depicts a bubble chart of the relationship between the software based AI algorithms, the year of publication and the number of relevant papers. This subsection focuses on the publication trends over time in terms of software based AI algorithms. The bubble chart has the years on the y-axis and the algorithms on the x-axis, with the bubbles representing the number of relevant studies.

The chart shows that the biggest bubble belongs to the pair “Not-specified - 2018”. As in the discussion of RQ2, this result was expected, given the high number of studies that do not clarify the type of algorithm. As for the year 2018, it reflects the recent boost in the use of algorithms. Note that the majority of the studies that discuss a specific algorithm were published after 2013.

Overall, the outcomes show an increasing trend of the publications. However, for particular algorithms like computer vision, the interest of the community seems to have slightly decreased very recently, after peaking in 2010. The drop between 2018 and 2019 was discussed previously under RQ3a.

Figure 8: The relation between categories of software based AI algorithms, year of publications and number of relevant publications

What type of research contributions are mainly presented in the studies?

In this subsection, the results for RQ4 are presented. Figure 9 displays a clustered column chart in which every column represents the number and percentage of publications for one type of research contribution according to the defined classification scheme. The categories are methods, metrics, models, tools and open items. The figure shows that researchers mostly present methods as their research contribution, comprising 62,5% of all 272 publications. Models and tools follow with 20,6% and 14,3% respectively. The fewest studies, only 5 publications, discuss metrics. These outcomes indicate that the research community clearly focuses on providing solutions in the form of methods or approaches, while metrics for providing safety using software based AI algorithms and different communication technologies are especially lacking.

Figure 9: Distribution of studies regarding the types of research contribution

Which are the main research types being employed in the studies?

Figure 10 depicts a stacked bar chart showing the number and percentage of relevant publications in each category of the research types classification. As defined in subsection 3.6, this classification comprises Validation Research, Evaluation Research, Solution Proposal, Conceptual Proposal, Experience Paper and Opinion Paper. The chart confirms that, as expected, the vast majority of the publications are Solution Proposals, making up 82,3% of the relevant studies. Only 7,4% and 5,5% of the publications employ Evaluation Research and Validation Research, respectively. Very few studies employ the remaining research types. These outcomes suggest that many solutions are yet to be evaluated by industry, owing to the novelty of the topic and its recent boost.

What is the distribution of publications in terms of academic and industrial affiliation?

In order to have a better overview of the publications, whether they come from academia or the industry, the affiliations of the authors were taken into account. The categorization is created based on the one in [49]. It contains three data items: academia, industry and both. The following categorization was applied:

• Academia - the study is written only by academic authors

• Industry - the study is written only by industrial authors

• Both - the study is the result of a collaboration between academic and industrial authors
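The categorization rule above is simple enough to state as code. The following Python sketch is illustrative only. It assumes that each study is represented as a list of author sectors encoded as the strings "academia" or "industry", which is a hypothetical encoding and not the data format used in this thesis. The sample data in the usage lines is invented for demonstration and is not taken from the primary studies.

```python
from collections import Counter
from enum import Enum


class Affiliation(Enum):
    ACADEMIA = "Academia"
    INDUSTRY = "Industry"
    BOTH = "Both"


def classify_study(author_sectors: list[str]) -> Affiliation:
    """Assign one study to Academia, Industry or Both from its authors' sectors.

    Each sector is assumed to be "academia" or "industry" (hypothetical encoding).
    """
    sectors = {sector.lower() for sector in author_sectors}
    if not sectors or not sectors <= {"academia", "industry"}:
        raise ValueError(f"unexpected author sectors: {author_sectors}")
    if sectors == {"academia"}:
        return Affiliation.ACADEMIA
    if sectors == {"industry"}:
        return Affiliation.INDUSTRY
    # Both sectors present: the study is an academia-industry collaboration.
    return Affiliation.BOTH


def affiliation_shares(studies: list[list[str]]) -> dict[Affiliation, float]:
    """Return the percentage of studies in each category (0 for empty categories)."""
    if not studies:
        raise ValueError("no studies to classify")
    counts = Counter(classify_study(authors) for authors in studies)
    return {category: 100 * counts[category] / len(studies) for category in Affiliation}


if __name__ == "__main__":
    # Invented sample of four studies, for illustration only.
    sample = [["academia", "academia"], ["academia", "industry"], ["industry"], ["academia"]]
    for category, share in affiliation_shares(sample).items():
        print(f"{category.value}: {share:.1f}%")
```

Treating the authors of a study as a set makes the rule independent of author order and of how many authors come from each sector. A single industrial co-author is enough to move a study from Academia to Both.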

In Figure 11, a 3-D pie chart depicts the distribution of the publications by author affiliation. The chart clearly shows that academic publications are by far the most common: 82% of all relevant studies come exclusively from academic authors. The remaining 18% is divided between industry and both. Collaboration between academia and industry accounts for 15% of the publications, while just 3% come exclusively from industry. The low number of industrial publications, reflected in the small number of industrial authors active in the research area, is somewhat expected. Industrial researchers usually have little time to write or publish papers. When they do publish, their output mostly takes the form of patents or white papers, which are fewer in number, reveal less information, and are excluded from this work by the exclusion criteria. For this reason, the low number of industrial papers is not a realistic indicator of industrial interest, considering that AI techniques are adopted in many real-life application domains that attract the attention of industry. Nevertheless, there appears to be an effort toward more joint studies that bring academia and industry together.

5 Conclusions and future work

The main goal of this thesis is to provide an overview of the current state-of-the-art research on the use of software based AI algorithms to implement safety using different communication technologies. As introduced in section 1, advances in AI and ML have shifted the interest of researchers toward finding ways to apply these techniques in everyday life. Moreover, safety is one of the most important properties in many application domains, and providing it in automotive, health care, industry, etc. is essential. On top of this, communication networks come to the aid of AI while facing similar obstacles, such as uncertainties in learning or response planning. This raises the need to assess the current state of research and identify its actual gaps. To achieve this goal, a systematic mapping study was conducted; its steps are described in detail in section 3 of this thesis. From an initial total of 6473 studies obtained through the automatic search, 272 primary studies were identified at the end of the study selection step. These primary studies satisfy the predefined inclusion and exclusion criteria. In terms of relevance, they cover the three main aspects of the study: they describe a software based AI algorithm, address a specific communication technology, and implement safety as a property. The commonalities between the studies made it possible to derive classification schemes for the algorithm, communication and application domain categories. Additionally, the study presents two further categories covering the types of research contribution and the research types. The data was extracted and analyzed to answer the research questions, which structure the results presented in section 4.
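The relevance check described above, under which a primary study must cover all three aspects, can be sketched as a simple filter. This Python sketch is an illustration under stated assumptions. The `Candidate` record and its boolean fields are hypothetical stand-ins for judgments that were made manually during study selection. The other inclusion and exclusion criteria from section 3 (for example, the exclusion of patents and white papers) are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A study returned by the automatic search (hypothetical record)."""
    title: str
    describes_ai_algorithm: bool    # describes a software based AI algorithm
    uses_communication_tech: bool   # addresses a specific communication technology
    implements_safety: bool         # implements safety as a property


def is_relevant(study: Candidate) -> bool:
    """Keep a study only if it covers all three aspects of the topic."""
    return (
        study.describes_ai_algorithm
        and study.uses_communication_tech
        and study.implements_safety
    )


def select_primary_studies(candidates: list[Candidate]) -> list[Candidate]:
    """Return the candidates that pass the three-aspect relevance check."""
    return [study for study in candidates if is_relevant(study)]


if __name__ == "__main__":
    # Invented candidates, for illustration only.
    candidates = [
        Candidate("A", True, True, True),
        Candidate("B", True, False, True),   # no communication technology
        Candidate("C", False, True, True),   # no AI algorithm
    ]
    selected = select_primary_studies(candidates)
    print(f"{len(selected)} of {len(candidates)} candidates kept")
```

Because the check is a conjunction, failing any single aspect is enough to discard a study.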

The outcomes of this study suggest that, regarding software based AI algorithms, researchers focus more on applying computer vision techniques, although a high percentage of studies do not specify the technique, which threatens the validity of this finding. Techniques such as optimization or cognitive architectures were used less often. Concerning the other categories, non-cellular communication and the automotive domain are clearly dominant in the communication and application domain categories, respectively. A recent increase in interest in the latest cellular networks, such as 5G, is also observed. More generally, the topic has been gaining the attention of researchers over the past years, and the trend seems likely to continue. Finally, the majority of studies contribute methods and are classified as solution proposals.

5.1 Future work

The results of this systematic mapping study can benefit both researchers and industry. Researchers could use this work to deepen their knowledge of the topic and to identify new challenges. The classifications provided in this work may serve as initial categorizations, or as a foundation to be extended, in other systematic mapping studies or systematic literature reviews. The scarcity of evaluation research suggests that the proposed solutions are not yet being put into practice by industry. Therefore, heightened interest in evaluating the proposed solutions, for example through case studies or field experiments, is expected. In the near future, more effort should be put into providing new tools and defining metrics, since the research area currently lacks them.

To summarize, the study provides a solid overview of the state of the art on this topic and a foundation for developing new tools and performing more evaluation research on the use of software based AI algorithms to provide safety using communication technologies.

References

[1] S. Srivastava, A. Bisht, and N. Narayan, “Safety and security in smart cities using artificial intelligence—a review,” in 2017 7th International Conference on Cloud Computing, Data Science & Engineering-Confluence. IEEE, 2017, pp. 130–133.

[2] R. Li, Z. Zhao, X. Zhou, G. Ding, Y. Chen, Z. Wang, and H. Zhang, “Intelligent 5G: When cellular networks meet artificial intelligence,” IEEE Wireless Communications, vol. 24, no. 5, pp. 175–183, 2017.

[3] D. Lund, C. MacGillivray, V. Turner, and M. Morales, “Worldwide and regional Internet of Things (IoT) 2014–2020 forecast: A virtuous circle of proven value and demand,” IDC 248451, www.idc.com, May 2014.

[4] W. Liang, M. Sun, B. He, M. Yang, X. Liu, B. Zhang, and Y. Wang, “New technology brings new opportunity for telecommunication carriers: Artificial intelligent applications and practices in telecom operators,” ITU Journal: ICT Discoveries, vol. 1, no. 1, pp. 1–7, 2018.

[5] M. Harman, “The role of artificial intelligence in software engineering,” in 2012 First International Workshop on Realizing AI Synergies in Software Engineering (RAISE). IEEE, 2012, pp. 1–6.

[6] A. Avižienis, J.-C. Laprie, and B. Randell, “Dependability and its threats: a taxonomy,” in Building the Information Society. Springer, 2004, pp. 91–120.

[7] D. G. Firesmith, “Common concepts underlying safety, security, and survivability engineering,” Carnegie Mellon University Software Engineering Institute, Pittsburgh, PA, Tech. Rep., 2003.

[8] M. P. Heimdahl, “Safety and software intensive systems: Challenges old and new,” in Future of Software Engineering (FOSE’07). IEEE, 2007, pp. 137–152.

[9] R. R. Lutz, “Software engineering for safety: a roadmap,” in Proceedings of the Conference on the Future of Software Engineering, 2000, pp. 213–226.

[10] R. Kowalski, “AI and software engineering,” in Artificial Intelligence and Software Engineering. Ablex Publishing, 1991, pp. 339–352.

[11] C. Rich and R. C. Waters, Readings in artificial intelligence and software engineering. Morgan Kaufmann, 2014.

[12] H. H. Ammar, W. Abdelmoez, and M. S. Hamdi, “Software engineering using artificial intelligence techniques: Current state and open problems,” in Proceedings of the First Taibah University International Conference on Computing and Information Technology (ICCIT 2012), Al-Madinah Al-Munawwarah, Saudi Arabia, 2012, p. 52.

[13] V. Honavar, “Artificial intelligence: An overview,” 2006.

[14] B. McMullin, “John von Neumann and the evolutionary growth of complexity: Looking backward, looking forward,” Artificial Life, vol. 6, no. 4, pp. 347–361, 2000.

[15] N. J. Nilsson, Principles of artificial intelligence. Morgan Kaufmann, 2014.

[16] D. S. Grewal, “A critical conceptual analysis of definitions of artificial intelligence as applicable to computer engineering,” IOSR Journal of Computer Engineering, vol. 16, no. 2, pp. 09–13, 2014.

[17] M. Mohri, A. Rostamizadeh, and A. Talwalkar, Foundations of machine learning. MIT Press, 2018.

[18] P. Ongsulee, “Artificial intelligence, machine learning and deep learning,” in 2017 15th International Conference on ICT and Knowledge Engineering (ICT&KE). IEEE, 2017, pp.