Mälardalen University Press Licentiate Theses

No. 141

STEREO VISION ALGORITHMS IN RECONFIGURABLE

HARDWARE FOR ROBOTICS APPLICATIONS

Jörgen Lidholm

2011

School of Innovation, Design and Engineering

Copyright © Jörgen Lidholm, 2011

ISBN 978-91-7485-033-8

ISSN 1651-9256

Printed by Mälardalen University, Västerås, Sweden

Abstract

This thesis presents image processing solutions for FPGA-based embedded vision systems. Image processing is computationally demanding, but the information that can be extracted from images is very useful and can be used for many tasks, such as mapping and navigation, object detection and recognition, and collision detection.

Image processing, or image analysis, involves reading images from a camera system, improving the image with respect to colour fidelity and white balance, removing distortion, and extracting salient information. These steps are often referred to as low- to medium-level image processing; they involve large amounts of data and fairly simple algorithms that are suitable for parallel processing. Medium- to high-level processing involves a reduced amount of data and more complex algorithms. Object recognition, which involves matching image features to information stored in a database, is of higher complexity.

A vision system can be used in anything from a car to industrial processes to mobile robots playing soccer or assisting people in their homes. A vision system often works with video streams that are processed to find pieces that can be handled in an industrial process, to detect obstacles that may be potential hazards in traffic, or to find and track landmarks in the environment that can be used to build a map and navigate from it. This involves a large number of calculations, which is a problem: even though modern computers are fast, they may still not be able to execute the desired algorithms at the required frequency. Even when computers are fast enough, they are bulky and require a lot of power, which makes them unsuitable for small mobile robots.

In this thesis I present the image processing sequence to give an understanding of the complexity of the processes involved, and I discuss some processing platforms suitable for image processing. I also present my work, which focuses on implementing image processing algorithms in reconfigurable hardware suitable for mobile robots with requirements on speed and power consumption.

Swedish Summary

Robots are becoming increasingly common in our society. They range from traditional industrial robots to robots that help us at home with the most tedious chores, robots used to monitor large areas, robots that we keep purely for entertainment, and a whole host of other variants.

A robot is more than both a machine and an intelligent computer; a robot is a robot precisely if it can sense, plan and act. To sense means that the robot uses sensors to detect physical phenomena in its surroundings, which can be anything from measuring temperature or determining the distance to something to recognising a person. To plan means that the robot uses intelligence, based on what it knows about its surroundings from sensor data, to plan how it should act. Finally, to act means that the robot performs a physical action, which may consist of moving, lifting an object of some kind, or following a ball with its eyes (cameras).

A central part of a robot is consequently an intelligent sensor that should preferably be usable for as many purposes as possible, deliver the information the robot wants fast enough for the robot to make a decision in time, and deliver information that the robot has use for. The eyes fulfil an important function for humans: we use them primarily to see what is in our surroundings, but we can also use them to determine distances with sufficient accuracy, and they help us keep our balance. To build an artificial vision system for robots, cameras are needed first of all, but also methods for analysing the images that the cameras capture. Analysing image information requires substantial computational resources, both because the methods normally used are relatively advanced and, above all, because of the large amount of information.

An image contains millions of small colour values, and determining depth in the images additionally requires two images from different perspectives, which doubles the amount of information. For a robot to be able to move reasonably fast, the images must also be updated at a rate of tens of images per second.
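As a rough illustration of the data volumes involved in stereo image processing, the sketch below estimates the raw data rate of a two-camera system. The function name, the resolution, the bytes per pixel and the frame rate are illustrative assumptions, not figures taken from the thesis.

```python
def stereo_data_rate(width, height, bytes_per_pixel, fps, cameras=2):
    """Return the raw data rate in bytes per second for `cameras` image streams."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * cameras * fps


# Assumed example values (hypothetical, not from the thesis):
# a 1280x1024 sensor, 3 bytes per pixel (RGB), 30 frames per second.
rate = stereo_data_rate(width=1280, height=1024, bytes_per_pixel=3, fps=30)
print(f"{rate / 1e6:.1f} MB/s")
```

With these assumed values, the two cameras together produce roughly 236 MB of raw pixel data every second, before any processing has taken place.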

An ordinary modern personal computer contains a general-purpose processor that runs at a high clock frequency. Despite the high clock frequency, it takes time to perform calculations on large amounts of data. A so-called FPGA is a device that can be programmed to solve a number of specific tasks and only those. The FPGA makes it possible to run very large numbers of calculations in parallel and can therefore yield an enormous improvement in computational capacity compared with a standard computer, and with lower energy consumption as well.

In this thesis I give an overview of what a robot is and which different kinds of robots exist. The focus of the thesis, however, is on image analysis for robot applications in FPGAs specifically. Among other things, I discuss problems and solutions for image analysis in FPGAs and also cover related research areas that can be applied to this problem.

Acknowledgements

I would like to take the opportunity to mention the people who have supported my work at Mälardalen University in one way or another. I have really enjoyed the years, which have passed by too fast, and I would not hesitate to make the same journey again.

First, I would like to thank those who have provided the financial support that enabled this work: the Knowledge Foundation, Robotdalen and Xilinx (which has supported the work by providing tools and hardware).

My main supervisor, Lars Asplund, has not only been my supervisor, he has also been a never-ending source of ideas. It has not always been easy to keep up, but it has always been great fun! I also consider Lars a dear friend, and I have enjoyed the many discussions we have shared over the years. A great thank you also goes to my two assistant supervisors. Mikael Ekström became more and more involved and was of great help while I was writing my final paper. Giacomo Spampinato brought fresh research thinking into the group, and I hold you to your word that if I need help, your Sicilian family can help.

During the course of my studies, at both graduate and undergraduate level, I have had the pleasure of meeting many great friends. I would like to thank some of you by sending you an extra large hug. Thank you, Andreas Hjertström, for all the interesting talks and for being a great friend since we started the computer engineering program in 2001. Fredrik Ekstrand, my comrade in arms, thank you for all the great talks on both private and professional matters. Hüseyin Aysan, the man of strong prepositions and probably the most curious person I’ve ever met, I’m looking forward to riding Siljan runt with you! Carl Ahlberg, a good friend and an inspiration when it comes to enjoying life, hopefully I can convince you to ride Finnmarskturen or Cykelvasan, or both, with me. Peter Wallin, a great friend since we started the computer engineering program in 2001.

I would also like to thank all the people who took part in coffee break discussions; you are many, and you have all helped make my time at the university a pleasure.

A big thank you to my parents, Sonja and Tommy, for supporting me during my life and pushing me to follow my heart, you mean everything to me. To my brother Johan, thank you for being an inspiration in life, for the times we have shared discussing things and life over a whiskey by the whiskey-holk or elsewhere. Thank you my sister Linda for being supportive and a great sister. Thank you Sandra and Robert for believing in me and giving me the privilege to become “godfather” to your three lovely boys, Albin, Hampus and Viktor.

Teuvo and Raili, thank you for all your support.

And last but not least, thank you Pia for always supporting me, you are the love of my life!

Thank you all!!

Jörgen Lidholm

Göteborg, September 2011

List of Publications

Papers Included in the Licentiate Thesis

Paper A: Two Camera System for Robot Applications; Navigation,

Jörgen Lidholm, Fredrik Ekstrand and Lars Asplund, In Proceedings of 13th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA’08), IEEE Industrial Electronics Society, Hamburg, Germany, (2008).

Paper B: Validation of Stereo Matching for Robot Navigation,

Jörgen Lidholm, Giacomo Spampinato, Lars Asplund, 14th IEEE International Conference on Emerging Technology and Factory Automation, Palma de Mallorca, Spain, September, 2009.

Paper C: Hardware support for image processing in robot applications,

Jörgen Lidholm, Lars Asplund, Mikael Ekström and Giacomo Spampinato, In submission.

Additional Papers by the Author

ROBOTICS FOR SMEs - 3D VISION IN REAL-TIME FOR NAVIGATION AND OBJECT RECOGNITION,

Fredrik Ekstrand, Jörgen Lidholm, Lars Asplund, 39th International Symposium on Robotics (ISR 2008), Seoul, Korea.

Stereo Vision Based Navigation for Automated Vehicles in Industry,

Giacomo Spampinato, Jörgen Lidholm, Fredrik Ekstrand, Lars Asplund, 14th IEEE International Conference on Emerging Technology and Factory Automation, Palma de Mallorca, Spain, September, 2009.

Navigation in a Box: Stereovision for Industry Automation,

Giacomo Spampinato, Jörgen Lidholm, Fredrik Ekstrand, Carl Ahlberg, Lars Asplund, Mikael Ekström, Advances in Theory and Applications of Stereo Vision, Book edited by: Asim Bhatti, ISBN: 978-953-307-516-7, Publisher: InTech, Publishing date: January 2011.

An Embedded Stereo Vision Module for 6D pose estimation and mapping,

Giacomo Spampinato, Jörgen Lidholm, Carl Ahlberg, Fredrik Ekstrand, Mikael Ekström, Lars Asplund, 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, In submission.

Additional Book Chapters by the Author

Navigation in a Box: Stereovision for Industry Automation,

Giacomo Spampinato, Jörgen Lidholm, Fredrik Ekstrand, Carl Ahlberg, Lars Asplund and Mikael Ekström (2011). Advances in Theory and Applications of Stereo Vision, Asim Bhatti (Ed.), ISBN: 978-953-307-516-7, InTech, Available from: http://www.intechopen.com/articles/show/title/navigation-in-a-box-stereovision-for-industry-automation

Contents

I

Thesis

2

1 Introduction 5

1.1 Thesis outline . . . 6

2 Background and Motivation 7 2.1 The Robot: Sense, plan and act . . . 7

2.1.1 Types of robots . . . 9

2.1.2 Robotics tasks . . . 10

2.1.3 Vision in robotics application . . . 15

2.1.4 Robotics for SME. . . 16

2.2 Vision and image processing . . . 17

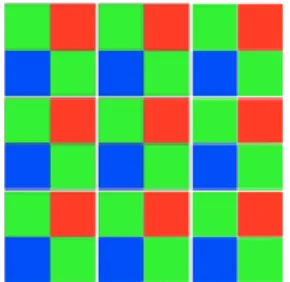

2.2.1 A camera system/Image sensor . . . 18

2.2.2 Colour domains . . . 20

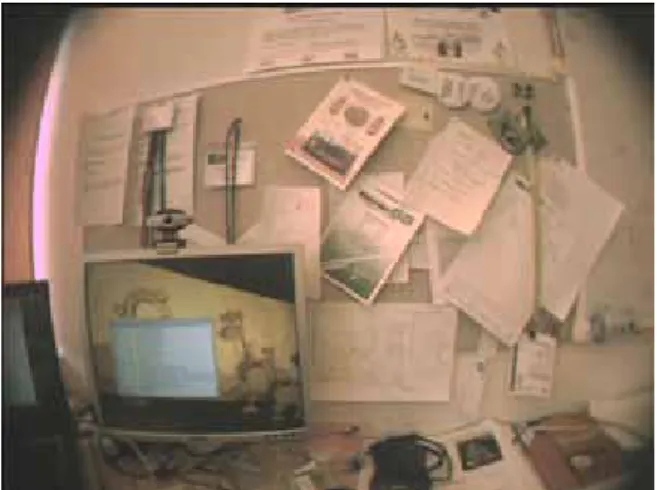

2.2.3 Distortion and correction . . . 21

2.2.4 Image features . . . 23

2.2.5 Stereo vision . . . 24

2.3 Hardware support for heavy computation tasks . . . 26

2.3.1 Digital Signal Processors . . . 27

2.3.2 Vector processors . . . 27

2.3.3 Systolic array processors . . . 28

2.3.4 Asymmetric multicore processors . . . 28

2.3.5 General Purpose GPUs (GPGPU) . . . 29

2.3.6 Field Programmable Gate Arrays . . . 29

2.4 FPGA for (stereo-) vision applications . . . 30

2.4.1 Designing FPGA architectures (What they need) . . . 31

2.5 Embedded FPGA based vision system . . . 32 xiii

2.6 Component based software development for hybrid FPGA systems

2.7 Simultaneous Localization And Mapping (SLAM)

2.8 Summary

3 Summary of papers and their contribution

3.1 Paper A

3.1.1 Contribution

3.2 Paper B

3.2.1 Contribution

3.3 Paper C

3.3.1 Contribution

4 Conclusions and Future work

4.1 Conclusions

4.2 Future work

Bibliography

II Included Papers

5 Paper A: Two Camera System for Robot Applications; Navigation

5.1 Introduction

5.2 Related work

5.3 Experimental platform

5.3.1 Image sensors

5.3.2 FPGA board

5.3.3 Carrier board

5.4 Feature detectors

5.4.1 Stephen and Harris combined corner and edge detector

5.4.2 FPGA implementation of Harris corner detector

5.5 Interest point location

5.5.1 Image sequence feature tracking

5.5.2 Spurious matching and landmark evaluation

5.5.3 Experiments

5.6 Results

5.7 Future work

Bibliography

6 Paper B: Validation of Stereo Matching for Robot Navigation

6.1 Introduction

6.2 Theory

6.2.1 Definitions

6.2.2 Navigation process overview

6.2.3 Stereo triangulation

6.2.4 Back projection of landmarks onto the image sensor

6.2.5 Planar egomotion estimation

6.3 Experiments

6.3.1 Experimental Platform

6.3.2 Stereo Camera Calibration and rectification

6.3.3 Experimental setup

6.4 Results

6.4.1 Stereo matching

6.4.2 Landmark location

6.4.3 Egomotion estimation

6.5 Conclusions

6.6 Future work

Bibliography

7 Paper C: Hardware support for image processing in robot applications

7.1 Introduction

7.2 Image Processing; Hardware and Software support

7.2.1 The image processing sequence

7.2.2 Hardware for Image Processing

7.2.3 Image processing in FPGAs

7.3 Developing Software in FPGA

7.4 Lens distortion correction

7.4.1 Method 1: For cases when the tangential distortion is negligible

7.4.2 Method 2: For cases when the tangential distortion must corrected

7.5 Implementation details

7.5.1 Proposed hardware design for component based embedded image processing

7.5.2 Fixed point arithmetic

7.5.4 Method 1: Implementation details

7.5.5 Method 2: Implementation details

7.6 Results

7.6.1 Execution time

7.6.2 Resource requirements

7.6.3 Precision

7.7 Discussion

7.8 Future work

Bibliography

Acronyms

ADC Analog-to-Digital Converter AGV Autonomous Guided Vehicle AUV Autonomous Underwater Vehicle ASIC Application Specific Integrated Circuit CBSE Component Based Software Engineering CCD Charge Coupled DeviceCMOS Complementary Metal-Oxide Semiconductor CPU Central Processing Unit

DSP Digital Signal Processor

FPGA Field Programmable Gate Array GPS Global Positioning System GPU Graphics Processing Unit HDL Hardware Description Language IC Integrated Circuit

ROV Remotely Operated Vehicle SIMD Single Instruction Multiple Data MIMD Multiple Instructions Multiple Data

SLAM Simultaneous Localisation And Mapping

SME Small and Medium Enterprises

SoC System-on-Chip

UAV Unmanned Aerial Vehicle

VLIW Very Long Instruction Words

I

Thesis

Chapter 1

Introduction

In industry, robots have existed for decades. The main purpose of an industrial robot is to carry out heavy, monotonous tasks that often require high precision, e.g. car manufacturing and machine tending. However, to maintain high precision over time and to be able to lift heavy objects, the robot is often bolted to the ground. For a machine-tending robot, this also means that the robot occupies a machine.

A mobile industrial robot that can be utilised when needed, or that can tend several machines at the same time, would enable small and medium-sized enterprises to invest in a robot. The robot can easily be moved to free a machine, enabling an operator to run a short series manually. Making an industrial mobile robot flexible enough to meet the requirements of small and medium enterprises requires a navigation system that lets the robot know its location and move between different machines, or fetch material from a warehouse when needed. A navigation system should be flexible and easy to operate for a small company, making it easy to change the route the robot should follow. One possibility is to let a person guide the mobile platform using a well-known interface such as a joystick. Essentially two kinds of sensors would provide sufficient precision and flexibility for such a system, namely laser range finders and cameras. A stereo camera provides higher flexibility and could still be less expensive than a laser range finder. An image must be corrected for distortion, and salient information must be extracted, which can then be used to create landmarks from multiple camera views. Image analysis requires calculations in several steps on large amounts of data and is the foundation for advanced robot tasks, e.g. navigation. Computational performance is still a problem in image processing, mainly due to the large amount of data.

Robots are not only for industrial applications; there are several areas in our everyday life where a robot can assist. For many applications the robot needs information on its location and also knowledge of objects in the environment, which can be either obstacles or objects that are supposed to be handled in some way. In this thesis I will describe the task at hand when building a system utilising a Field Programmable Gate Array (FPGA), what is required and what limitations there are in such a system. In parallel with this work, two stereo camera systems have been designed and used as validation platforms.

1.1 Thesis outline

This thesis is divided into two parts:

Part I presents the background and motivation for the thesis and puts my papers into context. Chapter 2 presents robots, image processing, hardware for image processing and implementation considerations for FPGAs. Chapter 3 presents my papers and my contributions to each paper. Chapter 4 gives a summary and outlines relevant future work.

Part II presents the technical contribution of the thesis in the form of three papers.

Chapter 2

Background and Motivation

This chapter gives an overview of the research area and a motivation for my work. I will start with a presentation of my view of what a robot is, continue with an introduction to the processes involved in image analysis, and finally present hardware support for image processing, with focus on the FPGA and the development process involved. Some ideas that emerged during the course of my research, such as the Component Based Software Engineering (CBSE) paradigm as an influence on FPGA architecture development, will also be discussed.

2.1 The Robot: Sense, plan and act

A robot is, in my opinion, defined by three abilities: sense, plan and act. A robot collects information about the environment and uses the information to plan and perform a physical act. The abilities to sense and plan are what differentiate a robot from a mechanical machine. In this section I will discuss the three parts and draw some parallels to humans.

Sense

A sensor is a device that converts a physical phenomenon to an electric signal which, when converted to a digital value, can be used in a software program. A wide range of sensors exists for most physical properties and, in different constructions, they can sense anything from the rotation of a wheel to the distance to a wall.

For humans, vision is very important. It helps us to see objects in our vicinity, and we are able to identify or at least categorise them. With our eyes we can estimate depth and distance between objects to acquire a good knowledge of the environment, comparable to a map. Vision also assists other abilities such as balance.

Because vision is so important for humans, it can also be a powerful source of information for a robot. Modern cameras are fairly low cost but “dumb”; a camera must be complemented with an image analysis system. An image consists of a dense colour matrix, from which salient regions are extracted with a feature detector that can detect corners, edges or blobs, or that matches regions against custom templates. Once the features have been acquired, a human vision system can be mimicked to some extent.
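As a rough illustration of what a simple feature detector does, the following sketch computes a Sobel edge strength for each pixel of a small greyscale image and keeps the pixels above a threshold. It is a software sketch only, not the hardware implementation discussed in this thesis; the function names, the greyscale simplification, the test image and the threshold are all assumptions made for the example.

```python
# Minimal Sobel edge-strength sketch on a greyscale image stored as a list of rows.
# Illustrative only: names, test image and threshold are assumptions for this example.

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(image):
    """Return |Gx| + |Gy| for every interior pixel; border pixels are left at 0."""
    height, width = len(image), len(image[0])
    result = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            # Accumulate the two 3x3 kernel responses over the pixel neighbourhood.
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            result[y][x] = abs(gx) + abs(gy)
    return result


def edge_pixels(image, threshold):
    """Return (x, y) coordinates whose edge strength exceeds the threshold."""
    magnitude = sobel_magnitude(image)
    return [(x, y)
            for y, row in enumerate(magnitude)
            for x, value in enumerate(row)
            if value > threshold]


# A 5x5 test image: dark on the left, bright on the right (one vertical edge).
test_image = [[0, 0, 0, 100, 100] for _ in range(5)]
edges = edge_pixels(test_image, threshold=200)
```

Every output pixel depends only on a fixed 3x3 neighbourhood, which is the kind of simple, regular, data-heavy operation that lends itself to parallel processing.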

There is a good reason why the visual cortex takes up such a large part of the human brain: an enormous amount of information has to be handled for us to understand what we see, and humans gain a complex understanding from the rays of light projected on the retina of our eyes. A human can easily extract interesting information from a scene, and our understanding is not disrupted by artefacts such as shading, texture, occlusion, light temperature and colour. In computer vision applications, artificial lighting is often used to remove unwanted shadows.

A large amount of research has been performed in image processing and related topics over many years, and the field has matured a great deal; however, computational performance is still an obstacle.

Plan

When a robot is able to understand what is in the environment and its own location, both in relation to interesting objects and on a higher level, it can start to plan an act. Planning is often implemented with artificial intelligence (AI) algorithms. In the AI field there are different types of algorithms that try to mimic intelligence through different concepts, e.g. genetic algorithms, reinforcement learning, neural networks, fuzzy logic and case based reasoning.

A robot should be able to handle a set of cases without knowing exactly what will happen. For example, an automatic door is not a robot: it is too simple and its behaviour is predictable. AI tries to mimic the human way of thinking, which requires abstraction of information. For instance, a location could be described as “in front of the refrigerator in the kitchen” as opposed to (32.3, 123.4, 0.0). In other words, my understanding of my location is only relevant with respect to what I want to accomplish.
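The abstraction from a metric position to a task-relevant description can be sketched as a lookup from coordinates to named regions. The region names, their rectangular bounds and the function name below are hypothetical and chosen only so that the coordinate from the text falls inside the first region; a real system would derive such regions from its map.

```python
from typing import NamedTuple, Optional


class Region(NamedTuple):
    """An axis-aligned rectangle in the map frame with a human-readable name."""
    name: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float


# Hypothetical map annotation; the bounds are invented for this example.
REGIONS = [
    Region("in front of the refrigerator in the kitchen", 30.0, 35.0, 120.0, 125.0),
    Region("in the hallway", 0.0, 30.0, 100.0, 130.0),
]


def describe_location(x: float, y: float) -> Optional[str]:
    """Return the name of the first region containing (x, y), or None."""
    for region in REGIONS:
        if region.x_min <= x <= region.x_max and region.y_min <= y <= region.y_max:
            return region.name
    return None


description = describe_location(32.3, 123.4)
```

A planner can then reason about a handful of symbolic places rather than a continuous coordinate space.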

Act

A robot acts through a physical action, which can be anything from moving to a different location to pushing a button or grabbing an object. To do this, motors, pneumatics, hydraulics, gearboxes and other mechanical constructions are used. These will not be discussed any further in this thesis, however interesting they may be.

2.1.1 Types of robots

Designing a robot is a matter of finding a good design for what the robot is supposed to do. For simpler tasks a simple machine is all that is required, but for some tasks intelligence and advanced mechanical functionality are required. Robots that will help humans in their daily life at home should probably mimic some properties of humans, since the environment created by humans is also created for humans. Other tasks may not be so simple to carry out for robots inspired by humans, and it is often a good solution to take inspiration from animals, be it a snake, spider, dolphin or something else.

Robots exist in many different shapes and designs. Robots in production industry from the middle of the twentieth century looked more or less the same as they do today, although dexterity, precision, speed and reliability have increased. The industrial robot is the most common kind and is meant to perform tasks that humans used to do in production industry. Some robots imitate snakes, some are built to climb walls or trees, and others to travel along an electric power line. There are also robots with wheels, one or more legs, caterpillar tracks, arms or a head. A wheeled robot may have two, three, four or even more wheels; some have wheels based on a design by the inventor Bengt Ilon, enabling omnidirectional motion. Robots with legs can be inspired by humans, having two legs, while others have six or even eight legs, inspired by insects. There are also autonomous robots designed to fly, Unmanned Aerial Vehicles (UAVs), which come in different forms: standard model planes, helicopters and quadrocopters. Of course there are also robots designed for underwater mobility, Autonomous Underwater Vehicles (AUVs), some of which look like torpedoes.

A robot can be fully autonomous, semi autonomous or teleoperated (in which case it probably should not be called a robot any more).

Mobile robots are powered by batteries, which are getting increasingly powerful but still pose a limitation. Power constraints call for power efficient computing devices and efficient algorithms that are still advanced enough to accomplish a certain task.

Mobile robots interact with the environment and thus must also be able to acquire information from it. In the environment there can be obstacles that need to be avoided and interesting objects that the robot should interact with, e.g. a human, a machine or a can of beer. This requires sensory equipment, and the more advanced the sensors are, the more information there is to process, further increasing the load on computing devices and the battery consumption.

Early mobile robots were sometimes designed with a traditional desktop computer, and modern robots often use laptops (with their own power source) or embedded computer systems.

On the market today there is a large number of power efficient devices, driven by the mobile market, where performance is becoming increasingly important with smart phones and tablets. A cell phone today can incorporate a System-on-Chip (SoC) with a multi-core processor, Digital Signal Processors (DSPs) and even a Graphics Processing Unit (GPU).

2.1.2 Robotics tasks

Of course, different kinds of robots are meant to execute different tasks, and the design is chosen to best suit the task at hand. For example, there are robots for transportation of beds, material and documents in hospitals, and robots for military purposes such as precision bombing, surveillance and exploration, both airborne and ground vehicles. Civilian robots exist for search and rescue, surveillance, pool cleaning, lawn mowing, vacuum cleaning, planetary exploration (Mars rovers), various transportation operations, teleoperated surgery and also “semi-autonomous” and fully autonomous cars (still at research stage). With the vast amount of research in robotics and related areas, robots are becoming increasingly intelligent and humanoids more expressive through speech synthesis and human-like facial expressions, which makes for a broad application area and a large variety of physical abilities.

Robotics in medical applications

Teleoperated surgical robot systems are clever for many reasons. They allow skilled surgeons to perform an operation on a patient at a remote location: a Swedish surgeon could perform surgery on a patient in America without having to travel. Such a robotic system also increases precision, by allowing the surgeon to set how much an instrument should move for a given hand motion.

Figure 2.1: Teleoperated robot Giraff. (Photo: Giraff Technologies AB/Robotdalen)

Another type of robot is for elderly care: a teleoperated robot with mobility, a video camera and a screen, see figure 2.1. The robot is operated by a care giver or a relative and allows the operator to virtually "visit" a care recipient without physically going there. The robot enables the operator to virtually move around in the house or apartment and see what is going on. A robot like this requires acceptance from both care giver and care recipient, but will probably in time be semi-autonomous to simplify the task of moving between different rooms. The increased autonomy will require more advanced sensory equipment and software that allows navigation, obstacle detection and avoidance. This is important, especially in a dynamic environment such as a home, where furniture is moved around and people are moving as well.

Persons with physical handicaps are often bound to a wheelchair. An exoskeleton could be an alternative to a wheelchair and could also work as a rehabilitation tool by gradually decreasing the amplification. An exoskeleton enhances a motion using actuators of varying kinds; this can also be utilised to allow a person to lift heavier things than he or she would normally be capable of lifting. Modern technology has reached a level where it is actually feasible to construct an exoskeleton that is light, durable and powerful enough.

Robots for personal use

A robot is meant to simplify our daily life and, to some extent, to bring joy. Vacuum cleaning and lawn mowing robots are popular, probably due to a relatively affordable price, but also because those tasks are quite tedious. These robots are simple but perfect examples of use cases for robots: the tasks they perform are non-critical and we (at least I) are just happy if we don’t have to do them ourselves; see figure 2.2 for examples.

(a) A vacuum cleaning robot (courtesy of iRobot)

(b) A lawn mowing robot (courtesy of Husqvarna)

Figure 2.2: Robots for your home.

Semi autonomous cars

The human factor is almost always the main cause of car accidents. This has been recognised by car manufacturers, who introduce more technology to help the driver notice potential hazards and changes of speed limits, and also to act in critical situations, e.g. emergency braking. Comfort systems are also available, e.g. adaptive speed control. Sensors used today are based on both vision and radar technologies.

There are also systems in active development that are more focused on the driver's attention, e.g. detecting drowsiness of the driver.

Robots in production industry

The first robots were made for industrial production, where the robot is a solution for improving the quality of production and lowering production cost through increased production speed. Industrial robots are extremely robust and built for constant operation while maintaining high precision. Industrial robots exist in many different designs to meet different demands, see figure 2.3.

(a) Robots for spot welding

(b) High speed robot for pick and place operations

Figure 2.3: Industrial robots exist in a wide range of configurations. (Photos: Courtesy of ABB)

UAV

Unmanned aerial vehicles have been of interest mainly in military applications, where a UAV allows close and relatively cost-efficient surveillance missions without putting human lives in harm's way. Figure 2.4 shows two examples, but there are other kinds and different applications.

(a) The Predator UAV, (U.S. Air Force photo/Tech. Sgt. Erik Gudmundson)

(b) The Reaper UAV, (U.S. Air Force photo/Tech. Sgt. Erik Gudmundson)

AUV

The most common type of underwater vehicle is the Remotely Operated Vehicle (ROV). ROVs are often used in deep water exploration and have been used for locating drowned persons after boat accidents. The sensory equipment is mainly based on sonar technology. Complementary vision systems allow an operator to see what exists in the near vicinity of the ROV.

An ROV can measure its own location using sonars that sense distinctive formations on the sea floor. Together with surface reference localisation, using a support ship on the surface as reference, maps can be created. The step from an ROV to an AUV is not far; what is needed is intelligence. An AUV can be utilised for surveillance of off-shore installations, e.g. oil rigs, and for intruder surveillance of sea ports.

Figure 2.5: The autonomous underwater vehicle, designed by students at Mälardalen University.

Rovers

A rover is a vehicle, manned or unmanned, which is used for terrain exploration. The unmanned Mars rover Spirit landed on the planet Mars in the beginning of 2004 to start its geological research, looking for water and analysing minerals. The rover contains several cameras and tools to drill in rocks and collect samples of the surface for analysis using a wide range of instruments. Collected information was sent to Earth with low speed long range radio.

An autonomous rover must plan paths to travel, be rugged and mobile to handle the rough terrain that it will encounter.

Figure 2.6: A concept drawing of Spirit, one of the Mars rovers. Designed to withstand the hostile environment on planet Mars. (Courtesy of NASA)

2.1.3 Vision in robotics application

The old saying: “An image says more than a thousand words”, is very true. A lot of information can be collected from an image, which can be used for obstacle detection, object detection/recognition, self localisation and mapping, face recognition and much more.

Beyond the obvious ability to see things, a camera system on a robot can be utilised for several purposes, e.g. navigation, object recognition and obstacle avoidance. Modern camera and image processing performance allows video rate processing and understanding, which is vital for high speed applications. A robot is only useful if it can perform a given task in reasonable time, and for time critical systems, e.g. obstacle avoidance and vehicle accident avoidance, the image processing must produce a result with sufficient quality and speed so that a critical situation can be avoided.

Speed is not always the main problem. For battery-powered mobile robots it is vital that the image processing system consumes little power and is lightweight. Consider a robot that harvests trees by climbing them and disassembling each tree while it is still standing. Carrying a heavy computer for image processing is not an option.

There is a need for power-efficient and compact vision systems that can perform advanced image analysis at video rate (30 Hz).
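To make the video-rate requirement concrete, the following minimal sketch computes the time available per frame and the resulting pixel throughput. The 30 Hz rate is taken from the text; the 640x480 resolution and the function name `frame_budget` are assumptions chosen purely for illustration and do not describe the system used in this thesis.

```python
def frame_budget(frame_rate_hz, width, height):
    """Return (seconds available per frame, pixels to process per second)."""
    if frame_rate_hz <= 0:
        raise ValueError("frame rate must be positive")
    seconds_per_frame = 1.0 / frame_rate_hz
    pixels_per_second = width * height * frame_rate_hz
    return seconds_per_frame, pixels_per_second


# 30 Hz is the video rate named in the text; 640x480 is an assumed resolution.
budget_s, pixel_rate = frame_budget(30, 640, 480)
print(f"{budget_s * 1e3:.1f} ms per frame, {pixel_rate / 1e6:.2f} Mpixel/s per camera")
```

Under these assumptions each frame must be fully processed in roughly 33 ms, and a stereo pair doubles the pixel rate since two cameras deliver images simultaneously.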
