Neural Network with Data Augmentation

Mehenika Akter1 [0000-0003-4354-5994], Mohammad Shahadat Hossain1* [0000-0002-7473-8185], Tawsin Uddin Ahmed2 [0000-0002-2684-5955], and Karl Andersson3 [0000-0003-0244-3561]

1 Department of Computer Science and Engineering, University of Chittagong, Chittagong, Bangladesh

mhnk.a.mitu@gmail.com, hossain_ms@cu.ac.bd, tawsin.uddin@gmail.com

2 Department of Computer Science, Electrical and Space Engineering, Luleå University of Technology, Skellefteå, Sweden

karl.andersson@ltu.se

Abstract. Mosquitoes are responsible for the largest number of deaths every year throughout the world, and Bangladesh suffers heavily from this problem. Dengue, malaria, chikungunya, zika, yellow fever and other diseases are caused by the bites of dangerous mosquitoes. The three main types of mosquitoes found in Bangladesh are aedes, anopheles and culex. Identifying them is crucial for taking the necessary steps to eliminate them in an area. Hence, a convolutional neural network (CNN) model is developed to classify mosquitoes from their images. We prepared a local dataset of 442 images collected from various sources. Running the proposed CNN model on the collected dataset achieves an accuracy of 70%. However, after augmenting this dataset to 3,600 images, the accuracy increases to 93%. We also compare the CNN method with VGG-16, Random Forest, XGBoost and SVM. Our proposed CNN method outperforms these methods in terms of mosquito classification accuracy. Thus, this research forms an example of humanitarian technology, where data science can be used to support mosquito classification, enabling the treatment of various mosquito-borne diseases.

Keywords: Mosquito, Classification, Dengue, Malaria, Convolutional Neural Network, Data Augmentation

1 Introduction

Mosquitoes may seem to be tiny creatures, but they are among the deadliest animals in the world. They bring significant harm to humans since they are the main carriers of various transmissible diseases such as dengue, malaria, chikungunya, zika and yellow fever. Their ability to carry and spread diseases to humans and animals causes millions of deaths each year. As stated by the World Health Organization, the deaths of millions of people every year are caused by mosquito bites [30]. With a case fatality of almost 2.5%, almost 500,000 people with severe dengue need to be hospitalized each year [31]. Malaria is responsible for more than three hundred million acute illnesses and kills at least one million people every year. There are more than 3,000 species of mosquitoes, but the most dangerous ones are aedes, anopheles and culex: aedes causes dengue, yellow fever and chikungunya, anopheles causes malaria, and culex causes zika, West Nile virus and others.

Bangladesh, a densely populated country with a very low average income, is one of the unhealthiest places to live in. Every year hundreds of people lose their lives to mosquito bites and thousands get sick. They are mainly affected by dengue, malaria and chikungunya. In recent years the problem has become acute. In 2019, at least 18 people died of dengue and 16,223 were infected in Bangladesh by August [7]. The number of malaria cases is also shocking: approximately 150,000-250,000 malaria cases are found in this country every year [24].

The goal of this research is to develop a model that is capable of detecting three different classes of mosquitoes (aedes, anopheles and culex) from a given input image. The goal is accomplished by training a model on our dataset using machine learning techniques. We then try to improve the accuracy compared to other systems and to maintain roughly equal recognition rates for the three classes.

The background and some related works on mosquito classification are presented in Sect. 2. The methodology of this research is described in Sect. 3. Sect. 4 gives an overview of the dataset constructed for this research along with the data augmentation. Sect. 5 describes the implementation of the presented system. Sect. 6 examines the results and, finally, Sect. 7 concludes the paper with a brief description of future work.

2 Related Work

There has been some work done on vision-based mosquito classification. The most recent work on mosquito classification was done by Okayasu et al. [29]. They constructed their own dataset which consisted of 14,400 images of 3 types of mosquitoes. They used three types of deep classification methods and showed a comparison of the accuracy.

Motta et al. [27] presented a classification of adult mosquitoes. They trained CNNs to perform morphological classification of the mosquitoes on a dataset of 4,056 images. Using three neural network models (LeNet, AlexNet and GoogleNet), they obtained the best result (76%) with GoogleNet.

In 2018, Li-Pang Huang et al. [16] classified mosquitoes using edge computing along with deep learning. They achieved a validation accuracy of 98% and a test accuracy of 90.5%.

Fuchida et al. [12] presented the design and experimental validation of an automated module for vision-based classification of mosquitoes. The module could distinguish mosquitoes from other bugs by extracting morphological features, followed by classification based on a support vector machine (SVM). With a maximum recall of 98% across different classification methods, they demonstrated the efficiency and validity of their proposed method. However, the classification of mosquito species was not considered there.

Ortiz et al. [32] presented a work based on a convolutional neural network to classify mosquito larvae. They used 1,118 images of the 8th segment of larvae. Using a pre-trained model (VGG16), they achieved an accuracy of 97%.

MAM Fuad et al. [11] classified aedes aegypti larvae and float valve using transfer learning with Inception-V3. They performed the experiment on 534 images and used three different learning rates, achieving an average accuracy of about 99%.

Minakshi et al. [25] classified seven species with sixty mosquitoes by using classifiers and indicating pixel merits. They implemented the random forest algorithm and achieved a validation accuracy of 83.3%. But as their dataset was very small, with only sixty images, the classification was not very reliable.

Jeffrey Glick and Katarina Miller [13] classified insects using Hierarchical Deep CNNs (HD-CNNs). They worked on 217,657 images of different insects. They implemented their method on 277 distinctive classes.

Several studies on mosquito classification using mosquito wingbeats have been carried out too. Fanioudakis et al. [10] classified six species of mosquitoes using recordings of their wingbeats. Their dataset consisted of 279,566 mosquito recordings, and they achieved a classification accuracy of 96% using top-tier deep learning approaches. Kiskin et al. [22] worked on wavelet transformations of audio recordings of mosquitoes in 2017. They built a CNN model to detect the presence of mosquitoes from their wingbeat recordings.

Kyukwang Kim et al. [20] built an automatic mosquito sensing and control system using deep learning. They reported an accuracy of 84% for a Fully Convolutional Network (FCN) and regression built on neural networks.

The key difference between the existing systems and ours is that they did not use a custom CNN model for classifying the mosquitoes, whereas our system does. CNN models are known to perform better than other approaches in classifying images as well as in image retrieval [28]. Therefore, a custom CNN model has been used, along with pre-trained CNN models like VGG-16 to compare their performance. As far as limitations are concerned, even though many of the existing works used datasets with a large number of images, their results are not that strong relative to the dataset size. In contrast, we tried to maintain a fair accuracy rate even with a dataset containing a small number of images.

Few mosquito images can be found online, which restricts further research on their classification. There is also no standard dataset for this purpose, so a dataset had to be built to determine the species of a mosquito. We made our own dataset by gathering images of these mosquitoes and developed the classification method in this research.

3 Methodology

The system in this research was developed using a Convolutional Neural Network (CNN) with data augmentation. Fig. 1 illustrates the flow chart of the system. First, the model takes the images from the dataset and preprocesses them. After that, the images are augmented by using some augmentation functions. Finally, the augmented dataset is fed into the CNN model so that it can predict the class.

Fig. 1. System Flow Chart

The preprocessing of the images is simple. First, the mosquito in each image is detected using OpenCV. Then the body of the mosquito is cropped so that unnecessary parts of the background are removed from the image. After that, the images are converted to gray-scale so that the model can learn from them more easily. Next, the images are normalized to a fixed range so that they can be recognized better. The feature extraction is conducted by the CNN itself. Images could also be detected by using Haar features [8].
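The preprocessing steps above can be sketched in a few lines. The following is a minimal illustration, not the authors' code: it replaces the OpenCV-based detection with a simple brightness threshold (an assumption that only suits light, uniform backgrounds), and the function names, the threshold of 0.9 and the luminance weights are illustrative choices rather than details from the paper.

```python
import numpy as np

def crop_to_foreground(image, threshold=0.9):
    """Crop an RGB image (H, W, 3, values in [0, 1]) to the bounding box of
    pixels darker than `threshold`. Stand-in for the OpenCV detection step."""
    mask = image.mean(axis=2) < threshold
    if not mask.any():
        return image  # nothing detected, keep the full frame
    rows = np.where(mask.any(axis=1))[0]
    cols = np.where(mask.any(axis=0))[0]
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def to_grayscale(image):
    """Weighted sum of the R, G, B channels (common luminance weights)."""
    return image @ np.array([0.299, 0.587, 0.114])

def normalize(gray):
    """Min-max scale the pixel values to the range [0, 1]."""
    lo, hi = gray.min(), gray.max()
    if hi == lo:
        return np.zeros_like(gray)
    return (gray - lo) / (hi - lo)

def preprocess(image):
    return normalize(to_grayscale(crop_to_foreground(image)))

# Toy image: white background with a small dark "body" in the middle.
toy = np.ones((6, 8, 3))
toy[2:4, 3:6] = [0.2, 0.1, 0.3]
toy[2, 3] = [0.0, 0.0, 0.0]
processed = preprocess(toy)
print(processed.shape)  # (2, 3)
```

The same `preprocess` function would have to be applied at prediction time so that new images match the training data.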

Using a filter w(m, n), convolution over an image f(m, n) is defined in equation (1):

$$w(m, n) * f(m, n) = \sum_{s=-a}^{a} \sum_{t=-b}^{b} w(s, t)\, f(m - s, n - t) \tag{1}$$
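As a rough illustration of equation (1), the sketch below evaluates the double sum directly with NumPy. It assumes an odd-sized kernel of shape (2a+1, 2b+1) and treats pixels outside the image as zero; both are assumptions made for this example. Deep learning libraries typically implement the closely related cross-correlation with learned kernels, so this is a reading aid for the formula rather than the layer used in the model.

```python
import numpy as np

def convolve2d(w, f):
    """Direct evaluation of equation (1).

    w: kernel of shape (2a+1, 2b+1), indexed so that w[s + a, t + b] = w(s, t)
    f: 2D image; values outside its borders are treated as zero
    Returns an array with the same shape as f.
    """
    a, b = w.shape[0] // 2, w.shape[1] // 2
    rows, cols = f.shape
    out = np.zeros((rows, cols), dtype=float)
    for m in range(rows):
        for n in range(cols):
            total = 0.0
            for s in range(-a, a + 1):
                for t in range(-b, b + 1):
                    i, j = m - s, n - t
                    if 0 <= i < rows and 0 <= j < cols:
                        total += w[s + a, t + b] * f[i, j]
            out[m, n] = total
    return out

# Convolving a unit impulse with a kernel reproduces the kernel itself.
kernel = np.arange(9, dtype=float).reshape(3, 3)
impulse = np.zeros((5, 5))
impulse[2, 2] = 1.0
response = convolve2d(kernel, impulse)
```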

The ReLU activation function is applied in the convolution layers. ReLU ensures the non-linearity of the model [14] and is shown in equation (2):

$$f(m) = \max(0, m) \tag{2}$$

The model has been provided with 442 images. It includes four 2D convolution layers. The 1st convolution layer has 16 filters of size 2*2, the 2nd has 32 filters, the 3rd has 64 filters and the 4th has 128 filters; the kernel size is 2 throughout. A max pooling layer with a 2*2 pool size follows each convolution layer. The ReLU activation function is applied in the hidden (convolution) layers. After every hidden layer there is a dropout layer with a dropout value of 0.5. The dropout layer deactivates 50% of the nodes of every hidden layer at random so that overfitting [35] can be avoided. One Global Average Pooling layer is added after the last hidden layer; it averages each feature map, which makes the result suitable for feeding into our dense output layer. The hyperparameters were tuned by adding layers until the error stopped improving. The dropout value was chosen by experimenting with multiple values: as 0.5 helped to avoid overfitting more than the other values, it was selected as the final value. The parameters have been optimized to improve the result.
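To make the roles of dropout and global average pooling concrete, here is a small NumPy sketch. It uses the common "inverted dropout" formulation, in which the surviving activations are rescaled by 1 / (1 - rate) during training; the paper does not state which variant was used, so this is an assumption, and the 7x7x128 feature-map size is only a placeholder.

```python
import numpy as np

def dropout(activations, rate, rng):
    """Zero each activation with probability `rate` and rescale the rest
    so that the expected value stays unchanged (training-time behaviour)."""
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

def global_average_pooling(feature_maps):
    """Average each channel of an (H, W, C) tensor into one value: (C,)."""
    return feature_maps.mean(axis=(0, 1))

rng = np.random.default_rng(0)
feature_maps = np.ones((7, 7, 128))      # placeholder feature maps
dropped = dropout(feature_maps, rate=0.5, rng=rng)
pooled = global_average_pooling(dropped)
print(pooled.shape)  # (128,)
```

Because the pooled vector has one entry per filter, the dense output layer only needs 128 inputs regardless of the image size.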

Table 1. System Architecture

| Model Contents | Details |
|---|---|
| 1st Convolution Layer 2D | 16 filters of size 2x2, ReLU |
| 1st Max Pooling Layer | Pooling size 2x2 |
| Dropout Layer | Excludes 50% neurons at random |
| 2nd Convolution Layer 2D | 32 filters of size 2x2, ReLU |
| 2nd Max Pooling Layer | Pooling size 2x2 |
| Dropout Layer | Excludes 50% neurons at random |
| 3rd Convolution Layer 2D | 64 filters of size 2x2, ReLU |
| 3rd Max Pooling Layer | Pooling size 2x2 |
| Dropout Layer | Excludes 50% neurons at random |
| 4th Convolution Layer 2D | 128 filters of size 2x2, ReLU |
| 4th Max Pooling Layer | Pooling size 2x2 |
| Dropout Layer | Excludes 50% neurons at random |
| Global Average Pooling Layer | N/A |
| Output Layer | Three nodes for three classes, SoftMax |
| Optimization Function | Adam |
| Callback | ModelCheckpoint |
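The layer stack in Table 1 can be checked with a short shape and parameter count. The sketch below is illustrative only: the 128x128 input size, the single gray-scale channel, stride 1 with no padding for the convolutions, and stride 2 for the pooling layers are assumptions, since the paper does not list them. Dropout layers are omitted because they change neither shapes nor parameter counts.

```python
def summarize(input_size=128, input_channels=1,
              filters=(16, 32, 64, 128), kernel=2, num_classes=3):
    """Return (layer name, output shape, parameter count) rows and the total."""
    rows = []
    size, channels, total = input_size, input_channels, 0
    for index, n_filters in enumerate(filters, start=1):
        # Each filter has kernel*kernel*channels weights plus one bias.
        params = (kernel * kernel * channels + 1) * n_filters
        size = size - kernel + 1           # convolution without padding
        rows.append((f"conv{index}", (size, size, n_filters), params))
        size = size // 2                   # 2x2 max pooling
        rows.append((f"pool{index}", (size, size, n_filters), 0))
        channels = n_filters
        total += params
    rows.append(("global_avg_pool", (channels,), 0))
    dense_params = (channels + 1) * num_classes
    rows.append(("output", (num_classes,), dense_params))
    total += dense_params
    return rows, total

rows, total_params = summarize()
for name, shape, params in rows:
    print(f"{name:16s} {str(shape):18s} {params:6d}")
print("total trainable parameters:", total_params)  # 43699
```

Under these assumptions the network has fewer than 50,000 trainable parameters, which is small compared to pre-trained models such as VGG16.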

Finally, the output layer of the model includes three nodes because there are three classes. SoftMax [37] is used as its activation function; for output scores m, the probability assigned to class j is given in equation (3):

$$\mathrm{Softmax}(m)_j = \frac{e^{m_j}}{\sum_i e^{m_i}} \tag{3}$$

We use Adam [21] as the model optimizer and Categorical Crossentropy as the loss function. ModelCheckpoint is also added as a callback function. The CNN model composed for this study is summarized in Table 1.
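The output activation and the loss can be illustrated with a few lines of NumPy. This is a sketch under stated assumptions: the three scores are made-up values for one image, the class order (aedes, anopheles, culex) is arbitrary, and subtracting the maximum score is a standard numerical-stability trick that does not change the result.

```python
import numpy as np

def softmax(scores):
    """Turn raw output-layer scores into class probabilities."""
    shifted = scores - np.max(scores)   # avoids overflow in exp
    exps = np.exp(shifted)
    return exps / exps.sum()

def categorical_crossentropy(one_hot_target, probabilities, eps=1e-12):
    """Negative log-probability assigned to the true class."""
    return float(-np.sum(one_hot_target * np.log(probabilities + eps)))

scores = np.array([2.0, 1.0, 0.1])      # hypothetical scores for one image
probs = softmax(scores)
target = np.array([1.0, 0.0, 0.0])      # true class is the first one
loss = categorical_crossentropy(target, probs)
print(probs, loss)
```

The loss shrinks toward zero as the probability of the true class approaches one, which is the quantity Adam minimizes during training.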

4 Dataset and Data Augmentation

4.1 Data Acquisition

As there is no standard dataset on mosquitoes available online, we had to construct the dataset from different online sources. We collected mosquito images from websites like Pixabay [3], Getty Images [1], Shutterstock Images [4] and iStock [2]. We collected approximately 40 images from Pixabay, 120 images from Getty Images, 90 images from Shutterstock Images, 60 images from iStock and the rest from other sources. We had a total of 442 images: 188 of aedes species, 130 of anopheles species and 124 of culex species. Fig. 2 displays some sample images from our dataset. We used sources like [23] to label the data correctly.

Fig. 2. Dataset Samples

4.2 Characteristics of Aedes, Anopheles and Culex

Mosquitoes have certain properties by which one can recognize them and differentiate between them. Fig. 3 shows example images of the three mosquito species: aedes, anopheles and culex. The characteristics of aedes, anopheles and culex are given below:

Aedes. Aedes mosquitoes can be distinguished by the black and white markings all over their body. These mosquitoes are active in the daytime and stay in dark corners. It is primarily the female aedes that bites humans and sucks blood so that it can lay eggs. This mosquito is the carrier of infectious diseases like dengue and chikungunya. These diseases are transmitted to humans by the bites of an infected female aedes.

Anopheles. The body of an anopheles mosquito is brown or black. It consists of 3 body parts: the head, abdomen and thorax. The lower body of the vector points upwards while it is resting. It lays eggs after sucking blood. Even though it lives only for some weeks to a month, it is able to produce eggs in that time span. The anopheles mosquito is known throughout the world for carrying one of the most infectious diseases, malaria. It is also responsible for heartworm.

Culex. Culex appears as a black mosquito with white stripes on some body parts. Both male and female culex live on honey and herb liquids. When a female culex is ready to produce eggs, it feeds on the blood of humans, other animals and also birds. The female culex must have blood in order to reproduce. Although the female mosquitoes bite mostly birds, they sometimes attack mammals as well. The culex mosquito is responsible for spreading the Zika virus. It is also found to spread West Nile virus, encephalitis and filariasis.

Fig. 3. Example Images of Aedes, Anopheles and Culex

4.3 Data Augmentation

A large amount of data is needed to get good performance from a convolutional neural network [38] [17] [9]. If the dataset is big, more features can be extracted from the data and matched to unknown data. When there is not sufficient data, data augmentation can be used to improve the performance of the model [6]. Image augmentation generates more images by applying functions such as random rotation, shift, zoom, noise and flips to the existing dataset. We used four types of augmentation functions on the dataset: vertical flip, horizontal flip, random rotation and noise. Scikit-image [40] and the Keras Image Data Generator were used for the augmentation. After augmentation, the dataset consisted of 3,600 images, with 1,200 images for each class. Fig. 4 shows an original image along with the augmented images made from it. Image augmentation is helpful for improving the effectiveness of image classification [33], which is why data augmentation techniques have been selected for this research. We used 70% of the images for training the model and the remaining 30% for validating the system during the implementation.
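The four augmentation functions and the resulting dataset sizes can be sketched with NumPy alone. This is an illustration, not the authors' pipeline: random rotation is simplified to random quarter turns, the noise level of 0.05 is arbitrary, the tiny 4x4 random arrays stand in for real images, and how the authors reached exactly 1,200 images per class (for example, whether originals were kept) is not stated in the paper and is assumed here.

```python
import numpy as np

def augment_class(images, target, rng):
    """Keep the originals and add randomly augmented copies until the
    class holds `target` images."""
    operations = [
        lambda img: img[:, ::-1],                              # horizontal flip
        lambda img: img[::-1, :],                              # vertical flip
        lambda img: np.rot90(img, k=int(rng.integers(1, 4))),  # quarter turns
        lambda img: np.clip(img + rng.normal(0.0, 0.05, img.shape), 0.0, 1.0),
    ]
    augmented = list(images)
    while len(augmented) < target:
        source = images[int(rng.integers(len(images)))]
        operation = operations[int(rng.integers(len(operations)))]
        augmented.append(operation(source))
    return augmented

rng = np.random.default_rng(42)
class_counts = {"aedes": 188, "anopheles": 130, "culex": 124}  # from Sect. 4.1
dataset = {
    name: augment_class([rng.random((4, 4)) for _ in range(count)], 1200, rng)
    for name, count in class_counts.items()
}

total_images = sum(len(images) for images in dataset.values())
n_train = total_images * 70 // 100     # 70% for training
n_val = total_images - n_train         # remaining 30% for validation
print(total_images, n_train, n_val)    # 3600 2520 1080
```

Augmenting every class to the same size also removes the imbalance of the original 188/130/124 split.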

Fig. 4. Data Augmentation

5 Implementation Process

The system's code has been written in the Python programming language using the Spyder IDE [34]. The libraries used in this research are Keras [15], Tensorflow [5], NumPy [41] and Matplotlib [36]. Tensorflow has been selected as the backend of the system, and Keras provides built-in functions such as activation functions, optimizers, layers and the ModelCheckpoint callback. Data augmentation has been performed with the Keras API. NumPy is used for numerical analysis. Sklearn is used for generating the confusion matrix and splitting the train and test data, whereas the Matplotlib library has been used to make the graphical representations, such as the confusion matrix, the loss versus epochs graph and the accuracy versus epochs graph. When an image is provided as input to our system, it is preprocessed in exactly the same way as during training. The system then predicts the class of the preprocessed image.

6 Result and Discussion

The proposed model produces strong classification results in spite of a dataset that does not contain many images. Although there is a small variation in the classification rate for the three classes, it is quite decent. Without data augmentation, our proposed convolutional neural network (CNN) model achieved a validation accuracy of about 70%.

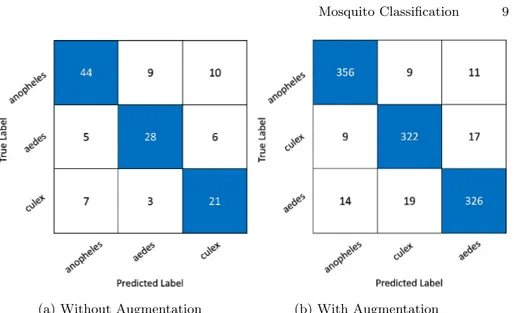

Fig. 5 shows the confusion matrix of the system with and without data augmentation. Here, the x-axis represents the predicted labels and the y-axis represents the true labels. The validation accuracy of each class (before and after augmentation) is shown in Table 2. Before the augmentation, the individual accuracy is 70% for anopheles, 72% for aedes and 68% for culex. After the augmentation, the accuracy for anopheles becomes 94% and the accuracy for both aedes and culex becomes 92%. With the help of data augmentation, our proposed model achieves an overall accuracy of 93%, which is far better than the accuracy without data augmentation.

Fig. 5. Confusion Matrix: (a) Without Augmentation, (b) With Augmentation

Table 2. Accuracy before and after Augmentation

| Class | Before Augmentation | After Augmentation |
|---|---|---|
| Anopheles | 70% | 94% |
| Aedes | 72% | 92% |
| Culex | 68% | 92% |

Fig. 6 displays the training accuracy versus the validation accuracy of the model before augmentation. The training accuracy keeps increasing, but the validation accuracy increases only up to 300 epochs and then keeps decreasing over the last 200 epochs. Fig. 7 displays the training loss versus the validation loss of the model before augmentation. The training loss keeps decreasing until the end, whereas the validation loss decreases up to 100 epochs and keeps increasing after that. The training accuracy versus the validation accuracy of the model after data augmentation is shown in Fig. 8. Both training accuracy and validation accuracy keep increasing gradually until the end.
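The divergence between training and validation loss described above is the situation a checkpoint callback is meant to handle. The sketch below shows the selection logic in plain Python, assuming the checkpoint keeps only the weights from the epoch with the lowest validation loss; the paper does not state which quantity was monitored, and the loss values are invented for illustration.

```python
def best_checkpoint_epoch(validation_losses):
    """Return (epoch, loss) of the lowest validation loss, epochs counted from 1."""
    best_epoch, best_loss = None, float("inf")
    for epoch, loss in enumerate(validation_losses, start=1):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
    return best_epoch, best_loss

# Hypothetical curve: the loss falls at first, then rises as the model overfits.
validation_losses = [1.10, 0.95, 0.88, 0.84, 0.86, 0.91, 0.99]
epoch, loss = best_checkpoint_epoch(validation_losses)
print(epoch, loss)  # 4 0.84
```

Later epochs with a higher validation loss would not overwrite the saved weights under this scheme.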

Fig. 9 displays the training loss versus the validation loss of the model after data augmentation. Here both training and validation loss keep decreasing until the end, unlike the validation loss without augmentation. Table 3 presents the comparison of the model evaluation metrics before and after data augmentation. Without augmentation, the validation accuracy was 70%, the training accuracy was 86%, the precision was 71%, the recall was 70% and the F1-score was 69%. After the data augmentation, the validation accuracy becomes 93% and the training accuracy becomes 97%. Precision, recall and F1-score are 93%, 93% and 92% respectively.

Fig. 6. Training Accuracy versus Validation Accuracy

Fig. 7. Training Loss versus Validation Loss
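Metrics of this kind are usually derived from a confusion matrix with true labels on the rows and predicted labels on the columns. The sketch below shows the arithmetic with NumPy on a made-up 3x3 matrix; the counts are purely illustrative and are not the paper's results. It also assumes that the per-class accuracy reported per species corresponds to per-class recall and that the overall scores are macro averages, which the paper does not state.

```python
import numpy as np

def classification_metrics(confusion):
    """Compute accuracy and per-class precision, recall and F1 from a
    confusion matrix (rows: true labels, columns: predicted labels)."""
    confusion = np.asarray(confusion, dtype=float)
    true_positives = np.diag(confusion)
    recall = true_positives / confusion.sum(axis=1)
    precision = true_positives / confusion.sum(axis=0)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = true_positives.sum() / confusion.sum()
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical counts for three classes (not taken from Fig. 5).
confusion = [[8, 1, 1],
             [1, 9, 0],
             [2, 0, 8]]
metrics = classification_metrics(confusion)
print(round(metrics["accuracy"], 3))        # 0.833
print(metrics["recall"])                    # per-class recall
print(metrics["f1"].mean())                 # macro-averaged F1
```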

A commendable improvement can be observed in model learning. Before augmentation, the model could not perform well in recognising unseen images due to the limited data. After the augmentation, the model's overfitting problem is resolved. As our previous dataset consisted of too few images, data augmentation increased the system's efficiency by adding augmented data to our dataset.

Fig. 8. Training Accuracy versus Validation Accuracy after Data Augmentation

Fig. 9. Training Loss versus Validation Loss after Data Augmentation

Table 3. Model Evaluation Metrics before and after Data Augmentation

| | Acc | Train. Acc | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Before AUG | 70% | 86% | 71% | 70% | 69% |
| After AUG | 93% | 97% | 93% | 93% | 92% |

An overall performance comparison among several models is given in Table 4 to validate the effectiveness of our proposed CNN architecture. Support Vector Machine (SVM), Extreme Gradient Boosting (XGBoost), Random Forest and the pre-trained deep learning model VGG net (VGG16) are applied to this mosquito classification task. These models are compared in terms of validation accuracy, recall, precision and F1-score. SVM achieves the lowest validation accuracy (66%), which is beaten by the XGBoost model by a margin of 3%. Random Forest outperforms the other machine learning models and reaches 83% validation accuracy. With the transfer learning approach, VGG16 surpasses the machine learning models in all performance evaluation metrics, with an accuracy of 91%. Our proposed CNN method surpasses all of them and gives the highest accuracy. In addition, an integration of data-driven (CNN) and knowledge-driven (BRBES) approaches can be proposed to portray the risk assessment of a mosquito bite in the human body [18] [39] [26] [19].

Table 4. Models' Performance Comparison with Augmentation

| Model Name | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Proposed CNN | 0.93 | 0.93 | 0.93 | 0.923 |
| VGG16 | 0.91 | 0.909 | 0.901 | 0.905 |
| Random Forest | 0.83 | 0.833 | 0.833 | 0.830 |
| XGBoost | 0.69 | 0.690 | 0.687 | 0.687 |
| SVM | 0.66 | 0.677 | 0.660 | 0.663 |

7 Conclusion and Future Works

This research focuses on identifying opportunities to build and improve a mosquito classifier, which could bring benefits to human beings. The goal of the research was to classify mosquitoes when only a small number of mosquito images is available. The proposed Convolutional Neural Network model can be more effective if it is trained on a larger amount of data. The Convolutional Neural Network with data augmentation should be more efficient and robust compared to other machine learning methods in image processing. Since a CNN is a data-driven method, we will increase the data for each class to get closer to equal accuracy across the classes. We can apply more augmentation functions to make the augmented dataset bigger. We can also gather a large amount of data in order to build a standard dataset for mosquitoes. We will also try to make the system capable of classifying mosquitoes in real time so that it can be more efficient. In the future, the convolutional neural network (CNN) model can be refined further to build a better mosquito classification system.

References

1. Royalty free stock photos, illustrations, vector art, and video clips. in: Getty images (Accessed September 17, 2019), https://www.gettyimages.com/

2. Stock images, royalty-free pictures, illustrations videos - istock. in: istockphoto.com (Accessed September 20, 2019), https://www.istockphoto.com/

3. 1 million Stunning Free Images to Use Anywhere - Pixabay (Accessed September 24, 2019), https://pixabay.com/

4. Stock images, photos, vectors, video, and music. in: Shutterstock (Accessed September 24, 2019), https://www.shutterstock.com/

5. Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., et al.: Tensorflow: A system for large-scale machine learning. In: 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16). pp. 265–283 (2016)

6. Ahmed, T.U., Hossain, S., Hossain, M.S., Ul Islam, R., Andersson, K.: Facial expression recognition using convolutional neural network with data augmentation. In: 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR). pp. 336–341. IEEE (2019)

7. Akram, A.: Alarming turn of dengue fever in dhaka city in 2019. Bangladesh Journal of Infectious Diseases 6(1), 1–2 (2019)

8. Bong, C.W., Xian, P.Y., Thomas, J.: Face recognition and detection using haars features with template matching algorithm. In: International Conference on Intelligent Computing & Optimization. pp. 457–468. Springer (2019)

9. Chowdhury, R.R., Hossain, M.S., ul Islam, R., Andersson, K., Hossain, S.: Bangla handwritten character recognition using convolutional neural network with data augmentation. In: 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR). pp. 318–323. IEEE (2019)

10. Fanioudakis, E., Geismar, M., Potamitis, I.: Mosquito wingbeat analysis and classification using deep learning. In: 2018 26th European Signal Processing Conference (EUSIPCO). pp. 2410–2414. IEEE (2018)

11. Fuad, M.A.M., Ghani, M.R.A., Ghazali, R., Izzuddin, T.A., Sulaima, M.F., Jano, Z., Sutikno, T.: Training of convolutional neural network using transfer learning for aedes aegypti larvae. Telkomnika 16(4) (2018)

12. Fuchida, M., Pathmakumar, T., Mohan, R.E., Tan, N., Nakamura, A.: Vision-based perception and classification of mosquitoes using support vector machine. Applied Sciences 7(1), 51 (2017)

13. Glick, J., Miller, K.: Insect classification with heirarchical deep convolutional neural networks. Convolutional Neural Networks for Visual Recognition (CS231N), Stanford University FINAL REPORT, Team ID 283, 13 (2016)

14. Glorot, X., Bordes, A., Bengio, Y.: Deep sparse rectifier neural networks. In: Proceedings of the fourteenth international conference on artificial intelligence and statistics. pp. 315–323 (2011)

15. Gulli, A., Pal, S.: Deep learning with Keras. Packt Publishing Ltd (2017)

16. Huang, L.P., Hong, M.H., Luo, C.H., Mahajan, S., Chen, L.J.: A vector mosquitoes classification system based on edge computing and deep learning. In: 2018 Conference on Technologies and Applications of Artificial Intelligence (TAAI). pp. 24–27. IEEE (2018)

17. Islam, M.Z., Hossain, M.S., ul Islam, R., Andersson, K.: Static hand gesture recognition using convolutional neural network with data augmentation. In: 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR). pp. 324–329. IEEE (2019)

18. Jamil, M.N., Hossain, M.S., Ul Islam, R., Andersson, K.: A belief rule based expert system for evaluating technological innovation capability of high-tech firms under uncertainty. In: 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR). pp. 330–335. IEEE (2019)

19. Kabir, S., Islam, R.U., Hossain, M.S., Andersson, K.: An integrated approach of belief rule base and deep learning to predict air pollution. Sensors 20(7), 1956 (2020)

20. Kim, K., Hyun, J., Kim, H., Lim, H., Myung, H.: A deep learning-based automatic mosquito sensing and control system for urban mosquito habitats. Sensors 19(12), 2785 (2019)

21. Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

22. Kiskin, I., Orozco, B.P., Windebank, T., Zilli, D., Sinka, M., Willis, K., Roberts, S.: Mosquito detection with neural networks: the buzz of deep learning. arXiv preprint arXiv:1705.05180 (2017)

23. Littig, K., Stojanovich, C.: Mosquitoes: Characteristics of anophelines and culicines. Available from: http://www.cdc.gov/nceh/ehs/docs/pictorial_key/mosquitoes.pdf [Last accessed on 2017 Jan 06] (2005)

24. Maude, R.J., Hasan, M.U., Hossain, M.A., Sayeed, A.A., Paul, S.K., Rahman, W., Maude, R.R., Vaid, N., Ghose, A., Amin, R., et al.: Temporal trends in severe malaria in chittagong, bangladesh. Malaria journal 11(1), 323 (2012)

25. Minakshi, M., Bharti, P., Chellappan, S.: Identifying mosquito species using smartphone cameras. In: 2017 European Conference on Networks and Communications (EuCNC). pp. 1–6. IEEE (2017)

26. Monrat, A.A., Islam, R.U., Hossain, M.S., Andersson, K.: A belief rule based flood risk assessment expert system using real time sensor data streaming. In: 2018 IEEE 43rd Conference on Local Computer Networks Workshops (LCN Workshops). pp. 38–45. IEEE (2018)

27. Motta, D., Santos, A.Á.B., Winkler, I., Machado, B.A.S., Pereira, D.A.D.I., Cavalcanti, A.M., Fonseca, E.O.L., Kirchner, F., Badaro, R.: Application of convolutional neural networks for classification of adult mosquitoes in the field. PloS one 14(1) (2019)

28. Nandagopalan, S., Kumar, P.K.: Deep convolutional network based saliency prediction for retrieval of natural images. In: International Conference on Intelligent Computing & Optimization. pp. 487–496. Springer (2018)

29. Okayasu, K., Yoshida, K., Fuchida, M., Nakamura, A.: Vision-based classification of mosquito species: Comparison of conventional and deep learning methods. Applied Sciences 9(18), 3935 (2019)

30. Omodior, O., Luetke, M.C., Nelson, E.J.: Mosquito-borne infectious disease, risk-perceptions, and personal protective behavior among us international travelers. Preventive medicine reports 12, 336–342 (2018)

31. Organization, W.H., et al.: Dengue and severe dengue. Tech. rep., World Health Organization. Regional Office for the Eastern Mediterranean (2014)

32. Ortiz, A.S., Miyatake, M.N., Tünnermann, H., Teramoto, T., Shouno, H.: Mosquito larva classification based on a convolution neural network. In: Proceedings of the International Conference on Parallel and Distributed Processing Techniques and Applications (PDPTA). pp. 320–325. The Steering Committee of The World Congress in Computer Science, Computer . . . (2018)

33. Perez, L., Wang, J.: The effectiveness of data augmentation in image classification using deep learning. arXiv preprint arXiv:1712.04621 (2017)

34. Raybaut, P.: Spyder-documentation. Available online at: pythonhosted.org (2009)

35. Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.: Dropout: a simple way to prevent neural networks from overfitting. The Journal of Machine Learning Research 15(1), 1929–1958 (2014)

36. Tosi, S.: Matplotlib for Python developers. Packt Publishing Ltd (2009)

37. Tüske, Z., Tahir, M.A., Schlüter, R., Ney, H.: Integrating gaussian mixtures into deep neural networks: Softmax layer with hidden variables. In: 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). pp. 4285–4289. IEEE (2015)

38. Uddin Ahmed, T., Hossain, M.S., Alam, M., Andersson, K., et al.: An integrated cnn-rnn framework to assess road crack. In: 2019 22nd International Conference on Computer and Information Technology (ICCIT) (2019)

39. Ul Islam, R., Andersson, K., Hossain, M.S.: A web based belief rule based expert system to predict flood. In: Proceedings of the 17th International conference on information integration and web-based applications & services. pp. 1–8 (2015)

40. Van der Walt, S., Schönberger, J.L., Nunez-Iglesias, J., Boulogne, F., Warner, J.D., Yager, N., Gouillart, E., Yu, T.: scikit-image: image processing in python. PeerJ 2, e453 (2014)

41. Walt, S.v.d., Colbert, S.C., Varoquaux, G.: The numpy array: a structure for efficient numerical computation. Computing in Science & Engineering 13(2), 22–30 (2011)