Gesture-Driven Interaction in Head-Mounted Display AR

Guidelines for Design Within the Context of the Order Picking Process in Logistic Warehouses.

Robin Medin

Interaction Design Bachelor

22.5HP Spring 2018

Abstract

This thesis investigates how gesture-driven interfaces, integrated in HMD-based (Head-Mounted Display) Augmented Reality (AR) devices, can be used in the context of order picking processes in logistic warehouses as a substitute for existing solutions for visualisation of and interaction with order picking lists: which hand gestures should be used? What qualities are essential for designing a successful substitute? By applying a User-Centered Design approach, generative ideation methodologies, prototyping and usability testing, this research results in a contribution to a framework of design guidelines for designing gesture-driven AR in the order picking process, as well as a proposition of a gestural interaction language grounded in both earlier research and empirical findings. The empirical findings include details of the end users’ gestural interaction preferences and content-related considerations, which were implemented in a low fidelity prototype and evaluated in the contextual domain of the end users.

Acknowledgements

I would like to thank all the participants of the touchstorming and usability testing sessions for taking the time and effort to provide me with the insights that made this thesis possible, as well as my supervisor Henrik Svarrer Larsen for his incredibly valuable feedback during the process.

I would also like to thank Thomas and Carl at Crunchfish for introducing me to the world of gesture-driven AR and for inspiring me to write this thesis. Lastly, I would like to thank Sofie for her endless, loving support and Louie, for being the best son one could possibly hope for.

Table of Contents

1 INTRODUCTION

1.1 RESEARCH AREA AND CONTEXT

1.2 PURPOSE

1.3 RESEARCH QUESTIONS

1.4 LIMITATIONS

1.5 ETHICAL CONSIDERATIONS

1.6 THESIS STRUCTURE

2 BACKGROUND

2.1 WHAT IS AR?

2.2 HMD AR INPUT METHODS AND THEIR PROBLEMS

2.3 COULD GESTURE INTERACTION BE A SOLUTION?

2.4 BEST PRACTICE IN AR DESIGN

2.5 CURRENT USE OF GESTURE INTERACTION IN TECHNOLOGY

2.6 RELATED RESEARCH

3 METHOD

3.1 USER-CENTERED DESIGN

3.2 TOUCHSTORMING AND BODYSTORMING

3.3 WIZARD OF OZ

3.4 PROTOTYPING

3.5 USABILITY TESTING

3.6 DESIGN PROCESS MODEL

3.7 TIME PLAN

4 UNDERSTANDING THE END USER AND THE CONTEXT

4.1 EXISTING SOLUTIONS AT LOGISTIC WAREHOUSES

4.2 PROBLEMS WITH THE EXISTING SOLUTIONS

4.3 END USER ARCHETYPES

4.4 TOUCHSTORMING SESSIONS

5 FINDING A DESIGN OPPORTUNITY

5.1 WORKSHOP FINDINGS

5.2 CONSIDERATIONS FOR DESIGN DECISIONS

5.3 HOW MIGHT WE…?

5.4 DESIGN OPPORTUNITY

6 IDEATION OF POTENTIAL SOLUTIONS

6.1 IDEATION SESSION

6.2 EVALUATION AND MERGING OF IDEAS

6.3 SKETCHING

6.4 PLANS FOR EMULATING AR AND FEEDBACK

7.1 PROTOTYPING

7.2 PLAN FOR USABILITY TESTING STRUCTURE

7.3 USABILITY TESTING

7.4 FINDINGS FROM USABILITY TESTING

8 CONCLUSION

9 DISCUSSION

9.1 SELF-CRITIQUE

9.2 END RESULT VALUE

1 Introduction

When we talk, our words are often accompanied by hand gestures. We use them in verbal communication as an amplification and enhancement of the spoken word, when sharing information about identification and direction (“my car’s over there”), size (“the fish was this big!”), speed and motion (“the motorcycle went whoooosh!”). Often unconsciously, hand gestures help us in communicating with one another, adding nuance and dynamics to the words we speak. Some gestures are learned from cultural heritage, some are invented instinctively during exuberant conversations. Safe to say, hand gestures are a natural means of expressing ourselves. This natural means of communication can be implemented into the field of interaction design, where it may provide a more intuitive and expressive way of communicating with computers.

1.1 Research Area and Context

In the context of logistic warehouses, there is a need for a smooth, effective and faultless work process. The subject of this research revolves around this environment, more specifically the order picking process. In this process, the workers gather articles from a warehouse assortment and prepare the articles for distribution to external customers, a task that demands efficiency of the worker, supported by a clear, detailed order picking list. In many warehouses, working gloves are commonly used, which prevents interaction with digital touch-based solutions for visualizing the order picking list. Instead, other solutions are used: analogue, paper-based solutions (which add an inconvenient hand-held burden to the user) and solutions based on two-way voice communication between the worker and a computer, which might be unsuitable in noisy environments. The existing solutions are not perfect, thus encouraging experimentation with other means of providing the worker with opportunities to view and interact with the order picking list. Using hand gestures to control a head-mounted Augmented Reality (AR) display which visualises the order picking list may potentially be a favourable substitute for the existing solutions, since it allows the user to interact with a system both while wearing gloves and while working in loud environments.

1.2 Purpose

The purpose of this research is to, by involving the end users in the design process, formulate a contribution to a gestural interaction language, intended for use in the context of the order picking process of warehouses. Characteristics such as intuitiveness, effortlessness and ease of learning are considered valuable qualities of a gestural interaction language, and therefore prioritized in the formation of said contribution. The results of this research aim to contribute to the Interaction Design community by providing designers with relevant insights and potential guidelines when designing for gesture-driven AR. Optimally, some of the findings acquired during this research would be applicable to AR design outside of the context focused upon in this case.

1.3 Research Questions

Main research question:

How can existing solutions be replaced by a gesture-driven interface, integrated in an HMD-based (Head-Mounted Display) Augmented Reality system, and which hand gestures should optimally be included?

Sub-questions:

What qualities are essential for designing a usability-centered and gesture-driven interface for the context of warehouse work?

How can we minimize the interaction learning curve of a gesture-driven interface?

1.4 Limitations

Technical aspects such as gesture recognition and optimization of CPU performance have been taken out of consideration during this project. Therefore, ethnic diversity of the end users, potentially involving variations in skin colour which may require alterations in gesture recognition algorithms, has also been taken out of consideration. The research is limited to the use of Head-Mounted Display (HMD) Augmented Reality devices using two dimensional user interfaces rather than devices powered by 3D-enabling AR engines such as Google’s ARCore (Google Developers, 2018) or Apple’s ARKit (Apple Inc., 2018a). The research is also limited to the context of logistic warehouses and the order picking process, as described in section 4.1.

1.5 Ethical Considerations

The identities and employments of the participants of the research in this thesis have been withheld, as have the names of the companies operating the described warehouses, in order not to reveal any details that the companies would prefer not to be made public. Since workshop and usability testing participants have declared a reluctance towards recorded video material being made public, this request has been respected, and the results from these sessions are instead described in text in the corresponding sections.

Disclaimer: the potential exacerbation of user safety and well-being derived

peripheral vision of the user in an environment often occupied by motorized vehicles has been considered, yet not thoroughly evaluated.

1.6 Thesis Structure

The structure of this thesis is intended to correlate with the chronology of the Double Diamond design process as presented by the British Design Council (2007), which was used in this study. The sections describing the design process are divided into four parts (Discover, Define, Develop and Deliver) corresponding to the four phases of the Double Diamond design process. This is intended to give the reader a sense of chronological order and continuity, related to the work itself in a manner that is cohesive and logical. Section 4 represents the Discover phase, section 5 the Define phase, section 6 the Develop phase and section 7 the Deliver phase.

2 Background

2.1 What is AR?

Augmented Reality (AR) may be described as an enhanced version of reality where the user’s view of the real world is augmented with digital data, images, 3D models, instructions or other virtual elements. While Virtual Reality (VR) offers a fully immersive experience within a virtual environment, AR simply adds an additional overlay of virtual data on top of the real world. With the recent popularity of the Pokémon GO (Niantic Inc., 2016) mobile game and the filters on Snapchat (Snap Inc., 2018), the use of AR applications on mobile phones has increased significantly. Drew Lewis (2016) states that 30% of American smart phone users use AR enabled mobile applications at least once a week. With 64% of the American population owning smart phones, the number of AR users in the United States exceeds 60 million.
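The estimate of more than 60 million users can be checked with a short calculation. As an assumption not stated in the source, take the population of the United States, $P$, to be roughly 323 million in 2016; the two percentages are those cited from Lewis (2016):

```latex
N_{\mathrm{AR}} \approx 0.30 \times 0.64 \times P
              = 0.192 \times 323\ \text{million}
              \approx 62\ \text{million}
```

Under this assumed population figure, the result is consistent with the stated number of more than 60 million weekly AR users.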

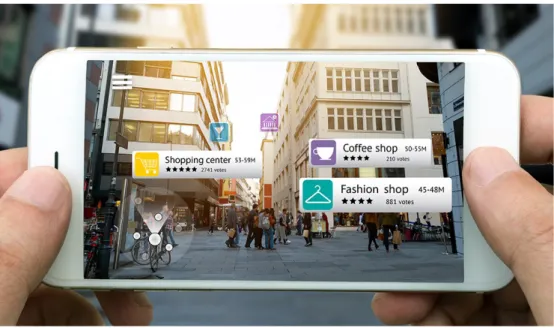

Figure 1. AR used on a smart phone to mark specific points of interest. The view of the real-life environment is here overlaid with digital information, in this case the location and user reviews of shopping venues and a coffee shop.

AR devices consist of a display, some sort of input device, sensors and a processor. AR may be experienced through a variety of platforms, among them smart phones (fig. 1), head-mounted displays/smart glasses (fig. 2), monitors and even contact lenses. Another form of AR is the virtually imposed illustrations and graphics often seen during live sports broadcasts, e.g. when the scores are “projected” onto the game field.

Figure 2. Epson Moverio BT-200 HMD/smart glasses with a remote controller enabling user interaction with the device.

There are two different approaches to overlaying virtual data on the real world. One approach is based on projecting two dimensional virtual elements on a transparent screen, often seen in HMD/smart glass devices. Practically, this approach might be experienced as having a transparent smart phone held in front of the eyes. This enables a wide range of use cases as the device is often capable of the same tasks as a smart phone: the user may then watch movies, browse the web and more. The second approach is based on implementing 3D models in real-life three dimensional space, as seen in the Pokémon GO mobile game. This enables the user to move in relation to the virtual objects, which are often “glued” to specific coordinates on a surface.

The AR market is growing rapidly, with over 200 million users worldwide (Lewis, 2016), therefore potentially offering lucrative opportunities for developers and actors on the AR scene as well as the field of Interaction Design in general.

2.2 HMD AR Input Methods and Their Problems

The engaging and futuristic technology of HMD-based Augmented Reality is an emerging technology that has long been anticipated, yet has never made any considerable impact on the consumer market. Companies such as Epson, Vuzix, Microsoft, ODG and Snap Inc., to mention only a few, are all working on AR solutions that they hope will take the market by storm and introduce the technology as a common, widely used appliance. With Google’s ARCore and Apple’s ARKit recently released, new applications and concepts are springing up across the Internet, bringing new areas of use with them. However, using Augmented Reality HMDs at this stage of development might be perceived as clunky, rough and unpolished, yet strangely appealing and intriguing with its futuristic feel, showing great potential for experimentation and defining of use cases. When testing an HMD-based AR device, one’s imagination easily starts to wander, and potential ideas for applications and improvements appear almost instantly.

The current ways of interacting with HMD AR devices often involve physical inputs such as touchscreens and buttons, sometimes mounted either on the temple of the glasses or as a controller, connected to the glasses by cable. For example, the Vuzix M300 Smart Glasses (Vuzix, 2018), which are built for enterprise purposes, feature a touch pad, programmable buttons and voice navigation as inputs for interaction. These input options might not be optimal for use in enterprise contexts, as many environments may be noisy, interfering with voice recognition. The worker may also wear gloves, which renders the touch pad useless. The ODG R-9 (ODG, 2018), which is designed for entertainment and personal use, also features buttons and a touch pad, which might arguably work better in its leisurely context. Epson’s Moverio BT-200 smart glasses (Seiko Epson Corporation, 2018) (fig. 2), also purposely designed to be used in enterprise environments, are controlled by a hefty controller, attached to the frame of the glasses by cable. The controller is solidly built, well designed for its purpose, and features four buttons for different input commands, an on-off switch, a lock button and four arrow keys for controlling direction. This solution might be perceived as tethering and compromising the user’s degree of freedom, as well as forcing additional carry weight on the user.

These methods of interaction, especially the controller, can be considered retrogressive and disconnected from the otherwise futuristic feel of the smart glasses, maintaining the paradigm of the computer mouse which arguably upholds a gap between the user and the system. Should not the interaction method correlate with the otherwise futuristic feel of using HMD AR?

2.3 Could Gesture Interaction be a Solution?

The ultimate goal of Human-Computer Interaction is to mitigate the gap between the human and the computer, so that interactions between them will be as intuitive and natural as interactions between humans (Rautaray & Agrawal, 2012). The degrees of freedom (DOF) offered by touch pads and buttons cannot offer the same naturalness as using hand gestures, seeing as humans already use hand gestures on a daily basis as we interact with objects when moving them, turning them, throwing them away and shaping them. Using hand gestures to interact with a computer can therefore offer a more intuitive way of controlling the technology.

Hand gestures can be defined as meaningful, expressive motions of the hands, used to 1) convey meaningful information or 2) interact with the environment (Mitra & Acharya, 2007).

Blokša (2017) speculates that gesture control for AR in an industrial environment (such as warehouses) could be cumbersome, yet considers controlling the device with gestures natural and important for the ordinary home user. One might question Blokša’s speculation. Since industrial workers are often highly physically active, a small addition to the work load (using hand gestures) might be insignificant and barely noticeable, whereas a home user might prefer not to operate their devices by physical motion when e.g. watching a movie or browsing the internet, since the home environment is arguably more relaxed. However, using gestures in a repetitive work environment would most likely yield a larger quantity of performed gestures during the course of the work day, thus adding strain to the user. In the context of logistic warehouses, gesture-driven interaction might offer a quick and easy way to interact with information required to accomplish the work tasks. Since it relieves the worker of the burden of carrying physical objects by hand, it frees the worker’s hands for other tasks, such as picking up articles more easily. Additionally, exploring the use of hand gestures to interact with computers may be regarded as an attempt to take the next step in Interaction Design, pushing forward towards designing a Human-Computer relationship with a communication that is as intuitive as communication between humans (Rautaray & Agrawal, 2012).

2.4 Best Practice in AR Design

Augmented Reality has been a topic of both research and scientific writing for over two decades (Ritsos, 2011), and a vast number of frameworks, design guidelines and solutions have been published, showing the complexity of the nature of Augmented Reality. Guidelines presented in earlier research, while not necessarily entirely relevant to the specific use case described in this thesis, could offer applicable insights and design guidelines in the general use of AR for conveying virtual information to the user. Looking at recent research, Blokša (2017) defines a number of guidelines that should be considered when designing user interfaces for Augmented Reality:

1) Notifications and other interface components should not cover the user’s field of view, especially when the user is moving.

2) The user should be able to individually decide on how to control the device, e.g. use buttons instead of gestures when needed.

3) Allow the user to hide and show the interface when desired to avoid unnecessary information being communicated to the user.

4) Place content in natural viewing zones, and not in the periphery.

5) Reactive and responsive UI elements (e.g. buttons) are important.

Haller (2006) accentuates the importance of an “island” environment: designing a very specific application for one user, one location and one task, as opposed to equipping several workers with a diverse multi-purpose system, thus increasing the chances of success for said application. This was considered during this project, where the intended end user and use case are distinctly specified and purposely designed for.

Guidelines for Augmented Reality described in Apple’s Human Interface Guidelines (Apple Inc., 2018b) have been considered during the course of this research, such as employing the entirety of the AR display for presenting content and a general mindfulness of the user’s comfort and safety concerns.

2.5 Current Use of Gesture Interaction in Technology

There are some consumer-grade products on the market that currently use hand and body gestures as input for navigation and manipulation. Among them is Microsoft Kinect (Microsoft, 2018a), a motion sensing input device to be used with Microsoft’s home video game console Xbox 360. Kinect enables the user to interact with the system by using gestures and body movements in a natural way. A waving gesture is used to enable the use of gestures to control the system rather than the Xbox controller. On-screen selection is achieved by hovering a hand over an element and keeping the hand static for a defined amount of time, resembling the Gaze interaction technique often seen in VR applications.
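The dwell-based selection described above can be illustrated with a minimal sketch. This is not Kinect’s actual implementation: the class name `DwellSelector`, the `update` method, the element names and the 1.0 second threshold are all hypothetical, chosen only to show the idea of a selection that fires once the hand has rested on one element for a defined amount of time.

```python
from typing import Optional


class DwellSelector:
    """Hypothetical dwell-time selector: an element is selected once the
    hand has hovered over it continuously for `dwell_seconds`."""

    def __init__(self, dwell_seconds: float = 1.0) -> None:
        # The threshold is an illustrative assumption, not a Kinect value.
        self.dwell_seconds = dwell_seconds
        self._target: Optional[str] = None  # element currently hovered
        self._since: float = 0.0            # time the hover started

    def update(self, hovered: Optional[str], now: float) -> Optional[str]:
        """Report the element under the hand (or None) at time `now`.

        Returns the element name on the frame where the dwell time is
        reached, otherwise None.
        """
        if hovered != self._target:
            # The hand moved to another element or off all elements:
            # restart the dwell timer for the new target.
            self._target = hovered
            self._since = now
            return None
        if hovered is not None and now - self._since >= self.dwell_seconds:
            # Clear the target so the same element is not selected again
            # on the very next frame.
            self._target = None
            return hovered
        return None


selector = DwellSelector(dwell_seconds=1.0)
first = selector.update("ok_button", 0.0)   # hover starts, nothing selected
second = selector.update("ok_button", 0.5)  # still below the threshold
third = selector.update("ok_button", 1.0)   # threshold reached, selected
```

A time-based threshold of this kind trades speed for robustness: a longer dwell time reduces accidental selections when the hand merely passes over an element, at the cost of slower interaction.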

Microsoft HoloLens (Microsoft, 2018b) is based on two core gestures as a means for interaction, Air Tap and Bloom. Air Tap is a tapping gesture with the hand held at an upright position, closely resembling a mouse click. This gesture is used as an equivalent of the basic “click” interaction used with personal computers and is dependent on the user aiming a Gaze cursor at the intended UI element before performing the gesture. The second gesture, Bloom, is used as an equivalent of the Home button on an Apple iPhone and is reserved only for the purpose of enabling the user to go back to the start menu. The gesture is performed by holding out the hand, palm facing up and the fingertips held together, after which the hand is opened so as to emulate a blooming flower. HoloLens is based around these two gestures alone, and the Bloom gesture is purposely designed to be unique, so as not to be subject to false readings.

Other products that enable the use of hand gestures for interacting with a system, such as CyberGlove II (Kevin et al., 2004), depend on the use of additional hardware, such as a glove infused with sensors to capture motion data. While this approach may offer stable and accurate recognition of the hands, the wearer is tethered to a computer by cable, compromising the freedom of the user. A different approach is developed by Crunchfish AB (Crunchfish AB, 2018), which enables the recognition of hand gestures using only a standard camera (integrated in HMD devices, mobile phones etc.) and therefore removes the need for additional hardware and cables.

2.6 Related Research

Research related to this thesis was conducted by Bouchard et al. (2013). The purpose of their research was to define and evaluate creative approaches designed to generate ideas for gestures, which may be used in future gesture-driven interfaces. In touchstorming sessions (a gesturally oriented translation of brainstorming) the participants generated gestures using their hands and arms to command specified functions on a “magic screen”. The results of this research indicate that gestures generated during the touchstorming session were heavily influenced by existing touch interfaces, such as mobile phones, ATMs etc. The research advocates the use of familiar gestures, which can be translated from e.g. existing touch interfaces to gesture-driven interfaces, since originality may often compromise intuitiveness, ease of use and affordance. An interpretation of the touchstorming workshop described in Bouchard et al. (2013) was used in this research.

Using Head Mounted Display-based (HMD) Augmented Reality in order picking environments has been researched earlier (Schwerdtfeger & Klinker, 2008), with the end goal of 1) optimizing the efficiency of the work process by presenting the user with intuitive and detailed work instructions and 2) minimizing the risk of the user making mistakes which may result in costly follow-up work. This research, however, does not include the use of hand gestures as a means for interacting with the AR enabled HMD, instead focusing on conveying one-way visual information to the user, indicating the warehouse location of specific articles in the order picking list. This approach was considered, but deemed technically infeasible to implement during this project. Instead, a visualisation approach built upon the expressed desires of the end users was produced.

Other relevant research is presented by Buxton (1986), who states that using two hands as tools for input when interacting with a computer can be more efficient, since it allows two tasks to be performed in parallel. Two handed interaction may also assist the user in spatial comprehension, as the hands function as a spatial reference to each other (Hinckley, 1994). This relies on kinaesthetic feedback (Hand, 1997), as we may perceive the position of our hands relative to each other and to our body.

3 Method

3.1 User-Centered Design

The design work that is reported in this thesis is influenced by a User-Centered Design (UCD) approach (Norman & Draper, 1986), so as to include the end user in the process to shape and influence the final design. During the design process, time and effort were put into understanding the needs of the end user and involving them at certain stages of the process. The UCD approach practiced may however be criticized as executed from an “expert perspective” (Sanders & Stappers, 2008), where the end user plays a role in the design process, albeit a relatively passive one. The end user was however widely included (and active) in certain parts of this design process, described in sections 4 and 7.

3.2 Touchstorming and Bodystorming

Brainstorming, one of the most powerful tools in creative work processes, was first described by Alex Osborn (1948) in “Your Creative Power”. The concept of brainstorming is used as a means to generate a large quantity of ideas. Bouchard et al. (2013) translated the brainstorming technique to involve the use of hands, arms and the whole body, then more appropriately named touchstorming or bodystorming. This technique was applied in this research as a means to generate ideas for gestures and investigate the intuitive gestural responses of the end user when presented with different tasks, described in section 4.4.

3.3 Wizard of Oz

During both the touchstorming workshops and the final usability testing session, a Wizard of Oz approach was used, mainly as a means to convey emulated visual and sonic feedback to the user, but also to become responsible for the user’s potential confusion and discomfort with using the prototype, which evokes revisions of the design (Maulsby, 1993). This technique aims to provide the user with functions that are otherwise expensive, hard to implement or currently technically infeasible. As a low fidelity prototype was used, the Wizard of Oz approach was considered an appropriate complement. Its use during the touchstorming workshops and usability testing sessions is described in sections 4 and 7.

3.4 Prototyping

As a means to evaluate and validate the design proposition, low fidelity prototyping was used. The benefit of developing a low fidelity prototype is that it offers a quick and simple way to identify potential issues with the design and to involve the end user in shaping the final result. By using fast and cheap low fidelity prototypes, it is often possible to identify usability issues or “brick walls” (Rudd et al., 1996). The prototype also gave the end user something to examine and validate. The prototyping activities in this research are described in section 7.

3.5 Usability Testing

To measure and evaluate the usability of the final design proposition, usability testing sessions were held, inspired by the structure for validating and testing the design described by Cooper (2014). At the stage of usability testing, the design should be elaborate enough to give the end user something to provide feedback and critique on, with the ultimate goal of identifying issues and areas of improvement and arriving at a general evaluation of the usability. Cooper (2014) states that usability testing first and foremost is a means to evaluate, not create, a statement not entirely complied with during this project: during the usability testing sessions, one goal was to gather additional insights for further iterations and fine-tuning of the prototype, thus including the user in creating new, improved versions.

The description of usability testing provided by Dumas & Redish (1993) encapsulates the intention of applying the process in this research: usability testing aims to improve the product’s usability, involve real users in the testing, give the users real tasks to accomplish, enable testers to observe and record the actions of the participants, and enable testers to analyse the data obtained and make changes accordingly. Usability testing, along with prototyping, occurred during the final stages of the design process, described in section 7.

3.6 Design Process Model

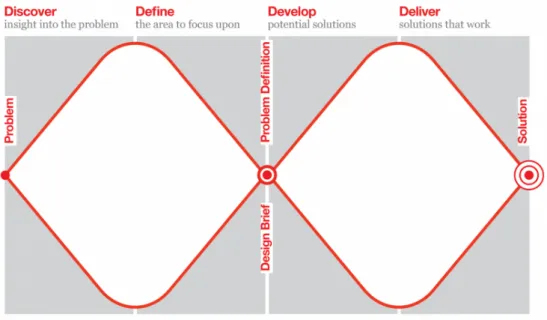

In this research, the Double Diamond design process model (fig. 3), as presented by the British Design Council (2007), was used.

Figure 3. The Double Diamond design process model as described by the British Design Council (2007).

The Double Diamond process is divided into four phases (Discover, Define, Develop and Deliver), alternating between diverging and converging thinking. First, the model advocates for the designer to ground their design in an understanding, or empathy, of the situation and the end users for which the final design outcome is intended, to acquire insights into the problem by gathering data. The insights and findings are then condensed into a concrete definition of a design problem in the first converging phase, after which a second diverging phase takes place, exploring potential solutions to the design problem. Lastly, the designer should once again converge, to identify and refine solutions that address the design problem.

The choice of the Double Diamond design process model as a foundation for the design work encapsulated in this thesis stems from the aim of including the user in select parts of the design process, which is also supported by the philosophy of User-Centered Design. Early in the process, a decision was made: in order to design for the user, the user had to be included in the process. Therefore, applying the Double Diamond model in this research was considered suitable.

In the following four sections, the activities of this research are described in relation to the Double Diamond design process stages.

Discover

The first stage of the design process, Discover, is designed to assist the designer in understanding the user and their needs. Empathizing and divergent thinking are keywords for this part of the process, where the designer should talk to people, observe and listen to them, with the intended outcome being an understanding of a problem present in the context.

The discovery phase of this work consisted of an involvement with people who are working in logistic warehouses, in an attempt to understand their situation, their needs, frustrations and desires – an endeavour which proved fruitful and effective, yielding several relevant insights and perspectives. This was accomplished by dialogues with warehouse workers and did not follow any specific interview techniques – a choice that was made with the intention of keeping a relaxed, comfortable atmosphere for the warehouse worker. The focus was to listen and let the warehouse worker tell their story. A few prepared questions were asked to instigate the dialogue, such as “how do you feel about that?” and “does that solution work well?” etc. in order to keep the conversation flowing.

During this stage of the process, touchstorming workshops accompanied by a Wizard of Oz approach were hosted, inviting warehouse workers to perform gestures to accomplish a defined collection of navigational tasks in an emulated AR context. The tasks were simple in nature, and consisted of pressing buttons, browsing among on-“screen” content, closing notification windows etc. The goal was to investigate how, with little verbal directive, the users would intuitively complete these tasks using hand gestures. Questions were asked during the workshop: “why did you do that?”, “which gesture feels better?” etc., in an attempt to evoke a dialogue with the workshop participants and better understand their actions and the thoughts behind them. The workshop was recorded on video, which was later revisited and more closely analysed in order to probe for additional insights.

Since workers in some warehouses use protective gloves while working, a second workshop was held, where the participants wore thick, protective gloves. This took place immediately after the initial workshop was completed and used the same structure and participants. The purpose of this second workshop was to investigate whether the participants’ gestural actions would differ when wearing thick gloves that might make their hands and fingers less nimble and dextrous.

When the Discover phase was finished, a much clearer view had been acquired of the work environment at an order picking warehouse, the people who work there and how their existing situation can be improved.

The Discover phase of the design process is described in section 4.

Define

The second stage, Define, is a convergent phase where the designer begins to focus on key areas relevant to the insights acquired from the previous phase, and define pain points that need a solution. By re-framing problems as “HMW” questions (“How Might We…?”) the desired outcome is a clearly defined design opportunity.

The British Design Council (2007) proposes a number of exercises to achieve this goal, such as discussion in focus groups etc. To converge and compile the acquired findings from the Discover phase in this project, one of the proposed exercises, “comparing notes”, was used as it was considered to be effective in individual work, as opposed to group-oriented exercises such as focus group discussions.

The exercise started with closely analysing video material recorded in the Discover phase, and compiling all participant comments, remarks, actions and gestural responses in a text document. Acquired knowledge was then written down on individual post-it notes and arranged under general insights, patterns (similarities of gestural interaction between participants) and issues/problems that should be considered. The end result of that exercise was a set of HMW questions and a definition of a design opportunity aimed to produce a condensed solution to the problems with the existing solutions. This exercise proved to be incredibly valuable, as it gave a clear overview of every finding and insight from which to leap into the next phases of the design process. The Define phase is described in section 5.

Develop

The third stage of the design process, Develop, is the second diverging phase of the design model. Here, the designer explores potential solutions to the design problems defined in the previous phase. The phase is initiated by an ideation session where the designer should avoid limitations and keep an open mind and focus on the quantity of the ideas rather than the quality. The second half of the developing phase involves an evaluation of the produced ideas and a selection of the most relevant/interesting ones.

This stage would benefit from working in a group as it would both generate more ideas and potentially lead to fruitful discussions. While working creatively alone, there might be a risk of becoming blind to flaws and to overlook certain aspects due to the lack of external perspective. Therefore, this stage of the process would be more rewarding if executed in a group of designers.

The ideation session was executed individually, using post-it notes containing different ideas and potential solutions. The evaluation phase resulted in both ideas for a prototype and a framework of key aspects to be considered. After the evaluation stage, sketches of different solutions were produced. The intended outcome of the process is one or a small number of ideas for prototyping and testing, in order to find a solution to the design problem formulated in the Define phase, which was successfully accomplished during this stage in the process (described in section 6).

Deliver

The fourth and final stage of the Double Diamond design process is an iterative converging phase, where the designer aims to build, test and iterate the idea(s). In this stage of the process, the prototype was built, tested and evaluated with the participation of end users. Including users in the process proved to be valuable and fruitful, and the previous phase of the process might also have benefitted if users had been invited to participate in the ideation and evaluation of ideas.

At this stage of the process, a visit to an actual warehouse was made and the prototype, accompanied by a Wizard of Oz approach, was tested on the intended end users. This resulted in the prototype and its gestural interaction language receiving evaluation and critique for further iterations and for finalising the design. The activities of the Deliver phase are described in section 7.

3.7 Time Plan

In order to present an overview of the different stages of this project, a visual representation of the time plan was produced (fig. 4). The time plan as a whole and the activities of this research were organized according to the Double Diamond design process in chronological order on both axes. As the illustration suggests, the four stages of the Double Diamond are represented as a backdrop, visualising the diverging and converging phases. The four phases are also colour coded, with blue indicating activities intentionally native to the Discover phase, yellow to the Define phase etc. However, a few of these venture beyond the intended borders, such as “Understanding the Context” and “Analysing Findings”, because additional insights were acquired during other phases. For instance, more knowledge about the warehouse environment was also acquired during the visit to the warehouse for the usability testing sessions in the Deliver phase.

Figure 4. An overview of the time plan of this project, merged with the four phases of the Double Diamond design process. Week numbers are declared at the bottom, indicating the duration of the stages.

However, this visualisation should only be considered as a model, and not as an entirely accurate account of how the design process was executed. Many activities were not abruptly finished at a specific time, but rather faded out or reoccurred at other stages of the design process. This model is intended to give the reader a rough and simplified grasp of the activities sorted in a chronological overview but should be regarded as imprecise.

This concludes the Method section, and in the following sections the actual design process is initiated, describing the activities, insights, problems and design decisions made during the course of this project.

4 Understanding the End User and the Context

The first stage of the Double Diamond design process is to discover, where the goal is to acquire knowledge of the users and their needs, desires and frustrations, the workplace environment and its existing solutions and how the end users would intuitively use gestures in order to navigate an AR enabled system. The focus in this chapter is to acquire as many insights and findings as possible through divergent thinking. This phase was initiated by involvement with real end users employed at different warehouses, by means of hosting workshops consisting of dialogues/interviews and touchstorming exercises.

4.1 Existing Solutions at Logistic Warehouses

During the workshops, three different variations of solutions for visualizing order picking lists in warehouses were described by end users – one variant is shown on a computer monitor mounted on a trolley/wagon, another is communicated by computer generated voice in a pair of headphones, and one consists of a sheet of paper with the picking list printed on it.

Information regarding the warehouses described below is a result of verbal communication and interviews with end users/warehouse workers during the touchstorming sessions.

Airline Supply Warehouse

An international airline company has a warehouse in Lund, Sweden, where the job assignment is to pack alcoholic beverages and snacks for consumption on airplanes. The workers push around metallic trolleys, which have 40 slots in them, where the items are to be packed. The trolley is perceived by many workers as cumbersome, so it is often left behind when the worker needs to approach the shelves to pick up items. The workers then often choose to carry as much as they can in one trip, rather than going back for more. Mounted on the trolley sits a computer with a monitor, which displays an interface telling the employee which item to pick, how many, and where in the warehouse said item is located (specified by the shelf number and the section of the shelf). The interface contains information about one item at a time and is navigated using the arrow keys and the enter key on the keyboard. The articles are listed in the interface in alphabetical order.

The worker carries a finger-mounted scanner, either attached to the computer by cable or wireless, for registration of the barcode of the picked items. When an item is scanned, item information is automatically transferred to the interface. The wireless finger scanners are in high demand, and employees often have to settle for the much less desired version with a cable, which shackles them to the trolley and forces them to take off the finger scanner for each excursion, since bringing the trolley into the tight spaces of the warehouse is considered inconvenient and slow. Therefore, many workers choose not to use the finger scanner and instead register each picked item manually in the computer, typing the article number and the amount and then pressing the enter key on the keyboard.

The warehouse environment is comfortable in temperature, eliminating the need for warming gloves which might hinder the user’s performance and the system’s recognition of hand gestures. Every item on the list takes approximately 10-20 seconds to fetch, register and pack, and each employee picks around 700 items each day. After an item is registered with the finger scanner, the computer interface moves on to the next item. The company has taken serious measures to minimize all risks of injury and accidents by prohibiting the use of headphones for consumption of music and other means for amusement which might reduce the awareness of the wearer due to partial loss of hearing. Spread across the warehouse, there are special roads assigned for motorized forklifts, which might cause serious injury if involved in accidents with pedestrian workers. The work situation is, according to the warehouse employee participating in the workshop/interview, monotonous, repetitive and dull.
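As a rough cross-check of the figures reported above, the time spent on fetching, registering and packing can be estimated from the stated 10-20 seconds per item and roughly 700 items per day. The sketch below is only a back-of-the-envelope calculation: the function name is hypothetical, and the shift length is not stated by the participant, so no comparison to total working time is attempted.

```python
# Figures as reported by the workshop participant (both are approximations).
ITEMS_PER_DAY = 700
SECONDS_PER_ITEM = (10, 20)  # lower and upper estimate per picked item


def picking_hours(items: int, seconds_per_item: float) -> float:
    """Hours per day spent on fetching, registering and packing items."""
    return items * seconds_per_item / 3600


low, high = (picking_hours(ITEMS_PER_DAY, s) for s in SECONDS_PER_ITEM)
print(f"{low:.1f} to {high:.1f} hours per day")
```

Under these assumptions, the pure handling time amounts to roughly two to four hours per day.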

Dairy Product Warehouse

A large dairy company in Oslo, Norway has a warehouse where one of the workshop participants is working as an “order-picker” and forklift operator. The warehouse uses a Pick-by-Voice system, in which the employee receives verbal directive from a computer generated voice that communicates relevant information (shelf number, shelf spot and amount) about the item that is to be picked and then packed. The employee navigates the system by using voice commands, describing the item’s article number and the amount that has been picked. Before starting a shift, the employee calibrates the audio-based system by speaking to it. This allows the system to recognize the ambient noise level of the warehouse, thus optimizing vocal recognition. The work environment temperature is cold, around +5 degrees Celsius, compelling the employees to wear warm clothes and thick gloves. There are also freeze-temperature sections in the warehouse, in which the employees wear even thicker clothing and gloves. The work situation is described as physically straining, with much heavy lifting and bodily motion. The work tasks are described as monotonous, repetitive and tedious.

Second Dairy Product Warehouse

A second dairy warehouse was also described by another participant. This warehouse is also cold, around +5 degrees Celsius, and shares many characteristics with the previously described warehouse. The environment is dirty, cold, wet and loud. The employees receive the packing list as A4 papers, which describe the item name, shelf location and amount. The worker might prefer to mark the picked items with a pencil, in order to keep track of the progression of the work or to remember which items have been packed. The items are stacked on top of pallets, which are moved either by a motorized or a hand-operated forklift. The pallet is moved close to the desired location, then left behind as the worker walks to the shelf, lifts the items off the shelf, carries them, and stacks them on the pallet. When all items on a picking order are picked and packed, the worker places the pallet at a specified location for delivery. The interviewee did not know how many items were picked each day. The work was described as heavy and unforgiving, quite stressful and physically demanding.

Clothing Company Central Warehouse

Oslo, Norway, marks the location of the Norwegian central warehouse of an international clothing manufacturer. The warehouse is enormous and serves as a distribution hub that provides each corresponding store in Norway with wares each day. The person who participated in my research works in different departments of the warehouse, but mainly in the order handling and picking and packing department. The workers receive sheets of A4 paper each morning, containing information about the items that are to be picked, 2000 items for each list. The employees have to pick at least 2500 items each day. The participant is working via a consultancy company, which, according to him, leads to a significant amount of stress and pressure to perform effectively each day. Since the employers choose to extend the employment of the consulting workers with the highest picking quantity (a fact that apparently was unofficial, classified information), the consultancy workers strive to pick as many items as possible each day, to make sure their employment is prolonged.

The order picking list contains article number (e.g. A1234) and the quantity of said item to pick. The workers use a four-wheeled wagon, which they push around and stack plastic boxes onto. These plastic boxes serve as containers for the picked items, and when one container is full, a new one is stacked on top of it. The participant stated that he usually holds the picking list with one hand, pressed against the containers, with his thumb placed at the specific point of progression on the list. The participant stated that newly employed workers often carry a pencil to mark picked items, but more experienced workers prefer to use their memory or, as described above, their thumb. The work environment is described as “OK” – the ambient noise level is acceptable and the temperature is comfortable. The work is also described as physically demanding with frequent heavy lifting required.

4.2 Problems With the Existing Solutions

Each of the methods described above for conveying information about articles, their quantity and their location has both strengths and weaknesses in how well it performs when used on a daily basis.

The method that integrates a computer monitor-based interface forces the employee to constantly be in close proximity to the computer, which also adds weight and bulk to the portable trolley in which articles are stored after having been picked and registered. Furthermore, the system relies on either the use of a finger-mounted scanner (which may or may not be attached to the computer by cable) or the worker’s manual input commands on the computer keyboard. If the finger-worn barcode scanner is attached to the computer by cable, it forces the worker to either bring the trolley along with them to spaces which might be narrow and incommodious, or to take off the finger scanner each time they are leaving the trolley behind to collect articles from the shelves. In such cases it is, according to the workshop participant, often preferred not to use the finger scanner at all, instead forcing the worker to enter article information manually on the computer keyboard – a method which is more time consuming, reduces efficiency and demands more interaction with the system. However, if the finger-mounted scanner is wireless, it allows for more freedom and efficiency. The workshop participant did not remark on any issues or personal frustrations regarding the use of a wireless finger scanner. Instead, the attitude towards using a wireless finger scanner was positive, with the participant speaking of it as convenient and well-functioning.

One of the main frustrations communicated by the participant was the non-customizable sorting structure of articles in the interface, which abided by an alphabetical sorting rather than e.g. the location of the article in the warehouse, weight and fragility. The participant preferred to stack heavier items (such as bottles) at the bottom of the trolley and more fragile items (bags of peanuts etc.) on top, to reduce the risk of articles breaking or tearing. Therefore, the participant has to manually go through the order picking list, choosing items to pick in disregard of the set order of the list itself. Since the computer monitor interface only shows one item at a time, the lack of overview of the articles in the list causes frustration due to the increased amount of effort needed to obtain a desired article.

The method using Pick-by-Voice was described as quite convenient and well-functioning, except for the fact that it precludes the consumption of sound-based amusement, such as music or podcasts – a desire that all workshop participants expressed, since the task of order picking has generally been described as repetitive and monotonous. One participant, who had earlier experience with Pick-by-Voice, expressed negative opinions about the system regarding the sensory discomfort of listening to a computer-generated voice for an entire work day: “it drives you crazy!”. However, the same participant also stated that using the Pick-by-Voice system increases the efficiency of the work flow. Here, the worker also lacks the overview of the article list, forcing them to follow the set order of the system. False voice readings might also be an issue, although none of the participants described it.

The analogue method of using a pen and paper for communicating article information and tracking progression also has a few negative aspects to it. The paper is a fragile, physical object that needs to be handled delicately in order not to get torn, wet or lost. It also has to be carried by the worker, occupying one hand, unless the paper is put down somewhere (in which case it might get lost, as described by a participant). The need to constantly carry around the paper was described as very annoying, since the workers have to push a wagon at the same time.

4.3 End User Archetypes

After dialogues with the workshop participants, two distinct types of warehouse employees were defined. The characteristics of these users were considered during the entire design process. The employees that participated in the workshop had the characteristics of the first group of users described below. Clustering and categorizing the end users into two archetypes proved valuable later in the process, as the minor differences among the employees were blurred, leaving two distinct users to design for rather than hundreds of them.

Firstly, the work force at the investigated warehouses consists mostly of younger students (predominantly male), between the ages of 18 and 25, who are working extra alongside their ordinary occupation. Most of these users were described as rather well versed in the use of modern technology. However, the level of ambition of this group was expressed as rather low – the work should run smoothly with as little cognitive work as possible. The work should optimally allow them to think freely or “zone out” mentally. An expressed desire of this group was the ability to listen to music while working. This group was also described as healthy, in good physical shape and eager to improve their work situation.

The second group involves older, more experienced workers, approximately between the ages of 35 and 55. Workers fitting the description of this group were described as less common. Their proficiency in using modern technology was described as rather low, and one participant described them as “bitter”, adding “it is pure darkness” when describing their personalities. Naturally, not every employee at the described warehouses is represented by these characteristics, yet acquiring a sense of the most common personalities in a warehouse was useful during the design process. Since the first group of employees (extra working students) was proclaimed as the most common, design decisions in the following sections have first and foremost been based on the needs, desires and frustrations of that specific user group.

4.4 Touchstorming Sessions

After the workplace environment and the archetypal end users had been described, the next step of the workshop was to investigate the intuitive gestural responses performed by the end users when presented with a specific task, such as interacting with buttons, closing pop-up notifications and browsing through content.

4.4.1 Overview and Desired Outcome

After discussing their situations at work, the participant was considered to be in a relevant mindset for visualizing themselves in the context of their workplace, yielding results linked to the warehouse context. After the participant had given their initial gestural response to completing a task, they were encouraged to try different ideas for gestures as well. The first-performed gesture was considered more valuable from an intuitiveness aspect, since it often was performed immediately, without reasoning or thinking. However, performing more variations of gestures was encouraged in order to give the participant some perspective on which gestures they would prefer to use.

Six participants were recruited for the touchstorming workshops, which were executed individually with the exception of the first (in which two participants were present). During that workshop, the participants seemed to influence each other regarding which gestures to use to complete the tasks. Therefore, the following workshops were executed individually in order to prompt the participants to act from their own intuitive responses.

The workshops were held at quiet, secluded areas free from distractions and noise. A Nikon D5100 camera mounted on a tripod was used to capture video material of the workshop, which was later closely observed and analysed in greater detail. During the workshops, great care was taken not to give any verbal clues that could provoke a specific gesture. For example, instead of asking the participant to “swipe between these images” (which might have led the participant to perform a swiping finger gesture as performed on mobile phones and tablets), they were asked to “browse the content”, thus inspiring the participant to come up with their own solution. All participants were right handed.

The most frequently occurring gestures (interpreted as the most “popular” and intuitive) were later considered as the most relevant candidates for evaluation in a prototype.

Emulating HMD AR

In order to mimic the feel of using HMD-based AR devices, where digital components semi-transparently overlay the scenery of the real world, a 15x10 cm sheet of fully transparent acrylic plastic was used to mimic the screen of AR glasses. UI elements, such as buttons, were drawn onto the sheet of plastic with a black whiteboard marker and erased between tasks to make room for new UI elements. The sheet of plastic, hereinafter referred to as “the screen”, containing the “interactive” objects was then held at a distance of approximately 30 centimetres from the eyes of the participant, which was considered a comfortable viewing distance.

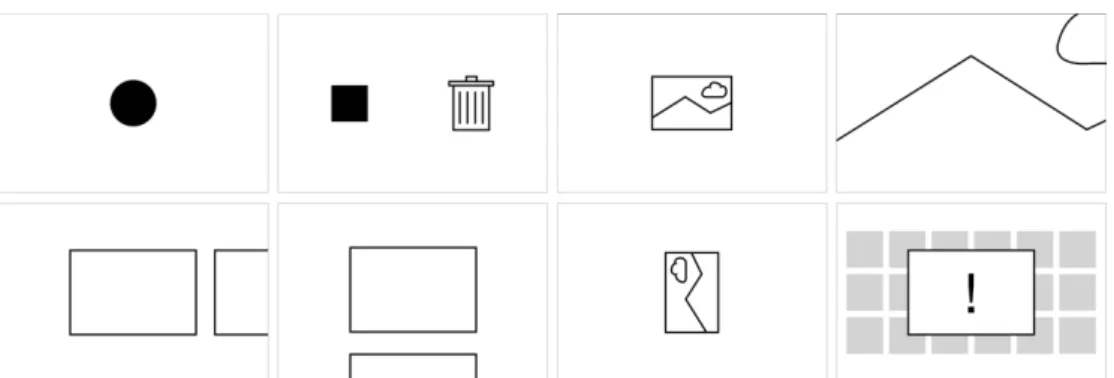

Screen Content

All representations of UI content drawn onto the sheet of plastic were minimalistic in nature in order to avoid influencing the actions of the participant (fig. 5). The first basic interaction task that was presented to the participant was to simply press a button, with the verbal directive “how would you interact with this button?”. The button was drawn as a black circle in the centre of the screen. The next task was to move a black cube to the trash bin on its right. In order to do this, the participant had to do a click-and-drag type of gesture. On the third task the participants were asked to zoom in on an image. The image was small, and consisted of a mountain range and a cloud in order to clarify what the participants were seeing. Immediately after a gestural response had been made, the participant was presented with an already zoomed in version of the same image, and given the directive to zoom out.

Figure 5. A digitized representation of the “screens” presented to workshop participants, which contain the assignments or tasks they were asked to complete during the workshop.

During the fifth task, the participants were presented with two rectangles of which one was centred and the other cropped along the right edge of the screen. They were asked to browse between these rectangles, to bring the cropped rectangle into the centre of the screen. The sixth task was a vertical variation of the fifth. The seventh task asked the participants to manipulate an image that was rotated 90 degrees and bring it to its intended viewing alignment. The ability to rotate objects might not be a primary function of a gesture-driven interface intended to be used in an order picking warehouse context but was explored nonetheless in case of an application appearing unexpectedly (considering this stage was a diverging phase as described by the British Design Council (2007)). In the eighth and final task, the participants were presented with a grid of squares, visibly overlapped by a rectangle intended to mimic a pop-up notification, calling for immediate attention. The tasks were performed in the order listed below (correlating to the order of images in fig. 5, from top left to bottom right):

1) Click

2) Click-and-Drag

3) Zoom In

4) Zoom Out

5) Horizontal Browse

6) Vertical Browse

7) Rotate

8) Close Pop-Up Notification

4.4.2 Observed Gestural Responses During Touchstorming

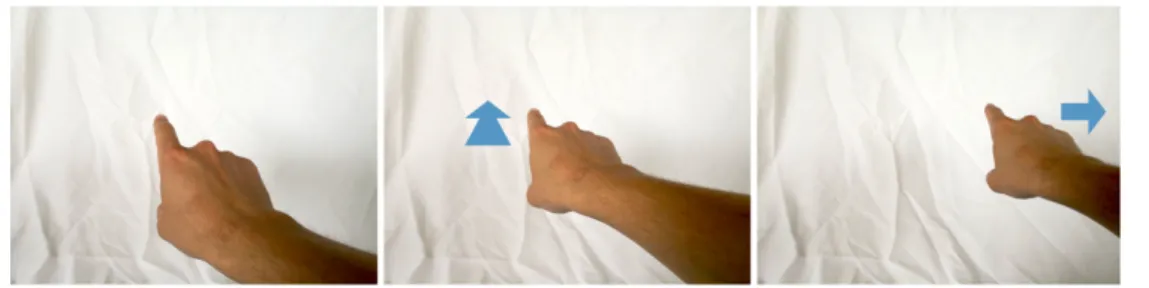

Task 1. Click

The first task yielded immediate responses in all participants: they brought their dominant hand up to the screen and performed a forward pushing motion with their index finger facing away from their bodies, in the manner in which one might push a physical button. The gestural response in this task was univocal, with few exceptions. One digressing gestural action was a whole-handed forward pressing motion, but this was performed as an alternative to the initial and much preferred “Index Tap” (fig. 6). Also, one participant suggested using the index finger to “draw” a check mark (✓) in the field of view.

Figure 6. The Index Tap

The participants’ reactions indicated a unanimous inclination towards the index tap gesture as well, with comments such as “this is the obvious choice”, “there’s no doubt about it, I would do it like this” and “this feels most natural”.

Task 2. Click-and-Drag

After the first task had been accomplished, the gestures performed during the Click-and-Drag task appeared to be closely connected to how the participant had completed the previous task. All participants started the sequence by “tapping” the cube with their dominant index finger, then keeping the finger static along the spatial Z-axis, while moving the finger towards the trash bin. The cube was then “released” or “dropped”, with the participant bringing their finger backwards towards the body. Though the first part of the gesture sequence was performed almost identically by the participants, how they moved the cube towards the trash bin and where they released it differed. Some participants moved the cube in an arcing motion towards the trash bin, while others moved it in a straight line. Also, a digression occurred when one participant released the cube above the trash bin, as if it would “fall down”, whereas the remaining participants released the cube on the trash bin.

Figure 7. The Tap-Hold-Drag gesture sequence.

Except for one small digression, the gestural responses for the Click-and-Drag task were univocal (fig. 7). Participants supported their choice with comments such as “I did like I would on a computer, where I click and drag an icon to the trash”. This gesture is hereinafter referred to as the “Tap-Hold-Drag” gesture.

Task 3. Zoom In

When asked to enlarge the size of an image in task 3, most participants seemed to translate mobile interaction patterns into spatial gestures: the most popular gesture was to start with the thumb and the index finger pinched together and then move them away from each other, expanding the space between the two fingers (fig. 8).

Figure 8. The Opening Pinch gesture.

Deviations of this gesture include two hands being used in an otherwise similar fashion: two pinching poses, “grabbing” two corners of the image and then stretching it out by widening the distance between the hands. This gesture occurred two times, but was afterwards rejected by the participant as being inferior and “less convenient” than its one handed counterpart. The comment “I’d prefer to use one hand instead of two” was uttered.

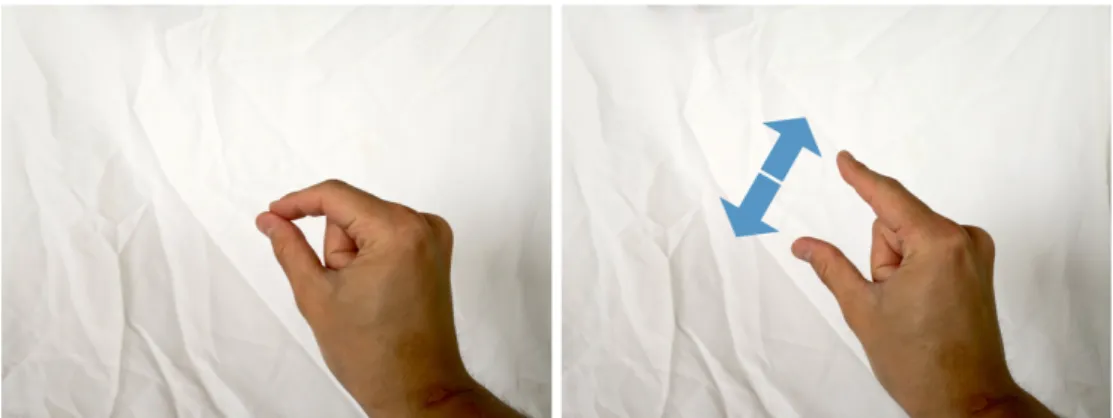

Task 4. Zoom Out

The gestures performed during task 4 were in all cases an inverted version of the Opening Pinch gesture favoured in task 3, where the participants started with their thumb and index finger spread apart, and then brought them together in a pinching motion (fig. 9). This gesture is later referred to as Closing Pinch, rather than just Pinch, to avoid confusion.

Figure 9. The Closing Pinch gesture.

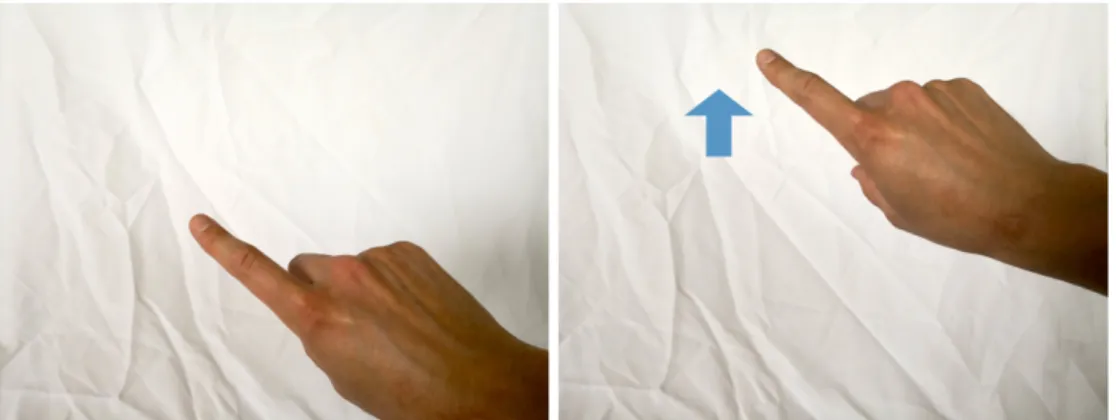

Task 5. Horizontal Browse

At this stage of the workshops, a paradigm of preference had been made clear: participants seemingly preferred to use their index finger as a main tool for interaction. This was also the case in task 5, where all subjects performed similar gestures. In most cases the index finger was used, facing upwards with the palm of the hand facing away from the body (fig. 10). The index finger was then moved, in varying velocity, towards the left of the screen. Two of the participants executed the gesture slowly and steadily, as if pulling the UI elements to the left. The remaining four participants instead performed a rapid motion in a sweeping manner. Looking at smart phone interaction patterns for swiping between home screens for instance, both versions are available, ultimately resulting in the same outcome. Since the gesture is closely related to how we interact with smart phones, this gesture is referred to as Swipe.

Figure 10. The Swipe gesture.
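The variants observed in task 5 can be tallied to illustrate how the most frequently occurring response was identified. The sketch below is illustrative only: it uses the counts reported above (two slow pulls, four rapid sweeps), while the variant labels are hypothetical names introduced for the example and not terms used in the workshops.

```python
from collections import Counter

# Task 5 observations as reported above: two slow pulls, four rapid sweeps.
# The labels are illustrative names, not terminology from the workshops.
observations = ["slow_pull"] * 2 + ["rapid_sweep"] * 4

tally = Counter(observations)

# most_common(1) returns a list with the single (variant, count) pair
# that occurred most often.
most_common_variant, count = tally.most_common(1)[0]
share = count / len(observations)

print(most_common_variant, count, f"{share:.0%}")
```

Since both variants lead to the same outcome, they are treated as one gesture, Swipe, in the remainder of this work.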

Task 6. Vertical Browse

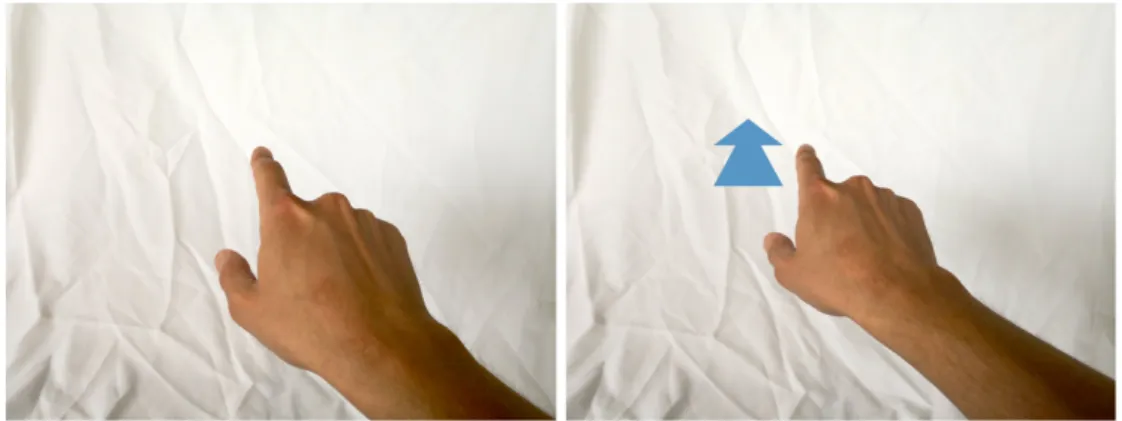

The gestural responses performed during task 6 expectedly had similarities with their horizontal counterpart: the index finger was moved upwards, towards the top of the screen. All participants carried out the gesture with their hand tilted at an angle of approximately 45 degrees (fig. 11).

Figure 11. The Vertical Swipe.

A few deviations from the upwards index swipe appeared when two participants moved their whole hand in an upwards motion – gestures later deemed less preferable by the executors in favour of the index swipe.

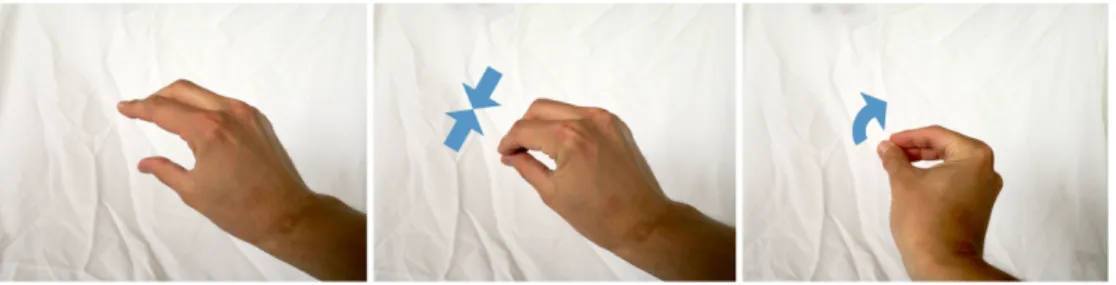

Task 7. Rotate

Asking the participants to rotate an image with hand gestures yielded interesting results, tightly connected to how we use our hands to turn knobs in real life. The preferred method involved three fingers (index, thumb and middle finger) forming a gripping pose around the image, then rotating the hand, wrist and arm clockwise (fig. 12).

Figure 12. The Rotation gesture.

Task 8. Close Pop-Up Notification

When attempting to close or dismiss an overlapping pop-up notification with gestures, the participants presented a few different ideas. Some participants declared the wish for a “close button” in any of the upper corners of the window (as windows are closed in Microsoft Windows and Apple operating systems), which would then be used with an Index Tap gesture. Another participant used the Index Tap gesture to “click” the area next to the pop-up window, a method used by Facebook among others when e.g. closing overlaying image windows. Another gesture response was to perform a limp “slapping” or “swatting” gesture (either the back of the hand first or the palm, both of which appeared in the workshops), where the participants moved their hand from the centre of the screen towards the edges, in a dismissive manner (fig. 13). Since the results of this task were scattered, it is hard to clearly define a single gesture that is the most intuitive. Other factors might play a role in deciding which gesture is the most fitting choice for a gesture-driven system, such as technical implementation etc. The different examples of gestures described above are the subjects of further exploration and evaluation in the usability testing phase in section 7.

Figure 13. The Dismiss Swipe gesture.

4.4.3 Gesture Interaction With Gloves

In many warehouses the workers reportedly wear gloves, which might influence how gestures are performed. For instance, gestures involving smaller hand and finger motions, such as the Opening Pinch, might be hard to perform when wearing thick gloves. Therefore, additional touchstorming exercises were held using the exact same tasks, except that the participants wore work gloves. Participants commented that their hands felt less nimble, but they performed the gestures identically to how they were performed without gloves. While this aspect should ideally be investigated more thoroughly, the restricting factor of wearing work gloves was disregarded in later design decisions because the gesture responses did not differ.

This concludes the Discover phase of the design process and leads into the following phase: Define.

5 Finding a Design Opportunity

The second stage of the Double Diamond is to Define. This is where insights, participant comments and other findings from the Discover phase are presented and analysed, with the ultimate goal of defining a specific design opportunity to be developed in stage 3 of the Double Diamond, the Develop phase. This section begins with a presentation of the participants’ statements regarding the use of gesture-driven interaction and general findings that should be considered at a later stage of the process. This stage of the process was initiated by writing down crucial findings and observations on post-its and sorting them by insights, patterns, problems and “How Might We…?”-questions (HMW). The purpose was to get an overview of the essential knowledge and thereby simplify the task of defining a specific design opportunity.

5.1 Workshop Findings

5.1.1 End User Comments on Gesture Interaction

The attitude towards using gestures for controlling an interface was mainly positive: participants stated it felt “natural”, “quite convenient” and generally “good”. A negative remark was added by one participant: that it might feel “silly” to use gestures in public, since it is an uncommon way of interacting with technology and the general population might be perplexed by the sight of someone waving strangely into empty space. When asked if they felt physically strained after using gestures, the participants answered no, one of them claiming “humans use gestures all the time anyway” and “I didn’t use any large gestures. If I did, it might be different, but smaller gestures such as the ones I did wasn’t straining at all”.

Since all participants’ gestural responses involved their index finger as the primary tool for interaction, they were asked why that might be the case. The answers were rooted in how we use existing technology, especially smartphones, which seemingly had a strong influence on how they intuitively responded within a gesture-based system. One participant said, “I did the