Mälardalen University

School of Innovation, Design and Engineering

Västerås, Sweden

Thesis for the Degree of Master of Science in Computer Science with Specialization in Embedded Systems, 30.0 credits

Resource Virtualization for

Real-time Industrial Clouds

Sharmin Sultana Sheuly

ssy14002@student.mdh.se

Examiner: Moris Behnam

moris.behnam@mdh.se

Supervisor: Mohammad Ashjaei

mohammad.ashjaei@mdh.se

June 10, 2016

Abstract

Cloud computing is emerging very fast, as it has the potential to transform the IT industry by replacing local systems and to reshape the design of IT hardware. It helps companies share their infrastructure resources over the internet, ensuring better utilization of them. Because of cloud computing, developers nowadays do not need to deploy expensive hardware or hire people to maintain it. Such elasticity of resources is new in the IT world. With the help of virtualization, clouds meet different types of customer demand and ensure better utilization of resources. There are two dominant types of virtualization technique: (i) hardware level or system level virtualization, and (ii) operating system (OS) level virtualization. In industry, system level virtualization is commonly used. However, it introduces some performance overhead because of its heavyweight nature. OS level virtualization is replacing system level virtualization, as it is lightweight and has lower performance overhead. Nevertheless, more research is necessary to compare these two technologies in terms of performance overhead. In this thesis, the two technologies are compared to find the one most suitable for a real-time industrial cloud. XEN is chosen to represent system level virtualization, and Docker and OpenVZ to represent OS level virtualization. The performance criteria considered in the comparison are migration time, downtime, CPU consumption during migration, and execution time. The evaluation showed that the OS level virtualization technique OpenVZ is more suitable for a real-time industrial cloud, as it has better migration utility, shorter downtime and lower CPU consumption during migration.

Contents

1 Introduction

1.1 Motivation

1.2 Thesis Contributions

1.3 Thesis Outline

2 Background

2.1 Cloud computing

2.2 System Level Virtualization

2.2.1 XEN

2.2.2 XEN Toolstack

2.2.3 XEN Migration

2.3 Operating System (OS) Level Virtualization

2.3.1 Docker

2.3.2 OpenVZ

2.3.3 OpenVZ Migration

3 Problem Formulation

4 Solution Method

5 System set-up

5.1 Local Area Network (LAN) Set up

5.2 XEN Hypervisor

5.2.1 XEN installation

5.2.2 Setting up Bridged Network

5.2.3 DomU Install with Virt-Manager

5.2.4 Virtual machine migration

5.3 Docker

5.3.1 Docker Installation, Container Creation and Container Migration

5.4 OpenVZ

5.4.1 OpenVZ Installation

5.4.2 Container Creation

5.4.3 Container Migration

6 Evaluation

6.1 Migration time

6.2 Downtime

6.3 CPU consumption during migration

6.4 Execution Time

6.5 Evaluation Result

7 Related work

8 Conclusion

8.1 Summary

8.2 Future Work

Appendix A

A.1 XEN toolstack

A.1.1 XEND Toolstack

A.1.2 XL Toolstack

A.1.3 Libvirt

A.1.4 Virt-Manager

Appendix B

B.1 Docker Installation, Container Creation

List of Figures

1 Different cloud types [1].

2 XEN architecture (adapted from [2]).

3 Major Docker components (high level overview) [3].

4 OpenVZ architecture [4].

5 Solution method used in this thesis.

6 Industrial real-time cloud.

7 LAN set up.

8 Start of migration of virtual machine vmx.

9 vmx migrated and paused in the destination host.

10 Migration of vmx completed.

List of Tables

1 Test bed specifications of XEN

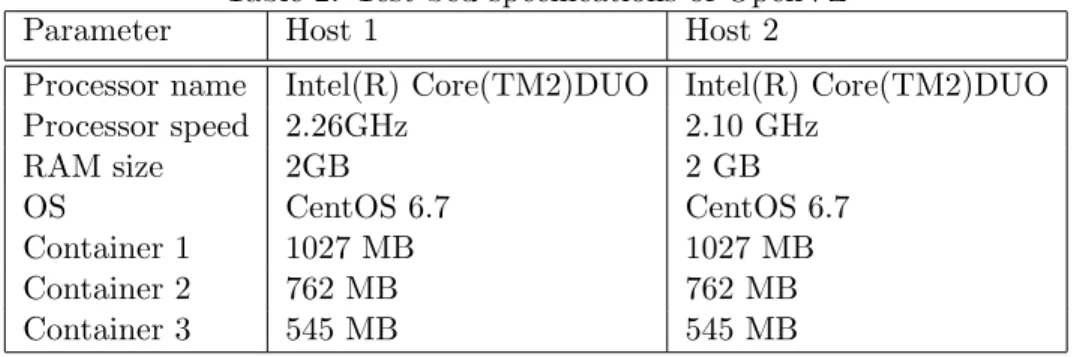

2 Test bed specifications of OpenVZ

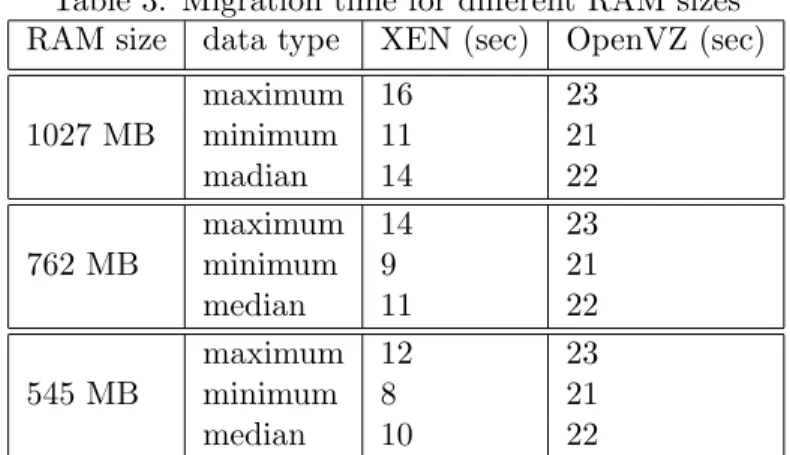

3 Migration time for different RAM sizes

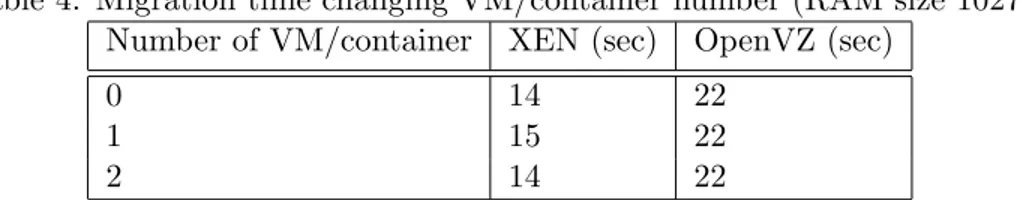

4 Migration time for different numbers of VMs/containers (RAM size 1027)

5 Migration time for different host CPU consumption (RAM size 1027)

6 Downtime for different RAM sizes

7 CPU consumption during migration (in percent)

8 Execution time

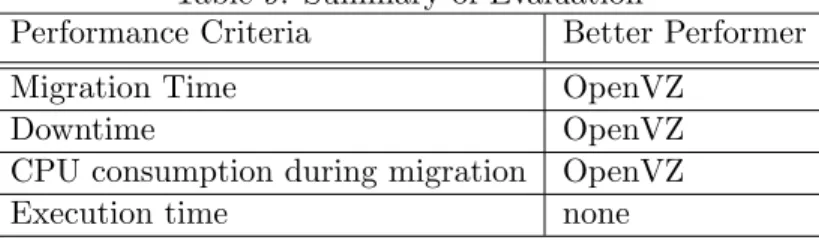

1 Introduction

Cloud computing has become a widely used term in the networking world. Users all over the world utilize IT services offered by cloud computing, which provides infrastructure, platform, and software as services. These services are consumed in a pay-as-you-go model. In the industrial world, the cloud has become an alternative to local systems as it performs better [5], [6]. In an industrial real-time cloud, all the required computation is conducted in the cloud upon an external event, and based on this computation a response is provided within a predefined time. However, a survey conducted in 2008 in six data centres showed that servers utilize only 10-30% of their total processing capacity, while the average CPU utilization of desktop computers is below 5% [7]. In addition, with the growth of VLSI technology, computing power is advancing rapidly. Unfortunately, this power is not fully exploited, as a single process running on a system does not use that many resources.

The solution to the above problem lies in virtualization, which refers to the unification of multiple systems onto a single system without compromising performance and security [8], [9]. For this reason, virtualization has become a dominant technology in the industrial world. Server virtualization increases the capability of data centers. Virtualization is being applied ever more extensively in areas such as cloud environments, the Internet of Things, and network functions [10]. In addition, High-Performance Computing (HPC) centres use this technology to fulfil their clients' needs. For example, some clients may want their application to run on a specific operating system while others may require specific hardware. To address these issues, the users first specify their needs, and a virtual environment is then set up for them according to this specification, which is known as plug-and-play computing [11]. There exist three virtualization techniques: (i) hardware level or system level virtualization, (ii) operating system level virtualization and (iii) high-level language virtual machines [12], [8]. Among these techniques, the first two are dominant. The difference between these two techniques lies in where the host (virtualizing part) and the domain (virtualized part) are separated [8]: in hardware level virtualization the separation is at the hardware abstraction layer, while in operating system level virtualization it is at the system call/ABI layer.

1.1 Motivation

Hardware level virtualization is the dominant virtualization technology in the industrial world, as it provides good isolation and encapsulation. Because of the isolated environment, one virtual machine (VM) cannot access code running in another VM. However, this isolation comes at the cost of performance overhead. System calls in VMs are handled by the virtual machine monitor, or hypervisor, which introduces additional latency: if a guest issues a hardware operation, it must be translated and reissued by the hypervisor. This latency increases the response time of tasks running inside the VM. Another challenge is the semantic gap between the virtual machine and the service, which arises because the guest OS provides an abstraction to the VM and VMs operate below it [13]. In addition, the RAM allocated to VM instances should not exceed the host RAM, which restricts the number of VMs that can run on the host. Every VM instance also has its own operating system, which makes it heavyweight. Because of these problems, industry is moving towards operating system level virtualization, a lightweight alternative to hardware level virtualization. In OS level virtualization there is no abstraction of hardware; instead, the system is partitioned into containers. A process running in one container cannot access processes in another container. Containers share the host kernel and no extra OS is installed in each container, which makes them lightweight. However, in terms of isolation, hardware level virtualization outperforms operating system level virtualization.

There exists work comparing virtualization techniques in the context of industrial systems such as MapReduce (MR) clusters [14], general usage [15], [16], [17], and high performance scientific applications [18]. However, no work has been conducted to find a suitable virtualization technique for a real-time industrial cloud. In a real-time system, timely performance is very important. For this reason, virtualization techniques should be compared taking into account performance criteria that affect real-time constraints (timely response). Previous work considered industrial systems, general usage or high performance scientific applications without considering real-time constraints in the comparison. Therefore, this thesis focuses on contrasting hardware level virtualization and operating system level virtualization with respect to different performance criteria, in order to find a suitable virtualization technique for a real-time industrial cloud.

1.2 Thesis Contributions

There exists research work comparing different hardware level virtualization techniques, as well as comparisons between hardware level virtualization and operating system level virtualization. However, no work has been conducted to find a suitable virtualization technique for a real-time industrial cloud.

The goal of the thesis is to find a virtualization technique which is suitable to be used in real-time cloud computing in industry.

We achieve the main goal through the following contributions:

• We review the work related to cloud performance overhead.

• We investigate virtualization techniques.

• We set up systems using hardware level virtualization and operating system level virtualization in turn.

• We evaluate performance criteria in these systems to find a suitable virtualization technique for a real-time industrial cloud.

1.3 Thesis Outline

This thesis report consists of eight sections, organised as follows:

Section 2 describes the background of this thesis: cloud computing, the system level virtualization technique XEN, and the operating system level virtualization techniques Docker and OpenVZ.

In Section 3, the problem is formulated and the research question is described.

Section 4 describes the solution method used to answer the research question.

Section 5 contains the procedure followed to set up the systems.

Section 6 describes the evaluation of these systems; different performance criteria are measured and the resulting data are reported in this section.

Section 7 contains the work related to performance evaluation in virtualized cloud environments and explains how this thesis differs from those works.

Section 8 concludes the thesis with a summary and future work.

2 Background

This section describes the background of this thesis, which includes cloud computing, system level virtualization and operating system (OS) level virtualization.

2.1 Cloud computing

Cloud computing has emerged as an alternative to on-premises computing services [6]. Services are provided to consumers by elements that are not visible to them (as if covered by a cloud), and these services are accessed via the internet [1]. A cloud service refers not only to the software provided to the consumer but also to the hardware in the data center that is used to support it. The advantages of the cloud are: (i) seemingly infinite computing resources are available on demand, (ii) users can start on a small scale and increase resources when necessary, and (iii) users can release resources when they are no longer needed [19]. If the service is provided to the public in a pay-as-you-go manner, it is called a public cloud, and the services sold are called utility computing. Cloud services provided by an internal data center and not available to the public are called a private cloud [19]. A combination of private and public cloud is called a hybrid cloud [20], while a community cloud is built for a special purpose. Figure 1 shows the different types of cloud.

Figure 1: Different cloud types ([1]).

As a business model, cloud services are divided into three layers: Software as a Service (SaaS), Platform as a Service (PaaS), and Infrastructure as a Service (IaaS) [21].

SaaS refers to applications provided to the consumer with the help of the cloud platform and infrastructure layers [22]. An example of SaaS is Google Apps (Google Calendar, word and spreadsheet processing, Gmail etc.), whose applications are accessible via a web browser. The underlying infrastructure (i.e. servers, network, operating systems etc.) cannot be managed by the customer [23,22].

PaaS provides a platform on which consumers can write their applications and load the corresponding code into the cloud. It contains all the libraries and services necessary to develop the application. The developer can control the configuration of the application environment, but not the underlying platform. For example, Grails, Ruby and Java applications can be deployed with Google App Engine [23,22].

IaaS uses virtualization technology to provide computing, network and storage resources. With the help of this service, consumers can run arbitrary software in the cloud. For example, Amazon provides computing and storage resources to consumers [23,22].

2.2 System Level Virtualization

System level virtualization, also known as hardware level virtualization, means virtualizing hardware to create an environment that behaves like an individual computer with its own OS; many such virtual computers can be created on top of a single hardware platform [24]. The abstraction of memory, CPU operations, networking and I/O activity is very close to (sometimes equal to) that of a real machine [8]. The reasons for using system level virtualization are: (a) secure separation, (b) server consolidation and (c) portability [2], [25]. The hypervisor has become the fundamental software for realizing system level virtualization. It is the entity in a system responsible for creating and managing the virtual machines running on top of it. Companies like Red Hat, VMware, xen.org and Microsoft are well-known providers of this technology. A computer on which virtual machines are running is called the host computer, and the virtual machines are called guest machines [9].

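There are two types of hypervisor: a type I hypervisor runs directly on the hardware (bare metal), whereas a type II hypervisor runs on top of a host operating system. XEN is a type I hypervisor. As explained earlier, XEN was chosen in this work to represent system level virtualization; a brief description of it is given below.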

2.2.1 XEN

The XEN hypervisor is an x86 virtual machine monitor (VMM) that facilitates efficient utilization of resources through consolidation [26], co-location of hosting services, computation in a secure environment [27] and easy migration of virtual machines [28]. It allows multiple guest OSes to run simultaneously on a single hardware platform. The XEN architecture consists of two distinct kinds of domains, Dom0 and DomU, as shown in Figure 2. Dom0 is a Linux OS that has been modified to serve a special purpose: all the virtual machines, also known as DomUs, are created and configured through Dom0, as it provides the control interface for doing so (Figure 2) [9].

Figure 2: XEN architecture (adapted from [2]).

Here, we briefly describe three basic aspects of the virtual machine interface (the paravirtualized x86 interface) [2].

Memory management

Memory management is one of the most difficult parts of paravirtualizing the x86 architecture, as it requires special mechanisms in the hypervisor as well as modifications to each guest OS. These tasks would be easier if the translation lookaside buffer (TLB) were managed by software [29]. Guest OSes cannot install segment descriptors with full privilege, as XEN does, and their segments may not overlap the address space reserved for XEN.

The guest OS does not have direct write access to its page tables; XEN validates every update before it is applied.
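The validation rule can be illustrated with a toy model. The sketch below is our own simplification, not XEN code: it assumes a table `frame_owner` mapping machine frames to domains and a set `page_table_frames` of frames currently used as page tables, and the class `ToyHypervisor` and method `request_pte_update` are hypothetical names. It checks two conditions in the spirit of XEN's validation: a guest may only map frames it owns, and it may never map a page-table frame writable.

```python
from dataclasses import dataclass, field


@dataclass
class ToyHypervisor:
    """Toy model of hypervisor-validated page-table updates (not XEN's code)."""
    frame_owner: dict[int, str]      # machine frame number -> owning domain
    page_table_frames: set[int]      # frames currently used as page tables
    # per-domain page tables: virtual page -> (machine frame, writable?)
    page_tables: dict[str, dict[int, tuple[int, bool]]] = field(default_factory=dict)

    def request_pte_update(self, domain: str, vpage: int, frame: int,
                           writable: bool) -> bool:
        """Validate a guest's request to map vpage -> frame; apply it only if valid."""
        # Rule 1: a guest may only map machine frames that belong to it.
        if self.frame_owner.get(frame) != domain:
            return False
        # Rule 2: a page-table frame must never be mapped writable, otherwise the
        # guest could edit its page tables directly and bypass validation.
        if writable and frame in self.page_table_frames:
            return False
        self.page_tables.setdefault(domain, {})[vpage] = (frame, writable)
        return True


xen = ToyHypervisor(frame_owner={10: "domU1", 11: "domU1", 20: "domU2"},
                    page_table_frames={11})
print(xen.request_pte_update("domU1", 0x400, 10, writable=True))   # True
print(xen.request_pte_update("domU1", 0x401, 20, writable=False))  # False: frame owned by domU2
print(xen.request_pte_update("domU1", 0x402, 11, writable=True))   # False: page-table frame
```

Rejecting a request instead of applying it is what keeps a guest from gaining access to memory outside its own allocation.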

CPU

In general, no other entity in a system has more privilege than the operating system (OS). In a virtualized environment, however, XEN has more privilege than the guest operating system, so the guest OS must be modified to run at a lower privilege level. To handle system calls efficiently, a fast exception handler can be installed in the guest OS; it is invoked directly by the processor, which avoids indirection through XEN [2].

Device I/O

Instead of emulating existing hardware devices, XEN exposes device abstractions, which enables isolation. I/O data to and from each guest OS is transferred via XEN using shared memory and asynchronous buffer-descriptor rings [2].
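A buffer-descriptor ring of this kind can be sketched as a fixed-size circular buffer in shared memory: the guest (front end) produces requests, and the driver domain (back end) consumes them and writes responses into the same slots. This is a simplified single-threaded model under our own assumptions: the class `DescriptorRing`, its methods and the free-running producer/consumer counters are illustrative, and a real ring additionally needs memory barriers and event notifications between domains.

```python
class DescriptorRing:
    """Simplified model of a request/response descriptor ring (illustrative only)."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.slots: list = [None] * size  # shared memory: a request or a response per slot
        self.req_prod = 0  # advanced by the front end (guest)
        self.req_cons = 0  # advanced by the back end (driver domain)
        self.rsp_prod = 0  # advanced by the back end
        self.rsp_cons = 0  # advanced by the front end

    # --- front end (guest OS) ---
    def push_request(self, request) -> bool:
        # A slot becomes free only once the response stored in it has been consumed.
        if self.req_prod - self.rsp_cons == self.size:
            return False  # ring full: the guest has to wait
        self.slots[self.req_prod % self.size] = request
        self.req_prod += 1
        return True

    def pop_response(self):
        if self.rsp_cons == self.rsp_prod:
            return None  # no response available yet
        response = self.slots[self.rsp_cons % self.size]
        self.rsp_cons += 1
        return response

    # --- back end (driver domain) ---
    def serve_one(self, handler) -> bool:
        if self.req_cons == self.req_prod:
            return False  # no pending request
        request = self.slots[self.req_cons % self.size]
        self.req_cons += 1
        # Requests are served in order, so this is the slot the request came from.
        self.slots[self.rsp_prod % self.size] = handler(request)
        self.rsp_prod += 1
        return True


ring = DescriptorRing(size=2)
print(ring.push_request("read block 7"))  # True
print(ring.push_request("read block 8"))  # True
print(ring.push_request("read block 9"))  # False: both slots are in use
ring.serve_one(lambda req: req.replace("read", "done"))
print(ring.pop_response())                # done block 7
print(ring.push_request("read block 9"))  # True: a slot has been freed
```

Because neither side blocks the other, the guest can queue several requests before the back end handles them, which is what makes the rings asynchronous.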

2.2.2 XEN Toolstack

XEN offers different toolstacks that provide the user interface:

1. XEND Toolstack

2. XL Toolstack

3. Libvirt

4. Virt-Manager

A detailed description of these toolstacks can be found in Appendix A.

2.2.3 XEN Migration

Virtual machines are only loosely coupled to the physical machine, so they can be migrated to another physical machine. XEN supports migrating virtual machines from one host to another. Some of the advantages of VM migration are:

• Load balancing: If a host becomes overloaded, some VMs can be moved to a host with lower resource usage.

• Hardware failure: If the host hardware fails, the guest VMs can be migrated to another host while the failed hardware is repaired.

• Saving energy: If a host is not running a significant number of VMs, its VMs can be redistributed to other hosts and the host can be powered off. In this way energy can be saved at times of low usage.

• Lower latency: To reduce latency, VMs can be moved to another host.
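Migration itself only moves a VM; deciding which VM to move is a separate policy decision. The load-balancing case can be illustrated with one simple, hypothetical greedy policy: while the most loaded host exceeds a threshold, move its least loaded VM to the least loaded host, provided the target stays under the threshold. The function name `rebalance`, the 80% threshold and the use of CPU load as the only metric are our own assumptions.

```python
def rebalance(hosts: dict[str, dict[str, int]],
              threshold: int = 80) -> list[tuple[str, str, str]]:
    """Greedy load balancing; returns the migrations (vm, source, target) performed.

    hosts maps host name -> {VM name: CPU load in percent of one host's capacity};
    all hosts are assumed to have equal capacity. `hosts` is updated in place.
    """
    migrations = []
    while True:
        load = {h: sum(vms.values()) for h, vms in hosts.items()}
        source = max(load, key=load.get)
        target = min(load, key=load.get)
        if load[source] <= threshold or source == target:
            return migrations
        # Heuristic: try the VM with the smallest load on the overloaded host.
        vm = min(hosts[source], key=hosts[source].get)
        if load[target] + hosts[source][vm] > threshold:
            return migrations  # moving it would only overload the target host
        hosts[target][vm] = hosts[source].pop(vm)
        migrations.append((vm, source, target))


cluster = {"host1": {"vm1": 50, "vm2": 30, "vm3": 20}, "host2": {"vm4": 10}}
print(rebalance(cluster))  # [('vm3', 'host1', 'host2')]
```

Each tuple returned corresponds to one migration command that would then be issued with one of the toolstacks.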

It is not required to restart the server after a virtual machine has been migrated, and the downtime is very low [31]. There are two types of migration: (i) offline migration and (ii) live migration.

Offline Migration

In offline migration, the VM is suspended and a copy of its memory is moved to the destination host. The VM is resumed on the destination host, while its memory on the source host is freed. The migration time mainly depends on network bandwidth and latency [30]. Different toolstacks can be used for offline migration, for example:

• XM toolstack: xm migrate <domain> <host>

• XL toolstack: xl migrate <domain> <host>

• Libvirt API: virsh migrate GuestName libvirtURI
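As a rough first-order estimate (our own simplification, not a formula taken from [30]), consider a VM with memory size M transferred over a link with bandwidth B and latency L. Because the VM is suspended for the whole transfer, offline migration time and downtime are essentially the same:

```latex
T_{\mathrm{mig}} \approx T_{\mathrm{suspend}} + \frac{M}{B} + L + T_{\mathrm{resume}},
\qquad
T_{\mathrm{down}} \approx T_{\mathrm{mig}}
```

For example, ignoring suspend and resume costs, moving M = 1 GiB (1073741824 bytes) over a 1 Gbit/s link (B = 125 × 10^6 bytes/s) takes about 8.6 s, during which the VM is unavailable.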

Live Migration

In live migration, the migrating VM keeps running while its memory is being transferred. If a page is modified during the transfer, the change is also sent to the destination, so that the copied memory is updated accordingly. Finally, the VM is paused briefly while the remaining modified pages are copied, its registers are loaded on the new host, and the migrating VM is resumed. Different toolstacks can be used for live migration, for example:

• XM toolstack: xm migrate --live <domain> <host>

• XL toolstack: xl migrate --live <domain> <host>

• Libvirt API: virsh migrate --live GuestName libvirtURI

2.3 Operating System (OS) Level Virtualization

In OS level virtualization, the operating system is modified so that it acts as a host for virtualization, and each container contains its own root file system, executables and system libraries [32], [33]. Applications run in containers (domains), and each container has access to virtualized resources. There is no redundancy, as only one OS runs in the system. In system level virtualization, the hypervisor works as an emulator that tries to emulate the whole computing environment (i.e. RAM, disk drive, processor type etc.). In OS level virtualization, on the other hand, resources (disk space, kernel etc.) are shared among guest instances instead of emulating an actual machine. In the following subsections, Docker and OpenVZ are described as representatives of operating system (OS) level virtualization [34].

2.3.1 Docker

Docker uses container-based technology, in which several domains (containers) run on a single OS, which makes it lightweight [35]. It adds an extra layer of operating system abstraction for deploying applications and provides APIs to create containers that run processes in isolation. The Docker solution consists of the following components (high level overview, Figure 3):

• Docker image

Docker containers are launched from Docker images, which are the building blocks of the Docker world; an image can be considered the source code of a Docker container [36]. If the Linux kernel is considered layer zero, each time an image is run a new layer is put on top of it [37].

• The Docker daemon

The Docker daemon is responsible for creating and monitoring Docker containers, and it also manages Docker images. The daemon is launched by the host operating system [3].

• Docker containers

Each Docker container behaves like a separate operating system that can be booted or shut down, although there is no hardware virtualization or emulation in Docker. Any application can be converted into a lightweight container with the help of Docker [35]. A Docker container contains not only a software component but also all of its dependencies (binaries, libraries, scripts, jars etc.) [34].

• Docker client

Docker clients communicate with the Docker daemon to manage containers; for this communication the command line client binary docker is used [36].

• Registries

Docker registries are used to store and distribute Docker images. There are two types of registries: public and private. The public registry, Docker Hub, is operated by Docker Inc. Private registries are owned by organizations and avoid the overhead of downloading images from the internet [3,36].

Figure 3: Major Docker components (High level overview) [3].
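How stacked image layers present a single file system can be sketched with a simplified union lookup: a path is resolved from the top (writable container) layer downwards and the first layer that contains it wins, while a deletion in an upper layer is recorded as a "whiteout" marker that hides the lower copies. This only loosely models the idea behind Docker's layered storage; the `WHITEOUT` marker, the function `resolve` and the layer contents are illustrative assumptions.

```python
WHITEOUT = object()  # marker meaning "deleted in this layer" (illustrative)


def resolve(layers: list[dict[str, object]], path: str):
    """Return the content of `path` as seen through a stack of layers.

    layers[0] is the bottom (base image) layer, layers[-1] the top, writable one.
    """
    for layer in reversed(layers):  # search from the top layer downwards
        if path in layer:
            content = layer[path]
            return None if content is WHITEOUT else content
    return None  # the path exists in no layer


base = {"/etc/os-release": "debian", "/bin/sh": "dash"}
app = {"/app/run.py": "print('hi')", "/etc/os-release": "debian (patched)"}
container = {"/bin/sh": WHITEOUT}  # file deleted inside the running container

stack = [base, app, container]
print(resolve(stack, "/etc/os-release"))  # debian (patched)
print(resolve(stack, "/bin/sh"))          # None
print(resolve([base, app], "/bin/sh"))    # dash: the image layers are unchanged
```

Since changes only ever land in the top layer, many containers can share the same read-only image layers.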

2.3.2 OpenVZ

OpenVZ is an OS level virtualization technology for Linux. It creates multiple isolated containers, also known as virtual environments (VEs), on a physical server, and it uses a modified version of the Linux kernel. A container has its own root account, IP address, users, files, applications, libraries, CPU quotas and network configuration. All the containers share the resources as well as the kernel of the host, so containers must run the same OS kernel as the physical server (Linux). Compared to a real machine, a container has limited functions. Two types of virtual network interfaces are available to the containers: (i) the virtual network device (venet) and (ii) the virtual Ethernet device (veth). A venet device has limited functionality and simply creates a connection between the container and the host OS, but it has lower overhead. The overhead of a veth device is slightly higher, but its advantage is that it acts as an Ethernet device. The architecture of OpenVZ is shown in Figure 4 [38], [39], [4].

Figure 4: OpenVZ architecture [4].

Kernel Isolation

• Process: A namespace abstracts a global system resource, so that a process within the namespace behaves as if it had its own instance of that resource. In OpenVZ, each container has a separate PID namespace; because of this, the set of processes inside a container can be suspended or resumed. In addition, every container has its own interprocess communication (IPC) namespace, which separates IPC resources such as System V IPC objects and POSIX message queues [40].

• Isolating the file system: In OpenVZ, the file system is isolated using chroot, which changes the root directory of a process and its children to isolate them from the rest of the computer. It is analogous to putting a process in jail: a process running in a changed-root environment cannot access files outside that directory. Using this mechanism, isolation is provided to applications, system libraries etc. [40], [41].

• Isolating the network: In OpenVZ, network isolation is achieved using the net namespace. Each container has its own IP address, as well as its own routing tables and filters [41].
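The effect of chroot on path resolution can be sketched as a function that maps a path seen inside a container to the corresponding host path. Because ".." at the root of a chrooted process stays at the root, a path can never climb out of the container's directory. The sketch is purely lexical (it ignores symbolic links and mount points, which the kernel does take into account), the function `host_path` is hypothetical, and the container root `/vz/root/101` is only an example.

```python
import posixpath


def host_path(container_root: str, path: str, cwd: str = "/") -> str:
    """Map a path seen inside a chrooted container to a path on the host."""
    # Resolve the path against the container's own root; normpath drops any
    # '..' that would go above '/', just as '..' at a chroot root stays there.
    inside = posixpath.normpath(posixpath.join(cwd, path))
    relative = inside.lstrip("/")
    return posixpath.join(container_root, relative) if relative else container_root


print(host_path("/vz/root/101", "/etc/passwd"))       # /vz/root/101/etc/passwd
print(host_path("/vz/root/101", "../../etc/shadow"))  # /vz/root/101/etc/shadow
```

However many ".." components a process uses, the result stays below the container root, which is the confinement that chroot provides.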

Resource management

Resource management is necessary for multiple containers to function properly on a single host. In OpenVZ, there are four primary controllers for resource management:

• User beancounters (and VSwap): a set of limits and guarantees that prevents a single container from using all the system resources.

• Disk quota: Each container can have a disk quota, and inside a container there can additionally be user/group disk quotas. Therefore, OpenVZ has two-level disk quotas.

• CPU fair scheduler: The OpenVZ CPU scheduler is a two-level scheduler. The first-level scheduler determines which container gets the next time slice, taking into account the containers' CPU priorities. The second-level scheduler decides which process to run inside that container, taking into account process priorities [4].

• I/O priorities and I/O limits: Every container has its own I/O priority, and the available I/O is distributed according to the assigned priorities [41].

2.3.3 OpenVZ Migration

In OpenVZ, a container can be migrated from one host to another. This is done by checkpointing the state of the container (using an extension of the OpenVZ kernel) and restoring it on the destination host; the container can also be restored on the same host. Without checkpointing, migration was only possible by shutting down the container and then rebooting it, which introduces a long downtime that is not transparent to the user. Checkpointing solves this problem.

The checkpoint/restore process has two phases. First, in the checkpointing phase, the current state of the process is saved, including its address space, register set, allocated resources and other process private data. In the restoration phase, the checkpointed process is reconstructed from the saved image, and execution is resumed at the point of suspension. OpenVZ provides a special utility called vzmigrate to support migration, with which live migration can be performed. Live migration refers to a process in which the container is frozen for a very short time during migration, so that the migration remains transparent to the user. Live (online) migration can be performed with the command vzmigrate --online <host> VEID, where 'host' refers to the IP address of the destination host and 'VEID' refers to the ID of the container. Migration can also be performed without the vzmigrate utility; for this, the vzctl utility and a file system backup tool are necessary. A container can be checkpointed with the command vzctl chkpnt VEID --dumpfile <path>.

This command saves the state of the migrating container to the dump file specified by the path and stops the container. The dump file and the file system are then transferred to the destination node, where the target container is restored using the command vzctl restore VEID --dumpfile <path>. The file system at the time of restoration must be the same as the file system at the time of checkpointing [42,43].
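The two phases of checkpoint/restore can be illustrated at the level of a single toy process: its private state (a program counter, one register and an address space) is saved into a dump, the dump is "transferred", and the process is reconstructed and resumed at the point of suspension. This sketch uses JSON purely as a stand-in for OpenVZ's kernel-level dump format; the class `ToyProcess` and the functions `checkpoint` and `restore` are hypothetical.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ToyProcess:
    pc: int = 0                                           # "program counter": next step
    acc: int = 0                                          # a single "register"
    memory: dict[int, int] = field(default_factory=dict)  # "address space"

    def step(self) -> None:
        """Execute one instruction: add pc to the register and store the result."""
        self.acc += self.pc
        self.memory[self.pc] = self.acc
        self.pc += 1


def checkpoint(proc: ToyProcess) -> str:
    """Checkpoint phase: save the complete process state into a dump (JSON text)."""
    return json.dumps({"pc": proc.pc, "acc": proc.acc,
                       "memory": sorted(proc.memory.items())})


def restore(dump: str) -> ToyProcess:
    """Restore phase: reconstruct the process from the saved image."""
    state = json.loads(dump)
    return ToyProcess(pc=state["pc"], acc=state["acc"],
                      memory={addr: value for addr, value in state["memory"]})


# Run 5 steps on the "source host", checkpoint, then finish on the "destination".
source = ToyProcess()
for _ in range(5):
    source.step()
dump = checkpoint(source)   # state frozen in the dump, which would be transferred
migrated = restore(dump)
for _ in range(5):
    migrated.step()         # execution resumes at pc == 5

reference = ToyProcess()
for _ in range(10):
    reference.step()
print(migrated == reference)  # True: same state as an uninterrupted run
```

The restored process ends in exactly the same state as one that was never interrupted, which is the property that makes checkpoint-based migration transparent; as the text notes, for a real container the file system must also match at both ends.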

3 Problem Formulation

Traditionally, virtual machines (VMs) are used to achieve isolation and controlled, efficient usage of resources. Isolation is achieved by deploying applications in separate VMs, while controlled usage of resources is obtained by creating VMs with resource constraints. VMs create an extra level of abstraction, which comes at a cost in performance. An experiment showed that with the system level virtualization technique XEN, response time increased by 400% as the number of applications increased from one to four. Another experiment showed that the peak consumption of two nodes was 3.8 CPUs; however, after the two were consolidated into virtual containers, CPU consumption could rise beyond 3.8 CPUs because of virtualization overhead, and it became even higher during migration. Experiments showed that OS level virtualization outperforms system level virtualization in terms of performance overhead [44]. However, system level virtualization is traditionally used. Therefore, more experiments should be conducted to compare these two types of virtualization technologies in terms of performance overhead.

Virtualization technology reduces cost but increases the probability of lower application availability: when a host fails, all the VMs running on it fail, stopping the applications running in them. To keep applications running continuously, features such as live migration and checkpoint/restore were introduced to virtualized environments. However, in spite of these features, there is still a short time during migration when applications are unavailable. In addition, not all virtualization environments provide migration features [16]. On the other hand, it is crucial for cloud providers to maximize resource usage to obtain a return on investment, and migration of VMs is one of the methods used for this [45]. One experiment showed a 1% drop in sales of Amazon's EC2 because of 100 ms of latency. Similarly, because of a rise in search response time, Google's profit decreased by 20% [46]. For this reason, latency due to migration should be absent or as low as possible. Therefore, analysing the performance overhead related to migration in a real-time industrial cloud is very important.

The research question addressed by this thesis is:

Which virtualization technique is suitable to be used in real-time cloud computing in industry?

4 Solution Method

To conduct the thesis, we followed several steps, which are shown in Figure 5.

First Step: In the first step, we reviewed research papers to gain insight into the performance overhead of real-time industrial clouds.

Second Step: In the second step, a review was conducted to find commonly used OS level and system level virtualization software in the same context. For hardware level virtualization, XEN was chosen as the virtual machine (VM) hypervisor. The reason for choosing XEN was that it provides secure execution of virtual machines without significant performance degradation, while other commercial or free solutions provide one of these at the expense of the other [2]. In addition, it has a migration utility. XEN is used by popular internet hosting service companies like Amazon EC2, IBM SoftLayer, Liquid Web, Fujitsu Global Cloud Platform, Linode, OrionVM and Rackspace Cloud.

Figure 5: Solution method used in this thesis.

To represent operating system level virtualization, Docker and OpenVZ, which are open source projects, were chosen. Docker was chosen because it is open source and provides easy containerization. In addition, companies like Google and Microsoft are adopting this technology [47]. Schibsted Media Group, Dassault Systemes, Oxford University Press, Amadeus, EURECOM, Swisscom, The New York Times, Orbitz, Cloud Foundry PaaS [48], Red Hat's OpenShift Origin PaaS [49], Apprenda PaaS [50] etc. are some of the customers of Docker, and it is a very fast growing technology [51]. However, Docker was excluded from the evaluation because Docker containers could not be migrated. OpenVZ was chosen because it is open source and has advantages such as (i) better efficiency, (ii) better scalability, (iii) higher container density and (iv) better resource management. In addition, it has a utility for conducting migration, while Docker does not provide such a utility. Some of the OpenVZ customers are Atlassian, Funtoo Linux, FastVPS, MontanaLinux, Parallels, Pixar Animation Studios, Travis CI, Yandex etc. [52].

Third Step: In the third step, the performance criteria that are crucial for a real-time industrial cloud were identified. Migration time, downtime, execution time and CPU consumption during migration are some of the performance criteria that affect a real-time industrial cloud. Migration time is the time taken by a virtual machine (VM)/container to migrate from one host to another, while downtime is the period during which the VM/container service is not available to the user. CPU utilization indicates how much of the computer's processing power is in use. The execution time of a program is the time the system needs to execute that program.

In a real-time system, an action is performed in response to an event within a predefined time, and it is crucial that the action completes within this time constraint. Figure 6 shows a high-level overview of an industrial real-time cloud containing two servers. The cloud performs computation on the sensor data sent to it and transmits the result to the actuator. For example, consider a real-time task A executing in a VM/container on server1. If that VM/container is migrated during the execution and the downtime and migration time are too high, the task can miss its deadline. Therefore, a good migration utility and low downtime are required in this case. On the other hand, resource consumption during migration should be kept low to maintain good overall throughput. In this work, the resource considered was CPU consumption: high CPU consumption during migration may hamper the functionality of other tasks, so CPU consumption during migration is one of the performance criteria. If a task's execution time is long, the probability that the task misses its deadline increases and overall performance degrades. For this reason, execution time is also one of the performance criteria in the evaluation.

Figure 6: Industrial Real time cloud.
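The deadline argument above can be illustrated with a small model. This is a sketch under simplifying assumptions (a single task on one VM/container, execution suspended for exactly the migration downtime, no other interference); the function names and the numbers in the example are hypothetical and are not measurements from this thesis.

```python
def completion_time(release, exec_time, blackout_start, downtime):
    """Completion time (s) of a task released at `release` that needs
    `exec_time` seconds of execution, when its VM/container is unavailable
    during [blackout_start, blackout_start + downtime)."""
    blackout_end = blackout_start + downtime
    if blackout_start <= release < blackout_end:
        # Released while the VM/container is down: it can only start afterwards.
        return blackout_end + exec_time
    finish = release + exec_time
    if release < blackout_start < finish:
        # The blackout interrupts the execution and delays it by the full downtime.
        finish += downtime
    return finish


def meets_deadline(release, exec_time, relative_deadline, blackout_start, downtime):
    """True if the task still completes by release + relative_deadline."""
    return completion_time(release, exec_time, blackout_start, downtime) <= release + relative_deadline


if __name__ == "__main__":
    # Hypothetical task: 0.5 s of work, 1 s deadline, migration blackout starts at 0.2 s.
    for downtime in (0.0, 0.4, 2.0):
        ok = meets_deadline(0.0, 0.5, 1.0, 0.2, downtime)
        print(f"downtime {downtime:.1f} s -> deadline met: {ok}")
```

With these illustrative numbers, a short blackout is absorbed by the slack between execution time and deadline, while a downtime in the range of seconds (as measured later in this chapter) makes the task miss its deadline.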

Fourth Step: In the fourth step, the method for evaluating the chosen performance criteria was selected by reviewing related work. Akoush et al. [53] conducted pre-copy migration and measured migration time and downtime. Pre-copy migration has six stages (initialisation, reservation, iterative pre-copy, stop-and-copy, commitment, activation). The authors measured migration time as the time taken by all six stages, and downtime as the time taken by the last three stages (stop-and-copy, commitment, activation). However, the authors did not describe the measurement steps in detail. Hu et al. [54] designed an automated testing framework for measuring migration time and downtime, in which the test actions were carried out by a benchmark server. To measure the total migration time, the authors initiated migration using a command line utility and recorded the time before issuing the migration command and after it completed. To measure the downtime, they pinged the target VM. In this work, we followed the same method to measure migration time and downtime. The ping interval was 1 second, as the downtime was in the range of seconds. Migration time and downtime were measured while varying the RAM size of the migrating VM/container. In addition, migration time was measured while varying the number of VMs/containers running on the source host during the migration of the target VM/container. In another experiment, migration time was first measured with the source host at its normal CPU load. Then the source host was loaded as heavily as possible with the burnP6 stress program, a migration was performed from the loaded source host, and the migration time was measured again.
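The timestamp-based measurement of migration time described above can be sketched as follows. It assumes that the migration command blocks until the migration has finished; the command shown in the comment reuses the xm migrate --live form and the vmx/192.168.0.12 names used elsewhere in this thesis, while the stand-in command in the example only sleeps so that the sketch runs on any machine.

```python
import subprocess
import sys
import time


def timed_command(argv):
    """Run argv to completion; return (elapsed wall-clock seconds, exit code).

    A timestamp is taken before the command is issued and another once it
    has returned, as in the migration time measurement described above.
    """
    start = time.monotonic()  # monotonic clock: unaffected by NTP or manual clock changes
    result = subprocess.run(argv, check=False)
    elapsed = time.monotonic() - start
    return elapsed, result.returncode


if __name__ == "__main__":
    # Stand-in command so the sketch runs anywhere; on a XEN source host one
    # would pass e.g. ["xm", "migrate", "--live", "vmx", "192.168.0.12"].
    elapsed, rc = timed_command([sys.executable, "-c", "import time; time.sleep(0.5)"])
    print(f"exit code {rc}, elapsed {elapsed:.2f} s")
```

A monotonic clock is used rather than the wall-clock time of day so that a clock adjustment during a long migration cannot distort the measured interval.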

Shirinbab et al. [55] measured CPU utilization using the xentop command. In this work, we used the same command to measure CPU consumption on the XEN hosts, and the top command on the OpenVZ hosts. To measure the execution time of a program, a clock was started at the beginning of the program and stopped at its end; the interval measured by the clock is the execution time.
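This thesis relied on xentop and top for CPU consumption. As a swapped-in illustration of where such percentages come from, the sketch below derives CPU utilization on a Linux host from two samples of the aggregate counters in /proc/stat. It is an assumption-laden sketch, not the tools used here: in particular, /proc/stat only reflects the CPUs visible to the kernel that reads it, which is one reason a per-domain tool such as xentop is used on XEN.

```python
import time


def read_cpu_times(path="/proc/stat"):
    """Return the aggregate 'cpu' counters (in clock ticks) from /proc/stat."""
    with open(path) as f:
        for line in f:
            if line.startswith("cpu "):
                return [int(x) for x in line.split()[1:]]
    raise RuntimeError("no aggregate cpu line in " + path)


def cpu_utilization(prev, curr):
    """Percentage of CPU time spent non-idle between two /proc/stat samples.

    Field order: user nice system idle iowait irq softirq steal [guest guest_nice].
    Only the first eight fields are summed, because guest time is already
    counted in user/nice.
    """
    deltas = [c - p for p, c in zip(prev, curr)]
    total = sum(deltas[:8])
    idle = deltas[3] + deltas[4]  # idle + iowait
    if total == 0:
        return 0.0
    return 100.0 * (total - idle) / total


if __name__ == "__main__":
    before = read_cpu_times()
    time.sleep(1.0)  # sampling interval
    after = read_cpu_times()
    print(f"CPU utilization: {cpu_utilization(before, after):.1f}%")
```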

Fifth Step: In the fifth step, a small-scale cloud environment was set up in which XEN, Docker and OpenVZ were deployed in turn.

Sixth Step: In the last step, the implemented systems were compared in terms of migration time, downtime, CPU consumption during migration and execution time.

5 System set-up

5.1 Local Area Network (LAN) Set up

For the experiments in this thesis, we built a LAN of two personal computers connected through a Cisco WS-C2960-24TT-L Catalyst switch (the network is shown in Figure 7).

Figure 7: LAN set up.

Express Setup was used to configure the switch because no console cable was available; the switch was accessed at the address http://10.0.0.1. To check whether the LAN was set up correctly, both laptops were disconnected from the Internet, the static IP address of the first laptop was set to 192.168.2.2, and the first laptop was then pinged from the other one.

The plan was to install XEN on the computers shown in Figure 7 and measure all the selected performance criteria, then erase the computers, install Docker and measure the same criteria, and finally follow the same procedure for OpenVZ.

5.2 XEN Hypervisor

In this work, Community Enterprise Operating System (CentOS) 6.7 [56] was installed on two computers. Initially, different versions of Ubuntu were tried for this setup, Ubuntu 12.04.5 was chosen, and four virtual machines were created on top of it. However, we observed system crashes during booting after attempting VM migration. Therefore, it was decided to set up the system with CentOS, which is based on Red Hat Enterprise Linux (RHEL) and is more compatible with XEN than Ubuntu.

5.2.1 XEN installation

We started with 32-bit CentOS 6.7, as one of the computers has only 2GB of RAM. Unfortunately, the Xen4CentOS repository was not available for the 32-bit kernel, so both computers were set up with 64-bit CentOS 6.7, and the Xen4CentOS stack was installed afterwards [57]. Before booting the XEN-enabled kernel, we made sure that the boot configuration file was updated. After rebooting, we checked that the new kernel was running and confirmed that XEN was running by executing the 'xl info' and 'xl list' commands in the terminal. XEN version 4.4 Dom0 was running.

5.2.2 Setting up Bridged Network

A bridge was set up to provide networking for the XEN guests. A file called ifcfg-br0 was created in the /etc/sysconfig/network-scripts directory to define a bridge named br0. The main network device was eth0. Since CentOS 6.7 had no Ethernet interface configuration file, a configuration file ifcfg-eth0 was created in the directory /etc/sysconfig/network-scripts and edited so that the eth0 interface is attached to the bridge interface. On both computers the bridge was named br0.

5.2.3 DomU Install with Virt-Manager

The virtual machines (DomU) were created with virt-manager, although they can also be created with the XL or XM toolstack. Once the virt-manager interface appeared, it was connected to XEN, and fully virtualized virtual machines were created with it. In this work, the XEND service was enabled because migrating VMs with the XL toolstack and libvirt caused problems.

5.2.4 Virtual machine migration

A virtual machine was migrated from one computer running XEN to the other. The migration requirements are as follows:

1. If the VM is created on shared storage (both Dom0s see the same disk), the command xm migrate <domain> <host> migrates the VM to the destination host. If the VM is not created on shared storage, the destination host must have access to the root filesystem of the migrating VM. In this work, the VM was not created on shared storage: its root filesystem was contained in a disk image at /var/lib/libvirt/images/VMx.img. For a successful migration, the destination host must be able to access the VMx.img image file via the same path, /var/lib/libvirt/images/VMx.img. This was achieved by placing the image on a network file system (NFS) server (the source host) and mounting it on the NFS client (the destination host).

2. The destination host must have enough memory to accommodate the migrating VM.

3. The source and destination hosts must run the same version of the XEN hypervisor, as they did in this work.

4. VM migration is disabled by default, so the configuration of both the source and destination hosts was changed to allow it: the settings in /etc/xen/xend-config.sxp were changed to accept relocation requests from any server.

5. The firewall was disabled, as with it enabled there were difficulties connecting the NFS client to the NFS server [58,59].

After the required configuration was in place, the migration command was run in the terminal. Figure 8 shows the virtual machine vmx starting to migrate from the source host to the destination host (IP address 192.168.0.12). In Figure 9, vmx has arrived at the destination host and is paused there. Figure 10 shows that vmx has completed its migration and is running on the destination host.

Figure 10: Migration of vmx completed.

5.3 Docker

This subsection describes how Docker was installed, how containers were created and which problem was faced during migration. Docker was installed on two computers running Ubuntu 14.04.

5.3.1 Docker Installation, Container Creation and Container Migration

Docker does not have a utility for migrating containers. However, most of the performance criteria selected in this work are related to migration. For this reason, a Docker experimental binary was used instead of Docker's official release from the Docker web page [60]. Experimental builds give users the opportunity to try features early and help maintainers get feedback. The Docker experimental binary used here was downloaded from a git repository and provides the additional checkpoint and restore utilities, which are not present in Docker's official release. With the checkpoint utility a process can be frozen, and with the restore utility it can be resumed from the point at which it was frozen. These utilities are needed to checkpoint the migrating container on the source node and restore it on the destination node. In this work, Docker containers could not be migrated because of a problem with a supporting piece of software. The detailed steps of Docker installation, container creation and container migration are provided in Appendix B.

5.4 OpenVZ

This subsection describes how OpenVZ was installed and how a new container was created and migrated.

5.4.1 OpenVZ Installation

To install OpenVZ, 64-bit CentOS 6.7 was installed on two computers, because the OpenVZ installation web page [61] recommends an RHEL 6 platform (CentOS, Scientific Linux). Rather than creating a separate partition, the default /vz location was used. The OpenVZ repository definition was downloaded and added to each computer's repositories, and the OpenVZ GPG key was imported. The OpenVZ kernel, which is the main part of the OpenVZ software, was installed, since without it OpenVZ functionality is limited. Before rebooting into the new kernel, we checked that it was set as the default in the GRUB configuration file. In addition, some kernel parameters had to be set for OpenVZ to function properly; this was done in the /etc/sysctl.conf file. SELinux was disabled on both computers and some user-level tools were installed. Afterwards, the computers were rebooted, and OpenVZ was working properly on both nodes [61].

5.4.2 Container Creation

Creating an OpenVZ container requires an OS template. An OS template is a Linux distribution that is installed in a container and then packed into a gzipped tarball. With such a template cache, a container can be created within a minute. A precreated template cache was downloaded [62] and placed in the /vz/template/cache/ directory. A container was created with the command vzctl create CTID --ostemplate osname, where CTID is the container ID, and started with the command vzctl start CTID. To confirm that the container was created successfully, the vzlist command, which lists all containers, was used [61].
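The creation steps above, together with the RAM and swap settings applied later in the evaluation, can be scripted. The sketch below only builds and prints the vzctl/vzlist command lines unless dry_run is switched off; the container ID 101 and the template name centos-6-x86_64 are hypothetical placeholders, not necessarily the ones used in this work.

```python
import shlex
import subprocess


def container_setup_commands(ctid, ostemplate, ram_mb, swap_mb=0):
    """Command lines to create, size and start an OpenVZ container, then list containers."""
    ct = str(ctid)
    return [
        ["vzctl", "create", ct, "--ostemplate", ostemplate],
        # --save writes the new limits to the container's configuration file.
        ["vzctl", "set", ct, "--ram", f"{ram_mb}M", "--swap", f"{swap_mb}M", "--save"],
        ["vzctl", "start", ct],
        ["vzlist"],
    ]


def run_all(commands, dry_run=True):
    """Print each command line; unless dry_run, execute it and stop on the first failure."""
    for argv in commands:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_all(container_setup_commands(101, "centos-6-x86_64", ram_mb=1027))
```

Building argument lists rather than shell strings avoids quoting problems, and the dry-run default makes it possible to review the commands before running them as root on an OpenVZ node.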

5.4.3 Container Migration

OpenVZ provides the vzmigrate utility for migrating containers from one hardware node to another. The Secure Shell (SSH) network protocol is used to connect to the destination node; ssh creates a secure channel between the ssh client and server. Each time an ssh connection is made between the source host and the destination host, a password must be entered. To avoid the password prompt on every migration, a key pair was generated on the source host with the command ssh-keygen -t rsa. The public key was transferred to the destination node and saved in the .ssh/authorized_keys file. From the source host, we verified that an ssh login could be made without a password. After the ssh public key was set up successfully, the command vzmigrate [--online] <destination address> <CTID> was used for live migration of the container.

5.5 Test Bed Specifications Summary

The previous subsections described the installation of the XEN hypervisor, Docker and OpenVZ. This subsection summarizes the test bed specifications. The configuration of the hosts used for the XEN VMs and of the XEN VMs themselves is given in Table 1. On the host with 2GB of RAM, the XEN VMs were not all created at the same time, in order to stay within the physical RAM. The configuration of the hosts used for OpenVZ and of the OpenVZ containers is given in Table 2.

Different hardware platforms (computers) were used for XEN and OpenVZ, because live migration of OpenVZ containers was not successful between the hosts used for XEN. According to expert opinion, live OpenVZ migration is sometimes not possible between computers with different processor families; for this reason, different hardware was used. We argue that this does not affect the validity of the comparison. During migration, a file is transferred from the source host to the destination host. File transfer in a wireless network is affected by the WiFi radio, bandwidth, misconfigured network settings, etc. However, in this work a LAN with the same network bandwidth was used instead of the Internet. Besides, the CPU speed and RAM size of the hosts in a LAN do not largely affect the transfer of small files. Therefore, there is little risk of a large change in migration time and downtime because of the different hardware platforms. Execution time could have been affected by the CPU speed and RAM size of the host if a compute-intensive application had been used; however, no compute-intensive application was used to evaluate execution time in this work.

Table 1: Test bed specifications of XEN

Parameter          Host 1                    Host 2
Processor name     Intel(R) Core(TM) i7      Intel(R) Core(TM)2 Duo
Processor speed    1.73 GHz                  2.26 GHz
RAM size           8 GB                      2 GB
OS                 CentOS 6.7                CentOS 6.7
XEN version        XEN 4.4                   XEN 4.4
VM 1               1027 MB (Ubuntu 14.04)    1027 MB (Ubuntu 14.04)
VM 2               762 MB (Ubuntu 14.04)     762 MB (Ubuntu 14.04)
VM 3               545 MB (Ubuntu 14.04)     545 MB (Ubuntu 14.04)

Table 2: Test bed specifications of OpenVZ

Parameter          Host 1                    Host 2
Processor name     Intel(R) Core(TM)2 Duo    Intel(R) Core(TM)2 Duo
Processor speed    2.26 GHz                  2.10 GHz
RAM size           2 GB                      2 GB
OS                 CentOS 6.7                CentOS 6.7
Container 1        1027 MB                   1027 MB
Container 2        762 MB                    762 MB
Container 3        545 MB                    545 MB

6 Evaluation

In this section, the performance criteria migration time, downtime, CPU consumption during migration and execution time are evaluated for XEN and OpenVZ. As Docker containers could not be migrated, Docker was excluded from the evaluation.

6.1 Migration time

Live migration refers to migrating a virtual machine/container from one host to another in such a way that the migration process remains transparent to the user [63]. In this work, virtual machines of different RAM sizes were created in XEN and migrated from one host to the other with the command xm migrate --live <domain id> <destination IP address>. Three virtual machines were created, with RAM sizes of 1027 MB, 762 MB and 545 MB. The experiment was conducted for six hours. Within these six hours, each virtual machine was migrated 12 times and the migration time was measured using the script mig time (Figure 11). In total, 36 VM migrations were completed within six hours.

In the case of OpenVZ, three containers were created and their RAM sizes were set to 1027 MB, 762 MB and 545 MB with the command vzctl set CTID --ram <RAM size> --swap <swap size> --save. The swap size was set to zero. Each container was migrated from one host to the other 12 times and the migration time was measured using the script mig time (Figure 11). The experiment ran for four hours, during which 36 migration times were measured. The maximum, minimum and median of the recorded migration times for XEN and OpenVZ are given in Table 3. Note that the different experiment durations (six hours for XEN and four hours for OpenVZ) do not indicate poor performance of XEN or good performance of OpenVZ.

Table 3: Migration time for different RAM sizes

RAM size   Statistic   XEN (sec)   OpenVZ (sec)
1027 MB    maximum     16          23
           minimum     11          21
           median      14          22
762 MB     maximum     14          23
           minimum     9           21
           median      11          22
545 MB     maximum     12          23
           minimum     8           21
           median      10          22
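The statistics reported in Table 3 (and the averages used in Table 4) can be computed from the raw per-run measurements with Python's statistics module, as sketched below. The sample values in the example are hypothetical and are not the measurements recorded in this thesis.

```python
import statistics


def summarize(samples):
    """Maximum, minimum, median and mean of repeated measurements (seconds)."""
    return {
        "maximum": max(samples),
        "minimum": min(samples),
        "median": statistics.median(samples),
        "mean": statistics.mean(samples),
    }


if __name__ == "__main__":
    # Hypothetical migration times per VM size, NOT the thesis measurements.
    runs = {"1027 MB": [14, 13, 16, 11], "545 MB": [10, 8, 12, 10]}
    for ram, samples in runs.items():
        print(ram, summarize(samples))
```

The median is less sensitive than the mean to an occasional slow migration, which is one reason to report it alongside the extremes.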

From the evaluated data (Table 3), it can be observed that XEN VM migration time decreases as RAM size decreases, whereas OpenVZ does not show this behaviour: its migration time remains constant for all containers. This happens because in XEN the whole memory is copied to the destination host during migration. Memory pages are monitored during migration, and any page that changes is transferred to the destination again. Therefore, in XEN, the larger the RAM, the longer it takes to transfer it; in short, migration time grows with RAM size. In OpenVZ, on the other hand, container migration saves the state of the processes in the container and the global state of the container to an image file, which is transferred to the destination and restored there. The state of a process includes its private data: address space, open files/pipes/sockets, current working directory, timers, terminal settings, System V IPC structures, user identities (uid, gid, etc.), process identities (pid, pgrp, sid, etc.) and so on. It is therefore more like taking a snapshot of a program's state, known as checkpointing, and transferring the resulting image file. The larger the image file, the longer the transfer takes; in short, migration time grows with the size of the transferred image file, and this size grows with the memory usage of the processes running inside the OpenVZ container. In this work, no processes were running inside the container during migration, so only the global state of the container was saved in the image file and transferred. The image file therefore had the same size in each case, irrespective of the RAM size, since the whole RAM is not copied [64,65,42]. For this reason, migration time changed with RAM size in XEN but not in OpenVZ. Table 3 also shows that XEN has a lower migration time than OpenVZ. However, migration time in OpenVZ can be lowered using shared storage [66]; in this work, no shared storage was used for OpenVZ. In addition, in XEN migration time increases with RAM size whether or not the corresponding RAM pages are in use, whereas in OpenVZ this does not happen, so an upper bound on memory usage can be set without affecting migration time.
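The contrast drawn above between whole-memory pre-copy (XEN) and checkpoint-based transfer (OpenVZ) can be made concrete with a simplified fluid model, assuming a constant link bandwidth and a constant page-dirtying rate. The parameter names and values (bandwidth_mb_s, dirty_rate_mb_s, stop_threshold_mb, max_rounds) are hypothetical; XEN's real implementation tracks individual dirty pages and uses its own stopping heuristics, and this model is not fitted to Table 3.

```python
def precopy_migration(ram_mb, bandwidth_mb_s, dirty_rate_mb_s,
                      stop_threshold_mb=8.0, max_rounds=30):
    """Simplified iterative pre-copy model.

    Returns (total_migration_s, downtime_s, precopy_rounds). The first round
    sends the whole RAM; each later round resends the memory dirtied while the
    previous round was in flight. When little enough is left (or the round
    limit is reached), the VM is paused and the remainder is sent
    (stop-and-copy), which this model counts as downtime.
    """
    to_send = float(ram_mb)
    elapsed = 0.0
    rounds = 0
    while to_send > stop_threshold_mb and rounds < max_rounds:
        round_time = to_send / bandwidth_mb_s
        elapsed += round_time
        rounds += 1
        # Memory dirtied during this round must be sent again (capped at RAM size).
        to_send = min(float(ram_mb), dirty_rate_mb_s * round_time)
    downtime = to_send / bandwidth_mb_s
    return elapsed + downtime, downtime, rounds


def checkpoint_migration(image_mb, bandwidth_mb_s):
    """Checkpoint/restore style: only the dumped state image is transferred."""
    return image_mb / bandwidth_mb_s


if __name__ == "__main__":
    for ram in (545, 762, 1027):
        total, down, n = precopy_migration(ram, bandwidth_mb_s=100.0, dirty_rate_mb_s=10.0)
        print(f"{ram} MB: total {total:.2f} s, downtime {down:.3f} s, {n} rounds")
```

In this model the pre-copy time grows with the configured RAM size even if most pages are unused, while checkpoint_migration depends only on the image size, mirroring the qualitative behaviour discussed above.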

Figure 11: Algorithm for measuring migration time.

In another experiment, the number of virtual machines running on the source host besides the target VM was varied from 0 to 2, and the effect on migration time was observed. In the first case, no VM other than the target VM was running on the source host; the target VM was migrated and its migration time was recorded. In the second case, one VM besides the target VM was running on the source host, and the migration time was measured again. For each case, four migrations were conducted within an hour and the average of the four migration times was calculated. In the same way, the OpenVZ container migration time was measured while the number of containers running on the host was varied from 0 to 2. The results of this experiment are given in Table 4.

Table 4: Migration time for different numbers of VMs/containers (RAM size 1027 MB)

Number of VMs/containers   XEN (sec)   OpenVZ (sec)
0                          14          22
1                          15          22
2                          14          22

This experiment (Table 4) shows that the number of VMs/containers running on the host does not affect migration time. The reason is that neither the VMs nor the containers monopolize the host enough to slow down the migration process, so increasing their number did not affect migration time.

In the third experiment, the effect of CPU load on migration time was observed. First, the migration time was measured with the host in its normal state. Then the host CPU was loaded as heavily as possible with burnP6 (a CPU stress tester for Linux), and the migration time was measured by migrating the target VM. Only one core was loaded, with the command burnP6 &, rather than all the available cores. In the same way, the OpenVZ host was loaded with burnP6 and the migration time was measured. The results of this experiment are given in Table 5.

Table 5: Migration time for different host CPU loads (RAM size 1027 MB)

Host CPU state   XEN (sec)   OpenVZ (sec)
No burnP6        14          22
With burnP6      14          22

These data (Table 5) show that stressing only one core (at 100% utilization) does not affect migration time, which suggests that migration of a VM/container does not depend heavily on CPU resources. Stressing all the cores at 100% might have affected migration time; however, old computers were used in this work, and stressing all the cores could have harmed them. For this reason, only one core was stressed.

6.2 Downtime

Downtime is the period during which the migrating virtual machine is not available to the user [67]. To calculate the downtime of the migrating VM, it was pinged; if a ping receives no reply, the virtual machine is considered unavailable. The number of ping packets that receive no reply, multiplied by the ping interval, gives the downtime. A ping sequence with a 1 second interval was used. Three VMs were created with RAM sizes of 1027 MB, 762 MB and 545 MB, and each VM was migrated 10 times to measure downtime, giving 30 migrations in 4 hours in total. The downtime of migrating containers in OpenVZ was measured in the same way. The maximum, minimum and median of the recorded downtimes are given in Table 6.

Table 6: Downtime for different RAM sizes

RAM size   Statistic   XEN (sec)   OpenVZ (sec)
1027 MB    maximum     6           2
           minimum     3           2
           median      4           2
762 MB     maximum     6           2
           minimum     4           2
           median      5           2
545 MB     maximum     6           2
           minimum     2           2
           median      4           2
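The downtime rule described above (unanswered pings multiplied by the ping interval) is sketched below, together with an alternative that counts only the longest run of consecutive losses and therefore ignores isolated packet drops unrelated to the migration. Parsing the replies out of the ping output is omitted; the replies list is an assumed input. With a 1 second interval, either estimate has a resolution of one ping interval, which is coarse relative to the 2 s OpenVZ values.

```python
def downtime_from_pings(replies, interval_s=1.0):
    """Downtime estimate used in this section: unanswered pings x ping interval.

    `replies` holds one boolean per echo request, in send order
    (True = a reply was received).
    """
    lost = sum(1 for ok in replies if not ok)
    return lost * interval_s


def longest_outage(replies, interval_s=1.0):
    """Alternative estimate: the longest run of consecutive unanswered pings."""
    longest = current = 0
    for ok in replies:
        current = 0 if ok else current + 1
        longest = max(longest, current)
    return longest * interval_s


if __name__ == "__main__":
    # Hypothetical reply pattern: a 3-ping gap during migration plus one stray loss.
    replies = [True, True, False, False, False, True, False, True]
    print(downtime_from_pings(replies), longest_outage(replies))
```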

In XEN, downtime decreased slightly as RAM size decreased (Table 6), whereas no such trend was observed in OpenVZ. The reason is that OpenVZ transfers a snapshot of the process state rather than the whole memory (as XEN does), so RAM size does not affect OpenVZ downtime. Table 6 also shows that downtime was higher for XEN VMs than for OpenVZ containers. In XEN VM migration the whole memory is transferred and restored, while in OpenVZ only the image file is transferred and restored; this results in a longer downtime for XEN VMs.

6.3 CPU consumption during migration

The CPU consumption of the host was recorded before, during and after migration. The results are shown in Table 7.

Table 7: CPU consumption during migration (in percent)

Time               XEN (percent)   OpenVZ (percent)
Before migration   10-20%          10-15%
At migration       40-80%          22-30%
After migration    10-20%          10-11%

The evaluated data (Table 7) show that XEN consumes more CPU resources during migration (40-80%) than OpenVZ (22-30%). In XEN the whole memory is copied and transferred during migration, consuming more CPU resources, while in OpenVZ only a snapshot of the current processes is transferred, consuming fewer CPU resources.

6.4 Execution Time

To find the execution time of an application running in a virtual machine and in a container, two test applications were written. The first application prints the sentence "Hello world" 10,000 times. The second application calls the function gettimeofday() 100,000 times, saves the results in a structure, and then performs some operations (finding the maximum and minimum) on the saved data. A clock was started at the beginning of each application and stopped at its end. The results of the evaluation are shown in Table 8.

Table 8: Execution time

Application     XEN (microsec)   OpenVZ (microsec)
Application 1   23123            23122
Application 2   21738            21738
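The structure of the second test application and of the clock-based measurement can be sketched in Python. This is an analogue, not the original program: time.time() stands in for the C gettimeofday() call, and interpreter overhead means the absolute numbers are not comparable to Table 8.

```python
import time


def application_2(n=100_000):
    """Python analogue of the second test application: read the wall-clock time
    n times, store the readings, then find their maximum and minimum."""
    samples = [time.time() for _ in range(n)]
    return max(samples), min(samples)


def measure_us(fn, *args):
    """Run fn(*args) between two clock readings; return (result, elapsed microseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    elapsed_us = (time.perf_counter() - start) * 1e6
    return result, elapsed_us


if __name__ == "__main__":
    (latest, earliest), us = measure_us(application_2)
    print(f"spread {latest - earliest:.6f} s, execution time {us:.0f} microsec")
```

time.perf_counter() is used for the outer measurement because it is a high-resolution monotonic clock, whereas time.time() (like gettimeofday()) follows the adjustable system clock.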

The execution time of an application is practically the same in a virtual machine and in a container (Table 8). However, very simple applications were chosen in this work. Had a computation-intensive High Performance Computing (HPC) application been chosen, as in Adufu et al. [18], a difference in execution time would likely have appeared.

6.5 Evaluation Result

XEN has a lower migration time; however, if the RAM size of the XEN VMs were increased further, XEN migration would take longer than OpenVZ migration. OpenVZ does not transfer unnecessary RAM pages, while XEN inefficiently transfers the whole RAM irrespective of its usage. Therefore, OpenVZ has a better migration utility than XEN. OpenVZ also has a lower downtime than XEN. In addition, it consumes less CPU during migration, which makes it more economically attractive. Docker is excluded as it does not have a migration utility. Deploying an OpenVZ container is easier and less time consuming than deploying a XEN VM: creating an OpenVZ container takes approximately 1 second, while creating a XEN VM takes approximately 30 minutes because it involves installing an OS. Another drawback of XEN is that, when creating a VM, care must be taken that the VM RAM size does not exceed the host RAM size; OpenVZ requires no such consideration. Based on this analysis, we conclude that OpenVZ is more suitable than XEN for an industrial real-time cloud. Table 9 summarizes the comparison, indicating the better performer for each criterion.

Table 9: Summary of Evaluation

Performance criterion              Better performer
Migration time                     OpenVZ
Downtime                           OpenVZ
CPU consumption during migration   OpenVZ
Execution time                     none

7 Related work

There is a growing tendency to use containers, an alternative to traditional hypervisor-based virtualization, in MapReduce (MR) clusters for resource sharing and performance isolation. MR clusters are used to generate and process large data sets. Xavier et al. [14] conducted an experiment comparing the performance of container-based systems (Linux VServer, OpenVZ and Linux Containers (LXC)) running MR clusters. These three systems are similar in terms of security, isolation and performance; they differ mainly in resource management. The experiment was based on micro and macro benchmarks. A micro benchmark is a comparatively small piece of code for evaluating one aspect of a system's performance, while a macro benchmark evaluates the system as a whole. Benchmark code usually consists of test cases. The results of the micro benchmarks are expected to influence the macro benchmarks, which makes them useful for identifying bottlenecks. Four identical nodes were used for the experiment, each with two 2.27 GHz processors, an 8 MB L3 cache in each core, 16 GB of RAM and one 146 GB disk. The chosen micro benchmark was TestDFSIO, which indicates the I/O efficiency of the cluster. Throughput is measured in Mbps, based on the time taken by individual tasks to write and read files. The file size has a direct effect on the result: the behaviour of the system becomes linear when the file size exceeds 2GB. The authors assumed that the result was influenced by network throughput. The selected macro benchmarks were Wordcount, Terasort and IBS. Wordcount counts the number of occurrences of each word in a dataset and is well known for comparing performance among Hadoop clusters. The authors created a 30GB input file by repeatedly concatenating the same text file. The results showed that all the container-based systems reach near-native performance. Terasort measures how fast a Hadoop cluster can sort data and consists of two steps: (i) input data generation and (ii) sorting the input data. The result was the same as for Wordcount. With the IBS benchmark, the authors tested performance isolation in a modified Hadoop cluster. They measured the execution time of an application in one container, then reran the same application alongside a stress test and measured the execution time again, observing the performance degradation of the chosen application. There was no performance degradation during the CPU stress test, but there was a little degradation during the memory and I/O stress tests. The authors concluded that all the container-based systems perform similarly, and that Linux Containers (LXC) outperforms the others in terms of resource restriction among containers.
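The map and reduce structure of the Wordcount benchmark described above can be illustrated in a single process. This is only an illustration of the counting logic with whitespace tokenization; it is not Hadoop's implementation and does not model distribution, shuffling or I/O.

```python
from collections import Counter
from functools import reduce


def map_phase(chunk):
    """Map: count the words in one input split (whitespace tokenization)."""
    return Counter(chunk.split())


def reduce_phase(partial_counts):
    """Reduce: merge the per-split counts into a global count per word."""
    return reduce(lambda a, b: a + b, partial_counts, Counter())


def wordcount(chunks):
    """Count word occurrences across all input splits."""
    return reduce_phase(map(map_phase, chunks))


if __name__ == "__main__":
    splits = ["the cloud the container", "the hypervisor"]
    print(wordcount(splits).most_common(2))
```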

Felter et al. [15] conducted an experiment in which traditional virtual machines were compared with Linux containers by stressing CPU, memory, networking and storage resources. KVM was used as the hypervisor and Docker as the container manager. To avoid redundancy, the authors did not create containers inside a VM or VMs inside a container. The benchmarks saturated the target system resources in order to measure the overhead. The benchmark software used was Linpack, STREAM, RandomAccess, nuttcp and netperf request-response.

Linpack measures a system's computing power by solving a system of linear equations. Most of the computation is double-precision floating-point multiplication of a scalar with a vector, with the result added to another vector. Running Linpack therefore spends a large amount of time on mathematical operations, which require regular memory accesses and stress the floating-point capability of the core. Docker outperformed KVM in this case because KVM abstracts (hides) hardware details of the system from the guest. The STREAM benchmark performs simple operations on vectors to measure memory bandwidth. Its performance depends mainly on the bandwidth to memory and, to a lesser extent, on TLB misses. The benchmark was executed in two configurations: (i) the complete computation was contained in one NUMA (non-uniform memory access) node, and (ii) the computation was spread over both nodes. Linux, Docker and KVM performed the same in both configurations. The RandomAccess benchmark stresses random memory performance: a section of memory is initialized as the working set of the benchmark, and random 8-byte words in that section are read, modified and written back. RandomAccess performance was evaluated on a single socket (8 cores) and on both sockets (all 16 cores). On a single socket, Linux and Docker performed better than KVM, while on both sockets all three performed identically. The nuttcp tool was used to measure network bandwidth between the target system and an identical second system, connected by a direct 10 Gbps Ethernet link. The nuttcp client ran on the SUT (system under test) and the nuttcp server on the other system, so in the client-to-server case the SUT acts as the transmitter, while in the server-to-client case it acts as the receiver. In all three systems (native, Docker, KVM), throughput reached 9.3 Gbps in both directions.

The authors used the fio tool to measure the overhead introduced by virtualizing block storage; in cloud environments, block storage is used for consistency and better performance. For testing, they added a 20 TB IBM FlashSystem 840 flash SSD to the server under test. For sequential reads and writes, all systems performed identically. However, for random reads, random writes and a mixed workload (70% read, 30% write), Docker outperformed KVM. With Redis (an in-memory data structure store), both the native and the Docker system performed better than KVM. The authors therefore concluded that containers perform equally well or better than VMs in all cases. They also concluded that both KVM and Docker introduce very little overhead for CPU and memory performance, but that both virtualization technologies should be used with extra care for I/O-intensive workloads.
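To make the three CPU and memory kernels used by Felter et al. [15] more concrete, the following minimal Python sketch shows the core operation of each: the Linpack-style scalar-times-vector-plus-vector update (often called daxpy), the STREAM "triad" vector operation, and a RandomAccess-style read-modify-write of random 64-bit words. It is illustrative only and assumes details not stated in [15]: the real benchmarks are optimized C/Fortran programs with timing loops, and the XOR update in the RandomAccess sketch follows the HPCC benchmark convention rather than anything described above. Pure Python lists would measure interpreter overhead rather than memory bandwidth, so this is not a substitute for running the benchmarks.

```python
import random


def daxpy(alpha, x, y):
    """Linpack-style inner update: y <- alpha * x + y (scalar times vector, added to a vector)."""
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def stream_triad(scalar, b, c):
    """STREAM 'triad' kernel: a[i] = b[i] + scalar * c[i]; in real runs it is bound by memory bandwidth."""
    return [bi + scalar * ci for bi, ci in zip(b, c)]


def random_access(table, updates, seed=0):
    """RandomAccess-style kernel: pick a random word in the working set, modify it, write it back.

    Assumption: the modification is an XOR with a random 64-bit value, as in HPCC RandomAccess.
    The table is updated in place and also returned.
    """
    rng = random.Random(seed)
    n = len(table)
    for _ in range(updates):
        value = rng.getrandbits(64)    # random 64-bit (8-byte) value
        idx = value % n                # random location in the working set
        table[idx] = table[idx] ^ value  # read, modify, write back
    return table
```

Because XOR is its own inverse, replaying the same update sequence twice restores the original table. The real benchmark uses this property to verify results.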

Wubin et al. [16] compared hypervisor-based and container-based platforms from a high availability (HA) perspective (live migration, failure detection, and checkpoint/restore). HA means that a system is continuously functional (available almost all the time). The hypervisor-based platforms considered are VMware, Citrix XenServer and Marathon everRun MX; the container-based platforms considered are Docker/LXC and OpenVZ. In VMware, HA is provided with the help of the Distributed Resource Scheduler (DRS), VMware FT, VMware HA and vMotion. VMware HA follows a failover clustering strategy: when an ESXi host fails, it stops sending heartbeat signals to the vCenter Server, and if the vCenter Server receives no heartbeat signal, it resets the VM. The same happens in the case of an application failure. During maintenance, VMs can be migrated with vMotion to maintain HA. Fault tolerance (FT) in VMware is achieved through the vLockStep protocol, which keeps the primary and secondary copies of an FT-protected VM synchronized. XenServer also provides HA services, although only host-level failures can be handled. Third-party software can be used with XenServer to address VM and application failures. Furthermore, FT is not supported in XenServer, but Remus can be integrated with it to enable FT support. In container-based systems, a checkpoint/restore utility is necessary to provide HA. Docker does not provide a checkpoint/restore utility, while in OpenVZ this utility is ready to use. OpenVZ also supports HA features such as live migration. However, features such as automatic state synchronization, failure detection and failover management are supported in neither OpenVZ nor Docker/LXC. The authors concluded that high availability features in container-based platforms are not adequate, whereas they are ready to use in hypervisor-based platforms.
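The heartbeat-based failure detection described for VMware HA can be illustrated with a small sketch: each host periodically reports a heartbeat, and a host whose last heartbeat is older than a timeout is declared failed, after which the VMs placed on it become candidates for reset or restart. This is a hypothetical Python model, not the VMware or vCenter API; the class `HeartbeatMonitor`, the function `restart_actions`, the timeout value and the host and VM names are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HeartbeatMonitor:
    """Hypothetical heartbeat-based failure detector (not the VMware API)."""
    timeout: float                                  # seconds without a heartbeat before a host counts as failed
    last_seen: dict = field(default_factory=dict)   # host name -> time of last heartbeat

    def heartbeat(self, host: str, now: float) -> None:
        # Record the time of the most recent heartbeat received from this host.
        self.last_seen[host] = now

    def failed_hosts(self, now: float) -> list:
        # A host whose last heartbeat is older than the timeout is considered failed.
        return sorted(h for h, t in self.last_seen.items() if now - t > self.timeout)


def restart_actions(monitor: HeartbeatMonitor, placement: dict, now: float) -> list:
    """Return (vm, host) pairs for VMs placed on failed hosts, i.e. the VMs an HA manager would act on."""
    failed = set(monitor.failed_hosts(now))
    return sorted((vm, host) for vm, host in placement.items() if host in failed)
```

For example, with a 3-second timeout, a host that last reported at time 0 is declared failed at time 5, while a host that reported at time 4 is still considered alive. Choosing the timeout trades detection speed against false alarms caused by delayed heartbeats.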

Tafa et al. [17] compared the performance of five virtualization platforms, XEN-PV, XEN-HVM, OpenVZ, KVM-FV and KVM-PV, in terms of CPU consumption, memory utilization, total migration time and downtime. To implement migration, the authors created a script called heartcare, which sends a message to the heartbeat tool that initiates the migration. In XEN-PV (XEN paravirtualized), CPU consumption was measured with the xentop command; CPU consumption before migrating a virtual machine was lower than during migration. To measure memory usage in XEN, the authors used the MemAccess tool. Initially, memory utilization was 10.6%, which increased to 10.7% after migration. To measure migration time, the authors performed the migration within the same physical host. For this measurement, a counter is started when the migration initiation message is sent to the heartbeat tool, and this counter indicates the transfer time. The migration time was 2.66 seconds and the measured downtime was 4 ms. The downtime was very low because the migration was conducted within the same physical host, the CPU was very fast and the application was small.

The authors also changed the MTU (Maximum Transmission Unit) and evaluated all four performance criteria (transfer time, downtime, memory utilization and CPU consumption). Changing the MTU changes the packet size. The MTUs used were 1000 B and 32 B. The results showed that CPU consumption, memory utilization, migration time and downtime increase as packet size decreases. The same experiment was conducted on the XEN fully virtualized machine (XEN-HVM) with MTU sizes of 1500 B, 1000 B and 32 B. A comparison between XEN-HVM and the XEN paravirtualized machine (XEN-PV) showed that XEN-HVM consumes more CPU and memory than XEN-PV. In OpenVZ, the authors measured CPU wasted time in /proc/vz/vstat to obtain CPU consumption and used the stream tool to measure memory utilization. For OpenVZ, the MTU sizes used were 1500 B, 1000 B and 32 B. CPU consumption and memory utilization were slightly higher in OpenVZ than in XEN, while migration time and downtime were shorter than in XEN. For the KVM hypervisor, CPU consumption was evaluated using the SAR utility, and memory utilization was measured using a modified open-source stress tool. The MTU sizes used were the same as for OpenVZ. CPU consumption was higher in KVM-HVM than in XEN-HVM, and KVM-HVM performed worse than the other hypervisors. KVM-PV (paravirtualized) performed better than KVM-HVM and showed performance similar to XEN-HVM. Finally, the authors concluded that no single hypervisor is best in all of the aforementioned performance criteria.
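The two timing metrics used by Tafa et al. [17], and the effect of the MTU on the number of packets, can be expressed with a short sketch. It assumes three timestamps per migration: when the initiation message is sent (where the counter starts), when the VM is suspended on the source, and when it resumes on the destination. Total migration time is then the interval from initiation to resumption, and downtime is the interval during which the VM is not running. The event names and the `MigrationTrace` and `packets_needed` helpers are hypothetical, and the packet count deliberately ignores protocol headers, so it only illustrates why a smaller MTU means more packets, and hence more per-packet processing, for the same amount of transferred state.

```python
import math
from dataclasses import dataclass


@dataclass
class MigrationTrace:
    """Timestamps (in seconds) of one live migration; the event names are assumptions."""
    initiated: float   # migration initiation message sent (counter starts)
    suspended: float   # VM paused on the source host
    resumed: float     # VM running again on the destination host

    def total_migration_time(self) -> float:
        # From the initiation message until the VM runs at the destination.
        return self.resumed - self.initiated

    def downtime(self) -> float:
        # The service is unavailable only between suspension and resumption.
        return self.resumed - self.suspended


def packets_needed(payload_bytes: int, mtu_bytes: int) -> int:
    """Packets needed if each packet carries mtu_bytes of payload (headers ignored for simplicity)."""
    return math.ceil(payload_bytes / mtu_bytes)
```

Under this simplification, transferring 1 MiB (1,048,576 bytes) needs 700 packets with a 1500 B MTU but 32,768 packets with a 32 B MTU, roughly 47 times as many. This is consistent with the direction of the trend reported above, though real overhead also depends on headers and the migration protocol.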

Sometimes virtualization with a good degree of both isolation and efficiency is required. Soltesz et al. [68] presented an alternative to hypervisor-based systems for scenarios such as HPC clusters, the Grid, hosting centers and PlanetLab. They synthesized prior work on resource containers and security containers applied to general-purpose operating systems into a new approach. The container-based system considered in the paper for describing the design and implementation is Linux-VServer. To contrast this container-based system, a hypervisor-based system (XEN) is presented. For efficient computation in High Performance Computing (HPC) applications, maximum usage of limited resources is required. In these cases, a careful balance must be struck between effective resource allocation and minimization of execution time. The comparison showed that XEN supports more