School of Innovation, Design and Engineering

Västerås, Sweden

Thesis for the Degree of Bachelor of Computer Science 15.0 credits

MEASURING SITUATION AWARENESS

IN MIXED REALITY SIMULATIONS

Viking Forsman

img13001l@student.mdh.se

Examiner: Gabriele Capannini

Mälardalen University, Västerås, Sweden

Supervisor: Rikard Lindell

Mälardalen University, Västerås, Sweden

Company supervisor: Markus Wallmyr,

CrossControl, Uppsala, Sweden

Company supervisor: Taufik Akbar Sitompul,

CrossControl, Västerås, Sweden

Abstract

Off-highway vehicles, such as excavators and forklifts, are heavy machines that are capable of harming humans or damaging property. It is therefore necessary to develop interfaces for these kinds of vehicles that help the operator maintain a high level of situational awareness. How an interface affects the operator's situational awareness is consequently an important metric when evaluating that interface. Mixed reality simulators can be used both to develop and to evaluate such interfaces in an immersive and safe environment.

In this thesis we investigated how to measure situational awareness in a mixed reality off-highway vehicle simulation scenario without having to pause the scenario, by cross-referencing logs from the virtual environment with logs of the user's gaze position. To investigate this research question, we performed a literature study and a user test. Each participant in the user test filled out a SART post-simulation questionnaire, and we then compared the questionnaire results with those of our measurement system.

Contents

1 Introduction
2 Background
2.1 Head-up display and head-down display
2.2 Simulators
2.2.1 Virtual Reality simulators
2.2.2 Mixed Reality simulators
2.3 Situation Awareness
2.4 Techniques for Measuring Situation Awareness
2.4.1 Query-based techniques
2.4.2 Self-rating technique
2.4.3 Performance-based technique
3 Related Work
3.1 Measuring situation awareness of drivers in real-time
3.2 Usage of eye-tracking technology to measure situation awareness
3.3 Comparison between assessing situational awareness based on eye movements and SAGAT
4 Problem Formulation
4.1 Limitations
5 Method
5.1 Literature study
5.2 Model and formative evaluations
5.3 Implementation
5.4 Evaluation
5.5 Data analysis
6 Ethical Considerations
7 Implementation of the SA measurement system
7.1 The creation of the model
7.2 Logs from the eye-tracking equipment
7.3 Logs from the virtual environment
7.4 Cross-referencing of logs
7.5 Test scenario
8 Results
8.1 SART result
8.2 SA-measurement system
8.3 Comparison between SART and our measurement system
9 Discussion
9.1 Discussion on our measurement system
9.2 Observations on the test scenario and simulator
10 Conclusions
10.1 Future work
References
Appendix A Participation survey

List of Figures

1 Example of head-up displays and head-down displays . . . 2

2 Depiction of the reality–virtuality continuum . . . 3

3 Simplified version of Endsley’s model of SA in dynamic making. . . 4

4 Depiction of the reality–virtuality continuum . . . 6

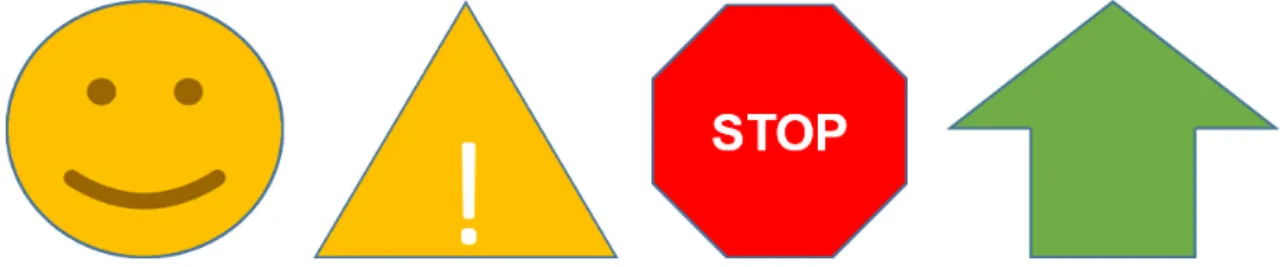

5 Depiction of the icons that can be displayed on the excavator’s interface . . . 11

6 Early concept of the placement of the surface markers . . . 12

7 The image depicts our final concept of where to place the surface markers . . . 12

8 Depiction of the operator’s field of view . . . 13

9 Depiction of the excavator from a bird’s eye view . . . 13

10 Flowchart of how we determined if warnings were noticed before collisions. . . 15

12 Coordinate system shows the participants results from the SART questionnaire and our measurement system . . . 19

13 Depiction of the construction workers patrol path . . . 20

List of Tables

1 The result from the SART post-simulation questionnaire. . . 172 The statistics from the SART post-simulation questionnaire. . . 17

3 The result from the logs and the SA-measurement system. . . 18

4 The statistic from the logs and the SA-measurement system. . . 18

List of Equations

1 The SART equation which was invented by Taylor R. M. . . 51

Introduction

Off-highway vehicle is a broad term for wheeled vehicles, such as excavators, cranes and forklifts, that are capable of cross-country travel over natural terrain. These vehicles are often large and heavy and, in the event of an accident, can cause significant damage to both property and humans [1]. It is therefore important to minimize the likelihood of such accidents. Two possible ways of achieving this are to increase operator training and to improve the operator's decision-making by providing additional information and warnings during operation. Both alternatives can be pursued using a simulator, where real scenarios can be recreated safely in a virtual environment. Researchers can also use simulators to develop and evaluate interfaces that improve operators' decision-making. An important metric when evaluating such interfaces is how they affect the operator's situational awareness, since maintaining situational awareness is critical for the operator to make safe decisions both in the present and in the near future.

The goal of this thesis is to investigate a way to measure situational awareness in a mixed reality simulator without pausing the simulation. The context for this goal is that we want to investigate, in future work, whether there are differences in situational awareness between using head-up displays and traditional head-down displays in an off-highway vehicle scenario.

2 Background

In this section, we introduce some background information regarding the concepts that will be discussed in the thesis. These concepts are briefly explained to help the reader to better understand the thesis and its motivation.

2.1 Head-up display and head-down display

A head-up display is a display type that presents information on a transparent screen placed so that the operator does not need to look away from their default viewpoint [2]. See Figure 1 for an example of a head-up display and a head-down display. Head-up displays were originally developed for military aircraft but are now also used in other types of vehicles. The main advantage of this type of display is that it decreases the risk of overlooking changes in the environment while reading the instruments. There are, however, cases where traditional head-down displays may be preferable. If too much information is presented on the head-up display, it can obscure the operator's line of sight or cause unnecessary distraction. In such cases, it is better to use a traditional head-down display instead.

Figure 1: The images depict the field of view of two drivers that are supported by different display alternatives. The driver on the right is using a head-down display while the driver on the left is using a head-up display.

2.2 Simulators

Simulators can replicate real scenarios in a virtual environment, which can be used to train operators or to develop and test user interfaces (UI) for off-highway vehicles. This not only avoids the risks involved in performing the scenarios in reality, but might also improve the scenarios' reproducibility, controllability and standardization [3]. There are many different types of simulators, ranging in complexity from low-cost simulators using ordinary screens to highly realistic simulators with motion feedback and 360° visualization. Some simulators use virtual reality or mixed reality to immerse the user in the experience.

2.2.1 Virtual Reality simulators

Virtual reality head-mounted displays are a popular choice for creating immersive simulations, where the user's head movements are tracked and transferred into the virtual environment so that they can naturally look around inside the virtual world. One of the major drawbacks of virtual reality is that the user's view of the real environment is blocked, so they are unable to interact with physical artifacts in an effective manner [4]. The user will not necessarily retain their full performance even if the physical artifact is recreated within the virtual environment. For example, a recent study [5] showed that novice users retained only about 60% of their typing speed when using virtual keyboards.

2.2.2 Mixed Reality simulators

The reality–virtuality continuum [6] is a continuous scale that can be used to describe different combinations of real and virtual environments, see Figure 2. The left extreme of this scale is the unaltered physical environment. The opposite extreme is the virtual environment, where the user is completely immersed in a virtual reality and is oblivious to the physical environment. Mixed reality lies between these two extremes: the virtual environment is merged with the real environment, so that the user can observe and interact with co-existing physical and digital objects in real time. The advantage of performing simulations in a mixed reality environment is that the user can still see and use physical artifacts, such as secondary displays or control sticks, while interacting with the virtual environment.

Figure 2: Interpretation of the reality–virtuality continuum, which was proposed by Paul Milgram [6]. Augmented reality is when digital objects are introduced into the physical environment, while augmented virtuality is when physical objects are introduced into the virtual environment.

A mixed reality environment can be created using a Cave Automatic Virtual Environment (CAVE) setup, where projectors are directed at the walls of a cube-shaped room to display the virtual environment. This method also requires that the user's head is tracked so that the projectors can display the correct perspective. An advantage of the CAVE setup is that the user's field of view is not restricted as it would be by a head-mounted display [7], which means that users do not have to rely as much on head movements to survey their surroundings. The field of view of a human is roughly 180°, while commercially available head-mounted displays offer a field of view of around 100° [8].

2.3 Situation Awareness

Endsley defines Situation Awareness (SA) as "the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future" [9]. According to Endsley [10], SA can therefore be described by a model that consists of three separate levels:

• SA level 1: Perception

The first level of SA is perceiving the relevant elements in the current situation, which is not necessarily only done visually but can also involve other senses. These elements could be things such as reading information presented on the display, seeing a pedestrian cross the road or hearing a seat belt alarm.

• SA level 2: Comprehension

The second level of SA is to comprehend the relevance of the perceived information. This requires an understanding of how the elements in the environment will affect the subject's goals. For example, a driver should not only see a traffic sign, but also comprehend its meaning.

• SA level 3: Projection

The third and final level of SA is projecting the near-future status based on the comprehension of the current situation. For example, an operator who notices that a pedestrian is about to cross the road in front of the vehicle should slow down or stop in order to avoid a collision.

Maintaining good SA is important for the vehicle operator to be able to avoid dangerous situations that could cause harm to humans or property [11]. SA is therefore an important metric when evaluating the usefulness of interfaces that are meant to improve the operator's decision-making skills. See Figure 3 for a depiction of Endsley's model of SA in dynamic decision-making.

Figure 3: Simplified version of Endsley’s model of SA in dynamic decision-making [10]. The model shows how SA is used to make a decision, which is then put into action, which results in a change of environmental state and a need to update the SA to reflect the current situation.

2.4 Techniques for Measuring Situation Awareness

There are many methods for measuring SA, each with its own advantages and disadvantages [12]. Endsley stated in her 2015 paper [13] that “much of the disagreement on SA models that has been presented ultimately has boiled down to a disagreement on the best way to measure SA”. In this section we explain three popular techniques, each based on a different measurement construct.

2.4.1 Query-based techniques

One of these techniques is the Situation Awareness Global Assessment Technique (SAGAT) [14], which is a query-based technique. This method requires the test to be paused at randomly selected times, during which the test environment is blanked out. The subject is then queried about their perception of the current situation in the test. The subject's responses are compared with a record of the situation at the moment the test was halted in order to evaluate their SA. The pause times are selected randomly to prevent participants from mentally preparing for the queries, which could skew the result. The main advantage of SAGAT is that it avoids the problems that can occur when SA is measured after the test, such as subjects forgetting details of the situation. However, the pauses can have a negative effect on the result, since they break the natural flow of the test scenario.
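The random timing of SAGAT pauses can be illustrated with a small scheduler. This is a minimal sketch, not part of the SAGAT specification: the minimum gap between pauses and the pause-free warm-up period are hypothetical parameters chosen for illustration, and the times are drawn uniformly and re-drawn until the spacing constraint holds (rejection sampling).

```python
import random


def schedule_freezes(duration_s, n_freezes, min_gap_s, warmup_s=0.0,
                     seed=None, max_tries=10_000):
    """Draw n_freezes random pause times (in seconds) within [warmup_s, duration_s].

    Candidate schedules are drawn uniformly and rejected until consecutive
    pauses are at least min_gap_s apart. min_gap_s and warmup_s are
    illustrative parameters, not values prescribed by SAGAT.
    """
    if n_freezes <= 0:
        return []
    window = duration_s - warmup_s
    # n pauses need (n - 1) gaps inside the window, otherwise no schedule exists.
    if window <= 0 or (n_freezes > 1 and (n_freezes - 1) * min_gap_s >= window):
        raise ValueError("time window too short for the requested pauses")
    rng = random.Random(seed)
    for _ in range(max_tries):
        times = sorted(rng.uniform(warmup_s, duration_s) for _ in range(n_freezes))
        if all(b - a >= min_gap_s for a, b in zip(times, times[1:])):
            return times
    raise RuntimeError("no valid schedule found; relax min_gap_s or n_freezes")
```

For example, `schedule_freezes(600, 3, 60, warmup_s=120)` would place three pauses in a ten-minute scenario, none in the first two minutes and at least one minute apart; these numbers are arbitrary.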

2.4.2 Self-rating technique

An alternative method for measuring SA is the Situation Awareness Rating Technique (SART) [15]. In this method, the subjects rate their own perception in terms of ten dimensions after the simulation is completed. The dimensions include the complexity of the situation, division of attention, and readiness for action. These dimensions can be divided into three domains, which are combined in an equation to calculate the SA, see Equation (1). The questionnaire uses a 7-point scale, where 1 is the lowest score and 7 is the highest. A problem with post-simulation questionnaires is that the subjects may not recall the details of the simulation scenario. It can also be difficult to distinguish the subjects' perceived SA from their actual SA. Self-rating techniques such as SART might be unobtrusive, but the result may not reflect the subjects' actual SA.

$$\text{Situation awareness} = \text{Understanding} - (\text{Demand} - \text{Supply}) \tag{1}$$

Equation 1: The SART equation, devised by R. M. Taylor [16].
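As a worked illustration of Equation (1), the sketch below computes a SART score from ratings that have already been grouped into the Demand, Supply and Understanding domains. Summing the ratings within each domain is an assumption of this sketch; the grouping itself must come from the questionnaire being used.

```python
def sart_score(demand, supply, understanding):
    """Compute SART situation awareness from per-domain lists of 1-7 ratings.

    Each domain score is the sum of its ratings (an assumption of this sketch);
    the result follows Equation (1): SA = Understanding - (Demand - Supply).
    """
    for name, ratings in (("demand", demand), ("supply", supply),
                          ("understanding", understanding)):
        if not ratings:
            raise ValueError(f"{name} needs at least one rating")
        if any(not 1 <= r <= 7 for r in ratings):
            raise ValueError(f"{name} ratings must be between 1 and 7")
    d, s, u = sum(demand), sum(supply), sum(understanding)
    return u - (d - s)
```

With three Demand items, four Supply items and three Understanding items, as in the commonly cited ten-dimensional SART, rating every item 4 gives 12 − (12 − 16) = 16, and the possible scores range from −14 to 46.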

2.4.3 Performance-based technique

There are also performance-based measurements of SA [12], where the subjects’ performance during a task is used as an indirect measure of SA. In a simulated driving scenario, metrics such as hazard detection or crash avoidance can be used to measure the SA of the vehicle operator. The advantages of this method are that it is non-intrusive and the collected data is objective. However, there are some problems when using performance-based measurements. Performance is the end result of a long pipeline of cognitive processes, which makes it hard to pinpoint the causes for low performance. Performance can also be influenced by factors that are not directly related to SA, such as poor decision making or lack of information.

3 Related Work

In this section we review existing knowledge from previous works on how to measure SA in real time. We chose to look at works published in or after 2010, because SA is an extensively researched field and advances in technology may introduce new and effective ways of measuring SA. The first two works describe a prototype tool that can be used to measure the SA of drivers in both simulated and on-road scenarios. The third and fourth works explore possible ways of measuring SA using eye-tracking equipment. The fifth and final work compares the query-based technique SAGAT with a method that uses eye movements to assess SA. This work served as an inspiration for how we could evaluate our measurement system.

3.1 Measuring situation awareness of drivers in real-time

Martelaro et al. have developed a tool called Daze, which can be used to measure the SA of drivers in simulated or on-road driving scenarios [17]. The tool presents closed-ended questions on a head-down display to measure the SA of the driver in real time. The questions relate to on-road events involving things like traffic accidents, police officers, construction sites, school zones, hazards, and heavy traffic. The tool can also prompt the driver to mark the location of the events on a map. Each question is presented a fixed distance after the location of the event, to prevent drivers from viewing the event through the rear or side windows. The correctness of the answer and the driver's response latency are used to determine the SA. The authors report that Daze has been functionally tested in both simulated and real environments, but also state that it requires further testing against validated SA measurement methods.

Figure 4: The left side of the image shows Daze during an on-road driving scenario [18] and the right side shows a closeup of the display interface [17].

Daze was recently used to investigate possible ways of measuring SA in the context of a "driver" of an autonomous vehicle [18]. Autonomous vehicles are not always able to identify hazardous situations. In such cases the driver has to retake control of the vehicle. However, this requires the driver to maintain enough SA in their supervisory role to notice the danger and intervene accordingly. It is therefore important to be able to measure SA in different situations when evaluating interfaces for autonomous vehicles. Both on-road and simulated driving scenarios were used in this study. The authors describe that participants were more likely to focus on the tool interface during the simulation and more likely to focus on the environment during the on-road scenarios. They speculate that this might be caused by the vehicle's movements and concerns about imminent dangers. To decrease the number of missed questions, they introduced an audible cue when questions appeared on the interface. Although they are satisfied with the tool's performance in the simulations, they note that the tool's ability to trigger event questions during on-road scenarios is insufficient to fully reflect what happens in the physical environment. Currently, event questions are pre-programmed to a specific GPS location or can be triggered ad hoc from a laptop in the back seat.
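Daze, described in this section, determines SA from two quantities per question: whether the answer was correct and how long the driver took to answer. The sketch below summarises these per driver as overall accuracy and mean latency of correct answers, counting missed questions as incorrect. The record layout and this particular aggregation are hypothetical illustrations, not taken from the Daze implementation.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Answer:
    """One in-drive question (hypothetical record layout)."""
    correct: bool
    latency_s: float      # time from question onset to the driver's answer
    missed: bool = False  # True if the question timed out unanswered


def summarise(answers):
    """Return accuracy over all questions and mean latency of correct answers."""
    if not answers:
        raise ValueError("no answers to summarise")
    correct = [a for a in answers if a.correct and not a.missed]
    accuracy = len(correct) / len(answers)
    latency = mean(a.latency_s for a in correct) if correct else None
    return {"accuracy": accuracy, "mean_correct_latency_s": latency}
```

Keeping accuracy and latency as separate numbers avoids inventing a weighting between them; how to combine them into one SA score is a separate design decision.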

3.2 Usage of eye-tracking technology to measure situation awareness

A preliminary study [19] by Tien et al. showed that eye-tracking technology has potential as a tool for measuring the SA of surgeons during operations. Eight participants took part in the study: four novice surgeons and four expert surgeons. Their experimental setup consisted of a training dummy, a head-mounted eye-tracker to record the users' eye movements, a display showing the dummy's vital signs, and another display showing the simulated task. Each participant performed two simulated surgeries on the dummy while wearing the eye-tracker. The experienced surgeons glanced at the display showing the dummy's vital signs much more often than the novice surgeons, who focused primarily on the surgical display, even when the dummy's heart rate audibly changed. The researchers conclude that the expert surgeons had higher SA during the operation than the novices, based on how many times they glanced at the secondary display and the information gained from it. For future work, they suggest a larger pool of participants for statistical validity and that participants also complete a self-evaluation after the simulation.

Kang et al. have performed a study investigating how eye-tracking can be used to evaluate SA and process safety in drilling operations [20]. Lack of SA during drilling operations, due to factors such as monotony or fatigue, contributes to hazardous and non-productive situations. It is therefore important to be able to measure the operator's SA in real time in order to develop feedback and alert systems. In this study, participants performed several drilling-related tasks in a virtual reality simulator. Each participant wore eye-tracking glasses during the simulation that recorded their eye fixations, fixation durations, and pupil dilation. Previous works have shown that pupil size is directly related to brain activity and can be used as a rough measure of an individual's cognitive load. The SA of the participants was assessed based on their performance, eye fixations, and fixation duration inside areas of interest. The recorded pupil dilations of two participants, who were deemed to have different levels of SA during the simulation, were selected for further study. This analysis showed differences in pupil dilation that correlated with the participants' performance during the simulation. The researchers speculate that feedback or alarm systems could use frequently closed eyelids or contracted pupils as an indication that the operator lacks SA. Their reasoning is that a fatigued operator is more likely to close their eyes to rest, and that more contracted pupils could be a sign that the operator is not comprehending the displayed information.
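The gaze measures used in these studies, how often a participant glances at an area of interest (AOI) and how long they stay inside it, can be derived from timestamped gaze samples. The sketch below assumes each sample is a `(timestamp_s, inside_aoi)` pair sorted by time and counts a glance whenever the gaze enters the AOI; this sample format is an assumption, not the format used by Tien et al. or Kang et al.

```python
def glance_stats(samples):
    """Count AOI entries and total dwell time from (timestamp_s, inside) samples.

    A glance starts when a sample is inside the AOI and the previous one was not.
    Dwell time adds the interval from each inside sample to the next sample,
    so the final sample contributes no duration.
    """
    glances = 0
    dwell = 0.0
    prev_inside = False
    for i, (t, inside) in enumerate(samples):
        if inside and not prev_inside:
            glances += 1
        if inside and i + 1 < len(samples):
            dwell += samples[i + 1][0] - t
        prev_inside = inside
    return glances, dwell
```

For samples `[(0.0, False), (0.1, True), (0.2, True), (0.3, False), (0.4, True), (0.5, False)]` this yields two glances and roughly 0.3 s of dwell time.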

3.3 Comparison between assessing situational awareness based on eye movements and SAGAT

Winter et al. have performed a comparative study of the query-based technique SAGAT and a method that uses eye movements in relation to the task environment to assess SA [21]. Their goal was to explore whether eye movements could be a preferable alternative for measuring SA, without the inherent drawbacks of query-based techniques. They began by examining SAGAT and outlined six limitations of the method. Two prominent examples are that the validity of SAGAT relies on the operator being able to remember details of the situation, and that the task has to be interrupted during the query probes. They then conducted an experiment with 86 participants, in which each participant supervised a display populated with six dials for 10 minutes. Participants wore eye-tracking equipment during the test so that their gaze position could be recorded. If a dial pointer crossed a certain threshold value, the participant was tasked with pressing a button. Query probes were prompted every 90 seconds, during which the display with the dials was blanked out. During these probes, the participant had to fill out a form regarding the status of each dial. Once the test was completed, the researchers compared the percentage of dials glanced at during threshold crossings with task performance, and also compared the SAGAT score with task performance. This showed a stronger correlation between task performance and the eye-movement measure than between task performance and the SAGAT score. The researchers also discussed the limitations of their experiment. For example, it could be argued that their method is only capable of measuring the first level of SA, and that their participants were not representative of the general population.
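The eye-movement measure in Winter et al.'s study, the percentage of threshold crossings during which the participant looked at the crossing dial, can be sketched as an interval-overlap check. Here each crossing and each fixation is a hypothetical `(dial_id, start_s, end_s)` tuple; these record formats are assumptions for illustration, not the authors' data format.

```python
def overlaps(a_start, a_end, b_start, b_end):
    """True if the closed intervals [a_start, a_end] and [b_start, b_end] intersect."""
    return a_start <= b_end and b_start <= a_end


def percent_crossings_glanced(crossings, fixations):
    """Percentage of threshold crossings during which the crossing dial was fixated.

    Returns 0.0 when there are no crossings (a convention of this sketch).
    """
    if not crossings:
        return 0.0
    seen = 0
    for dial, c_start, c_end in crossings:
        if any(f_dial == dial and overlaps(c_start, c_end, f_start, f_end)
               for f_dial, f_start, f_end in fixations):
            seen += 1
    return 100.0 * seen / len(crossings)
```

With crossings on dials A, B and C, and fixations that overlap only the A and C crossing windows, the result is about 66.7%.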

4 Problem Formulation

The research question in the thesis is: “Is there a way to, in real-time, measure situation awareness in a mixed reality off-highway vehicle simulation scenario?”

The overarching motivation for this thesis is that we want to investigate whether there is a difference in SA between using head-up displays and traditional head-down displays in a mixed reality off-highway vehicle simulation scenario. For this, we need a way to reliably measure SA in real time within this context.

It can be difficult to distinguish users' perceived SA from their actual SA. Self-rating techniques might be unobtrusive, but their results may not reflect the subjects' actual SA. There is therefore a need for a way to measure SA that produces more reliable results. Some techniques halt the simulation when measuring SA; during these pauses, the subject can explain their comprehension of the simulated situation. However, this could have a negative effect on the subject's immersion and might affect the outcome of the scenario. It would therefore be preferable to measure SA in real time.

4.1 Limitations

A limiting factor is the given timeframe of 10 weeks, which limits what can realistically be implemented or investigated during this period. The thesis work therefore focuses primarily on how to measure situation awareness using recorded logs in mixed reality simulations. Due to the time limitation, the developed system can only measure the first level of SA.

5 Method

The workflow we used to answer the research question can be divided into five phases. The first phase was a literature study, the second phase was the creation of a model for the measurement system, the third phase was the implementation of this model, the fourth phase was the evaluation of the measurement system using user tests, and the fifth and final phase was analyzing the collected data and writing a conclusion.

5.1 Literature study

The first step in answering the research question was to gather information on simulations, off-highway vehicles, mixed reality, SA, and SA measurement techniques, so that we were aware of the existing knowledge in these areas and of how similar problems have been solved in previous works. We followed the advice of Zobel on how to find and evaluate research papers [22, pp. 19-32].

5.2 Model and formative evaluations

Kade et al. [23] have created a low-cost mixed reality simulator for industrial vehicles, which can be used to evaluate how different approaches to information visualization can benefit the operator. The simulator runs on the Unity engine and uses a head-mounted projection display to create a mixed reality environment in a CAVE-like room. The simulator also has a transparent screen, which is used as a head-up display (HUD), and a secondary screen, which is used as a head-down display (HDD). This setup was used to create an excavator scenario, which was used to evaluate how different displays influence the user's performance [24]. We decided to use this simulator and excavator scenario as a base for creating a model for the SA measurement system. We used formative evaluations during the development process to test the scenario's suitability and to identify SA-related data in the mixed reality simulator. We chose this assessment method because it provides ongoing feedback during the creation of the SA measurement system at a relatively low cost in time and resources. An important aspect of this step was identifying the types of user input or action that would result in measurable SA.

5.3 Implementation

The next step was to develop a way to measure this concept in a virtual model where the user is supported by different display alternatives (HDD and HUD). In practice, this means that the system should be able to record when objects appear and when symbols are presented. The system could then cross-match the logs from the visualization with the logs from the user inputs to determine the SA of the operator. Since we were primarily interested in measuring perception, which is the first level of SA, we decided to incorporate eye-tracking equipment, so that the times when display elements are shown can be mapped to what the operator is looking at.
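Cross-matching the visualization log with the gaze log is easier once both are arranged on a single timeline. The sketch below merges two time-sorted logs with `heapq.merge` and looks up what the operator was looking at when a display event occurred using `bisect`. It assumes both logs already share a common clock, which in practice requires synchronising the phone running the simulator with the eye-tracker; the tuple layout and target labels are hypothetical.

```python
import bisect
import heapq


def merge_logs(sim_events, gaze_events):
    """Merge two time-sorted logs of (timestamp_s, source, payload) tuples.

    Both inputs must already be sorted by timestamp and use the same clock.
    """
    return list(heapq.merge(sim_events, gaze_events, key=lambda e: e[0]))


def gaze_at(gaze_times, gaze_targets, t):
    """Return the gaze target of the latest gaze sample at or before time t.

    gaze_times must be sorted ascending; gaze_targets[i] belongs to gaze_times[i].
    Returns None if t precedes the first gaze sample.
    """
    i = bisect.bisect_right(gaze_times, t) - 1
    return gaze_targets[i] if i >= 0 else None
```

For instance, if a warning appears at 1.0 s and the gaze log has samples at 0.5 s ("road") and 2.0 s ("hdd"), `gaze_at` reports "road" for the warning onset.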

5.4 Evaluation

Once the prototype of our measurement system had been implemented, we conducted user tests to compare it with another SA measurement method in order to determine its usefulness. This way, we can determine whether the measurement system works better or worse than other methods. If the results from our measurement system deviate significantly from those of the well-tested method, this would suggest that our SA measurement system is less reliable. We decided to use SART as the well-tested comparison method because it is unobtrusive and requires less effort from the participants.

5.5 Data analysis

The last step was to analyze the collected data and write a conclusion based on the results; all the other parts of the thesis report could be written continuously during the given time frame.

6 Ethical Considerations

Our method for evaluating the SA-measurement system involves human participants, thus there were some ethical considerations that had to be taken into account:

• Participants were informed of the context and motivation behind the thesis work.

• Participants were informed that participation was voluntary and that they could withdraw their participation at any point, even in the middle of a simulation.

• They were also informed that any personal data that could be used to identify individuals would be anonymized. It is important to anonymize such data; otherwise there is a risk that the participant's personal integrity is violated. It is also important that participants are aware of this anonymization, since they might otherwise be discouraged from taking part in the study or might even answer dishonestly.

• We used eye-tracking in the measurement system, which recorded the participants' eye movements during the simulations. We informed the participants that any videos recorded during the simulation would not be viewed outside the context of the thesis work.

7 Implementation of the SA measurement system

In this section we describe how the SA measurement system was designed, implemented, and then evaluated. We also describe the motivation for the choices we made during these steps.

7.1 The creation of the model

The simulator we used to implement the measurement system was created by Kade et al. [23] and uses a head-mounted projector in a CAVE-like room to create a mixed reality environment. The simulator is written in C# and uses the Unity engine. The application itself runs on a mobile phone that is strapped to the user's head. The phone's gyroscope is used to track the user's head movements, so that the projector can display the correct field of view in the virtual environment.

The scenario we chose as a base for the evaluation scenario was created by Wallmyr et al. [24]. In this scenario, the user steers an excavator via a keyboard and controls the excavator's cabin rotation and bucket arm movement with a joystick. The excavator cabin can be rotated 360°, and the bucket arm has three joints that can be extended or retracted. The simulator supports three different display alternatives. Information can be displayed to the user in the virtual environment using a HUD inside the excavator cabin. In the physical environment, information can be displayed either on a HUD or on an HDD. The excavator interface consists of four elements that can alert the user to potentially dangerous situations, see Figure 5.

Figure 5: The image depicts the icons that can be displayed on the excavator's interface, which were created by Wallmyr et al. [24]. The yellow circle is a warning that is displayed when there are pedestrians near the vehicle. The yellow triangle is a general warning sign that is displayed when obstacles are near. The red octagon is a stop sign that is displayed during a collision. The green arrow points toward the next scenario objective.

Since the processing power of mobile phones is limited, we had to be selective about what data to log during the simulation. We decided to log collisions, since they can indicate a lack of SA: if a user collides with an object that is clearly visible, they were likely not able to perceive that object in time to stop the vehicle. Another possible indication of a lack of SA is if the user ignores warnings from the interface, which could mean either that they did not perceive the warning or that they did not comprehend its meaning. To know whether the user noticed a display element, we need to know what they were looking at.

7.2 Logs from the eye-tracking equipment

We chose to use eye-tracking equipment to determine when the user was looking at the interface elements during the simulation. The eye-tracker we had access to during the thesis work was developed by the company Pupil Labs [25]. This eye-tracker had to be calibrated before use, so that a mapping between the user's pupils and gaze position could be established. Besides tracking the user's gaze, the eye-tracker can also track predefined surfaces. The surface to be tracked is fitted with square markers, each of which contains a unique 5x5 grid pattern that the eye-tracking equipment can identify. The eye-tracker can then log the time intervals when the user's gaze is on the tracked surface. The logs from the eye-tracking equipment are exported as CSV files. They contain information such as timestamps, a Boolean value indicating whether the user's gaze was inside the tracked surface, the user's gaze position, and a confidence value ranging from 0.0 to 1.0 that expresses how certain the eye-tracker is that the gaze position is correct, where 0.0 means 0% certainty and 1.0 means 100% certainty.
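As an illustration of how such an export could be consumed, the sketch below reads a surface-gaze CSV and keeps only samples above a confidence threshold. The column names (`gaze_timestamp`, `x_norm`, `y_norm`, `on_surf`, `confidence`) are assumed to resemble a Pupil Player surface export and should be checked against the actual files; the function name and the 0.6 threshold are hypothetical choices, not values used in this thesis.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, TextIO


@dataclass
class GazeSample:
    timestamp: float   # eye-tracker clock, in seconds
    x_norm: float      # normalized surface coordinates, (0, 0) = bottom left
    y_norm: float
    on_surface: bool   # True if the gaze was inside the tracked surface
    confidence: float  # 0.0 = no certainty, 1.0 = full certainty


def read_surface_gaze(stream: TextIO, min_confidence: float = 0.6) -> List[GazeSample]:
    """Parse a surface-gaze CSV export and drop low-confidence samples.

    The column names and the default threshold are assumptions, not values
    taken from the thesis.
    """
    samples = []
    for row in csv.DictReader(stream):
        confidence = float(row["confidence"])
        if confidence < min_confidence:
            continue  # skip samples the tracker is unsure about
        samples.append(GazeSample(
            timestamp=float(row["gaze_timestamp"]),
            x_norm=float(row["x_norm"]),
            y_norm=float(row["y_norm"]),
            on_surface=row["on_surf"].strip().lower() == "true",
            confidence=confidence,
        ))
    return samples


example = io.StringIO(
    "gaze_timestamp,x_norm,y_norm,on_surf,confidence\n"
    "1000.10,0.20,0.50,True,0.95\n"
    "1000.15,1.30,0.40,False,0.90\n"
    "1000.20,0.50,0.50,True,0.30\n"
)
samples = read_surface_gaze(example)
print(len(samples))  # 2: the sample with confidence 0.30 is dropped
```

Filtering on confidence before any cross-referencing keeps blinks and tracking dropouts from being counted as glances at the interface.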

Initially we tried to put the eye-tracker markers on the interface elements themselves, but we encountered several problems with this solution. First, we placed the marker in the center of each interface element, in order to retain the overall shape of the element. The markers were also large enough to be easily identified by the eye-tracking equipment. However, the surface marker obscured the icons and text on the interface elements, which could make it harder for the operator to comprehend their meaning. Another problem was that the actual shape of the interface element was not tracked; only its center was accurately tracked. We tried to fix this problem by putting multiple surface markers on the borders of the interface elements. This made the tracked surfaces more accurate, and the text and icons on the interface elements were no longer obscured. However, the markers had to be smaller, which made them harder for the eye-tracking equipment to identify. See Figure 6 for a depiction of the surface-marker placement in the two aforementioned versions.

Figure 6: The images depict two early concepts of where to place the surface markers. Initially we explored ways of marking the interface elements themselves. Markers placed in the middle of the interface elements were easy to track, but they also obscured the text and icons. Markers placed on the borders kept the elements easier to comprehend, but were harder to track.

We decided to track the entire interface instead of individual elements. This change allowed us to use larger markers, which the eye-tracking equipment could identify easily, without obscuring any of the elements. However, it required us to calculate which of the interface elements the user is looking at from the user's gaze position. The gaze position is given as two normalized coordinates, x_norm and y_norm. If both values are within the interval [0, 1], the user's gaze is inside the tracked surface; otherwise it is outside. This normalized coordinate system uses the OpenGL convention, where (0, 0) is the bottom-left corner of the surface and (1, 1) is the top-right corner.

The excavator's interface can show at most three elements simultaneously, which are displayed side by side on a horizontal line. The interface element that points toward the next objective is always displayed and is positioned on the right side of the display. The caution element and the stop element are both displayed in the middle of the display, but only one of them can be active at any given moment. Lastly, the element that warns of nearby pedestrians is displayed on the left side of the display. Our measurement system can therefore use the x_norm value, together with the display logs, to determine which of the elements the user is looking at. See Figure 7 for a depiction of where we placed the markers.

Figure 7: The images depict our final concept of where to place the surface markers. Tracking the entire interface instead of the elements themselves allowed us to use large and easily identifiable markers without obscuring any information. However, this version requires the user's gaze position in order to determine which of the interface elements the user is looking at.
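The gaze-to-element mapping described above can be sketched as follows. This is a minimal sketch, not the simulator's implementation: it assumes the three display slots split the tracked surface into equal thirds along x_norm (the real boundaries depend on the display layout), and the element names and the `displayed` set, standing in for the display logs, are hypothetical.

```python
from typing import Optional, Set

# Hypothetical slot boundaries: the tracked surface split into equal thirds.
LEFT_EDGE = 1 / 3
RIGHT_EDGE = 2 / 3


def on_surface(x_norm: float, y_norm: float) -> bool:
    """Gaze is on the tracked surface when both coordinates lie in [0, 1]."""
    return 0.0 <= x_norm <= 1.0 and 0.0 <= y_norm <= 1.0


def element_at_gaze(x_norm: float, y_norm: float,
                    displayed: Set[str]) -> Optional[str]:
    """Return the displayed interface element under the gaze point, or None.

    `displayed` holds the names of the elements shown at this moment,
    taken from the display logs.
    """
    if not on_surface(x_norm, y_norm):
        return None
    if x_norm < LEFT_EDGE:
        slot = ("pedestrian",)
    elif x_norm < RIGHT_EDGE:
        slot = ("caution", "stop")  # at most one of these is active at a time
    else:
        slot = ("arrow",)
    for name in slot:
        if name in displayed:
            return name
    return None  # the gaze is on an empty slot


print(element_at_gaze(0.5, 0.5, {"stop", "arrow"}))  # stop
```

Because only one of the two middle elements can be active, the display logs are enough to disambiguate the middle slot without any extra markers.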

This method worked well when we used the HUD in the virtual environment, but we encountered some difficulties related to the illumination of the room when we used it on the physical HUD. We therefore chose to use this method with the HDD during the user tests.

7.3 Logs from the virtual environment

Every time an operator collided with an object, we logged the type of object, the time of impact, and whether the object was visible to the operator during the collision. We could not use the eye-tracking equipment's surface tracking to check whether the object was visible during collisions in the virtual environment, because the surfaces of the objects we want to keep track of are not always flat. A secondary reason is that multiple markers in the virtual environment could have a negative effect on the participants' immersion, which in turn might change the outcome of the scenario. We decided instead to rely on the view frustum in the simulator to determine whether the object is within the user's field of view. We compare the object's position with the near, far, and side planes of the view frustum to determine whether it is inside. If the object is inside the view frustum, we cast a ray from the camera toward the object's position. If the ray hits another object along the way, we know that the object is obscured or partly obscured when the collision occurs. See Figures 8 and 9 for visual examples of how we determine whether objects are visible, obscured, or hidden.

Figure 8: The images depict the operator’s field of view in two different situations. On the left side the construction worker is clearly visible, while the worker on the right side is obscured by a stack of boxes.

Figure 9: The images depict the excavator from a bird’s eye view in three different situations. The worker on the left is outside the view frustum, and is therefore hidden. The worker in the middle is inside the view frustum and is therefore visible. The worker on the right is inside the view frustum but the ray will hit a box instead of the worker, which means that the worker is obscured.
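The frustum-and-ray test described above can be illustrated outside Unity with a small pure-Python sketch. It assumes the frustum is given as six inward-facing planes (normal, offset) and approximates obstacles as axis-aligned boxes, using a slab test for the ray. In Unity the same steps would typically rely on `GeometryUtility.CalculateFrustumPlanes` and `Physics.Raycast`; the code below is a simplified stand-in with hypothetical names, not the thesis implementation.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Plane = Tuple[Vec, float]  # (inward normal n, offset d): inside if n.p + d >= 0
Box = Tuple[Vec, Vec]      # axis-aligned obstacle: (min corner, max corner)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def inside_frustum(point: Vec, planes: Sequence[Plane]) -> bool:
    """Inside when the point lies on the inner side of every frustum plane."""
    return all(dot(n, point) + d >= 0.0 for n, d in planes)


def segment_hits_box(origin: Vec, target: Vec, box: Box) -> bool:
    """Slab test: does the segment from origin to target pass through the box?"""
    t_min, t_max = 0.0, 1.0
    for axis in range(3):
        o = origin[axis]
        delta = target[axis] - o
        lo, hi = box[0][axis], box[1][axis]
        if abs(delta) < 1e-12:
            if o < lo or o > hi:
                return False  # parallel to this slab and outside it
            continue
        t1, t2 = (lo - o) / delta, (hi - o) / delta
        if t1 > t2:
            t1, t2 = t2, t1
        t_min, t_max = max(t_min, t1), min(t_max, t2)
        if t_min > t_max:
            return False
    return True


def classify_visibility(camera: Vec, obj: Vec, planes: Sequence[Plane],
                        obstacles: List[Box]) -> str:
    """Return "hidden", "obscured" or "visible" for the collided object.

    `obstacles` must not contain the collided object itself.
    """
    if not inside_frustum(obj, planes):
        return "hidden"
    if any(segment_hits_box(camera, obj, box) for box in obstacles):
        return "obscured"
    return "visible"


# Example: camera at the origin looking along +z with a 90-degree field of view.
planes = [
    ((0.0, 0.0, 1.0), -0.1),    # near plane: z >= 0.1
    ((0.0, 0.0, -1.0), 100.0),  # far plane: z <= 100
    ((1.0, 0.0, 1.0), 0.0),     # left plane: x >= -z
    ((-1.0, 0.0, 1.0), 0.0),    # right plane: x <= z
    ((0.0, 1.0, 1.0), 0.0),     # bottom plane: y >= -z
    ((0.0, -1.0, 1.0), 0.0),    # top plane: y <= z
]
boxes = [((-1.0, -1.0, 4.0), (1.0, 1.0, 6.0))]  # a stack of boxes in front of the camera
print(classify_visibility((0.0, 0.0, 0.0), (0.0, 0.0, 10.0), planes, boxes))  # obscured
```

Like the method in the text, this casts a single ray toward the object's position, so an object that is only partly hidden behind an obstacle is classified as obscured.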

We considered using occlusion culling as a possible tool to determine the object's visibility. Occlusion culling is a built-in feature in the Unity engine that disables the rendering of objects that are not currently seen by the camera. The Boolean variable "isVisible" is used by the renderer during the occlusion culling process to determine whether or not the object should be rendered. However, we noticed some problems when using this variable for logging. Objects are rendered if they cast shadows, which means that an object counts as visible even if only its shadow is within the camera's field of view. Another problem is that the object counts as fully visible no matter how little of it is visible in the camera. We tried to solve this by measuring how many pixels of the object were drawn in the current camera frame and counting the object as hidden if this number was below a certain threshold. Although this method worked, it required a high-performance mobile phone.

We also decided to log when elements on the interface are displayed, so that we could evaluate whether the user was retrieving information from the display interface. We did not have to determine whether the interface elements were inside the operator's field of view, because the logs from the eye-tracking equipment provided us with that information. When implementing the interface logs, we encountered a problem: sometimes the interface elements were displayed for only a fraction of a second before disappearing again. We assumed that these intervals were too short for the operator to perceive and comprehend the displayed element. Therefore, we decided to log the interface elements only if they were visible for more than 0.2 seconds. We also logged the user's task completion by measuring the height coordinate of the gate arch, orange cubes, and grey cubes once the user crossed the finish line. If an object was below a certain height, it had been knocked down.
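The 0.2-second rule can be sketched as a filter over display intervals. The sketch assumes, hypothetically, that the display is logged as (time, element, shown) events; it pairs each "shown" event with the next "hidden" event for the same element and discards intervals that are not longer than the threshold from the text.

```python
from typing import List, Optional, Tuple

MIN_DISPLAY_SECONDS = 0.2  # threshold stated in the text

# Hypothetical event format: (time in seconds, element name, shown?)
Event = Tuple[float, str, bool]


def display_intervals(events: List[Event], element: str,
                      end_time: float) -> List[Tuple[float, float]]:
    """Build (start, end) display intervals for one element and keep only
    those longer than MIN_DISPLAY_SECONDS."""
    intervals = []
    start: Optional[float] = None
    for time, name, shown in sorted(events):
        if name != element:
            continue
        if shown and start is None:
            start = time
        elif not shown and start is not None:
            intervals.append((start, time))
            start = None
    if start is not None:  # still displayed when the scenario ended
        intervals.append((start, end_time))
    return [(s, e) for s, e in intervals if e - s > MIN_DISPLAY_SECONDS]


events = [
    (1.0, "caution", True), (1.1, "caution", False),  # flicker, too short
    (2.0, "caution", True), (2.5, "caution", False),
    (3.0, "stop", True),
]
print(display_intervals(events, "caution", end_time=10.0))  # [(2.0, 2.5)]
```

An element that is still displayed when the scenario ends is closed at `end_time`, so it is not silently dropped from the log.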

7.4 Cross-referencing of logs

For each logged collision, we check whether the appropriate warning was displayed long enough to be noticed, whether the operator noticed the warning before the collision occurred, and whether the operator noticed the stop sign after the collision occurred. We decided to ignore any collisions with orange cubes when estimating the operator's SA, since these collisions are necessary to complete the scenario. To know what the operator was looking at before and after the collision, we need to compare log data from both the eye-tracking equipment and the scenario. The clock used for timestamps in the virtual environment starts at zero and counts the seconds since the start of the scenario. The eye-tracking equipment uses a separate clock, which counts the number of seconds since the last PUPIL EPOCH [26], usually set to the time of the last reboot. To be able to compare timestamps from the logs, we had to rewrite the timestamps from the eye-tracking equipment to correspond with the start of the scenario. We did this by reading the first timestamp in the eye-tracking log and subtracting this value from every timestamp in the log file. See Figure 10 for a conceptual flowchart of how we determine whether the operator looked at the warning before the collision.
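The timestamp alignment and the before/after checks can be sketched as below. As in the text, eye-tracker timestamps are shifted by subtracting the first timestamp in the log, which assumes that the eye-tracking recording and the scenario started at the same moment. The length of the look-back and look-ahead window is not given in this section, so the 3-second default is a hypothetical placeholder, as are the function names and the gaze-sample format.

```python
from typing import List, Optional, Sequence, Tuple

# (timestamp in seconds, element under the gaze or None)
GazeHit = Tuple[float, Optional[str]]


def align_to_scenario(timestamps: Sequence[float]) -> List[float]:
    """Shift eye-tracker timestamps so that the first sample is at t = 0,
    assuming the recording started together with the scenario."""
    if not timestamps:
        return []
    first = timestamps[0]
    return [t - first for t in timestamps]


def looked_at(gaze: Sequence[GazeHit], element: str,
              start: float, end: float) -> bool:
    """True if any gaze sample in [start, end] falls on the element."""
    return any(start <= t <= end and name == element for t, name in gaze)


def noticed_before(gaze: Sequence[GazeHit], element: str,
                   collision_time: float, window: float = 3.0) -> bool:
    return looked_at(gaze, element, collision_time - window, collision_time)


def noticed_after(gaze: Sequence[GazeHit], element: str,
                  collision_time: float, window: float = 3.0) -> bool:
    return looked_at(gaze, element, collision_time, collision_time + window)


raw = [5234.50, 5235.00, 5236.25]        # eye-tracker clock
times = align_to_scenario(raw)           # [0.0, 0.5, 1.75]
gaze = list(zip(times, ["caution", None, "stop"]))
print(noticed_before(gaze, "caution", collision_time=1.5))  # True
print(noticed_after(gaze, "stop", collision_time=1.5))      # True
```

If the two recordings were started at different moments, a constant offset between the clocks would have to be added here; subtracting the first timestamp alone cannot correct for that.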

We formulated an equation to estimate the operator's SA in the off-highway vehicle scenario, see Equation 2. In this measurement system, the value 0 represents a flawless execution of the scenario. Every time the operator collides with an object in the virtual environment, a penalty is added. The severity of this penalty depends on the type of object the operator collided with, the visibility of the object during the collision, whether the operator noticed the warning before the collision, and whether the operator noticed the stop sign after the collision.

For example, the system gives a higher penalty if the operator collides with a worker than if he/she collides with a traffic cone. Every object in the scenario is given a tag that represents its object type. If the operator noticed the warning but still collided with the object, it might indicate that he/she could not react in time or did not comprehend the significance of the warning. If the operator did not notice the warning, it could indicate that he/she was not focused on operating the excavator, which increases the penalty further. No increased penalty is given if the warning was displayed too briefly to be noticed.

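$$
\begin{aligned}
\mathit{Visibility} &\in \{\text{visible} = 0.33,\ \text{obscured} = 0.22,\ \text{hidden} = 0.11\}\\
\mathit{Warning} &\in \{\text{noticed} = 0.22,\ \text{unnoticed} = 0.33,\ \text{unnoticeable} = 0\}\\
\mathit{Stop} &\in \{\text{noticed} = 0,\ \text{unnoticed} = 0.33,\ \text{unnoticeable} = 0\}\\
\mathit{Tag} &\in \{\text{worker} = 4,\ \text{traffic cone} = 2,\ \text{untagged} = 1\}\\
n &= \text{number of collisions}
\end{aligned}
$$

$$
\text{Situation awareness penalty} = \sum_{i=1}^{n} \mathit{Tag}_i \left(\mathit{Visibility}_i + \mathit{Warning}_i + \mathit{Stop}_i\right) \tag{2}
$$

Equation 2: The equation for calculating the SA penalty in our measurement system.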
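Equation 2 translates directly into code. The sketch below is one possible Python rendering, not the simulator's C# implementation: the weights are the ones defined above, while the `Collision` record, its string labels, and the function name are hypothetical. Collisions with orange cubes are assumed to have been removed beforehand, as described in the text.

```python
from dataclasses import dataclass
from typing import Iterable

# Weights from Equation 2.
VISIBILITY = {"visible": 0.33, "obscured": 0.22, "hidden": 0.11}
WARNING = {"noticed": 0.22, "unnoticed": 0.33, "unnoticeable": 0.0}
STOP = {"noticed": 0.0, "unnoticed": 0.33, "unnoticeable": 0.0}
TAG = {"worker": 4, "traffic cone": 2, "untagged": 1}


@dataclass
class Collision:
    tag: str         # object type; orange-cube collisions are excluded beforehand
    visibility: str  # "visible", "obscured" or "hidden"
    warning: str     # "noticed", "unnoticed" or "unnoticeable"
    stop: str        # "noticed", "unnoticed" or "unnoticeable"


def sa_penalty(collisions: Iterable[Collision]) -> float:
    """Sum of Tag_i * (Visibility_i + Warning_i + Stop_i) over all collisions."""
    return sum(
        TAG[c.tag] * (VISIBILITY[c.visibility] + WARNING[c.warning] + STOP[c.stop])
        for c in collisions
    )


log = [
    Collision("worker", "visible", "unnoticed", "unnoticed"),        # 4 * 0.99 = 3.96
    Collision("traffic cone", "hidden", "unnoticeable", "noticed"),  # 2 * 0.11 = 0.22
]
print(round(sa_penalty(log), 2))  # 4.18
```

The worst case for a single collision is a visible worker with both the warning and the stop sign unnoticed, which adds 4 · 0.99 = 3.96 to the penalty.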

7.5 Test scenario

We chose to use SART for comparison during the user test because it is an unobtrusive and well-tested measurement technique. Since SART is a subjective technique, we also intended to use the participants' performance in the simulated scenario as an indirect and more objective measure of SA. However, we were unable to analyze these data due to time constraints. We were able to recruit 13 participants for the tests. Only two of the participants had previously operated heavy off-highway vehicles in real life, but we do not think that is a necessary requirement, since the controls and objectives in the simulated scenario are fairly simple and easy to understand. The majority of the participants were in their 20s or 30s, with the exception of two participants who were older than 60. Only three of the participants were female; this was not a conscious decision, but rather a consequence of our available recruitment pool.

The objective of the test scenario is to navigate the excavator through a construction site while avoiding collisions with construction workers, traffic cones, cubes, and other miscellaneous obstacles. The secondary objective is to knock down the orange cubes on top of pillars built from orange and grey cubes, while avoiding knocking down any grey cubes. Navigating the excavator requires the operator to look around in the virtual environment to perceive potential obstacles. To finish the scenario, the operator needs to drive the vehicle through a gateway that is lower than the excavator's boom unless the boom is contracted. The green arrow on the display always points toward the next objective and can help the participants navigate the test scenario. Before each test, we explained the simulator controls and display elements to the participants.

We initially intended to use a head-mounted projector equipped with a fisheye lens during the user test, which widened the projection of the virtual environment. However, the cable that connected the mobile phone and the projector was severed shortly before the scheduled test period, and repairs could not be finished in time. This forced us to use another head-mounted projector that was not equipped with a fisheye lens. Another problem with this projector was that it could not properly display objects colored bright red or orange. To solve this, we had to re-texture certain objects in the virtual environment. These problems put us behind the original time plan. Although the projection was smaller without the fisheye lens, it also became brighter. This solved some of the problems we had with the surface detection on the physical HUD. When we used the fisheye lens, the simulator had to be used in a dimly lit room, or the projection of the virtual environment would be hard to see. The lack of light made it difficult for the eye-tracking equipment to identify the markers in the corners of the display's surface. The brighter projection allowed us to use the simulator in a better-lit room where the markers were identifiable, without sacrificing the visibility of the virtual environment.

Figure 11: The images depict the head-mounted projector and CAVE-like room we used during the user tests. The black box on top of the doll's head is hollow and is meant to contain the projector's battery and the mobile phone that runs the scenario.

8 Results

8.1 SART result

Table 1 contains the results from the SART post-simulation questionnaire. The first ten rows of the table contain the different dimensions in the SART questionnaire. The following three rows contain the SART domains, which represent the attentional demand, the attentional supply, and the understanding of the situation. Each domain is calculated by adding its corresponding dimensions together. The last row contains the SA score, which is calculated by adding understanding and supply and then subtracting demand, see Equation 1 for a detailed description. SART scores are limited to values between -14 and 46. The participants' SART scores were on average slightly above the midpoint of this range (16), which suggests that they rated their SA during the test scenario as fairly good.
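The scoring can be checked against Table 1 with a short sketch. The grouping of dimensions into domains below is the standard ten-dimensional SART grouping, which reproduces the Demand, Supply, and Understanding rows of Table 1; the dictionary keys and the function name are hypothetical.

```python
# SART dimensions grouped into the three domains (ten-dimensional SART).
DEMAND = ("instability", "variability", "complexity")
SUPPLY = ("arousal", "spare_capacity", "concentration", "division_of_attention")
UNDERSTANDING = ("information_quality", "information_quantity", "familiarity")


def sart_score(ratings: dict) -> int:
    """SA = Understanding - (Demand - Supply), each rating on a 1-7 scale."""
    demand = sum(ratings[k] for k in DEMAND)
    supply = sum(ratings[k] for k in SUPPLY)
    understanding = sum(ratings[k] for k in UNDERSTANDING)
    return understanding - (demand - supply)


p1 = {  # participant P1 from Table 1
    "instability": 5, "variability": 2, "complexity": 3,
    "arousal": 4, "spare_capacity": 7, "concentration": 7, "division_of_attention": 7,
    "information_quality": 6, "information_quantity": 6, "familiarity": 1,
}
print(sart_score(p1))  # 28, matching Table 1
```

The bounds of -14 and 46 follow from the same formula: maximal demand with minimal supply and understanding gives 3 - (21 - 4) = -14, and the opposite extreme gives 21 - (3 - 28) = 46.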

The result from the SART questionnaire

Dimension & domains P1 P2 P3 P4 P5 P6 P7 P8 P9 P10 P11 P12 P13

Instability of situation 5 2 1 4 2 2 6 2 2 4 4 2 1

Variability of situation 2 6 1 2 2 4 6 5 6 3 5 3 2

Complexity of situation 3 2 4 3 2 2 6 2 6 5 3 3 2

Arousal 4 7 7 6 6 5 2 1 5 5 5 5 7

Spare mental capacity 7 7 7 5 3 5 3 2 6 2 3 6 4

Concentration 7 7 7 6 3 3 2 2 6 3 6 6 2

Division of attention 7 3 5 2 5 4 1 4 2 1 3 2 4

Information quality 6 6 2 2 6 5 5 4 4 3 2 3 1

Information quantity 6 7 6 3 6 5 5 2 6 2 2 6 1

Familiarity 1 2 7 2 3 4 2 3 1 5 6 2 1

Demand 10 10 6 9 6 8 18 9 14 12 12 8 5

Supply 25 24 26 19 17 17 8 9 19 11 17 19 17

Understanding 13 15 15 7 15 14 12 9 11 10 10 11 3

Situation awareness 28 29 35 17 26 23 2 9 16 9 15 22 15

Table 1: The result from the SART post-simulation questionnaire. Each column after the first one represents a participant in the user test.

The statistical result from the SART questionnaire

Dimension & domains Min Max Average Range Standard deviation

Instability of situation 1 6 2.84 5 1.51

Variability of situation 1 6 3.62 5 1.73

Complexity of situation 2 6 3.30 4 1.43

Arousal 1 7 5.00 6 1.75

Spare mental capacity 2 7 4.62 5 1.82

Concentration 2 7 4.62 5 2.02

Division of attention 1 7 3.31 6 1.68

Information quality 1 6 3.77 5 1.67

Information quantity 1 7 4.38 6 1.98

Familiarity 1 7 3.00 6 1.88

Demand 5 18 9.76 13 3.45

Supply 8 26 17.54 18 5.43

Understanding 3 15 11.15 12 3.37

Situation awareness 2 35 18.92 33 8.97

Table 2: The statistics from the SART post-simulation questionnaire. The rows are the same as in the previous table, but the columns contain the minimum value, maximum value, average value, range between the maximum and minimum, and the standard deviation.

8.2 SA-measurement system

Table 3 contains the results from the logs and our measurement system. The first three rows contain the number of collisions with each type of object during the test scenario. The next three rows contain the visibility of those objects at the moment of impact. The following nine rows contain the number of times each type of warning was noticed, unnoticed, or unnoticeable. We had to cross-reference logs from the virtual environment and the eye-tracking equipment to determine whether the user was looking at the warnings, see Figure 10. The last row contains the SA penalty from our measurement system, which is calculated with Equation 2.

The result from the SA-measurement system

Logs from the user tests P1 P2 P3 P4 P5 P6 P7 P8 P9 P10 P11 P12 P13

Collision with workers 8 2 2 4 12 5 64 0 11 17 3 9 15

Collision with traffic cones 6 6 7 16 6 5 23 51 18 10 20 4 24

Collision with untagged 4 6 14 10 7 4 22 75 52 76 22 38 42

Object was visible 1 5 5 3 1 4 11 23 16 26 1 8 12

Object was obscured 9 5 16 21 9 7 15 3 43 22 6 22 58

Object was hidden 8 4 2 6 15 3 83 100 22 55 38 21 11

Noticed warning icon 5 1 3 5 0 1 3 2 4 1 2 4 1

Noticed worker icon 1 0 2 1 0 1 0 0 3 1 0 0 0

Noticed stop icon 6 1 6 4 2 0 6 1 8 2 3 2 2

Unnoticed warning icon 0 3 3 6 6 1 9 10 15 9 15 3 16

Unnoticed worker icon 2 1 0 1 5 0 7 0 4 5 1 1 3

Unnoticed stop icon 6 8 9 16 12 8 26 27 32 34 21 14 31

Unnoticeable warning icon 5 8 15 15 7 7 33 114 51 76 25 35 49

Unnoticeable worker icon 5 1 0 2 7 4 57 0 4 11 2 8 12

Unnoticeable stop icon 6 5 9 10 11 6 77 98 41 67 21 35 48

SA penalty 20.7 14.9 19.9 30.5 33.3 17.4 84.5 42.6 64.4 69.9 32.3 28.1 69.1

Table 3: The result from the logs and the SA-measurement system. Each column after the first one represents a participant in the user test.

The statistical result from the SA-measurement system

Logs from the user tests Min Max Average Range Standard deviation

Collision with workers 0 64 11.69 64 15.93

Collision with traffic cones 4 51 15.08 47 12.49

Collision with untagged 4 75 28.63 71 24.88

Object was visible 1 26 8.92 25 8.01

Object was obscured 3 58 18.15 55 15.44

Object was hidden 2 100 28.31 98 30.81

Noticed warning icon 0 5 2.46 5 1.60

Noticed worker icon 0 3 0.69 3 0.91

Noticed stop icon 0 8 3.31 8 2.37

Unnoticed warning icon 0 16 7.38 16 5.25

Unnoticed worker icon 0 7 2.31 7 2.19

Unnoticed stop icon 6 34 18.77 28 9.80

Unnoticeable warning icon 5 114 30.96 109 33.85

Unnoticeable worker icon 0 57 8.69 57 14.44

Unnoticeable stop icon 6 98 33.38 92 29.85

Situation Awareness penalty 14.9 84.5 40.58 69.6 22.48

Table 4: The statistics from the logs and the SA-measurement system. The rows are the same as in the previous table, but the columns contain the minimum value, maximum value, average value, range between the maximum and minimum, and the standard deviation.

8.3 Comparison between SART and our measurement system

Figure 12 depicts a coordinate system populated with the results from the user tests, see Tables 1 and 3. Each dot represents one of the participants. The y-axis represents the SART score: a low value on this axis indicates a low level of SA, while a high value indicates a high level of SA. The x-axis represents our measurement system, which measures SA with a penalty system where 0 represents a flawless execution of the scenario and progressively higher numbers represent increasingly flawed executions. This means that a low value on this axis indicates a high level of SA, while a high value indicates a low level of SA.

If both methods measured SA with complete accuracy, the dots would lie along an even diagonal with a negative slope, where a high SART score would correspond to a low SA penalty and vice versa. If the dots showed no such pattern, the two measurement methods would contradict each other, which would indicate that our measurement system was not able to measure the participants' SA. However, some unevenness along this diagonal is to be expected, since SART may not always reflect the subjects' actual SA, as previously mentioned.

The dots are arranged roughly along a diagonal with a negative slope, which indicates that the SART score and the SA penalty from our measurement system are correlated. The blue dashed line is the Linear Least Squares (LLS) regression line, whose slope and intercept are chosen to minimize the sum of the squared vertical distances between the line and the dots. This line makes the diagonal more visually apparent. The most severe deviation appears among participants P4, P9, P11, and P13, who had similar SART scores (from 15 to 17) but very different SA penalties (from 30.5 to 69.1). Nevertheless, this deviation was not large enough to distort the overall shape of the diagonal. The results from the user tests suggest that our measurement system is capable of measuring SA.

[Figure 12 plot: x-axis "SA measurement system" (SA penalty, ticks from 15 to 85), y-axis "SART" (score, ticks from 0 to 35); one labelled dot per participant, P1 to P13.]

Figure 12: The coordinate system shows the participants' results from the SART questionnaire and our measurement system; each dot represents a participant in the user test.

9 Discussion

The results from the tests indicate that cross-referencing logs from the eye-tracking equipment, virtual environment, and display interface has the potential to measure SA without the need to pause the scenario. There are, however, several aspects that should be refined before this type of measurement system can be used with confidence. In this section, we discuss our observations on the user tests and the measurement system.

9.1 Discussion on our measurement system

The average SA score from the SART questionnaire was 18.92 with a standard deviation of 8.97, while the average SA penalty in our measurement system was 40.58 with a standard deviation of 22.48, see Tables 2 and 4.

To be able to compare the results from both systems, we created a coordinate system where SART represents the y-axis and our measurement system represents the x-axis, see Figure 12. The top-left corner of this coordinate system represents a high level of SA with both methods, while the bottom-right corner represents a low level of SA with both methods. We populated this coordinate system with dots that represent the participants in the user tests. The dots form a rough outline of a diagonal, which could be an indication that our measurement system works as intended. We then calculated the Linear Least Squares regression line y = −0.326 · x + 32.141, which makes the outline of the diagonal more visible. We also calculated the Pearson correlation coefficient R of the population in this coordinate system. This coefficient describes the linear correlation between two variables, in our case the SART score and the SA penalty of each participant. R takes values between -1 and 1, where -1 represents a perfect negative linear correlation, 0 represents no linear correlation, and 1 represents a perfect positive linear correlation. In our case the coefficient received a value of R = −0.81.

This indicates a strong negative correlation between the SA penalty and the SART score, which means that a high SA penalty tends to coincide with a low SART score and vice versa. However, a larger number of participants would be needed before statistically reliable conclusions can be drawn from this comparison.
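Both statistics can be recomputed from the per-participant values in Tables 1 and 3. The sketch below uses the textbook formulas, slope = Sxy / Sxx, intercept = mean(y) − slope · mean(x), and R = Sxy / √(Sxx · Syy); the function name is hypothetical, and small differences in the last reported decimal may come from rounding.

```python
from math import sqrt

# SA penalty (x) from Table 3 and SART score (y) from Table 1, participants P1-P13.
penalty = [20.7, 14.9, 19.9, 30.5, 33.3, 17.4, 84.5, 42.6, 64.4, 69.9, 32.3, 28.1, 69.1]
sart = [28, 29, 35, 17, 26, 23, 2, 9, 16, 9, 15, 22, 15]


def least_squares_and_pearson(x, y):
    """Return (slope, intercept, r) for the LLS line y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / sqrt(sxx * syy)
    return slope, intercept, r


slope, intercept, r = least_squares_and_pearson(penalty, sart)
print(f"y = {slope:.3f} * x + {intercept:.3f}, R = {r:.2f}")
```

With these thirteen data points the fitted slope is about −0.33 and R is strongly negative, in line with the figures reported above.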

9.2 Observations on the test scenario and simulator

Although we are for the most part satisfied with the tasks in the test scenario and the number of participants we were able to recruit, there are some things that we would have done differently given the chance. For example, we noticed some situations during the tests that reflected extreme-case behavior. There are two construction workers in the scenario who patrol between static points and will walk into the excavator if it is in their patrol path, see Figure 13. These workers do not try to avoid collisions and will collide with the excavator even if it is stationary. This cannot be considered normal human behavior, and the participants might have predicted the workers' movements based on previous experience that did not correspond to this behavior. This behavior might have skewed the results of the tests, because collisions with workers have a high penalty rating in our system.

Figure 13: The image depicts the test scenario from a bird's-eye view; this scenario is based on the scenario created by Wallmyr et al. [24]. The red points mark the construction workers' patrol paths. The first worker patrols between A and B, while the second worker patrols between C and D.

Another problem was that pillars were sometimes knocked down in a way that blocked the only traversable path, forcing the participant to drive over the remnants of the pillar to complete the scenario. This greatly increased the number of logged collisions, which the measurement system interprets as low situational awareness, even though the participants had no other option if they were to complete the scenario. Two possible solutions could have been to either restart the scenario or manually remove those collisions from the logs.

Some participants also seemed to be confused by the stop symbol that is displayed when a collision occurs, which lasts until the excavator breaks contact with the other object. Participants seemed hesitant to move the excavator in any direction after this icon appeared. It might have been clearer to the participants that they should break contact with the object if we had chosen a different text for this display element.

The second head-mounted projector, which we used during the user tests, was much more stable and did not have the connection problems between the projector and the mobile phone that the first one had. However, it was heavier due to a bigger shell, and some of the participants said that it was uncomfortable to wear. The head-mounted projector also tended to tilt slightly toward the user's right-hand side, due to the uneven weight caused by the mobile phone, which made the projection of the virtual environment tilt as well. This could have prevented the participants from being fully immersed in the virtual environment. This problem could probably be solved by adding more padding to the head straps, which would allow us to fasten the projector more securely without causing discomfort to the participant. This would also decrease the risk of the head-mounted projector coming loose and nudging the eye-tracking equipment, which would then need to be recalibrated.

10 Conclusions

The goal of this thesis was to explore ways to measure SA in a mixed reality off-highway vehicle simulation scenario without having to pause the scenario. We created a prototype measurement system that cross-references logs from the environment, display elements, and the operator's gaze to measure SA. We conducted user tests with 13 participants, in which we compared the results from our measurement system with a SART post-simulation questionnaire, to evaluate the feasibility of such a measurement technique. We created a coordinate system whose x-axis and y-axis represent our measurement system and SART respectively, and populated it with dots that represented the participants in the user test. The placement of these dots indicated that there was a correlation between the SART score and our measurement system. We also calculated the Pearson correlation coefficient for the population in the coordinate system, which showed a strong negative correlation between the SART score and the SA penalty from our measurement system.

We believe that this measurement system has the potential to be used as an unobtrusive method for measuring the first level of SA in off-highway vehicle simulation scenarios, alongside other methods.

10.1 Future work

We logged some data that we did not end up using in the measurement system, because we could not find a clear connection between the recorded information and SA. However, this information could be very interesting when trying to find differences in how operators interact with HUDs and HDDs. For example, we were able to record how the operator divided their focus between the virtual environment and the display. The system could also record how much of the user's attention was divided among the individual display elements. There is also room to improve the functionality of the measurement system, which currently only measures the first level of SA. Alternative, and potentially less intrusive, methods for detecting whether the operator is looking at a display element could also be explored. For example, object recognition could be used to determine whether the user is looking at a specific display element, which would eliminate the need for surface markers on the display.

Originally, we also intended to compare the results from our measurement system with the task performance in the scenario, as an indirect measurement of SA, but we were not able to do this due to time constraints. This type of comparison could be interesting in future work, since it could show whether there is a correlation between the SA penalty and the task performance score.

References

[1] H. Lingard, T. Cooke, and E. Gharaie, “A case study of fatal accidents involving excavators in the australian construction industry,” Engineering, Construction and Architectural Management, vol. 20, no. 5, pp. 488–504, September 2013.

[2] M. Smith, J. L. Gabbard, and C. Conley, “Head-up vs. head-down displays: Examining traditional methods of display assessment while driving,” in Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, ser. AutomotiveUI ’16. New York, NY, USA: ACM, 2016, pp. 185–192.

[3] J. de Winter, P. Leeuwen, and R. Happee, “Advantages and disadvantages of driving simulators: A discussion,” January 2012, pp. 47–50.

[4] M. McGill, D. Boland, R. Murray-Smith, and S. Brewster, “A dose of reality: Overcoming usability challenges in VR head-mounted displays,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, ser. CHI ’15. New York, NY, USA: ACM, 2015, pp. 2143–2152.

[5] J. Grubert, L. Witzani, E. Ofek, M. Pahud, M. Kranz, and P. O. Kristensson, “Text entry in immersive head-mounted display-based virtual reality using standard keyboards,” in 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), March 2018, pp. 159–166.

[6] P. Milgram and F. Kishino, “A taxonomy of mixed reality visual displays,” IEICE Trans. Information and Systems, vol. E77-D, no. 12, pp. 1321–1329, December 1994.

[7] A. Philpot, M. Glancy, P. J. Passmore, A. Wood, and B. Fields, “User experience of panoramic video in cave-like and head mounted display viewing conditions,” in Proceedings of the 2017 ACM International Conference on Interactive Experiences for TV and Online Video, ser. TVX ’17. New York, NY, USA: ACM, 2017, pp. 65–75.

[8] L. E. Buck, M. K. Young, and B. Bodenheimer, “A comparison of distance estimation in HMD-based virtual environments with different HMD-based conditions,” ACM Trans. Appl. Percept., vol. 15, no. 3, pp. 21:1–21:15, Jul. 2018.

[9] M. R. Endsley and D. J. Garland, Situation awareness analysis and measurement. CRC Press, 2000.

[10] M. Endsley, “Toward a theory of situation awareness in dynamic systems,” Human Factors: The Journal of the Human Factors and Ergonomics Society, vol. 37, pp. 32–64, March 1995.

[11] C. Key, A. Morris, and N. Mansfield, “Situation awareness: Its proficiency amongst older and younger drivers, and its usefulness for perceiving hazards,” Transportation Research Part F: Traffic Psychology and Behaviour, vol. 40, pp. 156–168, July 2016.

[12] M. Endsley, “Measurement of situation awareness in dynamic systems,” Human Factors, vol. 37, pp. 65–84, March 1995.

[13] M. R. Endsley, “Situation awareness misconceptions and misunderstandings,” Journal of Cognitive Engineering and Decision Making, vol. 9, no. 1, pp. 4–32, March 2015.

[14] M. R. Endsley, “Situation awareness global assessment technique (SAGAT),” in Proceedings of the IEEE 1988 National Aerospace and Electronics Conference, vol. 3, May 1988, pp. 789–795.

[15] P. M. Salmon, N. A. Stanton, G. H. Walker, D. Jenkins, D. Ladva, L. Rafferty, and M. Young, “Measuring situation awareness in complex systems: Comparison of measures study,” International Journal of Industrial Ergonomics, vol. 39, no. 3, pp. 490–500, May 2009.

[16] Single European Sky ATM Research. (2012, October) Situation awareness rating technique (SART). [Online]. Available: https://ext.eurocontrol.int/ehp/?q=node/1608

[17] N. Martelaro, D. Sirkin, and W. Ju, “Daze: A real-time situation awareness measurement tool for driving,” in Adjunct Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, ser. AutomotiveUI ’15. New York, NY, USA: ACM, 2015, pp. 158–163.

[18] D. Sirkin, N. Martelaro, M. Johns, and W. Ju, “Toward measurement of situation awareness in autonomous vehicles,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, ser. CHI ’17. New York, NY, USA: ACM, 2017, pp. 405–415.

[19] G. Tien, M. S. Atkins, B. Zheng, and C. Swindells, “Measuring situation awareness of surgeons in laparoscopic training,” in Proceedings of the 2010 Symposium on Eye-Tracking Research & Applications, ser. ETRA ’10. New York, NY, USA: ACM, 2010, pp. 149–152.

[20] R. Kiran, S. Salehi, J. Jeon, and Z. Kang, “Real-time eye-tracking system to evaluate and enhance situation awareness and process safety in drilling operations,” IADC/SPE Drilling Conference and Exhibition, March 2018.

[21] J. de Winter, Y. Eisma, C. Cabrall, P. Hancock, and N. Stanton, “Situation awareness based on eye movements in relation to the task environment,” Cognition, Technology & Work, vol. 21, no. 1, pp. 99–111, February 2019.

[22] J. Zobel, Writing for Computer Science, 3rd ed. London: Springer London, 2014.

[23] D. Kade, M. Wallmyr, T. Holstein, R. Lindell, H. Ürey, and O. Özcan, “Low-cost mixed reality simulator for industrial vehicle environments,” Lecture Notes In Computer Science (Including Subseries Lecture Notes In Artificial Intelligence And Lecture Notes In Bioinformatics), vol. 9740, pp. 597–608, 2016.

[24] D. Kade, M. Wallmyr, and T. Holstein, “360 degree mixed reality environment to evaluate interaction design for industrial vehicles including head-up and head-down displays,” Lecture Notes In Computer Science, vol. 10910, pp. 377–391, 2018.

[25] Pupil Labs. (2019, April) Pupil docs - master. [Online]. Available: https://docs.pupil-labs.com/#introduction

[26] ——. (2019, June) pupil-docs/user-docs/data-format. [Online]. Available: https://github.com/pupil-labs/pupil-docs/blob/master/user-docs/data-format.md

A Participation survey

Participation information: Participation in this study is voluntary. Participants can withdraw their participation at any point, even in the middle of a simulation. Any information that can be used to identify individuals will be anonymized to protect the participants' personal integrity. We will use eye-tracking equipment during the simulation to record the participants' gaze positions. These recordings will not be displayed outside the context of the thesis work. By filling out this form you agree to participate in the study.

The motivation for the study: The motivation behind this study is that we want to be able to measure situation awareness without pausing the simulation, since pausing could break the immersion of the participants. We intend to perform user tests to try to answer this research question.

The testing scenario: Participants are expected to perform a number of tasks in an off-highway vehicle scenario in a mixed reality simulator. In the scenario you will operate an excavator in a construction zone and knock down orange cubes on top of the pillars, while avoiding traffic cones, workers, and other obstacles. We intend to cross-reference logs from the scenario, in conjunction with a SART questionnaire, to measure the situation awareness of the participants and to evaluate the measurement system.

About you

1. First name:

2. Last name:

3. Age:

4. Gender: ☐ man ☐ woman

5. Which demographic group do you belong to?
☐ Bachelor student
☐ Master student
☐ PhD student or staff at MDH
☐ Other:

6. Have you operated off-highway vehicles such as excavators or forklifts before?
☐ Yes, I have operated off-highway vehicles in real life
☐ No, I have never operated an off-highway vehicle in real life

7. Have you played driving games or used other forms of vehicle simulators before?
☐ Yes, quite often
☐ Yes, but not often
☐ No, I have never used a vehicle simulator or played a driving game

8. Have you used a head-mounted display or head-mounted projector before?
☐ Yes, quite often
☐ Yes, but not often

![Figure 2: Interpretation of the reality–virtuality continuum, which was invented by Paul Milgram [6]](https://thumb-eu.123doks.com/thumbv2/5dokorg/4721403.124625/7.892.143.751.332.493/figure-interpretation-reality-virtuality-continuum-invented-paul-milgram.webp)

![Figure 3: Simplified version of Endsley’s model of SA in dynamic decision-making [10]](https://thumb-eu.123doks.com/thumbv2/5dokorg/4721403.124625/8.892.139.758.238.507/figure-simplified-version-endsley-model-dynamic-decision-making.webp)

![Figure 4: The left side of the image shows Daze during an on-road driving scenario [18] and the right side shows a closeup of the display interface [17].](https://thumb-eu.123doks.com/thumbv2/5dokorg/4721403.124625/10.892.131.765.551.769/figure-image-shows-driving-scenario-closeup-display-interface.webp)