Cyborg, How Queer Are You?

Speculations on Technologically-Mediated Morality Towards

Posthuman-Centered Design

Sena Çerçi

Interaction Design 2-year Master’s Programme

Spring Semester 2018

TP1 - 15 CP

Supervisor: -

Abstract

This research deals with the highly relevant issue of paternalism within the discipline and practice of HCI, with a particular focus on autonomous decision-making AI technologies. It is an attempt to reframe the problem of paternalism as a basis for posthuman-centered design, as emerging technologies have already started to redefine autonomy, morality and therefore what it means to be human. Instead of following traditional design processes, queering is used as an analogy/method in order to speculate on the notion of technological mediation through design fictions. Relying for its conclusions on arguments drawn from the relevant theory in philosophy of technology and feminist technoscience studies, as well as on insights from the fieldwork, rather than on conventional empirical design research, this research aims to provide a background for a possible ‘Design Thing’ to tackle the problem in multidisciplinary and democratic ways under the guidance of the ‘queer cyborg’ imagery.

Keywords: human-computer interaction, artificial intelligence, machine learning, autonomous technologies, paternalism, ethical decision-making, feminist technoscience studies, design fiction

Table of Contents

1. INTRODUCTION

1.1 Autonomous Decision-Making AI and Paternalism

1.2 Us and Them

1.3 Research Question

1.4 The Structure of This Report

2. THEORY

2.1 Technological Mediation and Paternalism

2.2 Social Control Through Panoptic Technologies

2.3 Canonical Example

3. METHODS

3.1 RtD Through Speculative Design Toward Posthuman-Centered Design

3.2 Queering as An Analogy/Method

3.3 Project Plan

3.3.1 Surveillance Experiment

3.3.2 Survey #1: Machine Misbehavior

3.3.3 Interviews

3.3.4 Design Fiction Workshop

3.3.5 Survey #2: Paternalism

3.3.6 Thought Experiment

3.4 Research Ethics

4. DESIGN PROCESS

4.1 Surveillance Experiment

4.2 Survey #1: Machine Misbehavior

4.3 Interviews

4.4 Design Fiction Workshop

4.5 Survey #2: Paternalism

4.6 Thought Experiment

5. MAIN RESULTS AND FINAL DESIGN

5.1 Final Design: The Diegetic Prototype

5.1.1 The Context

5.1.2 The Narrative

5.1.3 The Aesthetics

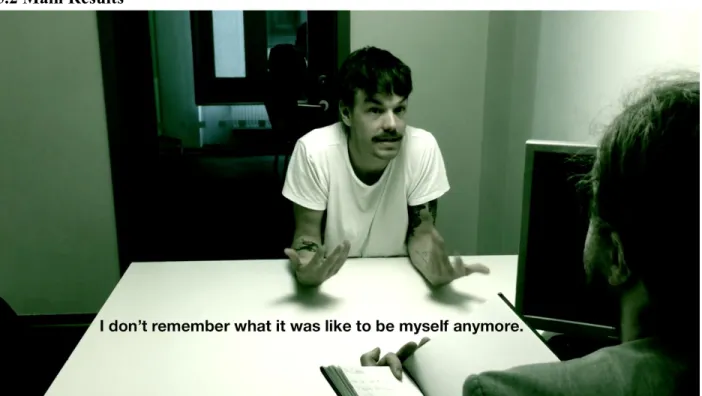

5.2 Main Results

6. EVALUATION / DISCUSSION

6.1 Reflection

6.2 The Scope & Role of Design

7. CONCLUSION

REFERENCES

APPENDIX

1. Surveys

1.1 Data Selfie Experiment

1.2 Machine Misbehavior

1.3 Paternalism

2. Design Fictions

2.1 Design Fiction Workshop

3. Thought Experiment

3.1 The Hypothetical Scenario

3.2 Responses

4. Responses to Final Design

1. INTRODUCTION

1.1 Autonomous Decision-Making AI and Paternalism

As autonomous decision-making AI technologies gain more autonomy to make decisions for us as well as for themselves, ethical decision-making has become a highly relevant area of research and public debate. Research on the ethical decision-making of AI technologies has predominantly focused on canonical examples of these technologies: Nest, self-driving cars, (not necessarily humanoid) sociable robots (including sex robots), (anticipatory) UX design, and algorithm design. While the relevant discussions for each of these are quite distinct from each other, there are two contrary approaches to the problem of paternalism in ethical decision-making in general: (1) quantifying morality to improve algorithms in order to avoid paternalism when outsourcing ethical decision-making to artefacts (i.e., enabling artefacts to make better decisions fully autonomously), and (2) involving the user in the decision-making process by making these algorithms more transparent (i.e., enabling user intervention in the decision-making process). These two approaches fundamentally differ in how they view technology, pointing to the need to investigate the ontology of our relationship to it.

Simply put as technology’s ability to make decisions for us, paternalism¹ can have covert consequences that alter what it means to be human², as well as overtly vital consequences³. Regardless of its consequences, paternalism is still a matter of concern for many scholars like Peter-Paul Verbeek, as it would cause ‘moral laziness’ in the long run (2008). It has also proven to spark strong negative reactions in the public, as in the example of Ted Kaczynski⁴.

“The trouble starts when science moves out of the laboratory into the marketplace, when consumer desire enters the equation and things become more irrational and profit driven” (Dunne & Raby, 2013). With autonomous AI technologies having moved out of the laboratory and already been integrated into our fundamental infrastructures, there have been many examples of paternalism resulting in moral crises, and of attempts to solve these crises⁵. As the design problem and solution pair co-evolves (Kolodner & Willis, 1996), the topic requires a deeper investigation of the “swampy lowlands, where situations are confusing messes incapable of technical solution and usually involve problems of greatest human concern” (Schön 2017, p.42). For this reason, instead of attempting to offer a solution, this research aims to create a better understanding of the complexity of the ‘design situation’ (Larsen, 2015) using critical design methods.

1.2 Us and Them

Increasingly able to make fully autonomous decisions on matters that directly affect human lives, AI has been scapegoated for the negative consequences of these paternalistic decisions. Is AI really evil?⁶ To answer that, we first need to consider the nature of technology: Can technology be inherently good or bad? Is it just a tool we use, or does it actually shape our culture (and therefore how we relate to each other)? In other words, to what extent do we have control over this ‘tool’ we use? Take guns as an example: a gun is built to kill, but is that enough to conclude that the technology is inherently bad? No, because it is not guns that kill people, but people who use guns to kill people. On the other hand, a speed bump that induces lower driving speed near a school clearly steers behavior for a good purpose. In that case, Melvin Kranzberg is right in saying that “technology is neither good nor bad; nor is it neutral,” since it is not indifferent to our cultural progress (1986).

¹ Paternalism: “the behavior against or regardless of the will of a person, or also that the behavior expressing an attitude of superiority” (Shiffrin, 2000) “for our own good” (Dworkin, 1972).

² Facebook and Google create filter bubbles that contribute to social polarization (Pariser, 2011).

³ A self-driving Uber recently killed a woman (Levin & Wong, 2018).

⁴ Also known as the “Unabomber”, Ted Kaczynski is an American serial killer, domestic terrorist, and self-professed anarchist who promoted abandonment of such technologies to avoid a possible AI takeover. His vision of AI takeover was when we would reach a level of voluntary dependence on AI and their decision-making, where turning it off “would amount to suicide” (Kaczynski, 2017).

⁵ How ironic it is that the word ‘crisis’ comes from the Ancient Greek word κρίσις (krísis, “a separating, power of distinguishing, decision, choice, election, judgment, dispute”), from κρίνω (krínō, “pick out, choose, decide, judge”). Retrieved from Wiktionary.

⁶ Among those that fear AI are Stephen Hawking, Bill Gates, and Elon Musk.

So, what is the ontology of our relationship to technology? Information architect and user

experience professional Steve Krug believes that any well-designed technology should play the

role of a butler when interacting with a human being (2000). However, the introduction of autonomous decision-making AI technologies brought the issue of paternalism into the discussion, because these technologies do not necessarily act as butlers to us. In fact, paternalism is caused by these technologies working as us rather than with us. Thus, I repeat the question: What are they to us?

There are a few issues with this predominant dualistic ‘us and them’ view of our relationship with autonomous decision-making AI. Firstly, it overlooks the fact that we are already cyborgs. We may not necessarily be cyborgs in the classical sense (Clynes & Kline, 1995); however, our biological capabilities are extended, augmented, enhanced (Cranny-Francis, 2016), and even partly shaped by technology (Haraway, 1985). Our brains have been undergoing a rewiring process through technology (Beaulieu, 2017). Therefore, “perhaps, it is time to extend the boundaries of the self to include the technologies by which we extend ourselves”, as put by Aral Balkan, a self-proclaimed cyborg rights activist (2016). Moreover, the dualistic view neglects that we co-evolve with technology. Therefore, we need to start investigating the topic from a reciprocal perspective. This will be explained in detail in section 2.1.

Another issue with this dualistic ‘us and them’ view is that it reduces the surveillance aspect of autonomous decision-making AI technologies (which gain their decision-making abilities through machine learning that requires constant surveillance of the ‘cyborg’ self) to a “capture between two actors”, when it is in fact a “violation of the [cyborg] self” (Balkan, 2016). By overlooking the fact that these technologies are designed and made by humans for humans, it shifts the blame from the owners of these technologies onto the technological artefacts. What matters in the ontology of the human-technology relationship is who controls others through technology, rather than whether human or technology controls the other. Zeynep Tüfekçi, a socio-technologist, puts it this way: “It’s easy to imagine an alternate future where advanced machine capabilities are used to empower more of us, rather than control most of us. There will potentially be more time, resources and freedom to share, but only if we change how we do things. We don’t need to reject or blame technology. This problem is not us versus the machines, but between us, as humans, and how we value one another” (2015). This will be explained further in section 2.2.

1.3 Research Question

In an attempt to reframe the ill-defined problem of paternalism, the research question aimed at

ambiguity rather than specificity when exploring the ontology of our relationship to these

autonomous technological artifacts. Therefore, my research question was: How do we deal with

machine ‘misbehavior’?

Here, ‘machines’ refers to autonomous decision-making AI technologies. The definition of paternalism implies an attitude of superiority; however, in order to explore possible modes and dynamics of the human-technology relationship that may not have been considered before, the question’s stance on who is superior is left as open-ended and neutral as possible, to expand the ‘design space’ for further ‘design imaginations’ (Larsen, 2015). The verb ‘deal with’ is used instead of words like train, teach or fix, which would imply the superiority of humans over machines and that machines are tools for us. Paternalism is replaced by the word misbehavior for the same reason: to imply the possibility of some sort of ‘relationship’ between a machine and a human. Misbehavior is a personality trait; in this context, it is not meant to anthropomorphize the machine, but to emphasize these technologies’ ability to learn and make autonomous decisions that may result in undesired consequences.

1.4 The Structure of This Report

This research relies for its conclusions on arguments drawn from the relevant theory as well as on insights from the fieldwork, using the analogy/method of queering to investigate and speculate on the topic. The background, design openings and the research question are introduced throughout this section, which is followed by a summary of relevant theory and a canonical example in the next section. In the Methods section, the reasoning behind the choice of design methods and approaches is explained in detail. The application of these methods to the design process, with an analysis of results, is presented in the Design Process section. The final outcome of the design process and how it answers the research question posed in the Introduction are evaluated in the Main Results and Final Design section. The following Evaluation/Discussion section includes a brief reflection on the process, a criticism of the speculative design approach, and a questioning of the limitations of current design research practices for the posthuman future. In the Conclusion section, a brief summary of the research is presented, along with how this project can be further elaborated into a ‘design thing’ to create multidisciplinary collaborations and extend the research to include the public in the ongoing debate.

2. THEORY

2.1 Technological Mediation and Paternalism

As introduced in section 1.2, by facilitating the relationship between people and reality, technological artifacts actively co-shape humans’ ‘Being-in-the-world’ (Heidegger, 1996) and can therefore be understood as mediators of human-world relationships (Verbeek, 2011). In his Actor-Network Theory, Latour argues that artifacts bear morality because of their constant role as mediators (1992). With the emergence of autonomous AI technologies, artifacts play an even more active role in moral action and decision-making.

Technology cannot be moralized; it can only have a moralizing role. The example of a speed bump near a school shows how the moralizing role of technology may take the form of exerting force on human beings to act in specific ways, but this role does not necessarily have to involve force. Technology can also act as a moral agent in our decisions, as ultrasound technology does in moral decisions about abortion (Verbeek, 2008). Verbeek calls this joint effort of human beings and technological artifacts ‘technological mediation’ (2011). He claims that a designer needs to experiment with all these mediations and find ways to discuss and assess their possible outcomes to figure out the plausible ones, since a high level of influence (technological mediation) would amount to paternalism (Verbeek, 2011).

Why are we so afraid of their paternalism, even when it might be “for our own good” (referring back to Dworkin’s (1972) definition of paternalism)? Because attributing morality to technological artefacts would amount to technological determinism, meaning we would not have free will! To avoid such scenarios and paternalism by designers, Dutch philosopher Hans Achterhuis called for an explicit ‘moralization of technology’. His plea received severe criticism on the grounds that decisions taken under the limited freedom of moralized technology cannot be called moral and that such moralization would lead to technocracy, while others counter-argued that technological mediation is already real regardless of any explicit moralization of technology, and that these limitations do not differ much from the limitations brought by law, religion, etc. (Verbeek, 2006). It might be true that technologies do not differ from laws in limiting human freedom, but laws do come about in a democratic way, whereas the current moralization of technology does not.

“Technology design is inherently a moral activity. By designing artifacts that will inevitably play a mediating role in people’s actions and experience, thus helping to shape (moral) decisions and practices, designers ‘materialize morality’: they are ‘doing ethics by other means’” (Verbeek, 2006). Technological design is based on the Aristotelian idea that leisure equals liberation and on the Marxian idea of central management of undesirable work to liberate humans⁷, which results in limiting human freedom in subtler ways while uncoupling freedom from the responsibility it comes with (Gertz, 2017). Therefore, today’s paternalistic technological design blocks our ability to ‘think and act’ and to be aware of the consequences of our actions; as a result of this ‘moral laziness’, we become more dependent on and needy towards these technologies (Verbeek, 2006). If technologies are not moralized explicitly, after all, the responsibility for technological mediation is left to the designers alone. This would amount to precisely the technocracy that Verbeek fears from behavior-steering technologies (2006). A better conclusion would be that it is important to find a democratic way to moralize technology.

2.2 Social Control Through Panoptic Technologies

Apart from the few examples that directly attack our moral autonomy and even our lives (e.g. the self-driving car), the covert, subtle effects of paternalistic technologies on us are hard to understand. Technology writer Dan Gillmor expressed his fear that we may be controlled by robots that are in turn controlled by Silicon Valley (2014). Considering sociable robots, robot teachers, self-driving cars and many other robots or autonomous technological artifacts with physical entities, it is not hard to imagine catastrophic scenarios in which these robots manipulate our domestic relationships, brainwash young kids or decide whom to kill. These effects are rather overt, and easier to grasp than the full range of effects that paternalistic technologies have on us.

As introduced in section 1.1, the debate on paternalism and ethical decision-making of

autonomous AI technologies is based on two distinct approaches to technology summarized in

section 1.2. “Technology is neither the ‘other’ to be feared and to rebel against, nor does it sustain

the almost divine characteristics which some transhumanists attribute to it” (Ferrando, 2013).

However, it is not a mere neutral and functional tool either. “Technology is therefore no mere

means. Technology is a way of revealing” (Heidegger, 1977). The ontology of our relationship to

autonomous decision-making AI technologies, which my research question aims to investigate, is

therefore very complex and requires a deeper investigation of the social and ethical impacts of

these technologies, since “it is not clear who makes and who is made in the relation between

human and machine. [...] There is no fundamental, ontological separation in our formal

knowledge of machine and organism, of technical and organic.” (Haraway, 1985).

Haraway’s cyborg imagery allows us to think beyond the dualistic view of “us and them” by

suggesting “a way out of the maze of dualisms in which we have explained our bodies and our

tools to ourselves” (1985), and therefore reframe the problem of technological paternalism within

the problem of technological determination by pointing out the nature of the self that has been

already shaped by technology.

When reframed within the larger context of technological determination, the scope of the

problem of paternalism goes beyond specific decision-making instances, which is the concern of

most of the research on paternalism. Since paternalism is defined as “behavior against or regardless of the will of a person” (Shiffrin, 2000) (see section 1.1), such reframing reveals the covert paternalism occurring regardless of our will. For example, Tristan Harris, a former Google design ethicist, warns us that a handful of tech companies are already controlling billions of minds every day, which amounts to paternalism as it happens regardless of our will: “I don't know a more urgent problem than this, because this problem is underneath all other problems. It's not just taking away our agency to spend our attention and live the lives that we want, it's changing the way that we have our conversations, it's changing our democracy, and it's changing our ability to have the conversations and relationships we want with each other. And it affects everyone, because a billion people have one of these in their pocket” (2017).

He points to how technology is used as a means of social control through surveillance and algorithmic paternalism. We already know that Facebook is stealing our attention (Kurzgesagt, 2015), Netflix is stealing our time and attention (Gertz, 2017), many social media platforms result in ‘emotional contagion’ (Kramer et al., 2014), and many apps benefit from behavior design to keep us further engaged with their products (Leslie, 2016); all regardless of our will. However, because there is no obvious ethical dilemma as in the trolley problem of self-driving cars, this type of paternalism (often happening in the form of algorithmic paternalism) and how we need to deal with it (referring back to my research question) are not investigated enough.

In order to understand how the algorithmic paternalism of black-box algorithms attacks our moral autonomy and modifies our behavior, and therefore results in social control (Pariser, 2011), a Foucauldian investigation of their panoptic effect is necessary. Proposed by the philosopher and social theorist Jeremy Bentham in the late 18th century as a new type of institutional building and a system of control (see Figure 1), the panopticon aimed to discipline bodies through a “new mode of obtaining power of [one’s] mind over [their own] mind in a quantity hitherto without example” (Bentham 1995, p. 5). By creating an illusion of constant surveillance, the panopticon is a symbol of the disciplinary society of surveillance (Foucault, 2012). Foucault argues that, by controlling “our bodies...the maps of power and identity” (Haraway, 1985), Bentham’s panopticon transforms discipline and social control into a passive action rather than an active one: since the surveilled cannot tell if and when they are being watched, they come to regulate their own behavior on the chance that they are (2012). This is “the major effect of the Panopticon: to induce in the inmate a state of conscious and permanent visibility that assures the automatic functioning of power” (Foucault, 2012). Today, most social institutions are configured based on the panoptic idea of self-regulation.

Figure 1. Bentham’s Panopticon

Facebook, one of the most controversial examples of algorithmic paternalism, is considered a self-reflexive ‘virtual panopticon’. Through surveillance, it is claimed to be used as a means of social control, while also acting as a means of voluntary self-surveillance. The self-reflexive structure of content sharing on Facebook makes it a virtual panopticon, where users’ virtual bodies are exposed to the uncertainty of ‘conscious and permanent visibility’. It is not only the service of Facebook itself that is ‘surveilling’ our behavior and interactions at all times; the audience we exhibit our content to is also constantly surveilling us, while simultaneously being surveilled by their own audiences. This self-reflexive structure takes away the neutrality of sharing content, since sharing becomes a performative act, “an act that does something in the world” (Austin, 1975).

Combined with its black-box algorithm, this pervasive and ubiquitous surveillance results in algorithmic paternalism that modifies our behavior. Although many of its users may not be fully aware of it, Facebook is an autonomous decision-making AI technology, which learns by surveilling its users in order to serve them targeted content and ads. Apart from the privacy violation issues regarding our data, it decides which content users see rather than neutrally exhibiting all that is available to them, which amounts to algorithmic paternalism. As a result, the services we receive are personalized and customized, telling us what we need to know even before we know it ourselves (Zuboff, 2015, p. 83). This seems innocent compared to a Google car crash; however, it is a way to obtain power over minds through ownership of the means of behavioral modification, which Zuboff calls ‘surveillance capitalism’ (2015, pp. 82-83). “Behavior is subjugated to commodification and monetisation” (Zuboff, 2015, p. 85) and “profits derive from the unilateral surveillance and modification of human behavior” (Zuboff, 2016). Because we have no insight into these black-box algorithms, we, as the users, cannot intervene to prevent it. This is how our minds are controlled through subtle modifications of our behavior, and since we are not even aware of this paternalism, we cannot “deal with machine misbehavior”. This is exactly why this research is concerned with drawing attention to the topic and calling for a deeper investigation of the situation in order to generate solutions to deal with it.

2.3 Canonical Example

Teacher of Algorithms - Simone Rebaudengo

Excerpt from the design fiction movie: ‘Teacher of algorithms’ speaks: “Leave your thing there,

these are all objects people bring me. They are called “smart”, but they are actually pretty dumb. Their owners have no time so they come to me to train them. I train objects. People think he is pretty dumb but he learns pretty fast. Look, as long as you control its behavior. When people bring these smart objects here, they think they have problems, but in reality, they are only reflecting the problems of their owners” (Rebaudengo, 2015).

Link to the design fiction “Teacher of Algorithms” by Simone Rebaudengo (2015): https://vimeo.com/125768041

From the project description: “We think about smart/learning objects as yet not finished entities

that can evolve their behaviors by observing, reading and interpreting our habits. They train their algorithms based on deep learning or similar ways to constantly adapt and refine their decisions. But What if things are not that good at learning after all without some help?

What if in a near future, i could just get a person to train my products better as i do with pets? Why having to deal with the initial problems and risk that it might even learn wrongly? So, What if there would be an algorithm trainer?” (Rebaudengo, 2015).

As it says in the description, “built with cardboard and a lot of randomness”, this quick sketch of a speculative vision explores algorithm training to question our relationship to smart products and to point to many issues regarding them.

The movie starts with a conflict to be resolved: the ‘misbehavior’ of a smart coffee machine. The movie gives clues about its context, which seems to be the present day, along with some clues on the incorporation of smart IoT devices in our lives, like a smart fridge that reminds its user to buy milk. The misbehavior of the coffee machine leads to the revealing of some sort of smart machine ‘trainer’, to whom its owner takes the machine for training.

Addressing the smart machines as unfinished, learning entities that are like ‘blank slates’ to be filled later through experience⁸, the design fiction envisions a world where machines are in need of our constant attention and care to perform their tasks. This is already a reality as learning machines need more data about us to function better, and this happens through usage that also functions as training.

The speculative movie envisions a side/support service for algorithm training for those who cannot provide the necessary amount of attention and care (and data) to their machines. However, it is not very clear whether this service is legal or illegal: the ghetto-like atmosphere around the location of the training center, the shabbiness of the shop and the sloppy way the machines are stacked suggest that this side/support service may be illegal. The question of whether this service is a cheap but illegal alternative to a relatively expensive licensed support service is not answered in the movie; however, the teacher of algorithms implies that users bring their machines to him for training because they do not have the time to do it themselves, rather than because they cannot afford the legal alternative. Considering Simone Rebaudengo’s other speculative vision on the same topic, ‘Addicted Toasters’ (Rebaudengo, 2012), where he imagines a near future in which machines need our constant attention and care, it may be that this training service is illegal because the smart machine company offers no licensed support service, since users are likely to be obliged to devote their time, attention and care to their smart machines so that these companies can harvest more data about them. In ‘Addicted Toasters’, the machines were not smart but needed constant attention and care, meaning they just wanted to be used for the sake of being used. ‘Teacher of Algorithms’, however, depicts machines in need of not only our time, attention and care, but also our ‘knowledge’, hinting at the data surveillance aspect of machine learning. This is also a criticism of designing addictive apps in order to obtain more data about the users (Leslie, 2016).

Although the movie suggests that this algorithm training service might be an illegal side service, it also raises the possibility that algorithm training can be used by manufacturers as another means of financial gain. Because users would have no insight into the black-boxed algorithm of their machine, the algorithms may be deliberately left more open-ended than they are supposed to be in order to provide customized, tailor-made service to their user. Therefore, the side/support service may arise as the only option to prevent machine misbehavior for users who are unaware of this, as well as for users who do not have the time to train their machines. Nicolas Nova of Near Future Laboratory likens the speculative ‘teacher of algorithms’ to the “IoT equivalent of goldfarmers to some extent” (2015). In a world where digital sweatshops are a reality, this is quite plausible.

Rebaudengo also questions the notion of smart machines and machine learning. His perspective on paternalism is reflected through the comments of the teacher of algorithms on the smartness of a vacuum-cleaner robot: “They think it is dumb, but it actually learns pretty fast”. Rebaudengo subtly reminds us that their smartness comes from their ability to learn, not necessarily from making the right decisions. The teacher of algorithms also adds that the machine can learn fast “as long as you control its behavior”, emphasizing Rebaudengo’s take on paternalism and pointing to his stance on opening up black-box algorithms to allow for user intervention to prevent paternalism. The issues of paternalism and user intervention in such situations are conveyed through the anthropomorphization of the machine, by referring to its ‘(mis)behavior’.

⁸ In reference to John Locke’s understanding of tabula rasa in “An Essay Concerning Human Understanding” (1689).

Rebaudengo further anthropomorphizes smart machines by attributing character to them (e.g. the teacher of algorithms calls the ventilator ‘lazy’), comparing machines to kids and pets (e.g. when he juxtaposes how some people teach kids, some train animals, and he trains objects), and introducing a reward/punishment system to train the machines. In the last case, these machines are depicted as needy for our attention, in parallel to Rebaudengo’s other works; their reward is therefore user interaction. This may again be a criticism of how today’s technological design is based on the monetization of our data and behavior through addictive engagement, rather than on the efficiency and usability with which these machines perform the tasks we need them for as tools (see section 2.2).

Many scholars defend the idea that we should be teaching robots right from wrong just like we

would teach kids (Parkin, 2017). How Rebaudengo himself sees the machines is not

clear, since his work suggests two different perspectives: a pet and a kid. At the same time, it is implied that these smart objects are in fact extensions of us, in parallel to Balkan’s view (see section 1.2),

when the teacher of algorithms says “When people bring these smart objects here, they think they

have problems, but in reality, they are only reflecting the problems of their owners”. Pointing to our

reciprocal relationship to technology, this also seems to be a criticism of the surveillance and

privacy aspects of smart machines. These machines are extensions of us in the sense that we

project and/or extend ourselves onto them by feeding them with our data in exchange for their

services. In order to prevent misbehavior (i.e. to get the more accurately tailored service they

claim to offer), we need to devote more of our time, attention and data to them through usage.

The teacher of algorithms comes across as a talkative, slightly wacky and old-school guy,

which reflects his critical stance on smart machines. Unlike the main character, who stays mostly

silent and does not seem to think critically about the products he uses, the teacher of algorithms is eager to talk about his work and his reflections on these products and their users. As the

one who possesses the knowledge about these products and how to train them, the teacher of

algorithms is critical of these products and their users, whereas the owner of the coffee

machine seems to be unaware of the situation until his machine misbehaves. This may be

an indicator of the current situation with paternalism and autonomous decision-making AI

systems: a lack of awareness amongst users (and even designers) until a crisis

occurs.

So, how might we deal with crisis situations? The experts, the ‘teachers of algorithms’,

acknowledge that these artefacts are extensions of us and are therefore more critical and conscious about

them and their usage because of their greater understanding of the design situation, but

what do the users think? How would they deal with machine misbehavior?

Departing from the incompatible perspectives Rebaudengo offers on the ontology of our relationship

to technology, my research question (see section 1.3) therefore asked how to deal with machine

misbehavior (paternalism), using the methods explained in detail in the next section.

3. METHODS

Figure 2. How speculative design approach might foster democratic ways to moralization of technology

3.1 RtD Through Speculative Design Toward Posthuman-Centered Design

The line between human and machine is blurring as computers get better at

learning, processing, and adapting to contexts. The line blurs further due to

anthropomorphization, an effect that is enhanced as machines gain autonomy. Haakon Faste, a

posthuman-centered design researcher, argues that thinking within the limitations of

human-centered design when these technologies are already altering what it means to be human is likely to

cause the greatest existential crisis mankind has ever had (2016).

He points to the need for the human-centered perspective on machine usability to evolve into

a posthuman-centered one due to the reciprocity of the interaction between humans and

learning systems in particular (Faste, 2016). While these systems adapt to us, we also adapt to

their design limitations through an interplay between perception and action, affordance and

feedback (Faste, 2016), in parallel to what I’ve already discussed in section 1.2.

“Systemic thinking and holistic research are useful methods for addressing existential risks,” he

adds; however, he is concerned that the existential risks regarding the future of these technologies

do not give us a chance to iterate (2016), which matters because the design problem and its solution co-evolve

(Kolodner & Willis, 1996). Therefore, he calls on designers to use their designerly “investigative

research, systemic thinking, creative prototyping, and rapid iteration” methods to address the

social and ethical impacts of such technologies in the long run through “collective imagining” and

an analysis of these future predictions to help us with the challenge of designing for posthuman

experience (Faste, 2016).

On a similar note, Verbeek suggests performing mediation analysis to make informed predictions

on the future mediating roles of a technology in order to deal with the unpredictability of the

future (2006). Though it is not possible to predict the future, we can speculate about it to increase

the probability of intended futures, as well as to recognize and address the unwanted ones

(Dunne & Raby, 2013). However, making predictions should not be solely the designers’ task,

particularly on a topic that involves different perspectives on human selfhood and its values.

Therefore, this research aims to holistically reframe the situation by engaging users in the fuzzy front end of design that “will most likely produce the largest benefit in terms of societal value”

(Sanders & Stappers, 2014, p. 28) and stimulating collective imagining through a speculative design

approach.

A speculative design approach is also compatible with the RtD approach, which is defined as “a research

approach that employs methods and processes from design practice as a legitimate method of

inquiry” (Zimmerman, Stolterman, & Forlizzi, 2010). As opposed to scientific inquiry striving for

finding out the existing and the universal, or in this case finding the ultimate solution to the

problem defined in section 1, design speculates on what could be through reflective

reinterpretation and reframing of a problem through the creation of artefacts (Rittel & Webber,

1973; Schön, 2017; Stolterman, 2008). It can be said that the RtD approach is already speculative in its

future-oriented reflectivity that involves continual reinterpretation and reframing of a

problematic situation by “addressing these problems through its holistic approach of integrating

knowledge and theories from across many disciplines” (Zimmerman & Forlizzi, 2014; Zimmerman

et al., 2010), but speculative design can benefit RtD further with its ability to “unsettle the present rather than predict the future” (Dunne & Raby, 2013) by framing the problem in order to initiate a

more democratic and collaborative “reflective conversation with the situation” (Schön, 2017)

through collective imagining.

Since AI is more than a technical shift and holds culturally transformative power, Crawford &

Calo further call for a holistic and integrated multidisciplinary investigation drawing on

philosophy, law, sociology, anthropology and science-and-technology studies, among other

disciplines, to analyze every stage of these technologies (conception, design, deployment and

regulation) and so ensure their beneficial use (2016). As “a discursive practice, based

on critical thinking and dialogue, which questions the practice of design (and its modernist

definition)”, speculative design also has the potential to create new interaction between various

disciplines in trans-disciplinary, post-disciplinary or post-designerly ways (Mitrovic, 2015). For

such a “wicked problem” that is complex with indefinite boundaries (Rittel & Webber, 1973),

speculative design approach can instigate multi-disciplinary collaborations in order to assess the

social and ethical impacts of autonomous decision-making AI technologies and build a solution

framework rather than aiming at generating technical solutions that may create further problems

(see Figure 2).

On the other hand, this kind of explorative, open-ended research might not have clearly defined

steps in the design process, since it departs from the conventional design approach (Gaver, 2012).

Also, because RtD approach is based on iterative reflection, the research questions and focuses

shift throughout the process according to the insights found (Krogh, Markussen & Bang, 2015).

These shifts will be explained in detail for each step in the process in section 4.

3.2 Queering as An Analogy/Method

Referring back to section 1.2, “technology is neither good nor bad; nor is it neutral” (Kranzberg,

1986). The political non-neutrality of technology has been pointed out particularly by feminist

technoscience studies. As a socially constructed object, technology is always implicated in power

relations (Wajcman, 1991). Although grounded in gender and sexuality, Queer Theory is

applicable to many other research areas outside gender and identity studies (including HCI) since

it provides a framework to explore the correlation between power distribution and identification.

Ann Light, who uses Queer Theory and queering as an analogy/method in her problematization of

perpetuating the political status quo in identity formation within the HCI paradigm and resistance to

computer formalisation of identity, defines queering as follows: “To queer something, taking the

Greek root of the word, is to treat it obliquely, to cross it, to go in an adverse or opposite direction.

It has movement and flex in it. Queering is problematizing apparently structural and foundational

relationships with critical intent, and it may involve mischief and clowning as much as serious

critique” (2011).

She further argues that digital tools, especially intelligent, autonomous digital systems,

mediate our relationships and have a “knock-on effect on how we understand and manage

ourselves as a world”, and believes in the urgency of the need for queering for a “more fluid

response to changes in the field of technology, to the methodological commitments of others and

to the possible domains to be touched by developments in computing” (2011). While there are

three prevailing modes of social-systems analysis that are each valuable, none is individually or

collectively sufficient, and there is no consensus on how to assess the social and ethical impacts of AI systems on their social, cultural and political settings, as Crawford & Calo note (2016). Because these assessments are contingent on their social, cultural and political settings, the impacts of AI

are likely to differ for individuals. Therefore, queering can also inform social-systems analysis

through speculations on the social and ethical impacts of such technologies in the long run as

explained in the previous section. For these reasons, the design experiments conducted for this

research aimed at queering rather than generating insights for “an ultimate particular”

(Stolterman, 2008).

Ann Light also cautions against expecting such conclusive results. The outcome of the queering method is not “an analysis to

inform design, but an ongoing application of disruption as a space-making ploy and, thus, as a

hands-on method” (2011). By defying definition and embracing a perpetually “off-centre, eccentric,

critical, reflexive and self-analytic” role, queering leads to no destination; instead, it gives rise to new

truths, perspectives and engagements, and subverts hegemony through perpetual repositioning of

margins rather than fixation (Light, 2011).

Queering as an analogy/method therefore closely parallels posthumanist thinking, which defies

the anthropocentric humanistic thinking and the traditional center of Western discourse

(Ferrando, 2013). While acknowledging the many centers of interest, posthumanism is not based

on hierarchical thinking and does not prioritize human over non-human, and therefore provides “a

suitable way of departure to think in relational and multi-layered ways, expanding the focus to

the non-human realm in post-dualistic, post-hierarchical modes, thus allowing one to envision

post-human futures which will radically stretch the boundaries of human imagination”

(Ferrando, 2013). In posthumanist thinking, the human is neither the center nor “an autonomous agent”,

and is approached in terms of its relational ontology with other non-human entities (Ferrando, 2013). This

approach can be particularly useful to broaden our perspective when predicting the ontology of

human-technology relationship.

In parallel to speculative design approach (see section 3.1), using queering as a method to reframe

the problems regarding autonomous decision-making AI technologies can create “an unstable

zone for disciplines to meet and morph in post-disciplinary ways” (Light, 2011), and therefore

enable us to set “a comprehensive and generative perspective to sustain and nurture alternatives

for the present and the futures” (Ferrando, 2013). Transcending the limitations of

human-centered design and creating post-disciplinary collaborations by using the method of

queering within a speculative design approach, we might be able to speculate on and respond

better and more fluidly to the rapidly-evolving technologies and their knock-on effect on our

world, and become “a new breed of posthuman-centered designer [needed to] maximize the

potential of post-evolutionary life” (Faste, 2016).

3.3 Project Plan

This section aims to provide an overview of the methods used for design experiments (see Figure

3).

Figure 3. An overview of the process. Notice the non-linear structure due to multi-facetedness of the problem.

3.3.1 Surveillance Experiment

The panopticon is a symbol of the disciplinary society of surveillance (Foucault, 2012), and Facebook

can be considered a virtual metaphor of a panopticon (see section 2.2). To explore how the

panoptic features of Facebook are reflected upon its users and whether it manipulates their

behavior or not, I used a program called Data Selfie. Data Selfie provides insights to users on how

machine learning algorithms track users’ data to gain insights about them and reflects it back on

the users. The program collects data to make predictions based on the time spent on the posts on a

user’s newsfeed as well as tracking click-based interactions. Because the program makes itself

clearly visible once downloaded (notice the small circled timer at the bottom left in Figure 4),

another objective was to observe the effect of constantly being surveilled and reminded of it to

explore the self-reflexive panoptic features of Facebook. I called for participants that were mostly

indifferent to the topic. Because the program needed a certain amount of time and data before it

could make its predictions, this experiment lasted about 2-4 weeks, depending on the

participants’ use frequency. Although the experiment was the first to be conducted in the design

process, the results were therefore obtained later on in the process. The experiment ended with

an individual discussion with the participants and a survey. (See Appendix 1.1)

Figure 4. How the Facebook newsfeed looks with the Data Selfie counter on the bottom left


3.3.2 Survey #1: Machine Misbehavior

An initial survey of 10 questions to find out about the dynamics of our ‘relationship’ to the

autonomous machines was published online, to receive responses from people of different age

groups, backgrounds and relevance to the topic. The aim was to discover how people relate to

technology, the dynamics and mode of their relationship to it and what is considered to be

machine ‘misbehavior’ and the level of tolerance for different kinds of misbehavior in different

situations. (See Appendix 1.2)

3.3.3 Interviews

The survey results led to a hypothesis and put forward some of the modes of relationship. To

validate the reliability of the online survey and the hypothesis, unstructured interviews were

conducted with 10 people from diverse age groups, backgrounds and levels of digital literacy.

Because they all had a different understanding and level of knowledge of the topic, the

interviews were hard to standardize. The focus was on training algorithmic AI technologies

and whether that would result in an intimate relationship with them; therefore, the interviews took

place in an informal conversation format to gain more personal insights. The interviews were not

recorded in order to protect the participants’ privacy. The AI technology discussed differed

according to the participants’ interests, digital literacy skills and the digital technologies they already used.

Although most were rather simple products like a smart thermostat, smart coffee machine

or smart alarm, one interview was held with a potential user of intelligent sex toys.

3.3.4 Design Fiction Workshop

Workshops allow for gaining insights about people’s experience around a specific problem or

scenario by engaging them in creative activities (Hanington & Martin, 2012). A workshop bringing

people together allows for co-creation and therefore more fruitful insights (Sanders & Stappers,

2014). For speculative design approach involving imagination and envisioning of possible future

scenarios, design fiction allows for researching futures by making them tangible (Sterling, 2012;

Raford, 2012; Dunne and Raby, 2013; Wakkary et al., 2013; Van Mensvoort & Gruijters, 2014).

Therefore, in order to stimulate the collective imagination, I conducted a workshop using

design fiction scenarios.

In order to gain insights on their perspective [as users as well as potential designers of such

technologies] on paternalism in technologically-mediated intimate aspects of human life, this

workshop brought 4 fellow interaction designers together. The workshop was conducted through

design fiction scenarios on matchmaking (see Figure 5), where paternalism would have a direct

effect on the deepest and most important dimensions of human selfhood. In order to understand

the effect of technological mediation, the workshop included comparisons to

technologically-unmediated paternalism scenarios to find out if the technological involvement

had an effect on how paternalism was perceived or tolerated.

Figure 5. Frames from the design fiction movies used in the workshop

The participants were given a set of cards, which helped them follow the discussion moderated by

me. The structure of the workshop was as follows:

1. The definitions of paternalism were presented, with a brief explanation of how I use the

word and what I would like to focus on as explained in section 1.

2. A speculative vision of how machines will help us find our perfect match once the human

is quantified and transformed into algorithms was shown. They were then asked to

discuss algorithmization and paternalism in such a ‘humane’ topic, how such

technologies might affect morality and human relationships and how they would interact

with such technology.

The part from 2:59-4:34 of this video was shown: https://www.youtube.com/watch?v=5u45-x0-zoY

3. A speculative vision of near-future digital matchmaking apps, consisting of excerpts from

the episode ‘Matchmaker’ from the TV series ‘Dimension 404’, was shown to the participants. They

were then asked to discuss the same issues while making comparisons to the

previous one.

4. They were then asked to make analogies regarding the paternalistic decision-making

depicted in the two speculative visions. The intention was to find out whether it makes a difference when the paternalism is exerted by a human or an algorithm.

5. The features of Tinder were explained to them in detail in order to discuss its subtle ‘algorithmic paternalism’, even though Tinder is neither intelligent nor capable of autonomous decision-making.

6. They were each given different brief speculative scenarios on a very near-future

intelligent autonomous Tinder and asked to discuss these scenarios.

3.3.5 Survey #2: Paternalism

An online survey of 10 questions was distributed to people of different age groups, backgrounds and

relevance to the topic. The aim was to find out if algorithm training changed users’ perspective on

autonomous decision-making AI technologies. The questions presented brief scenarios

involving different levels of paternalism and user intervention in the autonomous decision-making of

the machines. The multiple choice options to the briefly described situations aimed to figure out

the acceptable ‘misbehavior’ of autonomous decision-making AI technologies, or in other words to

figure out the desired level of control/intervention over the decision-making processes.

3.3.6 Thought Experiment

The insights gained from the previous experiments and the theoretical research revealed the

queer nature of the self, which is unique to each individual. In order to explore the

heterogeneity of opinions, I conducted a thought experiment as a mode of social-systems analysis

to spark a debate and allow for a plurality of voices (Crawford & Calo, 2016). Some of the

best-known thought experiments 10 used to assess the social and ethical impacts of autonomous

decision-making AI technologies are the evil AI takeover (Bostrom, 2017) and the trolley problem applied to

self-driving cars (Lin, 2015). The thought experiment also served as a means of communicating

the theoretical background to those who were not knowledgeable on the topic without having to

explain it all.

From the early stages of the research, Plato’s ‘Allegory of The Cave’, Robert Nozick’s ‘Experience

Machine’, and Sam Harris’ ‘No Free Will’ thought experiments inspired me to come up

with this thought experiment, which was a written scenario published online. The participants

were asked to deliver their response however they liked (face-to-face discussion, written text, etc.).

3.4 Research Ethics

Some of the design experiments needed the explicit consent of the participants, such as the

surveillance experiment with Data Selfie and the design fiction workshop. Although I did not need

to violate the participants’ privacy for the experiment with Data Selfie (see section 3.3.1), I had to

make sure they understood what the program and the experiment were about. For example, I had

to make sure they understood that the program was not storing their personal data and that they

could delete the program as well as the data it collected anytime after the experiment was over. I

also needed to make clear that I was not interested in the predictions the program made about

them, which would reveal their personal data, but rather in their reflections on these

predictions.

The design fiction workshop (section 3.3.4) that took place with fellow designers involved

discussions on personal values and ethical views. Although I would have liked to conduct such a

workshop with participants from outside design as well as fellow designers to include various

critical voices in the discussion, I chose to work with ‘designer friends’ who already knew me and

each other, hoping they would feel comfortable. I still needed to check with them before, during

and after the workshop to make sure they were willing to continue the discussion, and I moderated

it at all times to avoid any privacy violation.

4. DESIGN PROCESS

This section summarizes the insights generated by each of the design experiments explained in

the previous section. Due to the use of “queering” as a design method (see section 3.2), the insights should not be assessed as “an analysis to inform design, but an ongoing application of disruption

as a space-making ploy and, thus, as a hands-on method” (Light, 2011) in an attempt to reveal

“perspectives rather than research questions, and provisional takeaways rather than results”

(Löwgren, Svarrer Larsen & Hobye, 2013, p. 98). However, the insights gained after these

experiments will be used to inform the speculative design fiction, which will be explained in

detail in section 5.

4.1 Surveillance Experiment

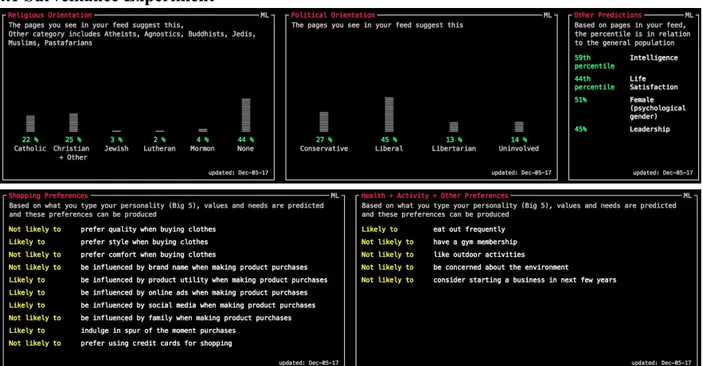

Figure 6. Screenshots from my own Data Selfie predictions

The aim of this experiment was to explore how black box algorithms learn and to observe the

effects of panoptic features of Facebook on the users. Even though the Data Selfie program was

not opening up the black-box algorithm but just sharing its insights and predictions, the

experiment was a way to understand the ‘design situation’ regarding how algorithms learn about

their users and how algorithmic paternalism is exerted by these algorithms based on their learning, as

well as to observe the self-reflexive panoptic effects. The majority of the participants in this

experiment said that it did not really affect their media consumption behavior on

Facebook; however, further discussions revealed that it actually did. The app collects data mainly

through the time spent and click-based interactions on the posts of others, and the counter that is

constantly added to the Facebook interface reminds users of its panoptic features as explained in

section 2.1.3. Because the participants knew the experiment was about how Facebook algorithms learn about

them to make predictions, seeing the counter at all times on their newsfeed and keeping track of

the time spent on posts manipulated user behavior to an extent. They revealed that because they

did not want the counter to include some of the posts they see on their newsfeed in the

predictions, they tended to skip these posts fast rather than scrolling down at their usual pace.

Although I told them they could check their Data Selfie predictions occasionally and that it would

not manipulate the end result, many had not checked them until I asked them at the end of the

experiment. When they looked at their Data Selfies and the predictions made about them, they

were surprised and a little disappointed, since the predictions were not very accurate and they

could not fully understand the complicated graphs and tables the selfie provided. However, they

all agreed that it was interesting to find out how and what it learns and that having an awareness

of this matters. Their reactions confirmed what my research on black-box algorithms had suggested: that we

need to open them up and make algorithmic learning more understandable for users. Neither

I nor the participants had the necessary digital literacy skills to interpret most of the data

provided by the program, therefore it was a little disturbing for all of us to confront that the

program (and therefore Facebook) knows things about us that we do not know about ourselves.

The more disturbing part was how it came to its conclusions in the predictions we could interpret

(see Figure 6), which points exactly to the problem with black-box algorithms and how they

cause algorithmic paternalism. To sum up, the experiment revealed the necessity of opening up

black-box algorithms and equipping users with digital literacy skills to avoid the possible negative

consequences of algorithmic paternalism of autonomous decision-making AI technologies.

4.2 Survey #1: Machine Misbehavior

This survey was not directly relevant to artificial intelligence or ethical decision-making, but was

conducted to understand the current relationship to our ‘tools’. The ‘Machine Misbehavior’ survey

results demonstrated that some modes of relationship were considered closer to the man-machine

relationship than the others. 11 Based on these results, I focused on some of these modes of

relationship, each of which offered unique dynamics to investigate further: Human-pet,

Master-slave, Cross-species, Parent-kid, Master-apprentice. The survey results also revealed a few

key aspects of machine misbehavior and how we deal with it (For all answers, please see

Appendix 1.2):

- People don’t give up easily on their machines. When a machine stops working or gets to an

unacceptable level of ‘behavior’, people keep their misbehaving machine even though

they replace it with a new, working one. This is a notable finding, because it suggests that

they still have hope and persist in their ‘relationship’ with the misbehaving machine. This

would especially be the case for learning AI technologies, where users would need to

train the open-ended algorithms, which suggests that it would be harder to discard

such intelligent machines.

- Frustration and irritation are the reactions to machine misbehavior, as expected, but the

methods of and reactions to ‘fixing’ the misbehavior vary from “satisfying the ego by ruling