ENSURING PUBLIC ACCEPTANCE OF ROBOTIC TECHNOLOGY

A study exploring the determinants of robotic acceptance

MÄLBERG, FREDRIK

ZYLBERSTEIN, ADAM

School of Business, Society & Engineering

Course: Master Thesis in Business Administration

Course code: EFO 704

15 cr

Supervisor: Cecilia Lindh

Date: 2017/06/05

ABSTRACT

Date: 5th June 2017

Level: Master Thesis in Business Administration, 15 cr

Institution: School of Business, Society and Engineering, Mälardalen University

Authors: Adam Zylberstein (91/06/29), Fredrik Mälberg (92/02/04)

Title: Ensuring Public Acceptance of Robotic Technology

Tutor: Cecilia Lindh

Keywords: Acceptance, Robotic technology, Trust, Perceived Benefits, Perceived Risks

Research questions: How do trust, perceived risks and perceived benefits influence acceptance of robotic technology?

How does the public perceive ambiguities regarding their jobs, safety and future when making their judgment about robotics?

Purpose: The aim of this paper is to enhance understanding of how trust, perceived risks and perceived benefits influence public acceptance of robotic technology.

Method: A quantitative study was carried out using an online survey. The sample consisted of a cross-national data set with a total of 514 respondents. The collected data has been analyzed using simple and multiple linear regression analysis.

Conclusion: This paper established that trust must be managed in future relationships, which will inevitably be affected by robotic technology. It revealed that public fears associated with jobs, safety and the future are of great importance to address in the progression towards robotic acceptance. This paper gives empirical evidence for considering trust, perceived risks and perceived benefits as important determinants in facilitating public acceptance of robotic technology.

Acknowledgments

We are genuinely thankful for all the support and guidance received from our supervisor Cecilia Lindh together with co-assessor Steve Thompson. We also extend our gratitude to Dennis Helfridsson, Vice President at ABB Robotics, for taking the time to inspire us. This thesis would not have been possible to accomplish without our project group, and for that we are deeply grateful.

Västerås, 5th of June 2017

Table of Contents

1. Introduction

1.1 A contemporary view of robotic technology

1.2 Problem background

1.3 Problem formulation

1.4 Research purpose

2. Theoretical framework

2.1 Introduction to theory

2.2 Trust

2.3 Trust in various situations

2.3.1 Trust in humans

2.3.2 Humans trust in technology

2.4 Perception of risks and benefits of technology

2.5 Technology acceptance

2.6 Formation of hypotheses

3. Research design

3.1 Choice of theoretical framework & methodology

3.2 Primary data

3.3 Secondary data

3.4 Project group

3.5 Operationalization

3.6 Analysis design

3.7 Reliability & validity

4. Analysis

4.1 Hypotheses 1 a-b

4.2 Hypotheses 2 a-c

4.3 Hypotheses 3 a-c

4.4 Summary of analysis

5. Discussion

6. Denouements

7. Concluding remarks

7.2 Contribution & Implications

8. References

Appendix 1

Appendix 2

List of Figures & Tables

Figure 1: Trusts influence on perceived benefits and risks ... 11Figure 2: Determinants of perceived risks ... 12

Figure 3: Determinants of acceptance ... 13

Figure 4: Conceptual model/Operationalization ... 18

Figure 5: Correlations ... 20

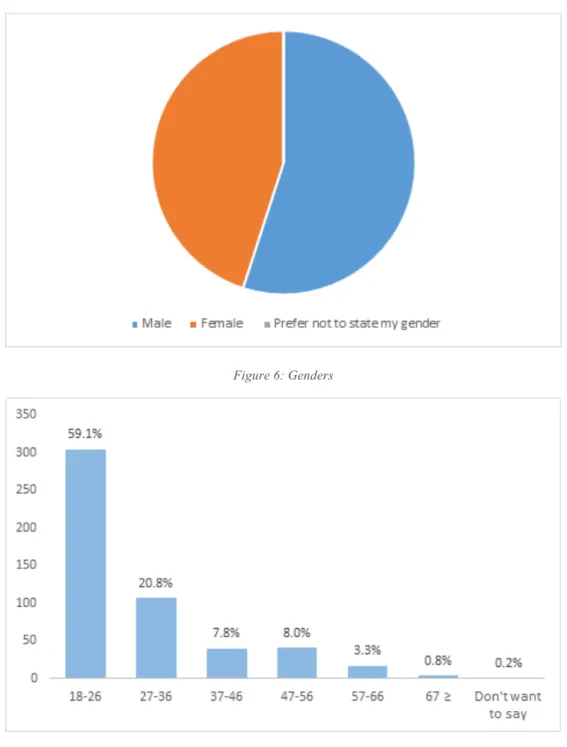

Figure 6: Genders ... Appendix 2 Figure 7: Ages ... Appendix 2 Figure 8: Countries ... Appendix 2 Table 1: Cronbach's Alpha ... 20

Table 2: Hypothesis 1a ... 21

Table 3: Hypothesis 1b ... 21

Table 4: Hypothesis 3a-c ... 22

Table 5: Hypothesis 3a-c (ANOVA) ... 22 Table 6: Descriptive statistics ... Appendix 1

“I make mistakes growing up. I’m not perfect; I’m not a robot.”

- Justin Bieber

1. Introduction

The advancements in robotic technology have not gone unnoticed, and companies are well on their way to replacing much human labor with robots that are more efficient and effective. Although innovative technology has forced humans to adapt in the past, the integration of robotic technology into society is obstructed by ambiguities expressed by the public.

1.1 A contemporary view of robotic technology

Robots stand on the threshold of transforming manufacturing as we know it. Robots have evolved from monotone and expensive machines into agile, resourceful and comparatively cheap investments. Using robots in manufacturing is claimed to enhance product quality and efficiency and is argued to be vital for Europe’s future competitiveness. (PWC, 2014) However, scholars claim that future development of technology is heavily reliant on public acceptance (Siegrist, 2000; Gupta, Fischer & Frewer, 2011).

As engineering progress continues, robots are called upon to do more, above and beyond conventional, monotonous and arduous undertakings such as fusing or handling materials. The advancement in technology also means that robots are now able to acquire more human-like qualifications and characteristics such as skills, senses, memory, the ability to learn and recognition. This means that robots are able to engage in more jobs that can facilitate production of commodities that society desires. (PWC, 2014) After relatively unhurried development in the early 2000s, the sales of industrial robots have skyrocketed in later years. Since the latest financial crisis, the volume of sales has quadrupled. In 2015, 250 000 industrial robots were sold globally, an increase of 12% compared to 2014. (Dagens Industri, 2016)

The automotive industry together with the electronics industry represents the largest consumer of industrial robots, with China representing the largest and fastest growing market. In 2015, it was estimated that 1.5 million robots were in operation at manufacturing facilities around the world. The International Federation of Robotics (2016) predicts this to be closer to 2.3 million in 2018. If that prediction proves true, it corresponds to an increase of more than 50% in just three years. Automation and robots are expected to play a central role in the future, in the so-called “Fourth Industrial Revolution”. More than 1.6 million job opportunities within the manufacturing industry are expected to disappear in the western world during 2015-2020. Concurrently in the USA, the number of industrial workers has fallen from approximately 18 million to around 12 million, a decrease of over 30% over the last 10 years. However, industrial jobs will not be the most affected group in the future, as 4.8 million white-collar jobs are expected to disappear by 2020. Using Sweden as an example, 53% of all jobs could be automated or performed by robots within 20 years. (Dagens Industri, 2016)
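The growth and decline figures quoted above can be checked with simple percent-change arithmetic. The following is a minimal sketch in Python; the function name `percent_change` is an illustrative choice and not taken from the cited sources, and the inputs are the rounded figures given in the text.

```python
def percent_change(old: float, new: float) -> float:
    """Return the relative change from old to new, in percent."""
    return (new - old) / old * 100.0


# Rounded figures quoted in the text, in millions (assumed exact for illustration).
robots_growth = percent_change(1.5, 2.3)       # robots in operation, 2015 vs. 2018 forecast
workers_decline = percent_change(18.0, 12.0)   # US industrial workers over ten years

print(f"Robots in operation: {robots_growth:+.1f}%")
print(f"US industrial workers: {workers_decline:+.1f}%")
```

On these rounded inputs, the forecast corresponds to an increase of roughly 53%, and the fall in US industrial workers to a decrease of about 33%, both consistent with the approximate figures reported in the text.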

People tend to form a subconscious model of what a robot is based on what they have experienced and what they have seen. Acceptance of robots could be influenced by the fairly prototypical stereotype of how robotic advancement will influence society in the future. (Hancock, Billings & Schaefer, 2011) Furthermore, they emphasize how science fiction might have influenced this: “According to science fiction tradition, the robot is meant to appear fundamentally human and to react in a somewhat human manner, receiving voice input and generating actions often via corresponding voice output and the control of human-type limbs. The robot is thus a surrogate human but, of course, not fully human. Therefore, how a robot reacts now, and potentially will react in the future, is contingent on how we perceive its limitations and constraints.” (Hancock, Billings & Schaefer 2011, p.2)

People express fear and ambiguities concerning robotic technology, which shows that there are concerns about negative impacts that are not yet fully known (Jäger, Moll, & Lerch, 2016). An example of the public's ambiguity is the worldwide media coverage of a Volkswagen employee who was killed by an industrial robot (The Guardian, 2015; CNN, 2015; DN, 2015), although workplace-related fatalities are not unusual in the manufacturing industry. This fear of the unknown seems to be something people cannot get enough of, as illustrated by the many motion pictures feeding on the fears displayed by the public (e.g., “Terminator”, “The Matrix”, “I, Robot”, “Transformers” & “WALL-E”). The public's fears of robotic technology have been discussed for a long time, yet they have not been answered to the public's satisfaction (Jäger, Moll, & Lerch, 2016).

Today, automation is cheaper than ever before, and as robots become more effective, the price will continue to fall. More and more companies now need to consider investing in robots instead of humans and are looking at a period of systematic human-resource change as they call for greater human-machine collaboration. (PWC, 2014) Technological innovations that depend upon changes in people's routines need a longer process of development before achieving acceptance (Ram & Sheth, 1989). If the technology is not fully accepted, industries need to prepare for the upcoming implications and find methods to enhance acceptance (Siegrist, 2000). Dennis Helfridsson (2017), Vice President of Robotics at ABB Sweden, emphasizes the impact that ambiguities expressed by the public have on the robotic industry. Helfridsson (2017) stressed the importance of safety in regard to their products. He claimed that ABB continuously endeavors to make their robots as safe as possible, and that safety is always a part of the blueprint in ABB products. (Helfridsson, 2017)

1.2 Problem background

Companies of today are able to use new technological advancements within robotics to enhance their processes to create better, smarter and more efficient products than ever before. It is claimed that wherever automation displaces people in manufacturing it almost always increases output. (IFR, 2016) Despite all the positive traits that accompany the advancements within robotics, there is clear confusion in society. Studies show that there is still rather high disbelief towards robots (Hancock, Billings & Schaefer, 2011; Haring, Matsumoto and Watanabe, 2013; Jäger, Moll, & Lerch, 2016). Despite the recent evolution within robotics, with its progressive improvements and capabilities, the perception of and confidence in robots are shaped more by what we see in films, read online or see on the news than by actual interaction with a physical robot (Haring, Matsumoto and Watanabe, 2013).

Even though there is moderate understanding of the benefits of robots in society, people are hesitant towards the technology (Hancock, Billings & Schaefer, 2011; Haring, Matsumoto and Watanabe, 2013; Jäger, Moll, & Lerch, 2016). While the alleged benefits to a company may be appealing, scholars and implementers argue that the final users, meaning the consumers, repeatedly resist many technological innovations presented by companies. Scholars argue that final users tend not to perceive either the benefits or the risks of innovations in the same way as business managers and planners. (Ellen, Bearden, & Sharma, 1991) Siegrist (2000) proposed that trust in companies that have already implemented gene technology influences how risks and benefits are perceived. In his research, he argued that acceptance of gene technology is influenced by perceived benefits and risks, meaning that trust had a substantial indirect influence on acceptance of gene technology.

Trust can be defined as when one person is assured of an exchange partner’s reliability and integrity (Morgan & Hunt, 1994), and it is a significant component of all relationships and relational exchanges, regardless of the situation (Dwyer, Schurr & Oh, 1987; Morgan & Hunt, 1994). Trust is commonly illustrated as an element in communication between conscious beings. Yet, trust does not need to be bounded by what is commonly considered to be conscious. In regard to robotics, trust can be associated with technology that is incapable of communicating human traits (Hancock, Billings & Schaefer 2011). Still, some prominent scholars contend that trust does not occur between humans and machines. For instance, Friedman, Khan and Howe (2000) contended, “people trust people, not technology” (p. 36). Even though there are some discrepancies between human-technology exchanges and social exchanges, progressively more scholars support the view that humans can and do trust technology. People trust non-human entities every day when driving their cars, flying in airplanes or walking under bridges (Lankton, McKnight & Tripp, 2015). Considering this in the realm of robotic technology, if final users do not trust robotic technology and perceive it as beneficial, it could negatively impact the acceptance of the technology. This means that companies that rely on robotic technology need a deeper understanding of the determinants that influence acceptance.

1.3 Problem formulation

Companies active in the robotic industry, as well as other industries, pay attention to the needs of their customers, forming the entire value chain around the needs of the end consumer (Brumson, 2001). Hence, the consumers’ possible rejection of products from companies that utilize robotic technology impacts the future development of robotic technology. Gupta, Fischer & Frewer (2011) claim that there are several determinants of acceptance, although certain determinants tend to have greater influence on public acceptance than others. The association with, and knowledge about, certain determinants of a specific technology are necessary to examine, as it would facilitate the understanding as well as the prediction of technology acceptance (Gupta, Fischer & Frewer, 2011). The future competitiveness of western companies is claimed to rely on robotic technology (PWC, 2014). As the importance of robotic technology increases, companies will face difficulties if the public does not accept this technology. Trust and the perception of risks and benefits are argued to be of immense importance for acceptance of technology (Gupta, Fischer & Frewer, 2011), although this remains relatively unexplored in the realm of robotic technology. People's perception of robots is influenced by what they see in movies or read in the news (Hancock, Billings & Schaefer, 2011; Haring, Matsumoto and Watanabe, 2013). People might perceive advantages of robotic technology, but reports of workplace fatalities, apocalyptic robot movies and news regarding companies that lay off humans in favor of robots might raise public concerns. It is vital to know which determinants have an impact upon perceived risks in order to reduce the uncertainties expressed by the public (Gupta, Fischer & Frewer, 2011).

1.4 Research purpose

The aim of this paper is to enhance understanding of how trust, perceived risks and perceived benefits influence public acceptance of robotic technology.

To achieve this purpose, the paper will be based on the following research questions:

• How do trust, perceived risks and perceived benefits influence acceptance of robotic technology?

• How does the public perceive ambiguities regarding their jobs, safety and future when making their judgment about robotics?

2. Theoretical framework

Siegrist (2000) presented a recognized model for acceptance of gene technology. This model has been used as inspiration; it has been modified and put in a different context to investigate the acceptance of robotic technology. To be able to investigate the acceptance of technology, each concept has been thoroughly examined. The theories are used to give a comprehensive view of trust, perceived benefits and perceived risks as determinants of acceptance. An overview of the conceptual model used in this paper is presented in Figure 4, section 3.5.

2.1 Introduction to theory

Morgan & Hunt (1994) argue that companies should aim to establish, develop and maintain successful relationships. Business is to be seen as a relational exchange rather than a discrete transaction of short duration (Dwyer, Schurr & Oh, 1987). The strategic view is that rather than manipulating the customer, a company must focus on generating genuine customer involvement by communicating and sharing knowledge (McKenna, 1991). Successful relational exchange reduces uncertainty and enhances social satisfaction among the public (Dwyer, Schurr & Oh, 1987). Failure or success of relationship marketing efforts is affected by several contextual factors. Customers need to believe that the company can be trusted. Customers must be able to identify with and share the values of the partnering company and perceive that the relationship is coherent with moral obligations. Furthermore, relationships require significant transfer of technology and knowledge sharing. (Hunt, Arnett, & Madhavaram, 2006; Biggemann, 2012) Morgan and Hunt (1994) claim that trust and commitment are pivotal to success, as the global economy requires companies to be a trusted cooperator in order to be an adequate competitor.

2.2 Trust

Trust is a widely researched area, with many prominent scholars having their own definition of what they conceive trust to be. Several economists, psychologists, sociologists and management scholars agree on the value of trust in the handling of human relationships. (Hosmer, 1995) There is broad agreement on the value of trust, but there is also considerable disagreement on the definition of the concept. Berry (1993) emphasized, “Trust is the basis for loyalty” (p.1). Schurr and Ozanne (1985) believe trust to be paramount in attaining coordinated problem solving and productive communication. Trust is so essential to relational transactions that Spekman (1988) proposes it to be “The cornerstone of the strategic partnership” (p. 79). Morgan and Hunt (1994), known for their commitment-trust theory of relationship marketing, consider trust to be evident when “One party has confidence in an exchange partner’s reliability and integrity” (p.23). In the same direction, Moorman, Deshpandé and Zaltman (1993) stated, “Trust is defined as a willingness to rely on an exchange partner in whom one has confidence.” (p. 82). The literature on trust in relationships proposes that a trustworthy relationship is dependent on variables such as reliability and integrity (Morgan & Hunt, 1994). Trust has over time been portrayed as the core of buyer-seller transactions that provides confidence in a satisfactory outcome in exchange relationships. Trust is a representative component of most general and commercial communications where ambiguity appears. (Pavlou, 2003)

Stewart, Pavlou and Ward (2002) contend that trust is paramount in consumer-marketing communication. Trust has consistently been a decisive factor in affecting consumer behaviour and has been shown to be highly important in ambiguous situations (Pavlou, 2003). Pavlou (2003) further argues that trust is essentially only required in uncertain environments, because trusting effectively means assuming risk and becoming vulnerable to the trusted party. Thus, trust is associated with the level of uncertainty related to a certain situation (Pavlou, 2003). A study performed by Sherman (1992) noted that a third of strategic alliances fail because of a lack of trust between exchange partners and that the most significant barrier to success is the absence of trust. In addition to the previous quote by Morgan and Hunt (1994), the result of trust is a “Firm’s belief that another company will perform actions that will result in positive outcomes for the firm as well as not take unexpected actions that result in negative outcomes” (Anderson & Narus, 1990, p.45). Parties in relationships with a high level of trust tend to accept more risk and are more prone to expose themselves to uncertainty than parties in low-trust relationships. High-trust relationships also tend to show improved overall performance as mistrust drops. (Kwon, 2004) The concept of trust has been shown to be highly applicable to almost all situations and types of relations. Businesses are increasingly attracted to the development of long-term and mutually profitable relationships with everyone from other organizations to customers and their own employees. This applies to organizations selling to other businesses, pro bono and governmental agencies and even final consumers. A fundamental factor in accomplishing these long-term relationships is the formation, establishment and maintenance of trust. (Kennedy, Ferrell & LeClair, 2001) From an international perspective, trust has been shown to be one of the more difficult concepts to achieve. Kennedy, Ferrell & LeClair (2001) noted that both Fukuyama (1995) and Hosmer (1995) observed the importance of societal-level trust in the buildup of mutually satisfying relationships. In the buildup of long-term relationships between buyers, sellers and other transaction partners such as manufacturers, trust has been shown to be pivotal (Kennedy, Ferrell & LeClair, 2001).

2.3 Trust in various situations

There is a distinction between what characterizes human beings and machines. Humans are conscious creatures, influenced by their emotional and rational thoughts throughout their decision-making process. However, it is argued that emotions have the strongest influence on human behavior. Behavior may arise from analytic or analogic judgments, where societal norms together with public opinions in one’s environment influence the analogic thought process. Analytical, rational judgment is possible when sufficient cognitive resources are accessible. However, when cognitive resources are limited, people tend to make quick, automatic judgments outside of their consciousness based on the analogical process. (Lee & See, 2004; Hoff & Bashir, 2015)

Machines are defined in the Cambridge dictionary as “A piece of equipment with several moving parts that uses power to do a particular type of work” ("Machine", 2017). This means that a machine is a product without consciousness, constructed to work in a certain way by the intent of its creator. Its outcomes are intended to enhance capabilities and broaden human possibilities. Furthermore, robots are defined in the Cambridge dictionary as “A machine controlled by a computer that is used to perform jobs automatically” ("Robot", 2017). Hence, a robot is nothing but a machine without consciousness, programmed to perform tasks. Hassenzahl (2001) describes the relation between humans and products as distant. He continues to argue that products are characterized by various quality dimensions grouped into two apparent quality aspects. These aspects refer to the underlying causes of product affection and are hence decisive for establishing a valuable relation. For a product to be accepted, it is crucial that people perceive the product's intention without uncertainty. (Hassenzahl, 2001)

As early as 1950, Alan Turing posed the question “Can machines think?” (p. 1) To address it, he developed the “Turing test” to measure a machine's ability to exhibit intelligent behavior similar to or indistinguishable from that of a human. During the test all participants were separated and an evaluator would attempt to determine which participant was human and which was a computer. Turing predicted that within 50 years an average interrogator would not have more than a 70% chance of making the correct identification. (Turing, 1950) Today, almost 70 years after Turing’s research, technological advancements have meant that machines have taken on more traits that are considered human, as robots are now capable of sensing, dexterity, memory and object recognition. (PWC, 2014) Robotic technology is argued to differ from general existing products and machines. The behavior and movements of robotic technology impact the mental and reflective attitudes that people form when interacting with it. (Oh & Kim, 2010)

Humans’ relationship with technology tends to be affected by the technology’s resemblance to humans. Certain aspects of technology and how users interact with it could make technology more or less similar to humans (Lankton, McKnight & Tripp, 2015). In the context of robotics, the visual appearance of robotic technology influences how humans predict its movements. The actions of a more human-like robot appeared easier to foresee, even though the comparison robot was constructed using identical mechanisms and programming. Human-like design draws expectations of biological motion due to a lifetime of experience of human interaction. Lack of experience with robotic technology generates lowered expectations of smooth, biological motions, and the internal thought process becomes biased. In the research conducted by Saygin & Stadler (2012), a robot with less human-like characteristics appeared slower to people than a robot with more human-like characteristics. (Saygin & Stadler, 2012)

2.3.1 Trust in humans

The concept of trust appears in various branches of research. Researchers from philosophy, political science, psychology, sociology and economics have tried to interpret trust and have constructed ways to conceptualize the term. Seemingly, academics are far from agreeing on a single definition of trust. An essential commonality between the various fields of research is that practically all definitions of trust incorporate three elements. First, there must be a trustor to grant trust, a trustee to receive it, and an object that can be lost. Second, the trustee needs to have a motivation, a stimulus to complete the assignment. The stimulus can vary widely, from financial compensation to benevolent acts. Finally, there must be a possibility that the trustee will fall short in performing the assignment, entailing ambiguity and uncertainty. These components form the framework for the idea that trust is essential when an object is traded in a relation characterized by ambiguity. (Lee & See, 2004; Hoff & Bashir, 2015)

Trust comprises both feelings and thoughts; however, emotions are the dominant explanation of trusting behavior (Lee & See, 2004). For instance, people often choose not to trust another person because of suspicions that they are unable to justify logically.

Affective processing cannot be overlooked when trying to understand trust formation and the resulting behavior. Without emotions and judgment, trust would not have such a considerable influence on human behavior. Even though many people pay no attention to it, humans reflexively make assessments and judgments about the dependability of the people they meet. These split-second judgments are not one hundred percent correct, although humans have an exceptional capacity for assessing the trustworthiness of others by using subtle cues. (Lee & See, 2004; Hoff & Bashir, 2015)

2.3.2 Humans trust in technology

Even though trust in technology differs from trust in humans, Hoff and Bashir (2015) argue for the resemblance between the two. At the most elemental level, these two types of trust are comparable in that they both represent situation-specific attitudes that are only applicable when something is traded in a relation characterized by ambiguity. In addition to this theoretical similarity, Hoff & Bashir (2015) uncovered more concrete analogies. People apply social rules, such as kindness, when communicating with machines. As with interpersonal trust, trust plays a prominent role in determining humans' willingness to rely on automated systems in environments characterized by ambiguity. Mishaps do happen when users mismanage automation by over-relying on it, or misuse it because they under-trust it. Facilitating appropriate trust in automation is decisive for improving the safety and productivity of human-automation interaction. Regardless of how human-automation trust is characterized, significant differences exist between it and interpersonal trust concerning its basis and form. (Hoff & Bashir, 2015)

Having confidence in technology, or assuming that it has desirable qualities or is trustworthy, is justifiable since people engage in trusting non-human entities in their daily lives. Hence, people trust technology. (Lankton, McKnight & Tripp, 2015) Trusting airplanes, cars or the Internet is nothing new; people depend on these technologies to operate properly so they can travel safely and proceed with their daily routines. According to Lankton, McKnight & Tripp (2015), literature and scholars have shown the breadth of trust in technology and its central benefits. However, they argue that previous research is very inconsistent with regard to what the constructs of technology-trusting beliefs are. Previous research has portrayed and measured trust in technologies as if the technology were human. In other words, researchers like Vance, Elie-Dit-Cosaque and Straub (2008) have measured trust in technology by adopting human-like trust constructs. Hoff & Bashir (2015) state these human constructs as the ability, integrity or benevolence of a trustee. Mayer, Davis & Schoorman (1995) explain these as factors of trustworthiness. Ability is, according to Mayer, Davis & Schoorman (1995), the group of skills, competencies and characteristics that permit a party to have influence in a specific area of expertise. The sphere of the ability is specific, because the trustee may be proficient in a certain area of expertise, allowing that person to be trusted with assignments linked to that area. The relation between integrity and trust is associated with the trustor's perception that the trustee abides by a set of fundamental principles that the trustor values. If a trustee does not adhere to the trustor's set of principles, the trustee would not be presumed trustworthy (Mayer, Davis & Schoorman, 1995). Benevolence is the extent to which a trustee is devoted to the trustor, i.e., wants to do good. Benevolence suggests that the trustee has a unique connection to the trustor. Benevolence is the perception of a positive orientation of the trustee towards the trustor. (Mayer, Davis & Schoorman, 1995) Adopting an incorrect trust construct could be misleading and could cause disorientation and uncertainty due to a mismatch between the construct and the technology being evaluated (Lankton, McKnight & Tripp, 2015).

2.4 Perception of risks and benefits of technology

The concept of “risk” became prominent in the 1920s in the field of economics (Dowling & Staelin, 1994). Since then, it has been used extensively in theories in multiple fields. The field of marketing got its first addition in the 1960s when Bauer (1960) introduced the concept of perceived risk. The concept of perceived risk is often used in consumer research to define risk in circumstances regarding consumer perception of uncertainty and adverse consequences of using a service or buying a product. What this essentially means is that the probability and outcome of an event are uncertain. (Dowling & Staelin, 1994) At present, risk managers have become keenly aware that in democratic countries, perceptions of a technology’s benefits and risks are decisive elements of political processes, from fundamental arrangements to the development of a specific product or technology, to the approval of different management approaches and risk reduction. The acceptance of technology perceived as uncertain could be raised by identifying and emphasizing its benefits. (Siegrist, Cvetkovich & Roth, 2000) However, inverse correlations between assessments of risks and benefits exist for various technologies (Alhakami & Slovic, 1994). Multiple explanations for the observed associations have been provided. Alhakami & Slovic (1994) further argue that people determine risk by general attitudes (favorable or unfavorable). If people are consistent in their judgments, this results in lower perceived risks and higher perceived benefits for technologies perceived as favorable, whereas technologies perceived as unfavorable would work in the opposite way, culminating in higher perceived risks and lower perceived benefits for the specific technology. However, people are seldom consistent in their judgments, meaning that people tend to weigh both risks and benefits in their reasoning. (Alhakami & Slovic, 1994)

Frewer, Howard & Shepherd (1998) hold that the favorability of a given technology is shaped by perceptions of benefits and risks that are functionally interconnected. They further argue that it may be possible to alter perceived risks by modifying perceptions of benefits. Evidence exists that confidence in the laws regulating a technology and social trust in the organizations using it are essential for perceiving the technology as safe and acceptable (Siegrist, 2000). The common man does not possess a refined understanding of technology. Based on empirical data, Miller (1998) concluded that, as a result of inadequate knowledge and interest, the majority of people do not estimate the risks and benefits associated with various technologies. The public's attitudes and reactions towards new technology are influenced by the confidence and trust people have in governmental agencies and companies. A study by Siegrist & Cvetkovich (2000) investigating the perception of hazards found support for the view that trust becomes decisive when there are limited resources for taking action and making decisions. Siegrist & Cvetkovich (2000) further claim that the contrast between experts and laymen is of little surprise, since the common man's view of the outcome of risks and benefits generally differs.

The purpose of trust is to reduce the complexity that people encounter. Rather than making rational judgments based on knowledge, people rely on social trust to select people with expert knowledge whom they perceive as credible and truthful. Empirical investigations have demonstrated that social trust is a meaningful element in relation to technologies (Siegrist & Cvetkovich, 2000). In contrast, Sjöberg (1999) disputes that trust is a decisive element in the perception of risk. Sjöberg (1999) noted that trust in politicians (e.g., overall trust in governments) does not notably predict perception of long-term risk and that the explanatory power of trust depends on how it is operationalized. Sjöberg (1999) believes that social trust is limited in its explanation of perceived risk, nor is it of any theoretical or practical value. Inverse relations between estimated benefits and risks have been noted for various technologies. People who perceive a technology as highly beneficial rate its risks lower than people who perceive it as less valuable (Alhakami & Slovic, 1994). Findings have shown that mean perceived risk is strongly associated with mean perceived benefit: technologies considered valuable were judged to carry lower risk than technologies perceived as not beneficial. On this basis, it has been argued that it may be possible to alter perceived risks by modifying the perception of benefits (Frewer, Howard & Shepherd, 1998). A different interpretation of the relation between perceived benefits and risks has been proposed by Siegrist, Cvetkovich & Roth (2000), who suggest that social trust simultaneously affects both perceived benefits and risks. Usually, the associated benefits and risks are not explicit and, as a consequence, people choose to trust knowledge provided by reputable sources (Siegrist & Cvetkovich, 2000).

2.5 Technology acceptance

People undergo a psychological process when forming their judgments about technology. The outcome variable of this process has been conceptualized as acceptance. (Gupta, Fischer & Frewer, 2011) Dillon & Morris (1996) claim that acceptance needs to be considered as the starting point of technological development. Practitioners and scholars are interested in how and why people accept technology in order to find better methods for designing, evaluating and predicting how people will react to new technology (Gupta, Fischer & Frewer, 2011). If technologies are not accepted, people seek alternatives and express anxiety (Dillon & Morris, 1996). Hence, new technologies that enhance the efficiency and effectiveness of work processes risk never reaching their full potential simply because they are rejected (Godoe & Johansen, 2012). The rejection of technologies and the factors affecting public acceptance have attracted significant interest from scholars in social and behavioral research (Gupta, Fischer & Frewer, 2011). A considerable amount of research on acceptance, however, concerns the actual user (e.g. Fishbein & Ajzen, 1975, 1991; Davis, 1986).

Acceptance of technology is claimed to be influenced by several different determinants. Gupta, Fischer & Frewer (2011) reviewed this research area and mentioned ten technologies that were prominent: genetic modification, nuclear power, information and communication technology, mobile phones, chemicals used in agriculture, genomics, cloning, hydrogen technology, radio frequency identification technology and nanotechnology. Studies of acceptance have become increasingly sophisticated as the number of determinants investigated has increased. Classical determinants mentioned are, for example, trust, knowledge, risk perception, benefit perception and individual differences. Of risk perception and benefit perception, risk has received more attention. Hence, risk perception is claimed to be of greater importance than benefit perception as a determinant of consumer acceptance. (Gupta, Fischer & Frewer, 2011)

2.6 Formation of hypotheses

As previously mentioned, the development of technology relies on public acceptance (Siegrist, 2000; Gupta, Fischer & Frewer, 2011). What determines acceptance of technology is a research area that has grown considerably more sophisticated. Known determinants of acceptance tend to vary in influence depending on the technology. (Siegrist, 2000; Gupta, Fischer & Frewer, 2011) The future competitiveness of western companies is claimed to depend on robotic technology (PWC, 2014; Dagens Industri, 2016). Hence, knowledge about the determinants of acceptance of robotic technology is of significant value.

The importance of trust is repeatedly emphasized by scholars (Schurr & Ozanne, 1985; Spekman, 1988; Berry, 1993; Morgan & Hunt, 1994; Hosmer, 1995; Stewart, Pavlou & Ward, 2002). In settings where people tend to be doubtful and anxious, the importance of trust increases (Pavlou, 2003). Siegrist (2000) argues that when people lack sufficient knowledge about technologies, they rely on trust to manage anticipated risk. Furthermore, people who establish trust in scientific and technological capabilities display less concern about hypothetical threats. Siegrist’s (2000) proposal is still highly regarded among acceptance scholars; however, almost 20 years have passed since its publication. Today, as Europe’s future competitiveness depends heavily on robotic technology (PWC, 2014; Dagens Industri, 2016), the question of how trust influences acceptance of robotic technology remains relatively unexplored.

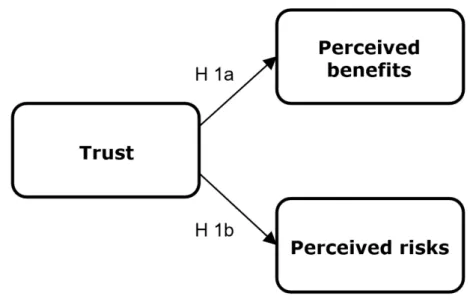

Based on previous research presented above, the following hypotheses are generated:

• H 1a. Trust in robotic technology influences people’s perception of benefits

• H 1b. Trust in robotic technology influences people’s perception of risk

Figure 1: Trust’s influence on perceived benefits and risks (Own creation)

Apprehension about the risks of a technology affects the level of acceptance (Frewer, Howard & Shepherd, 1998; Siegrist, 2000). Perception of risk is often used in consumer research regarding uncertainty and adverse consequences when using a certain product, service or technology, meaning that the technology’s outcome is uncertain (Dowling & Staelin, 1994). As people's interaction with robot technology is fairly limited (Haring, Matsumoto and Watanabe, 2013), most people do not have sufficient knowledge to estimate risks and benefits related to various technologies (Siegrist & Cvetkovich, 2000). As a result, people form their beliefs about benefits and risks based on what they see in movies or read online (Hancock, Billings & Schaefer 2011). Reports of workplace fatalities, apocalyptic robot movies and companies that lay off humans in favor of robots might raise public concerns. Based on reviews of secondary sources, three concerns were identified as determinants (sub-variables) of perceived risk: jobs, safety and the future.

Based on previous research presented above, the following hypotheses are generated:

• H 2a Risks associated with jobs are significant in relation to perceived risks of robotic technology

• H 2b Risks associated with safety are significant in relation to perceived risks of robotic technology

• H 2c Risks associated with future are significant in relation to perceived risks of robotic technology

Figure 2: Determinants of perceived risks (Own creation)

Despite the positive traits that accompany advances in robotics (PWC, 2014; Dagens Industri 2016), there is clear confusion in society. There is moderate understanding of the benefits of robots, yet people are hesitant towards the technology (Jäger, Moll, & Lerch, 2016; Hancock, Billings & Schaefer, 2011; Haring, Matsumoto and Watanabe, 2013). Siegrist, Cvetkovich & Roth (2000) argue that acceptance of a technology perceived as uncertain could be raised by identifying and emphasizing what influences acceptance. Acceptance of technology is claimed to be influenced by several different determinants, of which perception of risks and perception of benefits have been claimed to be the most important (Gupta, Fischer & Frewer, 2011). Siegrist (2000) argued that perceived risks and perceived benefits had a significant impact on acceptance of gene technology, and that trust in turn affects these two determinants. Hence, trust has an indirect influence on acceptance of gene technology. As mentioned earlier, research on the determinants of acceptance of robotic technology remains relatively uncharted. Thus, Siegrist’s (2000) conception of trust’s indirect influence on acceptance is applied in a new, contemporary context almost 20 years later. Based on previous research presented above, the following hypotheses are generated:

• H 3a Perceived benefits influence acceptance of robotic technology

• H 3b Perceived risks influence acceptance of robotic technology

• H 3c Trust indirectly influences acceptance of robotic technology

3. Research design

Following the research methodology of Siegrist (2000), a quantitative study was carried out using an online survey. The sample consisted of a cross-national data set with a total of 514 respondents, which was analyzed using simple and multiple linear regression.

3.1 Choice of theoretical framework & methodology

To convey the importance of acceptance for the future development of robot technology, the concept has been interpreted and described. This paper is influenced by the theoretical framework proposed in Siegrist’s (2000) acknowledged research on gene technology. Considering the time that has passed since Siegrist’s (2000) research was published, and the immense technological and societal progress made since then, this research modifies and applies Siegrist’s (2000) model to a contemporary context concerning robotic technology that, to this day, remains relatively unexplored.

Siegrist (2000) and Gupta, Fischer & Frewer (2011) claimed that perceived risks and perceived benefits should be considered important determinants of acceptance. However, these determinants have not been widely researched in the context of robotic technology and were therefore considered to be of interest in this paper. Theories regarding trust have been included since a lack of knowledge about a certain technology increases the influence of trust (Siegrist & Cvetkovich, 2000). The concept of trust is interpreted to provide an explicit overview. The most influential papers on this subject concern trust towards humans. Some people tend to see robotic technology as a form of technology that possesses more human traits than other technologies. For that reason, trust in various contexts has been portrayed, as there is a distinction between what characterizes trust towards technology and trust towards humans.

As mentioned earlier, this paper modified and applied a research model created by Siegrist (2000). To maintain the reliability of Siegrist’s (2000) research model, the methodology used in this paper follows that research.

3.2 Primary data

Siegrist (2000) utilized a quantitative method in his research, collecting data using surveys. Accordingly, primary data in this research was collected mainly through surveys. Additional information was collected from Dennis Helfridsson, Vice President of Swedish robotics maker ABB Robotics, during a company visit in March. The survey was intended for English-speaking respondents and was distributed digitally over two weeks in April 2017 using the online survey tool Artologik Survey & Report. As researchers are not able to directly aid respondents who have concerns or issues (Bryman & Bell, 2013), the questions were simplified and a “Do not know/Does not apply” option was added to counterbalance any misinterpretations. In-depth responses may not be attainable since the questionnaire cannot be too long. Nevertheless, surveys entail several benefits. The administration of surveys is uncomplicated and takes considerably less time. The gathered data is also more manageable, since an online survey can reach many respondents simultaneously while the responses arrive gradually. (Bryman & Bell, 2013)

Surveys, in contrast to qualitative interviews, do not induce any interviewer effect, that is, the interviewer cannot influence the respondent in any manner. A cross-national dataset is also easier and cheaper to retrieve using online surveys. (Bryman & Bell, 2013) Surveys should have a proper design with language that the respondents comprehend and should not contain any open questions. This survey followed the guidelines provided by Bryman and Bell (2013), which made it possible to avoid some of the negative aspects related to surveys.

The terminology was simplified and only closed questions were used. Response options were organized horizontally to save space, as the survey contained a total of 82 questions. The survey used a 7-point scale to facilitate coding, including one neutral option (Bryman & Bell, 2013). Respondents who marked higher numbers (5-7) indicated a more positive response towards robotic technology. It should be emphasized that some questions were reversed to correspond with the scale.

3.2.1 Sample

The sample of this study is a convenience sample, a form of nonprobability sampling in which currently available respondents were asked to participate. Snowball sampling was used as a way of increasing the quantity and spread of responses, meaning that some respondents were asked to forward the survey. It should be emphasized that respondents recruited through snowball sampling have varying chances of affecting the sample. As a consequence of nonprobability sampling, the results of this study cannot be generalized and do not represent the opinions of the entire population. (Bryman & Bell, 2013) Demographic questions were added in order to provide an overview of the sample. The spread of the sample is visualized in appendix two. Data was collected from a total of 514 respondents, of whom 55% were male and 45% female. No respondent chose the third alternative provided. A substantial portion of the respondents were between the ages of 18 and 26. The second largest age group consisted of people between the ages of 27 and 36. Thus, respondents over the age of 37 represented only a small portion of the sample. Respondents from 44 different countries are represented in the survey. Although the sample is cross-national, a considerable portion of the respondents were Swedish (42.2%) or Dutch (20.6%).

3.2.2 Response rate

Distribution of the survey was divided among the groups within the project group. The larger number of distributors made it easier to secure a larger and more geographically spread data set. The survey was distributed to a total of 885 potential respondents, some of whom forwarded it to others. After the data collection period, a total of 514 respondents had taken part in the survey. The response rate was thus 58% of those approached.

3.3 Secondary data

To find literature suitable for the purpose of the research, a review of published literature has been carried out. The intention of the review was to scrutinize existing knowledge in the area of research and single out theories and findings relevant to the intended research. Multiple search words related to the research conducted by Siegrist (2000) were used. As mentioned by Zou and Stan (1998), it is imperative to tune the terminology used in the area of interest. The use of alternate search words can contribute to other and wider findings (Zou & Stan, 1998). Hence, the search words were combined with robotic technology. The frequently searched terms were: Trust, Perceived risk, Perception, Robots, Robotic Technology, Distrust, Perceived benefits, Jobs, Safety, Future and Acceptance. These words have been searched for individually as well as in multiple combinations.

Scientific sources have predominantly been used for secondary data. These were published in eminent scientific journals (Journal of Marketing, Journal of Consumer Research & Journal of Business Research) and had been scrutinized before publication, which adds to the credibility of the information. The journals and publications used have been collected from credible databases such as Google Scholar, ABI/INFORM Global and DIVA.

Apart from the sources mentioned above, data retrieved from different newspapers reporting on current events has been used to widen the understanding of robotics. Data acquired from mass media can often constitute reliable and credible sources suitable for social-scientific research (Bryman & Bell, 2013). The content has been thoroughly reviewed, using the guidelines provided by Bryman & Bell (2013), to ensure that the authors are truthful and have sufficient knowledge within the specific area. Nonscientific sources have not been used to support any results or endorse conclusions. The statistical data used in this paper has been supplied by credible sources such as PwC, the European Commission and the International Federation of Robotics (IFR). The numbers used were up to date, and the aim was to minimize the risk of inaccuracy. The entire secondary data set has been closely examined for mistakes or biased material from the authors. Only confirmed and proven models and theories have been used in this thesis to analyze and endorse results. The use of confirmed theories reduces the risk of presumptions and inaccurate analysis, which leads to a more trustworthy and reliable discussion and a credible conclusion.

3.4 Project group

This study was conducted in a project group consisting of 6 students divided into three smaller groups. Each group within this project investigated problems connected to robotic technology. The survey that serves as a foundation for this study was jointly created and distributed by the project group. The number of questions for each group was limited because overly lengthy questionnaires might confuse the respondents and increase the risk of losing them (Bryman & Bell, 2013). The limited number of questions allocated to each group placed a small constraint on the depth of this survey. However, being part of a group project entailed benefits that outweighed the negatives. Group discussions have been immensely valuable and each group has been knowledgeable in its area of research. The spread of the survey also benefited from the larger number of distributors. Hence, the sample consists of a larger number of respondents and a wider cross-national spread than would probably have been attainable with fewer distributors.

3.5 Operationalization

For this study three demographic questions were used to identify gender, age and country of origin. The demographic questions was used to give an overview of the spread of the survey and acted as an

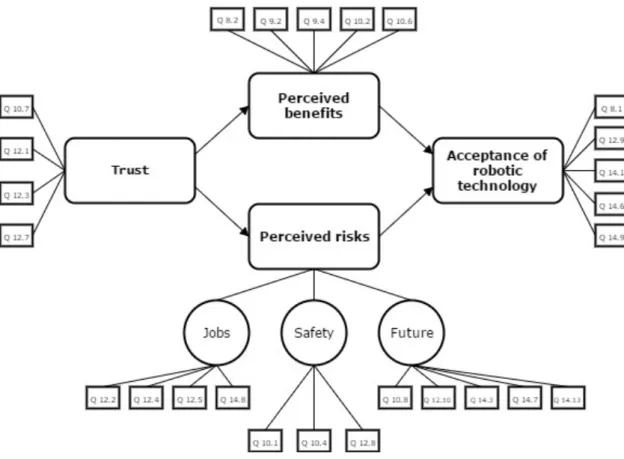

introduction. The conceptual model consists of four main variables from already existing research regarding acceptance to ensure high validity. Trust, Perceived benefits and Perceived risks are independent variables whereas Acceptance serves as dependent variable. The variable “Perceived risks” have been divided into three sub-variables in order to measure each determinants impact on perceived risk. The creation of the sub-variables is inspired by ambiguities in society based on reviews of secondary sources, such as reports, scientific journals and media. Since the sub-variables are of own creation, it could impact the reliability. Howbeit, the apprehension is that the sub-variables succeed in covering the ambiguities reflected in society.

All questions have been inspired by the research conducted by Siegrist (2000). Drawing on preceding research and surveys is viewed positively from a reliability standpoint (Bryman & Bell, 2013). Below is a closer description of each variable, followed by a detailed model to clarify the survey’s connection to the theoretical framework. All questions used for the constructs can be found in section 3.7, table 1.

Trust (10.7, 12.1, 12.2, 12.7) Aims to measure the level of trust the individual has when thinking about robotics or potential interaction with robotics. The questions aim to determine whether the individual would trust robotic technology to help them and/or society.

Perceived Benefits (8.2, 9.2, 9.4, 10.2, 10.6) Reflects that acceptance of technology is often based on individual perception. By identifying and emphasizing the benefits of technology, it could be possible to alter the perceived risk associated with it. The questions cover benefits in the individual’s personal life and work life as well as the perception of robot-made products.

Perceived Risks - Jobs (12.2, 12.4, 12.5, 14.8) Aims to investigate public opinion on the potential replacement of human labor by robotic technology. Robots are replacing humans in tasks that they can perform more efficiently or that are considered dangerous for humans.

Perceived Risks - Safety (10.1, 10.4, 12.8) Aims to measure current and potential safety issues with robotic technology. The questions include direct as well as indirect safety issues.

Perceived Risks - Future (10.8, 12.10, 14.3, 14.7, 14.13) The questions aim to measure the individual's perception of a future society that contains more robots. The rationale is that the future is unknown, but individuals might have been influenced by media in their predictions.

Acceptance of robotic technology (8.1, 12.9, 14.1, 14.6, 14.9) The questions determine whether the individual accepts robot technology and considers the technology good for society. They also aim to measure whether the individual prefers companies that embrace robotic technology.

Figure 4: Conceptual model/Operationalization (own creation)

3.6 Analysis design

The gathered data from the survey will be analyzed using simple and multiple linear regression analysis in order to draw conclusions about the population. Descriptive statistics are provided in Appendix 1 to portray the sample. (Lind, Marchal & Wathen, 2013) Questions from the survey have been merged into constructs to properly analyze the data. In total, four main constructs have been made: Trust, Perceived risks, Perceived benefits and Acceptance. Jobs, Safety and Future are all part of Perceived risks and have been treated as three sub-variables, as the intention was to measure perceived ambiguities expressed by the public.

When the constructs were created, Cronbach’s Alpha was calculated for each. Cronbach’s Alpha is a statistic between 0.00 and 1.00 that estimates the reliability of a test. This is also called internal consistency and should be considered before further correlation tests are performed. (Pallant, 2010) A higher correlation among the items within a construct yields a higher Cronbach’s Alpha. Santos (1999) argues that a construct is sufficient when Cronbach’s Alpha is above 0.6. In line with DeVon, Block, Moyle-Wright, Ernst, Hayden, Lazzara and Kostas-Polston (2007), construct validity is improved by the use of appropriately operationalized theories and concepts. It is important to ensure that the questions used in the constructs measure what they are intended to measure. Cronbach’s Alpha calculations are presented in table 1.

Spearman’s Rho has been applied in this study to calculate the correlation between variables. The correlation coefficient describes how strong the relationship between the variables is. It is a number between -1.00 and +1.00, where positive values express a positive correlation and negative values a negative one. A correlation of +/- 0.10-0.29 is considered weak, +/- 0.30-0.49 moderate and +/- 0.50-1.00 strong (Pallant, 2010). The correlations calculated for this research are presented in figure 5. Other measures presented in this paper are R², which is described as the proportion of variability in the dependent variable explained by the independent variable, and beta (β), which displays the strength of the effect of each independent variable on the dependent variable: the larger the absolute value of β, the stronger the effect. (Lind, Marchal & Wathen, 2013)
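The construct-building step described above can be sketched in a few lines. This is only an illustration under stated assumptions: the answers are hypothetical, the third item is assumed to be a reversed question on the 7-point scale, and the names `reverse_score` and `cronbach_alpha` are our own and not part of any survey tool. The formula used is the standard one, α = k/(k-1) · (1 - Σ item variances / variance of the summed scores).

```python
from statistics import variance

SCALE_MAX = 7  # the survey used a 7-point scale


def reverse_score(answer, scale_max=SCALE_MAX):
    """Flip a reversed item so that high values again mean a positive response."""
    return scale_max + 1 - answer


def cronbach_alpha(rows):
    """Cronbach's alpha for a construct.

    rows: one list of item scores per respondent, items in the same order.
    """
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical raw answers of four respondents to a three-item construct;
# the third item is assumed to be worded negatively (reversed).
raw = [[5, 6, 3], [3, 4, 6], [6, 6, 1], [2, 3, 5]]
scored = [[a, b, reverse_score(c)] for a, b, c in raw]

alpha = cronbach_alpha(scored)
print(f"Cronbach's alpha: {alpha:.3f}")
print("sufficient (> 0.6, Santos 1999):", alpha > 0.6)
```

How “Do not know/Does not apply” answers were treated is not specified here, so the sketch deliberately assumes complete answers only.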

3.7 Reliability & validity

The results shown below in table 1 display the strength of each construct, measured using Cronbach’s Alpha. In accordance with Hair et al. (2006), the aim was that the variables should be above 0.5.

The questions used in this study have been inspired by previous research on variables that affect acceptance of technology. However, they have been adapted to fit the context of robotics.

| Construct | Questions | Cronbach’s Alpha (α) |
|---|---|---|
| Trust | 10.5: Robots are reliable | 0.659 |
| | 12.1: I wouldn’t mind talking to a robot instead of a human when buying things | |
| | 12.3: Robots are a good thing for society | |
| | 12.7: I would trust the advice of a robot | |
| Perceived risks - Jobs | 12.2: Robots are necessary as they can do jobs that are too hard or too dangerous for people | 0.719 |
| | 12.4: Robots should replace human labor if this is more effective/productive | |
| | 12.5: Widespread use of robots can boost job opportunities | |
| | 14.8: I like that robots could possibly be in many sectors | |
| Perceived risks - Safety | 10.1: I think robots are safer than humans | 0.620 |
| | 10.4: I would feel safe working close to a robot | |
| | 12.8: Robots will keep human interests in mind | |
| Perceived risks - Future | 10.8: The robots of the future will be a potential threat to mankind (reversed) | 0.699 |
| | 12.10: Robots will create a better future | |
| | 14.3: Robots will be very important for society in the future | |
| | 14.7: I fear that robots will take over many things in society (reversed) | |
| | 14.13: I like the possibility of having more robots in society in the future. | |
| Perceived benefits | 8.2: Robots in production mean I can be sure of high quality | |
| | 9.2: At my work, a robot would increase the productivity | |
| | 9.4: I would accomplish tasks at home more quickly with a robot | |
| | 10.2: I find products made by robots reliable | |
| | 10.6: Robots are accurate | |
| Acceptance | 8.1: I like to buy products from companies that use robots in their production | 0.675 |
| | 12.9: I prefer human made products rather than robot made (reversed) | |
| | 14.1: I will buy one | |
| | 14.6: Robots will be important for most industries in the future | |
| | 14.9: Companies should invest in robot technology | |

Table 1: Cronbach's Alpha

In figure 5 the correlation and significance between each pair of variables are presented. The significance level (p) is the probability of obtaining a result at least this strong by chance if no real relationship existed; the lower it is, the less likely the result is random. Social science usually accepts “p<0.05”, although “p<0.001” is considered ideal. (Pallant, 2010)

Spearman’s Rho was applied to measure the strength of the relationships between the variables. As shown in figure 5, the correlation of trust in robotic technology with people’s perception of risks and benefits is strongly positive and significant. The correlation between trust and perceived risks is 0.769, and between trust and perceived benefits 0.531. It needs to be stressed that, in contrast to Siegrist’s (2000) research, the scale of Perceived risks has been reversed, so that respondents who agreed with the statements in this construct indicated that they perceived less risk. Hence, the correlation is positive.

Jobs, safety and future displayed a strong and significant correlation with perceived risks. Risks associated with potential future concerns had the strongest correlation (0.875). Although the measured correlation is high, partly due to how the “Perceived risk” variable is constructed, it is still considered low enough for discriminant validity, and the sub-variables should be merged into one construct. The correlation between perception of benefits and acceptance is 0.529, which is considered strong. The correlation between perception of risks and acceptance is slightly stronger, at 0.565. What also attracts attention is the correlation between trust and acceptance: even though they correlated at a slightly weaker level (0.448), the correlation was still significant.
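As an illustration of how the correlations reported above are obtained and labeled, the following sketch computes Spearman’s Rho as the Pearson correlation of ranks and classifies the result with the thresholds cited from Pallant (2010). The construct scores are hypothetical, the function names are our own, and the label “negligible” for values below 0.10 is an assumption that does not come from the source.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based positions in the tie
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's Rho: Pearson correlation applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def strength(rho):
    """Label a coefficient using the thresholds cited from Pallant (2010)."""
    size = abs(rho)
    if size >= 0.5:
        return "strong"
    if size >= 0.3:
        return "moderate"
    if size >= 0.1:
        return "weak"
    return "negligible"  # assumption: label not taken from the source


# Hypothetical construct scores for five respondents.
trust = [4.0, 5.5, 3.0, 6.0, 2.5]
perceived_risks = [5.0, 4.5, 3.5, 6.5, 2.0]

rho = spearman_rho(trust, perceived_risks)
print(round(rho, 3), strength(rho))
```

Applied to the coefficients reported in this section, `strength(0.769)` gives "strong" and `strength(0.448)` gives "moderate", in line with the wording used above.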

4. Analysis

Siegrist (2000) implied that trust had an impact on benefits and risks. The analysis gives further support for this with regard to robotic technology. It also supports the view that people's expressed ambiguities regarding their jobs, safety and future in relation to robotic technology are issues that need to be addressed to bolster acceptance. Furthermore, the analysis confirmed Siegrist’s (2000) proposal on the influence of perceived benefits and risks on acceptance of technology.

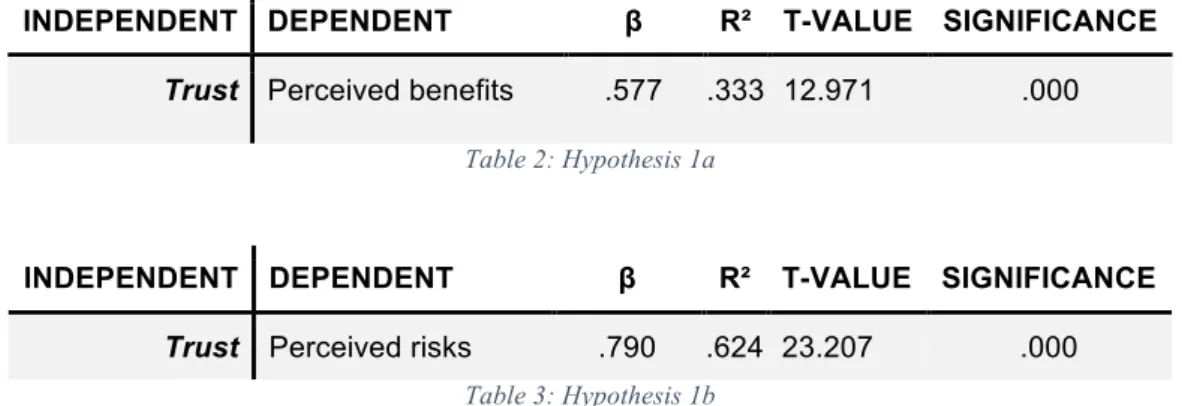

4.1 Hypotheses 1 a-b

• H 1a. Trust in robotic technology influences people’s perception of benefits

• H 1b. Trust in robotic technology influences people’s perception of risk

A significant correlation was found between these variables. To convey direction, the hypotheses were analyzed using two separate simple linear regression analyses, with perceived benefits and perceived risks as the dependent variables and trust as the independent variable. The results of the regression analyses, shown in tables 2 and 3, provide support for both hypothesis 1a and 1b. Therefore, at the significance level p<0.001, it is possible to conclude that trust has a positive influence on people’s perception of benefits and risks. The beta (β), R² and t-value are all higher for trust’s influence on perceived risks, which indicates that trust’s impact on perceived risks is stronger than its impact on perceived benefits.

| Independent | Dependent | β | R² | t-value | Significance |
|---|---|---|---|---|---|
| Trust | Perceived benefits | .577 | .333 | 12.971 | .000 |

Table 2: Hypothesis 1a

| Independent | Dependent | β | R² | t-value | Significance |
|---|---|---|---|---|---|
| Trust | Perceived risks | .790 | .624 | 23.207 | .000 |

Table 3: Hypothesis 1b
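A simple linear regression of this kind can be sketched as follows. The data is hypothetical and the function name is our own. The sketch also relies on the assumption that the reported β values are standardized coefficients; in a regression with a single predictor, the standardized β equals the Pearson correlation, so R² should equal β², which the values in tables 2 and 3 satisfy after rounding.

```python
from statistics import mean


def simple_regression(x, y):
    """Fit y = intercept + slope * x by ordinary least squares.

    Returns (slope, intercept, standardized beta, R squared).
    """
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    slope = sxy / sxx
    intercept = my - slope * mx
    beta = sxy / (sxx * syy) ** 0.5  # standardized slope, equal to Pearson r
    return slope, intercept, beta, beta ** 2


# Hypothetical construct scores: trust (independent), perceived benefits (dependent).
trust = [2, 3, 4, 5, 6]
benefits = [3, 3, 5, 4, 6]

slope, intercept, beta, r_squared = simple_regression(trust, benefits)
print(f"slope={slope:.3f} intercept={intercept:.3f} beta={beta:.3f} R2={r_squared:.3f}")

# Consistency check on the reported values, assuming standardized betas.
for reported_beta, reported_r2 in [(0.577, 0.333), (0.790, 0.624)]:
    print(reported_beta, round(reported_beta ** 2, 3) == reported_r2)
```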

4.2 Hypotheses 2 a-c

• H 2a Risks associated with jobs are significant in relation to perceived risks

• H 2b Risks associated with safety are significant in relation to perceived risks

• H 2c Risks associated with future are significant in relation to perceived risks

Figure 5 portrays the correlations of the determinants of perceived risks. Although the correlations are strong, they are still low enough for the variables to be merged into one construct. The strong correlations indicate that jobs, safety and future are determinants of risk that are of significant importance for perceived risks of robotic technology. Hence, hypotheses 2a, 2b and 2c are confirmed at the significance level of p<0.001.

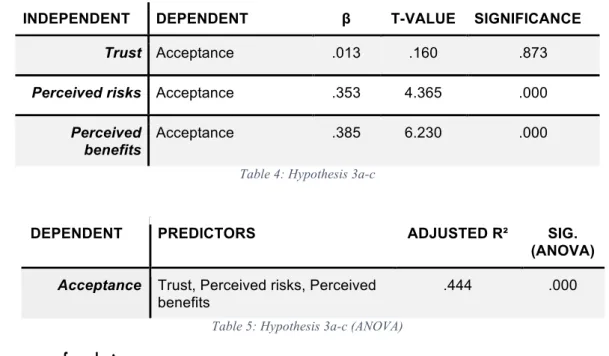

4.3 Hypotheses 3 a-c

All variables had significant correlations; multiple linear regression analysis was used to evaluate the direction of these relationships. In line with the hypotheses, acceptance was treated as the dependent variable. The results, which can be observed in tables 4 and 5, show that perceived risks and perceived benefits directly influence acceptance of robotic technology. For that reason it is possible to confirm hypotheses 3a and 3b at the significance level of p<0.001. The β and t-values indicate that perceived benefits have a stronger impact on acceptance than perceived risks. The multiple regression analysis also shows that acceptance was not directly dependent on trust (β = .013, significance .873); since the two variables are nevertheless correlated, the relationship is indirect. Hence hypothesis 3c is confirmed. The overall model proved to be significant at p<0.001, as shown in tables 4 and 5.

| INDEPENDENT | DEPENDENT | β | T-VALUE | SIGNIFICANCE |
|---|---|---|---|---|
| Trust | Acceptance | .013 | .160 | .873 |
| Perceived risks | Acceptance | .353 | 4.365 | .000 |
| Perceived benefits | Acceptance | .385 | 6.230 | .000 |

Table 4: Hypothesis 3a-c

| DEPENDENT | PREDICTORS | ADJUSTED R² | SIG. (ANOVA) |
|---|---|---|---|
| Acceptance | Trust, Perceived risks, Perceived benefits | .444 | .000 |

Table 5: Hypothesis 3a-c (ANOVA)
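
The pattern behind hypothesis 3c, where trust correlates with acceptance but contributes almost nothing once perceived risks and benefits are in the model, can be sketched on synthetic data. Everything below is an assumption made for illustration: the generating coefficients, the sample size and the helper `standardized_betas` are hypothetical and are not the thesis data or its estimation software.

```python
import numpy as np

def standardized_betas(X, y):
    """Standardized OLS coefficients for predictors X (n x k) and outcome y."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0)
    yz = (y - y.mean()) / y.std()
    coef, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    return coef

rng = np.random.default_rng(0)
n = 5000

# Hypothetical data: trust acts on acceptance only through benefits and risks.
trust = rng.normal(size=n)
benefits = 0.6 * trust + rng.normal(scale=0.8, size=n)
risks = 0.8 * trust + rng.normal(scale=0.6, size=n)
acceptance = 0.4 * benefits + 0.35 * risks + rng.normal(scale=0.7, size=n)

# Trust alone: a clear association with acceptance.
beta_simple = standardized_betas(trust[:, None], acceptance)

# Trust together with risks and benefits: its own coefficient shrinks toward zero.
beta_multi = standardized_betas(
    np.column_stack([trust, risks, benefits]), acceptance
)

print(f"trust alone:          {beta_simple[0]:.3f}")
print(f"trust | risks, benef: {beta_multi[0]:.3f}")
print(f"risks, benefits:      {beta_multi[1]:.3f}, {beta_multi[2]:.3f}")
```

In this sketch the trust coefficient is sizeable on its own and close to zero once the two perception variables are included, which is the signature of an indirect relationship of the kind described for Table 4.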

4.4 Summary of analysis

According to theory, trust influences the perception of risks and benefits. In the context of robotic technology this was confirmed by hypotheses 1a and 1b, giving further support for placing trust at the focus of attention. Perception of risk is claimed to be an important determinant of acceptance. Due to a lack of sufficient knowledge, people tend to rely on information channels such as motion pictures or mass media, which often portray the intent of robotics as devastating for mankind. Hypotheses 2a-c give support that people’s ambiguities regarding jobs, safety and future are important to explore and investigate further. The confirmation of these hypotheses supports merging these variables into one construct. Previous research on acceptance indicated that it was directly influenced by perceived risks and benefits, but not directly influenced by trust; the aim of hypotheses 3a-c was to investigate this in the realm of robotic technology.